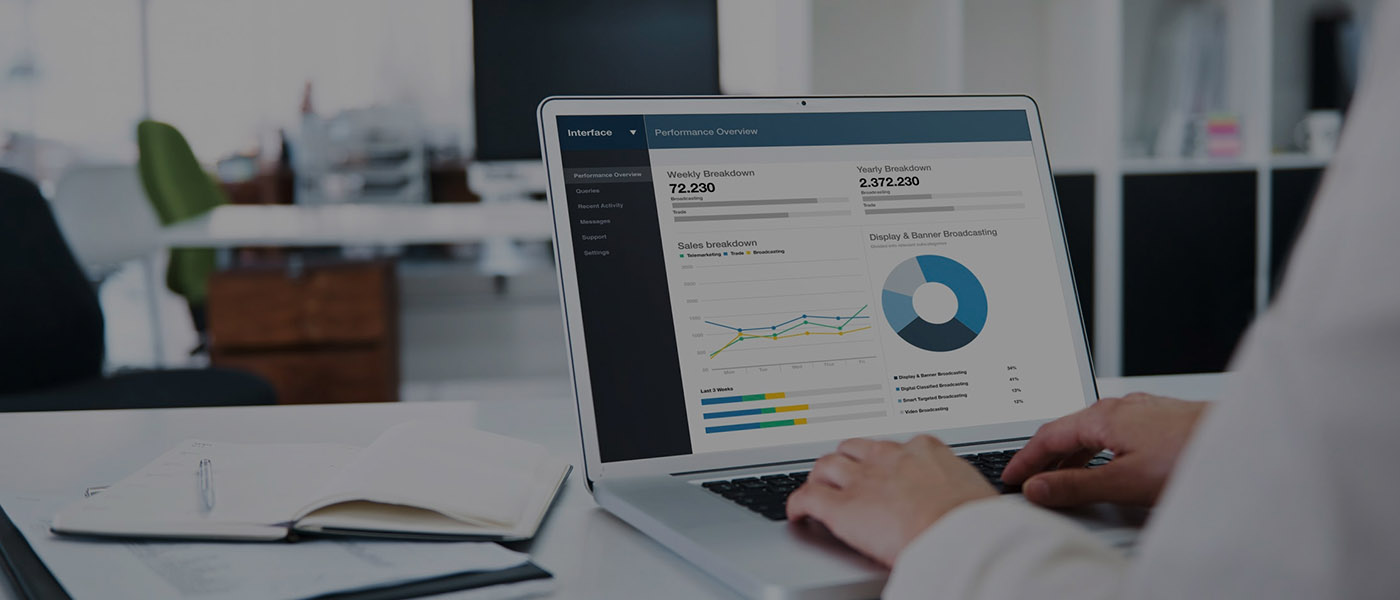

Data should be treated as a strategic corporate asset, and data quality as a strategic corporate responsibility. The corporate data universe is made up of a wide range of databases connected by countless real-time and batch data feeds. Data is constantly moving and changing, and high-quality data services sit at the core of any solid, thriving business, because they in turn support efficient operations and business success.

The conundrum, however, is that data quality cannot improve on its own. In fact, most IT processes have a negative impact on data quality. If nothing is done, data quality will keep declining until the data itself becomes a burden. Data quality is also not a one-time achievement after which you can declare the mission complete and congratulate yourself forever. Rather, it must be viewed as a garden that needs continuous tending.

Data Warehouse testing is becoming increasingly popular, and competent testers are in demand. Data-driven decisions are generally regarded as more accurate, provided the underlying data can be trusted. A good grasp of data modelling and source-to-target data mappings gives QA analysts the information they need to draw up a sound testing strategy.

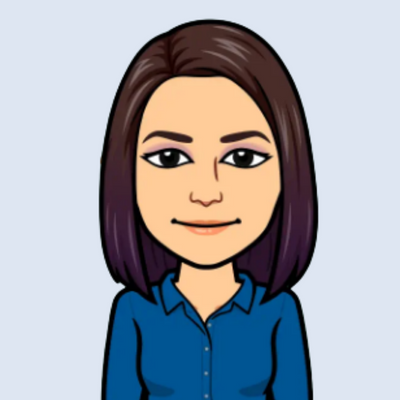

Data Quality Check-Verify Field Data Type and Length

Verify that the data type and length of each field match between source and target.

In the verification pictured above, we have a mismatch of the data type and length in the target table. It is a good habit to verify data type and length uniformity between the source and target tables.
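The comparison described above can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive implementation: the `ColumnSpec` type, the `compare_schemas` helper, and the sample column names are all hypothetical, and in practice the two schema dictionaries would be filled from each database's own metadata catalog.

```python
from typing import Dict, List, NamedTuple, Optional, Tuple


class ColumnSpec(NamedTuple):
    """Declared type and length of one column (hypothetical helper type)."""
    data_type: str
    length: Optional[int]  # None for types that have no length


def compare_schemas(
    source: Dict[str, ColumnSpec], target: Dict[str, ColumnSpec]
) -> List[Tuple[str, ColumnSpec, Optional[ColumnSpec]]]:
    """Return (column, source_spec, target_spec) for every column that differs."""
    mismatches = []
    for column, src_spec in source.items():
        tgt_spec = target.get(column)  # None when the column is missing in target
        if tgt_spec != src_spec:
            mismatches.append((column, src_spec, tgt_spec))
    return mismatches


# Illustrative schemas; the names and sizes are made up for this sketch.
source_schema = {
    "customer_id": ColumnSpec("INTEGER", None),
    "first_name": ColumnSpec("VARCHAR", 50),
    "zip_code": ColumnSpec("VARCHAR", 10),
}
target_schema = {
    "customer_id": ColumnSpec("INTEGER", None),
    "first_name": ColumnSpec("VARCHAR", 30),  # shorter than the source
    "zip_code": ColumnSpec("INTEGER", None),  # different data type
}

mismatches = compare_schemas(source_schema, target_schema)
for column, src, tgt in mismatches:
    print(f"{column}: source={src} target={tgt}")
```

A shorter target length, as in `first_name` here, is worth flagging because it can silently truncate values during the load.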

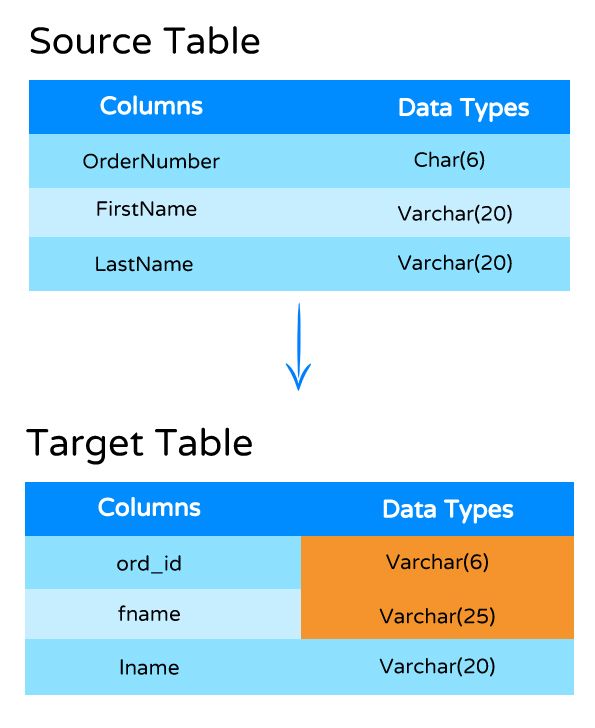

Data Quality Check-Verify Not Null Fields

Check for null values in any column that carries a NOT NULL constraint.

When a column has a NOT NULL constraint, it should not contain null values like those shown above.
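One way to automate this check is to count the null rows in each column that is supposed to be mandatory. The sketch below uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for the real target; the table `customer_target`, its columns, and the `count_nulls` helper are assumptions made for illustration only.

```python
import sqlite3


def count_nulls(conn, table, columns):
    """Return {column: number of rows where that column is NULL}."""
    counts = {}
    for column in columns:
        # Identifiers cannot be bound as query parameters, so the table and
        # column names are assumed to come from trusted test configuration.
        query = f'SELECT COUNT(*) FROM "{table}" WHERE "{column}" IS NULL'
        counts[column] = conn.execute(query).fetchone()[0]
    return counts


# Stand-in target table, loaded without constraints so bad rows can get in.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer_target (customer_id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO customer_target VALUES (?, ?)",
    [(1, "a@example.com"), (2, None), (None, "c@example.com"), (4, None)],
)

null_counts = count_nulls(conn, "customer_target", ["customer_id", "email"])
print(null_counts)
```

Any count above zero for a column that is mandatory in the source points to a defect in the extract or load step.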

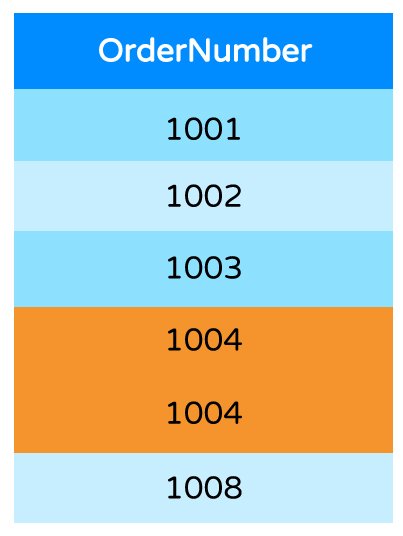

Data Quality Check-Verify For Duplicate Records

If your data warehouse contains duplicate records, your business decisions will be inaccurate and unreliable. Poor data riddled with inaccurate and duplicate records prevents stakeholders from forecasting business targets properly.

A QA team should verify source data files to spot duplicate records and other data errors. You can also automate data testing with Python to validate all of the data rather than just a sample or subset.
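As a rough sketch of what such an automated check might look like, the snippet below scans every row of a CSV source file and reports any business key that appears more than once. The file contents, the column names, and the `find_duplicates` helper are hypothetical; a real check would read the actual source file and use the key defined in the mapping document.

```python
import csv
import io
from collections import Counter


def find_duplicates(rows, key_fields):
    """Return {key_tuple: count} for every key that occurs more than once."""
    counts = Counter(tuple(row[field] for field in key_fields) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}


# In-memory stand-in for a source data file (contents are made up).
sample_file = io.StringIO(
    "order_id,customer_id,amount\n"
    "1001,7,25.00\n"
    "1002,8,40.00\n"
    "1001,7,25.00\n"
    "1003,9,15.50\n"
)

rows = list(csv.DictReader(sample_file))
duplicates = find_duplicates(rows, ["order_id", "customer_id"])
print(duplicates)
```

Because `csv.DictReader` yields every row as strings, the keys are compared as text; values that differ only in formatting (for example `7` and `07`) would need normalizing first.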

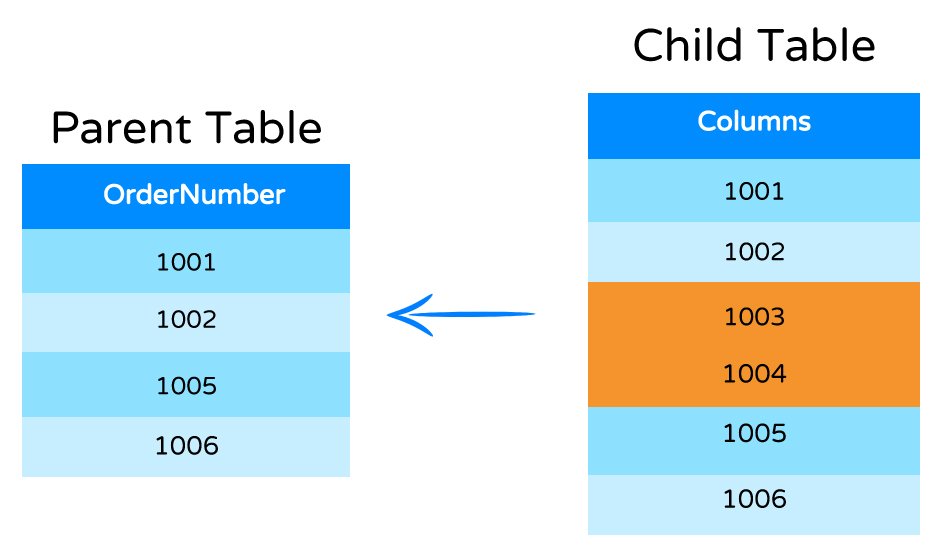

Data Quality Check-Verify for Orphan Records

Child records that have no matching parent record are termed “Orphan Records.” The business should define relationship rules between parent and child tables.
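Orphan records are commonly found with an anti-join: a left join from the child table to the parent table that keeps only the rows with no match. The example below is a minimal sketch using Python's built-in `sqlite3` module; the `customers` (parent) and `orders` (child) tables and their contents are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES (10, 1), (11, 2), (12, 3), (13, 5);
    """
)

# Child rows whose customer_id has no matching parent row.
orphans = conn.execute(
    """
    SELECT o.order_id, o.customer_id
    FROM orders AS o
    LEFT JOIN customers AS c ON c.customer_id = o.customer_id
    WHERE c.customer_id IS NULL
    ORDER BY o.order_id
    """
).fetchall()

for order_id, customer_id in orphans:
    print(f"order {order_id} references missing customer {customer_id}")
```

Note that a child row whose foreign key is itself null would also be returned by this query; whether that counts as an orphan depends on the relationship rules the business has defined.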

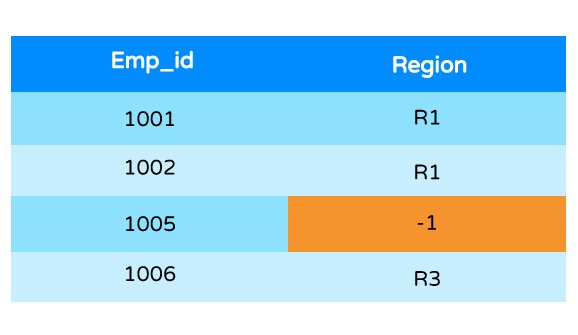

Data Quality Check-Verify for Unknown Data

Sometimes unknown-data validation is needed to confirm that value encoding was applied as planned. For instance, if the transformation logic encodes the value “Male” as “M”, the QA team should cross-check the Gender column to ensure that it does not contain any other encoded value.
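A check like this usually comes down to comparing the values found in the column against the set of codes the transformation is expected to produce. In the sketch below, the allowed set `{"M", "F"}` is an assumption (the text only mentions the “Male” to “M” rule), and the `find_unknown_values` helper and sample rows are hypothetical.

```python
from collections import Counter

# Assumed set of valid codes; only the "Male" -> "M" rule comes from the text.
ALLOWED_GENDER_CODES = {"M", "F"}


def find_unknown_values(rows, column, allowed):
    """Count each value in `column` that is not in the allowed code set."""
    return Counter(row[column] for row in rows if row[column] not in allowed)


# Made-up target rows, including values the transformation failed to encode.
target_rows = [
    {"customer_id": 1, "gender": "M"},
    {"customer_id": 2, "gender": "F"},
    {"customer_id": 3, "gender": "Male"},
    {"customer_id": 4, "gender": "m"},
    {"customer_id": 5, "gender": "Male"},
]

unknown = find_unknown_values(target_rows, "gender", ALLOWED_GENDER_CODES)
for value, count in unknown.items():
    print(f"unexpected value {value!r} appears {count} time(s)")
```

Reporting the unexpected values together with their counts helps tell a systematic transformation bug (many rows still holding “Male”) apart from a handful of bad source entries.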

Conclusion

Data quality is a broad and complicated field with many aspects. The corporate data universe is made up of different databases linked by countless real-time and batch data interfaces. Irrespective of industry, revenue size, or target market, virtually every organization depends on credible data to produce useful information for sound business decisions. Data quality can be jeopardized at any stage: reception, entry, integration, maintenance, loading, or processing.

Thus, efforts must be made to ensure data quality through Data Warehouse testing/ETL testing, which is aimed at guaranteeing the production, availability, and use of high-quality data within an organization.