by Chris Adams | Jan 12, 2023 | Automation Testing, Blog |

Cypress is a great automation testing tool that has been gaining popularity in recent times. One of the key features of Cypress is that it operates in the same context as the application under test. This allows it to directly manipulate the application and provide immediate feedback when a test fails, making it easier to debug and troubleshoot issues in tests. But the fact of the matter is that no tool is perfect. Being a leading automation testing company, we have used Cypress in many of our projects. And based on our real-world usage, we have written this blog covering the various Cypress limitations that will be beneficial to know about if you have decided to use the tool. So let’s get started.

List of Cypress Limitations

Language Support

Let’s start off our list of Cypress limitations with the number of languages it supports as it is an important factor when you choose a framework. As Cypress is a JavaScript-based testing framework, it is primarily intended to be used with JavaScript and its related technologies.

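It does support TypeScript, as TypeScript is a typed superset of JavaScript. But there is a catch: TypeScript has to be transpiled to JavaScript before it can run. Cypress handles this for spec files with its built-in preprocessor once the typescript package is installed in your project, but more customized setups may need a build tool like webpack or Rollup.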

Likewise, you can follow the same process to use Cypress with languages like CoffeeScript or ClojureScript by transpiling the code to JavaScript.

Though it seems like a workaround, it is important to note that Cypress was not designed to work with such transpiled code. So you may encounter issues or limitations when using it with languages other than pure JavaScript. If you do opt for a language other than JavaScript, make sure to thoroughly test whether it is fully compatible with the framework.

Testing Limitations

The second major Cypress limitation is the lack of testing capabilities for mobile and desktop applications. As of now, Cypress can be used to test web applications only.

With Selenium, you can even extend your tests to mobile apps using the Appium server. Cypress, however, has no such provision for mobile app testing.

Browser Support

The next Cypress limitation is the lack of support for browsers such as Safari and Internet Explorer (Cypress 10.8 added only experimental support for WebKit, the engine behind Safari). Though Microsoft has retired Internet Explorer, certain legacy applications might still depend on it.

Cypress supports Chrome-family browsers (Chrome, Chromium, Microsoft Edge, and Electron, which ships with Cypress) as well as Firefox, and no additional browser extensions are needed to run them. That said, Chrome and Electron remain the most common targets, and a few features may not behave exactly the same way in Firefox.

It is also recommended that you use the latest version of your chosen browser when running your Cypress tests. But if you need to use an older version, these are the minimum supported versions as of writing this blog.

- Chrome 64 and above.

- Edge 79 and above.

- Firefox 86 and above.

Complexity

Complexity is the next Cypress limitation on our list. Cypress runs only on Node.js, and using it well demands solid JavaScript knowledge. Cypress commands are asynchronous: they are queued and executed later instead of returning values immediately, so you need to be comfortable with asynchronous JavaScript and related concepts such as promises. Knowing jQuery also helps, as Cypress yields jQuery-wrapped elements.

So if you are starting to learn automation or if you have been using other languages such as Java or Python for your automation, you’ll have a tough time using Cypress.

Multi-Origin Testing

The next Cypress limitation is that multi-origin testing is not as easy as it is with Selenium. If your test has to switch from one domain to another, or to the same domain on a different port, problems will arise.

Though the issue was partially addressed in version 12.0.0, you’ll still have to use the cy.origin() command to specify the domain change in such scenarios. Selenium, on the other hand, has no such limitation when the origin changes during a test.

Similar to this partial workaround, the next two Cypress limitations we are about to see can also be partially overcome with the help of Cypress plugins. We have also written a blog featuring the best Cypress plugins that will make testing easier for you. So make sure to check it out.

XPath Support

XPath is a crucial aspect of automation testing as it is one of the key object locator strategies. Unfortunately, Cypress does not support XPath by default. However, this limitation sits at the tail end of our list because you can use the cy.xpath() command to evaluate an XPath expression and retrieve the matching elements in the DOM. This command is provided by the @cypress/xpath plugin (earlier published as cypress-xpath), which you’d have to install separately.

iFrame Support

By default, Cypress does not provide built-in commands for working inside iframes. However, once you install the cypress-iframe plugin, you can use its cy.iframe() command to access an iframe’s contents and retrieve the matching elements inside it.

Conclusion

As we had mentioned at the beginning of the blog, no tool is perfect and each would have its own fair share of limitations. Likewise, these are the major Cypress limitations encountered by our dedicated R&D team. Nevertheless, we were still able to use Cypress and reap all the benefits of the tool to deliver effective automation testing services to our clients as these limitations didn’t impact the project needs.

So if these limitations are not huge concerns for your testing needs, you can use Cypress with confidence. If these are actual concerns, it is better you look into the alternatives.

by Mollie Brown | Jan 9, 2023 | Automation Testing, Blog |

A Cypress plugin is a JavaScript module that extends the functionality of the Cypress test runner. Plugins can create new commands, modify existing commands, and register custom tasks. So knowing the best Cypress plugins can be very useful, as you will be able to save a lot of time and streamline your automation testing. Being a leading automation testing company, we have listed the best Cypress plugins we have used in our projects. We have also explained the functionality of these plugins and how you can install them.

It is important to note that Cypress plugins can be created by anyone. So if different projects share common functionality or utility functions, you can also package them as a plugin of your own.

But the great advantage here is that Cypress already has a wide range of community-created plugins that cover most of the required functionality such as using XPath, creating HTML reports, and so on.

But before we take a look at the best Cypress plugins and their functionalities, we’ll have to know how to install them.

Installing a Cypress Plugin

Once you have identified a Cypress plugin you wish to use, there are a few standard steps you’ll have to follow to add it to your project. Let’s find out what they are.

But there will be exceptions in a few cases and you’ll need to do a few extra steps to use the plugin. We will explore those exceptions when we look into the best Cypress plugins in detail.

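1. Add it as a dependency to your project by using this command

npm install --save-dev name_of_the_plugin

2. Register the plugin. Cypress has no "plugins" array in its configuration file; where a plugin is registered depends on what it does, so check its README:

- Plugins that add custom commands (such as cypress-iframe) are usually imported in the support file, e.g. import "cypress-iframe"; in cypress/support/e2e.js.

- Plugins that hook into the Cypress Node process (such as preprocessors and tasks) are registered inside the setupNodeEvents function of your Cypress configuration file (cypress.config.js).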

Now that we are aware of the prerequisites you’ll need to know, let’s head directly to our list of the Best Cypress Plugins and find out what makes them so great.

Best Cypress Plugins

@badeball/cypress-cucumber-preprocessor

It is a customized version of the cypress-cucumber-preprocessor plugin that you can use to write Cypress tests in the Gherkin format.

The advantage here is that Gherkin uses natural language statements that make the automation tests very easy to read and understand. Apart from technical people being able to understand the tests, people with no technical knowledge also will be able to understand them. Since that is an important aspect of automation testing, we have added this plugin to our list of the best Cypress plugins.

Once you have installed and registered the plugin using the above-mentioned instructions, you will be able to write your tests using the Gherkin syntax and save them with the .feature file extension. The plugin then parses these files and converts them into executable tests.

@bahmutov/cypress-esbuild-preprocessor

It is a plugin that uses esbuild, a JavaScript bundler, to preprocess your test files before Cypress runs them.

If your tests use syntax such as JSX or TypeScript, this plugin can be useful, as esbuild quickly compiles it into plain JavaScript that the browser can run.

@badeball/cypress-cucumber-preprocessor/esbuild

Strictly speaking, @badeball/cypress-cucumber-preprocessor/esbuild is not a separate plugin but a module shipped inside the @badeball/cypress-cucumber-preprocessor package we have already seen, as the path in its name suggests.

It extends the ability to write tests in the Gherkin language by bringing in esbuild. Used together with @bahmutov/cypress-esbuild-preprocessor, it lets your .feature files be parsed by the Cucumber preprocessor and bundled with esbuild before Cypress runs them.

This can be very useful if your tests use syntax such as JSX or TypeScript.

@cypress/xpath

Using an XPath to locate elements for your automation tests is a very common practice in automation testing. And you can make use of the @cypress/xpath plugin to add support for using XPath expressions in your tests.

It can be particularly useful when you have to target elements that don’t have unique ID attributes, or if you want to locate elements based on their exact position or relative position with respect to other elements.

You can target elements in your tests by using the cy.xpath() command.

Cypress-iframe

iframes are HTML elements that allow you to embed another HTML document within the current page. They are used in numerous websites as they can be useful for displaying content from external sources or for separating different sections of a page.

If the site you are testing does have an iframe, you’ll have to know how to interact with it in your automation tests. So you can make use of the cypress-iframe plugin to shift the focus of your tests to an iframe element.

Cy-verify-downloads

As the name suggests, this Cypress plugin can be used to validate if a file has been downloaded during your automation testing. It is common for applications to offer file downloads to their users and that is why this plugin has found its spot in our list of best Cypress plugins.

It takes the name of the file you expect to be downloaded (plus optional settings such as a timeout) and checks that the file appears in the Cypress downloads folder. So you can use the cy.verifyDownload() command to ensure that your application is generating and serving the expected files to users.

Multiple-cucumber-html-reporter

Reporting is a very important part of automation testing, so we have chosen a plugin that will help you generate HTML reports for your tests. Multiple-cucumber-html-reporter is a Node.js reporting module that reads the JSON output of Cucumber test runs and generates an HTML report from it.

Cucumber is a tool that allows you to write tests using natural language statements (written in the Gherkin syntax), and the Multiple-cucumber-html-reporter plugin allows you to generate an HTML report that shows the results of these tests.

This plugin is an exception to the standard installation and registration steps: instead of registering it in Cypress, you configure it in a separate JavaScript file so that it generates the report in the format you want.

As usual, you can use the below command to install the plugin as a dependency on your project.

npm install --save-dev multiple-cucumber-html-reporter

After which, you’ll have to configure the plugin by creating a JavaScript file that requires the plugin. You can configure the JavaScript file with the appropriate conditions based on which your HTML report will be generated. Let’s take a look at an example to help you understand it clearly.

Example:

Let’s generate an HTML report based on the JSON files in the “path/to/json/files” directory and save it to the “path/to/report/directory” directory. We will be including metadata about the browser & platform used to run the tests and a few custom data such as the title and additional details about the project and release.

const reporter = require("multiple-cucumber-html-reporter");

reporter.generate({
  jsonDir: "path/to/json/files",
  reportPath: "path/to/report/directory",
  metadata: {
    browser: {
      name: "chrome",
      version: "78.0"
    },
    device: "Desktop",
    platform: {
      name: "Windows",
      version: "10"
    }
  },
  customData: {
    title: "My Report",
    data: [
      { label: "Project", value: "My Project" },
      { label: "Release", value: "1.0.0" },
      { label: "Execution Date", value: "January 1, 2020" }
    ]
  }
});

cypress-file-upload

Similar to applications having the provision to download files, the ability to upload files from the local system to the server is also a common function. So you can make use of this plugin to test if your application uploads a file as expected.

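The plugin adds the attachFile() command, which is chained off a file input element, for example cy.get('input[type="file"]').attachFile('example.json'). Note that Cypress 9.3 and later also ship a built-in .selectFile() command for the same purpose.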

Conclusion

As mentioned earlier, there are so many great Cypress plugins and we found these to be the most important ones that every automation tester must know. We hope you are now clear about how to install, register, and use all of the best Cypress plugins we’ve gone through in our blog. Even if you wish to use any other Cypress plugin, you can make use of the standard installation and registration process to get started with it. Being an experienced automated testing as a service provider, we have published numerous informative blogs about Cypress and will be publishing more. So make sure to subscribe to our newsletter to ensure you do not miss out on any of our latest posts.

by Arthur Williams | Dec 30, 2022 | Automation Testing, Blog |

Similar to all popular programming languages, Python also has numerous BDD frameworks that we can choose from. Out of the lot, Pytest BDD and Behave are the most widely used BDD frameworks. Being a leading automation testing company, we have used both Pytest BDD and Behave in our automation testing projects based on business needs. We believe it is crucial for every tester to know how to implement a readable and business-friendly automation testing solution. So in this blog, we will be pitting Pytest BDD vs Behave to help you choose the right framework for your automation testing needs by comparing the two based on our real-world usage.

For those who are unfamiliar or new to Python and BDD, let’s have a small introduction for both before we start our Pytest BDD vs Behave comparison. If you are already familiar with them, feel free to head straight to the comparison.

BDD

Behavior-Driven Development (or BDD) is an agile software development technique that promotes collaboration not only between developers and testers, but also with non-technical or business stakeholders in a software project. BDD achieves this by writing test cases in a simple Given, When, Then format (Gherkin) that anybody can understand. Before we head to the Pytest BDD vs Behave comparison, let’s take a look at the list of popular Python BDD frameworks available.

List of Python BDD Frameworks

1. Behave

2. Pytest BDD

3. radish

4. lettuce

5. freshen

Though there are even more Python BDD frameworks, these are the most well-known and widely used options. But it is important to note that not every BDD framework is for everyone. That is why we have picked out Pytest BDD and Behave for our comparison.

Python Behave

If you have prior experience in using a Cucumber BDD framework, you would find Behave to be similar in many ways. But even if you are starting afresh, Behave is quite easy to get started with. The primary reason for that is Behave’s great online documentation and the availability of easy tutorials. We will be covering a direct Pytest BDD vs Behave comparison once we explore the individual pros and cons of both these frameworks.

Pros

- Simple Setup: No need for explicit scenario declarations.

- Dynamic Arguments from Command Line: Allows passing custom arguments dynamically, making test execution more flexible.

- Better Feature File Organization: Feature files are automatically detected without extra setup.

- Scenario Outlines & Backgrounds: Helps in reusing test steps and making tests more maintainable.

- BehaveX for Parallel Execution: Although Behave doesn’t natively support parallel execution, BehaveX helps achieve this.

Cons

- No Built-in Parallel Execution: Unlike Pytest BDD, Behave requires additional tools for parallel test execution.

- Limited IDE Support: Fully supported only in PyCharm Professional.

- Limited Reporting: Supports Allure, JSON, and JUnit reports, but lacks HTML reports.

Pytest BDD

Pytest BDD implements a subset of the Gherkin language to enable project requirements testing and behavior-driven development. Pytest fixtures written for unit tests can be reused for feature step setup and actions with dependency injection. This enables true BDD with just the right amount of requirement specifications without having to maintain any context object containing the side effects of Gherkin imperative declarations.

Let’s look at the pros and cons of Pytest BDD and then proceed to the table where we compare Pytest BDD vs Behave.

Pros

- Fixture Support: Reuse Pytest fixtures for setup and teardown, reducing redundant code.

- Seamless Integration: Works flawlessly with Pytest and major Pytest plugins like pytest-catchlog (for logging) and pytest-vscodedebug (for debugging), making troubleshooting much easier.

- Rich Plugin Ecosystem: Pytest supports 800+ external plugins, offering great flexibility.

- Parallel Test Execution: Since it runs on Pytest, Pytest BDD tests can be run in parallel with the pytest-xdist plugin, which significantly reduces test execution time.

- Supports Multiple Report Types: Generate HTML reports, Allure reports, and JUnit reports.

Cons

- Feature File Declaration: You need to explicitly declare feature files in step definition modules using @scenario or scenarios().

- Scenario Outline Parsing: Requires additional parsing steps for scenario outlines.

- Limited Community Support: Compared to Behave, fewer people use Pytest BDD, meaning finding solutions for niche issues can be more challenging.

Pytest BDD vs Behave: Key Differences

| Description | Pytest BDD | Python Behave |
| --- | --- | --- |
| Pricing | Free and open source | Free and open source |
| Project structure | `tests/features/*.feature` and `tests/step_defs/` (`__init__.py`, `conftest.py`, `test_*.py`) alongside the product code packages; configuration in `pytest.ini`, `tox.ini`, or `setup.cfg` | `features/*.feature`, `features/environment.py`, and `features/steps/*_steps.py` alongside the product code packages; configuration in `behave.ini`, `.behaverc`, `tox.ini`, or `setup.cfg` |
| Step definition file naming | Must be prefixed or suffixed with `test` (e.g. `test_filename.py`) | Any name with the `.py` extension |
| Test directory naming | `tests` (by convention) | `features` (Behave's default location) |
| IDE support | PyCharm (Professional Edition only), Visual Studio Code, etc. | Full support only in PyCharm Professional Edition; Visual Studio Code, etc. |
| Reports | HTML report; Allure report (installed as a separate plugin); JUnit XML report (pytest's built-in `--junitxml` option); no Extent report | Allure report, JSON report, and JUnit report; no HTML or Extent report |
| Parallel execution | Supported (via the pytest-xdist plugin) | Not built in (BehaveX adds it) |
| Test runner | Pytest (pytest-bdd is installed as a pytest plugin) | Behave (built-in test runner) |
| Support and community | Good | Good |
| Test launch | By step definition file, e.g. `pytest path/to/test_your_steps.py` | By feature file, e.g. `behave features/your_feature.feature` |
| Run by tag | `-m` option, e.g. `pytest -m yourTag` | `--tags` option, e.g. `behave --tags=@yourTag` |
| Parsers | Scenario Outline steps must be parsed separately | No separate parsing needed for Scenario Outline steps |
| Explicit declaration | Feature files must be explicitly declared in the step definitions via the `scenarios()` function | No need to explicitly declare the feature file in the step definitions |

Pytest BDD vs Behave: Best Use Cases

Pytest BDD

Parallel Execution – Behave has no built-in support for running tests in parallel, and behave-parallel, the once-popular framework that made it easier to run Behave tests in parallel, has been retired. So if parallel test execution is an important factor, Pytest BDD will definitely be the better choice.

Pytest BDD allows for the unification of unit and functional tests. It also reduces the burden of configuring the continuous integration server and allows test setups to be reused.

HTML Reports – Behave doesn’t support HTML reports, and if that is one of your requirements, then you’ll have to pick Pytest BDD. Pytest BDD also has support for Allure reports which is also another widely used type.

Behave

Ease of Use – As seen in the Pytest BDD vs Behave comparison table, step definition file naming is much easier in Behave, as Pytest requires a defined prefix or suffix. Since Pytest BDD needs additional code for declaring scenarios, implementing scenario outlines, and sharing steps, Behave is generally easier to use. It is also easier to set up, as it has a built-in test runner.

The terminal log is also more elaborate in Behave than in Pytest, because Behave runs the tests through feature files written in the Given, When, Then format. So you can easily identify where the error is.

Conclusion

So it is evident that both Pytest BDD and Behave have their own pros and cons. So based on your automation testing needs, you can use our Pytest BDD vs Behave comparison table and recommendations to make a well-educated decision. Being a test automation service provider, we have predominantly used Pytest BDD in many of our projects. If you could work around Pytest BDD’s complexity, it is a great option. If not, Behave could be a great alternative that you can choose.

Frequently Asked Questions

Which BDD framework is best for beginners?

Behave is often preferred by beginners due to its simple Given-When-Then structure, making it easier to write and understand test cases.

Which framework has better community support, Pytest BDD or Behave?

Behave has a larger community and more readily available solutions, while Pytest BDD, being an extension of Pytest, benefits from Pytest’s extensive plugin ecosystem.

How do I decide between Pytest BDD and Behave for my project?

If you need robust automation with parallel execution and integration with Pytest, choose Pytest BDD. If you require a business-friendly, easy-to-read framework, go with Behave.

Does Pytest BDD support parallel execution?

Yes. Because Pytest BDD runs on Pytest, tests can be run in parallel with the pytest-xdist plugin, making it a good choice for large test suites. Behave, on the other hand, requires additional tools like BehaveX for parallel execution.

Which is better for BDD testing in Python: Pytest BDD or Behave?

It depends on your needs. Pytest BDD is better for automation testers who prefer Pytest’s powerful features like parallel execution and extensive reporting. Behave is ideal for teams that prioritize business-readable tests and collaboration with non-technical stakeholders.

by Arthur Williams | Dec 29, 2022 | Automation Testing, Blog |

With Agile being so widely adopted across the globe in software development projects, the importance of Integration testing has also increased. Since different parts or modules of the software are developed individually in Agile, you can consider the work done only if everything works well together. A flaky test is never healthy for any type of testing, but it can be considered more critical when your integration tests are flaky.

Being an experienced automation testing company, we have encountered various factors that contribute to the flakiness of an integration test and are well-versed when it comes to fixing them. So in this blog, we will be explaining in detail how you can fix a flaky integration test.

How is Integration Testing Performed?

Integration testing is the process of making sure that two or more separate software modules work together as defined by the requirements. In most organizations, unit/component/module integration testing is performed by the developers. But automation testing companies that follow the Test-Driven Development approach are involved in unit and integration testing too.

Types of Integration testing:

- The Big Bang Method

- Bottom-Up Method

- Hybrid Testing Method

- Incremental Method

- Stubs and Drivers

- Top-Down Approach

Causes of Flaky Integration Tests

1. WebDriver test

2. Shared resources

3. Third-party reliability

4. Sleep()

5. New feature/module

6. UI test

WebDriver Test

Selenium WebDriver is a browser automation framework that lets us run cross-browser tests and verify that a web application works as expected. It lets us write test cases in the programming language of our choice, but because the tests drive a real browser, timing issues (such as elements that have not finished loading) and browser-specific behavior can make them flaky.

Shared Resources

Shared data is one of the main reasons for integration tests to be flaky. It can be caused when tests are designed with incorrect assumptions about data and memory or due to unforeseen scenarios.

Third-Party Reliability

Integration tests could also rely more on external software or APIs in comparison to standalone tests. During such scenarios, there could be certain dependencies to which we might have limited or no access. This is yet another reason that causes a flaky integration test.

Sleep()

Since integration tests involve different components or modules of an app, fixed sleep() calls can cause many tests to fail. A wait that is too short fails whenever a component responds slowly, while one that is too long slows the suite down and can push tests past their timeouts.
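As an alternative to fixed sleeps, a test can poll for the condition it actually needs. The waitFor helper below is a hypothetical, framework-agnostic sketch in plain Node.js; many tools, Cypress included, already retry their own assertions and should be preferred where available.

```javascript
// Hypothetical helper: poll a condition until it holds or a deadline passes,
// instead of sleeping for a fixed amount of time.
function waitFor(condition, { timeoutMs = 5000, intervalMs = 100 } = {}) {
  const deadline = Date.now() + timeoutMs;
  return new Promise((resolve, reject) => {
    const check = async () => {
      try {
        const result = await condition();
        if (result) return resolve(result); // continue as soon as it is ready
      } catch (err) {
        // Treat errors (e.g. a service that is not up yet) as "not ready".
      }
      if (Date.now() >= deadline) {
        return reject(new Error(`Condition not met within ${timeoutMs} ms`));
      }
      setTimeout(check, intervalMs);
    };
    check();
  });
}

// Example: a simulated dependency that becomes ready after about 300 ms.
const readyAt = Date.now() + 300;
waitFor(() => Date.now() >= readyAt, { timeoutMs: 2000, intervalMs: 50 })
  .then(() => console.log("service ready"));
```

The wait ends as soon as the dependency is ready, so fast runs stay fast and slow runs still get up to the full timeout.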

New Feature/Module

All your tests might be working fine until a new feature/module/code has been added to the existing build. Such new additions can cause flaky integration tests and so it is always important to check how stable the tests are once such new integrations are made.

UI Testing

UI tests can turn out to be flaky because they run end-to-end flows and interact with the system the way real users would. Such tests are prone to network issues or server slowness, which can introduce unexpected flakiness.

Steps to Fix Flaky Integration Tests:

Run the Test Regularly

Running your test suite at regular intervals is the first step in fixing flaky integration tests as it will help you identify them. So make sure to rerun the tests daily or at least once a week.

The more often we rerun the tests, the better we will understand them. We will be able to gather helpful data, such as the average time a test takes to execute, and even know when and how a particular test is triggered.

Once we have such data, it will be easier to identify the anomalies when they happen.

Identify the Unstable tests

Now that we have the data to spot deviations, we will know where to look for unstable tests. But remember that not every failure is caused by flakiness, as real defects could be affecting the result. So the first step is to look into the error logs to see what caused the failure.

Those failures can have many causes, such as latency issues, network issues, environmental issues, coordination problems, and so on. So make sure to analyze why similar tests pass and fail randomly. If you dive deep and analyze the internal workings, you’ll be able to identify flaky test patterns.
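To make the pattern analysis concrete, here is a sketch that scans a hypothetical run history (several runs of the same build) and flags tests whose outcome changes between runs, together with their failure rate and average duration. The record shape is an assumption; real CI tools export results in their own formats.

```javascript
// Hypothetical record shape: { test, passed, durationMs }, one per execution,
// all collected from runs of the same build.
function findFlakyTests(runs) {
  const stats = new Map();
  for (const { test, passed, durationMs } of runs) {
    const s = stats.get(test) || { runs: 0, failures: 0, totalMs: 0 };
    s.runs += 1;
    if (!passed) s.failures += 1;
    s.totalMs += durationMs;
    stats.set(test, s);
  }
  const flaky = [];
  for (const [test, s] of stats) {
    // Mixed outcomes on identical code suggest flakiness; a test that always
    // fails is more likely a real defect and is not reported here.
    if (s.failures > 0 && s.failures < s.runs) {
      flaky.push({ test, failureRate: s.failures / s.runs, avgMs: s.totalMs / s.runs });
    }
  }
  return flaky;
}

const history = [
  { test: "checkout", passed: true, durationMs: 1200 },
  { test: "checkout", passed: false, durationMs: 5000 },
  { test: "login", passed: true, durationMs: 800 },
  { test: "login", passed: true, durationMs: 820 },
  { test: "search", passed: false, durationMs: 300 },
  { test: "search", passed: false, durationMs: 310 },
];

// Reports only "checkout", which passed once and failed once.
console.log(findFlakyTests(history));
```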

Isolate the Flaky tests

A single flaky test can disrupt the integrity of your entire test suite. So the best course of action would be to isolate the flaky integration test from your test suite. Find out if removing the particular integration test will have any impact on your test suite on the whole and adapt accordingly.

Fix Flaky tests one at a time

In order to fix a flaky integration test, you must first identify the root cause of the problem. You can rerun the same test multiple times using the same code and try to pin down what causes the flakiness. But this can turn into a tedious process if the tests keep failing. So make sure to follow these steps:

- Review the existing wait/sleep delays in the existing threads and make the needed changes.

- Reorder the data source while running a test and have the process draw data from a different source. Though reordering the data source can help, it requires careful execution.

- Identify if any dependencies on external sources are causing flaky integration tests. Simplifying multithreaded code into a simpler form also helps, as failures are easier to fix when the test depends only on code you fully control rather than on external services.

- Use mock testing to focus on the code more than the behaviors or the dependencies of the feature.

By commenting out parts of the code, adding print and wait-for statements as necessary, setting breakpoints, and closely watching the logs, you will be able to debug the problem’s root cause and resolve it.
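The mocking advice can be sketched with dependency injection: the hypothetical convertPrice function receives its external rate lookup as a parameter, so a test can pass in a deterministic stub (a simple form of mocking) instead of calling a real third-party service whose availability we do not control.

```javascript
// Hypothetical code under test: the external dependency is injected as
// fetchRate, so tests decide what it returns.
async function convertPrice(amount, currency, fetchRate) {
  const rate = await fetchRate(currency);
  if (typeof rate !== "number" || rate <= 0) {
    throw new Error(`Invalid rate for ${currency}`);
  }
  return Math.round(amount * rate * 100) / 100; // round to two decimals
}

// Stub used in tests: fixed rates, no network, same answer on every run.
const stubFetchRate = async (currency) => ({ EUR: 0.5, INR: 80 })[currency];

convertPrice(10, "EUR", stubFetchRate).then((value) => console.log(value)); // 5
```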

Tip: A common trap that most testers fall into is wanting to find & fix all the flaky tests all at once. It will only cause them to take more time as it will be very hard to identify the root cause and come up with a permanent solution.

So focus on one flaky test at a time.

Add Stable tests back to the Test Suite

Once you are certain that the flakiness has been eliminated, you can add the isolated test back to your test suite. But you’ll have to rerun the tests over and over again to determine if the results you are getting are consistent. The reruns don’t end there as you’ll have to rerun your entire test suite after adding the fixed tests to make sure nothing else breaks.
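Rerunning a fixed test can also be scripted. The hypothetical rerun helper below executes a test function a given number of times and reports whether every run passed; the simulated test fails on every third call only so that the output is predictable.

```javascript
// Hypothetical helper: run one test function repeatedly and count outcomes,
// so a "fixed" test only returns to the suite after consistent passes.
async function rerun(testFn, times) {
  let passes = 0;
  const errors = [];
  for (let i = 0; i < times; i++) {
    try {
      await testFn(); // works for both sync and async test functions
      passes += 1;
    } catch (err) {
      errors.push(err.message);
    }
  }
  return { passes, failures: times - passes, errors, stable: passes === times };
}

// Simulated unstable test: fails on every third call.
let calls = 0;
const sometimesFails = () => {
  calls += 1;
  if (calls % 3 === 0) throw new Error(`run ${calls} failed`);
};

rerun(sometimesFails, 10).then((result) => console.log(result.passes, result.failures)); // 7 3
```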

Best Way to Handle Flaky Tests

One of the best ways to handle flaky tests is to prevent them. So when you write your automation scripts, make sure the tests do not depend on one another. If there is too much dependency, you will not be able to evaluate failures without looking into the application’s code. The objective should be to write automation scripts that you can execute in any random order without any impact. If some tests do depend on each other, consider splitting them up.
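Here is a small sketch of order-independent tests: each hypothetical test builds its own data through a factory, and the runner shuffles the execution order (Fisher-Yates) on every run. If both tests shared a single cart object instead, "starts empty" would fail whenever it ran after "adds an item".

```javascript
// Factory: every test gets fresh data instead of sharing mutable state.
function createCart() {
  return { items: [] };
}

// Hypothetical tests; each returns true when it passes.
const tests = {
  "adds an item": () => {
    const cart = createCart();
    cart.items.push("book");
    return cart.items.length === 1;
  },
  "starts empty": () => createCart().items.length === 0,
};

// Fisher-Yates shuffle so the execution order changes on every run.
function shuffle(array) {
  const copy = [...array];
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(Math.random() * (i + 1));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy;
}

function runInRandomOrder(testMap) {
  const results = {};
  for (const name of shuffle(Object.keys(testMap))) {
    results[name] = testMap[name]();
  }
  return results;
}

console.log(runInRandomOrder(tests));
```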

Conclusion:

Flaky integration tests or flaky tests in general are very common when it comes to automation testing. But as a leading test automation service provider, we take the necessary steps to ensure that we don’t create flaky tests in the first place. Though it is not possible to completely avoid it, the overall flakiness can be considerably lowered. So even if we do face flakiness, the above-mentioned steps have always helped fix those issues and keep our test suites healthy.

by Anika Chakraborty | Dec 20, 2022 | Automation Testing, Blog |

Designing an effective test automation framework is an integral part of automation testing. It is important that your test automation framework has no code duplication, great readability, and is easy to maintain. There are many design patterns that you can use to achieve these goals, but in our experience, we have always found Page Object Model to be the best and would recommend you follow the same. As Cypress has been gaining a lot of popularity over the years, we will be looking at how you can implement Page Object Model in Cypress. To help you understand easily, we have even used a Cypress Page Object Model example. So let’s get started.

In a Page Object Model, the page objects are always kept separate from the automation test scripts. Cypress supports this pattern. The page files hold the page objects, and the test cases are written in separate test scripts.
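This separation can be shown without a browser. In the minimal sketch below, a hypothetical `createFakeCy` stand-in records commands instead of driving a real page. The page object owns the selectors and actions, and the test script only calls its methods. The selectors are hypothetical placeholders. In a real Cypress project, the page object would call the global `cy` directly, as the page classes later in this post do.

```javascript
// Hypothetical stand-in for Cypress's `cy`: it only records the commands it receives.
function createFakeCy() {
  const log = [];
  return {
    log,
    get(selector) {
      return {
        type(text) { log.push(`type "${text}" into ${selector}`); },
        click() { log.push(`click ${selector}`); },
      };
    },
  };
}

// Page object: owns the locators and the user actions for one page.
class LoginPage {
  constructor(cy) {
    this.cy = cy;
    this.usernameInput = "input[name='username']"; // hypothetical selectors
    this.passwordInput = "input[name='password']";
    this.loginButton = "button[type='submit']";
  }
  enterUserName(username) { this.cy.get(this.usernameInput).type(username); }
  enterPassword(password) { this.cy.get(this.passwordInput).type(password); }
  clickLoginBtn() { this.cy.get(this.loginButton).click(); }
}

// Test script: knows nothing about selectors, only the page object's methods.
const fakeCy = createFakeCy();
const loginPage = new LoginPage(fakeCy);
loginPage.enterUserName("Admin");
loginPage.enterPassword("admin123");
loginPage.clickLoginBtn();

console.log(fakeCy.log);
```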

How to Implement the Page Object Model in Cypress?

Being an experienced automation testing company, we have implemented Page Object Model in Cypress for various projects. Based on that experience, we have created a Cypress Page Object Model example that will make it easy for you to understand.

Let’s assume that we have to automate the login process in a sample page and that we have two pages (Home Page & Login Page) to focus on.

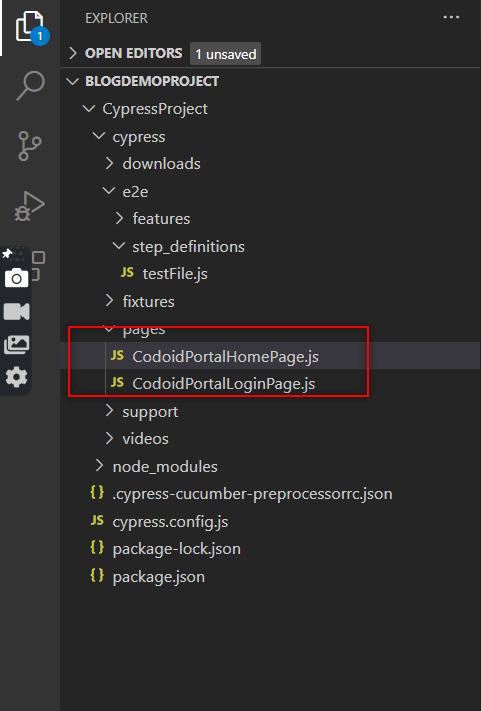

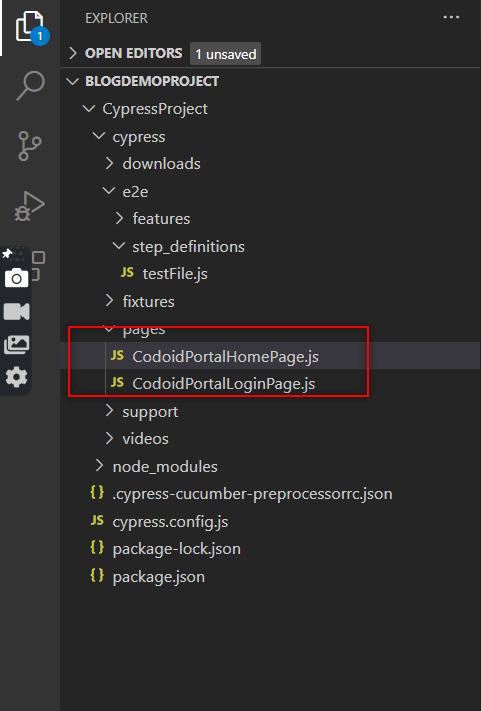

Create a Pages Folder

The first step in implementing Page Object Model in Cypress is setting up the project structure. So we have created a separate folder called ‘pages’ under the Cypress folder to store both the Home and Login pages.

In our example, both CodoidPortalHomePage.js and CodoidPortalLoginPage.js are stored under the ‘pages’ folder we created.

Add Methods & Locators

Now that we have created separate pages, we have added all the required methods related to Codoid's home page to CodoidPortalHomePage.js and the ones related to Codoid's login page to CodoidPortalLoginPage.js.

To increase reusability, we have stored the locators as variables in both page classes.

Home Page

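    // Page object for the Codoid portal home page.
    // NOTE: these selectors are identical to the login page's input selectors;
    // confirm them against the actual home page before use.
    export class CodoidPortalHomePage {
        txtBox_Search = ':nth-child(2) > .oxd-input-group > :nth-child(2) > .oxd-input'
        codoidLogo = ':nth-child(3) > .oxd-input-group > :nth-child(2) > .oxd-input'
        btnSearchIcon = '.oxd-button'

        // Types the given keyword into the search box.
        enterSearchKeyword(keyword) {
            cy.get(this.txtBox_Search).type(keyword)
        }

        // cy.get() retries until the logo element exists
        // (cy.contains() searches for text content, not for a CSS selector).
        verifyCodoidLogo() {
            cy.get(this.codoidLogo)
        }

        // Clicks the search icon.
        clickSearchIcon() {
            cy.get(this.btnSearchIcon).click()
        }
    }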

Login Page

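    // Page object for the login page: locators are stored as fields so they can be reused.
    export class CodoidPortalLoginPage {
        txtBox_UserName = ':nth-child(2) > .oxd-input-group > :nth-child(2) > .oxd-input'
        txtBox_Passwords = ':nth-child(3) > .oxd-input-group > :nth-child(2) > .oxd-input'
        btnLogin = '.oxd-button'
        txtDashBoardInHomePage = '.oxd-topbar-header-breadcrumb > .oxd-text'
        txtInvalidcredentials = 'Invalid credentials'
        subTabAdmin = 'Admin'
        txtSystemUserInAdminTab = 'System Users'

        // Types the username into the username field.
        enterUserName(username) {
            cy.get(this.txtBox_UserName).type(username)
        }

        // Types the password into the password field.
        enterPassword(password) {
            cy.get(this.txtBox_Passwords).type(password)
        }

        // Submits the login form.
        clickLoginBtn() {
            cy.get(this.btnLogin).click()
        }

        // Waits for the dashboard breadcrumb shown after a successful login.
        verifyHomePage() {
            cy.get(this.txtDashBoardInHomePage)
        }

        // Opens the Admin tab by clicking the element that contains the text 'Admin'.
        verifyAdminSection() {
            cy.contains(this.subTabAdmin).click()
        }

        // Waits for the 'System Users' text inside the Admin tab.
        verifySystemUser() {
            cy.contains(this.txtSystemUserInAdminTab)
        }
    }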

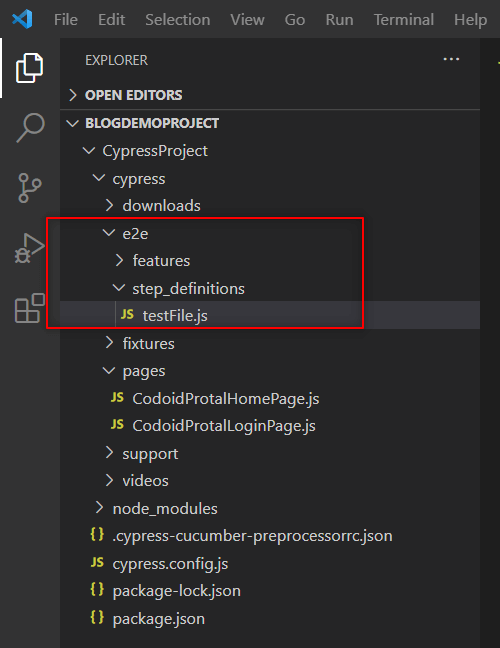

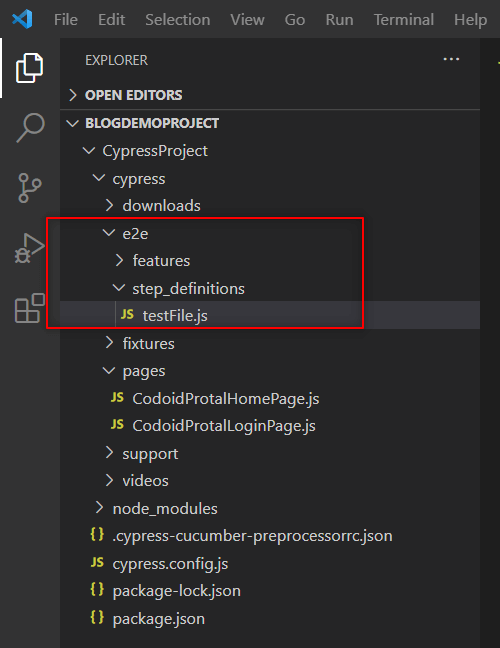

Create a Step Definition Folder

The next step in implementing Page Object Model in Cypress is to create a ‘step definitions’ folder. We have created it under the ‘e2e’ folder, which stores all the test cases. We then created a test file named ‘testFile.js’ inside the ‘step definitions’ folder.

Note: If you are using a Cypress version older than 10, create the ‘step definitions’ folder under ‘integration’ instead of ‘e2e’.

Import & Execute

The final step in implementing the Page Object Model in Cypress is to import the pages we have created so that we can access and execute their methods. So we create instances of the CodoidPortalHomePage and CodoidPortalLoginPage classes and call the respective methods as shown in the code snippet below.

    import { Given, When, Then } from "@badeball/cypress-cucumber-preprocessor";
    // Import the page object classes by the names they are exported under.
    import { CodoidPortalHomePage } from "../../pages/CodoidPortalHomePage";
    import { CodoidPortalLoginPage } from "../../pages/CodoidPortalLoginPage";

    const homePage = new CodoidPortalHomePage()
    const loginPage = new CodoidPortalLoginPage()

    Given('User is at the login page', () => {
        cy.visit('https://opensource-demo.orangehrmlive.com/')
    })

    // The {string} placeholders pass the quoted values from the feature file in as arguments.
    When('User enters username as {string} and password as {string}', (username, password) => {
        loginPage.enterUserName(username)
        loginPage.enterPassword(password)
    })

    When('User clicks on login button', () => {
        loginPage.clickLoginBtn()
    })

    // verifyHomePage() is defined on the login page object, so it is called through loginPage.
    Then('User is able to successfully login to the Website', () => {
        loginPage.verifyHomePage()
    })
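The `When` step above declares `username` and `password` parameters because of the `{string}` placeholders in its Cucumber expression. Each quoted value in the matching Gherkin line is passed to the step function as an argument. The sketch below is a deliberately simplified, hypothetical matcher (`compileExpression` and `matchStep` are illustrative names) that shows the idea. It is not how `@badeball/cypress-cucumber-preprocessor` is implemented, and real Cucumber expressions support more parameter types and escaping rules.

```javascript
// Simplified illustration: turn a Cucumber-style expression containing {string}
// placeholders into a regular expression.
function compileExpression(expression) {
  // Escape regex metacharacters in the literal text (braces are left alone so
  // that {string} survives; this sketch does not handle other braces).
  const escaped = expression.replace(/[.*+?^$()|[\]\\]/g, "\\$&");
  // Each {string} matches text inside double quotes or single quotes.
  const pattern = escaped.replace(/\{string\}/g, `(?:"([^"]*)"|'([^']*)')`);
  return new RegExp(`^${pattern}$`);
}

// Returns the extracted arguments, or null if the step text does not match.
function matchStep(expression, stepText) {
  const match = compileExpression(expression).exec(stepText);
  if (!match) return null;
  const groups = match.slice(1);
  const args = [];
  // Each {string} produced two groups (double-quoted, single-quoted); keep the one that matched.
  for (let i = 0; i < groups.length; i += 2) {
    args.push(groups[i] !== undefined ? groups[i] : groups[i + 1]);
  }
  return args;
}

const expression = "User enters username as {string} and password as {string}";
console.log(matchStep(expression, 'User enters username as "Admin" and password as "admin123"'));
// [ 'Admin', 'admin123' ]
console.log(matchStep(expression, "User clicks on login button")); // null
```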

Now that we have implemented Page Object Model in Cypress, it's time to execute the test file. You can open Cypress by running the following command in the terminal:

    npx cypress open

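Once you have opened Cypress, all you have to do is run the spec. Since the steps use the Cucumber preprocessor, the spec you select is the .feature file whose steps are implemented in testFile.js.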

Best Practices for Page Object Model in Cypress

Assertions should not be included in page objects

When building end-to-end test scripts, aim to include only one main assertion or a set of assertions per script. Additionally, you should not place assertions in any of the functions provided by your page objects.
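Here is a minimal sketch of that split. It assumes a hypothetical `DashboardPage` class that reads from an in-memory object instead of a real DOM, and the "Dashboard" text is an illustrative value. The page object method only reports what is on the page, and the pass/fail decision lives in the test. In Cypress, the equivalent is a page object method that returns the `cy.get(...)` chain so the spec can call `.should(...)` on it.

```javascript
// Hypothetical in-memory "page" standing in for the browser DOM.
const fakeDom = { ".oxd-topbar-header-breadcrumb > .oxd-text": "Dashboard" };

class DashboardPage {
  constructor(dom) {
    this.dom = dom;
    this.header = ".oxd-topbar-header-breadcrumb > .oxd-text";
  }

  // Page object: reads the page, but makes no pass/fail decision.
  getHeaderText() {
    return this.dom[this.header];
  }
}

// Test: owns the assertion, so the expected value is visible in the test itself.
function testDashboardHeader() {
  const page = new DashboardPage(fakeDom);
  const actual = page.getHeaderText();
  if (actual !== "Dashboard") {
    throw new Error(`expected "Dashboard", got "${actual}"`);
  }
  return "passed";
}

console.log(testDashboardHeader()); // passed
```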

Identify the page object class by using a clear name

It’s important to make sure the name you choose makes it 100% clear what’s inside the page object. If there is a scenario where you are unable to pinpoint what the page object does, then it is a sign that the page object does too much. So make sure to keep your page objects focused on particular functions.

Methods in the page class should interact with the HTML pages or components

Make sure your page class has only the methods that an end user can use to interact with the web application.

Use Locators as variables

There is a high chance that an element's locator (its XPath or CSS selector) will change in the future. If you store the locator in a variable, you only need to update it in one file, and the change applies to every file that uses that locator.
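The benefit can be shown in plain JavaScript. This is a sketch that assumes a hypothetical shared `locators` object, with two helpers standing in for page object methods. Changing one entry updates every consumer, because each helper reads the variable when it runs instead of keeping its own copy of the selector.

```javascript
// Hypothetical shared locator map: each selector is defined exactly once.
const locators = {
  loginButton: ".oxd-button",
};

// Helpers standing in for page object methods. In Cypress they would call
// cy.get(locators.loginButton); here they just describe the action they would take.
const clickLogin = () => `click ${locators.loginButton}`;
const retryLogin = () => `click ${locators.loginButton} again`;

console.log(clickLogin()); // click .oxd-button

// The UI changes: update the selector in one place...
locators.loginButton = "button[type='submit']";

// ...and every helper that reads the variable picks up the new value.
console.log(clickLogin()); // click button[type='submit']
console.log(retryLogin()); // click button[type='submit'] again
```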

Separate the Verification Steps

Make sure you separate the UI operations and flow from the verification steps to keep your code clean and easy to understand.

Conclusion:

So in addition to using a Cypress Page Object Model example, we have also listed the best practices that you can use to implement the same in your project. Implementing Page Object Model in Cypress will make it very easy when you have to scale your project and make maintaining it seamless as well. Being a leading automation testing service provider, we will be publishing such insightful blogs regularly. So make sure you subscribe to our newsletter to never miss out.

by Hannah Rivera | Dec 13, 2022 | Automation Testing, Blog |

JetBrains (formerly IntelliJ Software) is a Czech software development company that specializes in tools for programmers and project managers. JetBrains has developed many popular Integrated Development Environments (IDEs) such as IntelliJ IDEA, PyCharm, and WebStorm. Their IDEs support various programming languages such as Java, Groovy, Kotlin, Ruby, Python, PHP, C, Objective-C, C++, C#, F#, Go, JavaScript, and SQL. Aqua is the newest addition to their lineup. It is a powerful IDE built with test automation in focus, and a treat for QA and test engineering professionals in modern software development. Being a leading automation testing company, we are always on the lookout for new tools and technologies that can help us enhance our testing process. So in this blog, we will be focusing on JetBrains Aqua and the features that make it a great IDE for testers.

Aqua has many features that a test automation engineer needs on a daily basis, including:

- A Multi-language IDE (with support for JVM, Python, JavaScript, and so on)

- A new, powerful web inspector for UI automation

- Built-in HTTP client for API Testing

- Database management functionality

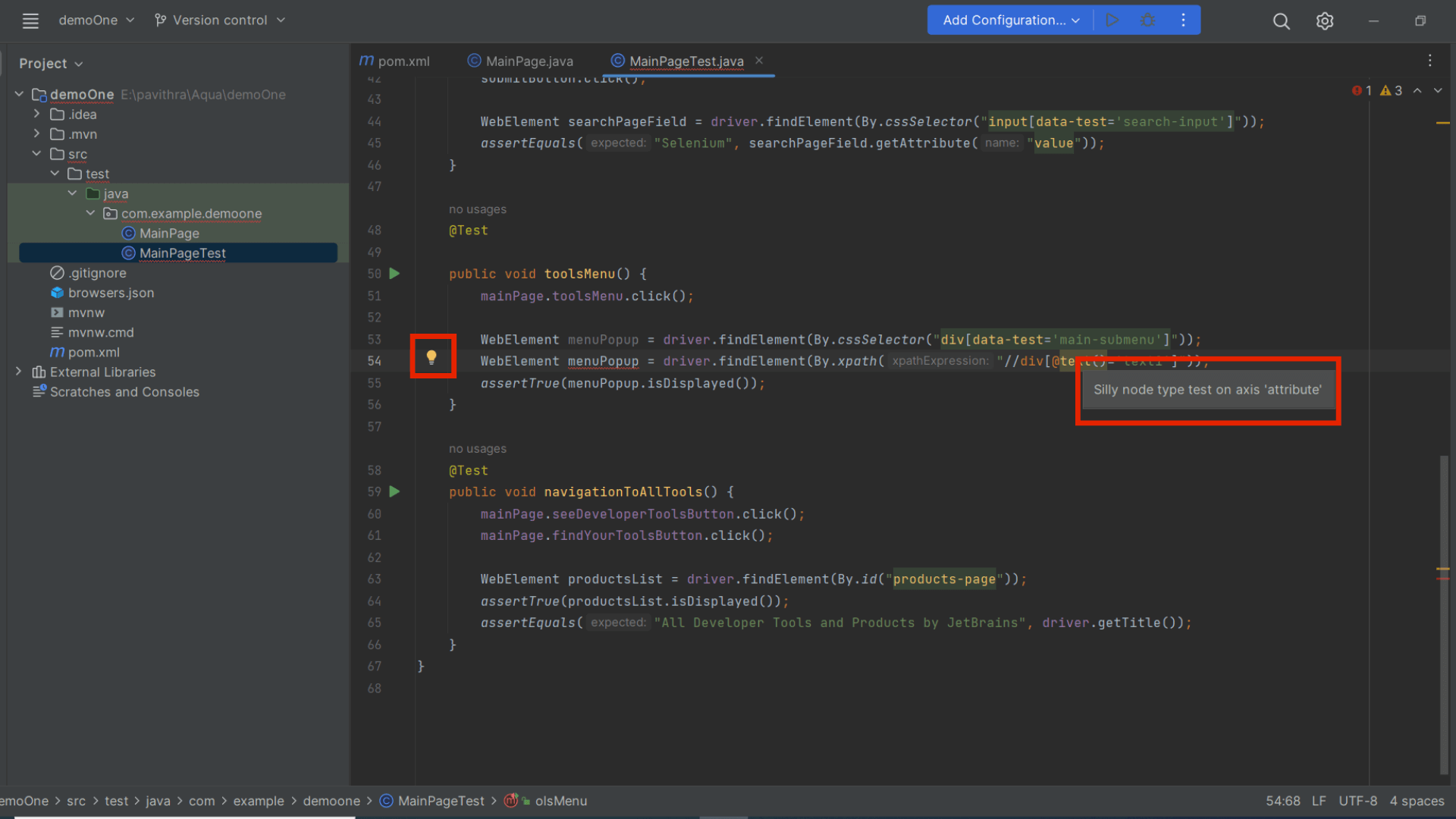

Intelligent Coding Assistance

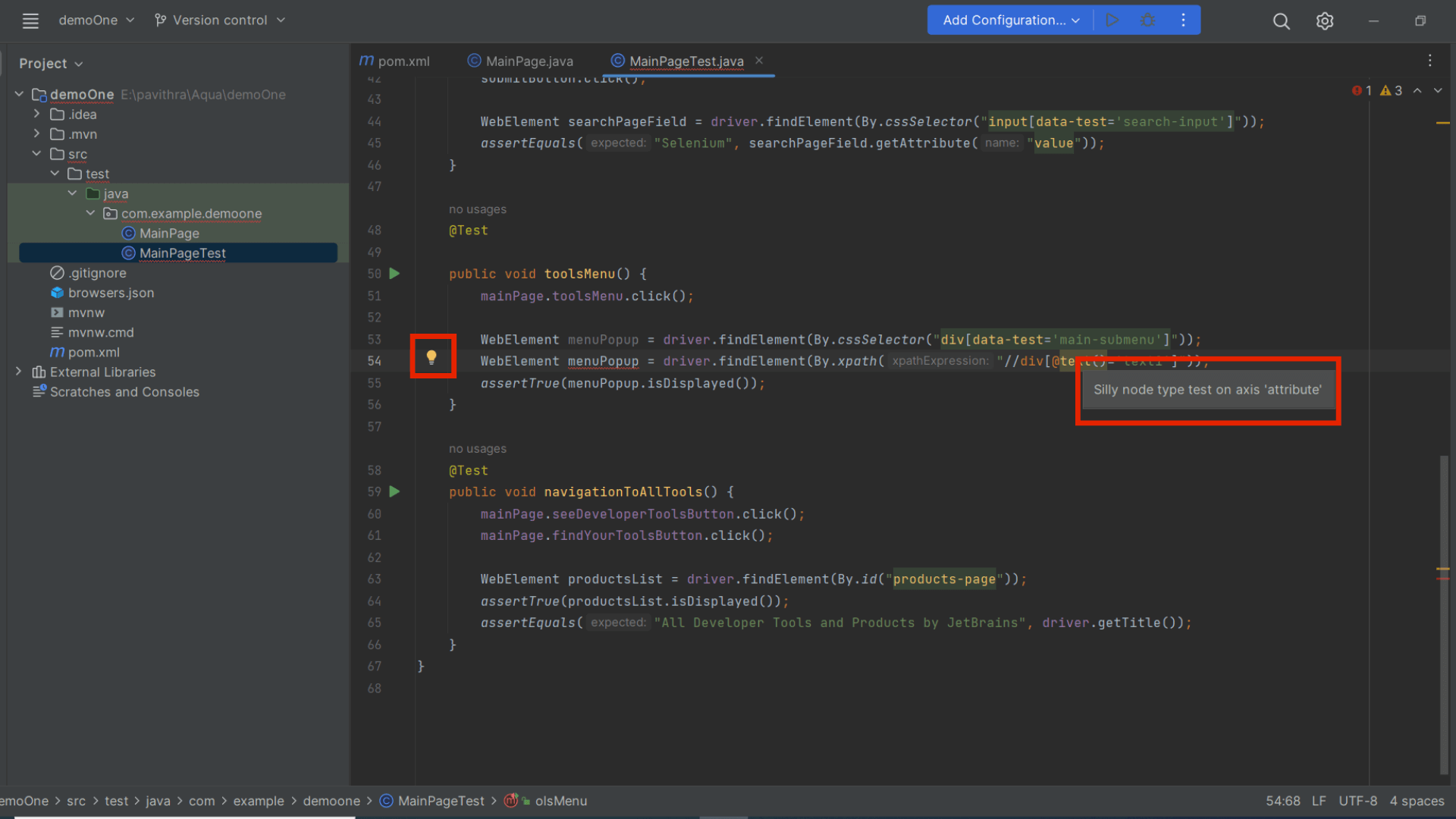

As with other JetBrains IDEs, Aqua checks your code on-the-fly for quality and validity. If issues are found, the IDE suggests context actions to help you resolve them. As of writing this blog, Aqua provides intelligent coding assistance for Java, Kotlin, Python, JavaScript, TypeScript, and SQL. To use the context action, click the light bulb icon (or press Alt+Enter).

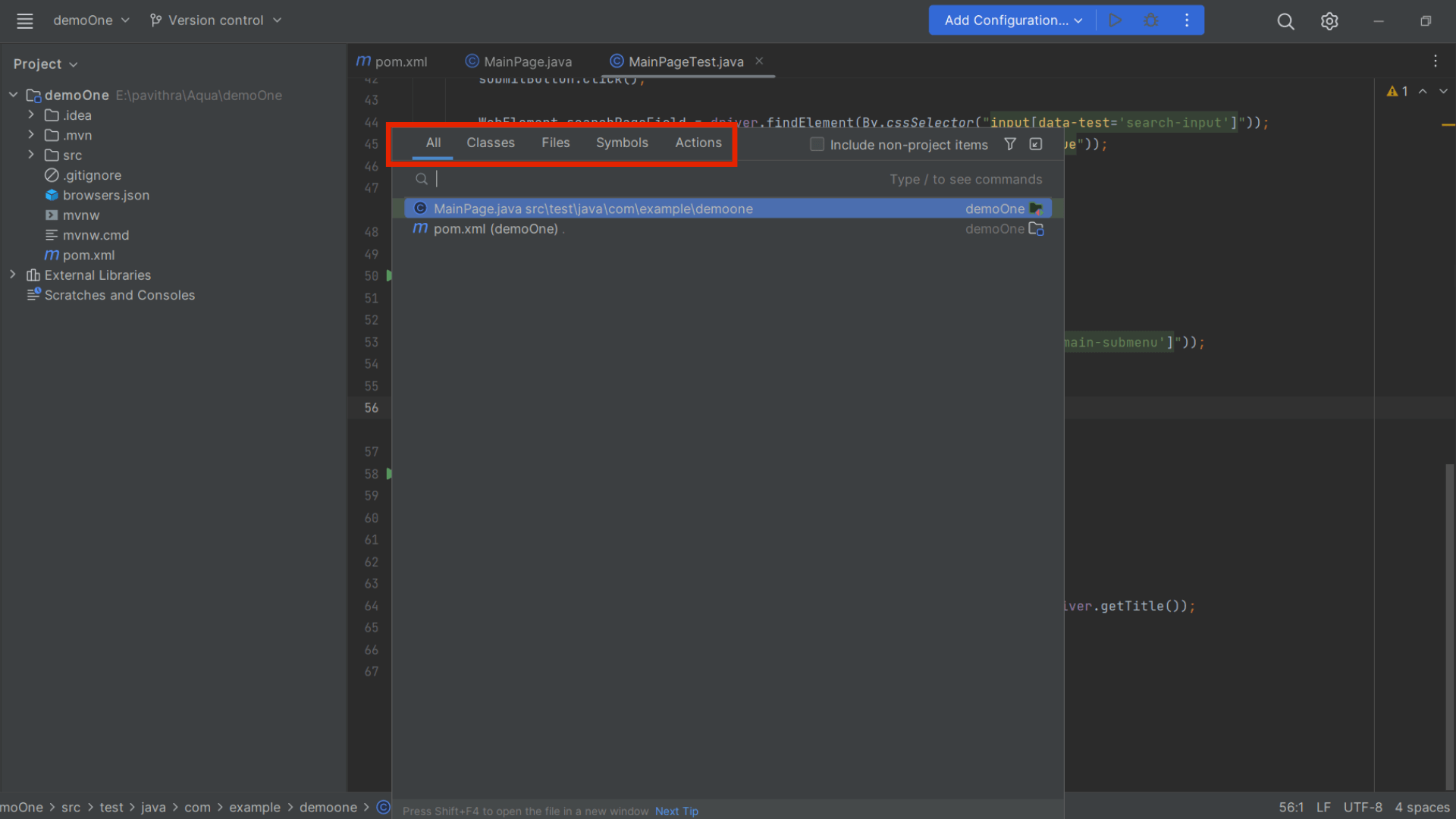

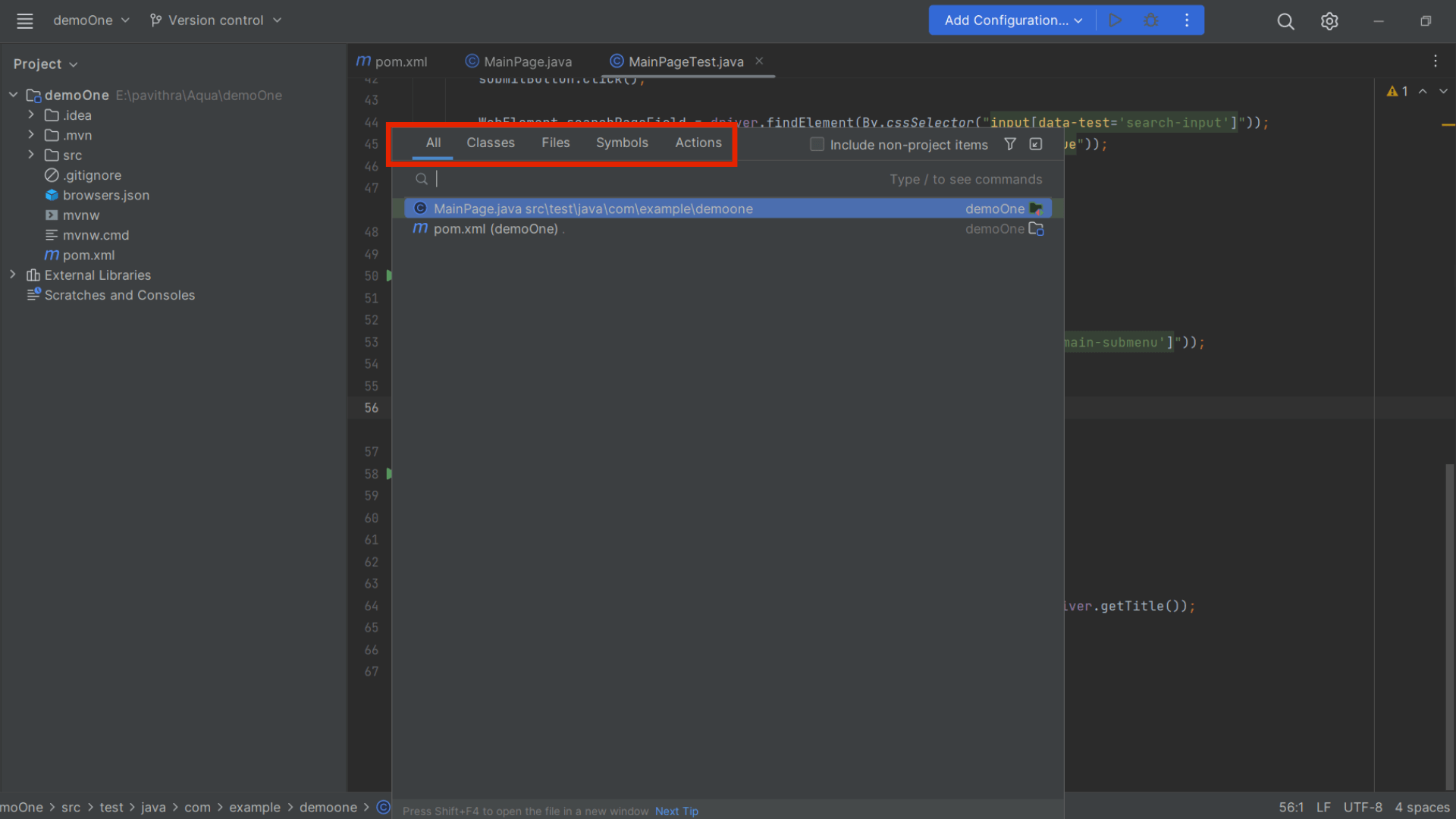

In addition, pressing Shift twice (Search Everywhere) lets you search across classes, files, actions, and databases.

Starting a New Project

Creating a new project in JetBrains Aqua is simple, as you can choose the build tool (Maven or Gradle), test runner (JUnit or TestNG), JDK, and language for the project. To make it even easier, JetBrains Aqua provides a sample script when you create a project for the first time.

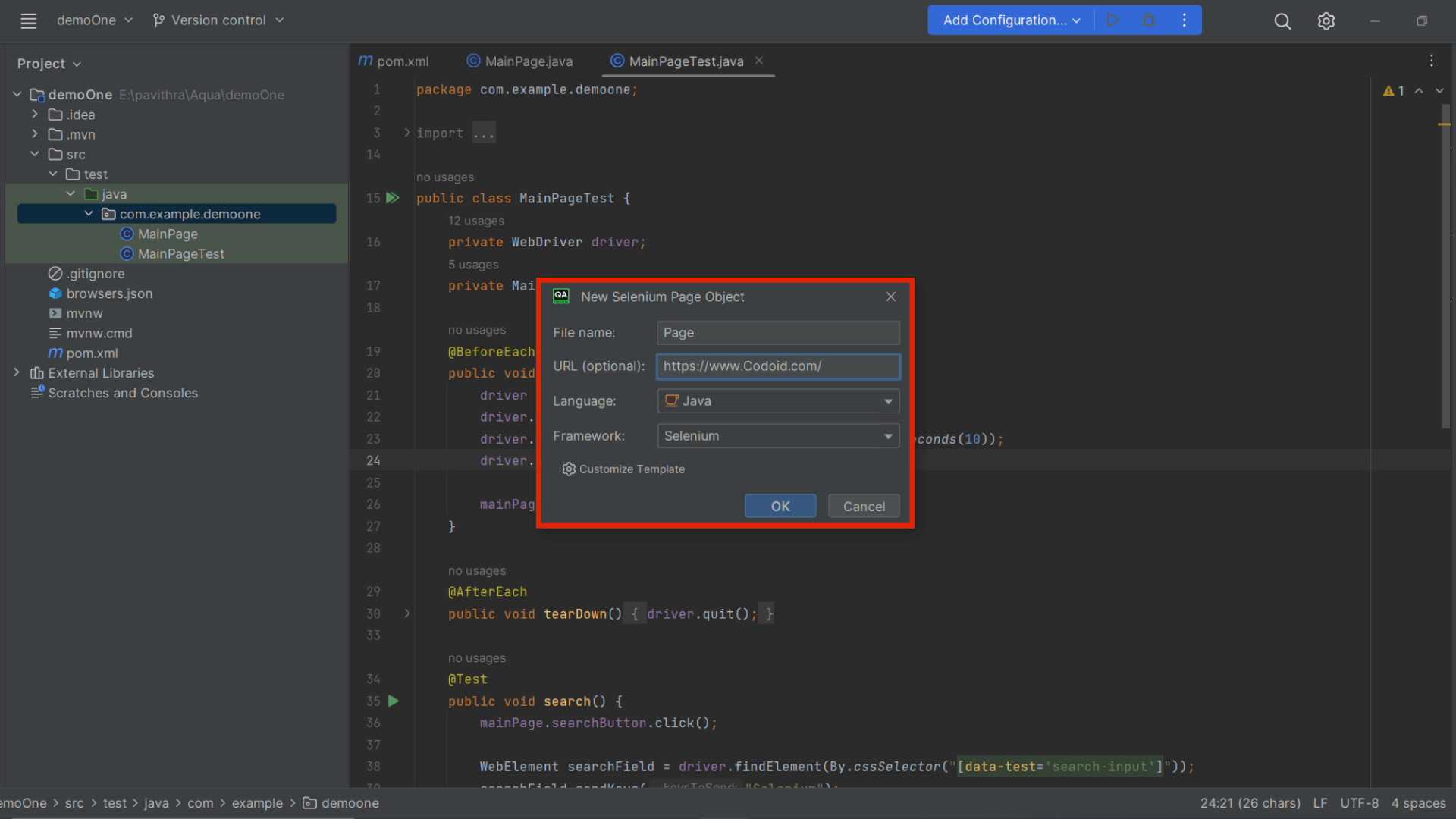

Aqua supports JUnit, TestNG, Pytest, Jest, Mocha, and other popular frameworks for writing, running, and debugging unit tests. JetBrains Aqua also provides code insights for CSS selectors, XPath expressions, and many libraries used in UI testing.

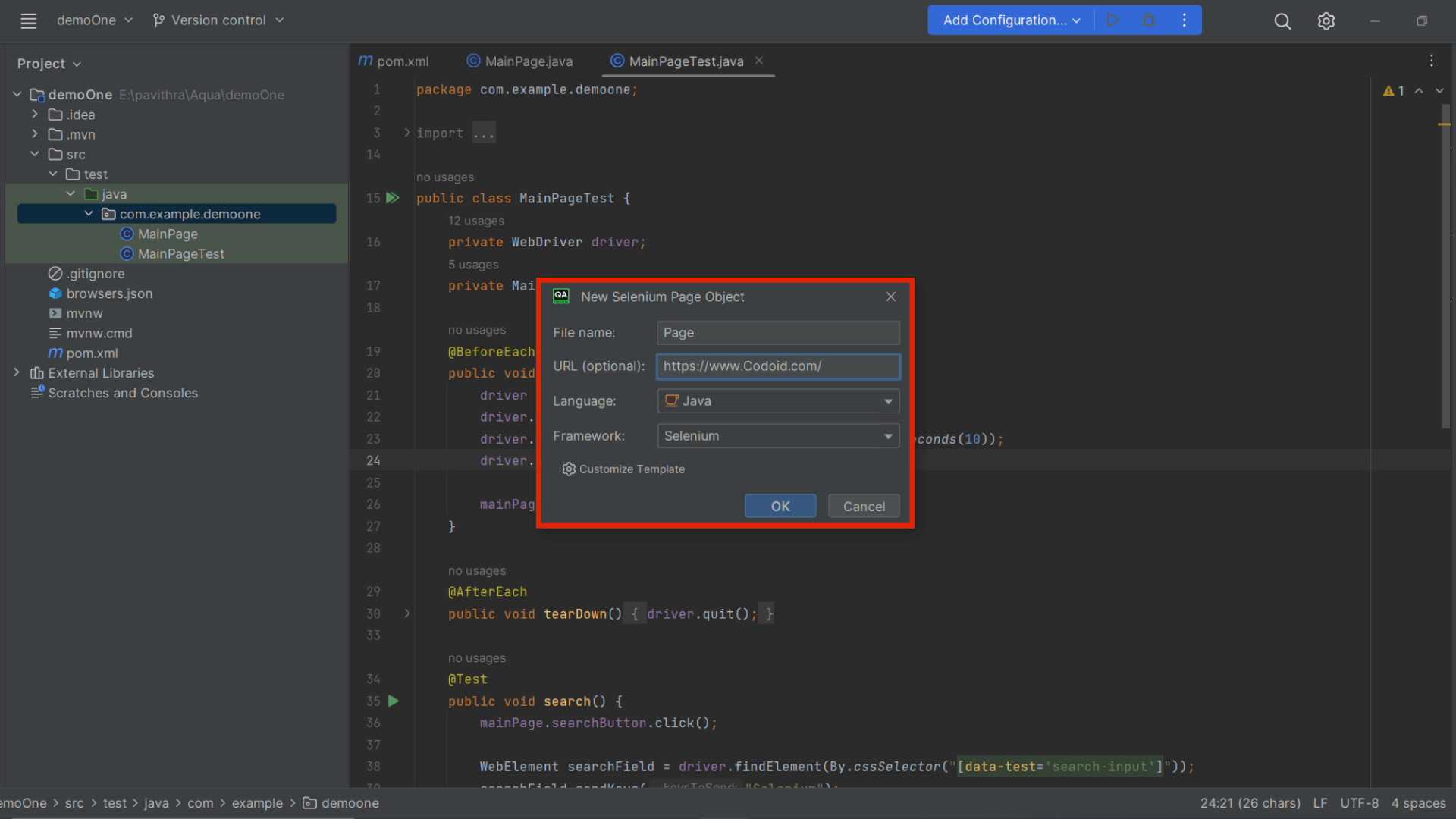

You can choose the page object pattern you want to use in the IDE, and JetBrains Aqua will use the corresponding pattern when adding the locators. Though the URL field is optional, it is useful when identifying the locators.

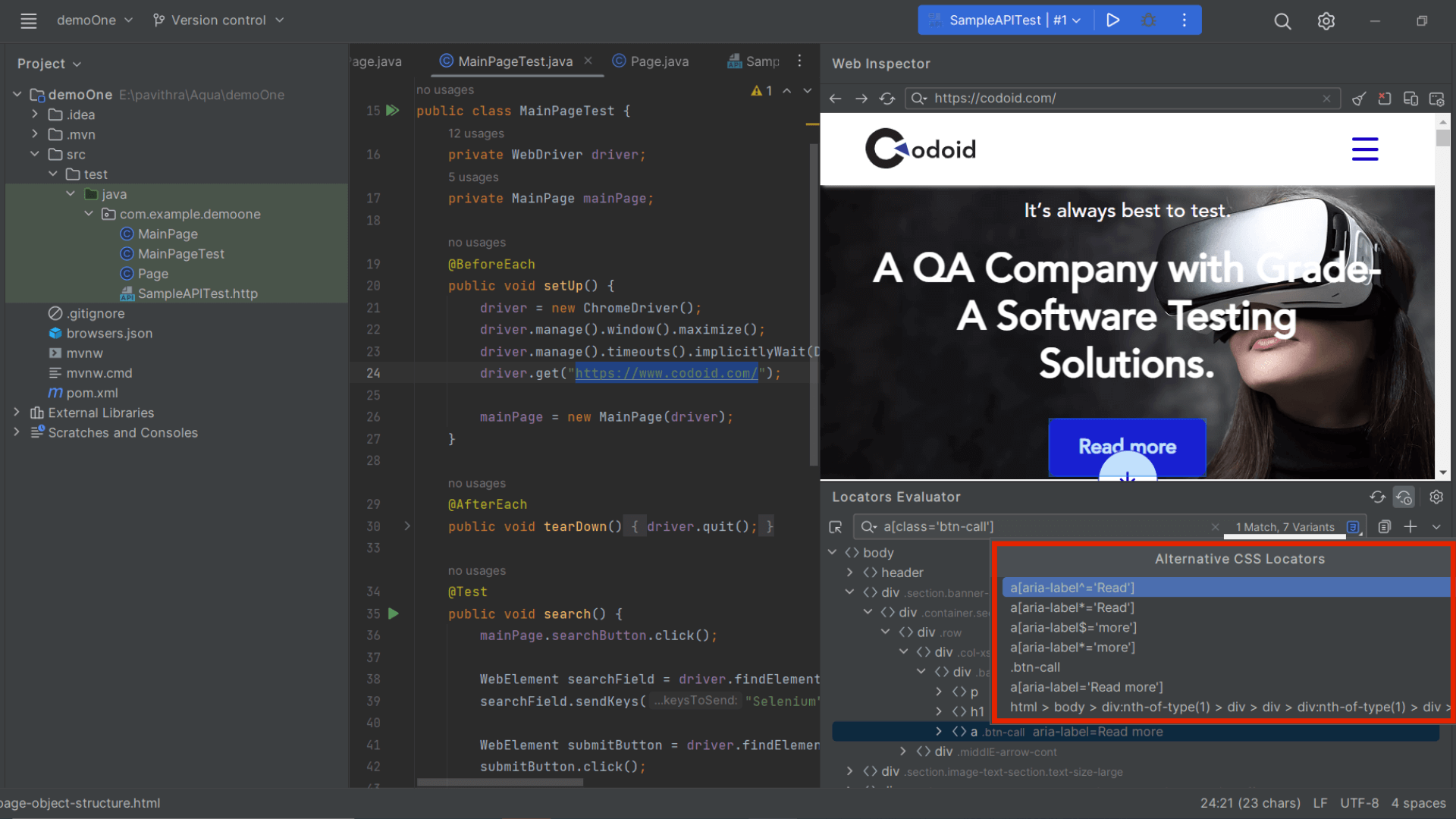

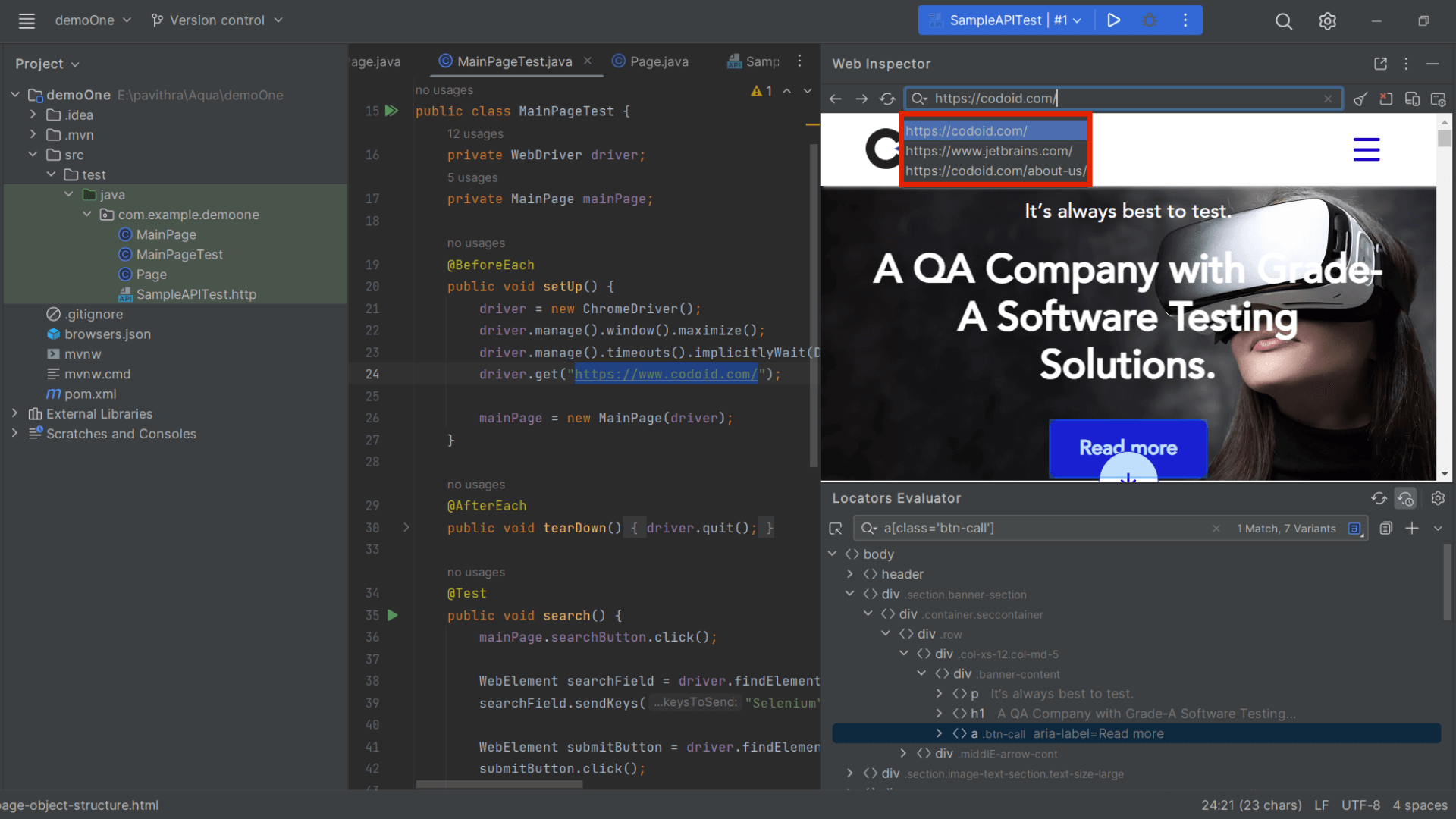

Web Inspector

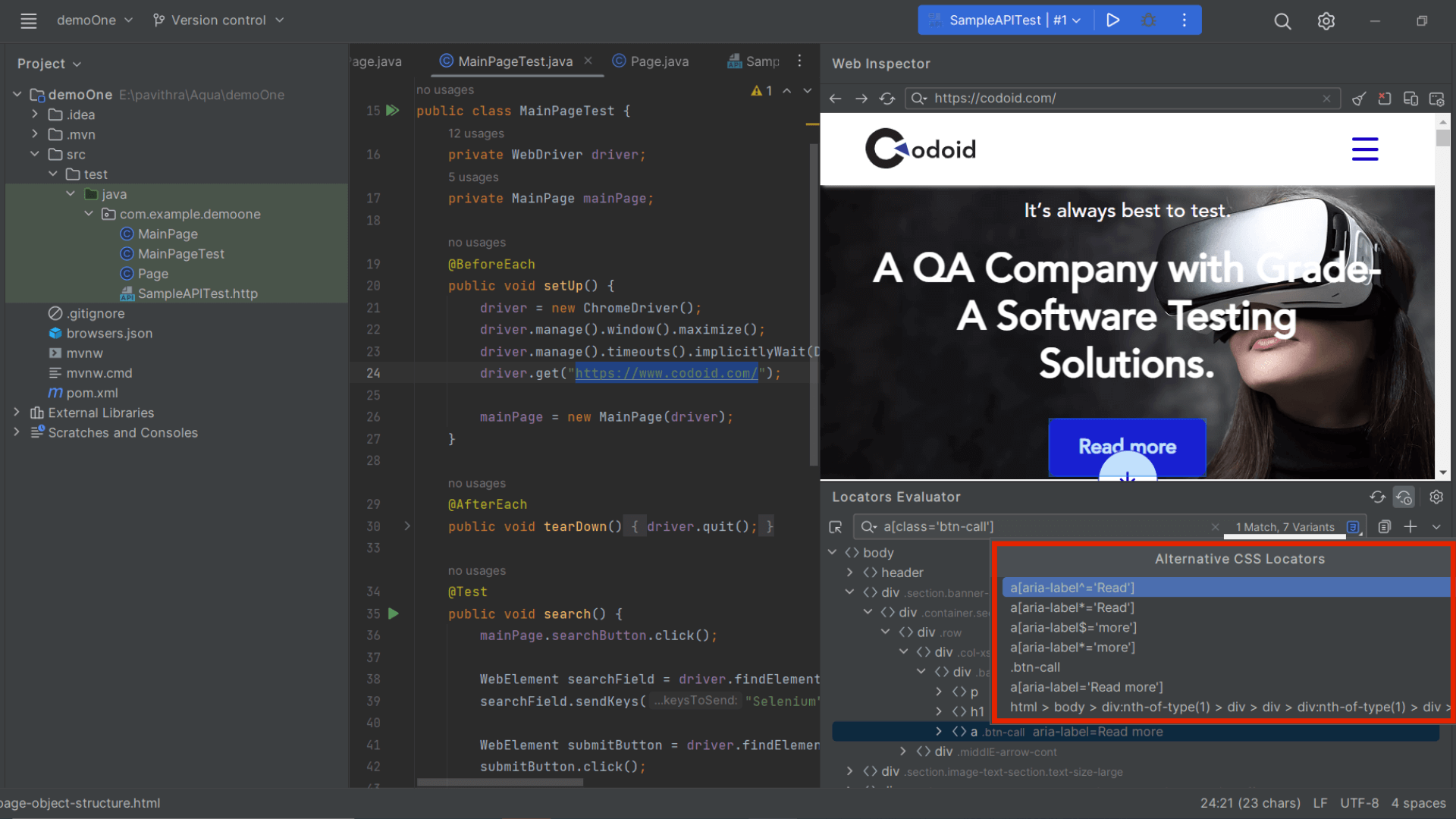

With the built-in Web Inspector in JetBrains Aqua, you can explore the web application you want to automate and collect the required page elements. Aqua generates CSS or XPath locators for the chosen elements and helps you add them to the source code.

The best part is that it assigns a meaningful name to each web element you choose instead of a random one. A web element can often be matched by more than one unique XPath or CSS selector, so the Web Inspector fetches all the available variations as shown in the screenshot below.

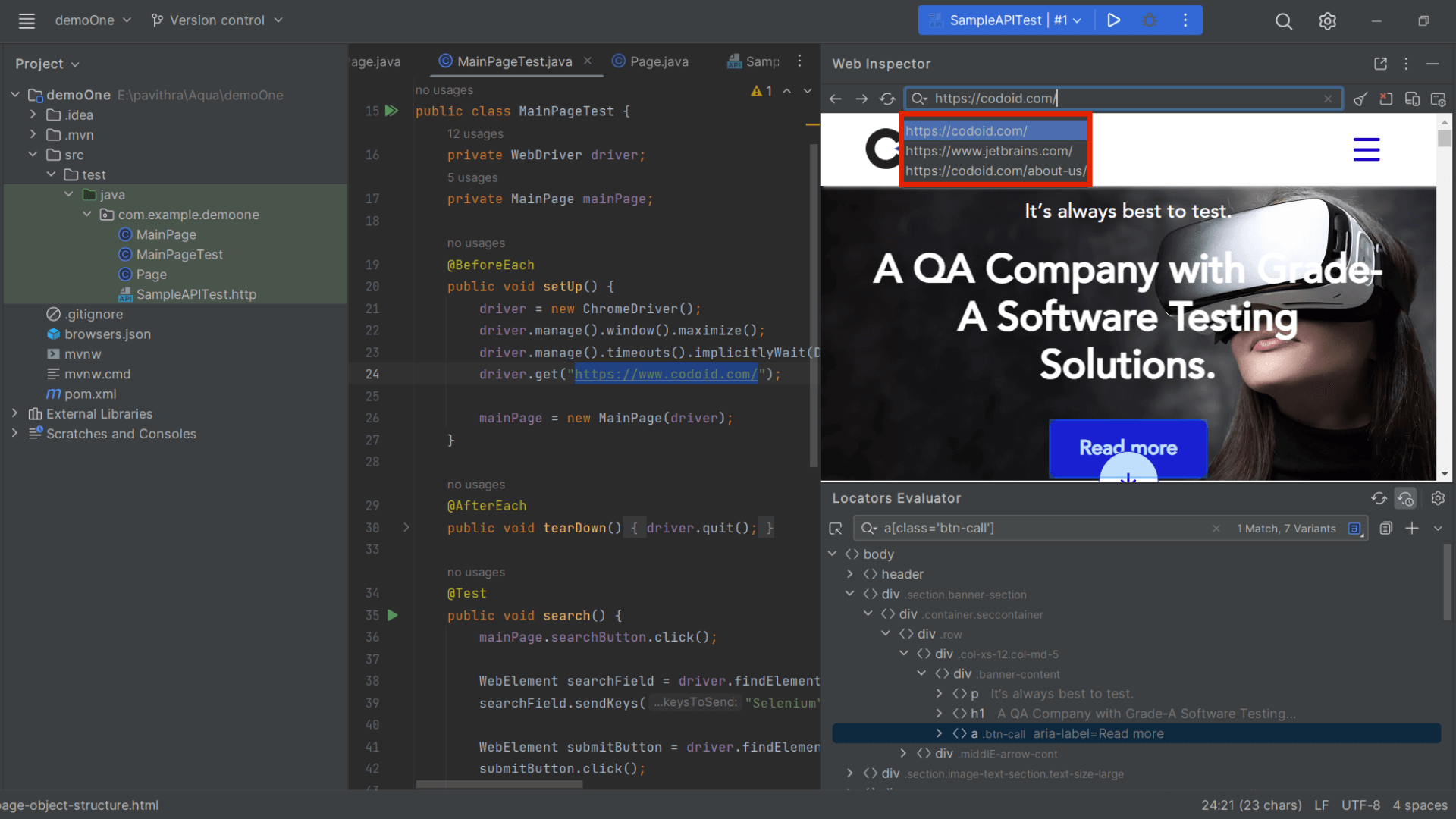

If you are working with multiple sites, the web inspector has a history feature that will come in handy. You can use it to select the site you want from the drop-down in the search bar as shown below.

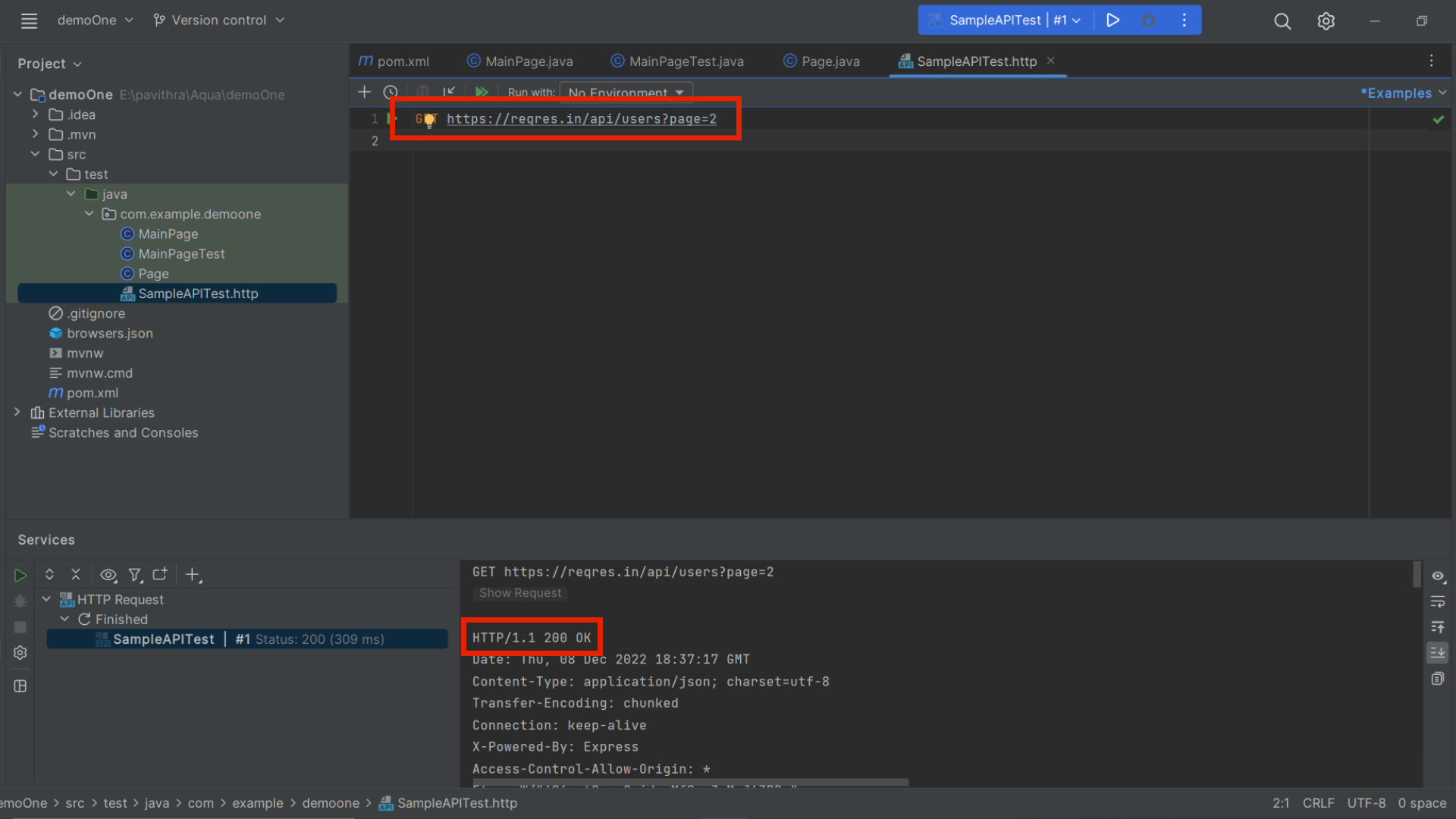

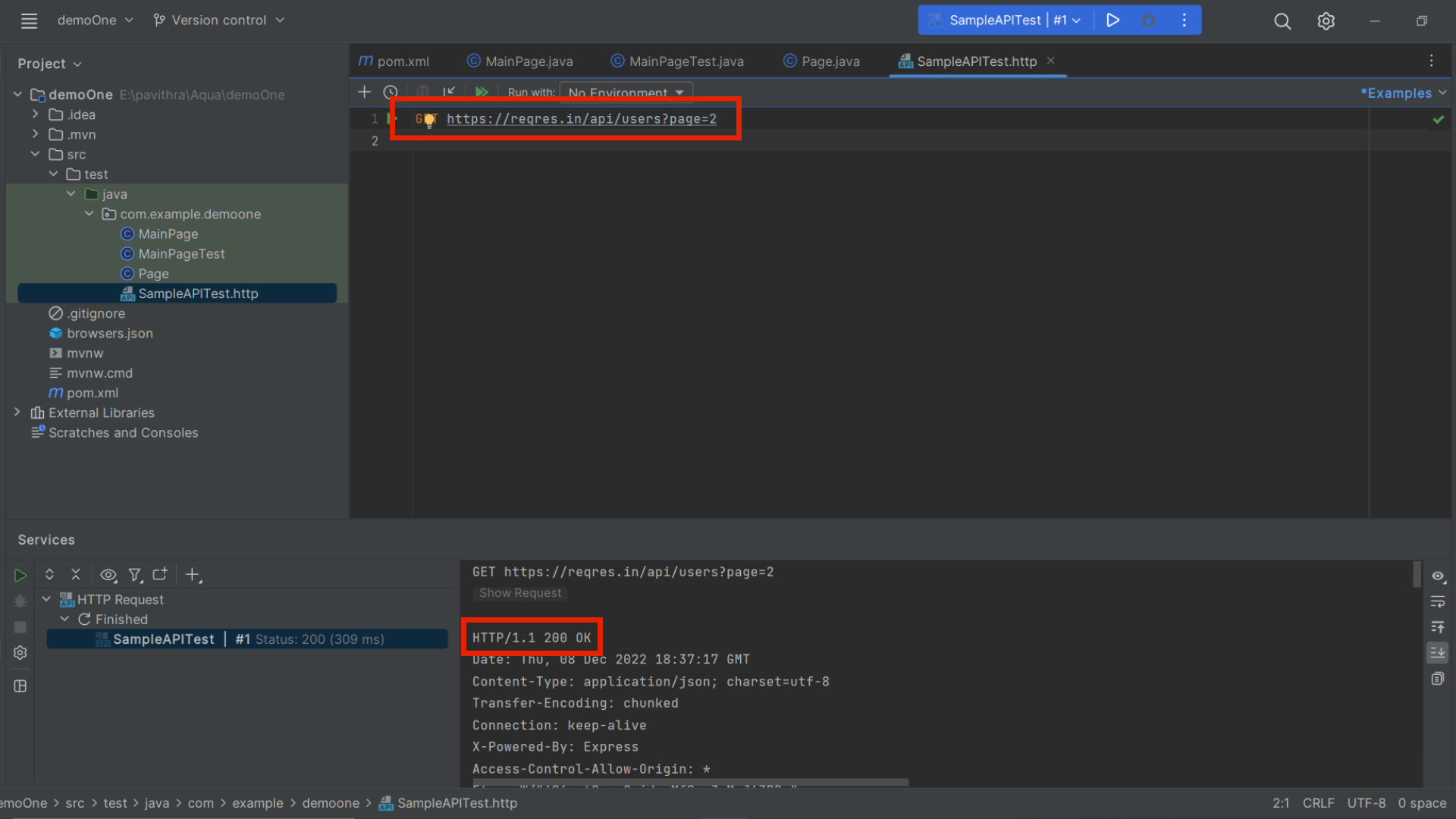

HTTP Client

Every web service sends and receives numerous HTTP requests. As JetBrains Aqua has a built-in HTTP client, you can create and edit these requests right in the IDE. This lets you perform API tests using HTTP methods such as GET, POST, and PUT and check the response body and response code.

In our example, we used the GET method and received 200 as the response code.

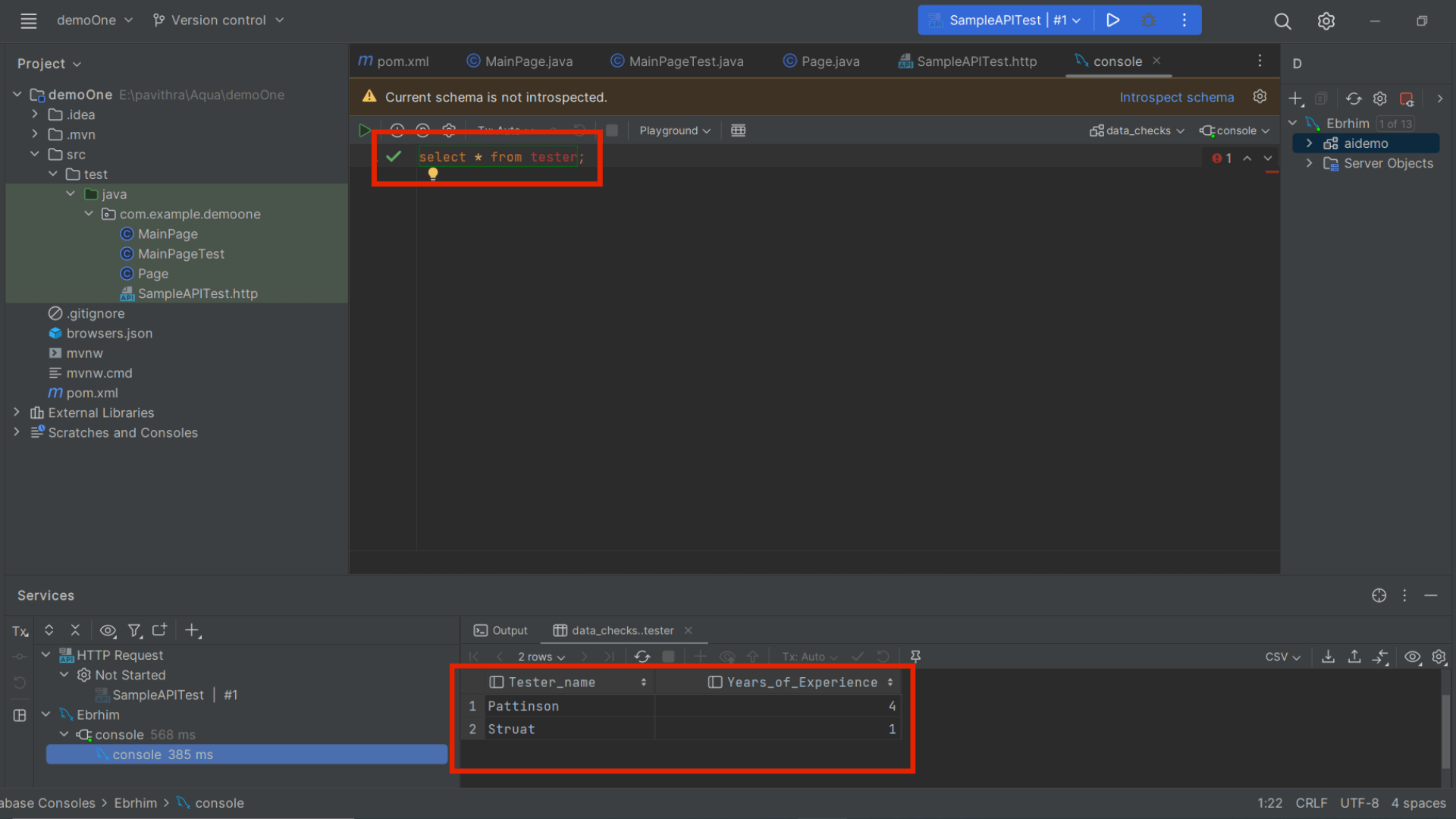

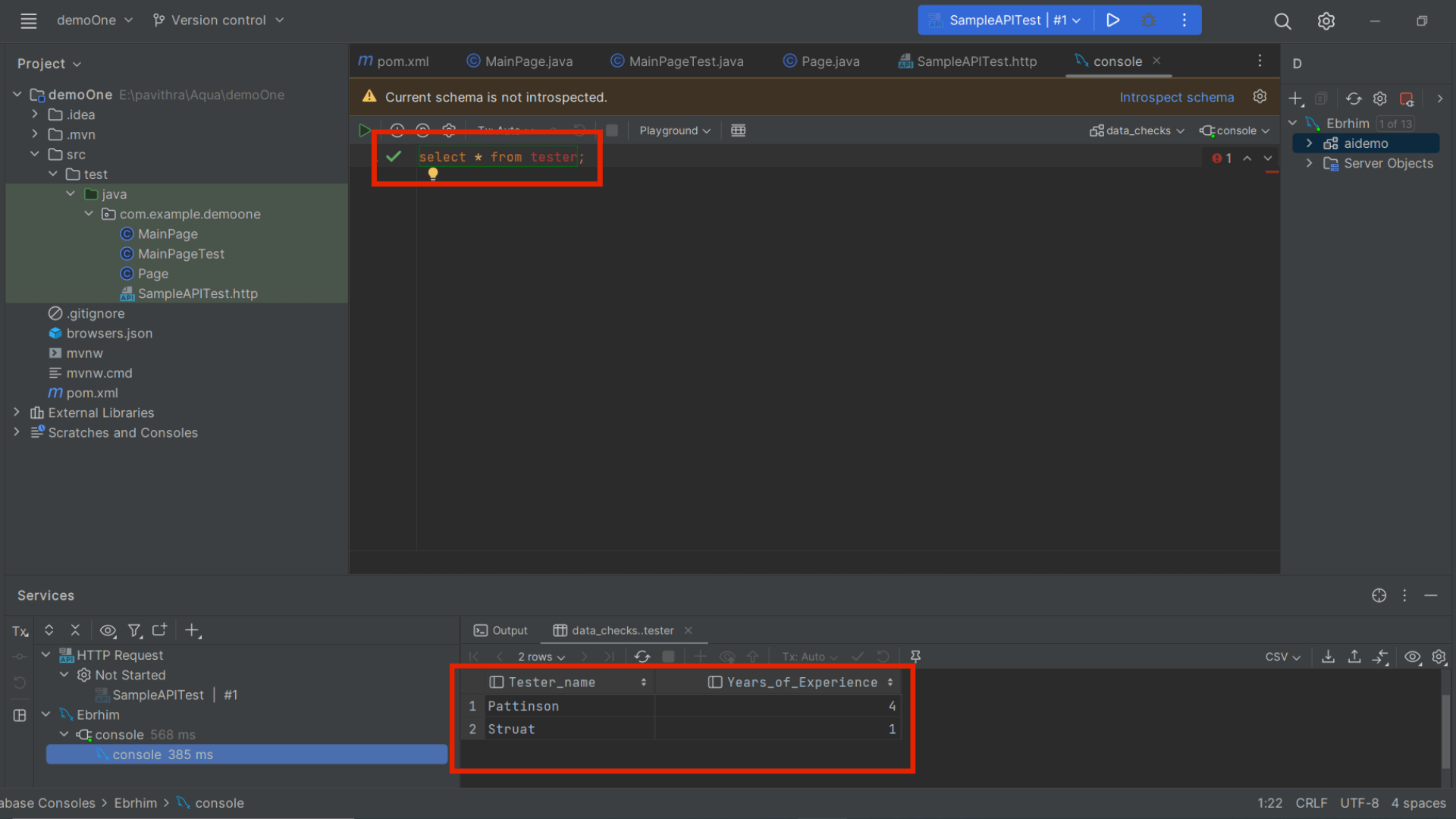

Database Management

If you perform automated data analytics testing, you'll love this feature in JetBrains Aqua. Using Aqua, you can handle multiple databases, develop SQL scripts, and perform data assertions to a certain extent right from the IDE. You can connect to live databases, run and validate the required queries, export data, and manage schemas through a visual interface. Supported databases include well-known ones such as Oracle, SQL Server, PostgreSQL, and MySQL.

In our example, we connected to a SQL Server database and used a SELECT query to extract the required details.

Conclusion

Even if you have existing Maven, Gradle, or Eclipse projects, you can import them into JetBrains Aqua and make use of all these new features. As one of the leading automation testing companies in the industry, we are excited to use JetBrains Aqua in our projects. Hopefully, we have explained the features of JetBrains Aqua in a way that encourages you to use it as well. Subscribe to our newsletter to stay updated with all the latest test automation tools, methods, and so on.