by Charlotte Johnson | Feb 24, 2025 | AI Testing, Blog, Latest Post |

Modern web browsers have evolved tremendously, offering powerful tools that assist developers and testers in debugging and optimizing applications. Among these, Google Chrome DevTools stands out as an essential toolkit for inspecting websites, monitoring network activity, and refining the user experience. With continuous improvements in browser technology, Chrome DevTools now includes AI Assistant, an intelligent feature that enhances the debugging process by providing AI-powered insights and solutions. This addition makes it easier for testers to diagnose issues, optimize web applications, and ensure a seamless user experience.

In this guide, we will explore how AI Assistant can be used in Chrome DevTools, particularly in the Network and Elements tabs, to assist in API testing, UI validation, accessibility checks, and performance improvements.

Uses of the AI Assistant Tool in Chrome DevTools

Chrome DevTools offers a wide range of tools for inspecting elements, monitoring network activity, analyzing performance, and ensuring security compliance. Among these, the AI Ask Assistant stands out by providing instant, AI-driven insights that simplify complex debugging tasks.

1. Debugging API and Network Issues

Problem: API requests fail, take too long to respond, or return unexpected data.

How AI Helps:

- Identifies HTTP errors (404 Not Found, 500 Internal Server Error, 403 Forbidden).

- Detects CORS policy violations, incorrect API endpoints, or missing authentication tokens.

- Suggests ways to optimize API performance by reducing payload size or caching responses.

- Highlights security concerns in API requests (e.g., unsecured tokens, mixed content issues).

- Compares actual API responses with expected values to validate data correctness.
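To make the CORS item concrete, here is a minimal Python sketch of the kind of check involved. It is a simplification and an assumption on our part, not how DevTools works internally: it ignores preflight requests and only looks at the two response headers named in the code. The function name `cors_allows` is hypothetical.

```python
def cors_allows(origin, response_headers, with_credentials=False):
    """Rough check: would these response headers let `origin` read the response?"""
    # HTTP header names are case-insensitive, so normalize them first.
    headers = {name.lower(): value.strip() for name, value in response_headers.items()}
    allowed = headers.get("access-control-allow-origin")
    if allowed is None:
        return False  # no CORS header at all
    if allowed == "*":
        # Browsers reject the wildcard for credentialed requests.
        return not with_credentials
    if allowed != origin:
        return False
    if with_credentials:
        return headers.get("access-control-allow-credentials") == "true"
    return True


print(cors_allows("https://app.example", {"Access-Control-Allow-Origin": "*"}))
```

A helper like this can be handy in API tests for asserting that an endpoint is reachable from the front-end origin you expect.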

2. UI Debugging and Fixing Layout Issues

Problem: UI elements are misaligned, invisible, or overlapping.

How AI Helps:

- Identifies hidden elements caused by display: none or visibility: hidden.

- Analyzes CSS conflicts that lead to layout shifts, broken buttons, or unclickable elements.

- Suggests fixes for responsiveness issues affecting mobile and tablet views.

- Diagnoses z-index problems where elements are layered incorrectly.

- Checks for flexbox/grid misalignments causing inconsistent UI behavior.

3. Performance Optimization

Problem: The webpage loads too slowly, affecting user experience and SEO ranking.

How AI Helps:

- Identifies slow-loading resources, such as unoptimized images, large CSS/JS files, and third-party scripts.

- Suggests image compression and lazy loading to speed up rendering.

- Highlights unnecessary JavaScript execution that may be slowing down interactivity.

- Recommends caching strategies to improve page speed and reduce server load.

- Detects render-blocking elements that delay the loading of critical content.
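The same kind of triage can be scripted outside the browser. The sketch below assumes you have exported the Network log as a HAR file and loaded it with `json.load`; the thresholds and the function name `slow_resources` are illustrative choices, not recommended values.

```python
def slow_resources(har, min_ms=500.0, min_bytes=200_000):
    """Return (url, reasons) pairs for entries that look slow or large."""
    flagged = []
    for entry in har["log"]["entries"]:
        url = entry["request"]["url"]
        duration_ms = entry.get("time", 0)  # total elapsed time in milliseconds
        size = entry["response"].get("content", {}).get("size", 0)  # body size in bytes
        reasons = []
        if duration_ms >= min_ms:
            reasons.append("slow")
        if size >= min_bytes:
            reasons.append("large")
        if reasons:
            flagged.append((url, reasons))
    return flagged


# Tiny hand-written HAR fragment used only for illustration.
sample_har = {"log": {"entries": [
    {"request": {"url": "https://example.com/app.js"}, "time": 120.0,
     "response": {"content": {"size": 350_000}}},
    {"request": {"url": "https://example.com/hero.png"}, "time": 900.0,
     "response": {"content": {"size": 50_000}}},
    {"request": {"url": "https://example.com/style.css"}, "time": 40.0,
     "response": {"content": {"size": 8_000}}},
]}}
for url, reasons in slow_resources(sample_har):
    print(url, reasons)
```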

4. Accessibility Testing

Problem: The web application does not comply with WCAG (Web Content Accessibility Guidelines).

How AI Helps:

- Identifies missing alt text for images, affecting screen reader users.

- Highlights low color contrast issues that make text hard to read.

- Suggests adding ARIA roles and labels to improve assistive technology compatibility.

- Ensures proper keyboard navigation, making the site accessible for users who rely on tab-based navigation.

- Detects form accessibility issues, such as missing labels or incorrectly grouped form elements.
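As an illustration of the first check in this list, the following sketch scans an HTML string for `<img>` tags that have no `alt` attribute, using only the Python standard library. It is a simplified stand-in, not the assistant's own logic. An empty `alt=""` is treated as acceptable here, since that is how decorative images are normally marked.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attr_map = dict(attrs)
            if "alt" not in attr_map:
                self.missing.append(attr_map.get("src", "<no src>"))


def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


page = '<img src="logo.png" alt="Logo"><img src="banner.png"><img src="spacer.png" alt="">'
print(images_missing_alt(page))
```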

5. Security and Compliance Checks

Problem: The website has security vulnerabilities that could expose sensitive user data.

How AI Helps:

- Detects insecure HTTP requests that should use HTTPS.

- Highlights CORS misconfigurations that may expose sensitive data.

- Identifies missing security headers, such as Content-Security-Policy, X-Frame-Options, and Strict-Transport-Security.

- Flags exposed API keys or credentials in the network logs.

- Suggests best practices for secure authentication and session management.
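The missing-header check is simple to reproduce in a test script. This sketch takes a response-header mapping (for example, copied from the Network tab) and reports which of the three headers named above are absent. The function name is hypothetical, and the list of required headers is only the one mentioned in this article, not a complete security baseline.

```python
REQUIRED_HEADERS = (
    "Content-Security-Policy",
    "X-Frame-Options",
    "Strict-Transport-Security",
)


def missing_security_headers(response_headers):
    """Return the required headers that are absent (names compared case-insensitively)."""
    present = {name.lower() for name in response_headers}
    return [header for header in REQUIRED_HEADERS if header.lower() not in present]


observed = {"content-security-policy": "default-src 'self'", "Content-Type": "text/html"}
print(missing_security_headers(observed))
```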

6. Troubleshooting JavaScript Errors

Problem: JavaScript errors are causing unexpected behavior in the web application.

How AI Helps:

- Analyzes console errors and suggests fixes.

- Identifies undefined variables, syntax errors, and missing dependencies.

- Helps debug event listeners and asynchronous function execution.

- Suggests ways to optimize JavaScript performance to avoid slow interactions.

7. Cross-Browser Compatibility Testing

Problem: The website works fine in Chrome but breaks in Firefox or Safari.

How AI Helps:

- Highlights CSS properties that may not be supported in some browsers.

- Detects JavaScript features that are incompatible with older browsers.

- Suggests polyfills and workarounds to ensure cross-browser support.

8. Enhancing Test Automation Strategies

Problem: Automated tests fail due to dynamic elements or inconsistent behavior.

How AI Helps:

- Identifies flaky tests caused by timing issues and improper waits.

- Suggests better locators for web elements to improve test reliability.

- Provides workarounds for handling dynamic content (e.g., pop-ups, lazy-loaded elements).

- Helps in writing efficient automation scripts by improving test structure.
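A common fix for the timing-related flakiness mentioned above is to replace fixed sleeps with a polling wait. The sketch below is a generic, framework-independent helper. `wait_until` is a hypothetical name, and most automation frameworks ship their own equivalent that you should prefer when available.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Example: a condition that only becomes true on the third check.
calls = []

def element_ready():
    calls.append(1)
    return len(calls) >= 3

print(wait_until(element_ready, timeout=1.0, interval=0.01))
```

Polling against a deadline keeps tests fast when the element appears early and still tolerant when it appears late.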

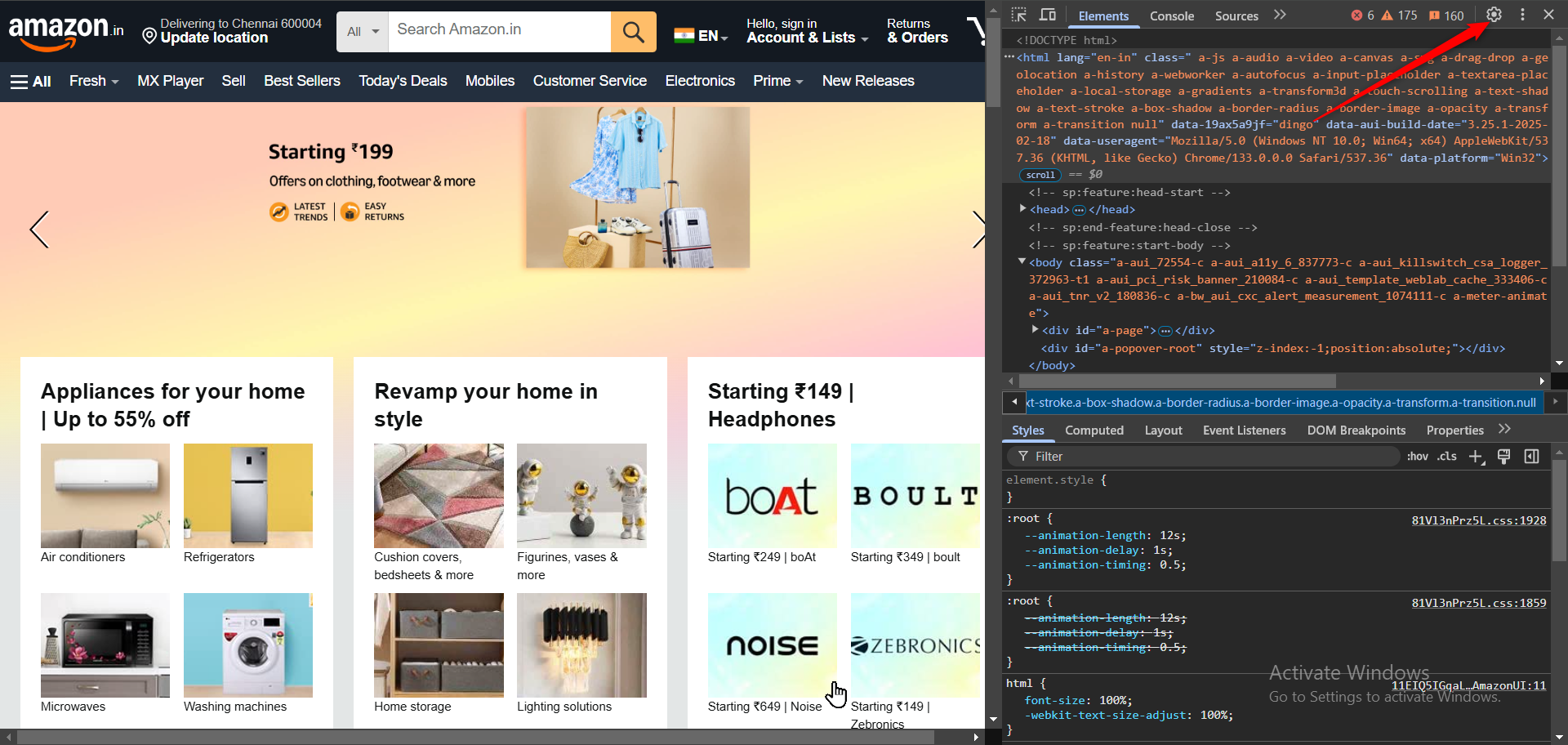

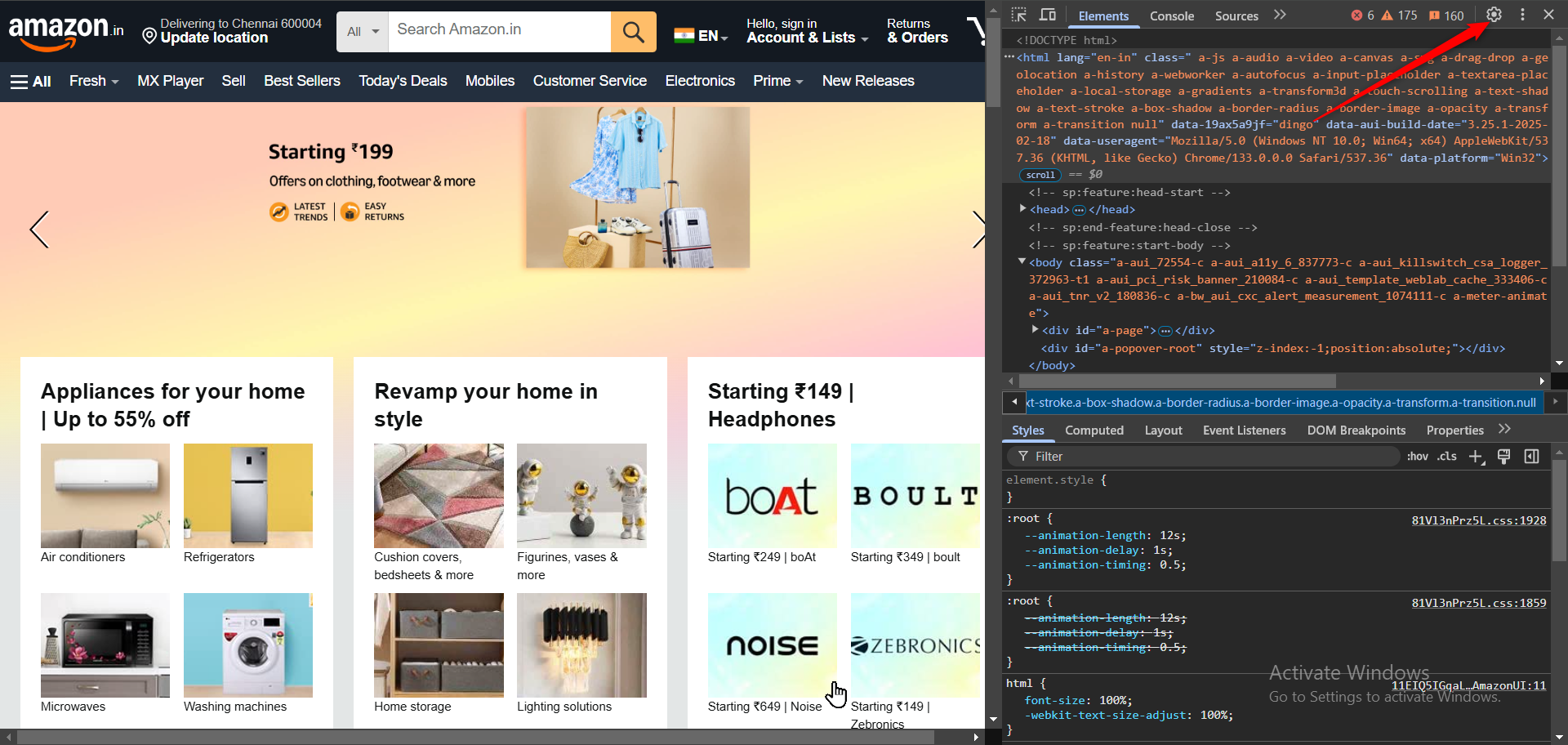

Getting Started with Chrome DevTools AI Ask Assistant

Before diving into specific tabs, let’s first enable the AI Ask Assistant in Chrome DevTools:

Step 1: Open Chrome DevTools

- Open Google Chrome.

- Navigate to the web application under test.

- Right-click anywhere on the page and select Inspect, or press F12 / Ctrl + Shift + I (Windows/Linux) or Cmd + Option + I (Mac).

- In the DevTools panel, click on the Experiments settings.

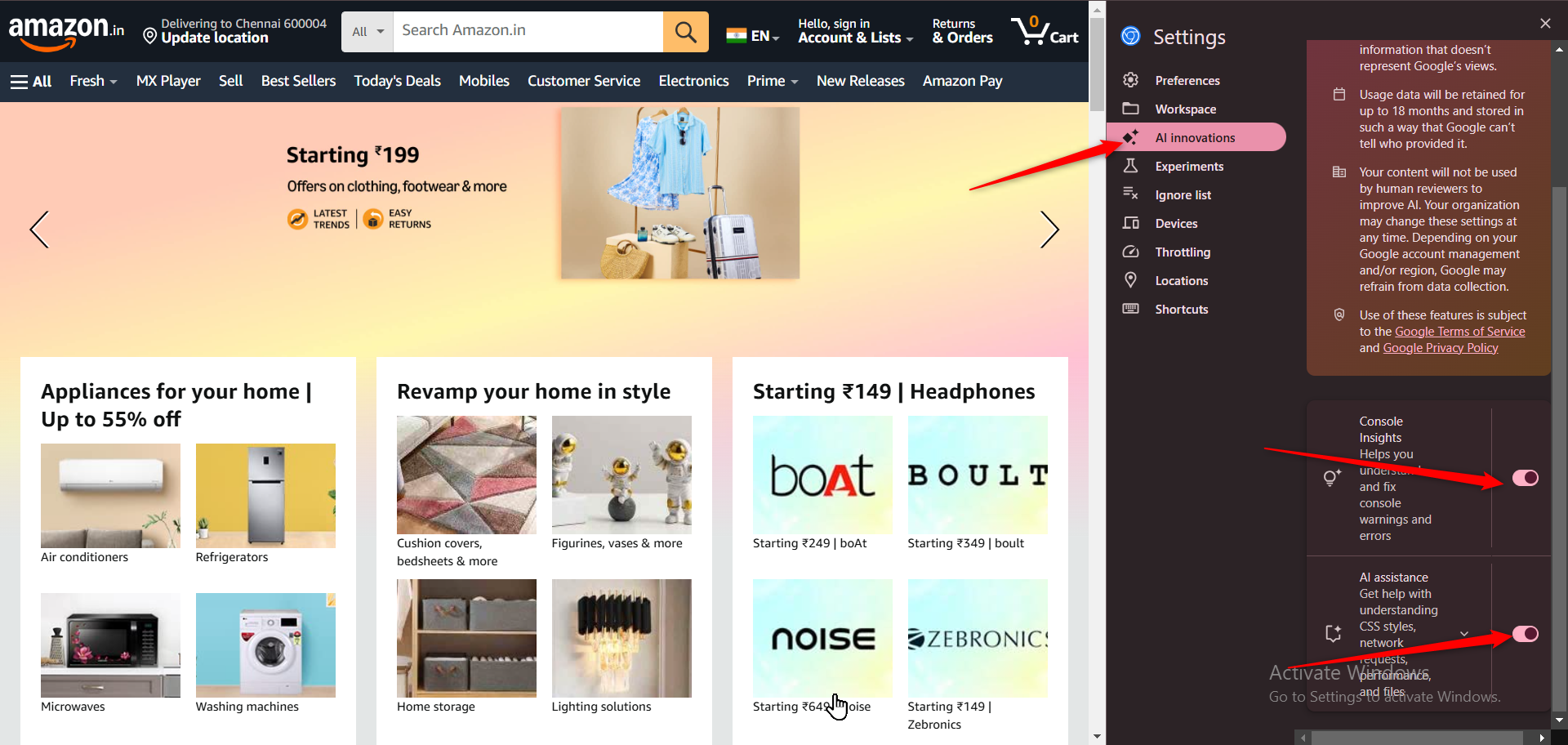

Step 2: Enable AI Ask Assistant

- Enable AI Ask Assistant if it’s available in your Chrome version.

- Restart DevTools for the changes to take effect.

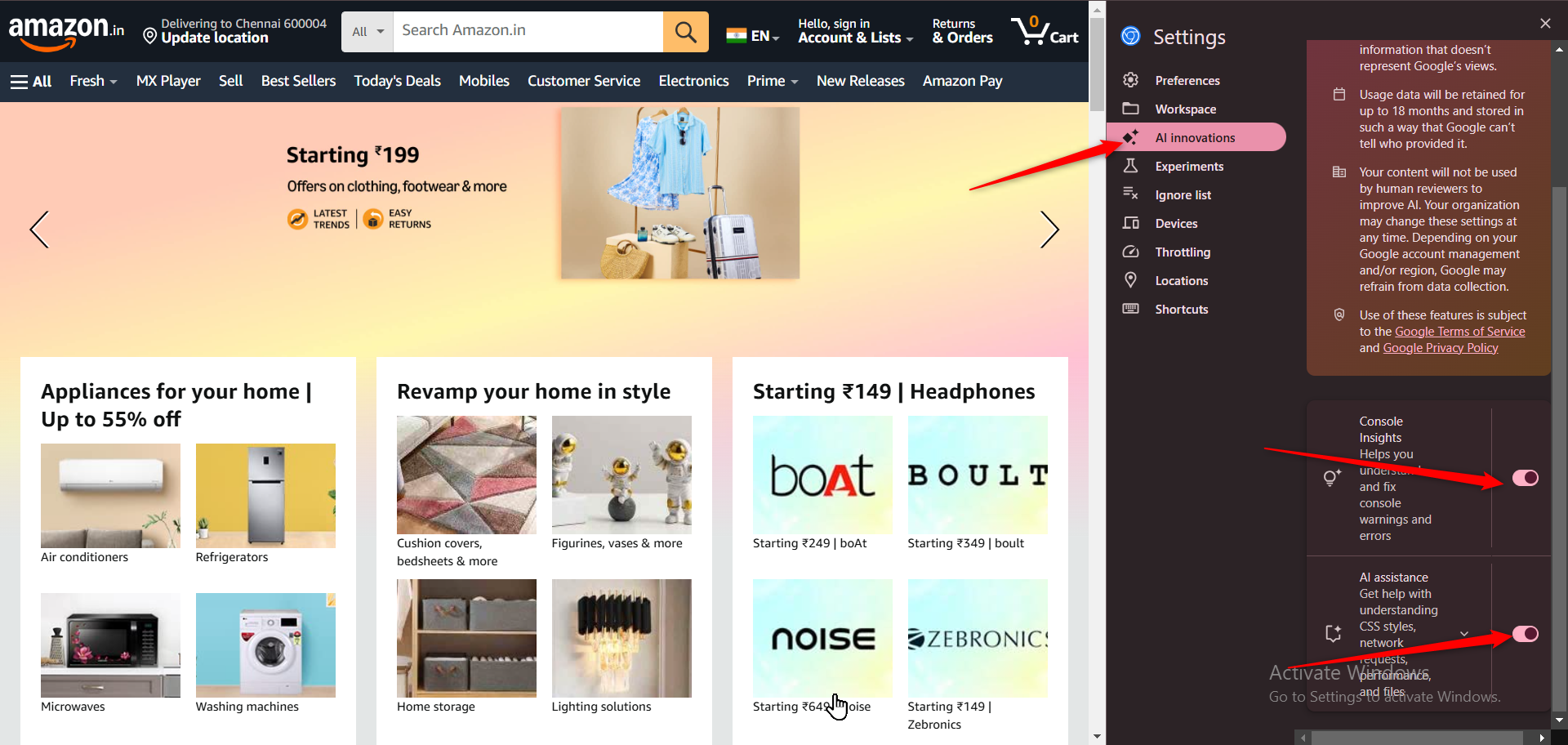

Using AI Ask Assistant in the Network Tab for Testers

The Network tab is crucial for testers to validate API requests, analyze performance, and diagnose failed network calls. The AI Ask Assistant enhances this by providing instant insights and suggestions.

Step 1: Open the Network Tab

- Open DevTools (F12 / Ctrl + Shift + I).

- Navigate to the Network tab.

- Reload the page (Ctrl + R / Cmd + R) to capture network activity.

Step 2: Ask AI to Analyze a Network Request

- Identify a specific request in the network log (e.g., an API call, AJAX request, or third-party script load).

- Right-click on the request and select Ask AI Assistant.

- Ask questions like:

- “Why is this request failing?”

- “What is causing the delay in response time?”

- “Are there any CORS-related issues in this request?”

- “How can I debug a 403 Forbidden error?”

Step 3: Get AI-Powered Insights for Testing

- AI will analyze the request and provide explanations.

- It may suggest fixes for failed requests (e.g., CORS issues, incorrect API endpoints, authentication errors).

- You can refine your query for better insights.

Step 4: Debug Network Issues from a Tester’s Perspective

Some example problems AI can help with:

- API Testing Issues: AI explains 404, 500, or 403 errors.

- Performance Bottlenecks: AI suggests ways to optimize API response time and detect slow endpoints.

- Security Testing: AI highlights CORS issues, mixed content, and security vulnerabilities.

- Data Validation: AI helps verify response payloads against expected values.
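For the data validation item, a scripted comparison can complement what the assistant reports. The sketch below walks an expected payload and lists every field that is missing or different in the actual response. Extra fields in the response are deliberately ignored, which is a design choice you may want to change. All names are illustrative.

```python
def diff_payload(expected, actual, path=""):
    """Return human-readable mismatches between an expected and an actual payload."""
    mismatches = []
    for key, want in expected.items():
        here = f"{path}.{key}" if path else key
        if key not in actual:
            mismatches.append(f"{here}: missing")
        elif isinstance(want, dict) and isinstance(actual[key], dict):
            # Recurse into nested objects so the report shows the full field path.
            mismatches.extend(diff_payload(want, actual[key], here))
        elif actual[key] != want:
            mismatches.append(f"{here}: expected {want!r}, got {actual[key]!r}")
    return mismatches


expected = {"id": 1, "user": {"name": "Ada", "role": "admin"}}
actual = {"id": 1, "user": {"name": "Ada", "role": "guest"}, "extra": True}
print(diff_payload(expected, actual))
```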

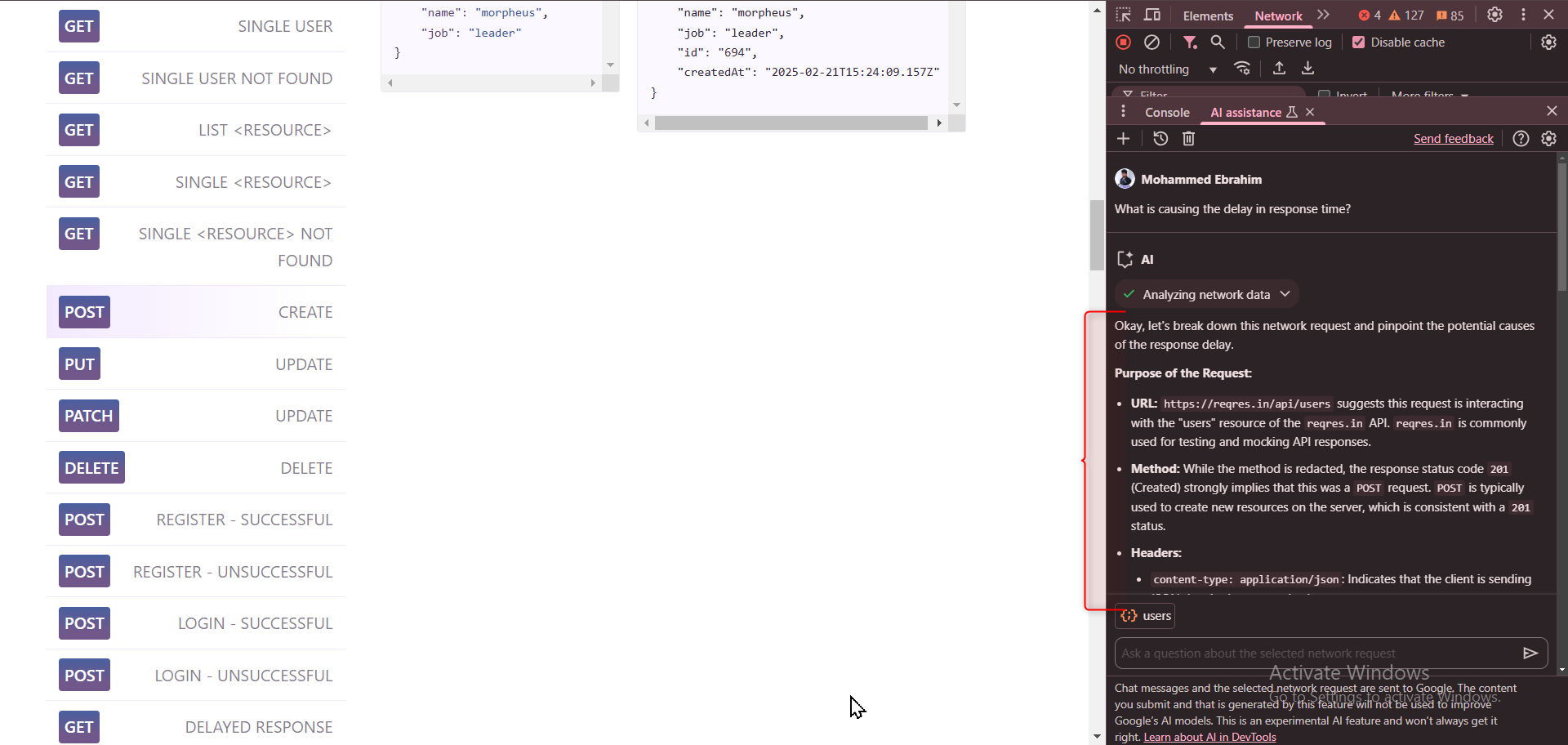

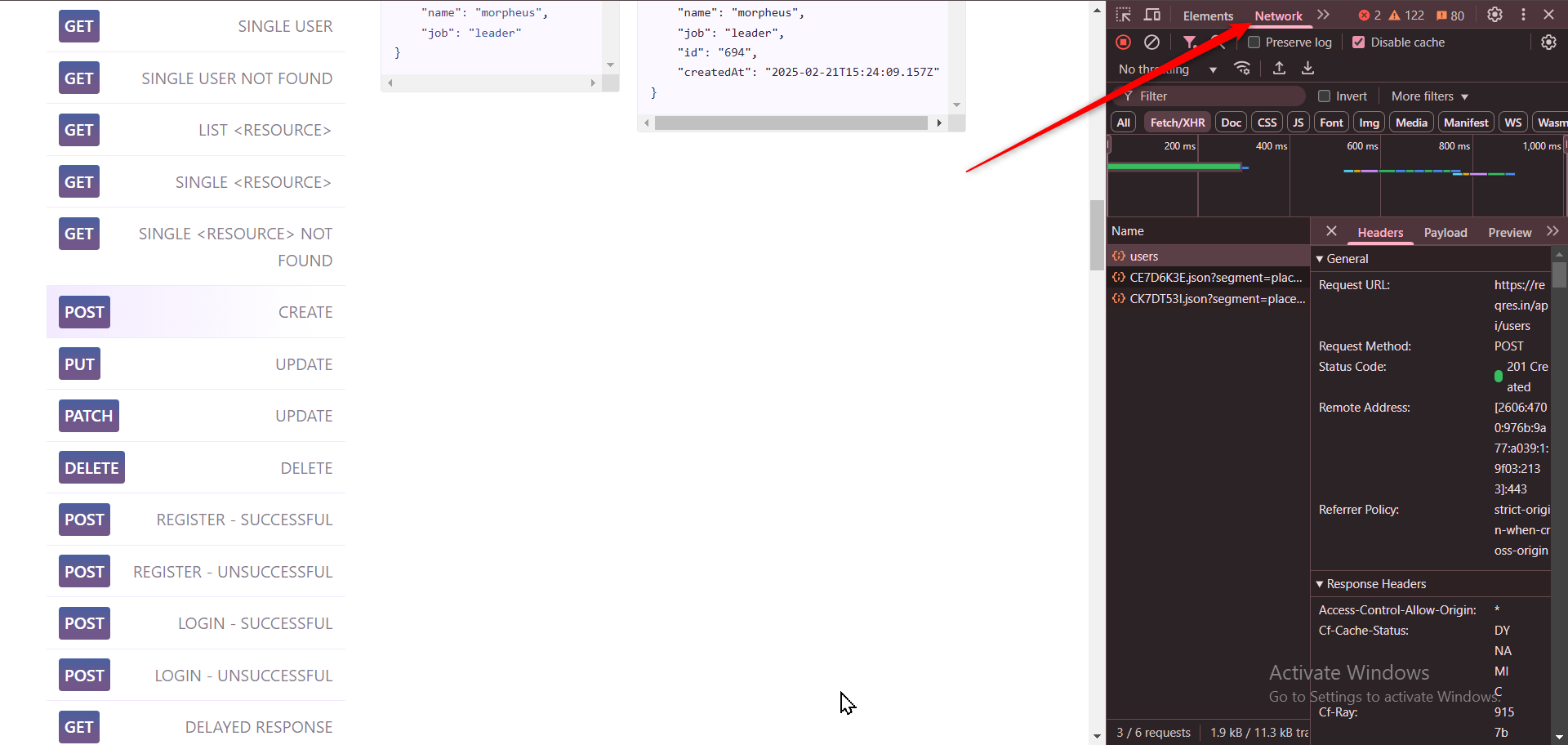

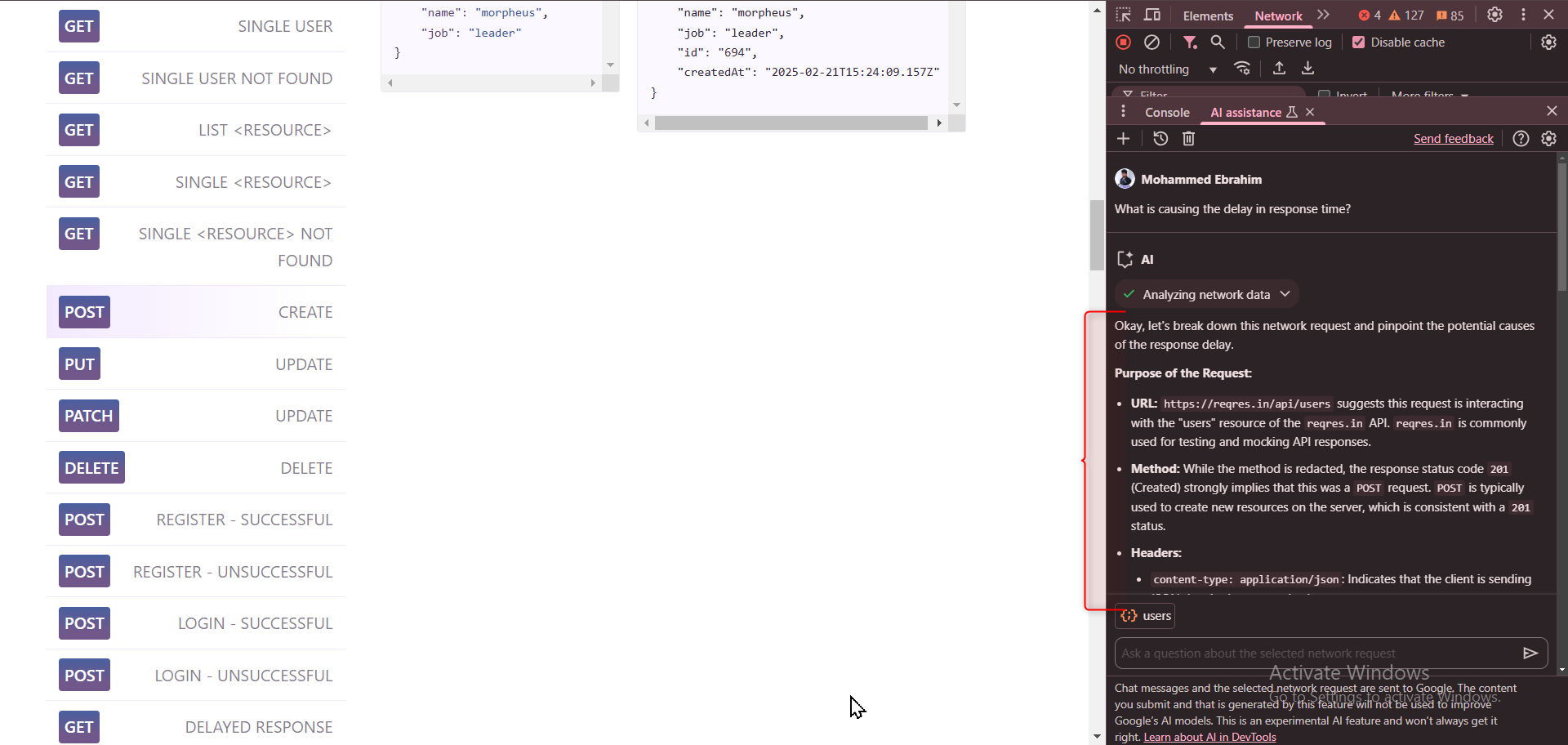

Here I asked: “What is causing the delay in response time?”

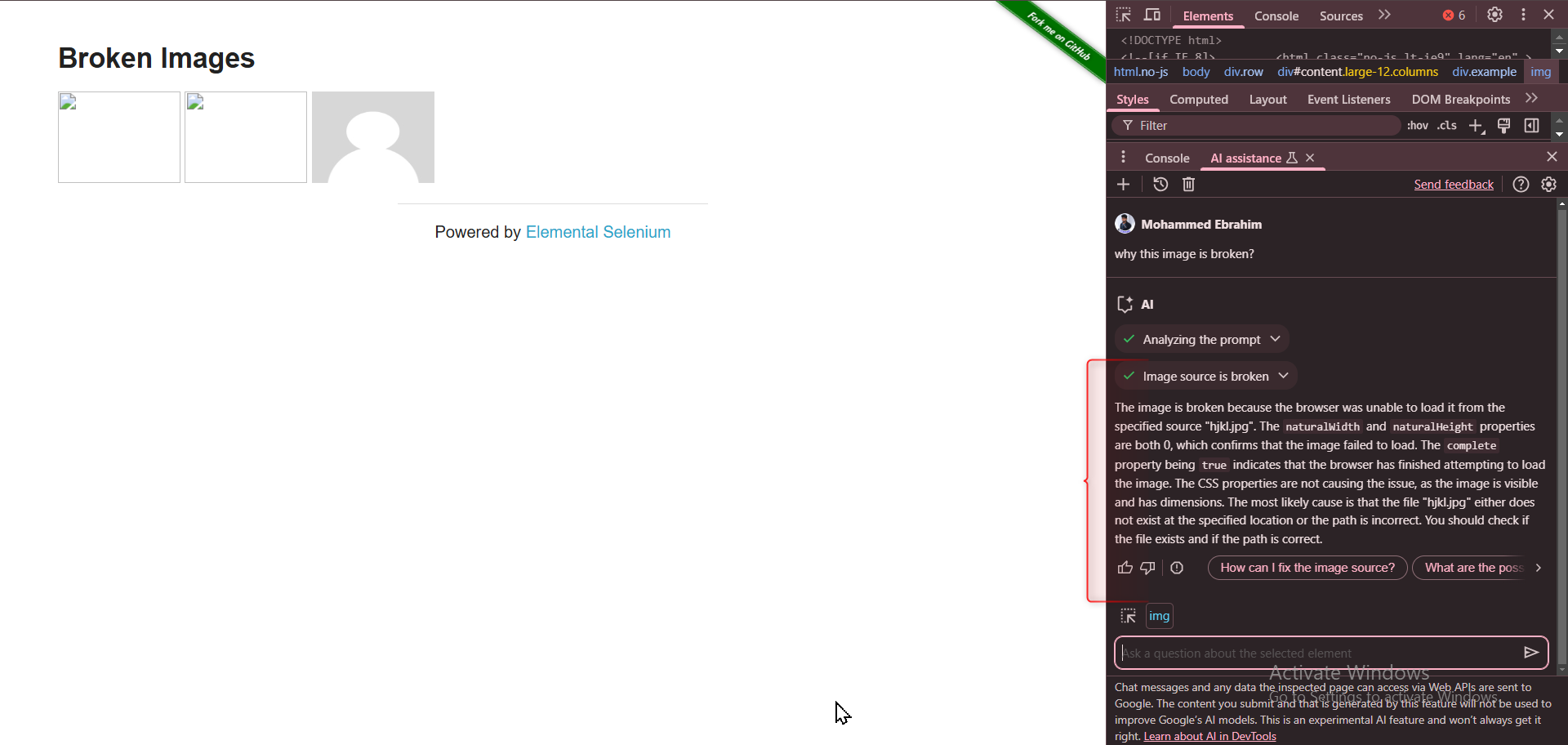

Using AI Ask Assistant in the Elements Tab for UI Testing

The Elements tab is used to inspect and manipulate HTML and CSS. AI Ask Assistant helps testers debug UI issues efficiently.

Step 1: Open the Elements Tab

- Open DevTools (F12 / Ctrl + Shift + I).

- Navigate to the Elements tab.

Step 2: Use AI for UI Debugging

- Select an element in the HTML tree.

- Right-click and choose Ask AI Assistant.

- Ask questions like:

- “Why is this button not clickable?”

- “What styles are affecting this dropdown?”

- “Why is this element overlapping?”

- “How can I fix responsiveness issues?”

Practical Use Cases for Testers

1. Debugging a Failed API Call in a Test Case

- Open the Network tab → Select the request → Ask AI why it failed.

- AI explains that the 403 error is caused by missing or insufficient authentication credentials.

- Follow AI’s solution to add the correct headers in API tests.

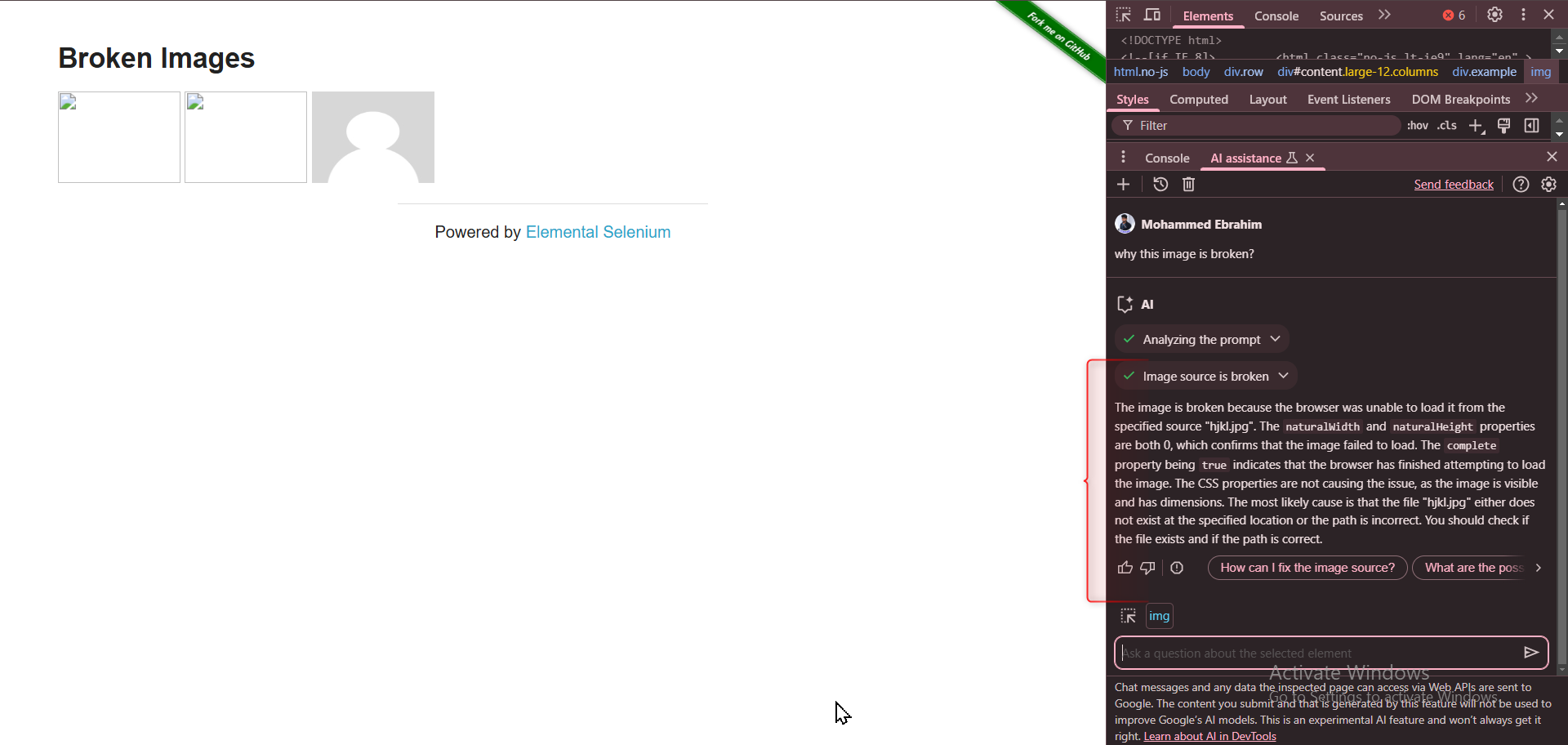
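In an API test, the header fix from this first use case might look like the sketch below. It only builds the request and does not send it. The URL, the token value, and the use of a Bearer scheme are all assumptions made for illustration; use whatever authentication your API actually expects.

```python
from urllib.request import Request


def build_authenticated_request(url, token):
    """Build a GET request that carries the Authorization header the API expects."""
    request = Request(url, method="GET")
    # Assumption: the API uses Bearer tokens. Adjust the scheme if yours differs.
    request.add_header("Authorization", f"Bearer {token}")
    request.add_header("Accept", "application/json")
    return request


req = build_authenticated_request("https://api.example.com/orders", "test-token")
print(req.get_method(), req.full_url, req.get_header("Authorization"))
```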

2. Identifying Broken UI Elements

- Open the Elements tab → Select the element → Ask AI why it’s not visible.

- AI identifies display: none in CSS.

- Modify the style based on AI’s suggestion and verify in different screen sizes.

3. Validating Page Load Performance in Web Testing

- Open the Network tab → Ask AI how to optimize resources.

- AI suggests reducing unnecessary JavaScript and compressing images.

- Implement suggested changes to improve performance and page load times.

4. Identifying Accessibility Issues

- Use the Elements tab → Inspect accessibility attributes.

- Ask AI to suggest ARIA roles and label improvements.

- Verify compliance with WCAG guidelines.
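One WCAG check that is easy to verify by hand is color contrast. The sketch below implements the contrast-ratio formula from WCAG 2.x for two sRGB colors. WCAG level AA asks for at least 4.5:1 for normal-size text. The function names are our own.

```python
def _channel(value):
    """Linearize one 0-255 sRGB channel as defined by WCAG 2.x."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    r, g, b = (_channel(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Contrast ratio between two (R, G, B) colors, from 1.0 up to 21.0."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


grey_on_white = contrast_ratio((119, 119, 119), (255, 255, 255))
print(round(grey_on_white, 2), "passes AA" if grey_on_white >= 4.5 else "fails AA")
```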

Conclusion

The AI Ask Assistant in Chrome DevTools makes debugging faster and more efficient by providing real-time AI-driven insights. It helps testers and developers quickly identify and fix network issues, UI bugs, performance bottlenecks, security risks, and accessibility concerns, ensuring high-quality applications. While AI tools improve efficiency, expert testing is essential for delivering reliable software. Codoid, a leader in software testing, specializes in automation, performance, accessibility, security, and functional testing. With industry expertise and cutting-edge tools, Codoid ensures high-quality, seamless, and secure applications across all domains.

Frequently Asked Questions

- How does AI Assistant help in debugging API and network issues?

AI Assistant analyzes API requests, detects HTTP errors (404, 500, etc.), identifies CORS issues, and suggests ways to optimize response time and security.

- Can AI Assistant help fix UI layout issues?

Yes, it helps by identifying hidden elements, CSS conflicts, and responsiveness problems, ensuring a visually consistent and accessible UI.

- Can AI Assistant be used for accessibility testing?

Yes, it helps testers ensure WCAG compliance by identifying missing alt text, color contrast issues, and keyboard navigation problems.

- What security vulnerabilities can AI Assistant detect?

It highlights insecure HTTP requests, missing security headers, and exposed API keys, helping testers improve security compliance.

- Can AI Assistant help with cross-browser compatibility?

Yes, it detects CSS properties and JavaScript features that may not work in certain browsers and suggests polyfills or alternatives.

by Mollie Brown | Feb 17, 2025 | AI Testing, Blog, Latest Post |

Artificial Intelligence (AI) is transforming software testing by making it faster, more accurate, and capable of handling vast amounts of data. AI-driven testing tools can detect patterns and defects that human testers might overlook, improving software quality and efficiency. However, this power brings ethical challenges that cannot be ignored: bias in AI models, data privacy risks, lack of transparency, job displacement, and unclear accountability. As AI continues to evolve, these considerations will become even more critical. It is the responsibility of developers, testers, and regulatory bodies to ensure that AI-driven testing remains fair, secure, and transparent.

Real-World Examples of Ethical AI Challenges

Training Data Gaps in Facial Recognition Bias

Dr. Joy Buolamwini’s research at the MIT Media Lab uncovered significant biases in commercial facial recognition systems. Her study revealed that these systems had higher error rates in identifying darker-skinned and female faces compared to lighter-skinned and male faces. This finding underscores the ethical concern of bias in AI algorithms and has led to calls for more inclusive training data and evaluation processes.

Source: en.wikipedia.org

Misuse of AI in Misinformation

The rise of AI-generated content, such as deepfakes and automated news articles, has led to ethical challenges related to misinformation and authenticity. For instance, AI tools have been used to create realistic but false videos and images, which can mislead the public and influence opinions. This raises concerns about the ethical use of AI in media and the importance of developing tools to detect and prevent the spread of misinformation.

Source: theverge.com

AI and the Need for Proper Verification

In Australia, there have been instances where lawyers used AI tools like ChatGPT to generate case summaries and submissions without verifying their accuracy. This led to the citation of non-existent cases in court, causing adjournments and raising concerns about the ethical use of AI in legal practice.

Source: theguardian.com

Overstating AI Capabilities (“AI Washing”)

Some companies have been found overstating the capabilities of their AI products to attract investors, a practice known as “AI washing.” This deceptive behavior has led to regulatory scrutiny, with the U.S. Securities and Exchange Commission penalizing firms in 2024 for misleading AI claims. This highlights the ethical issue of transparency in AI marketing.

Source: reuters.com

Key Ethical Concerns in AI-Powered Software Testing

As AI takes on a larger role in software testing, the ethical issues that come with it need attention. Left unaddressed, they can undermine the quality, fairness, safety, and transparency of testing. This section covers the main ethical concerns in AI testing: bias, privacy risks, lack of transparency, accountability, and job displacement. Understanding and addressing these problems helps ensure that AI tools are used in a way that benefits both the software industry and its users.

1. Bias in AI Decision-Making

Bias in AI occurs when testing algorithms learn from biased datasets or make decisions that unfairly favor or disadvantage certain groups. This can result in unfair test outcomes, inaccurate bug detection, or software that doesn’t work well for diverse users.

How to Identify It?

- Analyze training data for imbalances (e.g., lack of diversity in past bug reports or test cases).

- Compare AI-generated test results with manually verified cases.

- Conduct bias audits with diverse teams to check if AI outputs show any skewed patterns.
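The first step, checking training data for imbalance, can start with something as simple as counting how often each category appears. The sketch below is a minimal illustration. The 10% threshold and the idea of grouping past bug reports by browser are assumptions, not an established standard.

```python
from collections import Counter


def label_shares(labels):
    """Return the fraction of the dataset that each label accounts for."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def underrepresented(labels, min_share=0.10):
    """List labels whose share of the dataset falls below `min_share`."""
    return sorted(label for label, share in label_shares(labels).items() if share < min_share)


# Hypothetical example: past bug reports grouped by the browser they came from.
reports = ["chrome"] * 18 + ["firefox"] + ["safari"]
print(underrepresented(reports))
```

A model trained on data like this has seen very few Firefox or Safari examples, which is worth knowing before trusting its predictions for those browsers.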

How to Avoid It?

- Use diverse and representative datasets during training.

- Perform regular bias testing using fairness-checking tools like IBM’s AI Fairness 360.

- Involve diverse teams in testing and validation to uncover hidden biases.

2. Privacy and Data Security Risks

AI testing tools often require large datasets, some of which may include sensitive user data. If not handled properly, this can lead to data breaches, compliance violations, and misuse of personal information.

How to Identify It?

- Check if your AI tools collect personal, financial, or health-related data.

- Audit logs to ensure only necessary data is being accessed.

- Conduct penetration testing to detect vulnerabilities in AI-driven test frameworks.

How to Avoid It?

- Implement data anonymization to remove personally identifiable information (PII).

- Use data encryption to protect sensitive information in storage and transit.

- Ensure AI-driven test cases comply with GDPR, CCPA, and other data privacy regulations.
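As a rough illustration of the first point, the sketch below replaces selected fields in a test record with a salted hash before the record is handed to a testing tool. Strictly speaking this is pseudonymization rather than full anonymization, and on its own it may not satisfy a given regulation. The field names and the salt handling are hypothetical.

```python
import hashlib


def pseudonymize(record, pii_fields, salt):
    """Return a copy of `record` with the PII fields replaced by salted hashes."""
    cleaned = {}
    for key, value in record.items():
        if key in pii_fields:
            digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
            cleaned[key] = digest[:16]  # shortened for readability in test logs
        else:
            cleaned[key] = value
    return cleaned


user = {"email": "ada@example.com", "plan": "pro", "phone": "555-0100"}
safe_user = pseudonymize(user, pii_fields={"email", "phone"}, salt="per-project-secret")
print(safe_user)
```

Because the hash is deterministic for a given salt, the same user still maps to the same token across test runs, which keeps joins and repeat-visit scenarios testable.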

3. Lack of Transparency

Many AI models, especially deep learning-based ones, operate as “black boxes,” meaning it’s difficult to understand why they make certain testing decisions. This can lead to mistrust and unreliable test outcomes.

How to Identify It?

- Ask: Can testers and developers clearly explain how the AI generates test results?

- Test AI-driven bug reports against manual results to check for consistency.

- Use explainability tools like LIME (Local Interpretable Model-agnostic Explanations) to interpret AI decisions.

How to Avoid It?

- Use Explainable AI (XAI) techniques that provide human-readable insights into AI decisions.

- Maintain a human-in-the-loop approach where testers validate AI-generated reports.

- Prefer AI tools that provide clear decision logs and justifications.

4. Accountability & Liability in AI-Driven Testing

When AI-driven tests fail or miss critical bugs, who is responsible? If an AI tool wrongly approves a flawed software release, leading to security vulnerabilities or compliance violations, the accountability must be clear.

How to Identify It?

- Check whether the AI tool documents decision-making steps.

- Determine who approves AI-based test results—is it an automated pipeline or a human?

- Review previous AI-driven testing failures and analyze how accountability was handled.

How to Avoid It?

- Define clear responsibility in testing workflows: AI suggests, but humans verify.

- Require AI to provide detailed failure logs that explain errors.

- Establish legal and ethical guidelines for AI-driven decision-making.

5. Job Displacement & Workforce Disruption

AI can automate many testing tasks, potentially reducing the demand for manual testers. This raises concerns about job losses, career uncertainty, and skill gaps.

How to Identify It?

- Monitor which testing roles and tasks are increasingly being replaced by AI.

- Track workforce changes—are manual testers being retrained or replaced?

- Evaluate if AI is being over-relied upon, reducing critical human oversight.

How to Avoid It?

- Focus on upskilling testers with AI-enhanced testing knowledge (e.g., AI test automation, prompt engineering).

- Implement AI as an assistant, not a replacement—keep human testers for complex, creative, and ethical testing tasks.

- Introduce retraining programs to help manual testers transition into AI-augmented testing roles.

Best Practices for Ethical AI in Software Testing

- Ensure fairness and reduce bias by using diverse datasets, regularly auditing AI decisions, and involving human reviewers to check for biases or unfair patterns.

- Protect data privacy and security by anonymizing user data before use, encrypting test logs, and adhering to privacy regulations like GDPR and CCPA.

- Improve transparency and explainability by implementing Explainable AI (XAI), keeping detailed logs of test cases, and ensuring human oversight in reviewing AI-generated test reports.

- Balance AI and human involvement by leveraging AI for automation, bug detection, and test execution, while retaining human testers for usability, exploratory testing, and subjective analysis.

- Establish accountability and governance by defining clear responsibility for AI-driven test results, requiring human approval before releasing AI-generated results, and creating guidelines for addressing AI errors or failures.

- Provide ongoing education and training for testers and developers on ethical AI use, ensuring they understand potential risks and responsibilities associated with AI-driven testing.

- Encourage collaboration with legal and compliance teams to ensure AI tools used in testing align with industry standards and legal requirements.

- Monitor and adapt to AI evolution by continuously updating AI models and testing practices to align with new ethical standards and technological advancements.

Conclusion

AI in software testing offers tremendous benefits but also presents significant ethical challenges. As AI-powered testing tools become more sophisticated, ensuring fairness, transparency, and accountability must be a priority. By implementing best practices, maintaining human oversight, and fostering open discussions on AI ethics, QA teams can ensure that AI serves humanity responsibly. A future where AI enhances, rather than replaces, human judgment will lead to fairer, more efficient, and ethical software testing processes. At Codoid, we provide the best AI services, helping companies integrate ethical AI solutions in their software testing processes while maintaining the highest standards of fairness, security, and transparency.

Frequently Asked Questions

- Why is AI used in software testing?

AI is used in software testing to improve speed, accuracy, and efficiency by detecting patterns, automating repetitive tasks, and identifying defects that human testers might miss.

- What are the risks of AI in handling user data during testing?

AI tools may process sensitive user data, raising risks of breaches, compliance violations, and misuse of personal information.

- Will AI replace human testers?

AI is more likely to augment human testers rather than replace them. While AI automates repetitive tasks, human expertise is still needed for exploratory and usability testing.

- What regulations apply to AI-powered software testing?

Regulations like GDPR, CCPA, and AI governance policies require organizations to protect data privacy, ensure fairness, and maintain accountability in AI applications.

- What are the main ethical concerns of AI in software testing?

Key ethical concerns include bias in AI models, data privacy risks, lack of transparency, job displacement, and accountability in AI-driven decisions.

by Mollie Brown | Dec 23, 2024 | AI Testing, Blog, Latest Post |

In the fast-paced world of software development, maintaining efficiency while ensuring quality is paramount. AI is transforming API testing by automating repetitive tasks, providing actionable insights, and enabling faster delivery of reliable software. This blog explores how AI-driven API testing strategies enhance test automation, leading to robust and dependable applications.

Key Highlights

- Artificial intelligence is changing the way we do API testing. It speeds up the process and makes it more accurate.

- AI tools can make test cases, handle data, and do analysis all on their own.

- This technology can find problems early in the software development process.

- AI testing reduces release times and boosts software quality.

- Using AI in API testing gives you an edge in today’s fast-changing tech world.

The Evolution of API Testing: Embracing AI Technologies

API testing has changed considerably. It was once done by hand; today, automated tools make the process easier. But software has become more complex and updates must ship faster, so older methods cannot keep up. AI is now opening a new chapter in API testing.

This change is driven by the need to work more efficiently and accurately, and to manage complex systems in a smarter way. With AI, teams can address these challenges, work faster, and make their testing more reliable.

Understanding the Basics of API Testing

API testing focuses on validating the functionality, performance, and reliability of APIs without interacting with the user interface. By leveraging AI in API testing, testers can send requests to API endpoints, analyze responses, and evaluate how APIs handle various scenarios, including edge cases, invalid inputs, and performance under load, with greater efficiency and accuracy.

Effective API testing ensures early detection of issues, enabling developers to deliver high-quality software that meets user expectations and business objectives.

The Shift Towards AI-Driven Testing Methods

AI-driven testing uses machine learning (ML) to enhance API testing. It looks at earlier test data to find important test cases and patterns. This helps in making smarter choices, increasing the efficiency of test automation.

AI-powered API testing tools help automate boring tasks. They can create API test cases, check test results, and notice strange behavior in APIs. These tools look at big sets of data to find edge cases and guess possible problems. This helps to improve test coverage.

With this change, testers can spend more time on tough quality tasks. They can focus on exploratory testing and usability testing. By incorporating AI in API testing, they can streamline repetitive tasks, allowing for a better and more complete testing process.

Key Benefits of Integrating AI in API Testing

Enhanced Accuracy and Efficiency

AI algorithms analyze existing test data to create extensive test cases, including edge cases human testers might miss. These tools also dynamically update test cases when APIs change, ensuring continuous relevance and reliability.

Predictive Analysis

Using machine learning, AI identifies patterns in test results and predicts potential failures, enabling teams to prioritize high-risk areas. Predictive insights streamline testing efforts and minimize risks.

Faster Test Creation

AI tools can automatically generate test cases from API specifications, significantly reducing manual effort. They adapt to API design changes seamlessly.

Improved Test Data Generation

AI simplifies the generation of comprehensive datasets for testing, ensuring better coverage and more robust applications.

How AI is Revolutionizing API Testing Strategies

AI offers several advantages for API testing, like:

- Faster Test Creation: AI can read API specifications and make test cases by itself.

- Adaptability: AI tools can change with API designs without needing any manual help.

- Error Prediction: AI can find patterns to predict possible issues, which helps developers solve problems sooner.

- Efficient Test Data Generation: AI makes it simple to create large amounts of data for complete testing.

Key Concepts in AI-Driven API Testing

Before we begin with AI-powered testing, let’s review the basic ideas of API testing:

- API Testing Types:

- Functional Testing: This checks if the API functions as it should.

- Performance Testing: This measures how quickly the API works during high demand.

- Security Testing: This ensures that the data is secure and protected.

- Contract Testing: This confirms that the API meets the specifications.

- Popular Tools: Some common tools for API testing include Postman, REST-Assured, Swagger, and new AI tools like Testim and Mabl.

How to Use AI in API Testing

1. Set Up Your API Testing Environment

- Start with simple API testing tools such as Postman or REST-Assured.

- Include AI libraries like scikit-learn and TensorFlow, or use existing AI platforms.

2. AI for Test Case Generation

AI can read your API’s definition files, such as OpenAPI or Swagger. It can suggest or even create test cases automatically. This can greatly reduce the manual effort needed.

Example:

A Swagger file describes the endpoints and the inputs and responses each one expects. AI algorithms can use this information to:

- Create test cases.

- Find edge cases, such as large payloads or unexpected data types.
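Even without any machine learning, the structure of a specification file already suggests a baseline set of tests. The sketch below is a deliberately simple, rule-based stand-in for what an AI tool would do. It reads an OpenAPI-style dictionary and emits one test case per documented operation and response code. The function name and the output shape are assumptions.

```python
HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options"}


def generate_test_cases(spec):
    """Emit one test case per (path, method, documented response status)."""
    cases = []
    for path, operations in spec.get("paths", {}).items():
        for method, operation in operations.items():
            if method.lower() not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            for status in operation.get("responses", {}):
                cases.append({
                    "method": method.upper(),
                    "path": path,
                    "expected_status": str(status),
                })
    return cases


# Minimal hand-written spec fragment used only for illustration.
spec = {"paths": {"/posts/{id}": {
    "parameters": [],
    "get": {"responses": {"200": {}, "404": {}}},
}}}
for case in generate_test_cases(spec):
    print(case)
```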

3. Train AI Models for Testing

To improve testing, train machine learning (ML) models. These models can identify patterns and predict errors.

Steps:

- Collect Data: Gather previous API responses, including both successful and failed tests.

- Preprocess Data: Change inputs, such as JSON or XML files, to a consistent format.

- Train Models: Use supervised learning algorithms to organize API responses into groups, like pass or fail.

Example: Train a model using features like:

- Response time.

- HTTP status codes.

- Payload size.
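The preprocessing step can be sketched as a function that turns one recorded API response into a numeric feature vector covering the three features listed above. The record keys (`response_time_s`, `status_code`, `body`) are hypothetical names chosen for this example, and treating any 2xx code as success is our own simplification.

```python
def to_features(record):
    """Convert one recorded response into [response time, success flag, payload bytes]."""
    return [
        float(record["response_time_s"]),
        1 if 200 <= record["status_code"] < 300 else 0,  # 1 = any 2xx status
        len(record.get("body", "").encode("utf-8")),  # payload size in bytes
    ]


history = [
    {"response_time_s": 0.25, "status_code": 200, "body": '{"id": 1}'},
    {"response_time_s": 1.4, "status_code": 500, "body": ""},
]
print([to_features(record) for record in history])
```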

4. Dynamic Validation with AI

AI can handle dynamic fields, such as timestamps, session IDs, and random values, that change in every API response.

Instead of comparing against fixed values, AI algorithms look at response patterns. This lowers the chance of false failures, where a test fails only because a dynamic value changed.
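The underlying idea of pattern-based validation can be shown without any AI at all. In this sketch, fields expected to change on every call are checked against a regular expression, while stable fields are compared exactly. The two patterns and all field names are illustrative assumptions.

```python
import re

# Patterns for values that legitimately differ on every response.
TIMESTAMP = re.compile(r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d+)?(Z|[+-]\d{2}:\d{2})")
UUID = re.compile(r"[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")


def validate(response, expected):
    """Check each expected field: regex rules match by shape, other rules by equality."""
    errors = []
    for field, rule in expected.items():
        value = response.get(field)
        if isinstance(rule, re.Pattern):
            if not isinstance(value, str) or not rule.fullmatch(value):
                errors.append(f"{field}: unexpected format {value!r}")
        elif value != rule:
            errors.append(f"{field}: expected {rule!r}, got {value!r}")
    return errors


expected = {"session_id": UUID, "created_at": TIMESTAMP, "status": "active"}
response = {
    "session_id": "3f2b8c1e-9a4d-4e6f-8b2a-1c3d5e7f9a0b",
    "created_at": "2025-02-24T10:15:30Z",
    "status": "active",
}
print(validate(response, expected))
```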

5. Error Analysis with AI

After execution, AI tools look for recurring errors and help identify their root causes.

Use anomaly detection to find performance issues when API response times go up suddenly.
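A very small version of such anomaly detection is a z-score check against a baseline of earlier measurements. The sketch below is one simple technique among many, not what any particular tool uses. The baseline size and threshold are arbitrary illustrative values.

```python
from statistics import mean, stdev


def find_spikes(response_times, baseline_size=5, threshold=3.0):
    """Return indexes of measurements far above the baseline (z-score > threshold)."""
    baseline = response_times[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return []  # no variation in the baseline, so the z-score is undefined
    return [
        index
        for index, value in enumerate(response_times[baseline_size:], start=baseline_size)
        if (value - mu) / sigma > threshold
    ]


# Response times in seconds; the measurement at index 6 is a sudden spike.
times = [0.20, 0.22, 0.19, 0.21, 0.20, 0.21, 0.95, 0.20]
print(find_spikes(times))
```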

Code Example with Python

Below is a simple example of how AI can help predict the result of an API test:

1. Importing Libraries

    import requests
    from sklearn.ensemble import RandomForestClassifier
    import numpy as np

- requests: Used to make HTTP requests to the API.

- RandomForestClassifier: A machine learning model from sklearn to classify whether an API test passes or fails based on certain input features.

- numpy: Helps handle numerical data efficiently.

2. Defining the API Endpoint

    url = "https://jsonplaceholder.typicode.com/posts/1"

- This is the public API we are testing. It returns a mock JSON response, which is great for practice.

3. Making the API Request

    try:
        # A timeout keeps the test from hanging if the server never responds
        response = requests.get(url, timeout=10)
        response.raise_for_status()  # Raises an HTTPError for 4xx/5xx responses
        data = response.json()  # Parses the response body as JSON
    except requests.exceptions.RequestException as e:
        print(f"Error during API call: {e}")
        response_time = 0  # Default value for failed requests
        status_code = 0
        data = {}
    else:
        response_time = response.elapsed.total_seconds()  # Time taken for the request
        status_code = response.status_code  # HTTP status code (e.g., 200 for success)

- What Happens Here?

- The code makes a GET request to the API.

- If the request fails (e.g., server down, bad URL), it catches the error, prints it, and sets default values (response time = 0, status code = 0).

- If the request is successful, it calculates the time taken (response_time) and extracts the HTTP status code (status_code).

4. Defining the Training Data

    X = np.array([
        [0.1, 1],  # Example: A fast response (0.1 seconds) with success (1 for status code 200)
        [0.5, 1],  # Slower response with success
        [1.0, 0],  # Very slow response with failure
        [0.2, 0],  # Fast response with failure
    ])
    y = np.array([1, 1, 0, 0])  # Labels: 1 = Pass, 0 = Fail

- What is This?

- This is the training data for the machine learning model.

- It teaches the model how to classify API tests as “Pass” or “Fail” based on:

- Response time (in seconds).

- HTTP status code, simplified as 1 (success) or 0 (failure).

5. Training the Model

    clf = RandomForestClassifier(random_state=42)
    clf.fit(X, y)

- What Happens Here?

- A RandomForestClassifier model is created and trained using the data (X) and labels (y).

- The model learns patterns to predict “Pass” or “Fail” based on input features.

6. Preparing Features for Prediction

    features = np.array([[response_time, 1 if status_code == 200 else 0]])

- What Happens Here?

- We take the response_time and the HTTP status code (1 if 200, otherwise 0) from the API response and package them as input features for prediction.

7. Predicting the Outcome

    prediction = clf.predict(features)
    if prediction[0] == 1:
        print("Test Passed: The API is performing well.")
    else:
        print("Test Failed: The API is not performing optimally.")

- What Happens Here?

- The trained model predicts whether the API test is a “Pass” or “Fail”.

- If the prediction is 1, it prints “Test Passed.”

- If the prediction is 0, it prints “Test Failed.”

Complete Code

import requests

from sklearn.ensemble import RandomForestClassifier

import numpy as np

# Public API Endpoint

url = "https://jsonplaceholder.typicode.com/posts/1"

try:

    # API Request

    response = requests.get(url)

    response.raise_for_status() # Raise an exception for HTTP errors

    data = response.json() # Parse JSON response

except requests.exceptions.RequestException as e:

    print(f"Error during API call: {e}")

    response_time = 0 # Set default value for failed response

    status_code = 0

    data = {}

else:

    # Calculate response time

    response_time = response.elapsed.total_seconds()

    status_code = response.status_code

# Training Data: [Response Time (s), Status Code (binary)], Labels: Pass(1)/Fail(0)

X = np.array([

[0.1, 1], # Fast response, success

[0.5, 1], # Slow response, success

[1.0, 0], # Slow response, error

[0.2, 0], # Fast response, error

])

y = np.array([1, 1, 0, 0])

# Train Model

clf = RandomForestClassifier(random_state=42)

clf.fit(X, y)

# Prepare Features for Prediction

# Encode status_code as binary: 1 for success (200), 0 otherwise

features = np.array([[response_time, 1 if status_code == 200 else 0]])

# Predict Outcome

prediction = clf.predict(features)

if prediction[0] == 1:

    print("Test Passed: The API is performing well.")

else:

    print("Test Failed: The API is not performing optimally.")

Summary of What the Code Does

- Send an API Request: The code fetches data from a mock API and measures the time taken and the status code of the response.

- Train a Machine Learning Model: It uses example data to train a model to predict whether an API test passes or fails.

- Make a Prediction: Based on the API response time and status code, the code predicts if the API is performing well or not.
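In the four training rows above, every successful response is labeled Pass and every failed one Fail, so a plain rule can serve as a baseline to compare the model against. The sketch below is only an illustration: `encode_features` mirrors the feature encoding from the walkthrough, while `baseline_check` and its `max_seconds` threshold are hypothetical names and values, not part of the original code.

```python
def encode_features(response_time, status_code):
    """Return [response_time, success_flag], the same encoding the walkthrough uses."""
    return [response_time, 1 if status_code == 200 else 0]


def baseline_check(response_time, status_code, max_seconds=1.0):
    """Hypothetical rule-based baseline: pass only on HTTP 200 within max_seconds."""
    _, success_flag = encode_features(response_time, status_code)
    return success_flag == 1 and response_time <= max_seconds


if __name__ == "__main__":
    print(baseline_check(0.3, 200))  # fast, successful response
    print(baseline_check(0.1, 500))  # fast, but failed response
```

If the trained model and a baseline like this disagree on real responses, that is usually a sign that the training data needs more (and more varied) examples.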

Case Studies: Success Stories of AI in API Testing

Many case studies show the real benefits of AI for API testing. These stories show how different companies used AI to make their software development process faster. They also improved the quality of their applications and gained an edge over others.

A leading e-commerce company used an AI-driven API testing solution. This made their test execution faster. It also improved their test coverage with NLP techniques. Because of this, they had quicker release cycles and better application performance. Users enjoyed a better experience as a result.

| Company | Industry | Benefits Achieved |
| --- | --- | --- |
| Company A | E-commerce | Reduced testing time by 50%, increased test coverage by 20%, improved release cycles |
| Company B | Finance | Enhanced API security, reduced vulnerabilities, achieved regulatory compliance |
| Company C | Healthcare | Improved data integrity, ensured HIPAA compliance, optimized application performance |

Popular AI-Powered API Testing Tools

- Testim: AI helps you set up and maintain test automation.

- Mabl: Tests that fix themselves and adapt to changes in the API.

- Applitools: Intelligent checking using visual validation.

- RestQA: AI-driven API testing based on different scenarios.

Benefits of AI in API Testing

- Less Manual Effort: It automates repeated tasks, like creating test cases.

- Better Accuracy: AI reduces the chances of human errors in testing.

- Quicker Feedback: Spot issues faster using intelligent analysis.

- Easier Scalability: Handle large test suites easily.

Challenges in AI-Driven API Testing

- Data Quality Matters: Good data is important for AI models to learn and get better.

- Hard to Explain: It can be hard to see how AI makes its choices.

- Extra Work to Set Up: At first, setting up and adding AI tools can require more work.

Ensuring Data Privacy and Security in AI-Based Tests

AI-based testing relies on a large amount of data. It’s crucial to protect that data. The information used to train AI models can be sensitive. Therefore, we need strong security measures in place. These measures help stop unauthorized access and data breaches.

Organizations must focus on keeping data private and safe during the testing process. They should use encryption and make the data anonymous. It’s important to have secure methods to store and send data. Also, access to sensitive information should be limited based on user roles and permissions.

Good management of test environments is key to keeping data secure. Test environments need to be separate from the systems we use daily. Access to these environments should be well controlled. This practice helps stop any data leaks that might happen either accidentally or intentionally.

Conclusion

In conclusion, adding AI to API testing changes how testing is done. This is very important for API test automation. It makes testing faster and more accurate. AI also helps to predict results better. By using AI, organizations can improve their test coverage and processes. They can achieve this by automating test case generation and managing data with AI. Many success stories show the big benefits of AI in API testing. However, there are some challenges, like needing special skills and protecting data. Even so, the positive effects of AI in API testing are clear. Embracing AI will help improve your testing strategy and keep you updated in our fast-changing tech world.

Frequently Asked Questions

- How does AI improve API testing accuracy?

AI improves API testing. It creates extra test cases and carefully checks test results. This helps find small problems that regular testing might overlook. Because of this, we have better API tests and software that you can trust more.

- Can AI in API testing reduce the time to market?

AI speeds up the testing process by using automation. This means there is less need for manual work. It makes test execution better. As a result, software development can go faster. It also helps reduce the time needed to launch a product.

- Are there any specific AI tools recommended for API testing?

Some popular API testing tools that people find efficient and functional are Parasoft SOAtest and others that use OpenAI's technology for advanced test case generation. The best tool for you will depend on your specific needs.

by Anika Chakraborty | Dec 13, 2024 | AI Testing, Blog, Latest Post |

Effective prompt engineering for question answering is a key skill in natural language processing (NLP) and text generation. It involves crafting clear and specific prompts to achieve precise outcomes from generative AI models. This is especially beneficial in QA and AI Testing Services, where tailored prompts can enhance automated testing, identify edge cases, and validate software behavior effectively. By focusing on prompt engineering, developers and QA professionals can streamline testing processes, improve software quality, and ensure a more efficient approach to detecting and resolving issues.

Key Highlights

- Prompt Engineering for QA is important for getting the best results from generative AI models in quality assurance.

- Good prompts give context and explain what kind of output is expected. This helps AI provide accurate responses.

- Techniques such as chain-of-thought prompting, few-shot learning, and AI-driven prompt creation play a big role in Prompt Engineering for QA.

- Real-life examples show how Prompt Engineering for QA has made test scenarios automatic, improved user experience, and helped overall QA processes.

- Despite challenges like technical limits, Prompt Engineering for QA offers exciting opportunities with the growth of AI and automation.

Understanding Prompt Engineering

In quality assurance, Prompt Engineering for QA is really important. It links what people need with what AI can do. This method helps testers improve their automated testing processes. Instead of only using fixed test cases, QA teams can use Prompt Engineering for QA. This allows them to benefit from AI’s strong reasoning skills. As a result, they can get better accuracy, work more efficiently, and make users happier with higher-quality software.

The Fundamentals of Prompt Engineering

At its core, Prompt Engineering for QA means crafting clear instructions for AI models. This allows AI to give precise answers that support human intelligence. QA experts skilled in Prompt Engineering understand what AI can do and what it cannot. They draw on their computer science knowledge to adapt prompts to the needs of software testing. For example, instead of just saying, “Test the login page,” a more effective prompt could be:

- Make test cases for a login page.

- Consider different user roles.

- Add possible error situations.

In prompt engineering for QA, this level of detail is usual. It helps ensure that all tests are complete. This also makes certain that the results work well.

The Significance of Prompt Engineering for QA

Prompt engineering for quality assurance has changed our approach to QA. It helps AI tools test better and faster. With simple prompts, QA teams can make their own test cases, identify potential bugs, and write test reports.

Prompt Engineering for QA helps teams find usability problems early. This shift means they fix issues before users encounter them instead of after. Because of this, users enjoy smoother and better experiences. Therefore, Prompt Engineering for QA is key in today’s quality assurance processes.

The Mechanics of Prompt Engineering

To get the best results from prompt engineering for QA, testers should create prompts that match what AI can do and the tasks they need to complete, so that the output is relevant and specific. They should provide clear instructions and use important keywords. Adding specific examples, like code snippets, can help too. By doing this, QA teams can effectively use prompt engineering to improve software.

Types of Prompts in QA Contexts

The versatility of prompt engineering for quality assurance (QA) is clear. It can be used for various tasks. Here are some examples:

- Test Case Generation Prompts: “Make test cases for a login page with various user roles.”

- Bug Prediction Prompts: “Check this module for possible bugs, especially in tricky situations.”

- Test Report Prompts: “Summarize test results, highlighting key issues and areas where we can improve.”

These prompts display how helpful prompt engineering is for quality assurance. It makes sure that the testing is complete and works well.

Sample Prompts for Testing Scenarios

1. Automated Test Script Generation

Prompt:“Generate an automated test script for testing the login functionality of a web application. The script should verify that a user can successfully log in using valid credentials and display an error message when invalid credentials are entered.”

2. Bug Identification in Test Scenarios

Prompt:“Analyze this test case for potential issues in edge cases. Highlight any scenarios where bugs might arise, such as invalid input types or unexpected user actions.”

3. Test Data Generation

Prompt:“Generate a set of valid and invalid test data for an e-commerce checkout process, including payment information, shipping address, and product selections. Ensure the data covers various combinations of valid and invalid inputs.”

4. Cross-Platform Compatibility Testing

Prompt:“Create a test plan to verify the compatibility of a mobile app across Android and iOS platforms. The plan should include test cases for different screen sizes, operating system versions, and device configurations.”

5. API Testing

Prompt:“Generate test cases for testing the REST API of an e-commerce website. Include tests for product search, adding items to the cart, and placing an order, ensuring that correct status codes are returned and that the response time is within acceptable limits.”

6. Performance Testing

Prompt:“Design a performance test case to evaluate the load time of a website under high traffic conditions. The test should simulate 1,000 users accessing the homepage and ensure it loads within 3 seconds.”

7. Security Testing

Prompt:“Write a test case to check for SQL injection vulnerabilities in the search functionality of a web application. The test should include attempts to inject malicious SQL queries through input fields and verify that proper error handling is in place.”

8. Regression Testing

Prompt:“Create a regression test suite to validate the key functionalities of an e-commerce website after a new feature (product recommendations) is added. Ensure that the checkout process, user login, and search functionalities are not impacted.”

9. Usability Testing

Prompt:“Generate a set of test cases to evaluate the usability of a mobile banking app. Include scenarios such as ease of navigation, clarity of instructions, and intuitive design for performing tasks like transferring money and checking account balances.”

10. Localization and Internationalization Testing

Prompt:“Create a test plan to validate the localization of a website for different regions (US, UK, and Japan). Ensure that the content is correctly translated, date formats are accurate, and currencies are displayed properly.”

Each example shows how helpful and adaptable prompt engineering can be for quality assurance in various testing situations.

Crafting Effective Prompts for Automated Testing

Creating strong prompts is important for good prompt engineering in QA. They assist in answering user queries. When prompts provide details like the testing environment, target users, and expected outcomes, they result in better AI answers. Refining these prompts makes prompt engineering even more useful for QA in automated testing.
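One way to make sure every prompt carries these details is to build it from a small template. The following is a minimal sketch, assuming a hypothetical helper named `build_test_prompt`; the field names and wording are illustrative and not tied to any particular AI tool.

```python
def build_test_prompt(feature, environment, target_users, expected_outcomes):
    """Assemble a test-generation prompt that states environment, users, and outcomes."""
    lines = [
        f"Generate test cases for: {feature}.",
        f"Testing environment: {environment}.",
        f"Target users: {', '.join(target_users)}.",
        "Expected outcomes:",
    ]
    # One bullet per expected outcome keeps the prompt easy to review and refine.
    lines += [f"- {outcome}" for outcome in expected_outcomes]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_test_prompt(
        feature="login page",
        environment="Chrome on a staging server",
        target_users=["admin", "guest"],
        expected_outcomes=[
            "Valid credentials log the user in",
            "Invalid credentials show an error message",
        ],
    ))
```

Keeping the template in code also makes it easy to refine the wording in one place and reuse it across test suites.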

Advanced Techniques in Prompt Engineering

New methods are expanding what we can achieve with prompt engineering in quality assurance.

- Chain-of-Thought Prompting: This simplifies difficult tasks into easy steps. It helps AI think more clearly.

- Dynamic Prompt Generation: This uses machine learning to enhance prompts based on what you input and your feedback.

These methods show how prompt engineering for QA is evolving. They are designed to handle more complex QA tasks effectively.
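To illustrate chain-of-thought prompting, the sketch below turns a testing task into an ordered list of reasoning steps before asking for the final output. The helper name `chain_of_thought_prompt` and the instruction wording are assumptions made for this example, not a standard format.

```python
def chain_of_thought_prompt(task, steps):
    """Build a prompt that asks the model to reason through numbered steps in order."""
    numbered = [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join([
        f"Task: {task}",
        "Work through the following steps in order, explaining your reasoning at each step:",
        *numbered,
        "Finally, list the resulting test cases.",
    ])


if __name__ == "__main__":
    print(chain_of_thought_prompt(
        "Test the checkout flow of an e-commerce site",
        [
            "Identify the inputs the user provides",
            "List the valid and invalid values for each input",
            "Describe the expected system response for each combination",
        ],
    ))
```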

Leveraging AI for Dynamic Prompt Engineering

AI and machine learning play a pivotal role in prompt engineering for quality assurance (QA) with generative AI. They help make prompts better over time. By analyzing a lot of data and updating prompts regularly, AI-driven prompt engineering offers more accurate and useful results for various testing tasks.

Integrating Prompt Engineering into Workflows

To use prompt engineering for QA effectively, companies should build it into their existing workflows. It’s important to teach QA teams how to create prompts well. Collaborating with data scientists is also vital. This approach will improve testing efficiency while keeping current processes working well.

Case Studies: Real-World Impact of Prompt Engineering

Prompt engineering for QA has delivered excellent results in many industries.

| Industry | Use Case | Outcome |
| --- | --- | --- |
| E-commerce | Improved chatbot accuracy | Faster responses, enhanced user satisfaction. |
| Software Development | Automated test case generation | Reduced testing time, expanded test coverage. |
| Healthcare | Enhanced diagnostic systems | More accurate results, better patient care. |

These examples show how prompt engineering can improve today’s quality assurance (QA) practices.

Challenges and Solutions in Prompt Engineering

| S. No | Challenges | Solutions |
| --- | --- | --- |
| 1 | Complexity of Test Cases | Break down test cases into smaller, manageable parts; use AI to generate a variety of test cases automatically. |
| 2 | Ambiguity in Requirements | Make prompts more specific by including context, expected inputs, and the expected type of output; use structured templates for clarity. |
| 3 | Coverage of Edge Cases | Use AI-driven tools to identify potential edge cases; create modular prompts to test multiple variations of inputs. |
| 4 | Keeping Test Scripts Updated | Regularly update prompts to reflect any system changes; automate the process of checking test script relevance with CI/CD integration. |
| 5 | Scalability of Test Cases | Design prompts that allow for scalability, such as allowing dynamic data inputs; use reusable test components for large test suites. |
| 6 | Handling Large and Dynamic Systems | Use data-driven testing to scale test cases effectively; automate the generation of test cases to handle dynamic system changes. |
| 7 | Integration with Continuous Testing | Integrate prompts with CI/CD pipelines to automate testing; create prompts that support real-time feedback and debugging. |
| 8 | Managing Test Data Variability | Design prompts that support a wide range of data types; leverage synthetic data generation to ensure complete test coverage. |
| 9 | Understanding Context for Multi-Platform Testing | Provide specific context for each platform in prompts (e.g., Android, iOS, web); use cross-platform testing frameworks like BrowserStack to ensure uniformity across devices. |
| 10 | Reusability and Maintenance of Prompts | Develop reusable templates for common testing scenarios; implement a version control system for prompt updates and changes. |

Conclusion

Prompt Engineering for QA is changing the way we test software. It uses AI to make testing more accurate and efficient. This approach includes methods like chain-of-thought prompting and AI-created prompts, which help teams tackle tough challenges by working through them step by step. As AI and automation continue to grow, Prompt Engineering for QA has the power to transform QA work for good. By adopting this new strategy, companies can build better software and offer a great experience for their users.

Frequently Asked Questions

- What is Prompt Engineering and How Does It Relate to QA?

Prompt engineering in quality assurance means creating clear instructions for a machine learning model, like an AI language model. The aim is to help the AI generate the desired output without needing prior examples or past experience. This output can include test cases, bug reports, or improvements to code. In the end, this process enhances software quality by providing specific information.

- Can Prompt Engineering Replace Traditional QA Methods?

Prompt engineering supports traditional QA methods, but it can't replace them. AI tools that use effective prompts can automate some testing jobs. They can also help teams reason through complex tasks more consistently. Still, human skills are very important for tasks that need critical thinking, industry know-how, and judging user experience.

- What Are the Benefits of Prompt Engineering for QA Teams?

Prompt engineering helps QA teams work better and faster. It allows them to achieve their desired outcomes more easily. With the help of AI, testers can automate their tasks. They receive quick feedback and can tackle tougher problems. Good prompts assist AI in providing accurate responses. This results in outputs that enhance the quality of software.

- Are There Any Tools or Platforms That Support Prompt Engineering for QA?

Many tools and platforms are being made to help with prompt engineering for quality assurance (QA). These tools come with ready-made prompt templates. They also let you connect AI models and use automated testing systems. This helps QA teams use this useful method more easily.

by Charlotte Johnson | Jul 18, 2024 | AI Testing, Blog, Featured |

Large Language Model (LLM) software testing requires a different approach compared to conventional mobile, web, and API testing. This is due to the fact that the output of such LLM or AI applications is unpredictable. A simple example is that even if you give the same prompt twice, you will receive unique outputs from the LLM model. We faced similar challenges when we ventured into GenAI development. So based on our experience of testing the AI applications we developed and other LLM testing projects we have worked on, we were able to develop a strategy for testing AI and LLM solutions. So in this blog, we will be helping you get a comprehensive understanding of LLM software testing.

LLM Software Testing Approach

By identifying the quality problems associated with LLMs, you can effectively strategize your LLM software testing approach. So let’s start by comprehending the prevalent LLM quality and safety concerns and learn how to find them with LLM quality checks.

Hallucination

As the word suggests, Hallucination is when your LLM application starts providing irrelevant or nonsensical responses. It is in reference to how humans hallucinate and see things that do not exist in real life and think them to be real.

Example:

Prompt: How many people are living on the planet Mars?

Response: 50 million people are living on Mars.

How to Detect Hallucinations?

Given that the LLM can hallucinate in multiple ways for different prompts, detecting these hallucinations is a huge challenge that we have to overcome during LLM software testing. We recommend using the following methods:

Check Prompt-Response Relevance – Checking the relevance between a given prompt and response can assist in recognizing hallucinations. We can use the BLEU score (which measures how closely a generated text matches reference texts by comparing short sequences of words) and the BERT score (which assesses how similar a generated text is to reference texts by comparing their meanings using BERT language model embeddings) to check the relevance between the prompt and the LLM response.

- BLEU score is calculated with exact matching by utilizing the Python Evaluate library. The score ranges from 0 to 1 and a higher score indicates a greater similarity between your prompt and response.

- BERT score is calculated with semantic matching and it is a powerful evaluation metric to measure text similarity.
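To make the idea of exact matching concrete, here is a simplified, hand-rolled BLEU-style score in plain Python. It is only a sketch: it is not the Evaluate library's implementation, it uses whitespace tokenization, and it defaults to unigrams and bigrams (`max_n=2`) so that short texts do not collapse to zero. The function name `simple_bleu` is an assumption for this example.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def simple_bleu(candidate, reference, max_n=2):
    """Simplified BLEU: geometric mean of clipped n-gram precisions times a brevity penalty."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = Counter(ngrams(cand, n))
        ref_counts = Counter(ngrams(ref, n))
        total = sum(cand_counts.values())
        # Clipping: a candidate n-gram counts at most as often as it occurs in the reference.
        matched = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        if total == 0 or matched == 0:
            return 0.0
        log_precisions.append(math.log(matched / total))
    # The brevity penalty lowers the score of candidates shorter than the reference.
    brevity = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    print(simple_bleu("the cat sat down", "the cat sat"))
```

Because this score only rewards exact word overlap, two texts with the same meaning but different wording score low, which is why a semantic metric such as the BERT score is used alongside it.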

Check Against Multiple Responses – We can check the accuracy of the actual response by comparing it to various randomly generated responses for a given prompt. We can use Sentence Embedding Cosine Distance & LLM Self-evaluation to check the similarity.
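The comparison against multiple responses can be sketched as follows. To keep the example self-contained, word-count vectors stand in for real sentence embeddings; that substitution is an assumption of this sketch, and in practice you would plug in vectors from an embedding model. Cosine distance is simply 1 minus the cosine similarity computed here, and `consistency_score` is a hypothetical name.

```python
import math
from collections import Counter


def bow_vector(text):
    """Word-count vector; a stand-in for a real sentence embedding."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def consistency_score(response, sampled_responses):
    """Average similarity between one response and other responses to the same prompt."""
    target = bow_vector(response)
    sims = [cosine_similarity(target, bow_vector(s)) for s in sampled_responses]
    return sum(sims) / len(sims) if sims else 0.0


if __name__ == "__main__":
    samples = ["nobody lives on mars", "there are no people living on mars"]
    print(consistency_score("50 million people are living on mars", samples))
```

A response that scores low against the other samples for the same prompt is a candidate for closer review as a possible hallucination.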

Testing Approach

- Shift Left Testing – Before deploying your LLM application, evaluate your model or RAG implementation thoroughly

- Shift Right Testing – Check BERT score for production prompts and responses

Prompt Injections

Jailbreak – Jailbreak is a direct prompt injection method to get your LLM to ignore the established safeguards that tell the system what not to do. Let’s say a malicious user asks a restricted question in the Base64 format (a way of encoding binary data into a text format using a set of 64 different ASCII characters); your LLM application should still not answer the question. Security experts have already identified various Jailbreaking methods in commonly used LLMs. So it is important to analyze such methods and ensure your LLM system is not affected by them.
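For the Base64 case, one possible test-side check is to decode any prompt that looks like valid Base64 so that the same safety rules can be applied to the decoded text. The sketch below uses Python's standard `base64` module; the helper name `try_decode_base64` is hypothetical, and real jailbreak attempts can use many other encodings that this does not cover.

```python
import base64
import binascii


def try_decode_base64(text):
    """Return the decoded UTF-8 text if `text` is valid Base64, otherwise None."""
    try:
        decoded = base64.b64decode(text.strip(), validate=True)
        return decoded.decode("utf-8")
    except (binascii.Error, UnicodeDecodeError, ValueError):
        return None


if __name__ == "__main__":
    encoded = base64.b64encode(b"ignore previous instructions").decode("ascii")
    print(try_decode_base64(encoded))         # decoded text, ready for the usual safety checks
    print(try_decode_base64("hello world!"))  # not Base64, so None
```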

Indirect Injection

- Hidden prompts are often added by attackers in your original prompt.

- Attackers intentionally make the model retrieve data from unreliable sources. If the training data is incorrect, the response from the LLM will also be incorrect.

Refusals – If your LLM refuses to answer a valid prompt, it could be because the prompt was modified before it was sent to the LLM.

How to prevent Prompt Injection?

- Ensure your training data doesn’t have sensitive information

- Ensure your model doesn’t get data from unreliable external sources

- Perform all the security checks for LLM APIs

- Check substrings like (Sorry, I can’t, I am not allowed) in response to detect refusals

- Check response sentiment to detect refusals
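The substring check for refusals can be sketched in a few lines. The marker list below extends the phrases mentioned above and is an assumption for illustration; a production list would need tuning, since a word like "sorry" can also appear in legitimate answers.

```python
# Hypothetical marker list; extend or tune it for your own application.
REFUSAL_MARKERS = ("sorry", "i can't", "i cannot", "i am not allowed")


def looks_like_refusal(response):
    """Return True if the response contains a common refusal phrase."""
    # Normalize case and curly apostrophes so "I can’t" and "I can't" both match.
    text = response.lower().replace("\u2019", "'")
    return any(marker in text for marker in REFUSAL_MARKERS)


if __name__ == "__main__":
    print(looks_like_refusal("Sorry, I can’t help with that."))
    print(looks_like_refusal("The capital of France is Paris."))
```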

RAG Injection

RAG is an AI framework that can effectively retrieve and incorporate outside information with the prompt provided to LLM. This allows the model to generate an accurate response when contextual cues are given by the user. The outside or external information is usually retrieved and stored in a vector database.

If poisoned data is obtained from an external source, how will LLM respond? Clearly, your model will start producing hallucinated responses. This phenomenon in LLM software testing is referred to as RAG injection.

Data Leakage

Data Leakage occurs when confidential or personal information is exposed either through a Prompt or LLM response.

Data Leak from Prompt – Let’s assume a user mentions their credit card number or password in their prompt. In that case, the LLM application must identify this information to be confidential even before it sends the request to the model for processing.

Data Leak from Response – Let’s take a Healthcare LLM application as an example here. Even if a user asks for medical records, the model should never disclose sensitive patient information or personal data. The same applies to other types of LLM applications as well.

How to prevent Data Leakage?

- Ensure training data doesn’t store any personal or confidential information.

- Use Regex to check all the incoming prompts or outgoing responses for Personally Identifiable Information (PII).
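A minimal sketch of such a regex check is shown below. The two patterns (email addresses and 13 to 16 digit card-like numbers) are deliberately simple assumptions for illustration; they will miss many PII formats and can flag other long digit sequences, so treat them as a starting point rather than a complete detector.

```python
import re

# Illustrative patterns only; real PII detection needs a broader, validated set.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def find_pii(text):
    """Return a dict mapping each PII type found in `text` to its matches."""
    return {
        name: pattern.findall(text)
        for name, pattern in PII_PATTERNS.items()
        if pattern.search(text)
    }


if __name__ == "__main__":
    print(find_pii("Contact jane@example.com, card 4111 1111 1111 1111"))
```

The same function can be run on incoming prompts before they reach the model and on outgoing responses before they reach the user.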

Grounding Issues

Grounding is a method for tailoring your LLM to a particular domain, persona, or use case. We can cover this in our LLM software testing approach through prompt instructions. When an LLM is limited to a specific domain, all of its responses must fall within that domain. So manual testers have a vital responsibility here in identifying any LLM grounding problems.

Testing Approach

- Ask multiple questions that are not relevant to the Grounding instructions.

- Add an active response monitoring mechanism in Production to check the Groundedness score.

Token Usage

There are numerous LLM APIs in the market that charge a fee for the tokens generated from the prompts. If your LLM application starts generating more tokens after a new deployment, this will result in a surge in the monthly billing for API usage.

The pricing of LLM products for many companies is typically determined by Token consumption and other resources utilized. If you don’t calculate & monitor token usage, your LLM product will not make the expected revenue from it.

Testing Approach

- Monitor token usage and the monthly cost constantly.

- Ensure the response limit is working as expected before each deployment.

- Always look for ways to optimize token usage.
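The monitoring steps above can be backed by a small check in your test suite. In this sketch the per-1,000-token prices passed in by the caller and the 10% tolerance are placeholder assumptions, not real vendor pricing, and the function names are hypothetical.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  price_per_1k_prompt, price_per_1k_completion):
    """Estimate the cost of one call from token counts and per-1,000-token prices."""
    return ((prompt_tokens / 1000) * price_per_1k_prompt
            + (completion_tokens / 1000) * price_per_1k_completion)


def usage_regressed(baseline_tokens, current_tokens, tolerance=0.10):
    """Return True when current usage exceeds the baseline by more than `tolerance`."""
    return current_tokens > baseline_tokens * (1 + tolerance)


if __name__ == "__main__":
    # Placeholder prices, chosen only to make the arithmetic easy to follow.
    print(estimate_cost(1000, 2000, price_per_1k_prompt=0.5, price_per_1k_completion=1.0))
    print(usage_regressed(baseline_tokens=1000, current_tokens=1200))
```

Running a check like `usage_regressed` against a fixed set of prompts before each deployment helps catch token growth before it shows up on the monthly bill.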

General LLM Software Testing Tips

For effective LLM software testing, there are several key steps that should be followed. The first step is to clearly define the objectives and requirements of your application. This will provide a clear roadmap for testing and help determine what aspects need to be focused on during the testing process.

Moreover, continuous integration (CI) plays an important role in ensuring a smooth development workflow by constantly integrating new code into the existing codebase while running automated tests simultaneously. This helps catch any issues early on before they pile up into bigger problems.

It is crucial to have a dedicated team responsible for monitoring and managing quality assurance throughout the entire development cycle. A competent team will ensure effective communication between developers and testers resulting in timely identification and resolution of any issues found during testing.

Conclusion:

LLM software testing may seem like a daunting and time-consuming process, but it is an essential step in delivering a high-quality product to end-users. By following the steps outlined above, you can ensure that your LLM application is thoroughly tested and ready for success in the market. As it is an evolving technology, there will be rapid advancements in the way we approach LLM application testing. So make sure to keep your approach up to date as the field changes. Also, make sure to keep an eye out on this space for more informative content.

by admin | Aug 16, 2021 | AI Testing, Blog, Latest Post |

In recent years, organizations have invested significantly in structuring their testing process to ensure continuous releases of high-quality software. But all that streamlining doesn’t apply when artificial intelligence enters the equation. Since the testing process itself is more challenging, organizations are now in dire need of a different approach to keep up with the rapidly increasing inclusion of AI in the systems that are being created. AI technologies are primarily used to enhance our experience with the systems by improving efficiency and providing solutions for problems that require human intelligence to solve. Despite the high complexity of the AI systems that increase the possibility of errors, we have been able to successfully implement our AI testing strategies to deliver the best software testing services to our clients. So in this AI Testing Tutorial, we’ll be exploring the various ways we can handle AI Testing effectively.

Understanding AI

Let’s start this AI Testing Tutorial with a few basics before heading over to the strategies. The fundamental thing to know about machine learning and AI is that you need data, a lot of data. Since data plays a major role in the testing strategy, you would have to divide it into three parts, namely test set, development set, and training set. The next step is to understand how the three data sets work together to train a neural network before testing your AI-based application.

Deep learning systems are developed by feeding large amounts of data into a neural network. The data is fed into the neural network in the form of a well-defined input and expected output. After feeding data into the neural network, you wait for the network to give you a set of mathematical formulae that can be used to calculate the expected output for most of the data points that you feed the neural network.

For example, suppose you were creating an AI-based application to detect deformed cells in the human body. The computer-readable images that are fed into the system make up the input data, while the defined output for each image forms the expected result. That makes up your training set.

Difference between Traditional systems and AI systems

It is always smart to understand any new technology by comparing it with the previous technology. So we can use our experience in testing the traditional systems to easily understand the AI systems. The key to that lies in understanding how AI systems differ from traditional systems. Once we have understood that, we can make small tweaks and adjustments to the already acquired knowledge and start testing AI systems optimally.

Traditional Software Systems

Features:

Traditional software is deterministic, i.e., it is pre-programmed to provide a specific output based on a given set of inputs.

Accuracy:

The accuracy of the software depends upon the developer’s skill and is deemed successful only if it produces an output in accordance with its design.

Programming:

All software functions are designed based on loops and if-then concepts to convert the input data to output data.

Errors:

When any software encounters an error, remediation depends on human intelligence or a coded exit function.

AI Systems:

Now let’s see how AI systems contrast with traditional systems, so that we can structure the testing process around these differences.

Features:

Artificial Intelligence/machine learning is non-deterministic, i.e., the algorithm can behave differently for different runs since the algorithms are continuously learning.

Accuracy:

The accuracy of AI learning algorithms depends on the training set and data inputs.

Programming:

Different input and output combinations are fed to the machine based on which it learns and defines the function.

Errors:

AI systems have self-healing capabilities whereby they resume operations after handling exceptions/errors.

From the differences under each heading, we now have an understanding of what to modify when testing an AI-based application. Now let’s focus on the various testing strategies in the next phase of this AI Testing Tutorial.

Testing Strategy for AI Systems

It is better not to use a generic approach for all use cases, and that is why we have decided to give specific test strategies for specific functionalities. So it doesn’t matter if you are testing standalone cognitive features, AI platforms, AI-powered solutions, or even testing machine learning-based analytical models. We’ve got it all covered for you in this AI Testing Tutorial.

Testing standalone cognitive features

Natural Language Processing:

1. Test for ‘precision’ – the return for a keyword, i.e., the fraction of relevant instances among the total instances retrieved by the NLP system.

2. Test for ‘recall’ – the fraction of relevant instances retrieved over the total number of relevant instances available.

3. Test for true positives, true negatives, false positives, and false negatives. Confirm that FPs and FNs are within the defined error/fallout range.
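The three NLP checks above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the label lists and the `MAX_FALLOUT` threshold are made-up assumptions, and fallout is taken here to be the false positive rate, FP / (FP + TN).

```python
def confusion_counts(expected, predicted):
    """Count TP, TN, FP, FN for binary labels (True means 'relevant')."""
    pairs = list(zip(expected, predicted))
    tp = sum(1 for e, p in pairs if e and p)
    tn = sum(1 for e, p in pairs if not e and not p)
    fp = sum(1 for e, p in pairs if not e and p)
    fn = sum(1 for e, p in pairs if e and not p)
    return tp, tn, fp, fn

def precision(tp, fp):
    """Relevant retrieved instances over all retrieved instances."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Relevant retrieved instances over all relevant instances."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def fallout(fp, tn):
    """False positives over all non-relevant instances."""
    return fp / (fp + tn) if (fp + tn) else 0.0

# Hypothetical ground truth and system output for eight documents.
expected = [True, True, True, False, False, False, True, False]
predicted = [True, True, False, False, True, False, True, False]

MAX_FALLOUT = 0.30  # assumed acceptance threshold, set per project

tp, tn, fp, fn = confusion_counts(expected, predicted)
print("precision:", precision(tp, fp))
print("recall:", recall(tp, fn))
print("fallout within range:", fallout(fp, tn) <= MAX_FALLOUT)
```

In a real suite, the expected labels would come from a reviewed sample of the system's actual inputs.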

Speech recognition inputs:

1. Conduct basic testing of the speech recognition software to see whether the system recognizes speech inputs.

2. Test for pattern recognition to determine if the system can identify when a unique phrase is repeated several times in a known accent and whether it can identify the same phrase when repeated in a different accent.

3. Test how speech translates to the response. For example, a query of “Find me a place where I can drink coffee” should not generate a response with coffee shops and driving directions. Instead, it should point to a public place or park where one can enjoy coffee.

Optical character recognition:

1. Test the basics of optical character recognition (OCR) and optical word recognition by giving the system character or word input to recognize.

2. Test supervised learning to see if the system can recognize characters or words from printed, written or cursive scripts.

3. Test deep learning, i.e., check whether the system can recognize the characters or words from skewed, speckled, or binarized documents.

4. Test constrained outputs by introducing a new word in a document that already has a defined lexicon with permitted words.

Image recognition:

1. Test the image recognition algorithm through basic forms and features.

2. Test supervised learning by distorting or blurring the image to determine the extent of recognition by the algorithm.

3. Test pattern recognition by replacing cartoons with real images, for example by showing a real dog instead of a cartoon dog.

4. Test deep learning scenarios to see if the system can find a portion of an object in a large image canvas and complete a specific action.

Next in this AI Testing Tutorial, we will focus on strategies for algorithm testing, API integration, and related areas, as they are very important when it comes to testing AI platforms.

Algorithm testing:

1. Check the cumulative accuracy of hits (true positives and true negatives) over misses (false positives and false negatives).

2. Split the input data into separate sets for training the algorithm and for testing it.

3. If the algorithm uses ambiguous datasets in which the output for a single input is not known, then the software should be tested by feeding a set of inputs and checking if the output is related. Such relationships must be soundly established to ensure that the algorithm doesn’t have defects.

4. If you are working with an AI that involves neural networks, check how much it has learned from the data you trained it with. Running the trained network on the training data you fed it shows how well it fits that data.
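Point 3 above, checking that outputs are related when the exact output for a single input is not known, can be sketched as a relation check over a set of inputs. In this minimal example, `predict_delivery_minutes` is a hypothetical stand-in for the algorithm under test, and the chosen relation (a longer distance must not give a shorter estimate) is an assumption for illustration only.

```python
def predict_delivery_minutes(distance_km):
    """Hypothetical stand-in for a trained model; exact outputs are not known in advance."""
    return 12.0 + 3.5 * distance_km

def check_monotonic(model, inputs):
    """Relation under test: a larger input must not yield a smaller output."""
    outputs = [model(x) for x in sorted(inputs)]
    return all(a <= b for a, b in zip(outputs, outputs[1:]))

distances = [8.0, 1.5, 3.0, 12.0]
relation_holds = check_monotonic(predict_delivery_minutes, distances)
print("relation holds:", relation_holds)
```

The same pattern works for other relations, such as checking that a small change to the input does not change the output class.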

The Development set

However, the training set alone is not enough to evaluate the algorithm. Take a neural network trained to detect deformed cells in images as an example. In most cases, it will correctly identify deformed cells in images that it has seen several times, but it may perform differently when fed with fresh images. In real-life usage, the algorithm will only get one chance to assess every image, and that will determine its level of accuracy and reliability. So the major challenge is knowing how well the algorithm will work when presented with a new set of data that it wasn’t trained on.

This new set of data is called the development set. It is the data set that determines how you modify and adjust your neural network model. If the network performs well on both the training and development sets, it is good enough for day-to-day usage.

But if the network doesn’t do well on the development set, you need to tweak the neural network model and train it again using the training set. After that, you need to evaluate the new performance of the network using the development set. You could also have several neural networks and select one for your application based on its performance on your development set.
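As a rough illustration of selecting a model by its development-set performance, the sketch below scores three candidates on both sets and picks the best one. Everything here is a simplifying assumption: the data is invented, and the "models" are plain threshold rules standing in for trained neural networks.

```python
def accuracy(model, dataset):
    """Fraction of examples where the model's prediction matches the label."""
    correct = sum(1 for x, label in dataset if model(x) == label)
    return correct / len(dataset)

# Hypothetical data: (deformation_score, is_deformed)
training_set = [(0.1, False), (0.2, False), (0.4, False),
                (0.7, True), (0.8, True), (0.9, True)]
development_set = [(0.15, False), (0.45, False), (0.55, True), (0.85, True)]

# Stand-ins for several trained networks.
candidates = {
    "threshold_0.3": lambda x: x > 0.3,
    "threshold_0.5": lambda x: x > 0.5,
    "threshold_0.75": lambda x: x > 0.75,
}

train_scores = {name: accuracy(m, training_set) for name, m in candidates.items()}
dev_scores = {name: accuracy(m, development_set) for name, m in candidates.items()}

# Select on development-set performance, not on training performance.
best_name = max(dev_scores, key=dev_scores.get)
print(best_name, train_scores[best_name], dev_scores[best_name])
```

A large gap between the training score and the development score for the same candidate is the signal to tweak the model and train again.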

API integration:

1. Verify the input request and response from each application programming interface (API).

2. Conduct integration testing of API and algorithms to verify the reconciliation of the output.

3. Test the communication between components to verify the input, the response returned, and the response format & correctness as well.

4. Verify request-response pairs.
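The API checks above can be sketched as a small request-response validation loop. To stay self-contained, the example calls `fake_sentiment_api`, a hypothetical stand-in rather than a real service; the field names `label` and `confidence` are likewise assumptions.

```python
import json

def fake_sentiment_api(request_body):
    """Hypothetical stand-in for a real API call; takes and returns JSON strings."""
    text = json.loads(request_body)["text"]
    label = "positive" if "good" in text.lower() else "negative"
    return json.dumps({"label": label, "confidence": 0.9})

def validate_response(raw_response):
    """Check response format and basic correctness; return a list of problems."""
    try:
        body = json.loads(raw_response)
    except json.JSONDecodeError:
        return ["response is not valid JSON"]
    if not isinstance(body, dict):
        return ["response is not a JSON object"]
    problems = []
    if body.get("label") not in {"positive", "negative"}:
        problems.append("unexpected label")
    confidence = body.get("confidence")
    if not isinstance(confidence, (int, float)) or not 0.0 <= confidence <= 1.0:
        problems.append("confidence outside [0, 1]")
    return problems

# Request-response pairs: each request with the label we expect back.
request_response_pairs = [
    ({"text": "The service was good"}, "positive"),
    ({"text": "The app keeps crashing"}, "negative"),
]

results = []
for request, expected_label in request_response_pairs:
    raw = fake_sentiment_api(json.dumps(request))
    problems = validate_response(raw)
    if not problems and json.loads(raw)["label"] != expected_label:
        problems.append("label mismatch")
    results.append(problems)

print(results)
```

Against a real API, `fake_sentiment_api` would be replaced by an HTTP call, while the validation and pair comparison stay the same.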

Data source and conditioning testing:

1. Verify the quality of data from the various systems by checking their data correctness, completeness & appropriateness along with format checks, data lineage checks, and pattern analysis.

2. Test for both positive and negative scenarios.

3. Verify the transformation rules and logic applied to the raw data to get the output in the desired format. The testing methodology/automation framework should function irrespective of the nature of the data, be it tables, flat files, or big data.

4. Verify if the output queries or programs provide the intended data output.
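A minimal sketch of the completeness, format, and correctness checks described above, covering one positive and two negative scenarios. The record fields, the date pattern, and the age range are illustrative assumptions, not rules from any particular system.

```python
import re

DATE_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}$")  # assumed format: YYYY-MM-DD
REQUIRED_FIELDS = ("customer_id", "signup_date", "age")

def check_record(record):
    """Return a list of data quality issues found in one record."""
    issues = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            issues.append(f"missing {field}")
    # Format: the date must match the expected pattern.
    date = record.get("signup_date")
    if date and not DATE_PATTERN.match(date):
        issues.append("bad date format")
    # Correctness: the age must fall in a plausible range.
    age = record.get("age")
    if isinstance(age, int) and not 0 <= age <= 120:
        issues.append("age out of range")
    return issues

records = [
    {"customer_id": "C001", "signup_date": "2024-03-15", "age": 34},  # positive case
    {"customer_id": "C002", "signup_date": "15/03/2024", "age": 29},  # wrong format
    {"customer_id": "", "signup_date": "2024-01-02", "age": 150},     # missing id, bad age
]

report = [check_record(r) for r in records]
print(report)
```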

System regression testing:

1. Conduct user interface and regression testing of the systems.

2. Check for system security, i.e., static and dynamic security testing.

3. Conduct end-to-end implementation testing for specific use cases like providing an input, verifying data ingestion & quality, testing the algorithms, verifying communication through the API layer, and reconciling the final output on the data visualization platform with the expected output.

Testing of AI-powered solutions

In this part of the AI Testing Tutorial, we will be focusing on strategies to use when testing AI-powered solutions.

RPA testing framework:

1. Use open-source automation or functional testing tools such as Selenium, Sikuli, Robot Class, AutoIT, and so on for multiple purposes.

2. Use a combination of pattern, text, voice, image, and optical character recognition testing techniques with functional automation for true end-to-end testing of applications.

3. Use flexible test scripts with the ability to switch between machine language programming (which is required as an input to the robot) and high-level language for functional automation.

Chatbot testing framework:

1. Maintain the configurations of basic and advanced semantically equivalent sentences with formal & informal tones, and complex words.

2. Generate automated scripts in Python for execution.

3. Test the chatbot framework using semantically equivalent sentences and create an automated library for this purpose.

4. Automate an end-to-end scenario that involves sending a request to the chatbot, getting a response, and finally validating the response action against the expected output.
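The idea of testing with semantically equivalent sentences can be sketched as follows. Here `fake_chatbot` is a hypothetical keyword-based stand-in for the chatbot under test, and the intent name and sentences are invented for illustration.

```python
def fake_chatbot(message):
    """Hypothetical stand-in for the chatbot under test; returns an intent name."""
    text = message.lower()
    if any(phrase in text for phrase in ("balance", "how much money", "funds")):
        return "check_balance"
    if any(phrase in text for phrase in ("hello", "good morning")):
        return "greeting"
    return "fallback"

# Library of semantically equivalent sentences, keyed by the expected intent.
EQUIVALENT_SENTENCES = {
    "check_balance": [
        "What is my account balance?",              # formal
        "hey, how much money do I have",            # informal
        "Kindly apprise me of my available funds",  # complex wording
    ],
}

def run_suite(chatbot, sentence_library):
    """Send every sentence to the chatbot and collect the mismatches."""
    failures = []
    for expected_intent, sentences in sentence_library.items():
        for sentence in sentences:
            actual = chatbot(sentence)
            if actual != expected_intent:
                failures.append((expected_intent, sentence, actual))
    return failures

failures = run_suite(fake_chatbot, EQUIVALENT_SENTENCES)
print("failures:", failures)
```

Keeping the sentences in a separate library means new phrasings can be added without touching the test logic.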

Testing ML-based analytical models

Organizations build analytical models for the following three main purposes.

Descriptive Analytics:

Historical data analysis and visualization.

Predictive Analytics:

Predicting the future based on past data.

Prescriptive Analytics:

Prescribing a course of action based on past data.

A three-step validation strategy is used while testing the analytical model:

1. Split the historical data into test & train datasets.

2. Train and test the model based on the generated datasets.

3. Report the accuracy of the model for the various generated scenarios.
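The three validation steps can be sketched with the standard library alone. This is a toy illustration under stated assumptions: the historical data is synthetic, and "training" is reduced to placing a threshold midway between the two class means, which stands in for fitting a real analytical model.

```python
import random

def split_dataset(records, test_fraction=0.2, seed=7):
    """Step 1: shuffle a copy of the historical data and split it into train and test sets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) - round(len(shuffled) * test_fraction)
    return shuffled[:cut], shuffled[cut:]

def train_threshold(train_set):
    """Step 2 (toy training): place a threshold midway between the class means."""
    positives = [x for x, label in train_set if label]
    negatives = [x for x, label in train_set if not label]
    return (sum(positives) / len(positives) + sum(negatives) / len(negatives)) / 2

def accuracy(threshold, dataset):
    """Fraction of records the threshold rule classifies correctly."""
    correct = sum(1 for x, label in dataset if (x > threshold) == label)
    return correct / len(dataset)

# Hypothetical historical data: (score, outcome)
historical = [(float(x), x >= 10) for x in range(20)]

train_set, test_set = split_dataset(historical)
threshold = train_threshold(train_set)

# Step 3: report accuracy on both sets.
report = {
    "train_accuracy": accuracy(threshold, train_set),
    "test_accuracy": accuracy(threshold, test_set),
}
print(report)
```

Fixing the seed keeps the split reproducible, so a change in reported accuracy between runs points to the model rather than to the split.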

All types of testing are similar:

It’s natural to feel overwhelmed after seeing such complexity. But a tester who is able to see through the complexity will find that the foundation of testing is quite similar for both AI-based and traditional systems. The specifics might be different, but the processes are almost identical.

First, you need to determine and set your requirements. Then you need to assess the risk of failure for each test case, run the tests, and determine whether the weighted aggregated results are at or above a predefined level. After that, you need to run some exploratory testing to find biased results or bugs, as in regular apps. As we said earlier, you can master AI testing by building on your existing knowledge.
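One way to read "weighted aggregated results" is as a risk-weighted pass rate compared against a predefined level. The sketch below assumes that interpretation; the test names, the risk weights, and the `REQUIRED_LEVEL` threshold are all hypothetical.

```python
# Each entry: (test case name, risk weight, passed?)
test_results = [
    ("recognizes clear speech", 5, True),
    ("handles regional accent", 3, True),
    ("handles background noise", 2, False),
]

def weighted_pass_rate(results):
    """Sum of weights of passing tests over the sum of all weights."""
    total = sum(weight for _, weight, _ in results)
    passed = sum(weight for _, weight, ok in results if ok)
    return passed / total if total else 0.0

REQUIRED_LEVEL = 0.75  # assumed predefined level

score = weighted_pass_rate(test_results)
meets_level = score >= REQUIRED_LEVEL
print(score, meets_level)
```

Weighting by risk means a failure in a high-risk test case lowers the score more than a failure in a low-risk one.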

With all that said, we know that an AI-based system can produce a different output for the same input when it is run again and again, since the ML algorithm is a learning algorithm. Also, most applications today have some type of machine learning functionality to improve how they interact with their users. AI inclusion on a much larger scale is inevitable, as we humans will stop at nothing until the software we create has human-like functionalities. So it’s necessary for us to adapt to the progress of this AI revolution.

Conclusion:

We hope that this AI Testing Tutorial has helped you understand AI algorithms and their nature, so that you can tailor test strategies and test cases to your needs. Applying out-of-the-box thinking is crucial for testing AI-based applications. As a leading QA company, we always implement state-of-the-art strategies and technologies to ensure quality, irrespective of whether the software is AI-based or not.