by admin | Jan 6, 2022 | Automation Testing, Blog, Latest Post |

According to a recent report, the global automation testing market is expected to grow from an already high $20.7 billion to $49.9 billion by 2026. In such a fast-growing domain, you might lose out on a lot of potential if you fail to keep up with the current trends in automation testing. As a leading QA company that provides top-of-the-line automation testing services to all our clients, we have created this list of current trends in automation testing based on real-world scenarios and not assumptions. So let’s take a look at the automation testing trends to keep an eye on in 2022.

Automation Tools Powered by AI and ML

Artificial Intelligence and Machine Learning are two technologies that have the potential to take automation to the next level, as they ease everything from test creation to test maintenance. With the help of AI and ML, we will be able to automate unit tests, validate UI changes, ease regression testing, and detect bugs earlier than before. So we will be able to achieve seamless continuous testing with the least amount of effort and resources. Though such capabilities aren’t fully available now, the prospect of achieving them doesn’t seem to be far away.

Codeless Automation Solutions

Such growth in AI and ML will also fuel the rise of codeless automation solutions, which are slowly gaining popularity. With such solutions, the learning curve can be greatly reduced and a lot of time can be saved. If you’re wondering whether codeless automation sounds a little too similar to Selenium testing, make sure to read our blog about codeless automation testing to know its advantages and to understand how it is different from Selenium testing. As an experienced automation testing company, we have to say that codeless automation will not replace scripted automation. But it will definitely come in handy.

Cloud-Based Solutions

Be it testing or collaboration tools, cloud-based solutions are already flying high and there seems to be no slowing them down in the near future as well. They have so many advantages to offer such as cost-effective infrastructure, reduced execution time with parallel testing, better test coverage, bug management, and so on. With the never-ending pandemic raging on, the implementation of such solutions will definitely be high.

The Emergence of IoT Testing

We are slowly seeing IoT being implemented in more and more products, such as smart speakers and smart home appliances like thermostats and lights. It goes without saying that all these products have to be tested. And since almost all information sharing across these devices is achieved through APIs, the need for API testing will definitely be on the rise, making it an undeniable entry in our list of current trends in automation testing.

Security Testing

Security breaches were also on the rise during the pandemic, and privacy concerns with regard to user data became a talking point. So it will become increasingly difficult to use real-world data to test security. Instead, you’d have to use masking or synthetic test data generation tools to perform security testing, and finish by verifying the results with compliance tools as well.
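
To make the idea of masking concrete, here is a minimal Python sketch. It is only an illustration and not the behaviour of any particular masking tool: the function names, the `email` field, and the `demo-salt` value are all assumptions made for this example.

```python
import hashlib


def mask_email(email: str, salt: str = "demo-salt") -> str:
    """Replace the local part of an email with a short, stable hash.

    The salt value is a placeholder for this sketch; a real setup would
    keep it secret and out of source control.
    """
    local, _, domain = email.partition("@")
    digest = hashlib.sha256((salt + local).encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@{domain}"


def mask_records(records, fields=("email",)):
    """Return copies of the records with the chosen fields masked."""
    masked = []
    for record in records:
        copy = dict(record)  # leave the original record untouched
        for field in fields:
            if field in copy:
                copy[field] = mask_email(copy[field])
        masked.append(copy)
    return masked


if __name__ == "__main__":
    # Hypothetical sample data, not taken from any real system.
    sample = [{"id": 1, "email": "jane.doe@example.com"}]
    print(mask_records(sample))
```

Because the hash is deterministic, the same input always maps to the same masked value, so relationships between records survive the masking step.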

Conclusion

Pretty much every single point we have seen in this list of the current trends in automation testing is about the various tools that will be useful. But we should also make sure to focus on our automation skills and self-improvement to make use of the state-of-the-art tools and methods to achieve continuous testing.

by admin | Dec 24, 2021 | Automation Testing, Blog, Latest Post |

As the name suggests, Endtest.io is a great option if you are looking to automate your end-to-end and regression testing. The best part about Endtest.io is that you can do all this for both mobile and web apps without having to code. The end result is a quicker evaluation of quality for your products, since it is instrumental in overcoming the bottlenecks that come with traditional testing. Being one of the best automation testing companies, we are always on the lookout for the best tools that can streamline our automation testing process. Endtest.io is one such tool that we have found to be very resourceful. So in this blog, we will be exploring why you should consider Endtest.io, and help you get started with it as well.

Why is end-to-end testing (E2E testing) Important?

E2E testing is crucial as it helps to thoroughly test the entire software by modeling and validating real-world scenarios. Since it is executed from the standpoint of an end-user, it helps establish system dependencies and verify that all features and integrations function as intended. So the chances of a system failure caused by the failure of any of the subsystems become much lower.

Why Endtest.io?

If you have done your own research on test automation solutions, you might have come across Selenium, which is a very popular open-source tool. But Selenium on its own does not provide built-in features such as native video recording of tests, integrations with Jenkins, Jira, or Slack, e-mail notifications, and test scheduling. That is where Endtest.io comes into the picture, as it addresses all these issues to give you a complete package.

Endtest.io is simple to use and has a very intuitive user interface. So even junior QA Engineers can work with it without any prior experience. Endtest’s website is also surprisingly simple to use and offers a lot of helpful documentation that can guide you through any doubts. If you are still finding it hard to resolve an issue or if you need additional help, you can reach out to their super responsive customer service via email or chat to get swift replies. Since they are open to suggestions that can help better your workflow or their product, you can approach them with your ideas. In fact, we have already discussed an option to include special characters with their team.

As stated earlier, we can also use Endtest.io to automate regression tests. Similar to E2E testing, regression testing is also very important and it will ensure that a recent program or code modification hasn’t broken any current features of the product. So regression testing becomes a must when introducing new features, repairing errors, or dealing with performance difficulties.

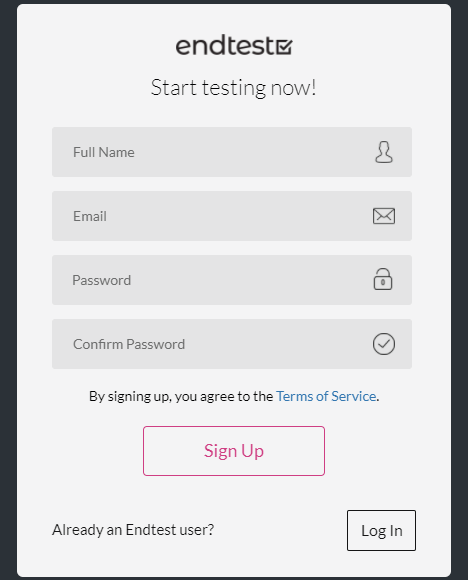

Getting Started with Endtest.io’s End-to-End testing

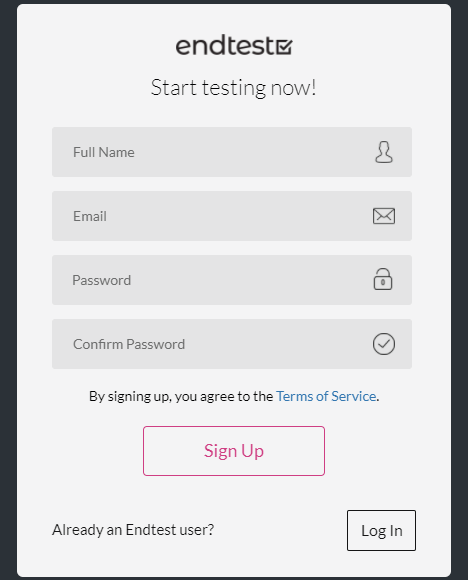

Now that we have seen the capabilities of Endtest.io, let’s find out how you can start using it. First and foremost, you have to sign up for Endtest by visiting this link and then log in using valid credentials. Once your account has been created, install the Endtest.io Chrome Extension (Web test recorder Codeless Automated Testing). You don’t need to download any separate software for Endtest.io to work.

Recording Function

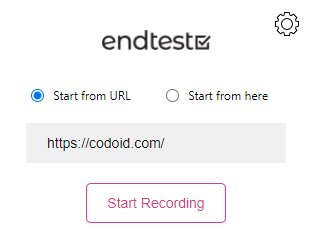

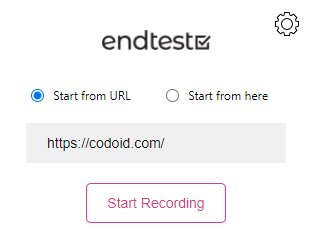

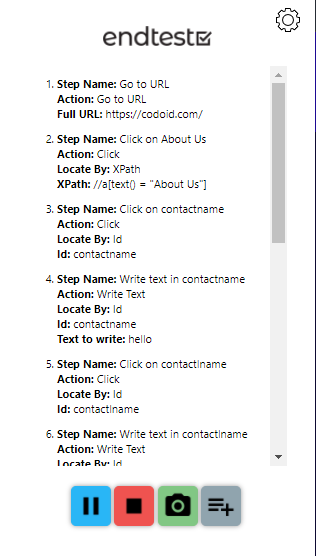

Once you open the Chrome extension, select either the ‘Start from here’ or the ‘Start from URL’ option and hit the ‘Start Recording’ button.

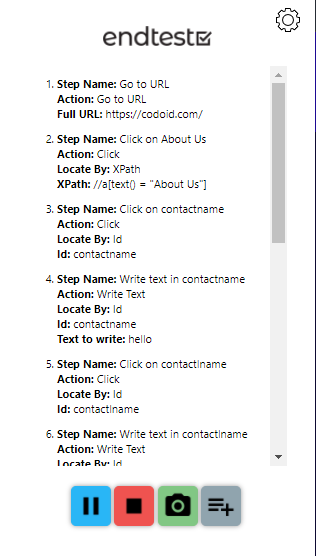

So Endtest.io will now record each and every step you take while performing the test scenario. If needed, you can even pause the recording at any given moment. Once you’ve finished your test scenario, head back to the Endtest.io Chrome Extension and click on the Stop recording button.

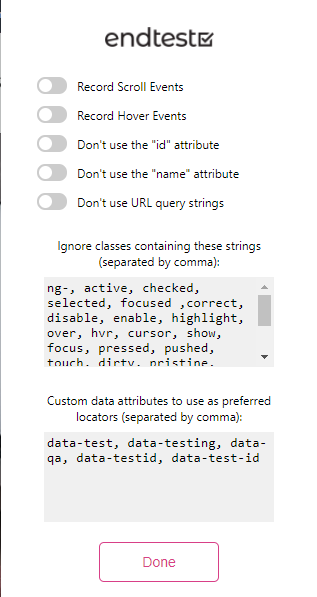

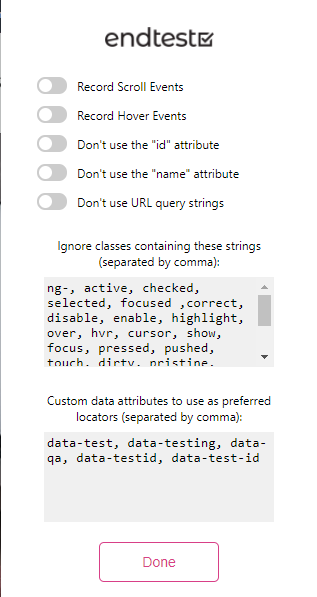

Endtest.io has some predefined settings from which we can choose different options based on the testing requirements and needs. These user-friendly options shown in the image make it even easier for us to use the recording feature with full accuracy.

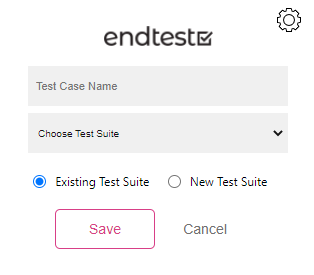

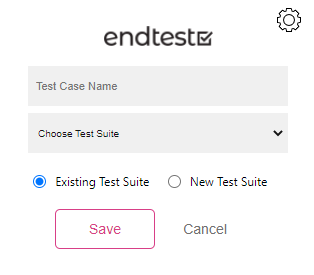

Test Case and Test Suite

So once the recording part has been done, you will be able to assign a name to the test case you just recorded. If you want to add the test case to an existing test suite, you can do so. If not, you can also create a test suite and add the test case to that.

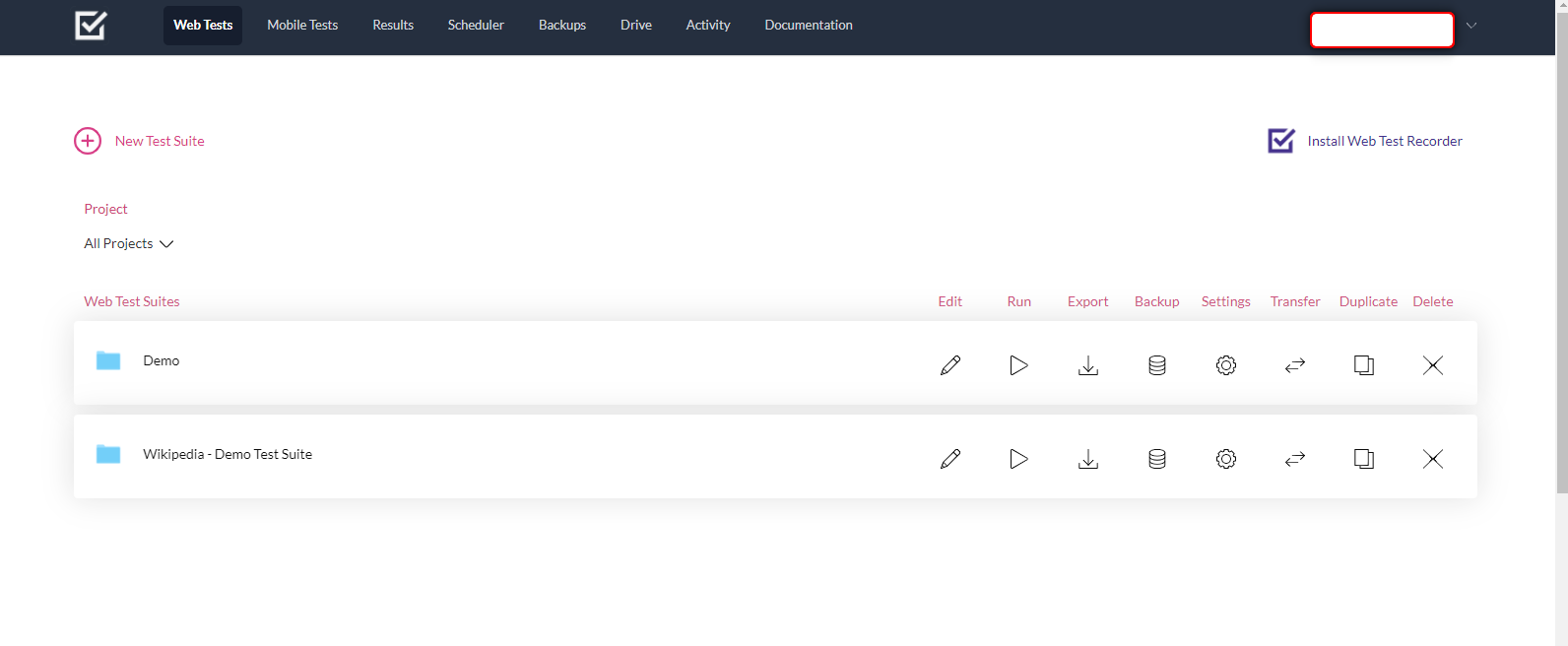

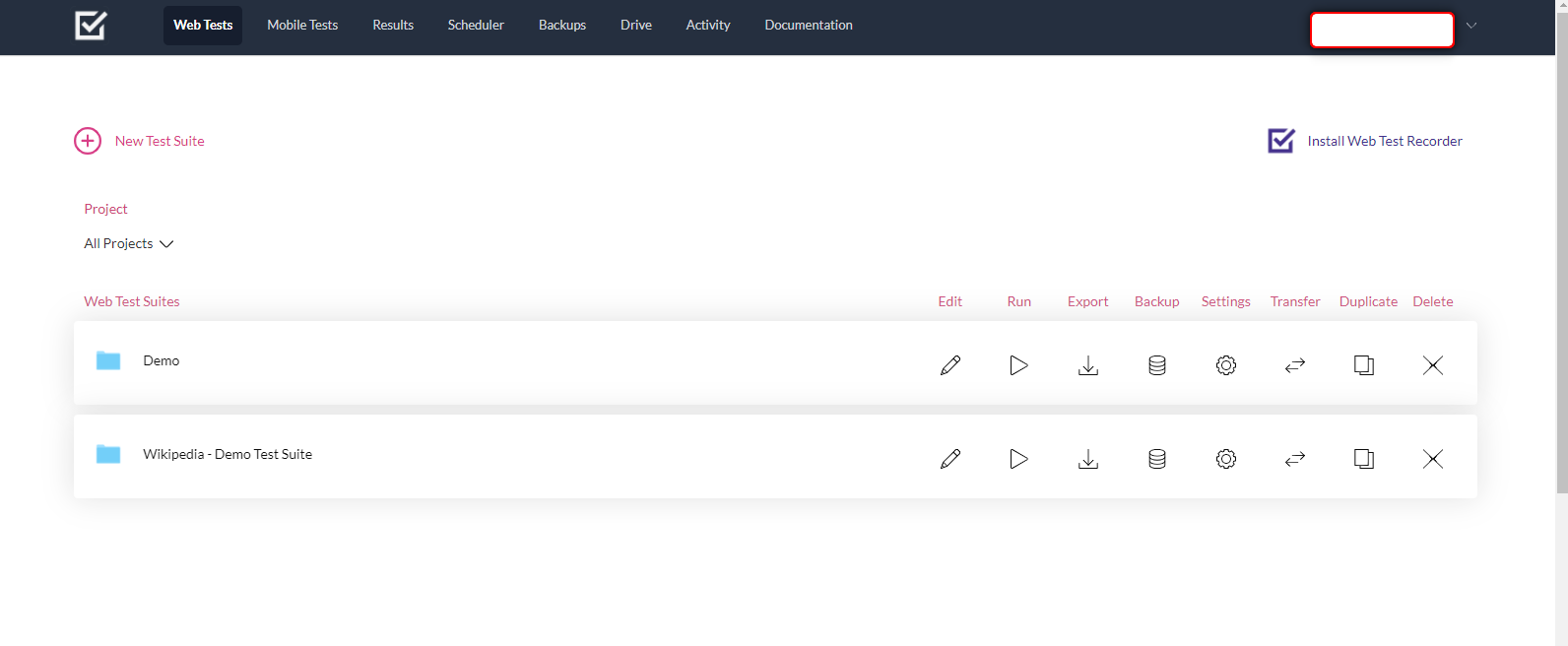

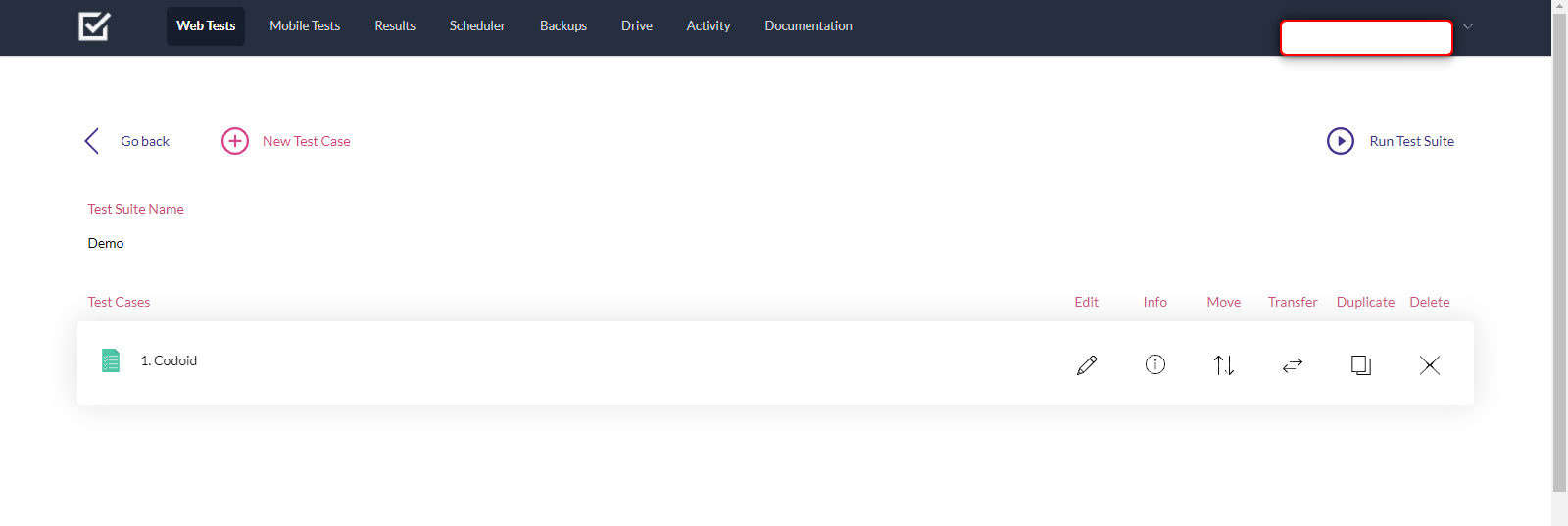

For the sake of explanation, we have named this test case as ‘Codoid’ and created a test suite by the name ‘Demo’. Once you press the ‘Save’ button, you will be taken to the Endtest.io site’s Web Tests page as shown below.

As seen in the image, there are various options such as edit, run, export, and so on. But since we’re not trying to do any of that with the test suite now, let’s just click on the Test Suite named Demo.

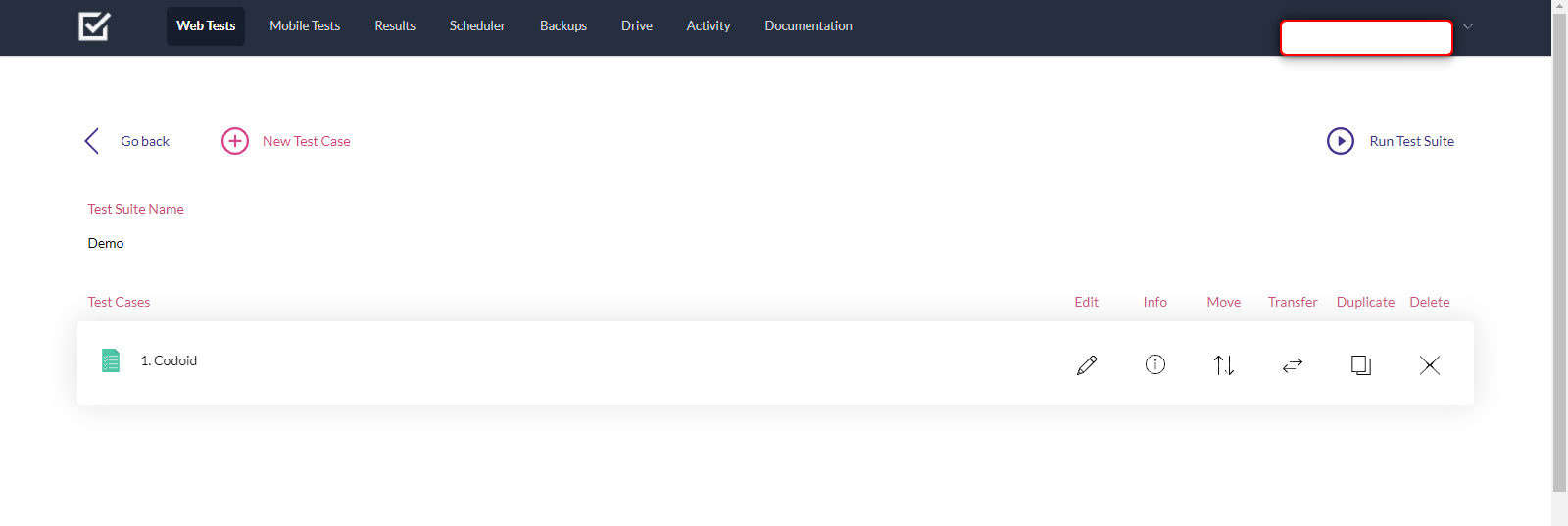

Inside you will find all the test cases that you have created under that particular Test Suite. In this case, we have created just one test case named ‘Codoid’. So let’s click on that to see the steps that were previously recorded.

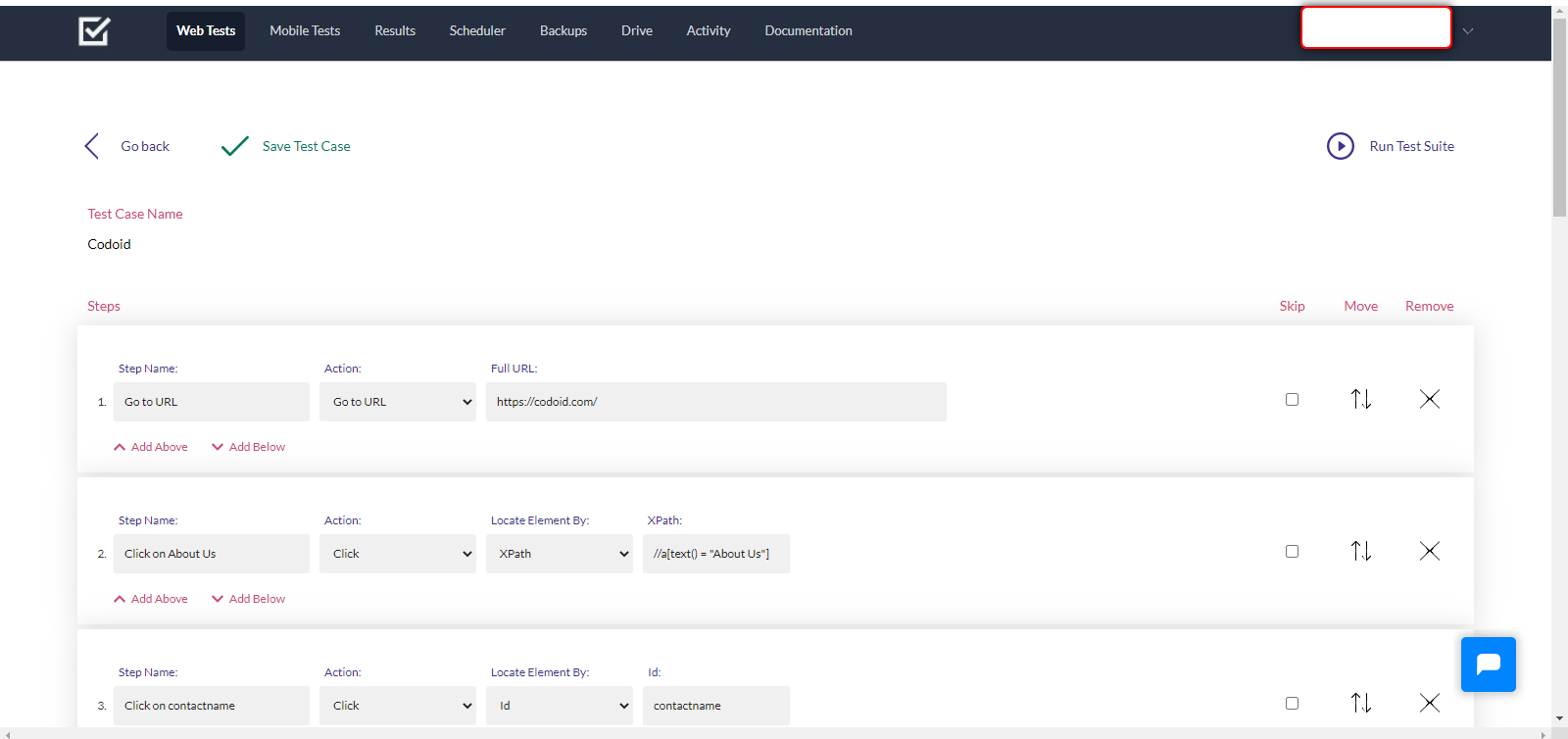

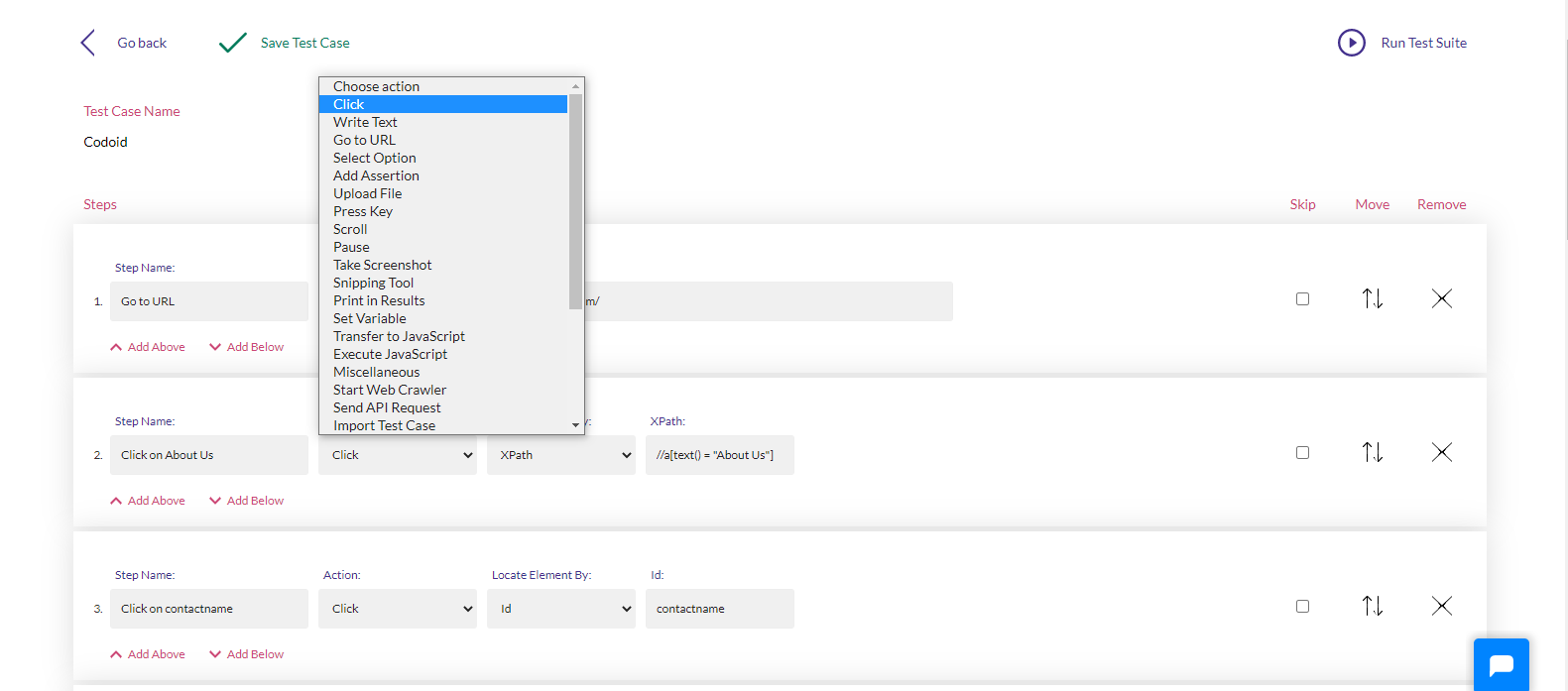

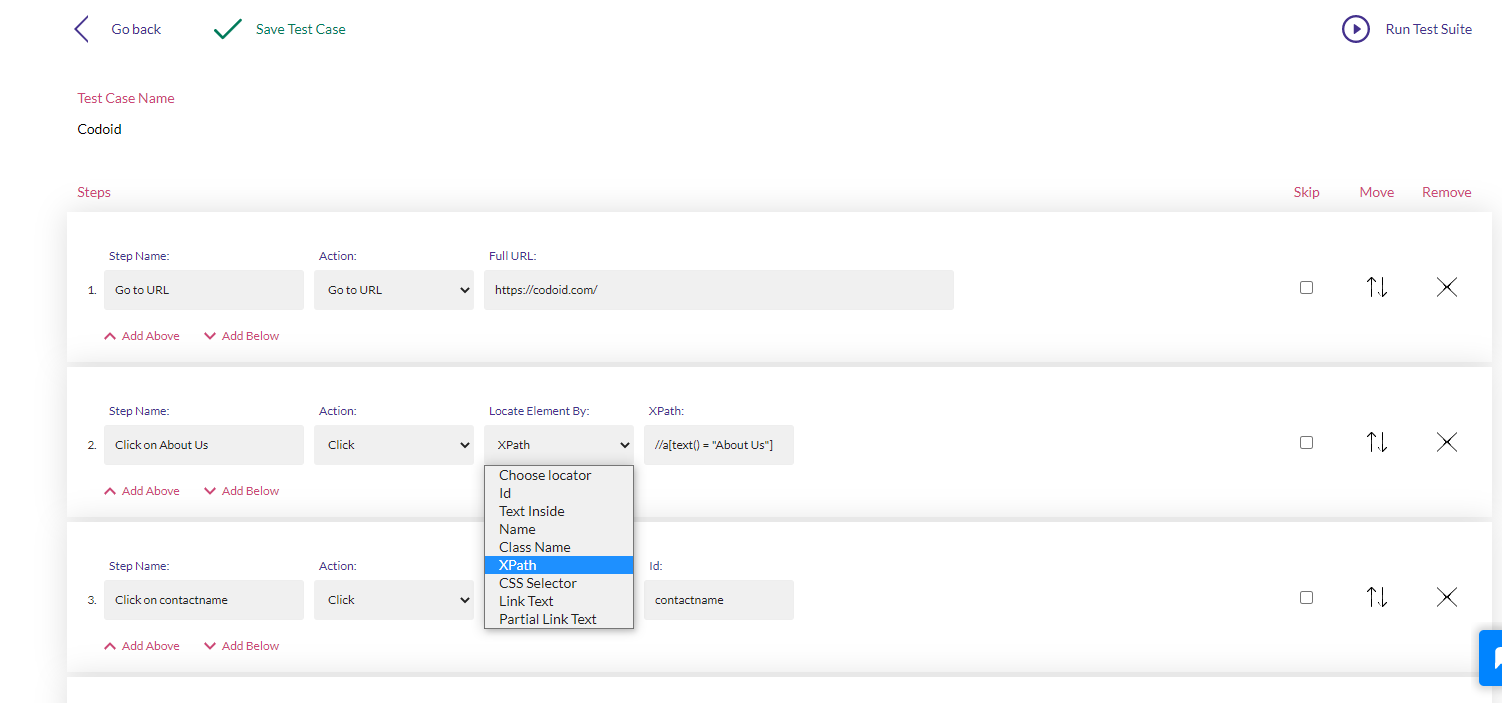

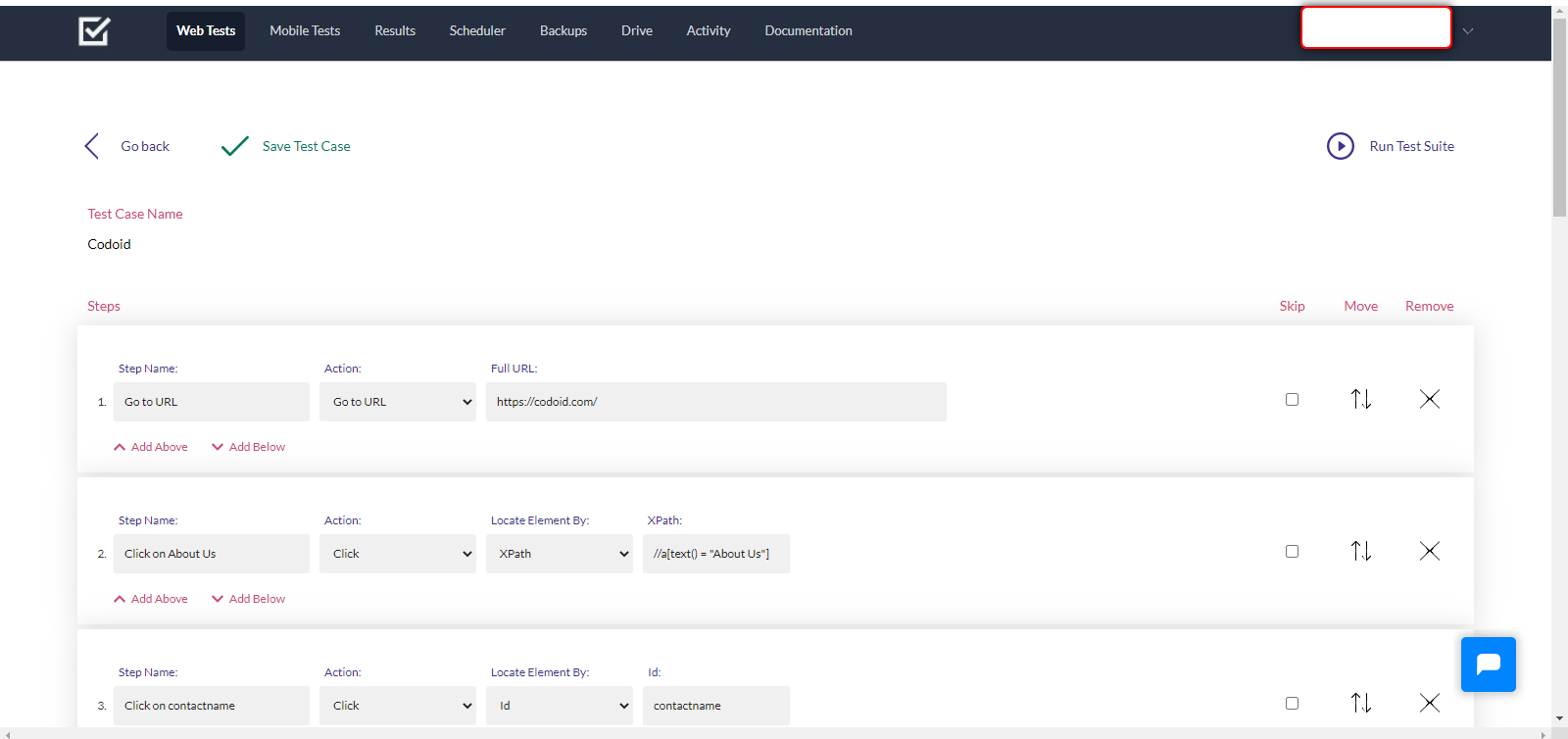

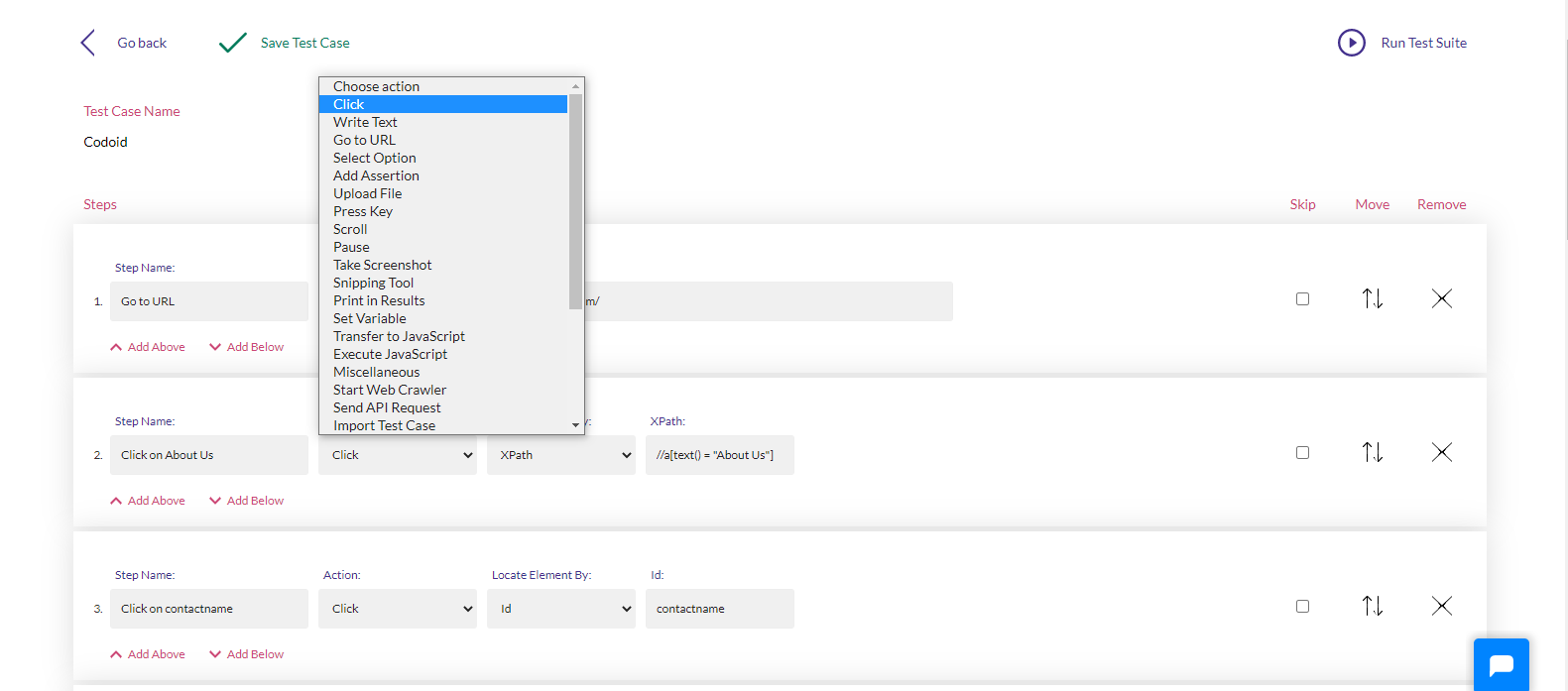

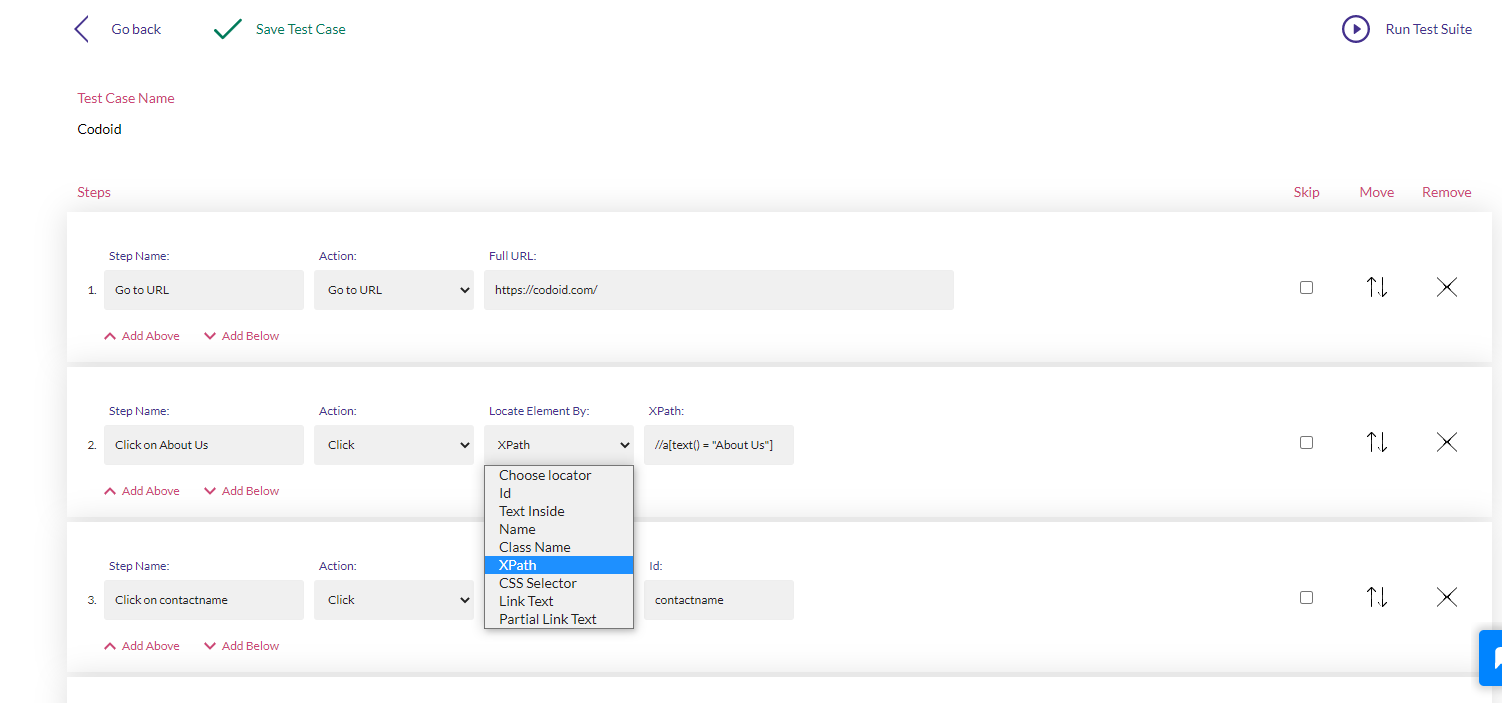

So it is evident here that the test case can be edited without any hassle as there are options to skip, move, and even remove a particular action which we had recorded earlier. In addition to such basic options, you can even edit the type of action that you recorded and choose the type of locating technique that you want to use.

Conclusion:

With all such easy editing options and its ability to easily generate all the possible locators for a particular function in the application, Endtest.io makes it easy to write, edit, and scale automation scripts for even complex applications. In addition to that, you will also be able to view detailed results of the execution, take screenshots, schedule the test execution, detect changes, and so much more. Here at Codoid Innovations, we are always focused on delivering true automation solutions that require no interference or supervision. Endtest.io is an automation tool that helps us achieve this target and enables us to provide our clients with top-notch automation testing services.

by admin | Dec 23, 2021 | Automation Testing, Blog, Latest Post |

As the name suggests, codeless automation testing is the process of performing automation tests without having to write any code. Codeless automation testing can be instrumental in implementing continuous testing, as many automation scripts fail due to coding problems rather than problems with the tests themselves. It will also enable you to focus more on test creation and analysis instead of worrying about getting the code to work, maintaining it, and scaling it when needed. So if you are fairly new to codeless automation testing, you will find this blog useful, as we will be pointing out the advantages of codeless automation testing by comparing it with Selenium testing. So let’s get started.

How is Codeless Automation Testing Different from Selenium Testing?

Selenium is a tool that greatly simplified the automation testing process. It even allows software testers to record their testing and play it back using Selenium IDE to create basic automation. But there was no easy option to edit the created test cases without being strong in coding. So if you weren’t strong in coding, the only other option would be to rerecord the entire test. But now with the introduction of codeless automation testing, you can go beyond the record and playback technique. So the scope of usage is broadened as it even makes it possible to edit the test cases with basic HTML, CSS, and XPath knowledge. Owing to the minimal use of coding, tests can be easily understood by people without much programming knowledge as well. To top it all off, the setup process is so simple that you can set it up in no time.

Getting Started with Codeless Automation Testing

So it is evident that codeless solutions are a lot more powerful in comparison. But one has to keep in mind that they will work effectively only if they are used appropriately. It is always a good idea to get started with simple tests that can be validated easily. For example, if you are testing an e-commerce product, start by seeing if you are able to add a product to the cart. Once you familiarise yourself, you can go a notch higher and try testing the purchase and return/exchange process. It wouldn’t be wise to use codeless solutions in products that use third-party integrations or have dynamic and unpredictable output, as it becomes very difficult to validate them.

Local and Cloud Options

You can either opt for local codeless solutions or cloud-based solutions. As one of the best automation testing companies, we always prefer cloud-based solutions as they offer more advantages. Collaboration is one of the main plus points, as it enables seamless sharing of test data and test scenarios. In addition to that, you will be able to scale your services better with the help of the many virtual machines and mobile devices available online. Since your infrastructure becomes more robust, your overall process quality will also improve.

The Future of Codeless Automation Testing

Though complete codeless automation isn’t yet possible, in the same way that not all manual tests can be automated, it is the natural next step that testers have to take. Repetitive tests were replaced with automation using scripts, and now repetitive automation coding is being replaced with codeless solutions that utilize machine learning and AI. But as has always been the case, manual testing and scripted automated testing will still play a major role in software testing.

by admin | Dec 21, 2021 | Automation Testing, Blog, Latest Post |

In today’s world, success is heavily dependent on the pace at which we work. So automating repetitive work is one of the quickest ways to improve performance. But if you find automation to be daunting, you might settle for manually doing the same tasks over and over again and enter an endless loop of wasting time. So if you are someone who uses Google Sheets regularly to create and maintain data, this blog will be a lifesaver for you. As a leading automation testing services company, we are focused on dedicating our time to our core service by automating repetitive tasks. So in this blog, we will be exploring how to achieve Google Sheet Automation and get the job done in no time. Without any further ado, let’s get started.

Pace isn’t the only advantage that comes with automation. Since repetitive tasks are prone to oversight and manual errors, we will be able to avoid such issues by implementing automation. Now that we have established what we are aiming to achieve, let’s see how to do it. We will be making use of the available Google APIs to read and add data in Google Sheets using Python. So you wouldn’t have to spend hours of your time extracting data and then copy-pasting it into other spreadsheets.

Prerequisites:

In order to achieve Google Sheet Automation, you’ll be needing a few prerequisites such as

- A Google account.

- A Google Cloud Platform project with the API enabled.

- The pip package management tool

- Python 2.6 or greater

How to Achieve Google Sheet Automation using Google API Services?

Here are the few steps that need to be followed to start using the Google sheets API.

i. Create a project on Google Cloud console

ii. Activate the Google Drive API

iii. Create credentials for the Google Drive API

iv. Activate the Google Sheets API

v. Install a few modules with pip

vi. Open the downloaded JSON file and get the client email

vii. Share the desired sheet with that email

viii. Connect to Google Sheet using the Python code

Create a project on Google cloud console

In order to read and update the data from Google Sheets in Python, we will have to create a Service Account. The reason behind this need is that it is a special form of account that can be used to make authorized API calls to Google Cloud Services. Almost everybody has a Google account today. In case you don’t, make sure to create one and then follow all the steps and comply with the requirements to create a Google Services account.

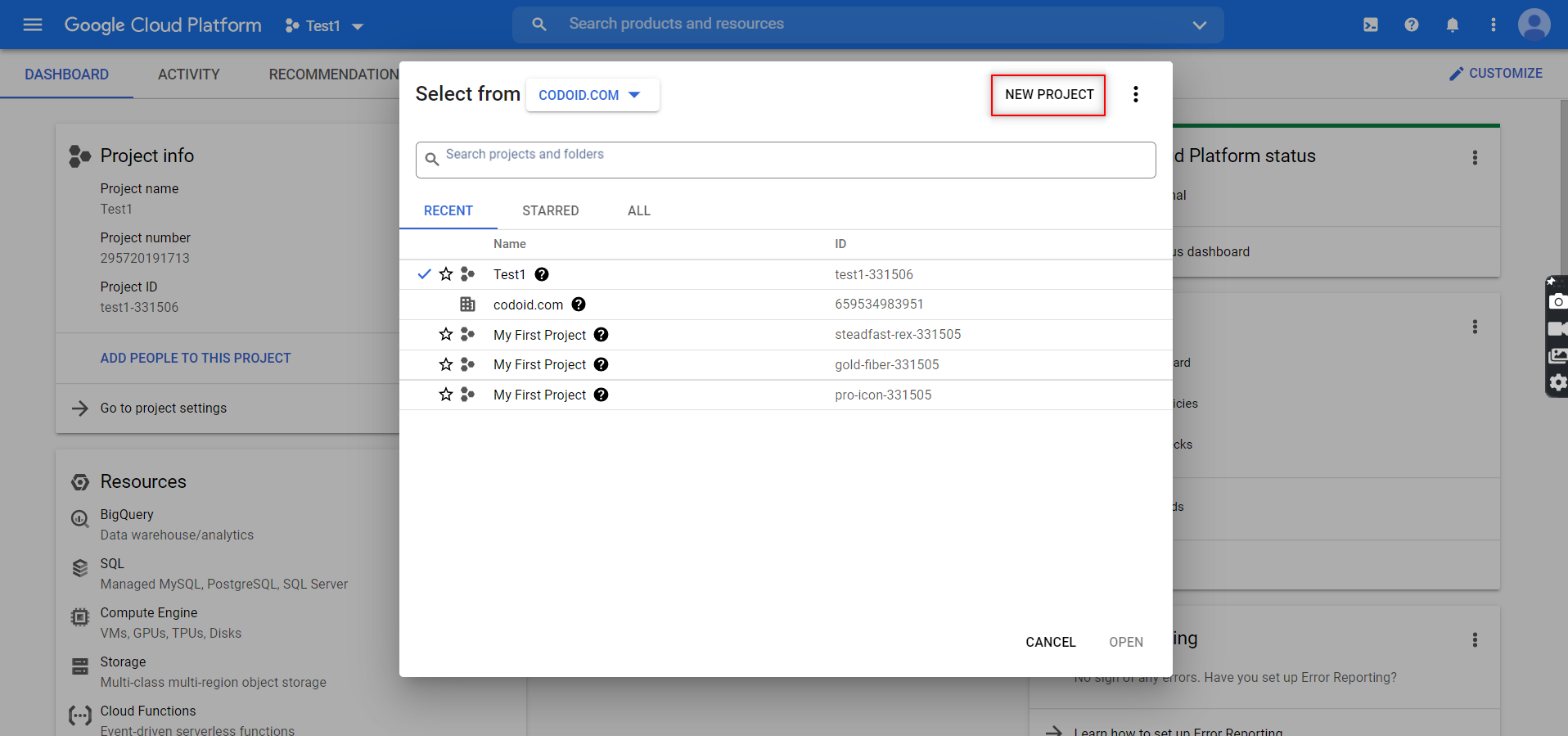

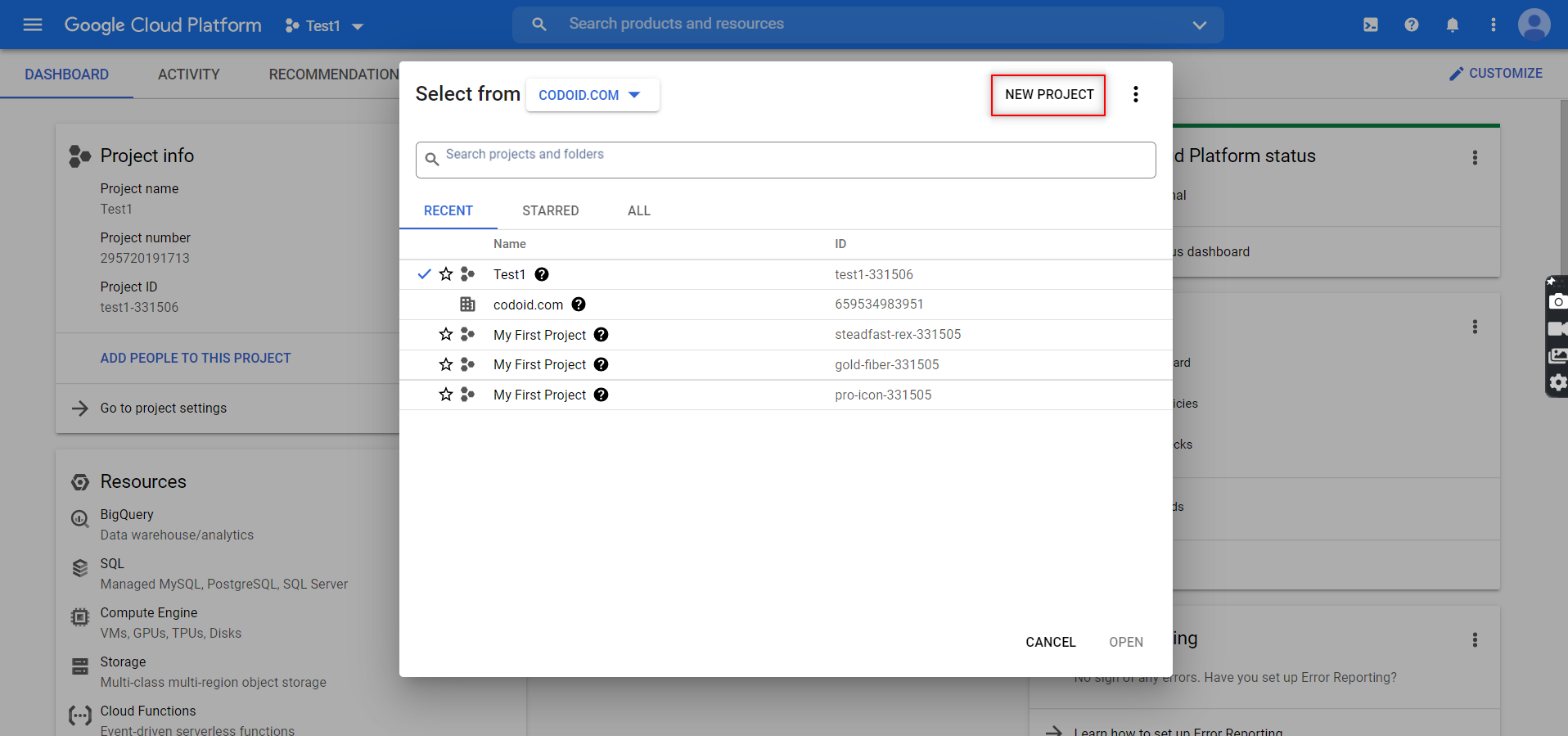

1. You will be able to create a new project by going to the developer’s console and clicking on the ‘New Project’ button.

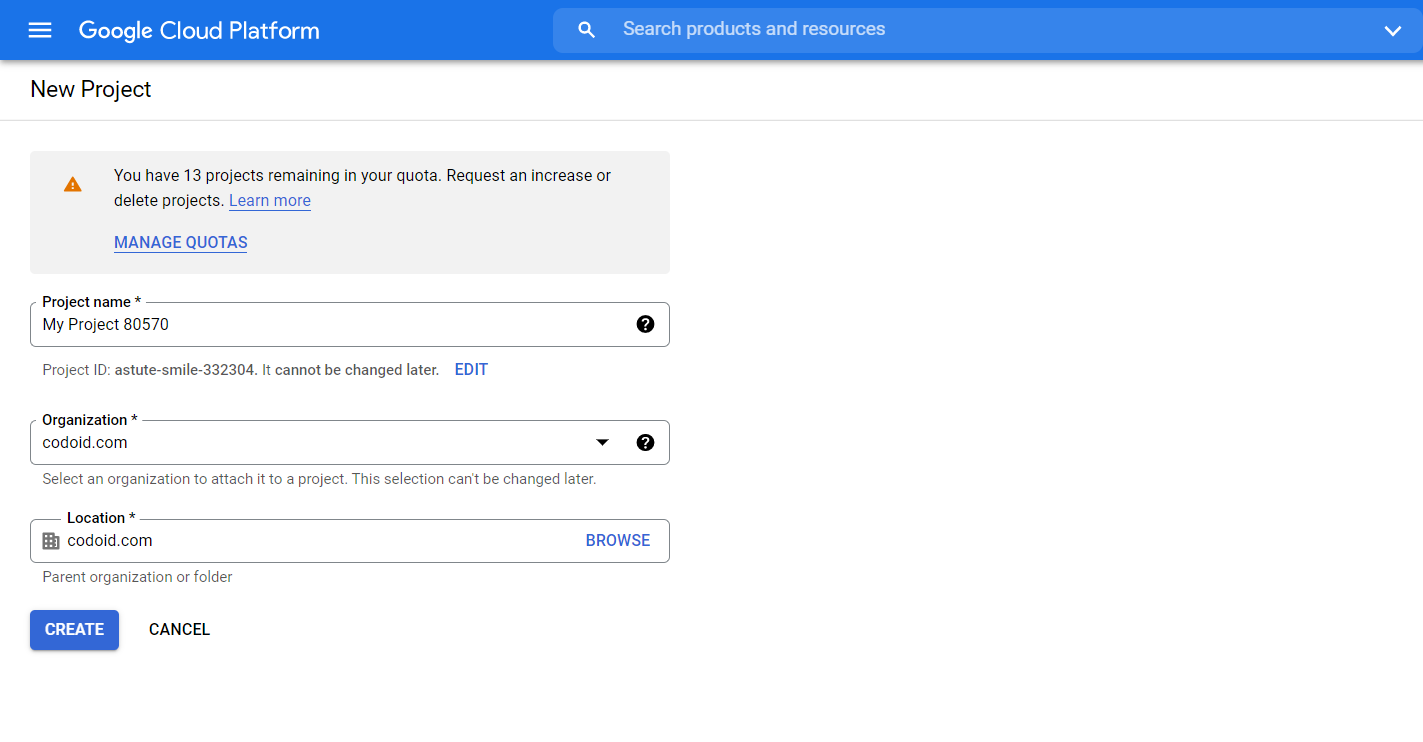

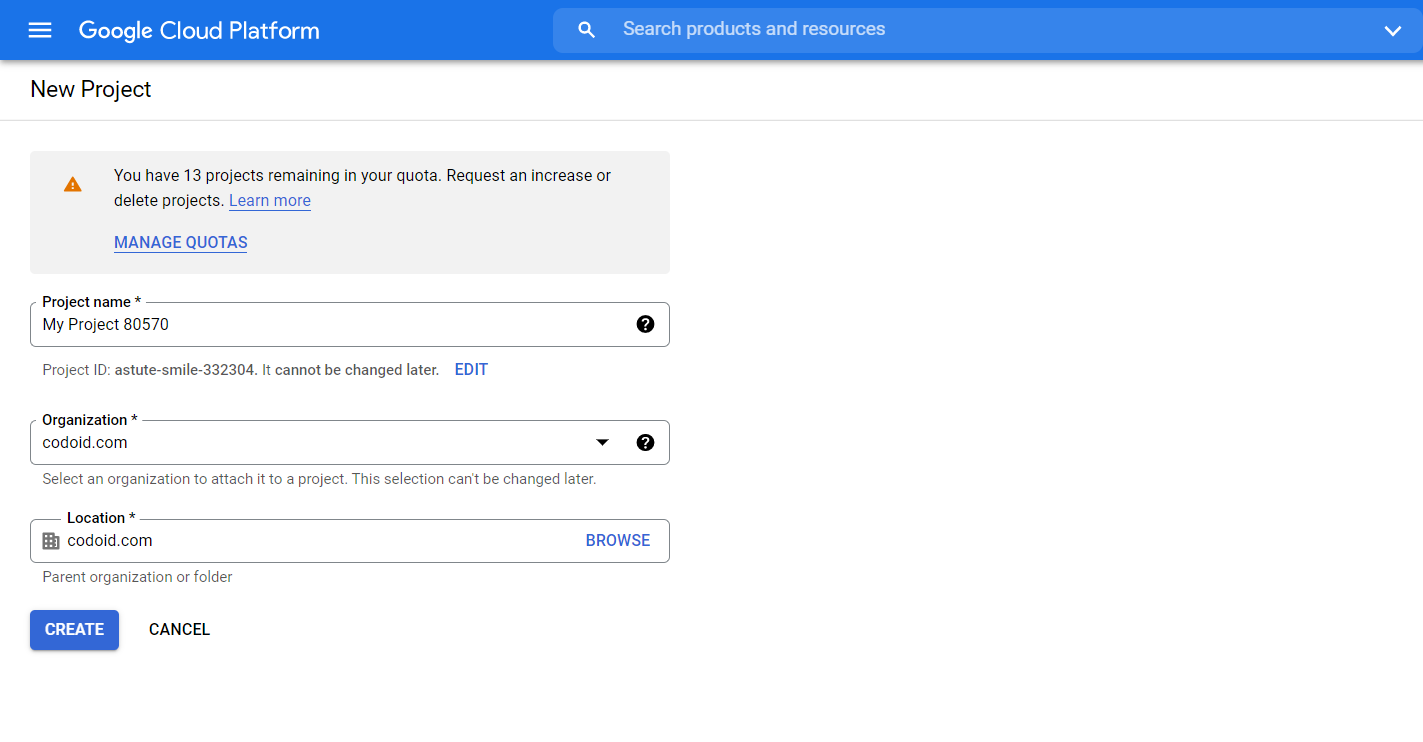

2. You can then assign the project name and even enter the organization name if you prefer to. Once you are done, click on the ‘Create’ button.

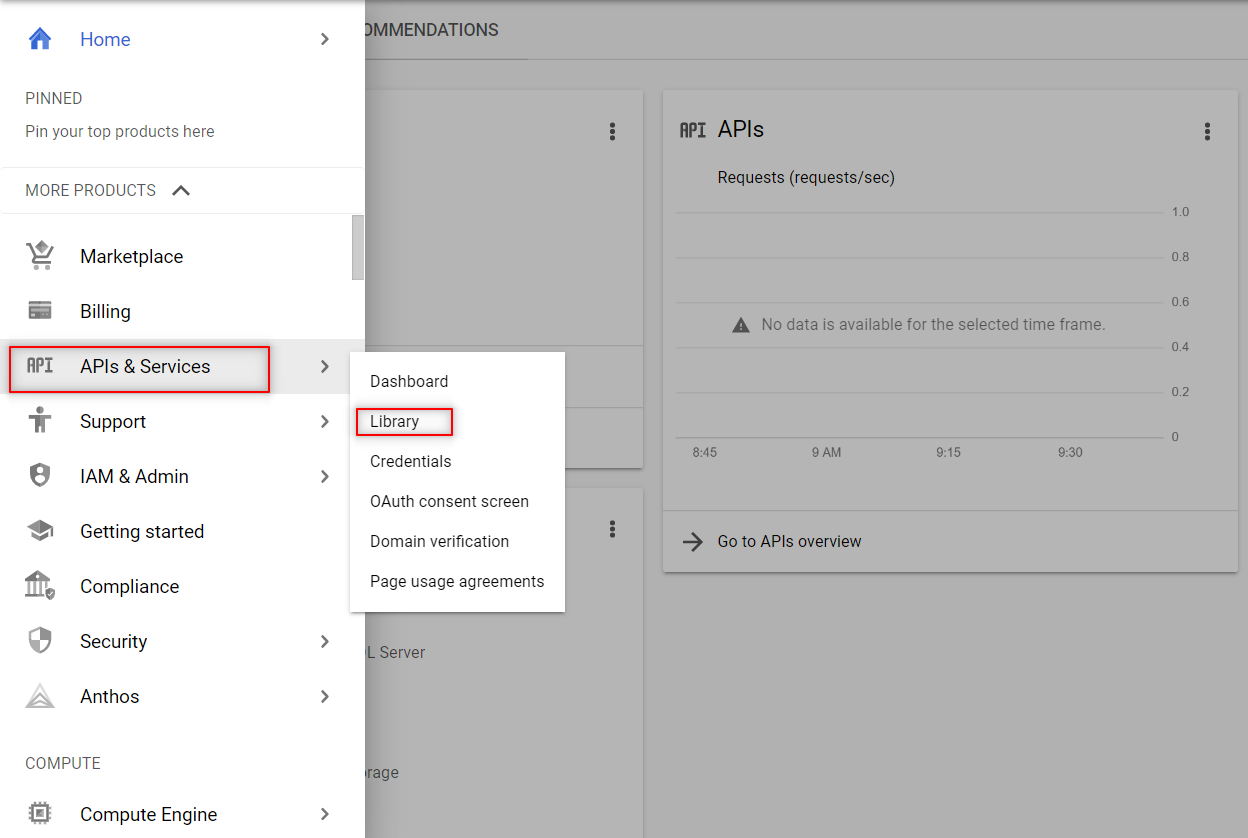

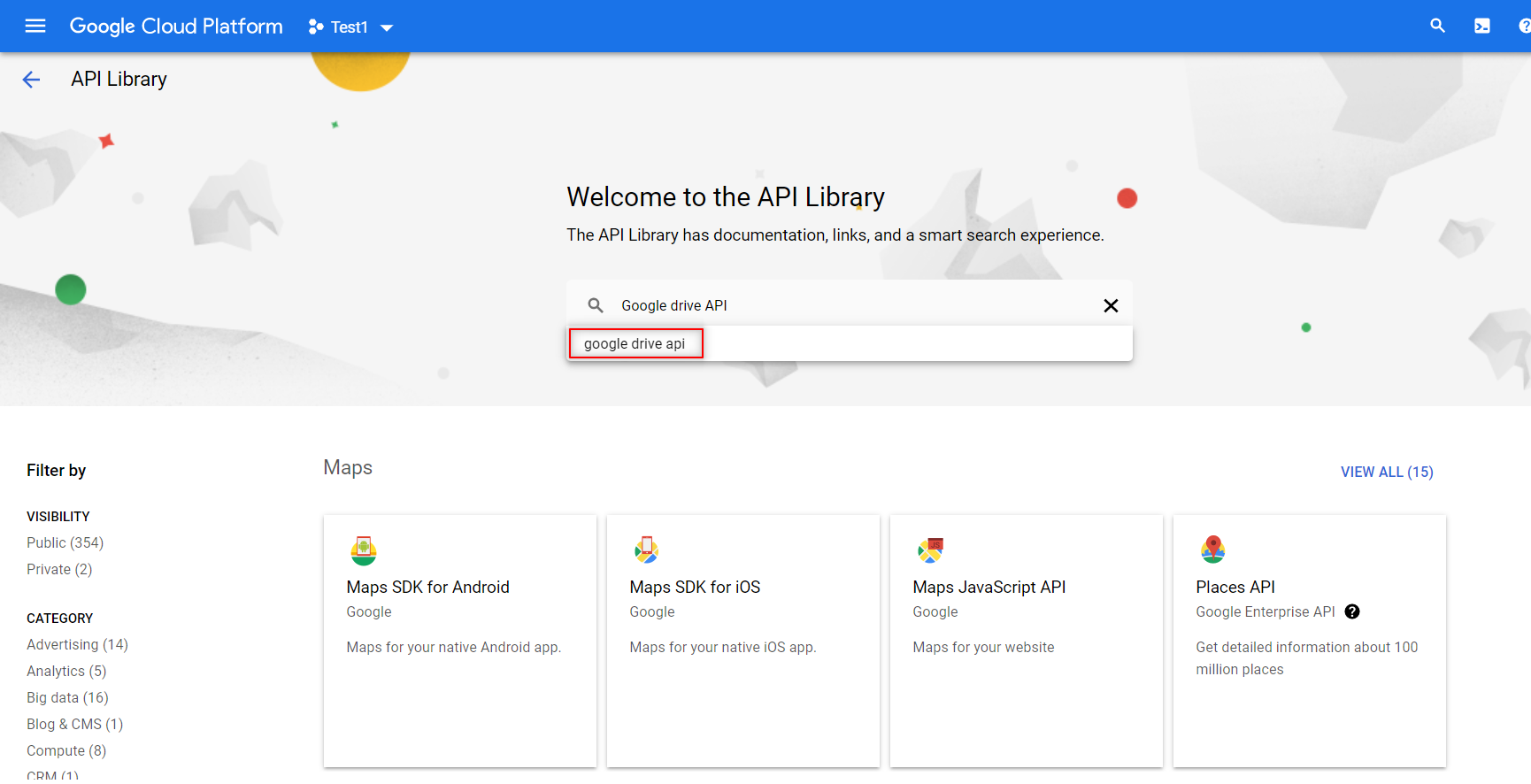

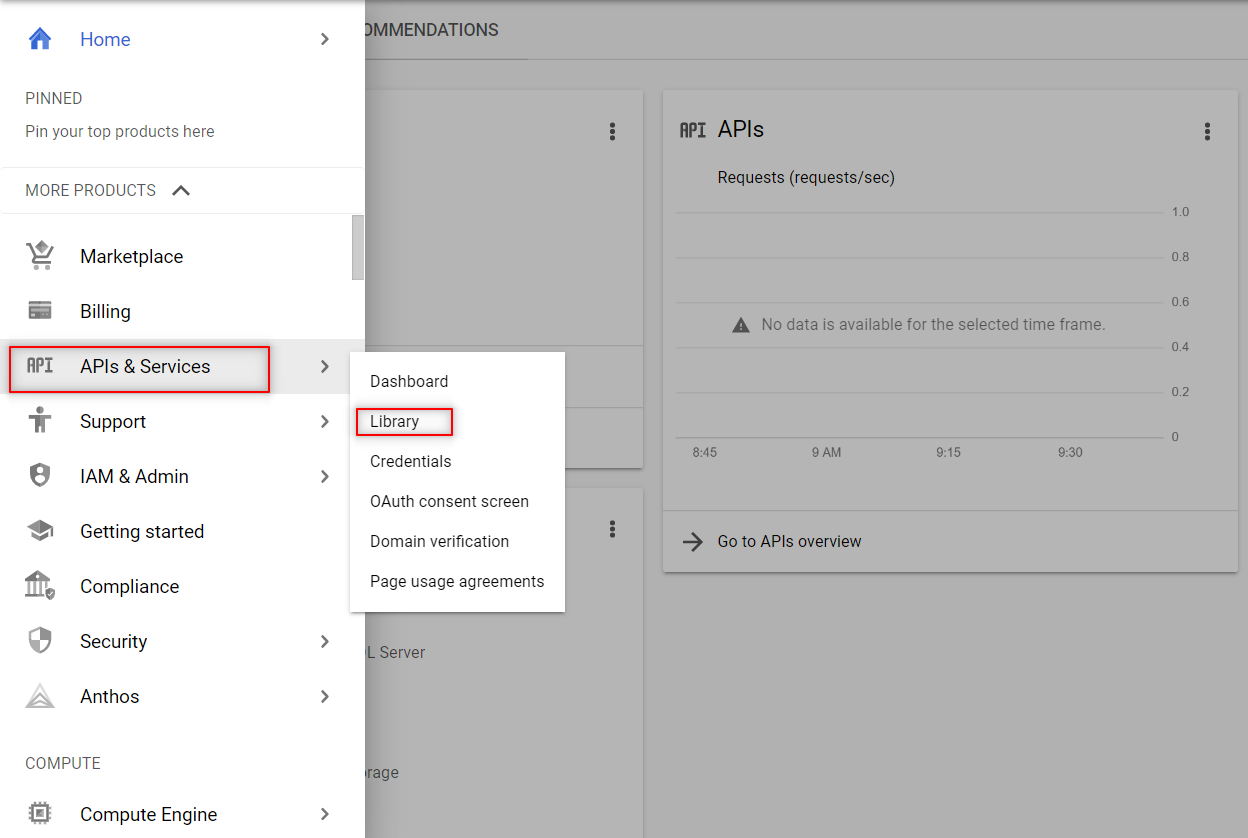

3. The next step after creating the project would be to enable the APIs that we require in this project. To access the different API options provided by Google, you have to click on Menu -> APIs & Services -> Library.

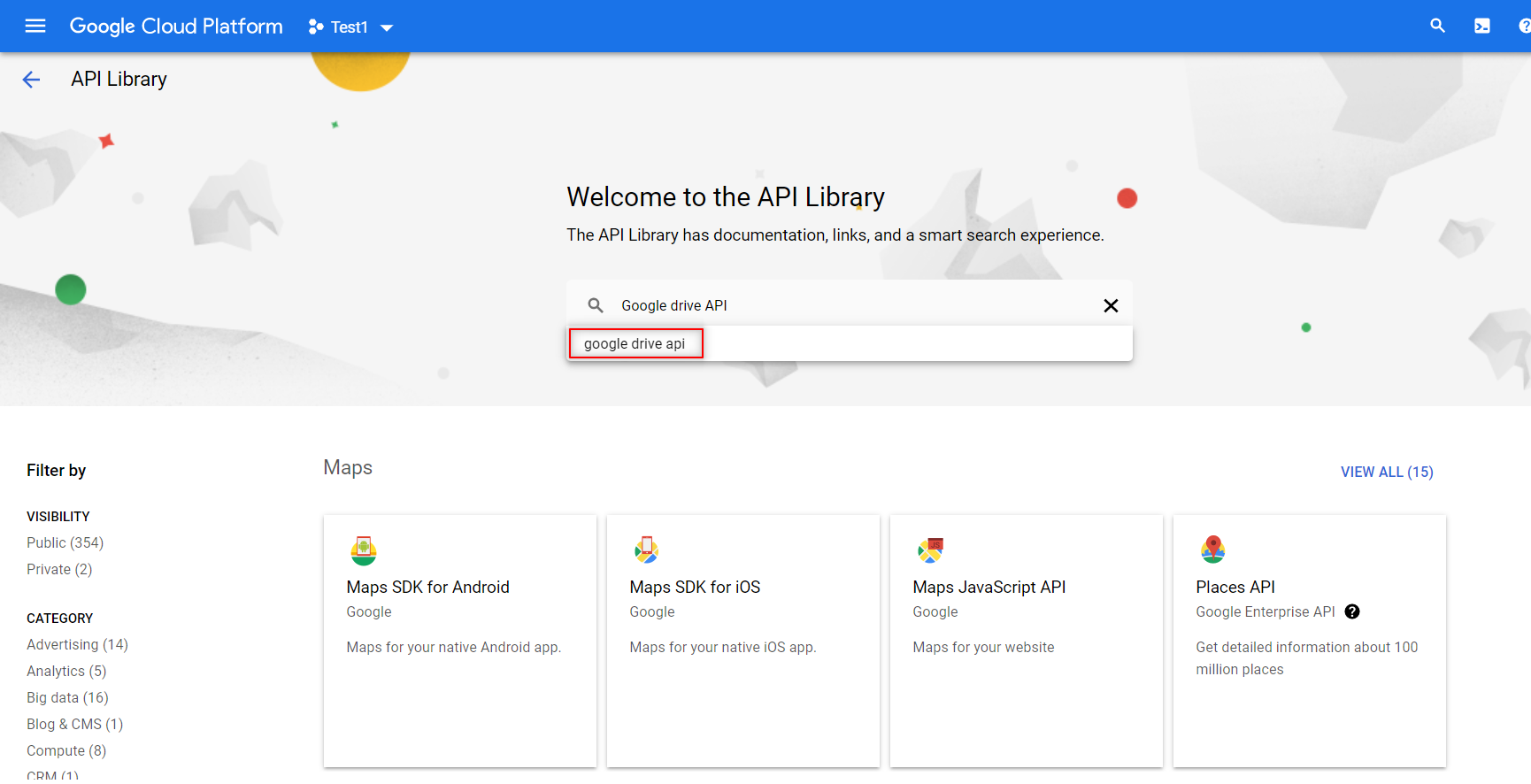

4. You have to search and enable the following two APIs from the library as shown below. You can enable them by just clicking on the enable button that appears.

- Google Sheets API

- Google Drive API

The Google Sheets API is the important one that will enable you to read and modify the data in Google Sheets.

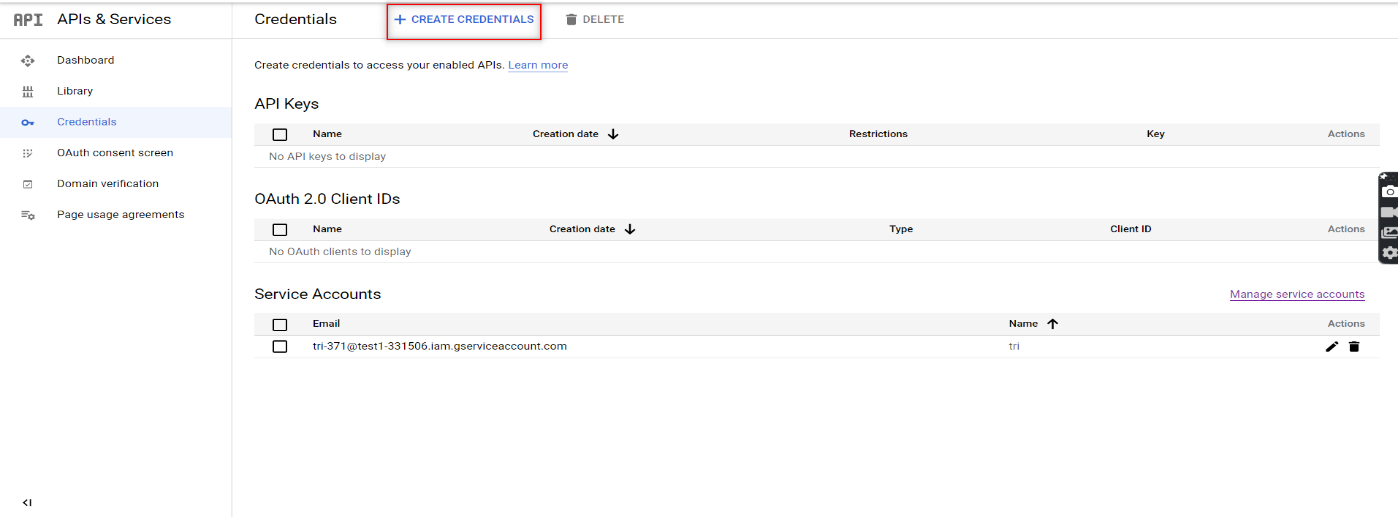

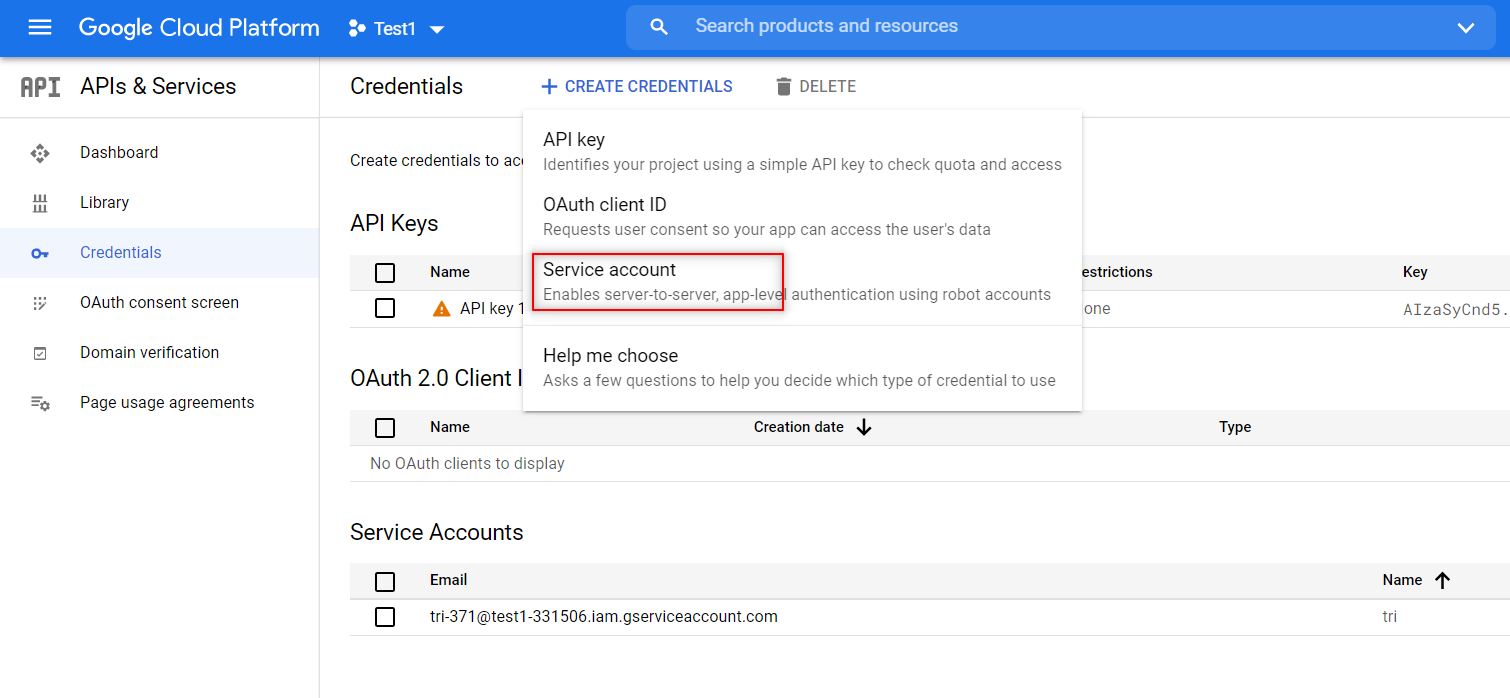

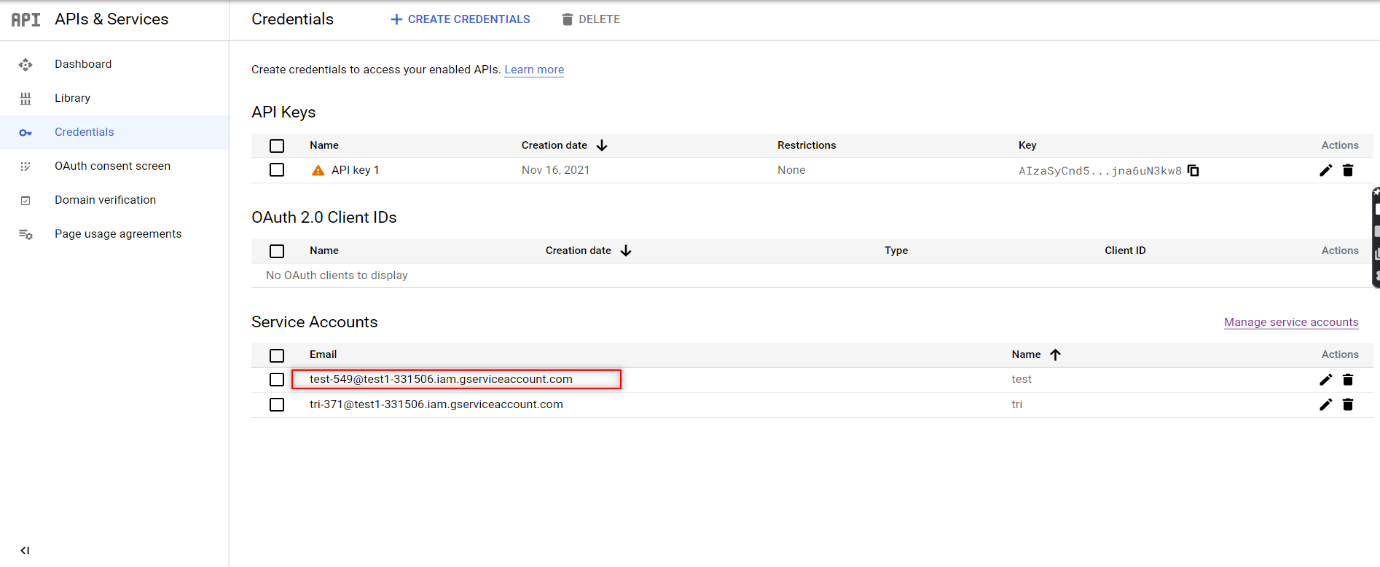

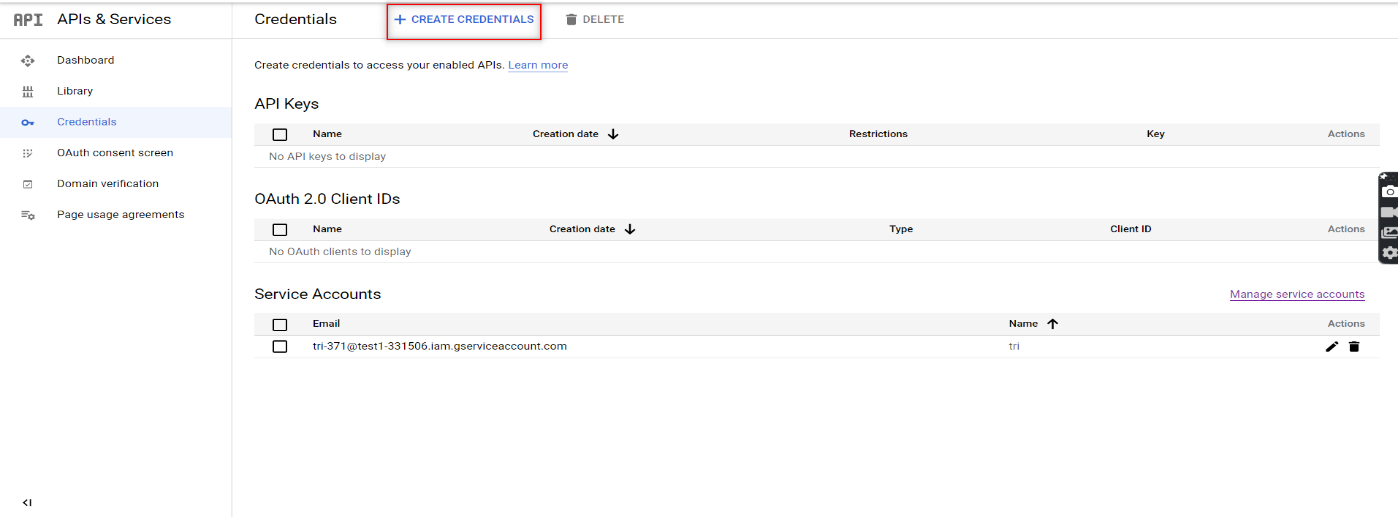

5. Now that you have enabled the required APIs to beat your automation challenge, it’s time to create the credentials for the services account. You can do that by clicking the ‘Create Credentials’ button that can be found in the Credentials menu as shown in the image.

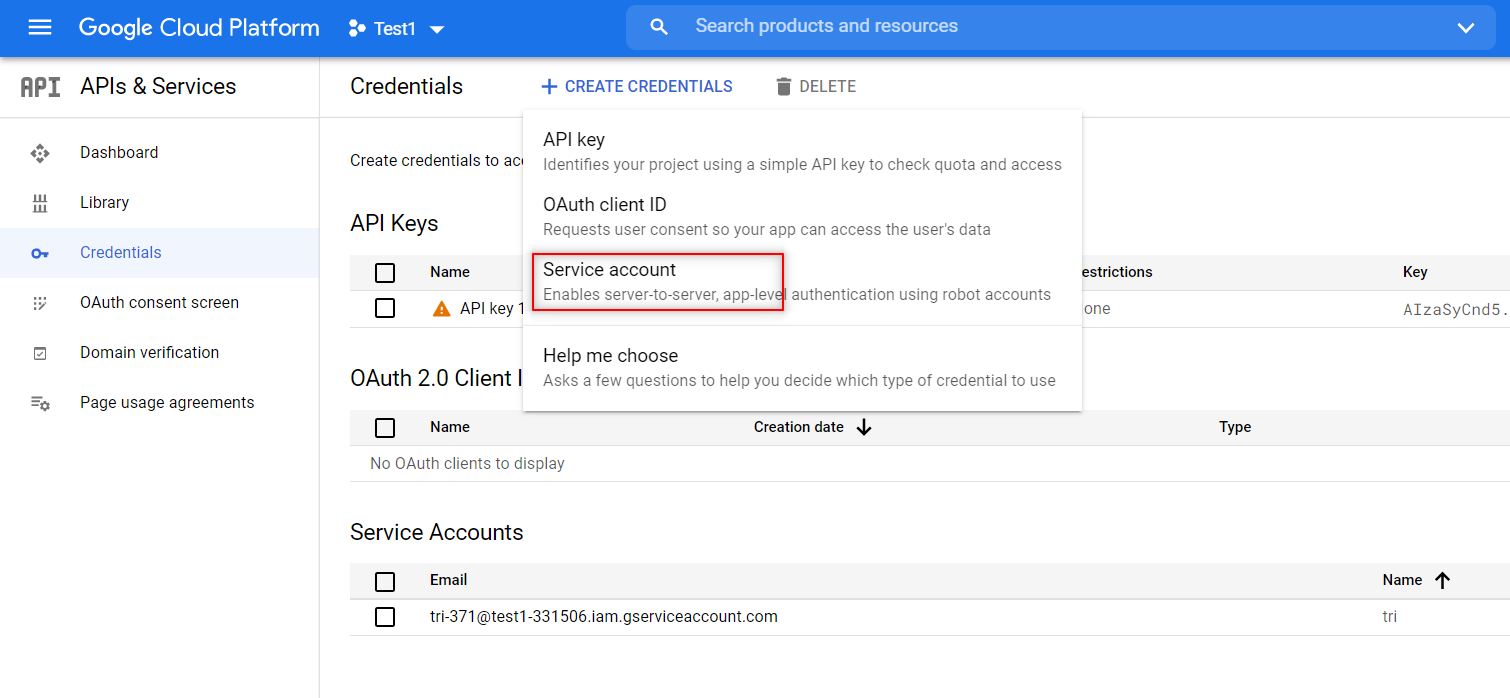

6. Once you click on that, a drop-down list will appear. Choose the ‘Service Account’ option from the list.

7. You would have to provide the Service Account details here in order to continue. That is why we had mentioned that you would have to create one prior to starting this process. Once you have provided the info, you can create the credentials by clicking on the ‘Create and Continue’ option.

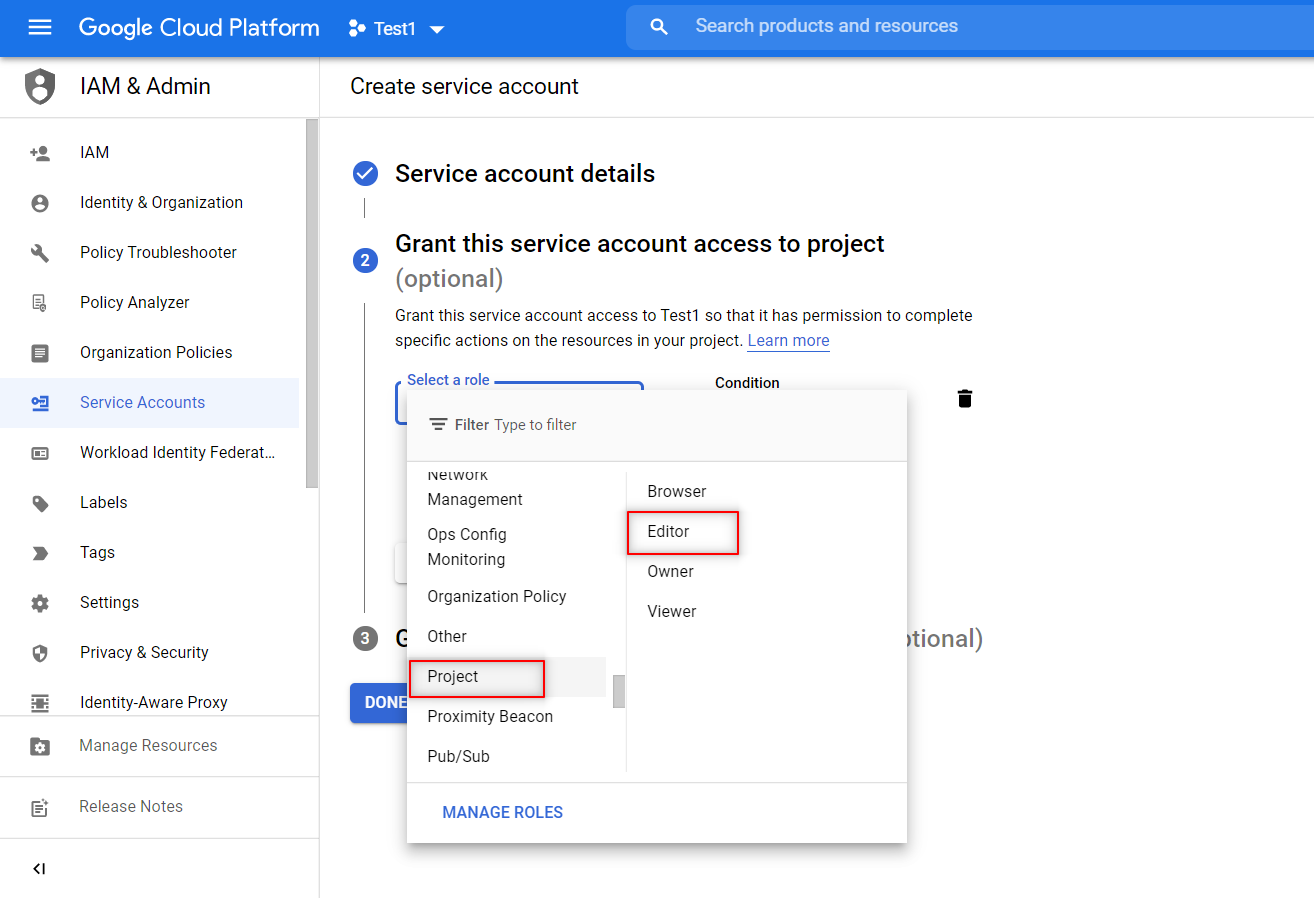

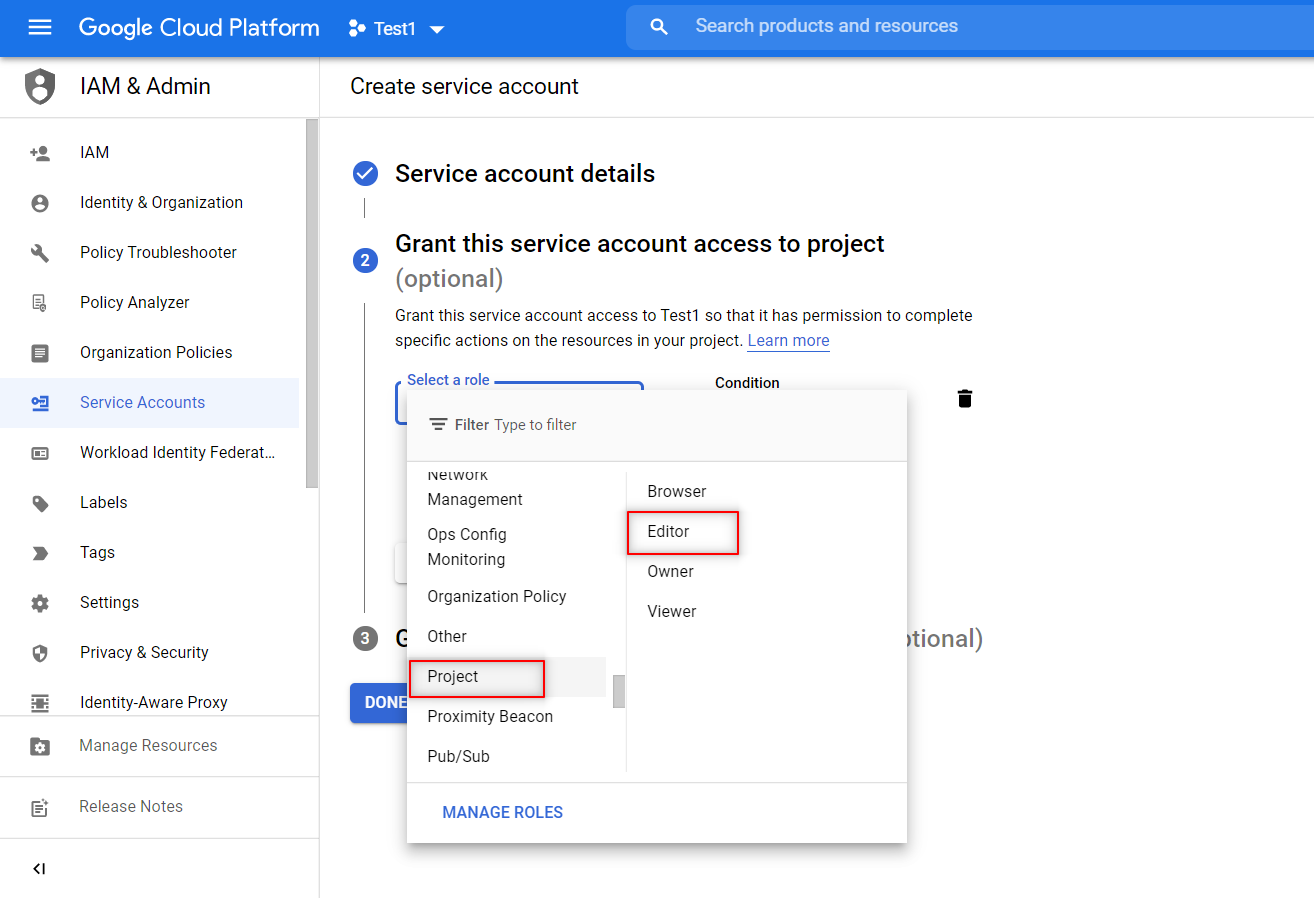

8. Similar to how we share Google spreadsheets with other collaborators by providing them various access permissions like edit or view only, we will have to provide access to our service account as well. Since we have to both read and write in the spreadsheets, you would have to give editing access rather than the view-only option.

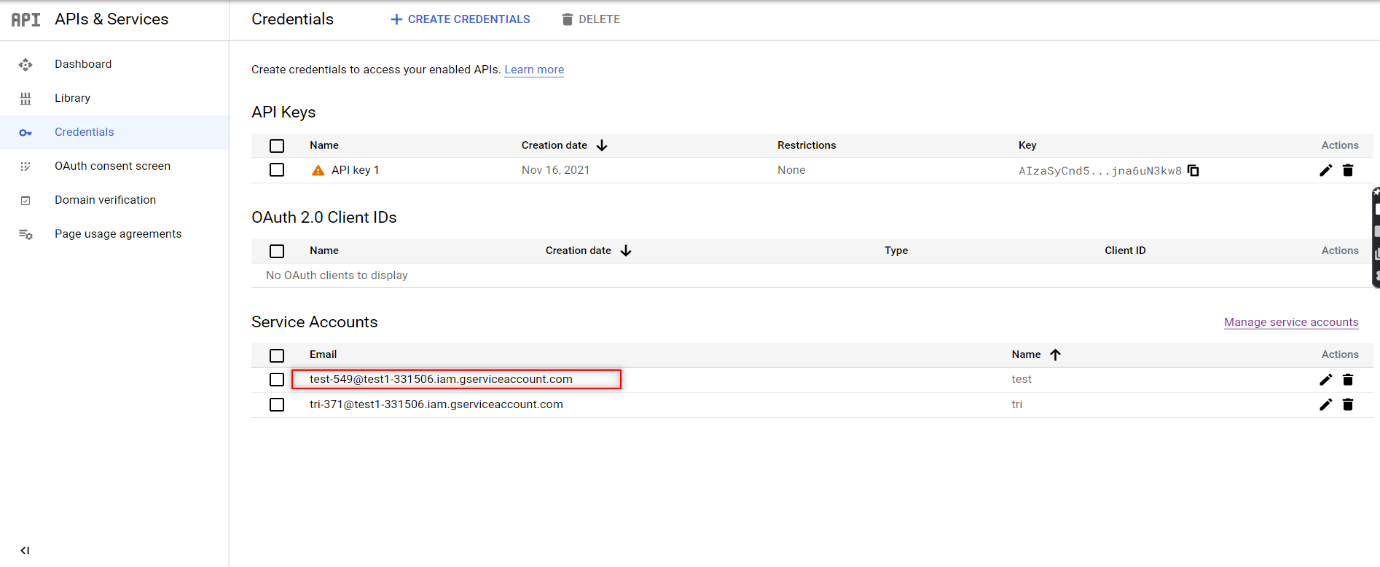

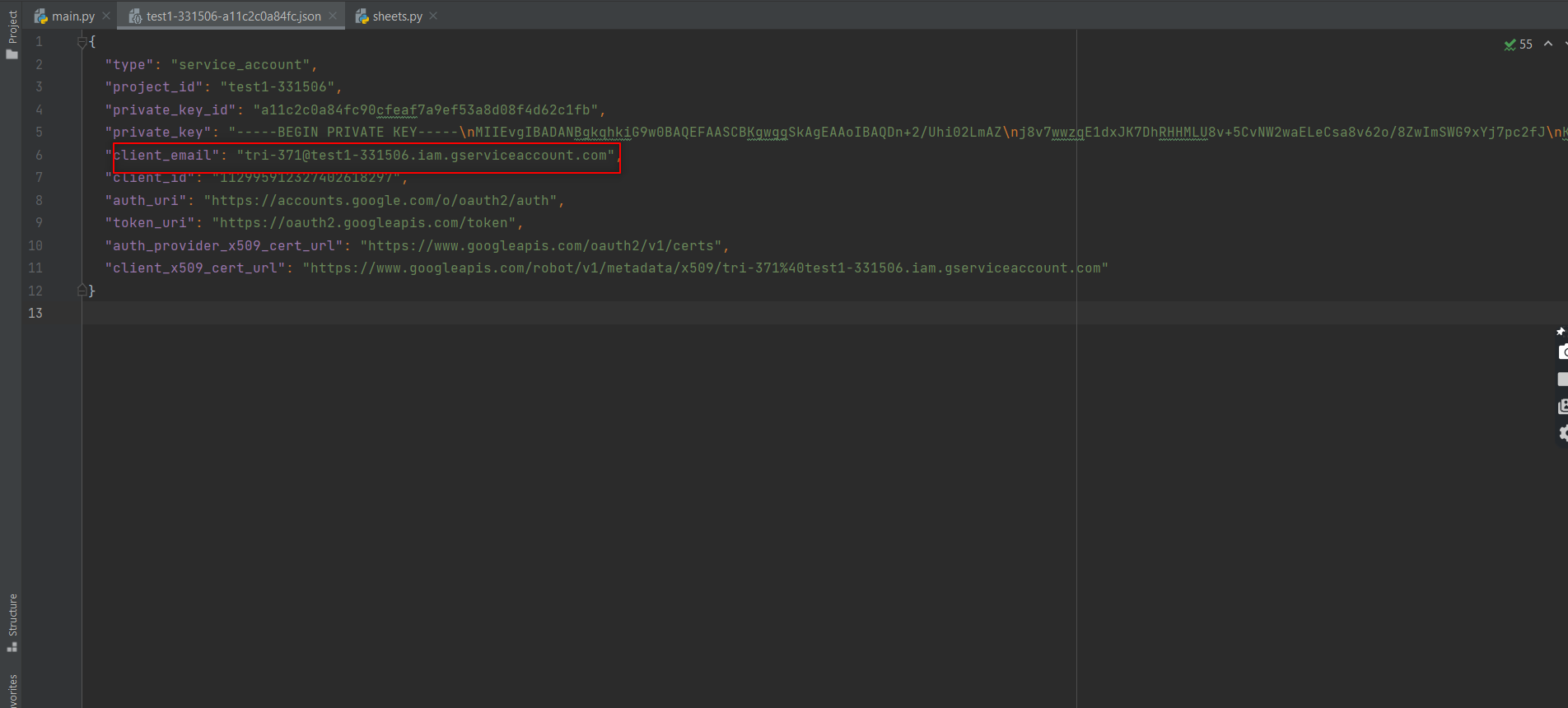

9. Once the credentials have been created, download the JSON file for the credentials. The JSON file will contain the keys that you will need to access the API. So our Google Service account is now ready to use.

Share the desired sheet with that email

Now that the Service Account credentials have been created, you have to give that account access to the spreadsheet. The address to share with is the client email found in the downloaded JSON file.

Open the Google Sheet that you want to automate and click on the Share button to provide access to this client email. Now, you are all set to code and access the sheet using Python.
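
If you would rather not open the JSON file by hand, the client email can be read with a few lines of Python. This is a minimal sketch: the function name and the file path are placeholders for this example, and it assumes the key file contains a `client_email` field, as service account key files normally do.

```python
import json


def read_client_email(key_path: str) -> str:
    """Return the service account email stored in a JSON key file."""
    with open(key_path, encoding="utf-8") as handle:
        key_data = json.load(handle)
    try:
        return key_data["client_email"]
    except KeyError:
        # Fail with a clearer message than a bare KeyError.
        raise ValueError(f"{key_path} has no 'client_email' field") from None


if __name__ == "__main__":
    # Placeholder path: point this at your own downloaded key file.
    print(read_client_email("service-account-key.json"))
```

The printed address is the one to paste into the Share dialog of the sheet.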

Connect to Google Sheet using Python Code

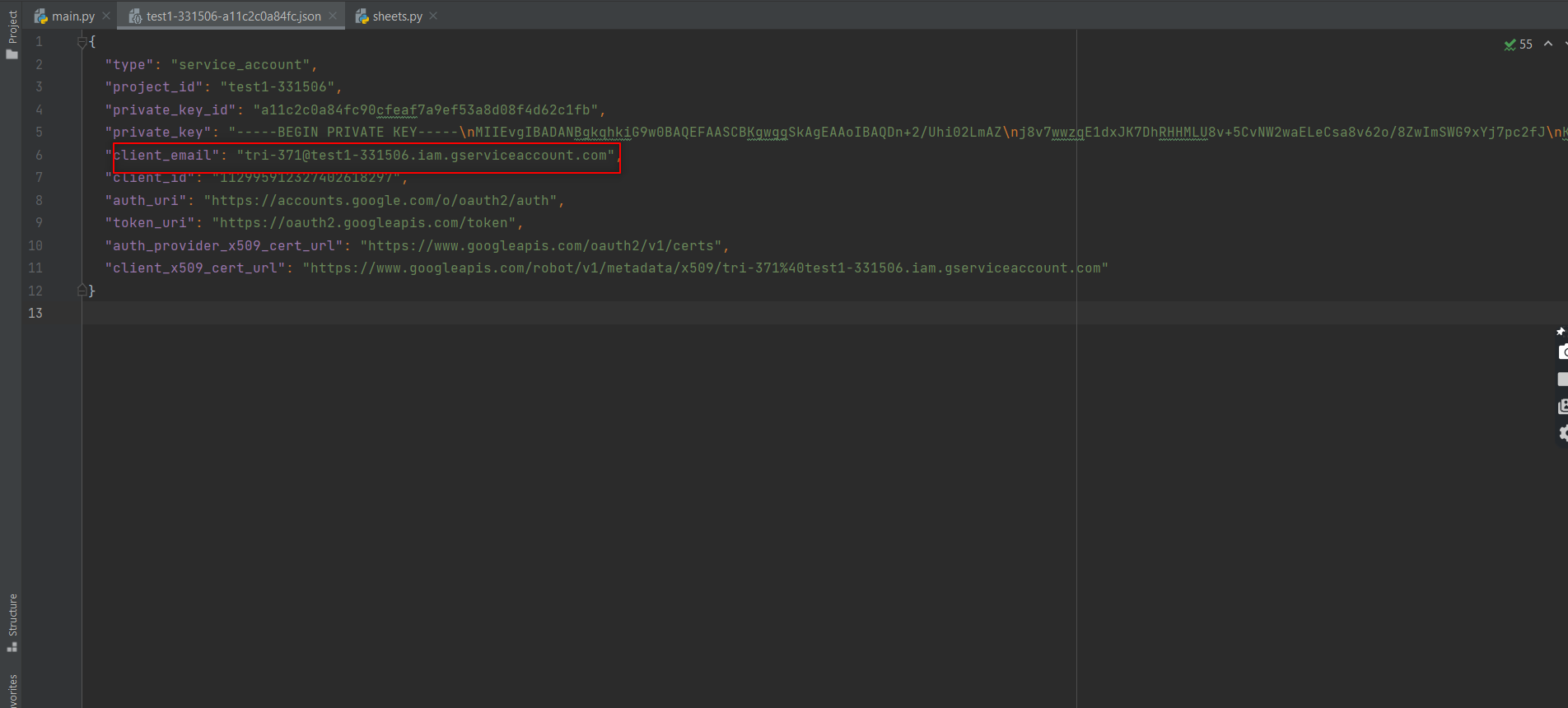

First up, you have to open the downloaded JSON file in PyCharm. You can then create a Python file in the same project folder and start writing your script.

We then have to install two packages (gspread and oauth2client) using pip. To do that in PyCharm, open the terminal or a command prompt and use the below command

pip install gspread oauth2client

Now let’s take a look at the different segments of the python code one by one to understand it easily and successfully implement it to achieve Google Sheet Automation.

1. Importing the Libraries

We will need both the gspread and oauth2client libraries to authorize and make API calls to Google Cloud Services.

import gspread

from oauth2client.service_account import ServiceAccountCredentials

from pprint import pprint

2. Define the scope of the application

Then, we will define the scope of the application and add the JSON file that has the credentials to access the API.

scope = ["https://spreadsheets.google.com/feeds",'https://www.googleapis.com/auth/spreadsheets',"https://www.googleapis.com/auth/drive.file",

"https://www.googleapis.com/auth/drive"]

3. Add credentials to the account

Once the scope has been defined, you have to add the credentials to the account.

creds = ServiceAccountCredentials.from_json_keyfile_name("test1-331506-a11c2c0a84fc.json", scope)

4. Authorize the client sheet

The next stage is authorizing the client sheet.

client = gspread.authorize(creds)

5. To open a Google Sheet

There is nothing that can be done without opening the Google Sheet in the first place.

sheet = client.open("Automation").sheet1

6. Get all records

Once the sheet is open, you can get all the data present in the sheet using the get_all_records function. It returns a list of dictionaries, one per data row, with the values of the header row used as keys.

data = sheet.get_all_records()

pprint(data)
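
Since the records come back as a list of dictionaries keyed by the header row, ordinary Python is enough to work with them. The sketch below filters records by a column value. The sample data and the column names are made up for illustration and do not come from the sheet used in this blog.

```python
# Hypothetical sample, shaped like the value returned by get_all_records().
records = [
    {"Id": 1, "Name": "Asha", "Status": "Pass"},
    {"Id": 2, "Name": "Ravi", "Status": "Fail"},
    {"Id": 3, "Name": "Meena", "Status": "Pass"},
]


def filter_records(rows, column, expected):
    """Keep only the records whose value in `column` equals `expected`."""
    return [row for row in rows if row.get(column) == expected]


if __name__ == "__main__":
    for record in filter_records(records, "Status", "Fail"):
        print(record["Id"], record["Name"])
```

Using `row.get(column)` instead of `row[column]` means a misspelled column name yields an empty result rather than an exception, which may or may not be what you want in your own scripts.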

7. To get a specific row

Though reading all the data is a great feature, it wouldn’t be needed every single time. So this is the code that you can use to get data from a specific row.

row = sheet.row_values(3) # Get a specific row

pprint(row)
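
A row comes back as a plain list of cell values, so it is often handy to pair it with the header row. Here is a minimal sketch of that idea; the helper name and sample values are assumptions for this example. It also pads short rows, on the assumption that trailing empty cells may be left out of the returned list.

```python
def row_as_dict(headers, row_values):
    """Pair the header names with the values of one row."""
    # Pad the row so that missing trailing cells become empty strings.
    padded = list(row_values) + [""] * (len(headers) - len(row_values))
    return dict(zip(headers, padded))


if __name__ == "__main__":
    # Hypothetical values, shaped like sheet.row_values(1) and sheet.row_values(3).
    headers = ["Id", "Name", "Status"]
    third_row = ["3", "Ravi"]
    print(row_as_dict(headers, third_row))
```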

8. To get a specific Column

Similarly, we will also be able to access data from a specific column.

col = sheet.col_values(2) # Get a specific column

pprint(col)
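
Column values typically include the header cell and may contain blanks, so a little cleanup helps before doing any arithmetic. The sketch below is one way to do it; the helper name and the sample column are made up for illustration.

```python
def numeric_column(col_values, has_header=True):
    """Convert a column of cell values to floats, skipping blanks and text."""
    cells = col_values[1:] if has_header else col_values
    numbers = []
    for cell in cells:
        text = str(cell).strip()
        if not text:
            continue  # empty cell
        try:
            numbers.append(float(text))
        except ValueError:
            continue  # non-numeric cell such as a note
    return numbers


if __name__ == "__main__":
    # Hypothetical values, shaped like sheet.col_values(2).
    scores = numeric_column(["Score", "10", "", "abc", "7.5"])
    print(sum(scores) / len(scores))
```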

9. To Get the value of a specific cell

We can even be very precise and access data from a specific cell too.

cell = sheet.cell(1,2).value # Get the value of a specific cell

pprint(cell)
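
Note that the row and column numbers start at 1, so cell(1,2) refers to the cell that the Sheets UI calls B1. The small helper below, written by hand purely to show the mapping, converts a row and column pair to that A1-style label. gspread ships a similar helper in its utils module, so you would not normally need your own.

```python
def rowcol_to_a1(row, col):
    """Convert 1-based (row, col) numbers to an A1-style label such as 'B1'."""
    if row < 1 or col < 1:
        raise ValueError("row and col are 1-based and must be positive")
    letters = ""
    while col > 0:
        # Column letters work like base 26 without a zero digit.
        col, remainder = divmod(col - 1, 26)
        letters = chr(ord("A") + remainder) + letters
    return f"{letters}{row}"


if __name__ == "__main__":
    print(rowcol_to_a1(1, 2))
```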

10. To insert the data into a sheet

We have seen how to read the data, now let’s see how we can insert data.

insertRow = [ 15, "Logesh"]

sheet.insert_row(insertRow, 15)

11. To delete a certain row

Not all data in a sheet will be needed forever, so you can even delete a row of data from your sheet.

sheet.delete_rows(14) # Delete row 14

12. To update one cell

If at all you want to change the data in an existing cell, you wouldn’t have to delete the content and then add the new one again. Instead, you can just update the content in the cell.

sheet.update_cell(2,4, "CHANGED") # Update one cell

13. To Get the number of rows in the sheet

Beyond reading and editing the content in the sheet, you can even get to know the number of rows in a sheet as it might be needed for your automation process.

numRows = sheet.row_count # Get the number of rows in the sheet

pprint(numRows)

14. To get the length of the data

Likewise, we can even get the length of the data in the sheet if you want to use that in your automation as well.

pprint(len(data)) # Number of records returned by get_all_records()
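
The two numbers are not necessarily the same. As far as we understand it, row_count reflects the size of the sheet’s grid, which can include empty rows, while the length of the data counts only the records that were read. If you need the number of rows that actually contain something, a small helper like the sketch below can count them from a list of rows. The helper name and sample values are assumptions for this example.

```python
def count_filled_rows(all_values):
    """Count the rows that contain at least one non-blank cell."""
    return sum(
        1
        for row in all_values
        if any(str(cell).strip() for cell in row)
    )


if __name__ == "__main__":
    # Hypothetical values: a header, one data row, and two empty rows.
    rows = [["Id", "Name"], ["1", "Asha"], ["", ""], []]
    print(count_filled_rows(rows))
```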

Source Code:

Since we have explained everything part by part, now it’ll be much easier for you to go through the source code and understand it clearly.

import gspread

from oauth2client.service_account import ServiceAccountCredentials

from pprint import pprint

scope = ["https://spreadsheets.google.com/feeds",'https://www.googleapis.com/auth/spreadsheets',"https://www.googleapis.com/auth/drive.file",

"https://www.googleapis.com/auth/drive"]

creds = ServiceAccountCredentials.from_json_keyfile_name("test1-331506-a11c2c0a84fc.json", scope)

client = gspread.authorize(creds)

sheet = client.open("Automation").sheet1

data = sheet.get_all_records()

pprint(data)

row = sheet.row_values(3) # Get a specific row

pprint(row)

col = sheet.col_values(2) # Get a specific column

pprint(col)

cell = sheet.cell(1,2).value # Get the value of a specific cell

pprint(cell)

insertRow = [ 15, "Logesh"]

sheet.insert_row(insertRow, 15) # Insert the list as a new row at index 15; existing rows shift down

sheet.delete_rows(14)

sheet.update_cell(2,4, "CHANGED") # Update one cell

numRows = sheet.row_count # Get the number of rows in the sheet

pprint(numRows)

pprint(len(data))
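
When building automation on top of this script, it can help to try out your own logic without touching a real spreadsheet. The sketch below is one possible approach: a tiny in-memory stand-in that mimics a handful of the worksheet methods used above. The FakeSheet class and the append_result function are hypothetical names invented for this example. They are not part of gspread, and the stand-in only approximates the real behaviour (for instance, its row_count is simply the number of stored rows).

```python
from types import SimpleNamespace


class FakeSheet:
    """In-memory stand-in for a worksheet, using 1-based row and column numbers."""

    def __init__(self, rows):
        self._rows = [list(row) for row in rows]

    @property
    def row_count(self):
        return len(self._rows)

    def _pad_rows(self, count):
        # Make sure at least `count` rows exist.
        while len(self._rows) < count:
            self._rows.append([])

    def row_values(self, row):
        return list(self._rows[row - 1]) if row <= len(self._rows) else []

    def col_values(self, col):
        return [row[col - 1] if col <= len(row) else "" for row in self._rows]

    def cell(self, row, col):
        values = self.row_values(row)
        value = values[col - 1] if col <= len(values) else ""
        return SimpleNamespace(value=value)

    def insert_row(self, values, index=1):
        # Existing rows at and below `index` shift down by one.
        self._pad_rows(index - 1)
        self._rows.insert(index - 1, list(values))

    def delete_rows(self, index):
        del self._rows[index - 1]

    def update_cell(self, row, col, value):
        self._pad_rows(row)
        target = self._rows[row - 1]
        target.extend([""] * (col - len(target)))
        target[col - 1] = value


def append_result(sheet, test_id, status):
    """Example automation step: insert a result row right under the header."""
    sheet.insert_row([test_id, status], 2)


if __name__ == "__main__":
    sheet = FakeSheet([["Id", "Status"], [1, "Pass"]])
    append_result(sheet, 2, "Fail")
    print(sheet.row_values(2), sheet.row_count)
```

Because append_result only relies on the insert_row method, the same function can later be handed the real worksheet object instead of the stand-in.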

Conclusion:

We hope you now have a clear idea of how to achieve google sheet automation as per your needs and make the most out of the tool to save your valuable time. As a test automation services provider, we understand that not everything can be automated, but if you approach any automation task with a negative mindset, you’ll never be able to unravel the solutions to overcome your obstacles. So follow and implement these methods with a positive mindset and you will definitely witness performance improvement at your end.

by admin | Nov 29, 2021 | Automation Testing, Blog, Latest Post |

We live in a world where automation is considered a huge advantage for most companies. The same goes for testing mobile and web applications. You need to have the right tools that will help you automate many of the functions of testing so you can get a higher level of test coverage and execute tests at a faster rate. Let’s look at some of the tools that quality teams are looking for when testing mobile apps and web apps.

Tools for Mobile App Testing

While most web developers and testers use the Selenium framework to write tests, there are many different tools for mobile app testing. The first question to ask when choosing a tool is whether it is open-source or closed-source. Since many mobile apps are built with open-source frameworks, an open-source tool is often a good fit, if one is available.

The next question is whether the tool runs on more than one OS or just on Android or just on iOS. You will probably write tests in a language that does not depend on OS, but you should remember that Android and iOS require different test frameworks.

Not every mobile app test framework is best for every application type: native apps, web apps with a mobile interface, and hybrid apps with heavy WebView use can require different tools.

If you are running into problems deciding which tool is best for your needs, keep in mind that many companies offer free trials or free versions of their products. Just do a little bit of research before you jump in headfirst.

Tools for Web App Testing

More and more businesses rely on their web applications to streamline their operations and improve their marketing efforts. The continuous, high load and growing market expectations require web apps to undergo a variety of tests to make sure that they comply with UI standards and meet compatibility and usability expectations. Web app testers need to use a variety of automated software testing tools that allow them to test their products from different perspectives.

Which Testing Tool to Use?

Before making a decision on which testing tool to use for your applications, you need to take into account all the other factors that could affect how your testing will go. Some of the questions you need to consider are:

- How many tests do you need to run?

- Who will run the tests?

- How frequently do you need to run them?

- How many users do you intend to simulate?

- What scripting languages do you have experience working with?

- What platforms and browsers do you need to cover?

- Are you testing a finished product or a pre-release version?

- How important is automation for you?

- Do you have a system of tracking and analyzing the results?

- How important is a visual reporting system for you?

Conclusion

Mobile and web app testing is a crucial step in software development where companies can’t afford to cut corners. The only way to make testing much more efficient is to use the right kind of tools for the job. This guide should help you decide what kind of tools you can use for testing that will help you automate many of its functions.

If you’re in need of a QA company with a seamless track record in web and mobile testing services, Codoid is your best choice. Every new software product deserves high-quality automation testing, and our team of highly skilled QA professionals can handle any job. When it comes to QA automation services, there’s no better choice than Codoid. Partner with us today!

by admin | Nov 12, 2021 | Automation Testing, Blog, Latest Post |

Robot Framework is an open-source, Python-based automation framework used for Robotic Process Automation (RPA) as well as Acceptance Testing and Acceptance Test-Driven Development (ATDD). It follows the keyword-driven testing approach, which lets testers write automation test scripts easily. Test cases are written using keywords in a tabular format, making them easy to understand. Robot Framework provides good support for external libraries, and the Selenium library is the most popular one used with it for web and UI testing. As a leading mobile app testing service provider, we have been using Robot Framework in our various Android and iOS app testing projects. So we have written this end-to-end Robot Framework Tutorial that covers everything one has to know.

The Basic requirements for robot framework

Since the Robot framework is built based on Python, we have to install the latest version of Python by downloading it from their official site. After successful installation, set up the Path environment variable in your local system.

Steps to Install Robot Framework:-

1. You can check if Python has been installed properly by opening Command Prompt and typing a version command such as the one below.

python --version

2. Use a command such as the one below to check the pip version. (Pip is a Python package manager)

pip --version

3. Now we’re all set to install the Robot framework

a. For a straightforward installation of the robot framework, you can use the command

pip install robotframework

b. If there is a need to install the robot framework without any cache, you can use this code instead.

pip install --no-cache-dir robotframework

c. If for any reason, you want to uninstall the Robot framework, then you can do so by using the below code.

pip uninstall robotframework

4. Just like how we verified Python, we can use commands such as the ones below to check the installation using Command Prompt.

(i)

pip show robotframework

This command will show the details of the robot framework and the framework location.

(ii.)

pip check

Once we enter this command, the desired result that should be displayed is “No broken requirements found.” If any other message, such as a warning, is displayed, we need to look into the issue it describes and complete the installation properly.

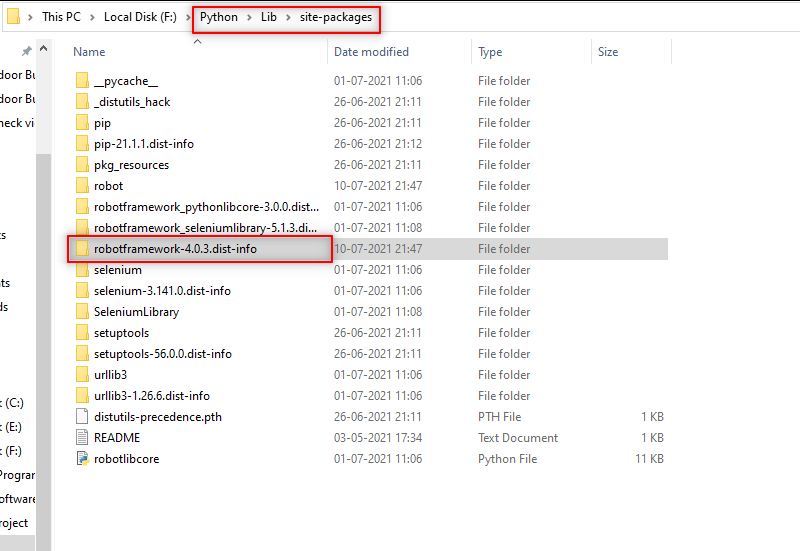

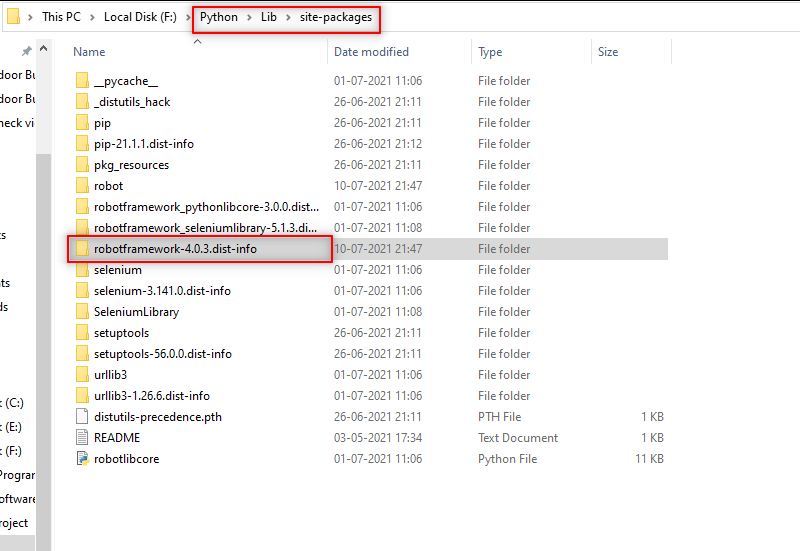

We can also verify if the Robot Framework has been installed properly in our local system.

Go to the Python folder > Lib > site-packages > verify if Robot Framework is installed.

5. To verify the version of the installed Robot Framework, use a command such as

robot --version

6. You might face a scenario where you need to use a specific version of the Robot Framework. You can accomplish this by mentioning the specific version you need, as shown below.

pip install robotframework==4.0.0

7. In time, you might want to upgrade the old version of the Robot Framework. You can use the below code to do it.

pip install --upgrade robotframework

8. In addition to verifying if the Robot Framework has been installed correctly, you might also have to check the installed Python libraries.

(i.)

This command will show all the python libraries installed with the robot framework version.

(ii.)

This command will show all the python libraries installed with their version numbers.

For writing automation test case:

In the Robot Framework Tutorial, we would be exploring how to perform web browser testing using Selenium Library. But for that to happen, we have to import the Selenium library file to this Robot project

Step 1: Enter the below command in the command line to install the Selenium library

pip install robotframework-seleniumlibrary

Step 2: To upgrade the pip installation with the Selenium library, enter the below-mentioned command

pip install --upgrade robotframework-seleniumlibrary

Step 3: Finally, you can check if the pip Robot framework Selenium library has been installed properly by using this command.

pip check robotframework-seleniumlibrary
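
If you prefer to confirm the installed packages from Python itself, the standard library can report their versions. This is a minimal sketch that assumes Python 3.8 or later (for importlib.metadata). The helper name is made up for this example, and the package names in the loop are the ones we expect after the steps above.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    for name in ("robotframework", "robotframework-seleniumlibrary", "selenium"):
        version = installed_version(name)
        print(f"{name}: {version or 'not installed'}")
```

A check like this can be dropped into a setup script so that a missing library is reported before any test is run.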

IDE for the Robot framework:

We can conveniently use the following IDEs to write Robot Framework automation testing code.

1. PyCharm

2. Eclipse with RED plugin

3. Visual Studio with one of the plugins

4. RIDE

In this Robot Framework Tutorial, we have used PyCharm with Selenium to write a website automation testing code.

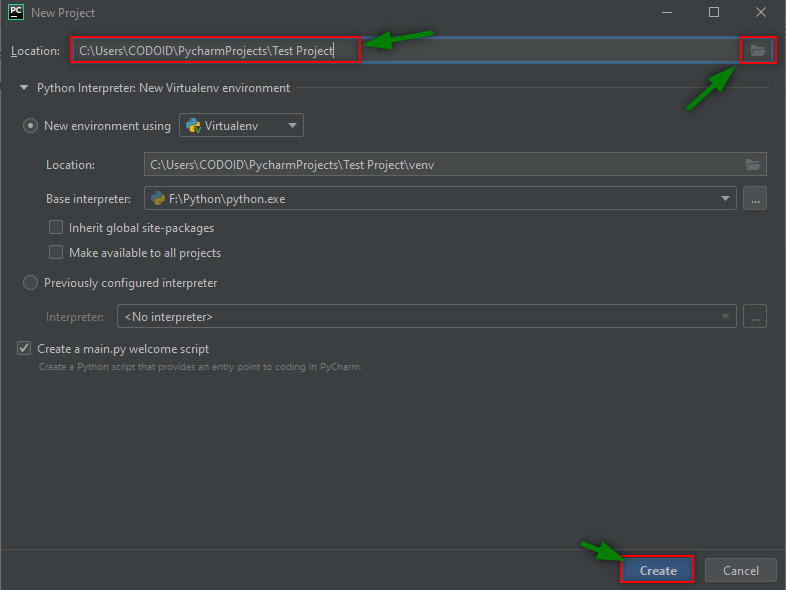

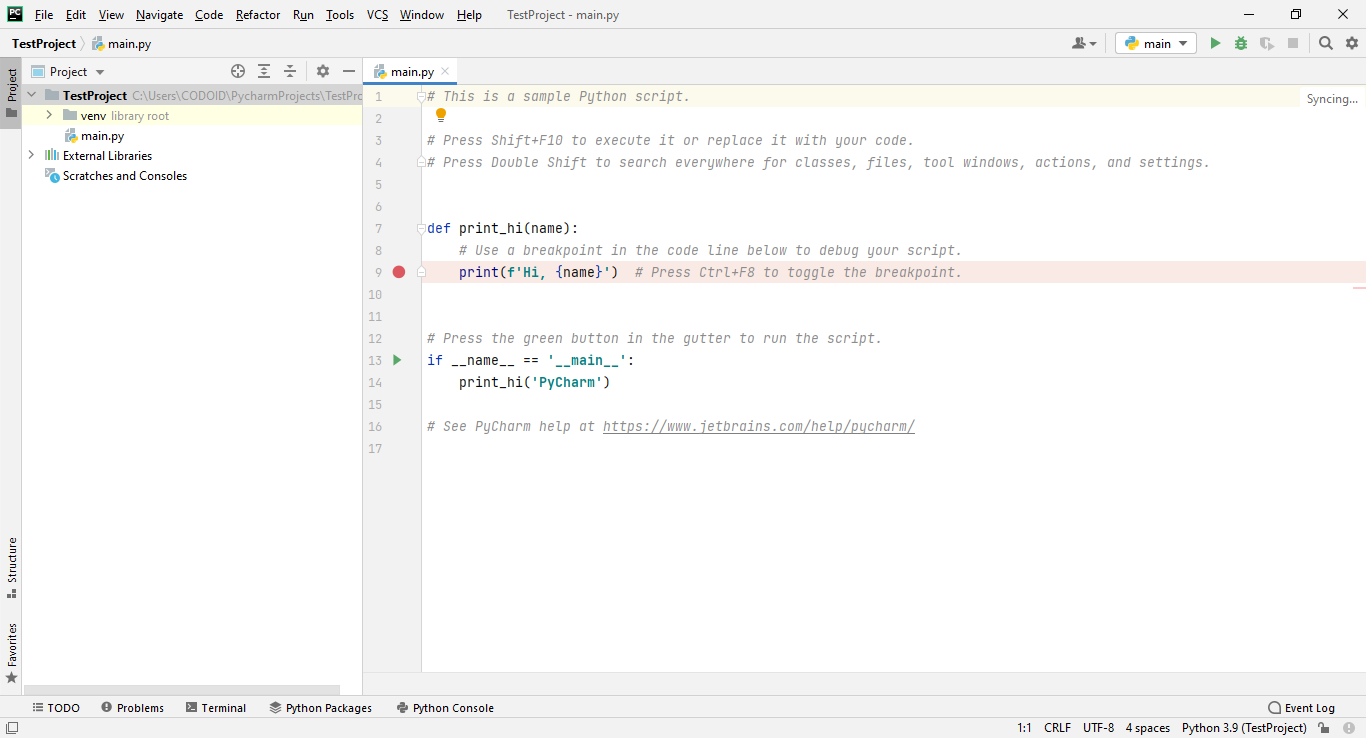

PyCharm IDE Installation and New Project Creation:

Step 1: Go to https://www.jetbrains.com/pycharm/download/#section=windows

Step 2: Download the .exe installer of the free, open-source Community edition of PyCharm.

Step 3: Once you run the .exe file of the PyCharm setup, you’ll see the ‘Install Location’ window. If you want to change the location from the default option, you can click on the “BROWSE” button, and select your own file setup location and click on the ‘Next’ button.

Step 4: In the 4th Window, select the 64-bit launcher and click the ‘NEXT’ button to move to the next window where you can directly click on the ‘INSTALL’ button to install all the files.

Step 5: Once the setup and jar files are properly installed and updated, tick the checkbox and finish by clicking on the “OK” button in the “Import Pycharm Settings” pop-up.

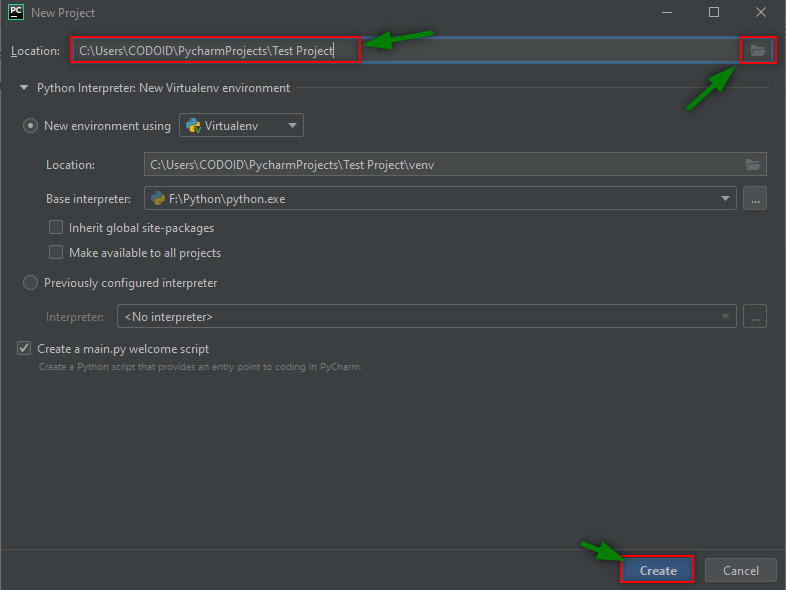

Step 6: Now, you will see the ‘Welcome to PyCharm’ window. Click on the “Create New Project” option and it will automatically generate a project file location to set up the project. If you would like to change the location, you can click on the file option and customize it as you please. Enter the “Project Name”, and click on the “CREATE” button.

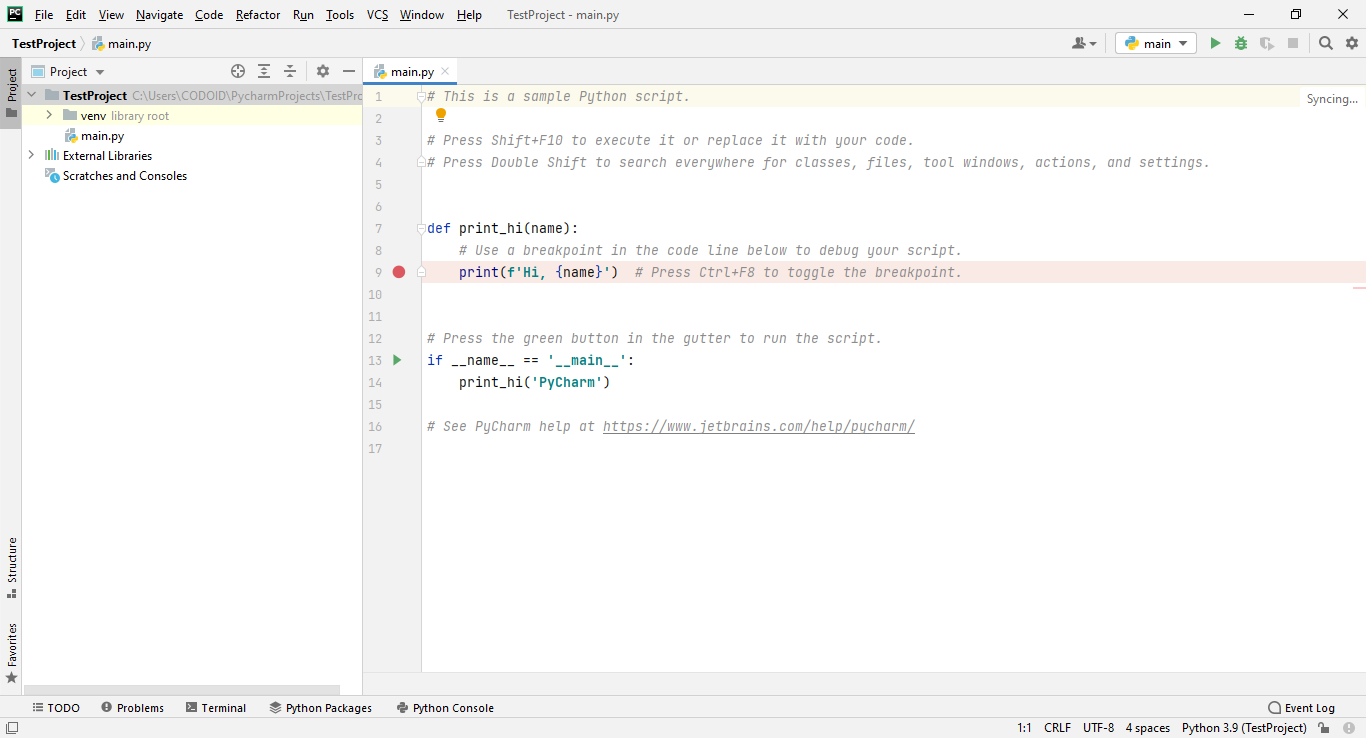

Step 7: Finally, the PyCharm window will be displayed with the new project.

In this PyCharm IDE, we are going to create a Robot Framework project for a website’s automation testing. So let’s explore how to generate a report and all the other essential actions in the upcoming topics of this Robot Framework Tutorial.

How to Create a New Robot Project and the required Setups:

In the Robot framework, we have to set up interpreters and add some plugins as well. Let’s see how to do those things now.

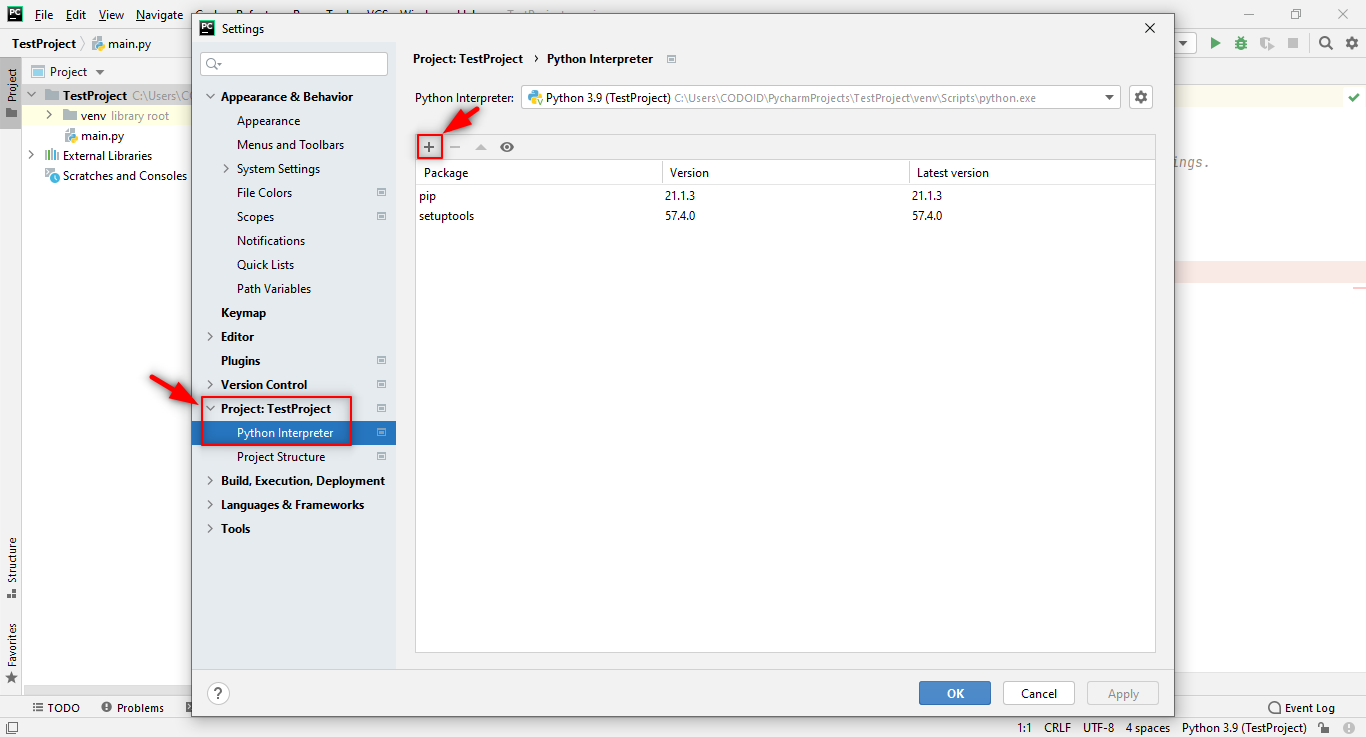

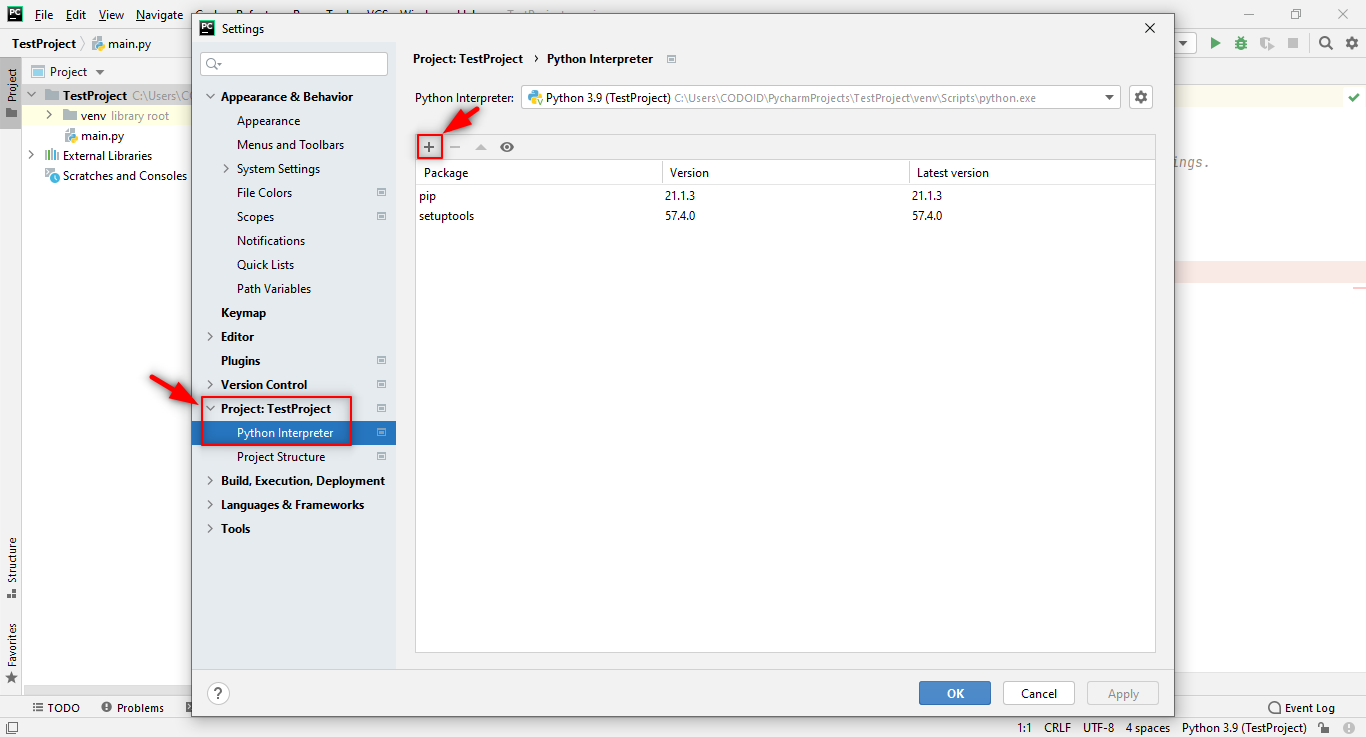

Step 1: You’ve already created the Initial Project for which you need to add the interpreters to work with the Selenium and Robot framework. So follow the below steps, and use the image as a reference if needed.

Go to File > Settings > Click on Project: “current project name” > Python Interpreter > + icon

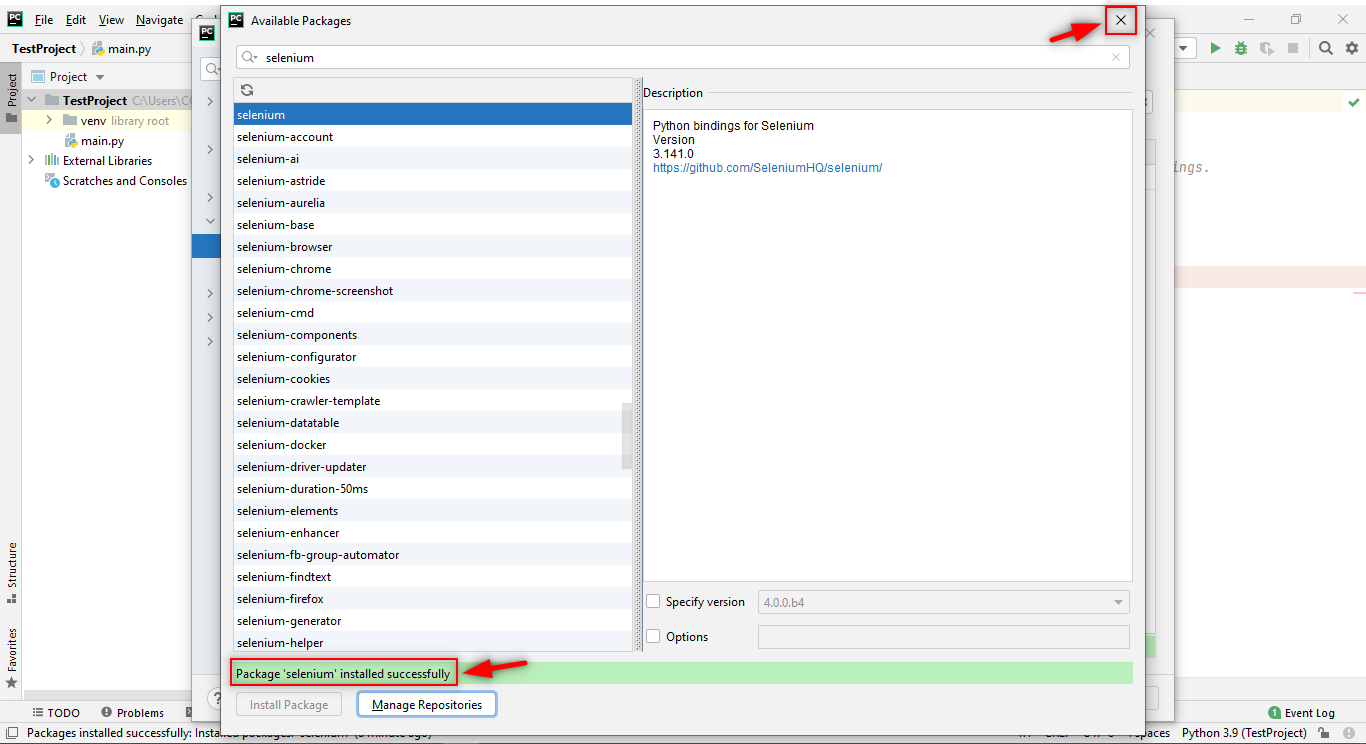

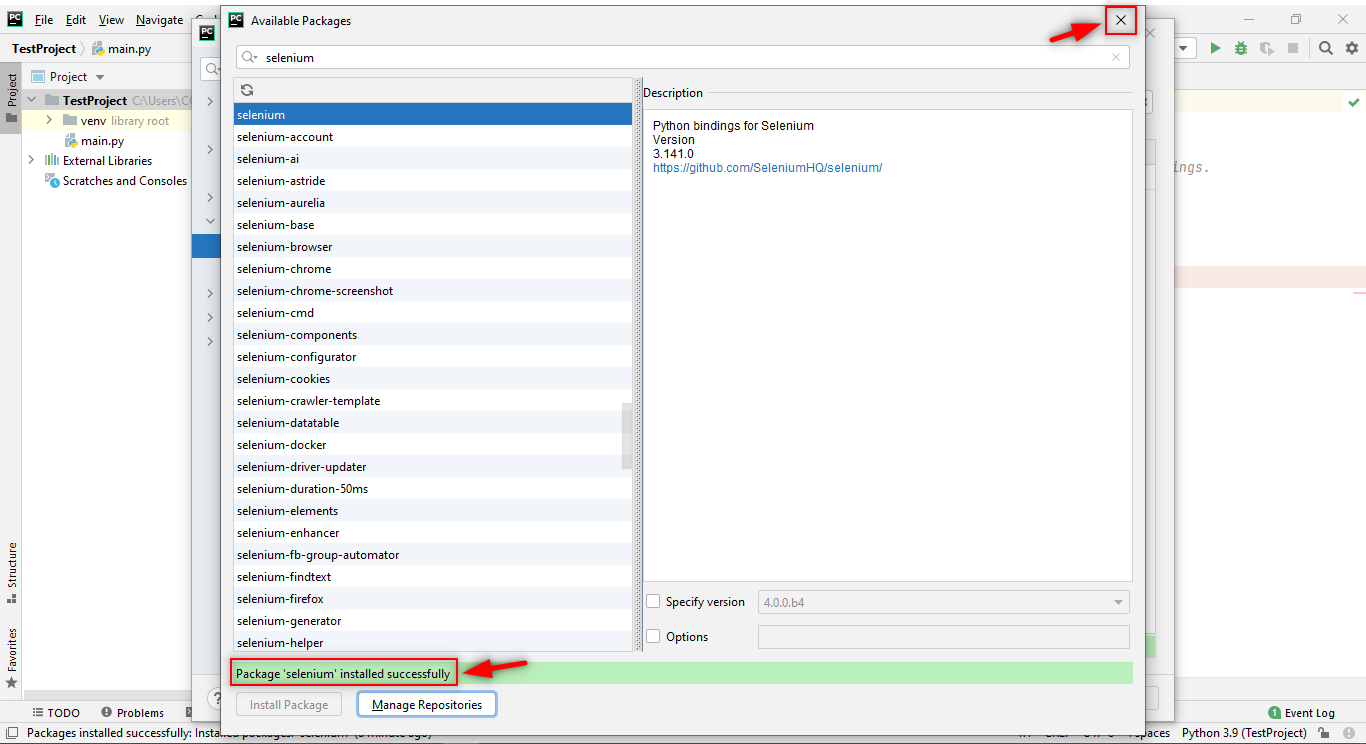

Step 2: You need to install the following packages for this project

i. Selenium

ii. robotframework

iii. robotframework-seleniumlibrary

You have to enter these three package names one by one in the search input field and search for the packages. Once you find a package, click on the “Install Package” button and wait for a while for it to install in the back end. Once it is successfully installed, the highlighted message will appear. After all three packages are installed, you can close the window by clicking on the “OK” button in the settings window.

Note: Make sure to close the window only after installing the three packages one by one.
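To confirm that the project interpreter can actually see the three packages, a small check like the one below can be run inside the PyCharm project. This is only a sketch: `missing_packages` and `REQUIRED_MODULES` are names we made up for illustration. Note that the import names (`selenium`, `robot`, `SeleniumLibrary`) differ from the package names used in the search field.

```python
from importlib.util import find_spec

# pip package name -> top-level module name that the package provides
REQUIRED_MODULES = {
    "selenium": "selenium",
    "robotframework": "robot",
    "robotframework-seleniumlibrary": "SeleniumLibrary",
}


def missing_packages(required=REQUIRED_MODULES):
    """Return the pip names whose top-level module cannot be found."""
    return [pip_name for pip_name, module in required.items()
            if find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Still to install:", ", ".join(missing))
    else:
        print("All three packages are available to this interpreter.")
```

If a package is reported as missing even though it was installed from the command line earlier, the project is most likely using a different interpreter (for example, a virtual environment) than the system-wide Python.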

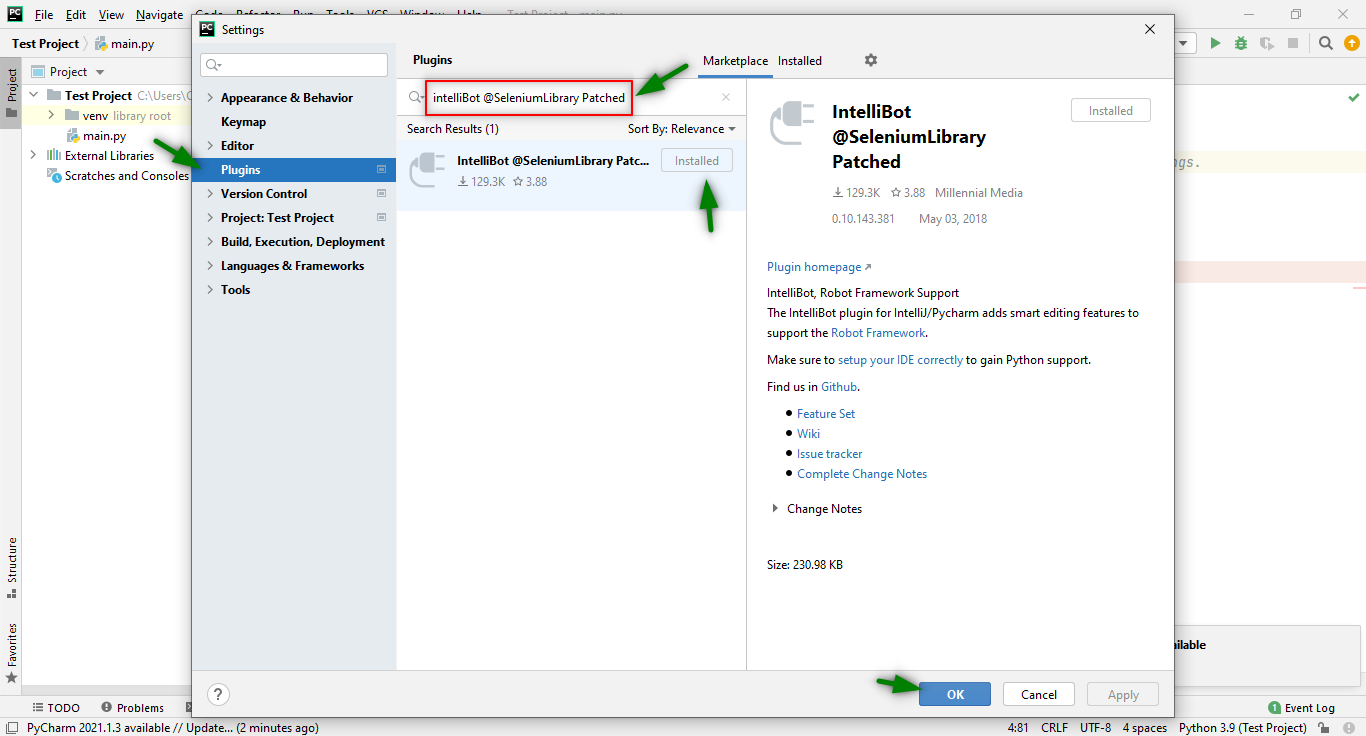

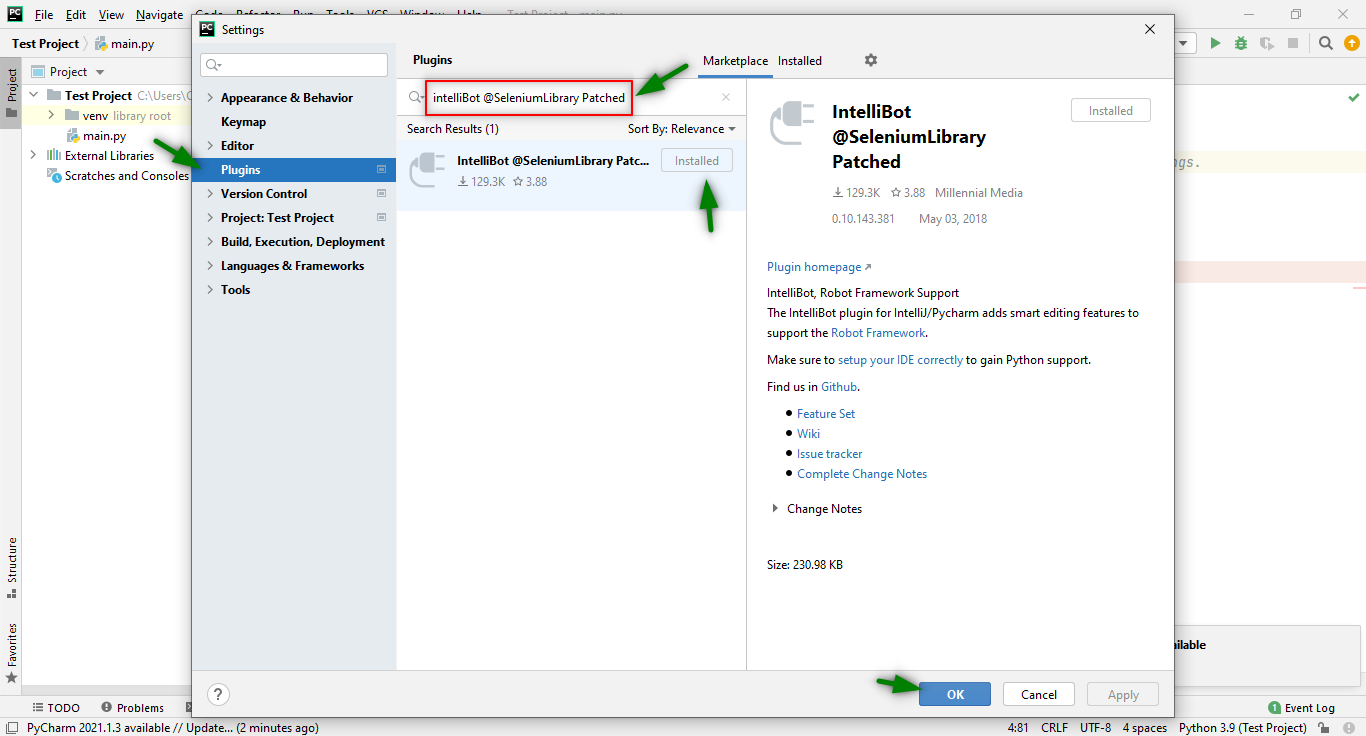

Step 3: Now, you have to add the “IntelliBot @SeleniumLibrary Patched” Plugin as it’ll help us work with the Robot framework files and give auto suggestions for the keywords. Without this plugin, PyCharm will not be able to recognize the “.robot” files. The steps are as follows:

Settings > Plugins > Marketplace > Search “IntelliBot @SeleniumLibrary Patched” > Install

Once the Plugin has been installed, you have to restart the IDE for the initial configuration.

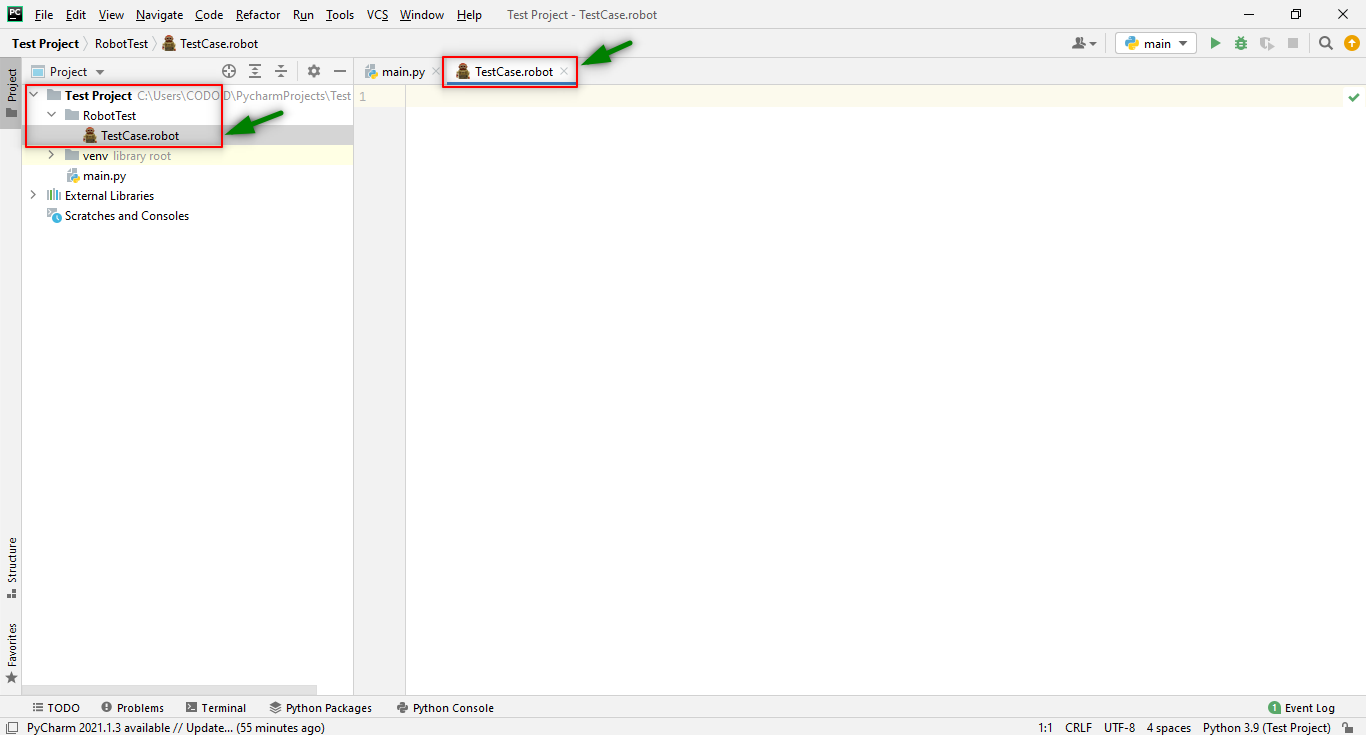

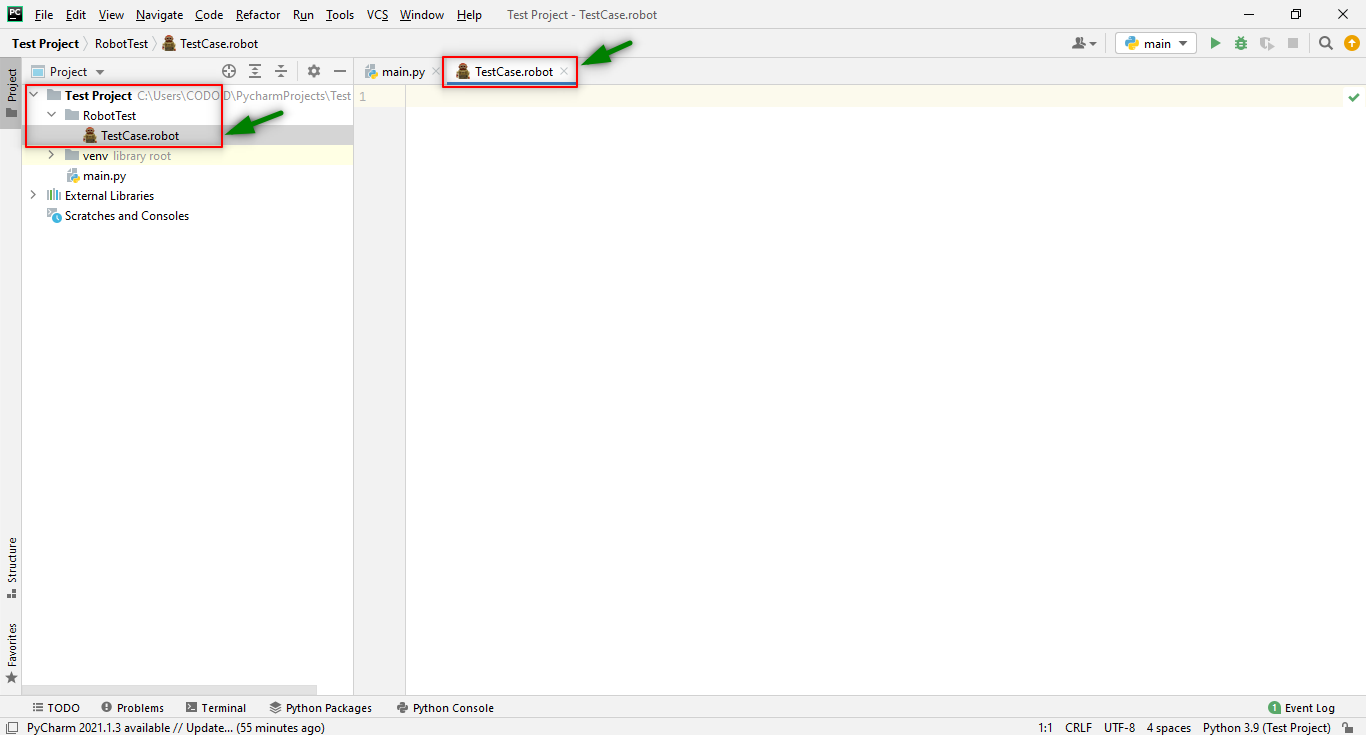

Step 4: Normally, the project structure contains multiple directories, and each directory holds files of a similar kind. So in this Test Project, we are first going to create a directory that will hold our test cases.

Right-click Test Project > New > Directory > Enter the Directory Name

That directory will be created under the Test Project and so our Robot test cases can be created inside it.

Step 5: Create the “.robot” file under the created directory.

Right Click on Created Directory > New > File > Enter a File name that ends with “.robot”

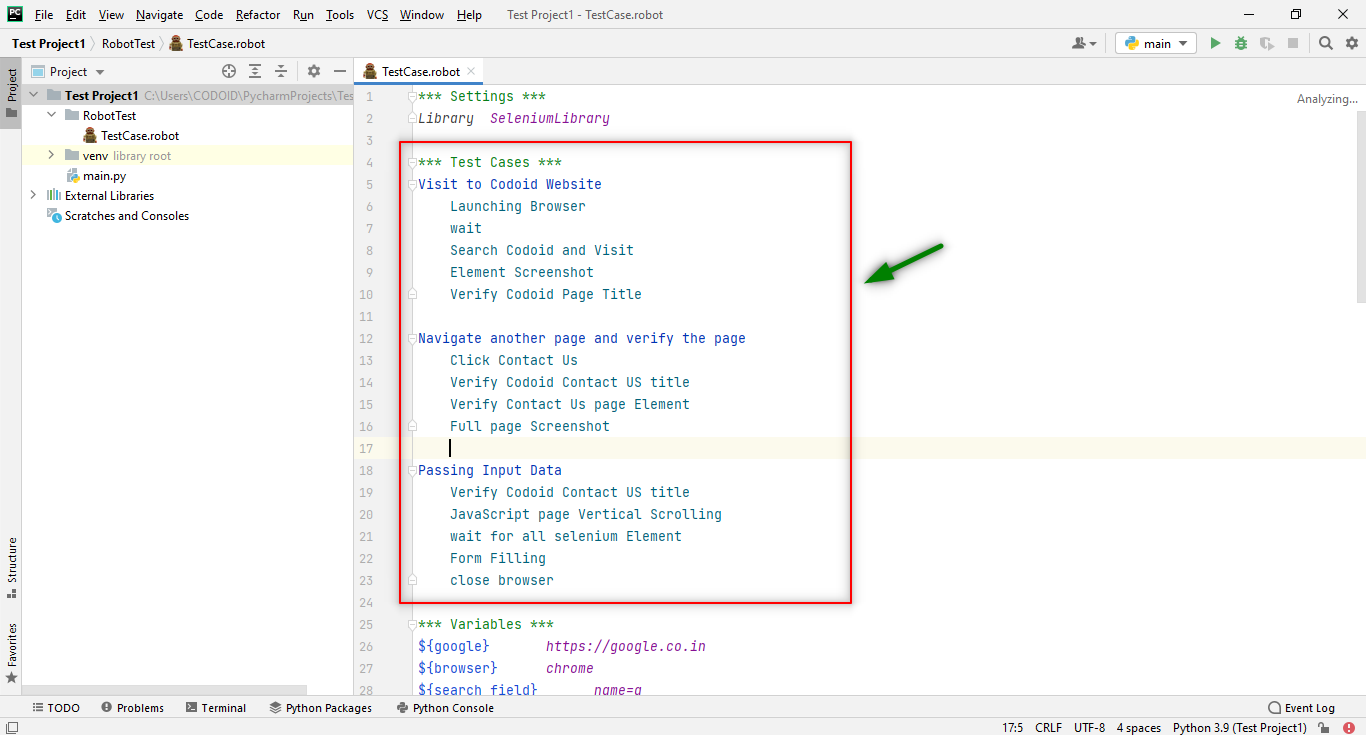

Once the robot project has been created, the structure of the project will be how it’s shown in the following image.

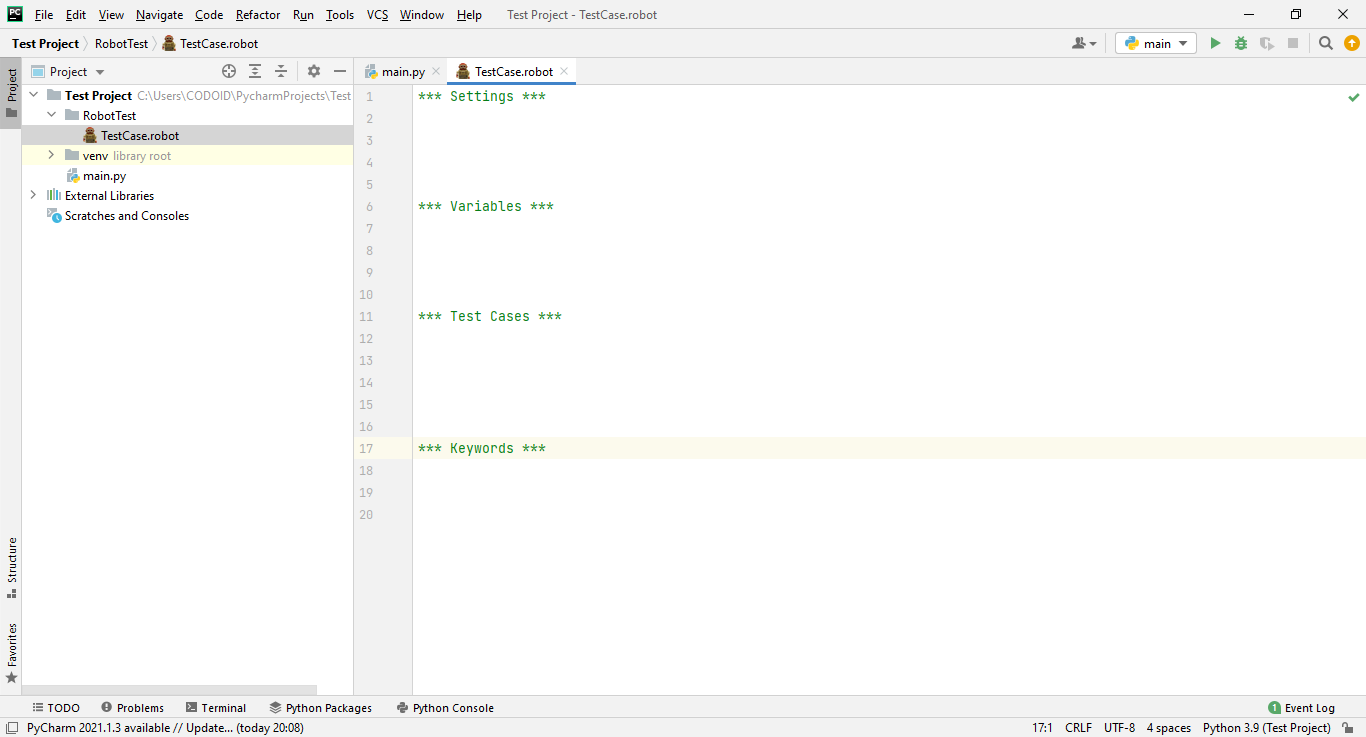

Prerequisites to run Automation Test Cases in the Robot Framework:

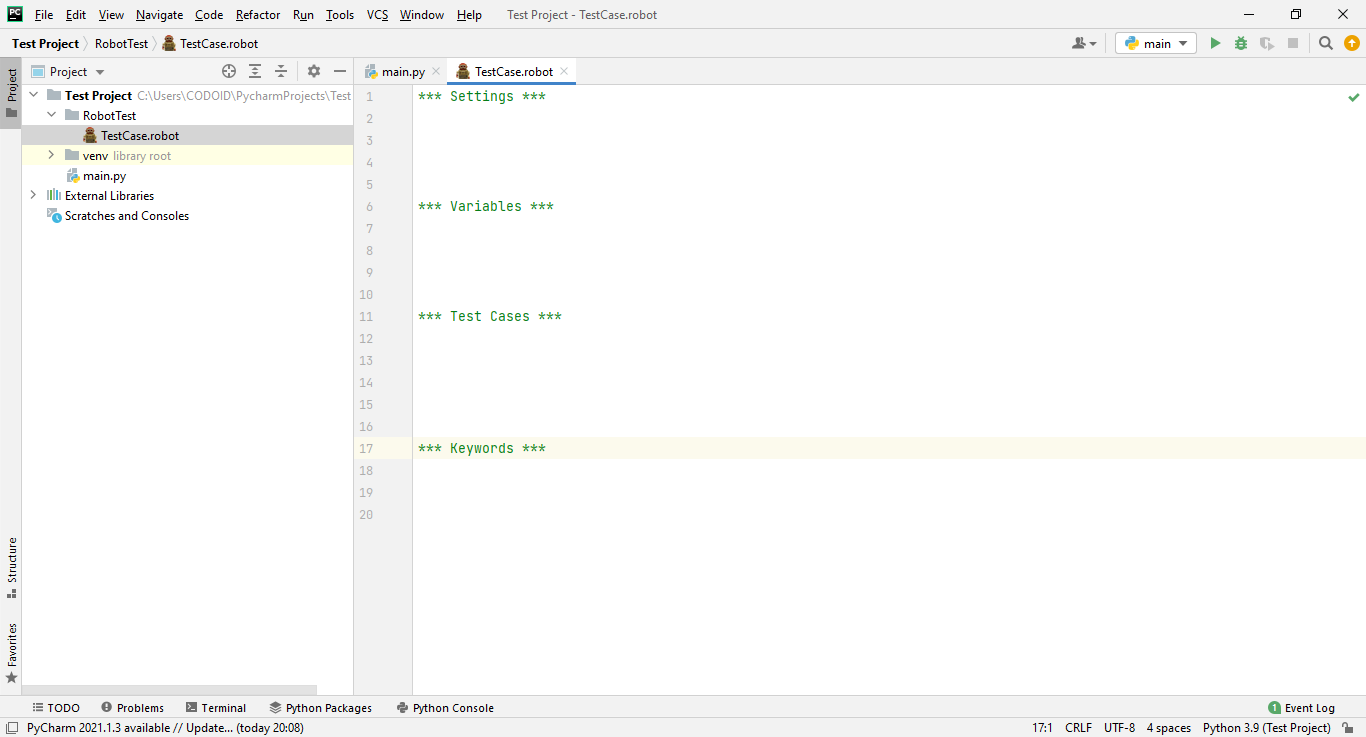

Whenever you write automation test cases in the Robot framework, 4 sections should be there. They are

- Settings

- Variables

- Test Cases

- Keywords

Note:

- These 4 sections are must-know aspects from a beginner’s point of view.

- Each and every section starts with ‘***’ and is followed by the Name of the Section. It also ends with ‘***’.

- At least two spaces (four are recommended) or a tab are needed between the Keyword and the Test data (or) Data (or) input data, because the Robot Framework uses this gap to tell a keyword apart from its arguments. A single space is treated as part of the same value.

- When you’re about to write a test case, you should first write the test case name and then go to the next line to write the keyword after indenting it with the same kind of gap.

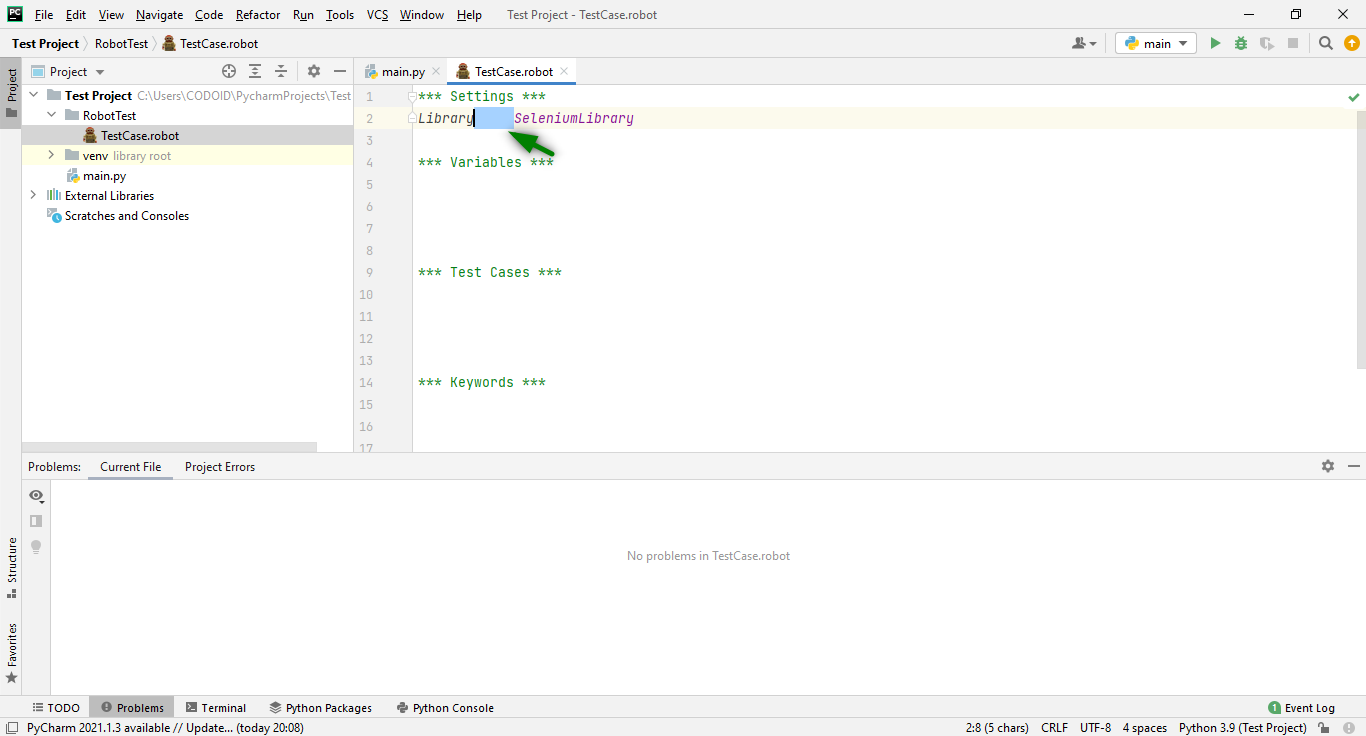

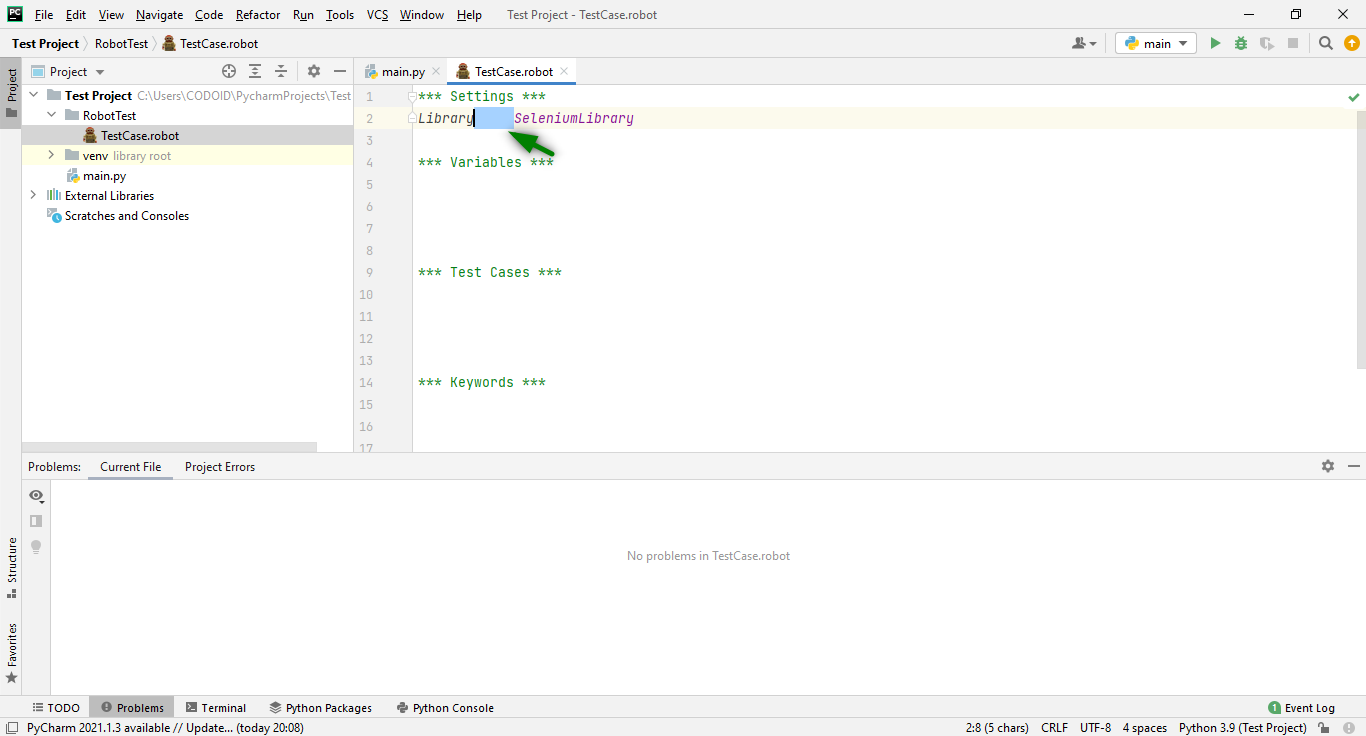

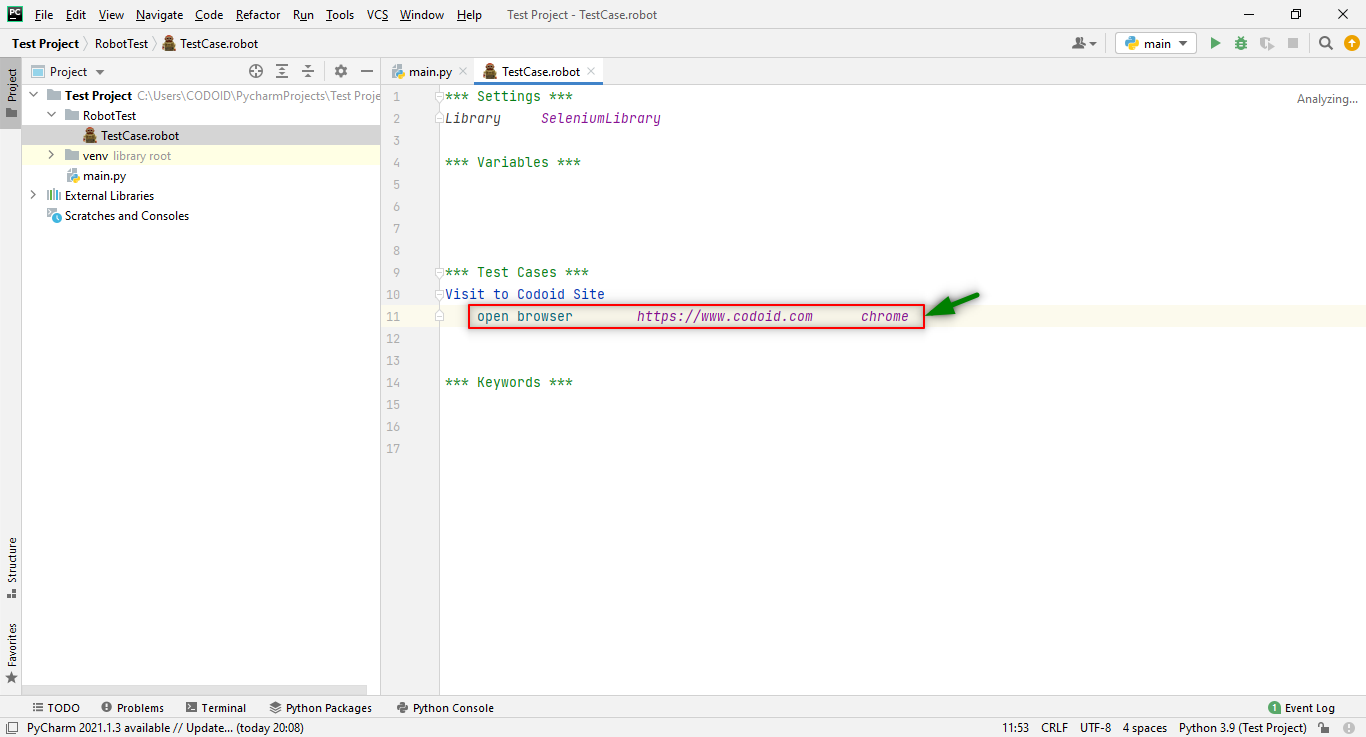

Step 1: Settings

You are going to import the Selenium Library in this Test case.

The first line is the section header and the second line uses the “Library” setting to import the “SeleniumLibrary”.

Syntax:

*** Settings ***

Keyword    LibraryName

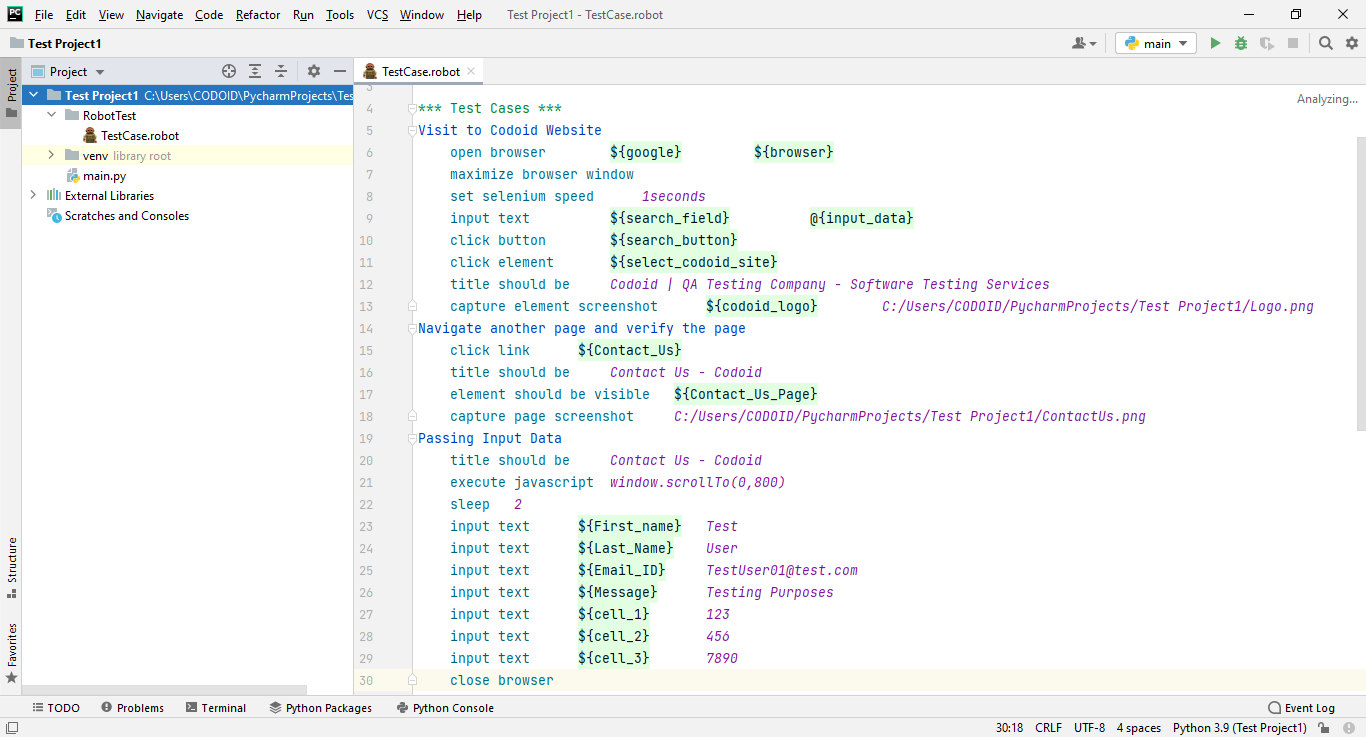

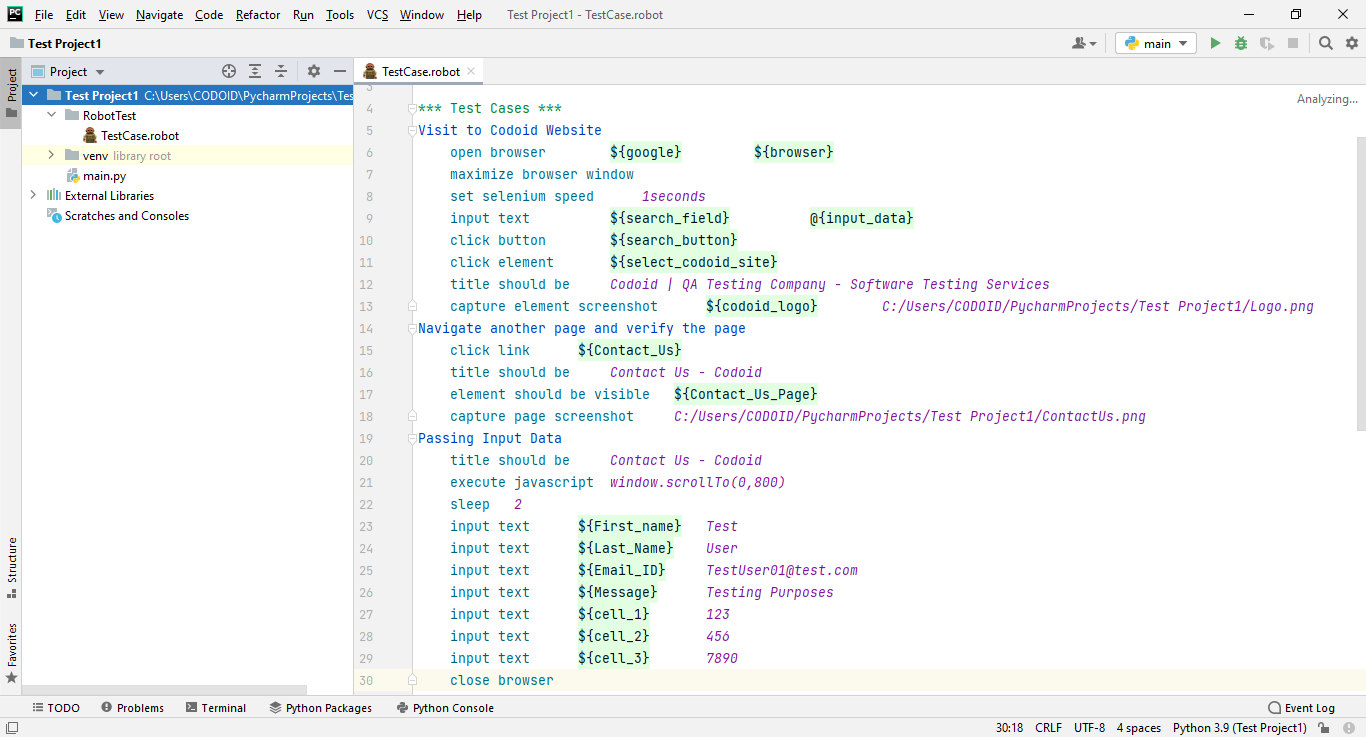

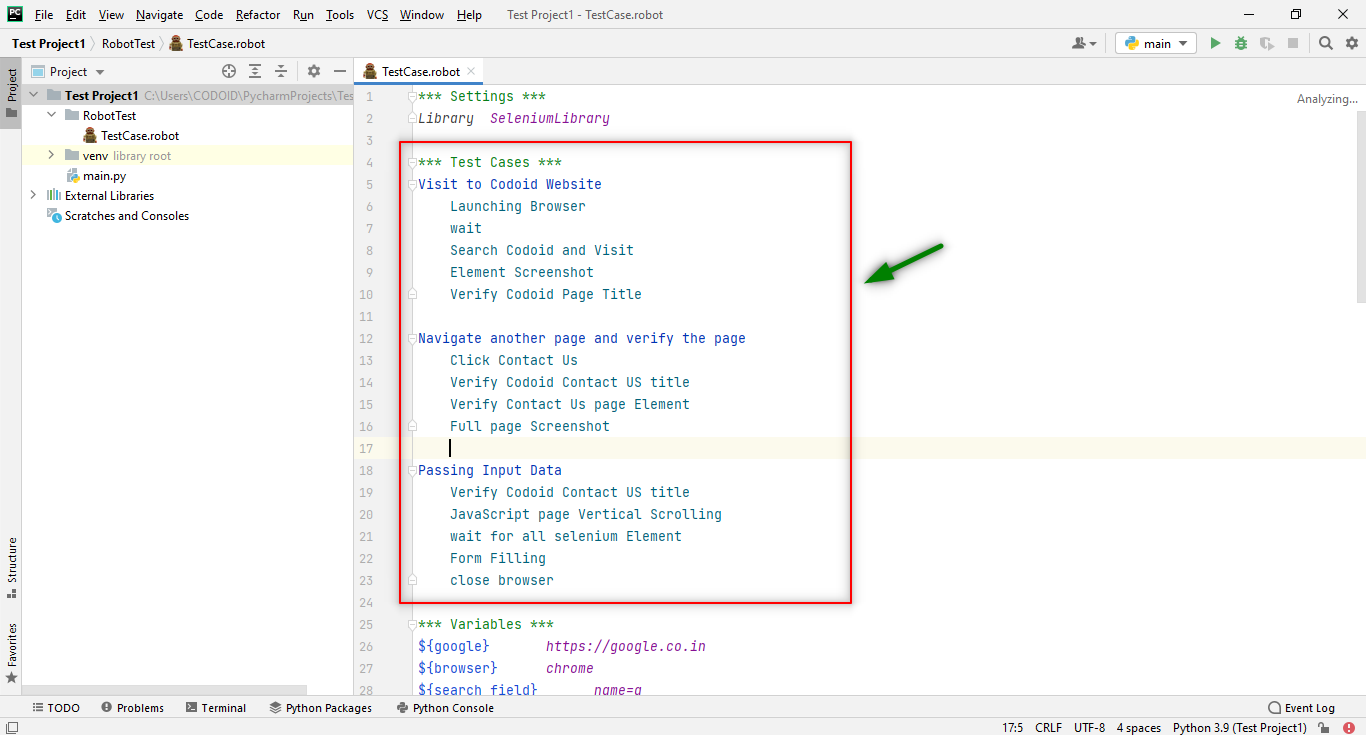

Step 2: Write the Test Cases

Now, you have to write the exact test cases as per your requirements. First, you have to assign a test case name, and then enter the test case steps from the next line onwards. You can write these test cases using the predefined Selenium Library keywords. Being a leading QA Company, we wanted our Robot Framework Tutorial to be a comprehensive guide even for beginners. That is why we’re going through everything from scratch.

Syntax:

*** Test Cases ***

TestCase_Name

    Keyword    Locators (or) data    Testdata

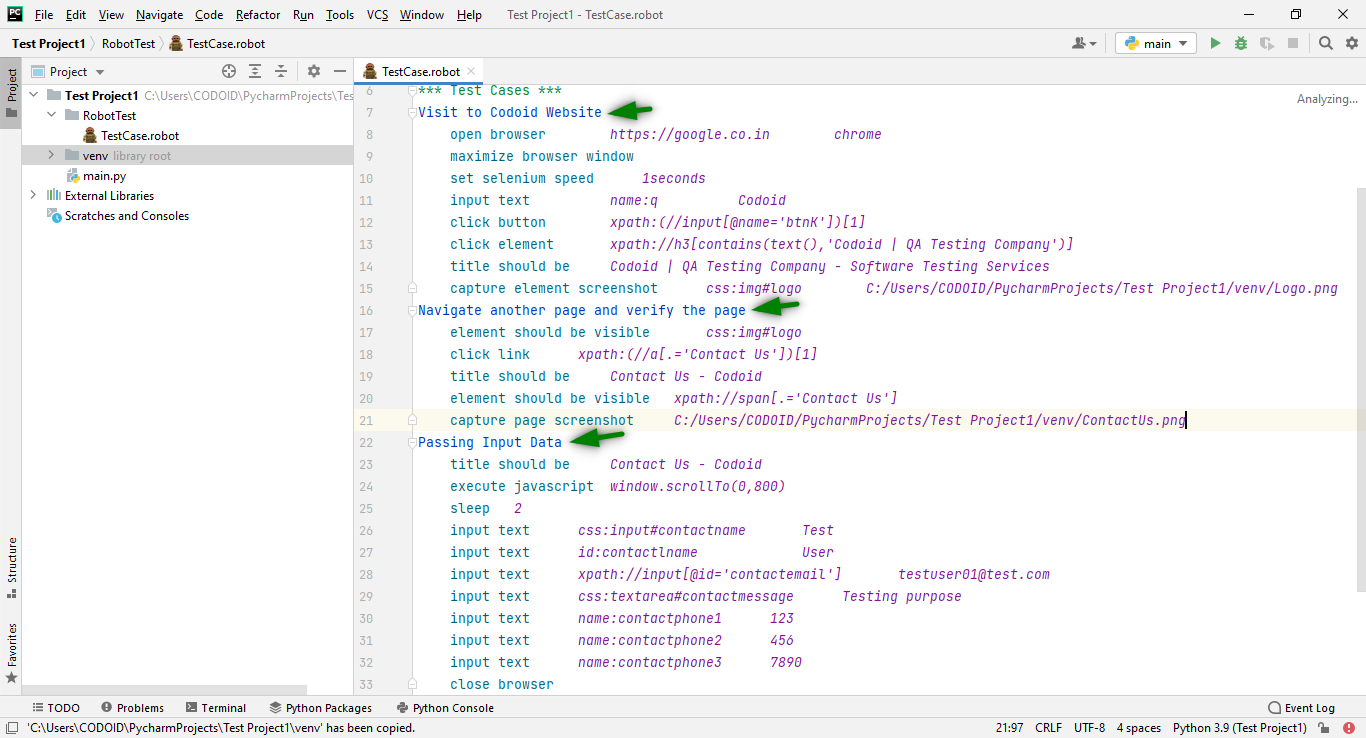

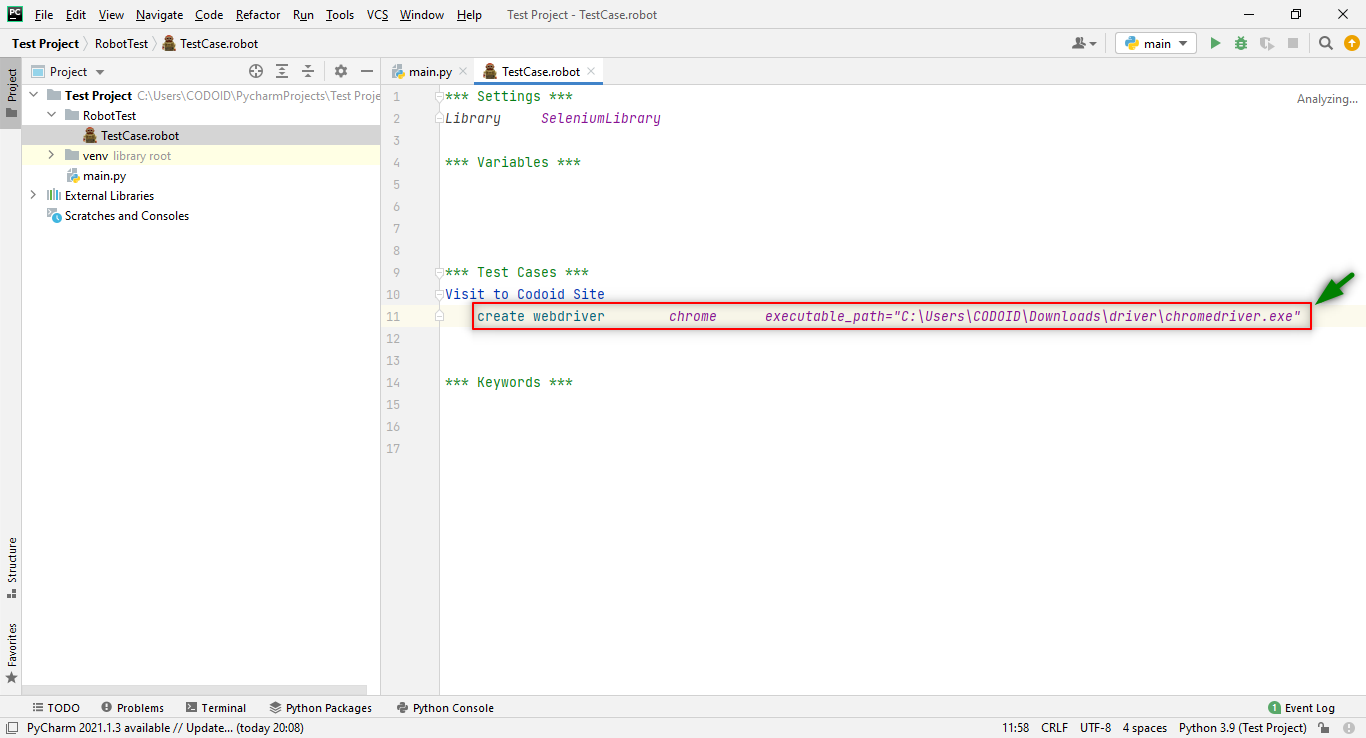

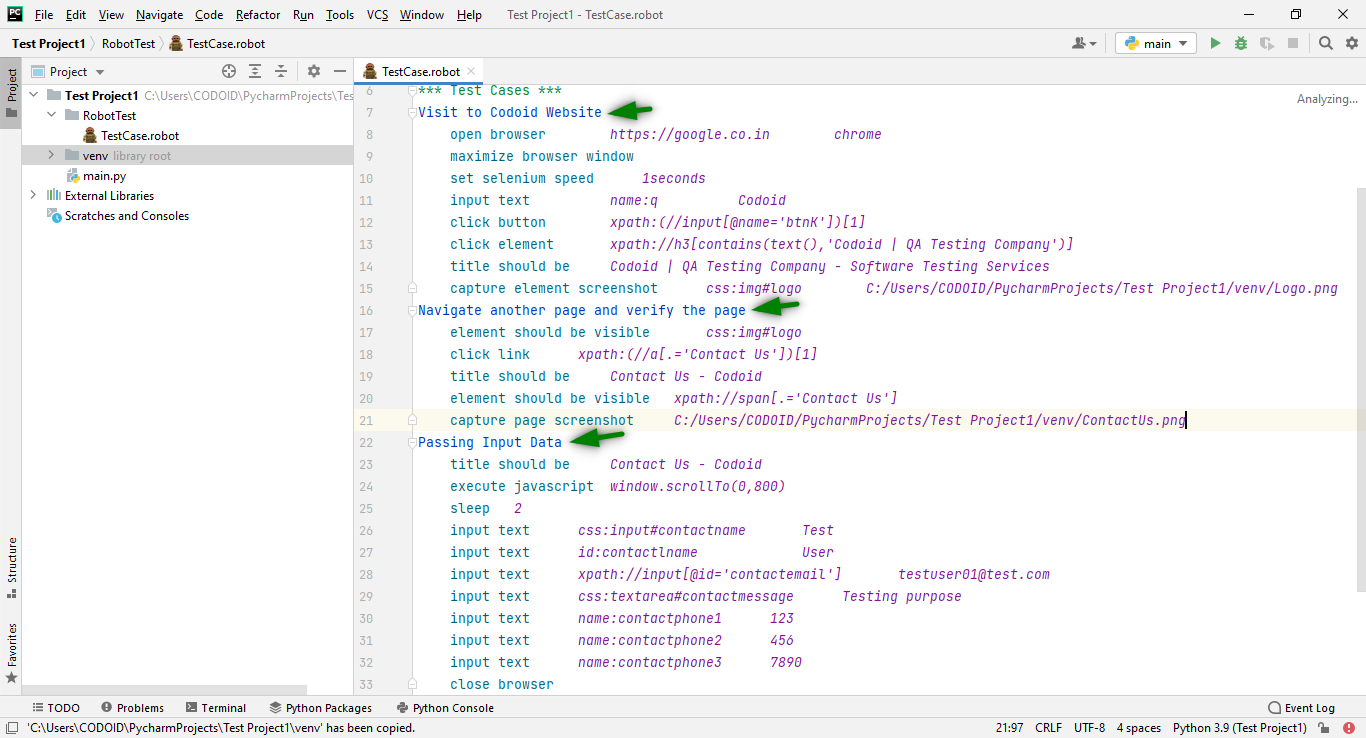

In this Robot Framework Tutorial, we are going to perform the following test cases:

1. Visit the Codoid Website.

2. Navigate to another page and verify it.

3. Pass the Input data.

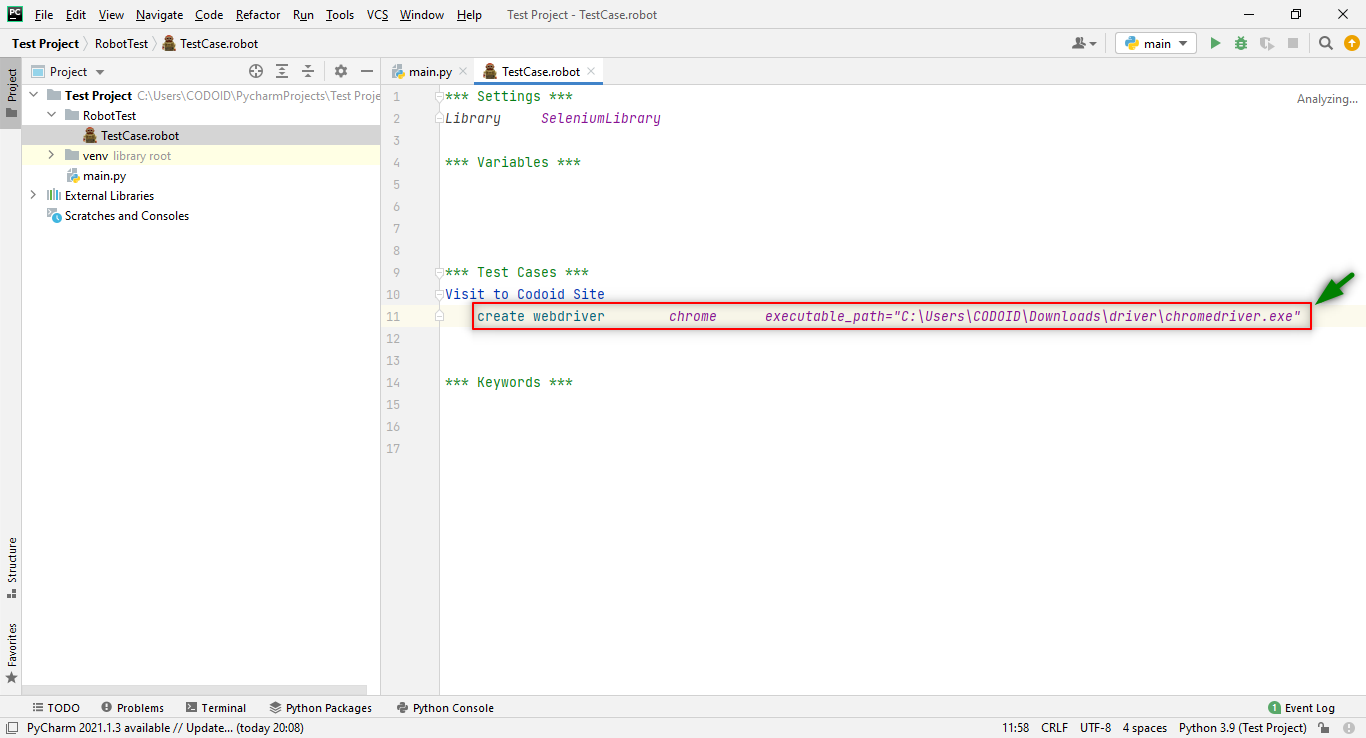

The first step towards automation is launching your browser. Based on the browser you are using (Chrome, Firefox, etc.), you have to download the matching driver. Once you have downloaded the driver, you have to extract the downloaded file and copy the path of the driver .exe file. We will be using Chrome for our demonstration.

Syntax for the Chrome launch:

*** Test Cases ***

TestCase_Name

    Keyword    driver_name    executable_path=driver_path

There is also another method that can help us save some valuable time when launching the browser. You can:

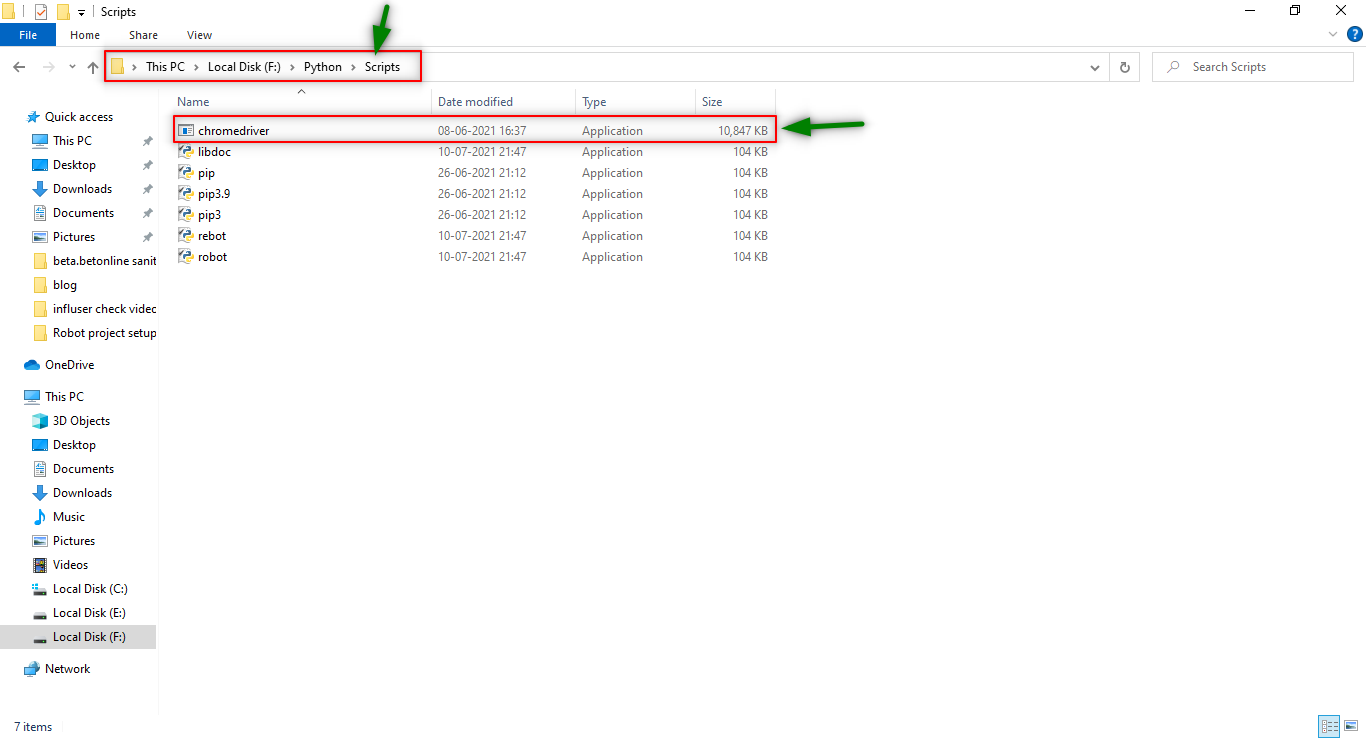

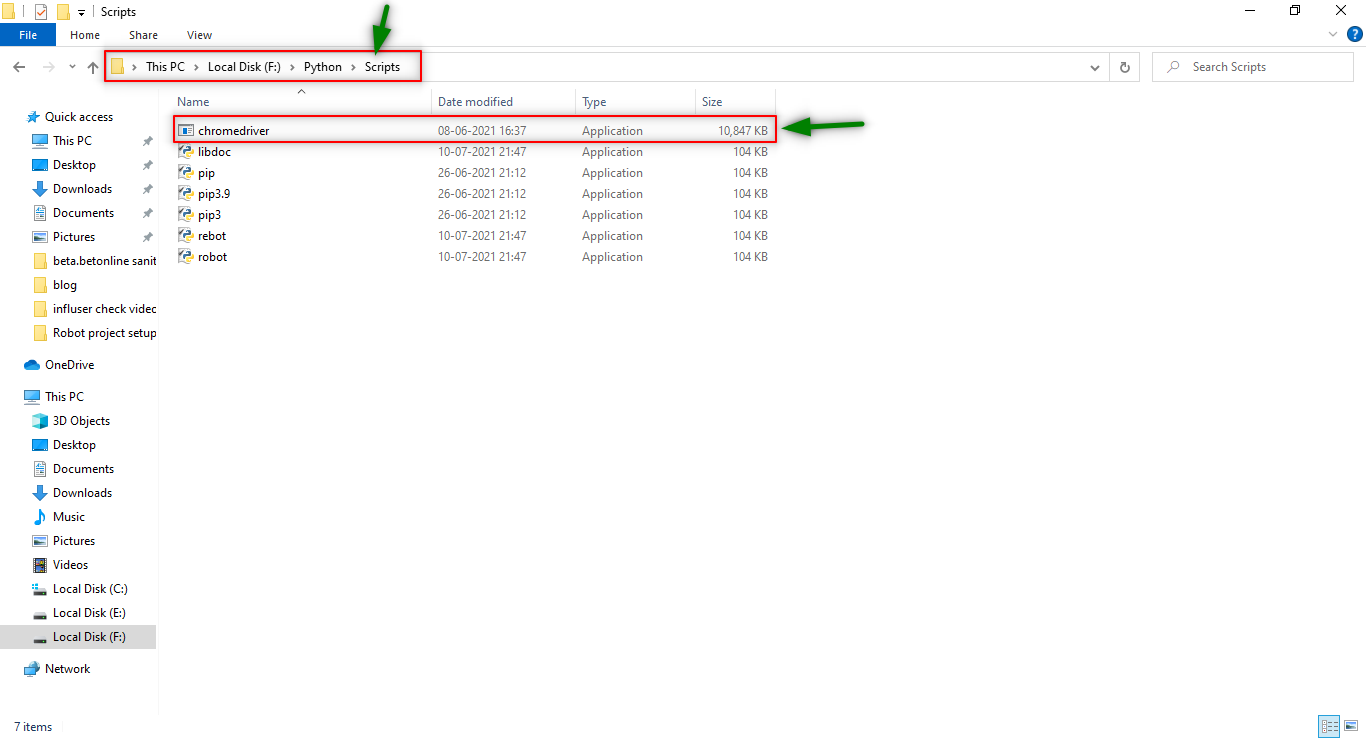

1. Copy or cut the driver .exe file from its location on your local system.

2. Paste it into the Python/Scripts folder on your local system as shown below.

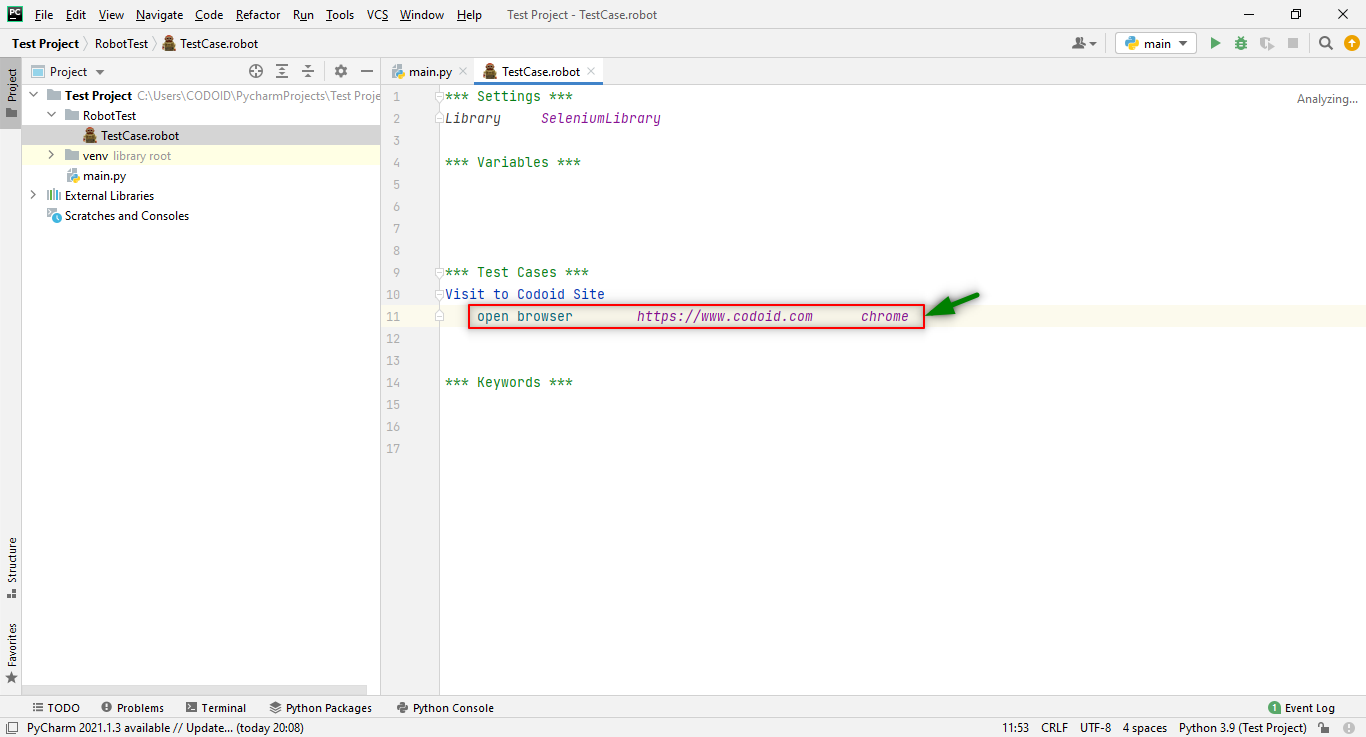
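This approach relies on the driver being discoverable through the system PATH, which normally includes the Python/Scripts folder when the pip and robot commands work from the command line. A quick way to confirm it is sketched below. The function name `find_driver` is our own, and `chromedriver` is assumed to be the driver's file name because Chrome is used in this demonstration.

```python
import shutil


def find_driver(driver_name="chromedriver"):
    """Return the full path of the driver executable on PATH, or None."""
    # On Windows, shutil.which also tries the .exe extension automatically.
    return shutil.which(driver_name)


if __name__ == "__main__":
    location = find_driver()
    if location:
        print("Driver found at:", location)
    else:
        print("Driver not found on PATH; check the Python/Scripts folder.")
```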

After this step, we can directly launch the browser without pointing to the driver location in the framework. We have to write the following syntax instead of using the “Create Webdriver” keyword to set up the path.

Direct browser launching syntax:

*** Test Cases ***

TestCase_Name

    Keyword    input_data (or) URL    driver_name

Based on the requirements, we have to write the test steps under the test case name with the Selenium Library Keywords and their respective Locators along with the test data.

In this example, we will be performing the test case with a limited set of Selenium Keywords in the robot framework. For more Selenium Library keywords, please visit their website.

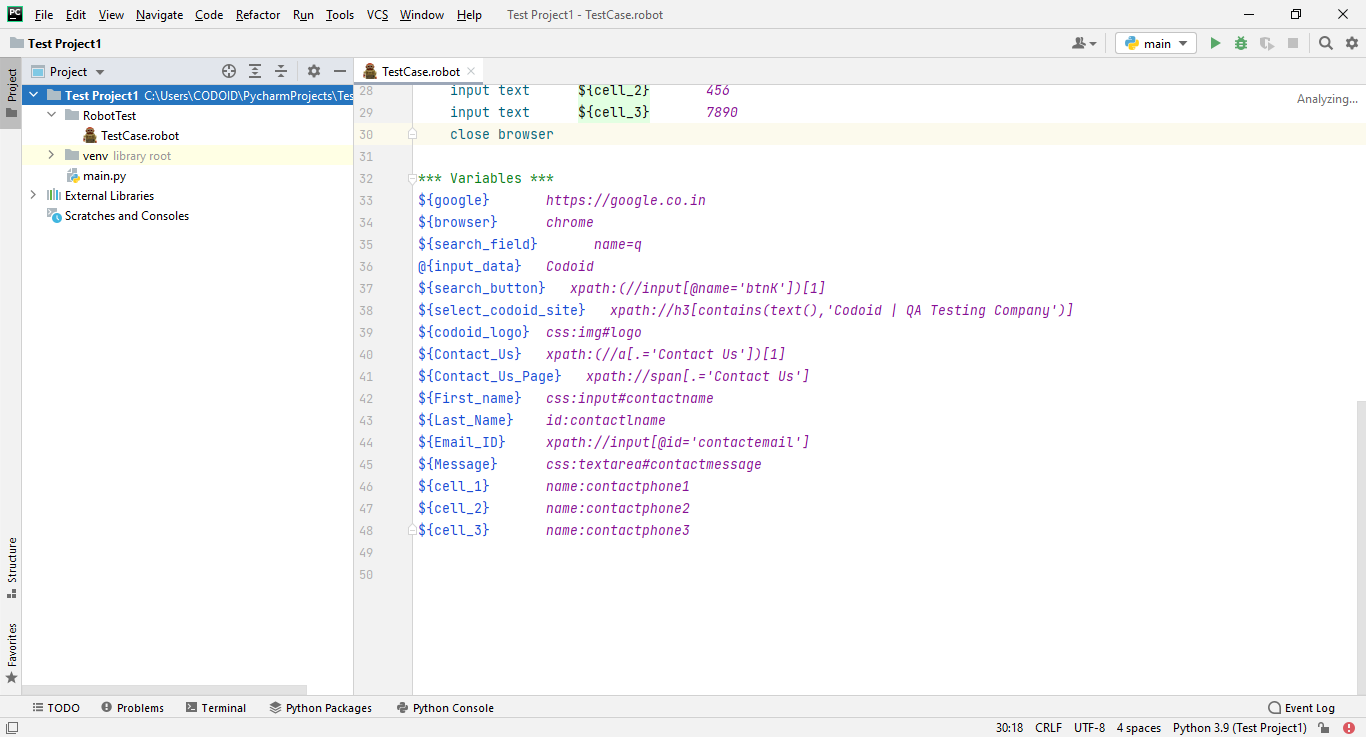

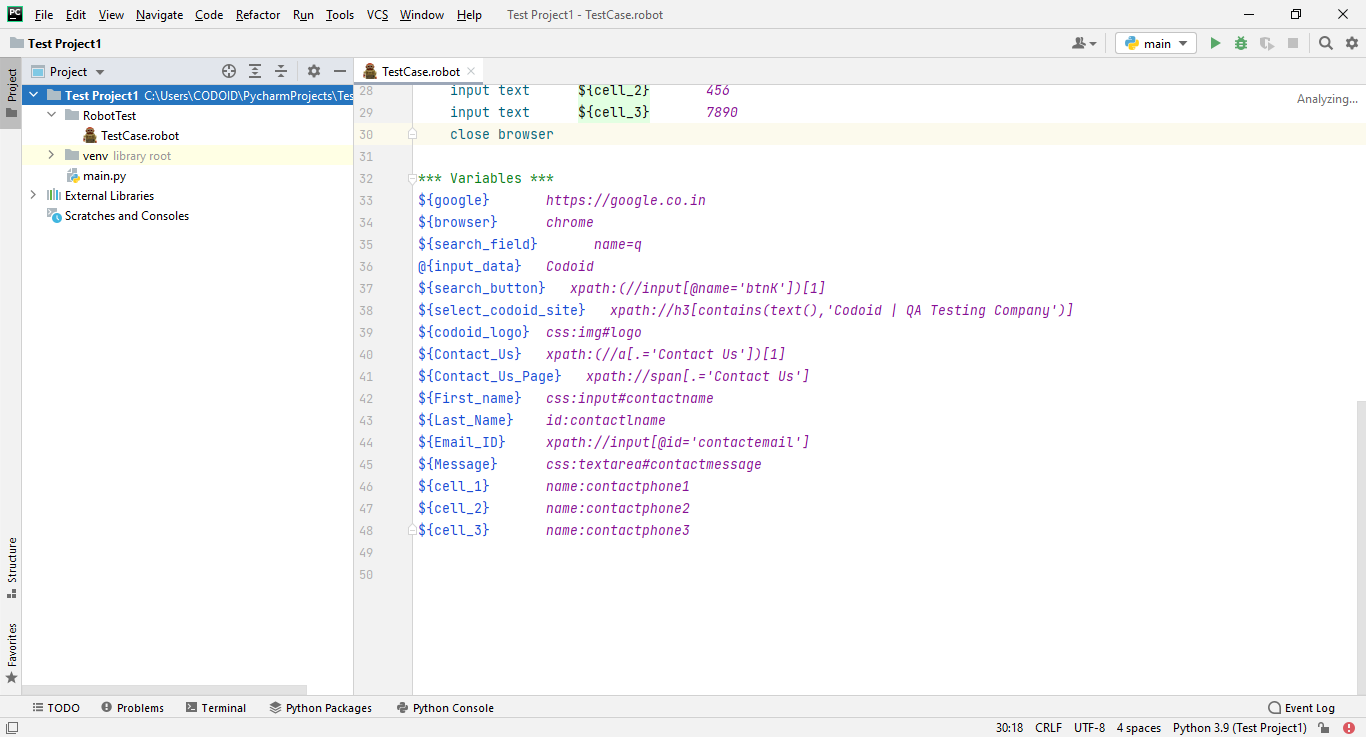

Step 3: Define the Variable

The variables are elements used to store values which can be referred to by other elements.

Syntax:

*** Variables ***

${URL}    url

@{UserCredentials}    inputs

Defined variables can be used in place of the actual values in the test cases, and we can reuse them anywhere in the test case when needed.

Apart from these, there are many other kinds of variables available in the Robot Framework. We have mentioned only the required ones here.

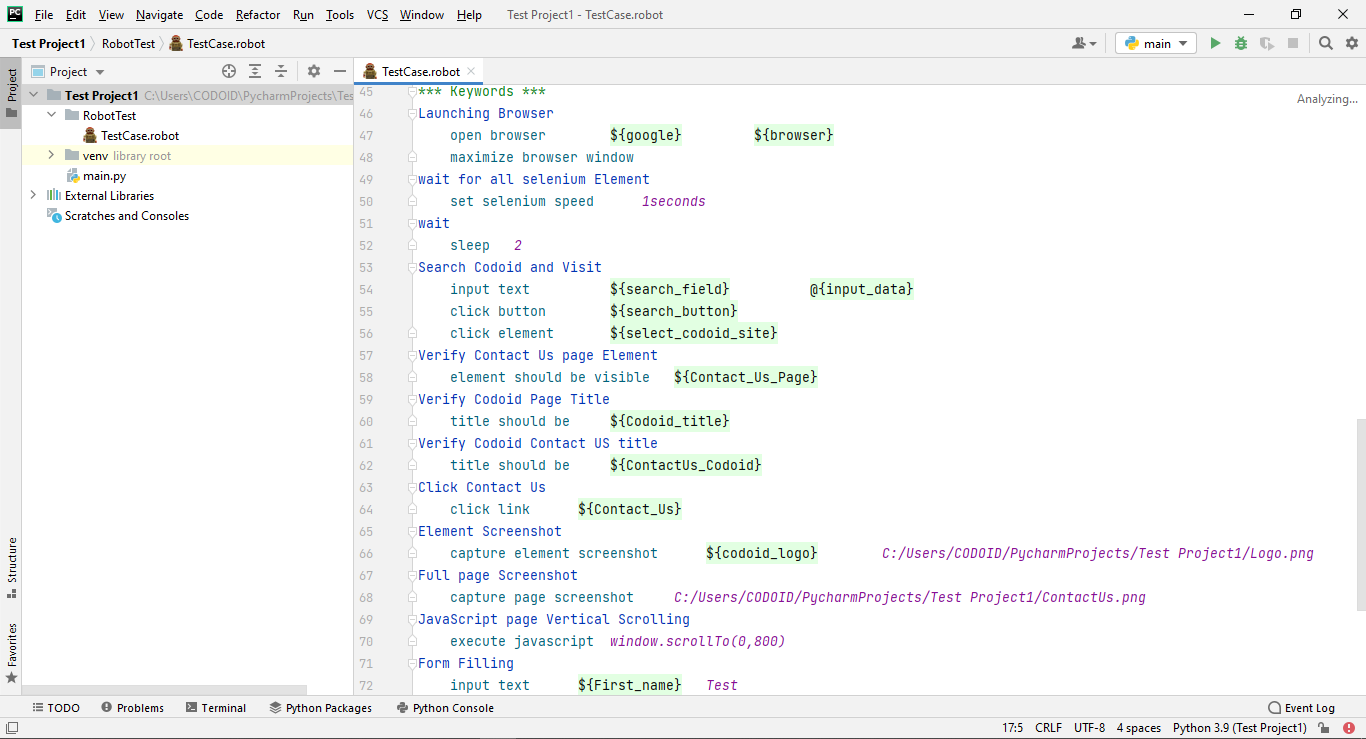

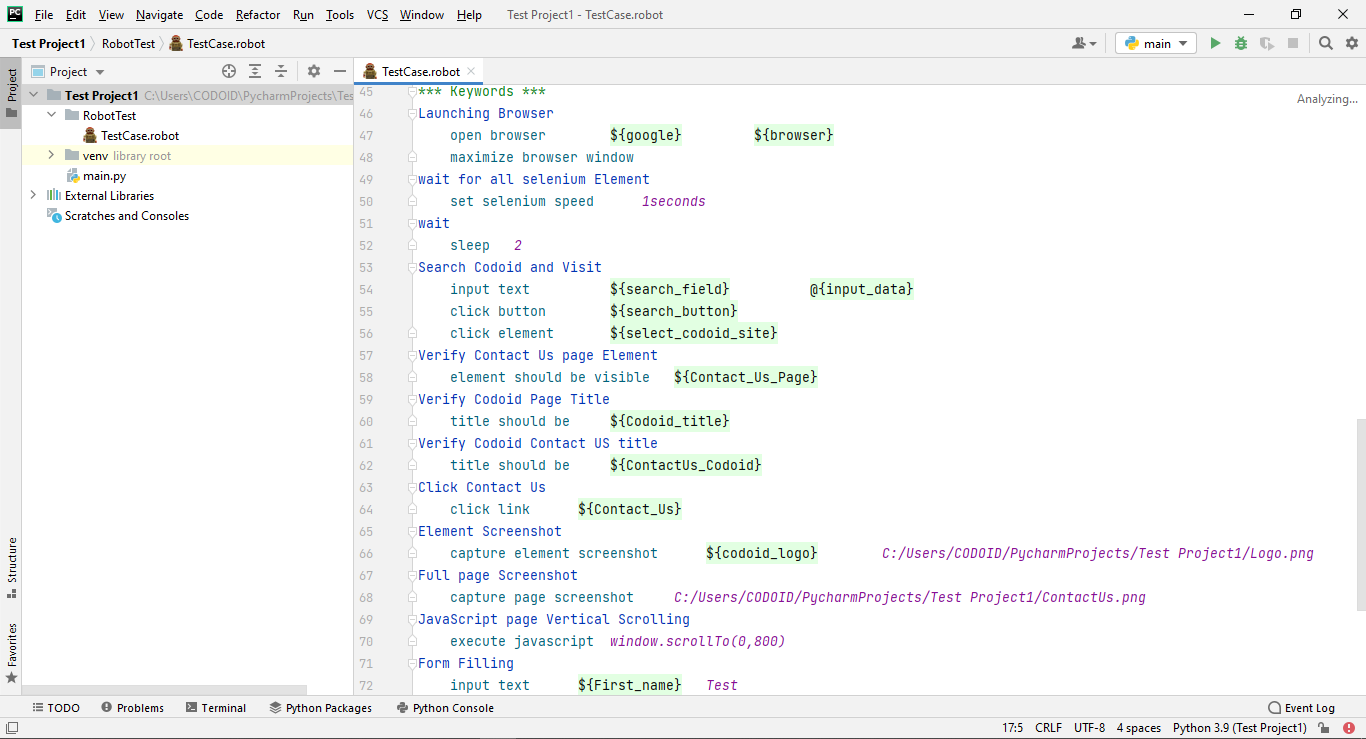

Step 4: Define the KEYWORDS

Since the keywords are reusable in the Robot framework, they help manage changes in a faster and more efficient manner. You can even create your own keywords here.

Syntax:

*** Keywords ***

Keyword_Name

    Selenium_keyword    locators    input_data

Once you write your own keyword, you can replace the test case steps with the keywords.
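To show how the four sections fit together, here is a minimal sketch of a complete “.robot” file, wrapped in a small Python script that writes it into a directory. Everything specific in it is an assumption made for illustration: the `https://example.com` URL, the test case and keyword names, and the `write_suite` helper are not taken from the project shown in the images. Only standard SeleniumLibrary keywords (Open Browser, Maximize Browser Window, Location Should Contain, Close Browser) are used.

```python
from pathlib import Path

# Hypothetical suite: the URL and all names below are placeholders.
# Every value is separated from the next one by four spaces.
SUITE_TEXT = """\
*** Settings ***
Library    SeleniumLibrary

*** Variables ***
${URL}        https://example.com
${BROWSER}    chrome

*** Test Cases ***
Visit The Website
    Open Site
    Location Should Contain    example.com
    Close Browser

*** Keywords ***
Open Site
    Open Browser    ${URL}    ${BROWSER}
    Maximize Browser Window
"""


def write_suite(directory, file_name="demo.robot"):
    """Create the directory if needed and write the suite file into it."""
    target = Path(directory) / file_name
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(SUITE_TEXT, encoding="utf-8")
    return target
```

Calling `write_suite("TestCases")` from the project root would create TestCases/demo.robot. The test case calls the user-defined “Open Site” keyword, which in turn runs the two Selenium Library keywords listed under it.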

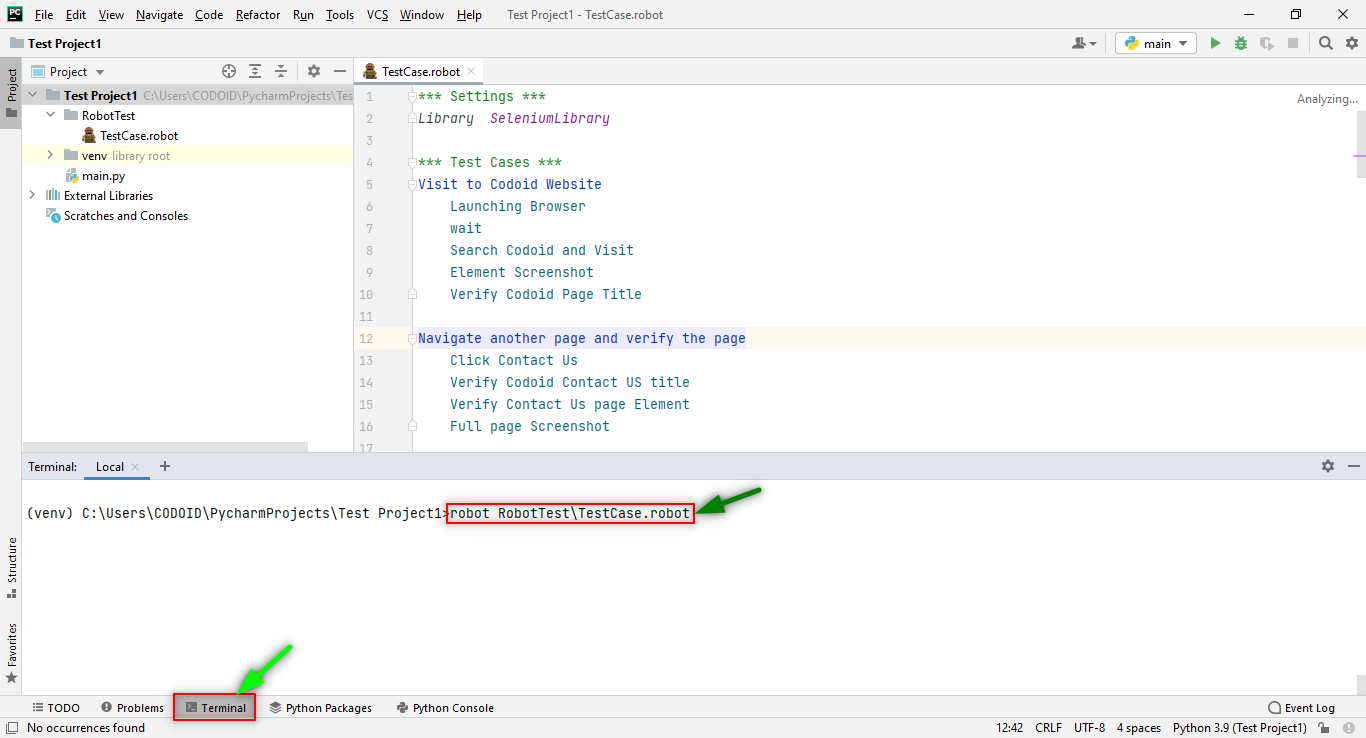

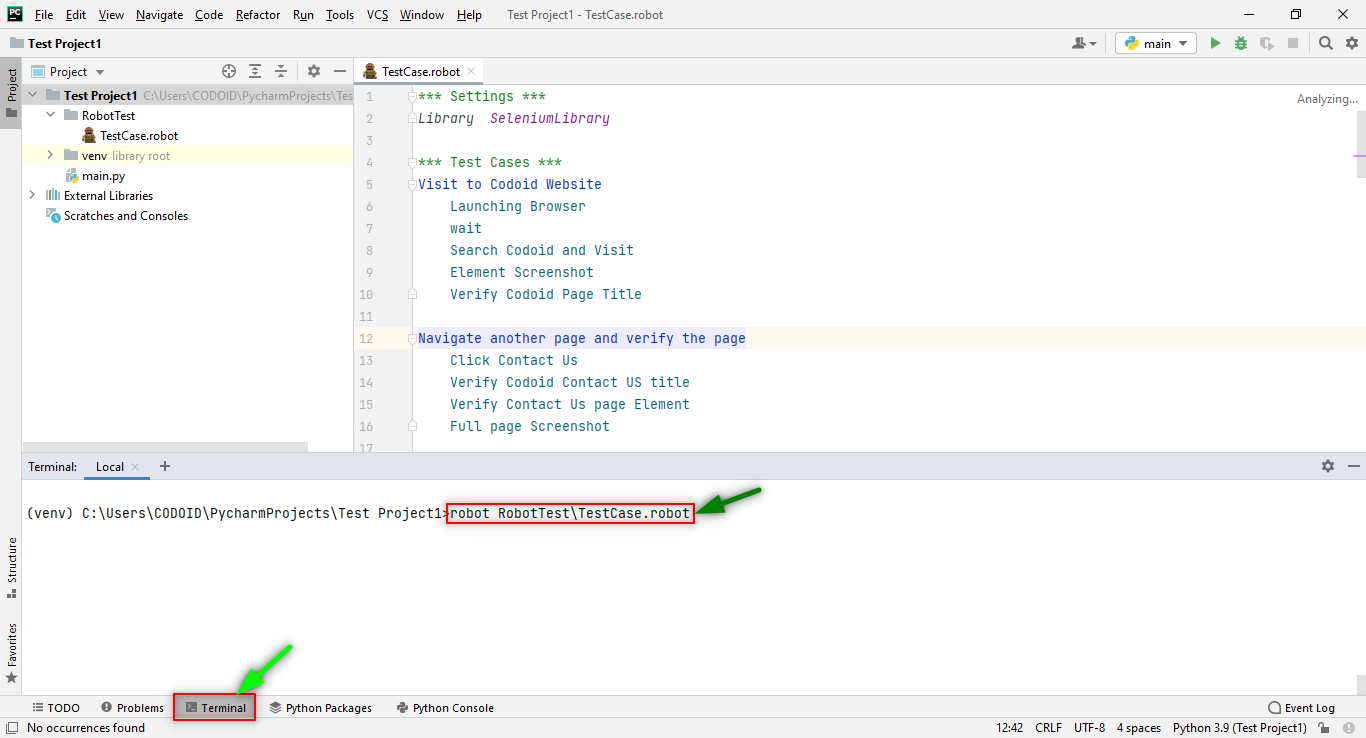

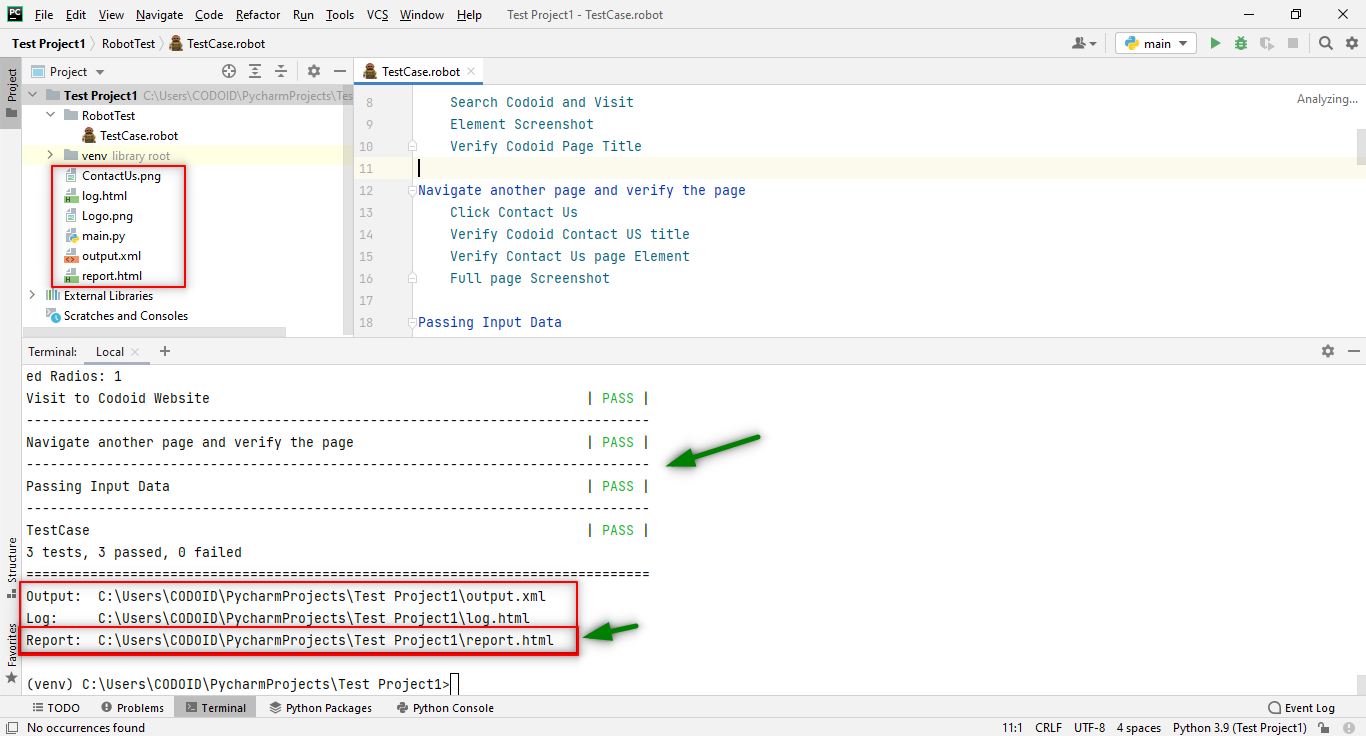

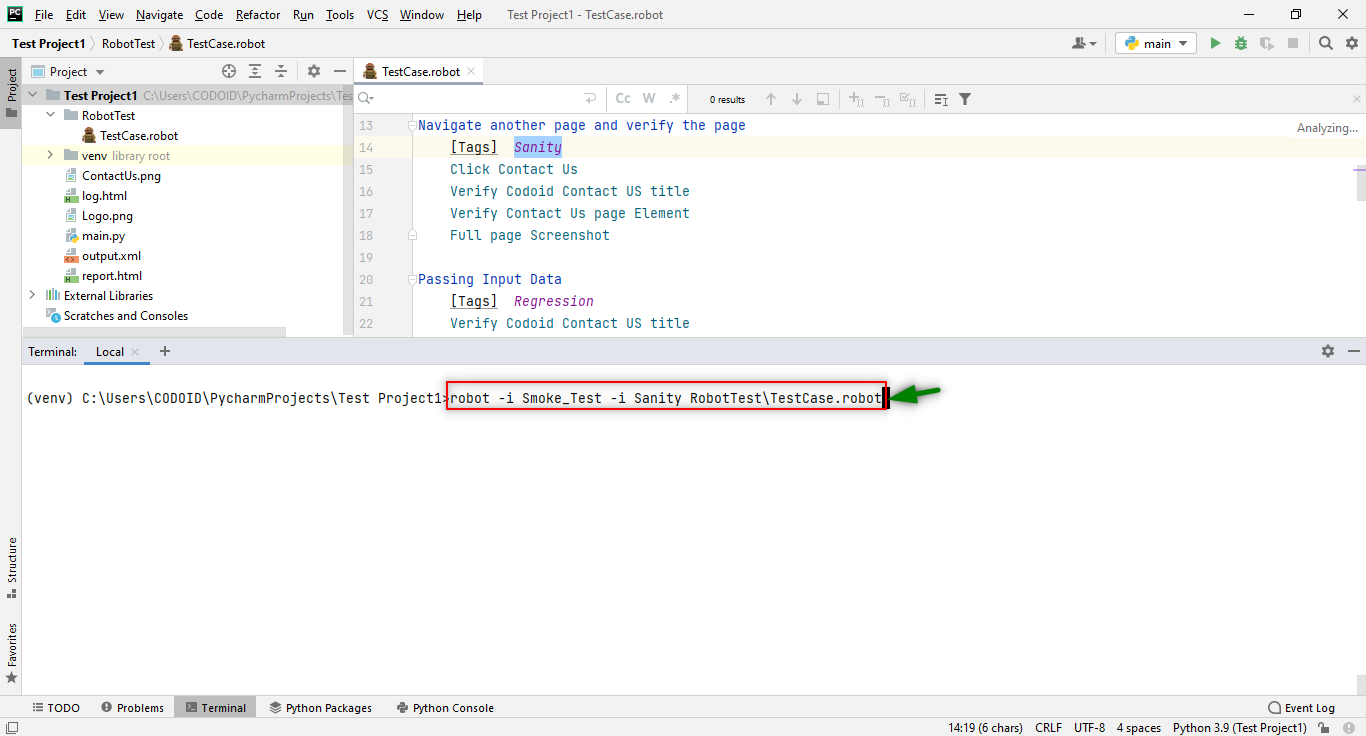

Even once all of this is done, we won’t be able to run our Robot framework project directly from the PyCharm editor. We would have to go to the Terminal and use the below command

robot directory_name\file_name.robot

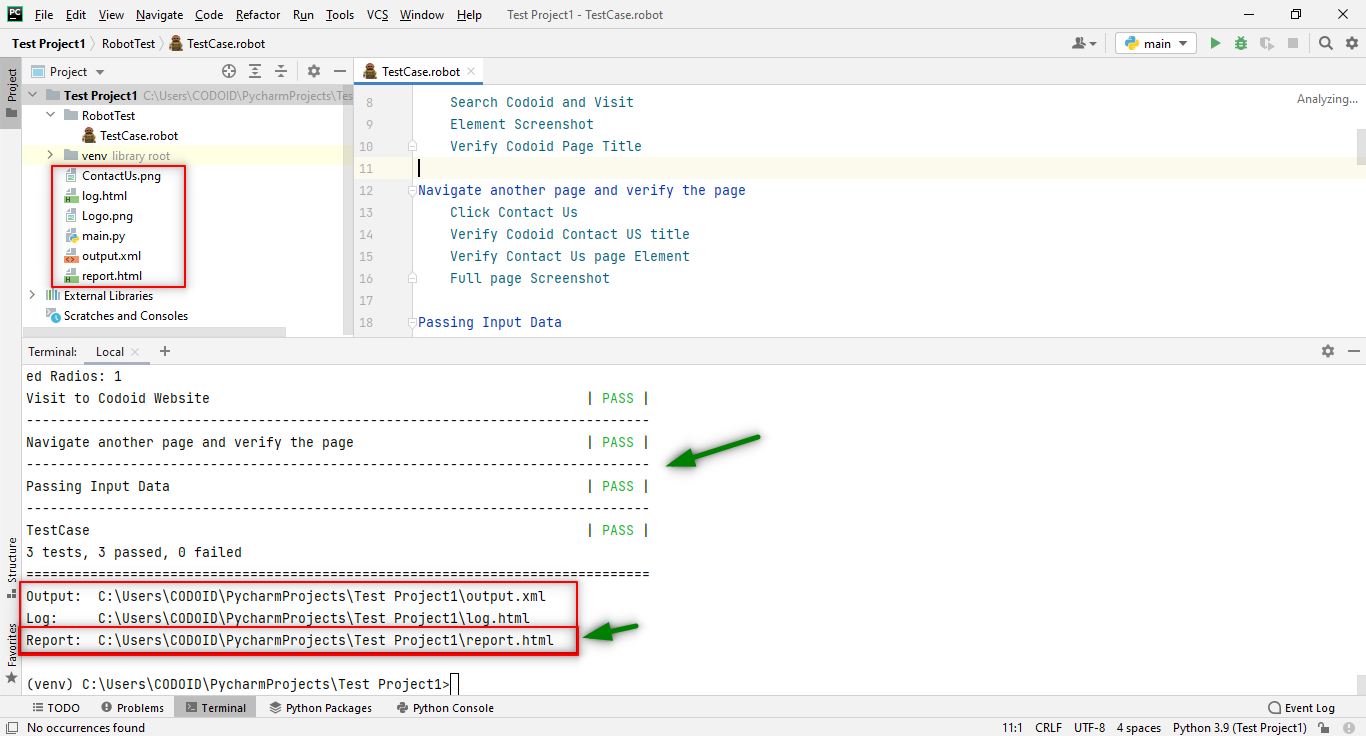

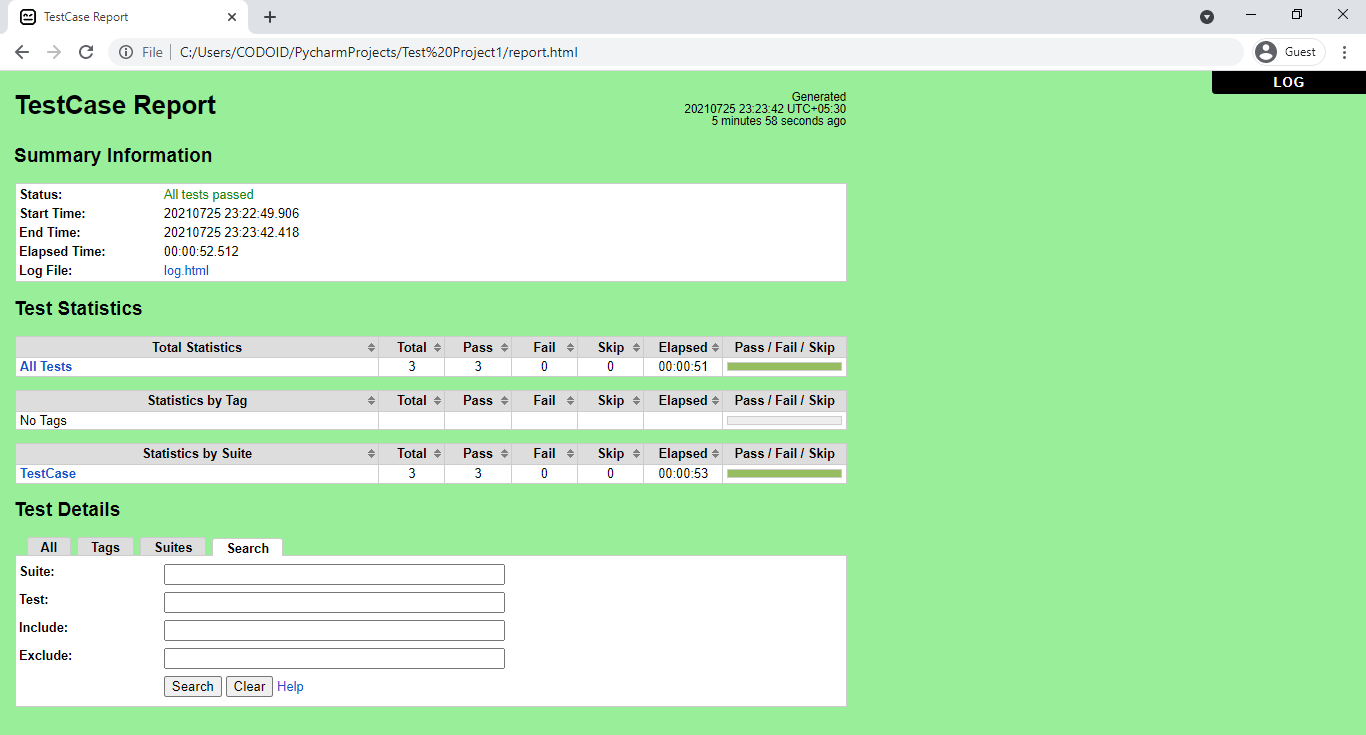

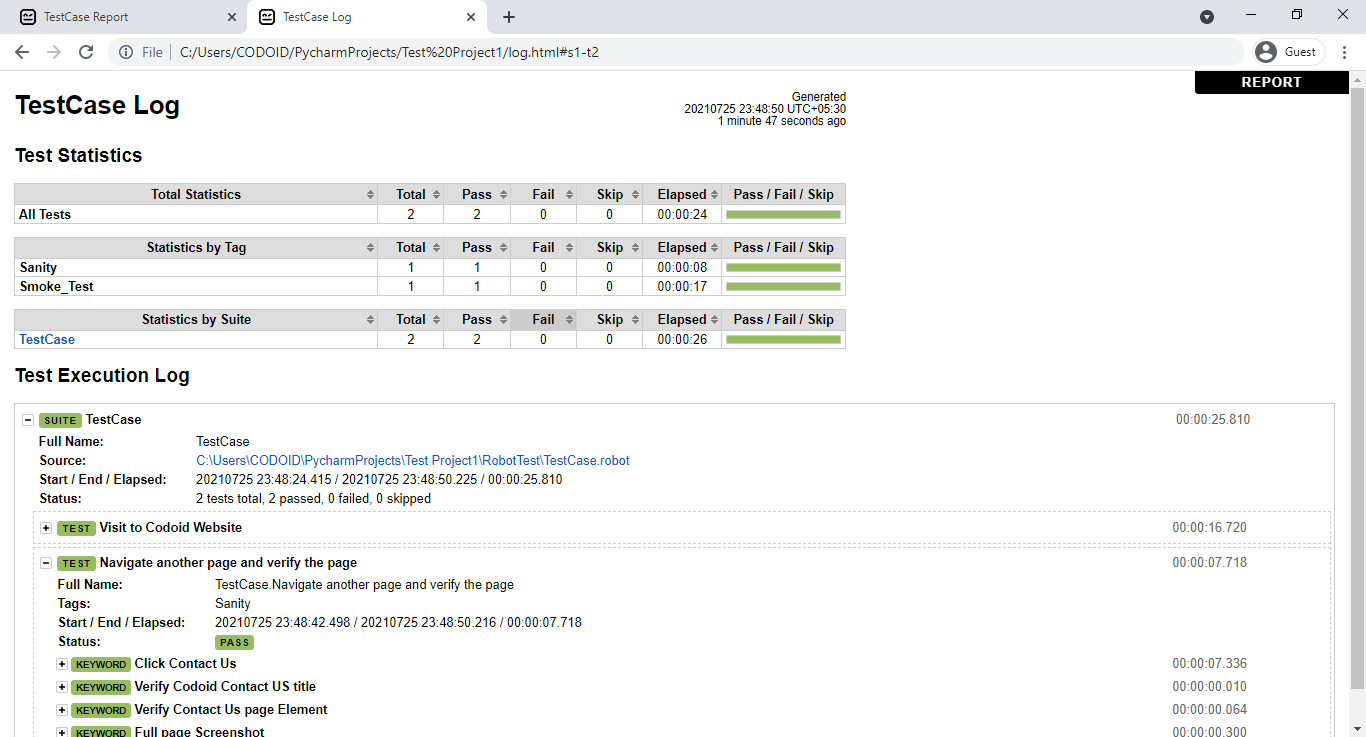

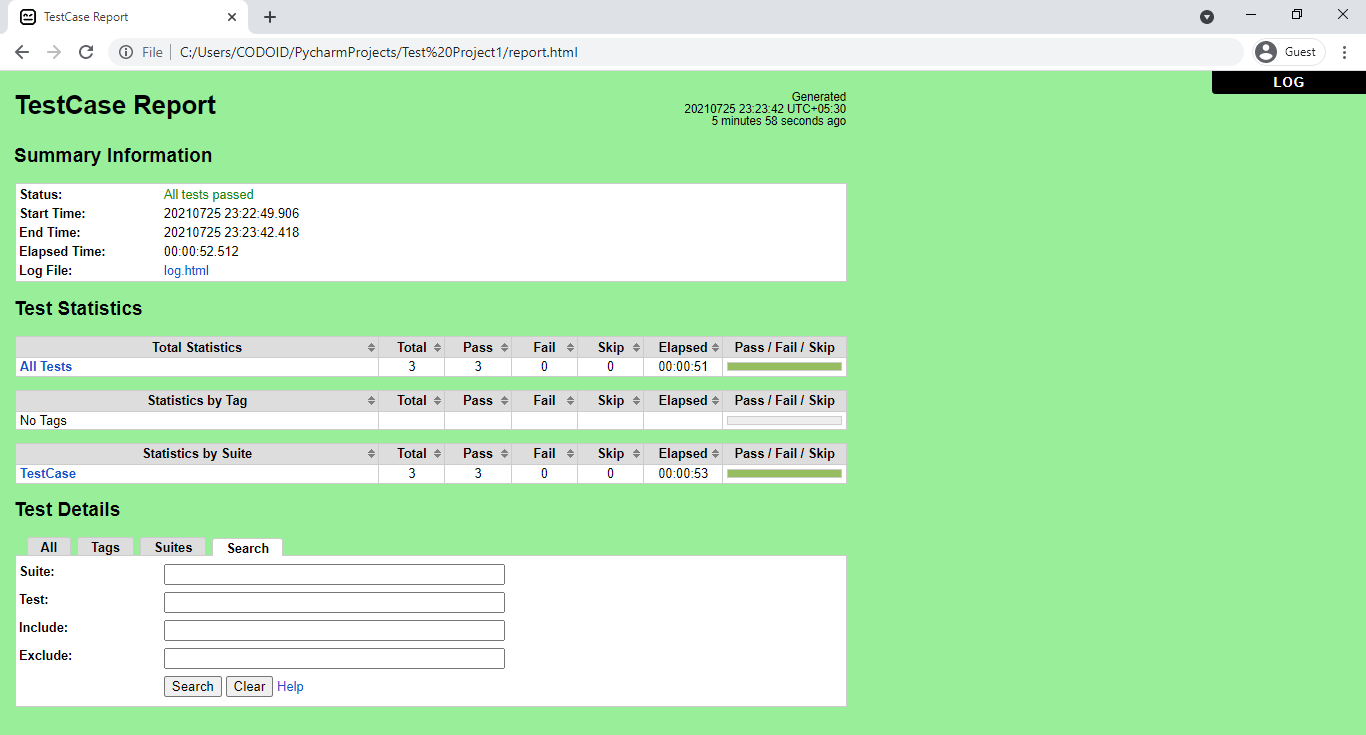

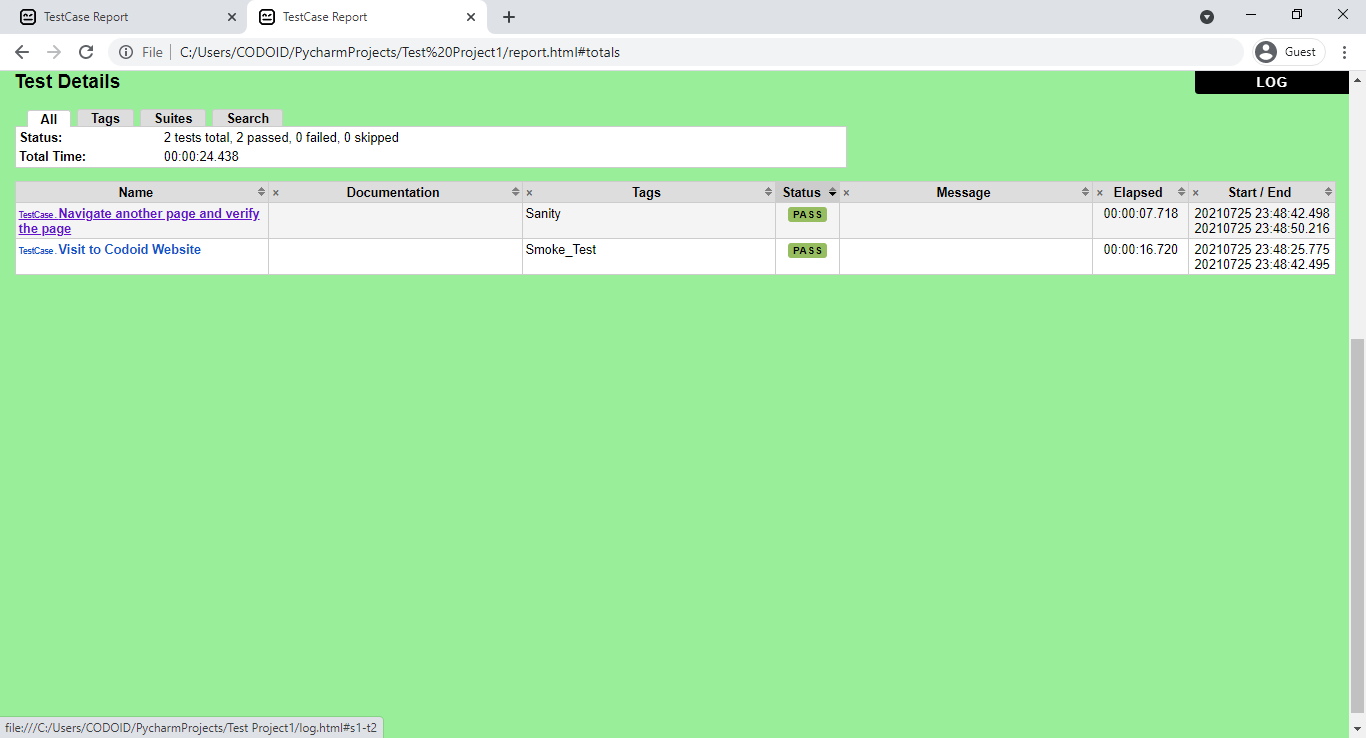

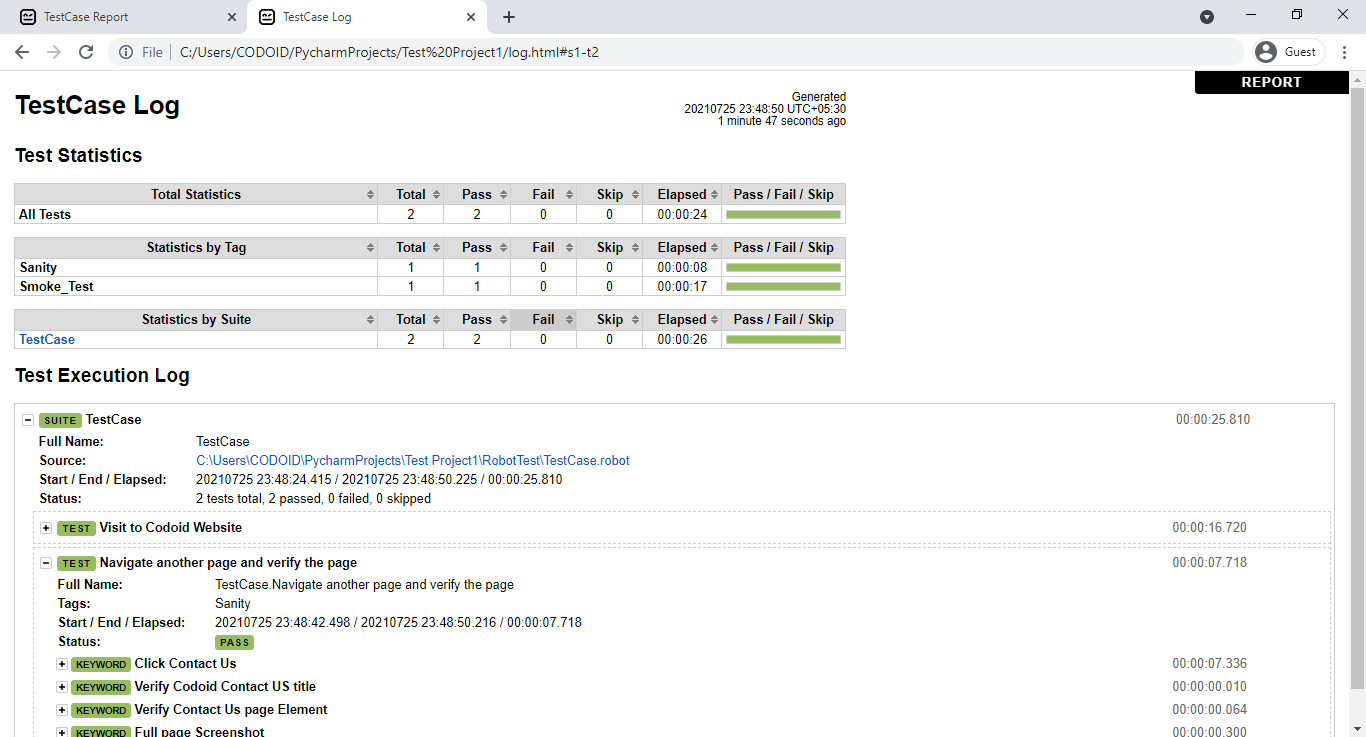

Once the project has been successfully executed, the output.xml, the log.html, and the report.html files along with the screenshots for each test case will be automatically created in our project structure. So we can copy the path of the report.html or log.html file and paste it into any browser to check the report of the execution of our project.
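If you prefer not to copy the path by hand, the result files can be located and opened from Python. The following is a sketch only: it assumes the Robot Framework's default result file names (output.xml, log.html, and report.html) and that they were written to the directory you pass in. `find_results` and `open_report` are helper names of our own.

```python
import webbrowser
from pathlib import Path

RESULT_FILES = ("output.xml", "log.html", "report.html")


def find_results(output_dir="."):
    """Map each default result file name to its path, if the file exists."""
    base = Path(output_dir)
    return {name: base / name for name in RESULT_FILES if (base / name).is_file()}


def open_report(output_dir="."):
    """Open report.html in the default browser; return False if it is missing."""
    report = find_results(output_dir).get("report.html")
    if report is None:
        return False
    return webbrowser.open(report.resolve().as_uri())
```

Calling `open_report()` from the directory where the robot command was executed should bring up the same report that you would otherwise open manually.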

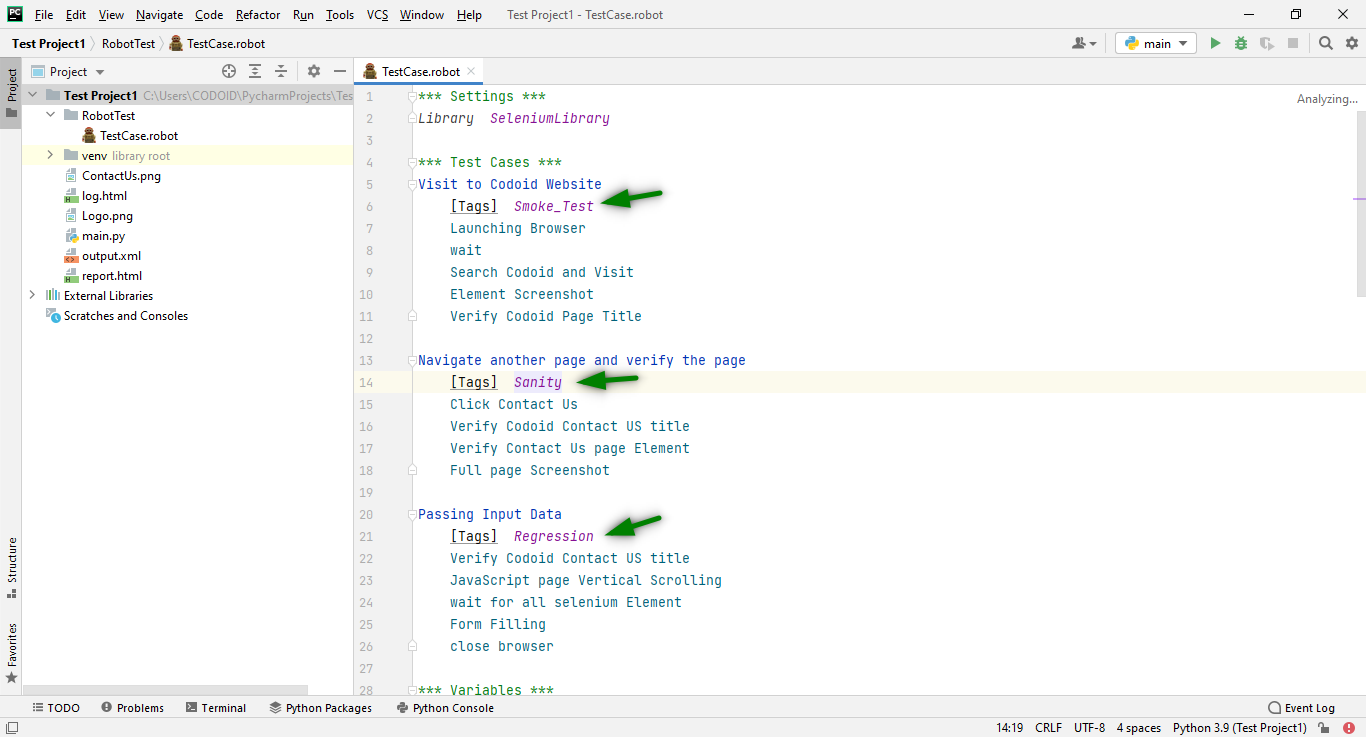

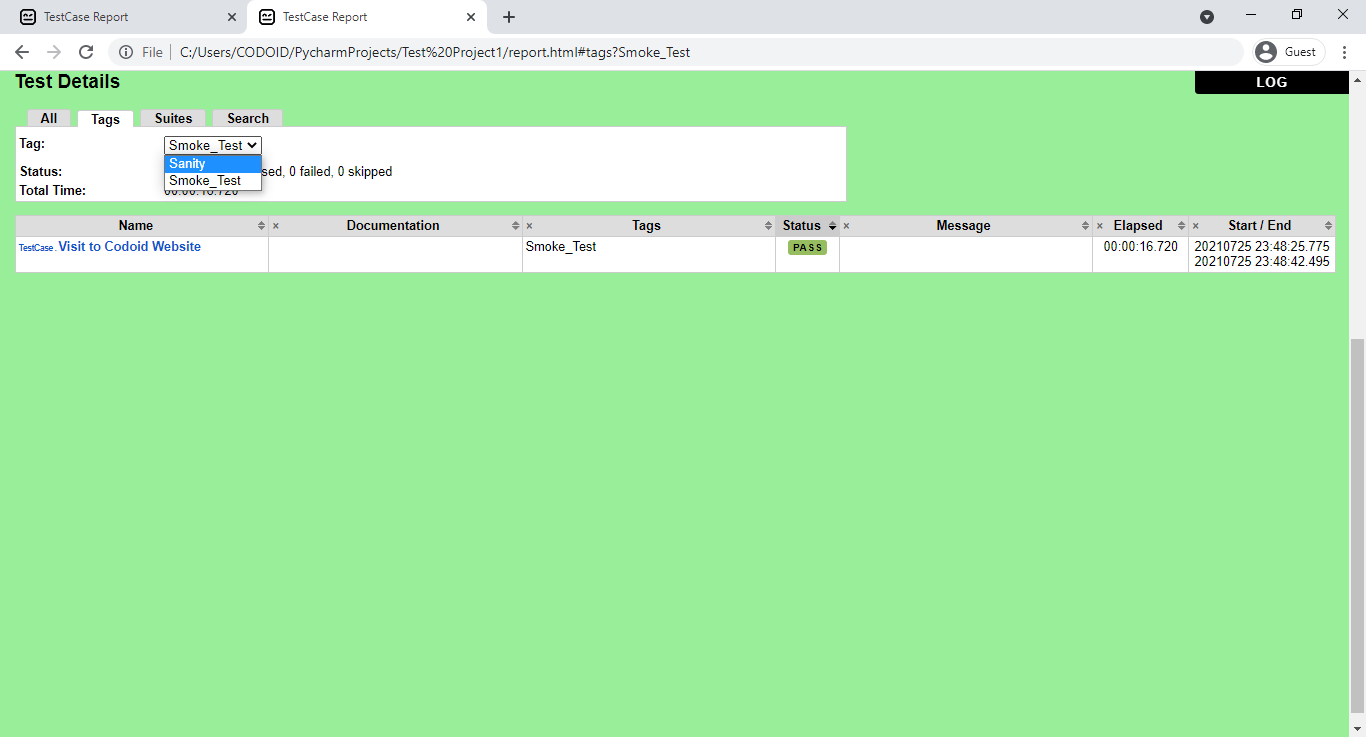

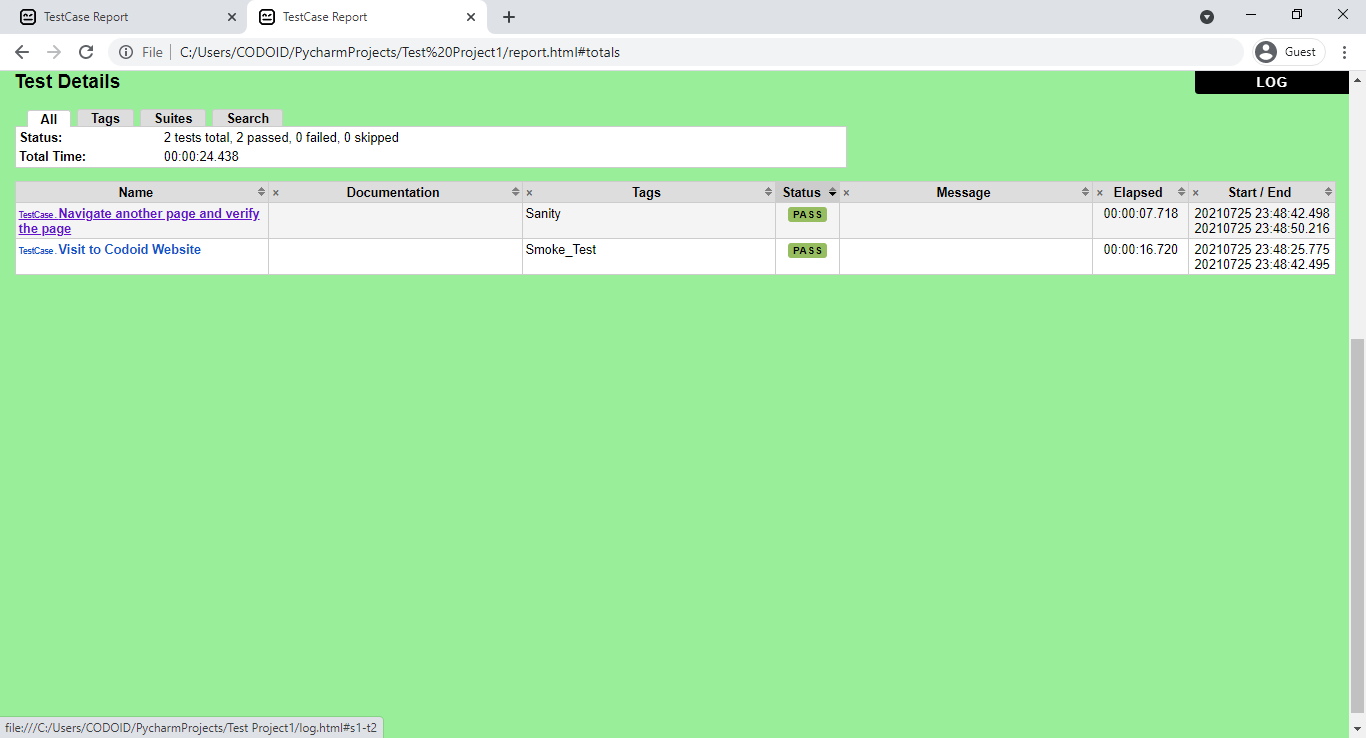

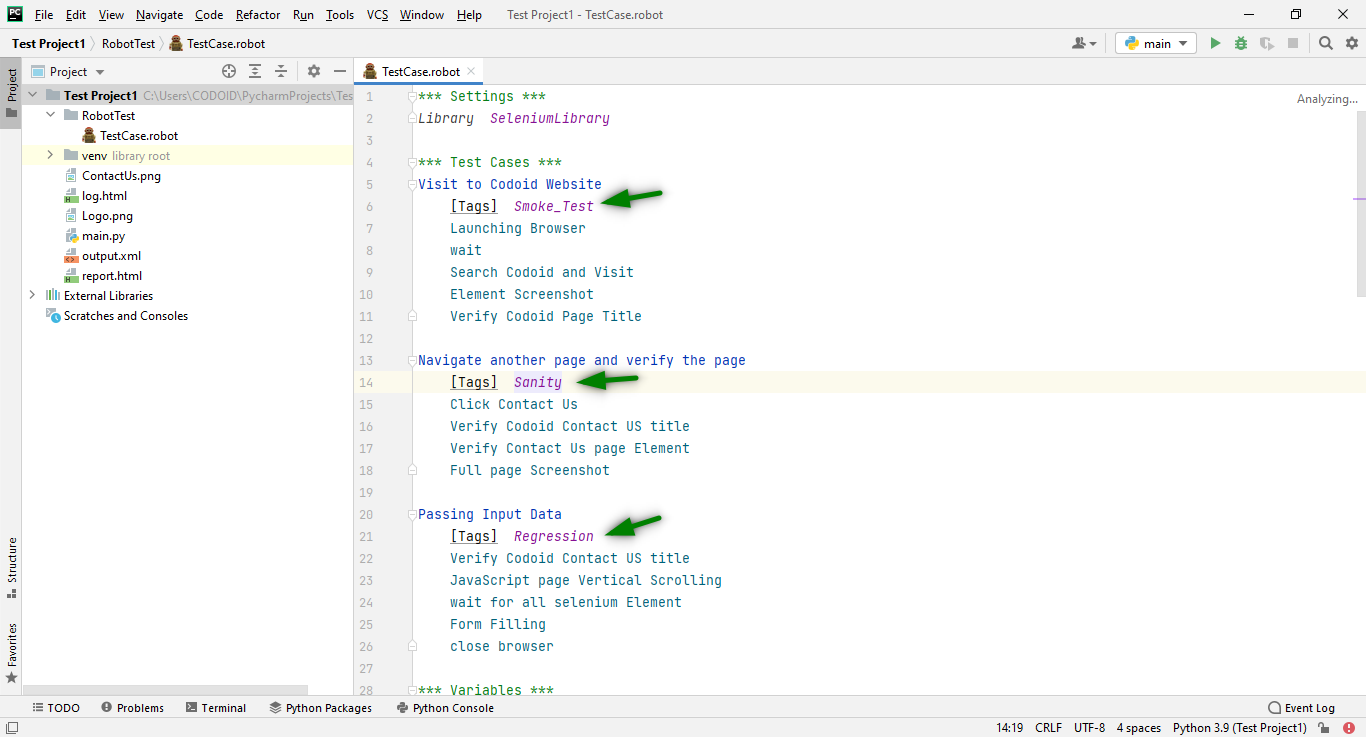

Here we have executed all the test cases. But if you are looking to run only selected test cases, you can do so by using Tags. You should include the tags, with names of your choice, inside the test cases section under the test case name as shown in the below image.

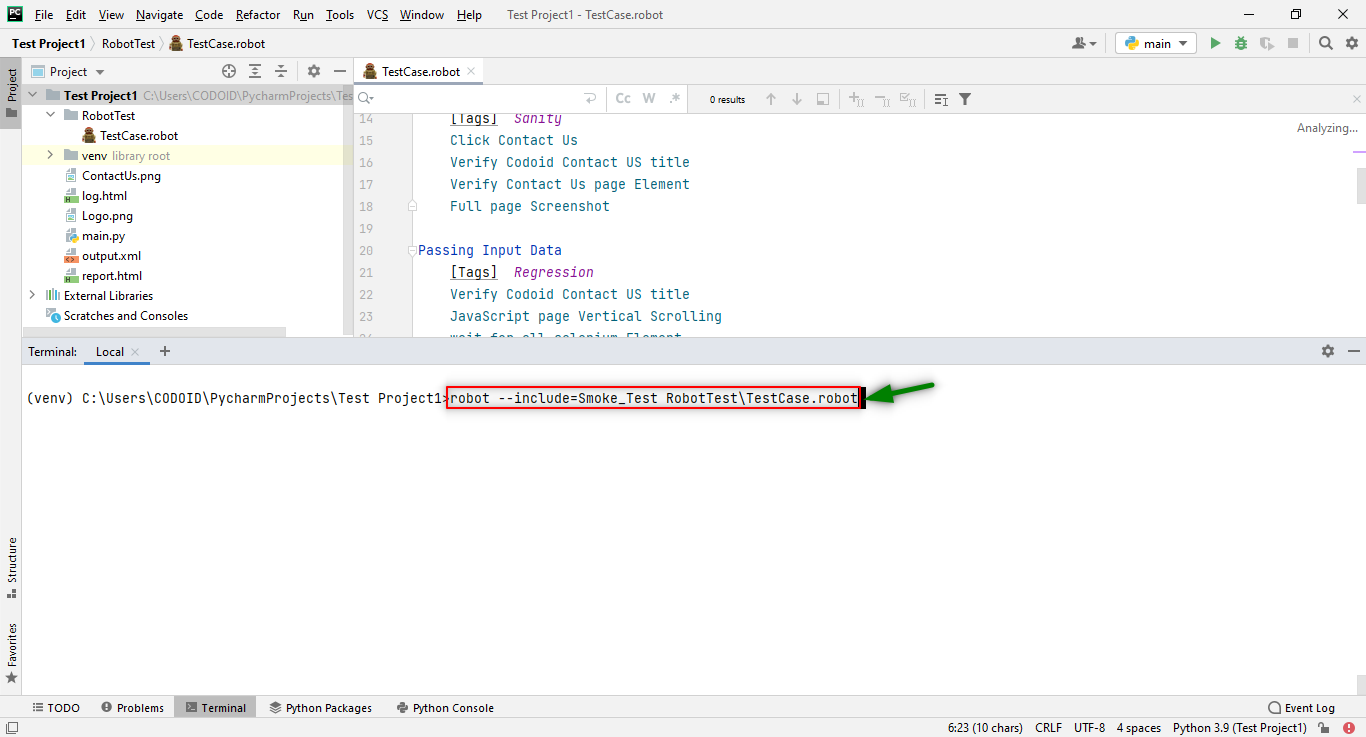

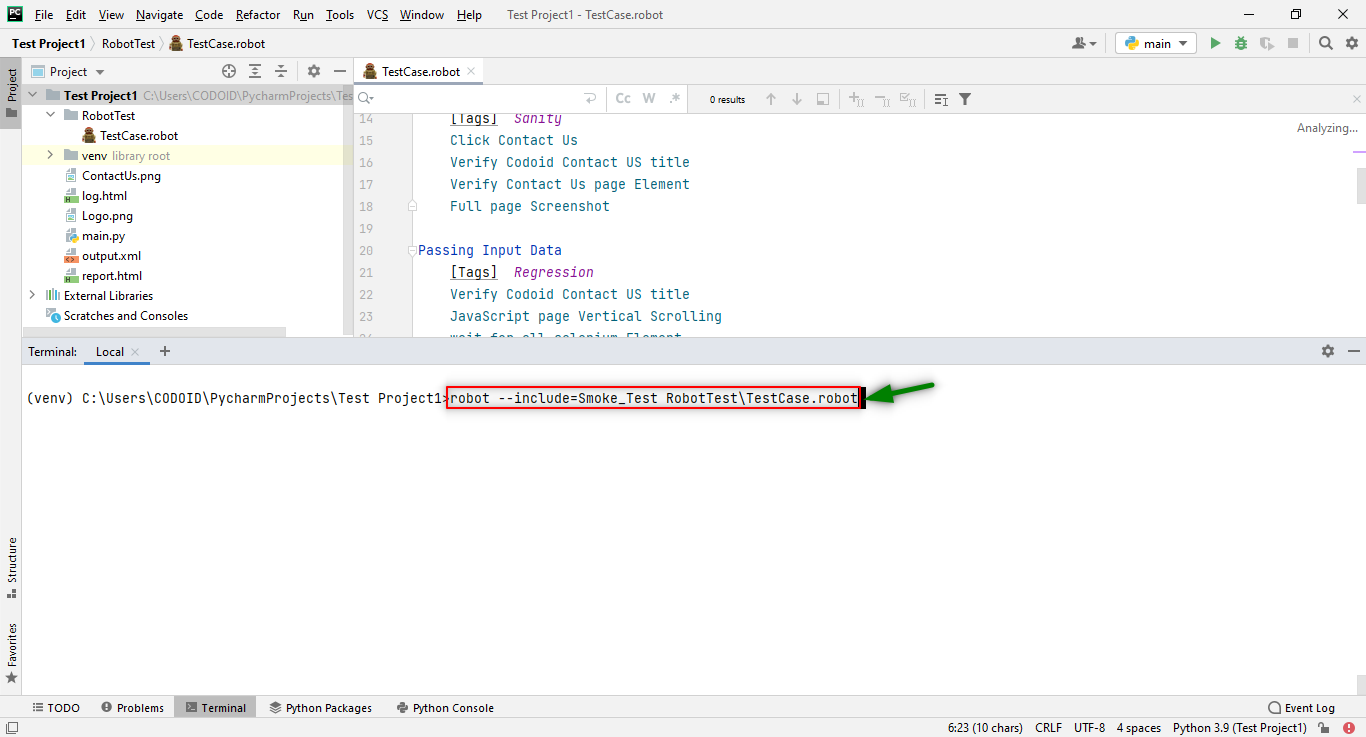

You can run either one test case or a particular set of test cases by using these commands in the Terminal.

For one particular Tag:

robot --include=Tag_name directory_name\file_name.robot

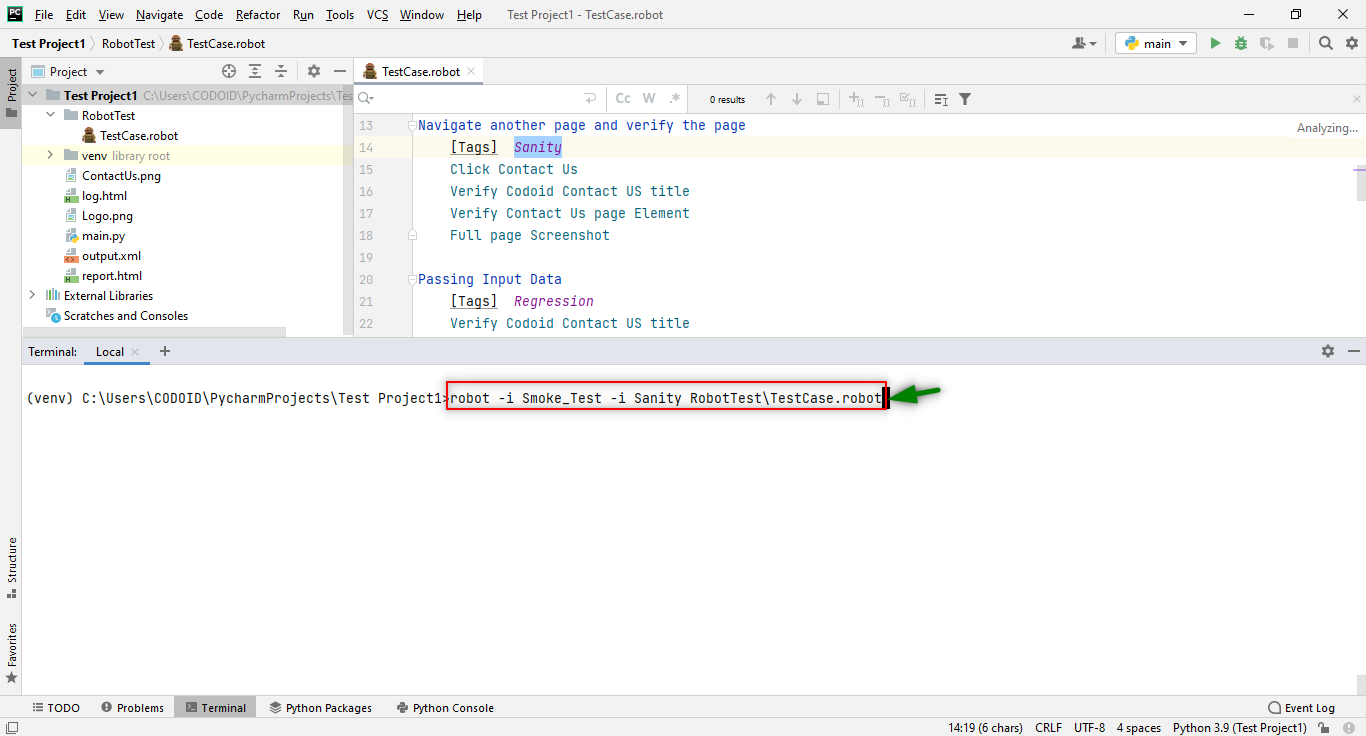

For Two Tags at a Time:

robot -i Tag_name1 -i Tag_name2 directory_name\file_name.robot

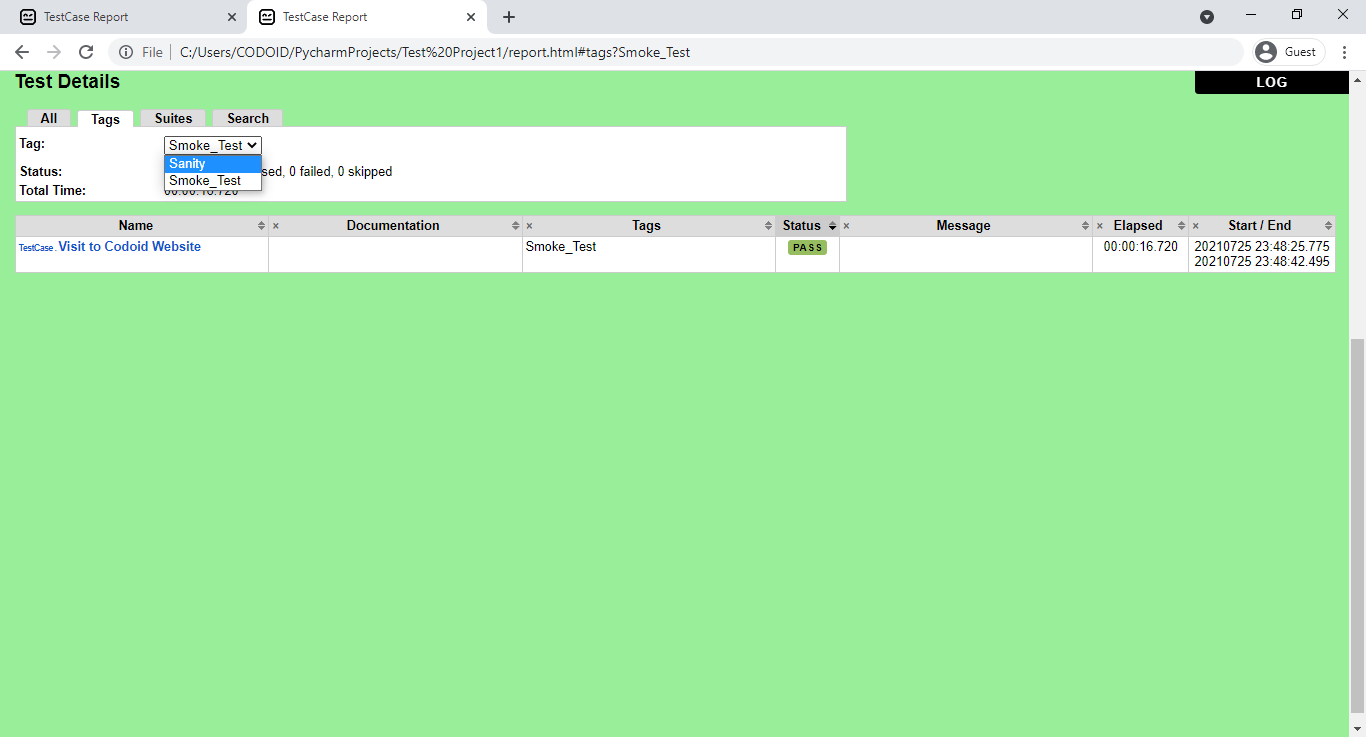
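When the list of tags changes often, the command can be assembled in Python instead of being typed each time. This is a minimal sketch under a few assumptions: the robot command is available on the PATH, and the tag names and file path you pass in are your own. `build_robot_command` and `run_suite` are hypothetical helper names.

```python
import shutil
import subprocess


def build_robot_command(suite_path, include_tags=()):
    """Build the argument list for the robot command-line runner."""
    command = ["robot"]
    for tag in include_tags:
        command += ["--include", tag]  # long form of the -i option
    command.append(suite_path)
    return command


def run_suite(suite_path, include_tags=()):
    """Run the suite and return robot's exit code (0 means all tests passed)."""
    if shutil.which("robot") is None:
        raise RuntimeError("robot is not on PATH; is Robot Framework installed?")
    return subprocess.run(build_robot_command(suite_path, include_tags)).returncode
```

For example, `build_robot_command("TestCases/demo.robot", ["smoke", "regression"])` produces the same invocation as the two-tag command above, with the long option name in place of -i.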

So using tags you will be able to get more concise reports as you can execute the test cases based on priorities. Refer to the following images to get a better understanding of what we mean.

Conclusion:

We hope you have found our end-to-end Robot framework tutorial to be useful as the Robot framework is an easy-to-understand open-source tool that provides a modular interface to build custom automation test cases based on your varying requirements. So it has been widely adopted by various large organizations for this very purpose, and for good reason.