by admin | Nov 19, 2021 | Performance Testing, Blog, Latest Post |

If your application is built around HTTP, Gatling is one of the best performance testing tools you can use for load generation. Gatling is an open-source load and performance testing framework based on Scala, Akka, and Netty. Although test scripts are written in a Scala-based domain-specific language, the tool also provides a graphical user interface that allows us to record a scenario. When we finish recording, the GUI generates the Scala script that represents the simulation. Excited to learn how to master a tool with features like the Gatling Recorder, along with a guide to creating a new Maven project? You’re at the right place, as we are one of the leading QA Companies with a lot of experience in using Gatling to its full potential.

Features of Gatling:

Let’s kickstart this blog by exploring the prominent features of Gatling.

Excellent HTTP protocol support – This makes Gatling a great choice for load testing web applications or calling APIs directly.

Scala simulation scripts – All Gatling scripts are written in Scala, which gives you great flexibility when writing load testing scenarios.

The Gatling Recorder – The Gatling Recorder helps convert the flow of an application into a Gatling scenario. It is especially useful when you are dealing with a complex web application.

The code can be kept in a version control system – As Gatling load test scenarios are written in Scala, they can easily be stored in a version control system, which simplifies the maintenance of scenario code.

Why Gatling?

Now that we have seen the main features of Gatling, let’s explore the other real-world benefits Gatling offers. Gatling is an excellent tool for load and stress testing because it can create several thousand concurrent users from a single JVM. That also makes it a good fit for continuous integration, as you don’t have to set up a distributed network of machines to perform testing. Apart from that, it is useful if you want to write your own code rather than just record scripts. Now let’s see how to install Gatling.

Installing Gatling from Website

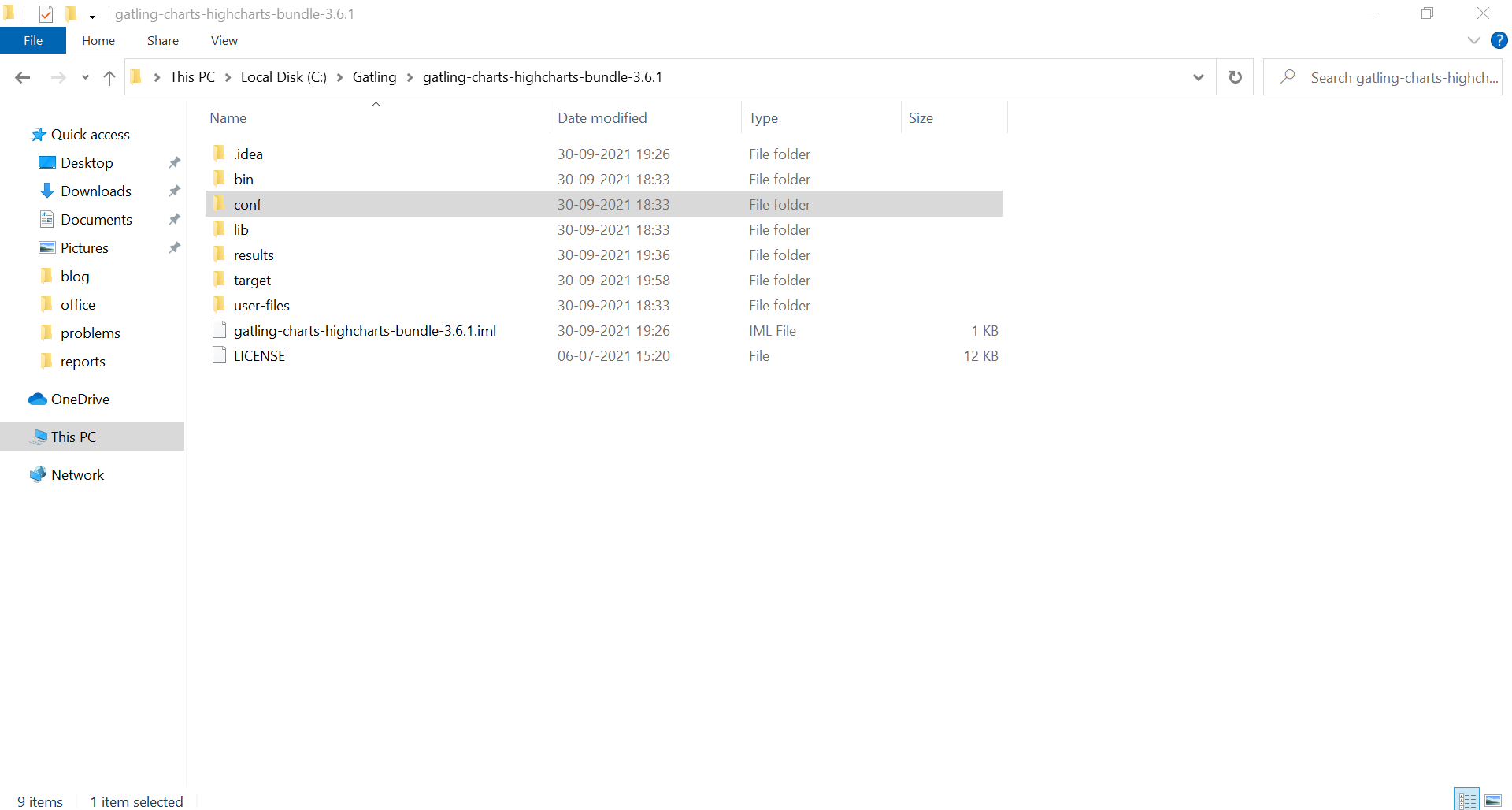

First and foremost, you have to download the Gatling performance testing tool from the official website. You will find two download options there: the open-source version and the Enterprise edition. We will be using the open-source version for this blog, so you can go ahead and download that. The Enterprise edition has a few additional features that you can explore once you are well-versed with the free version. Once your download has completed, open the folder and unzip it.

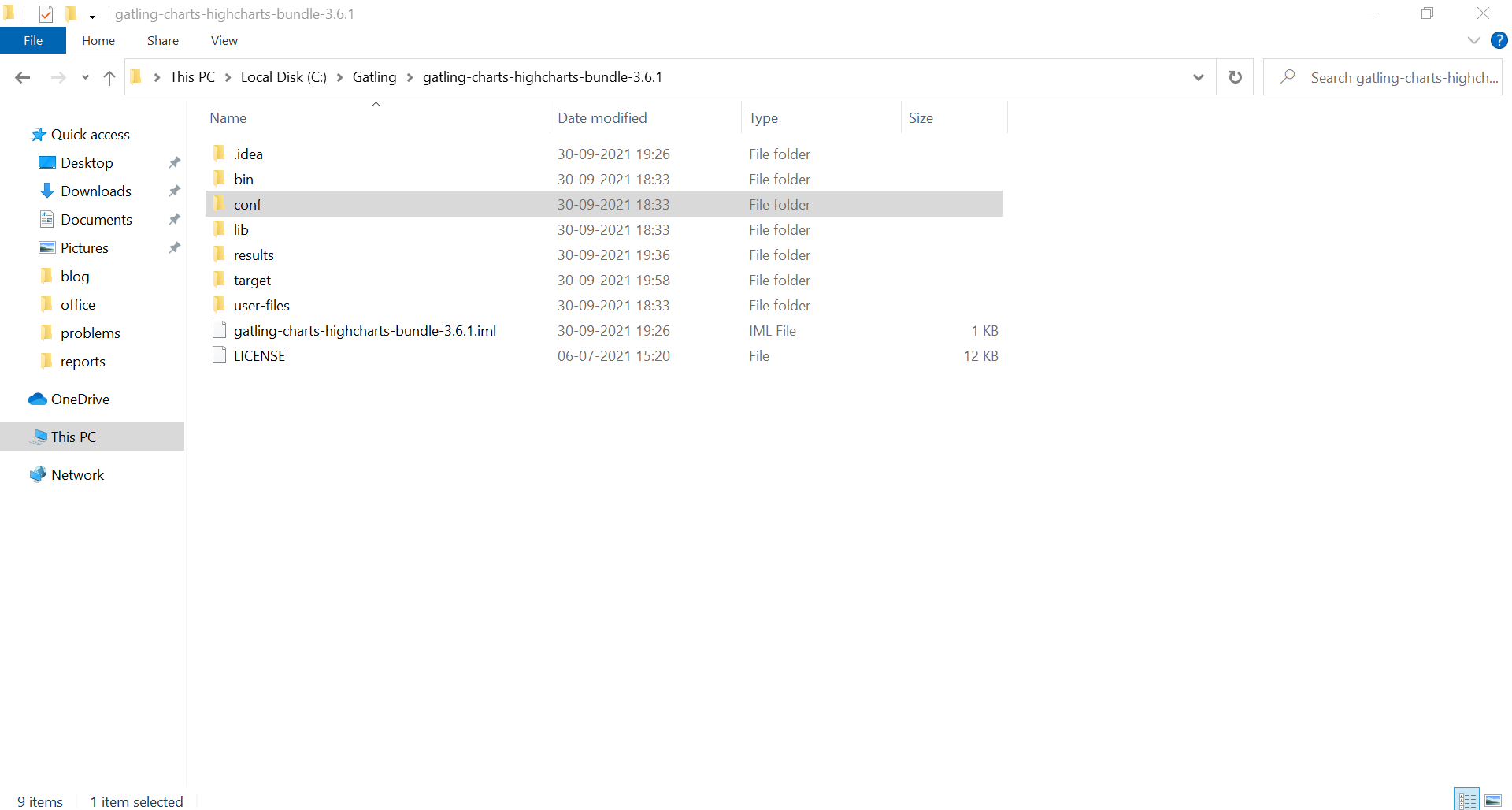

Gatling can be installed on both Windows and macOS, but in either case you must have a JDK installed to run it. The version used here requires JDK 8.

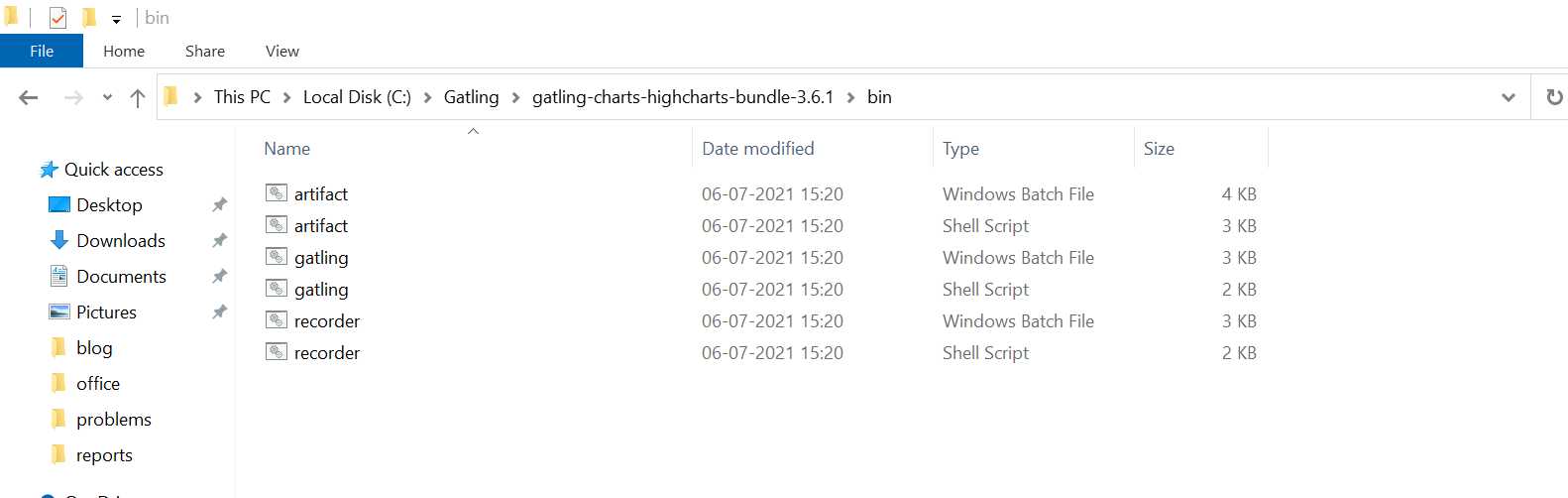

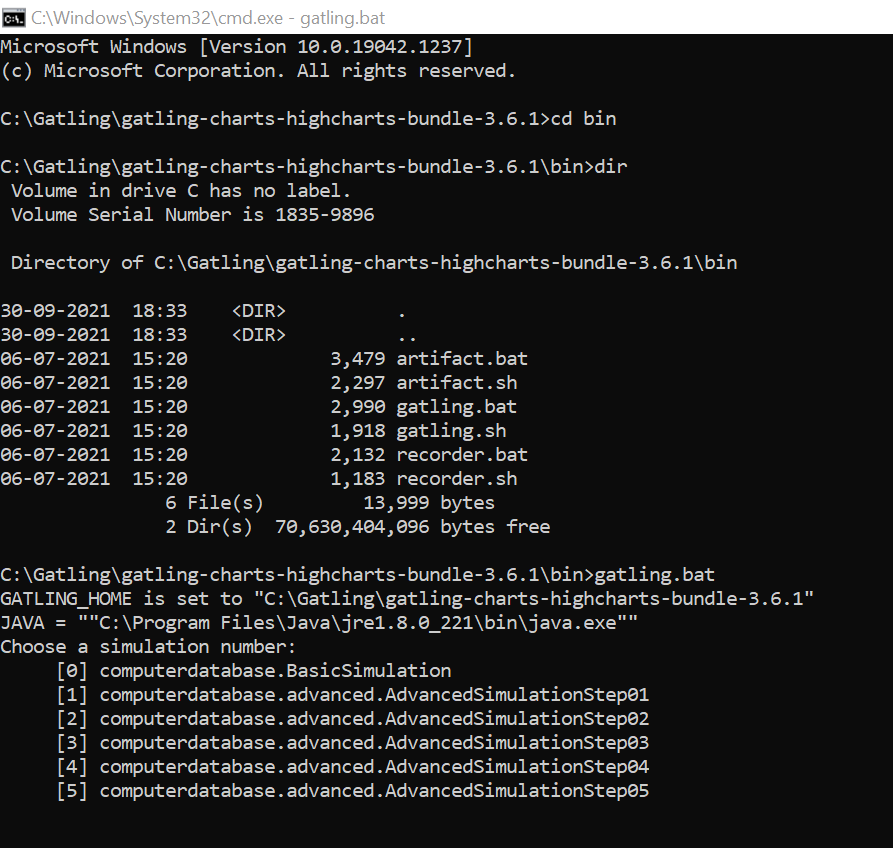

Before we start, make sure to go through the Gatling manual to verify that you have all the necessary prerequisites ready. Then go to the bin folder inside the unzipped Gatling folder to launch Gatling from there. You could also do this from the command prompt.

You could use gatling.sh if you’re a Mac user. But since we’re on Windows, we’ll be using gatling.bat. The tool will start up and run once you double-click on gatling.bat. We will also be able to run a few example scripts that Gatling provides in the user-files directory.

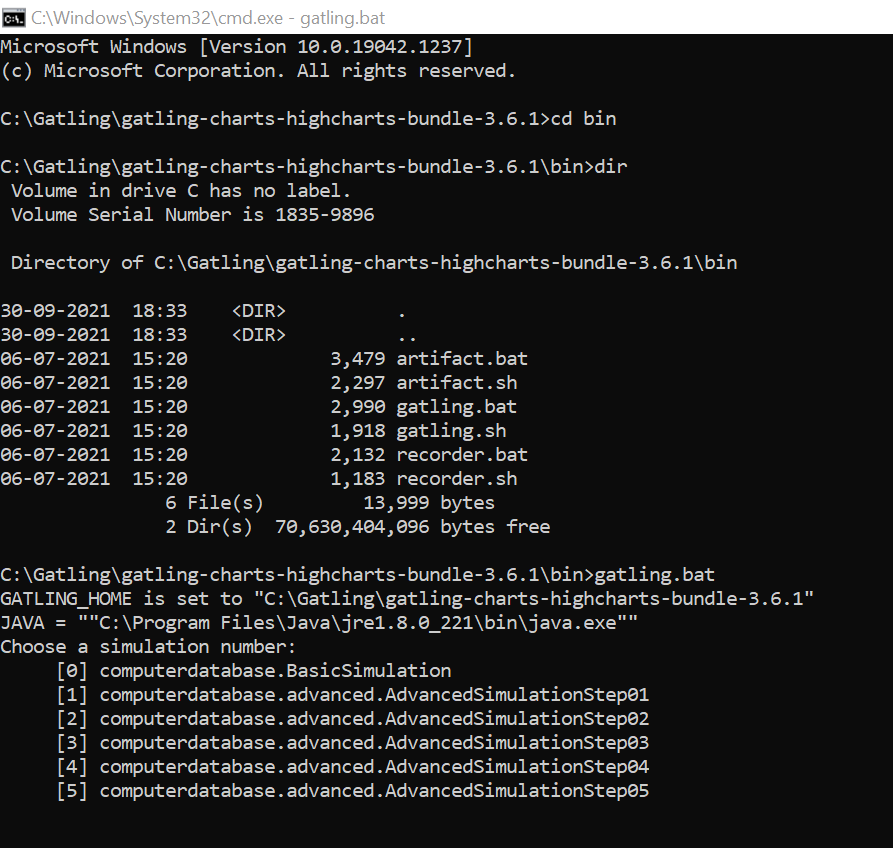

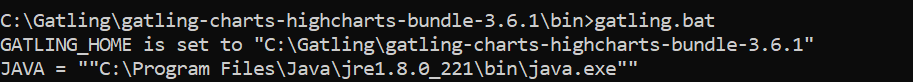

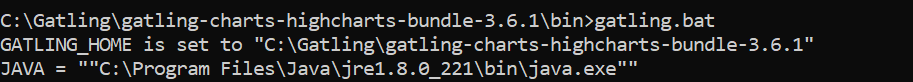

If you wish to use the command prompt, go to the Gatling directory and open a command prompt there. You can then run the gatling.bat file using the commands shown in the accompanying image.

Gatling Recorder

We have already established that the Gatling Recorder is one of the prominent features of Gatling. As one of the leading Test Automation Companies, we have found this feature to be very useful. So let’s learn how to set up and run the recorder as an HTTP proxy or a HAR converter.

The Gatling Recorder assists you in swiftly generating scenarios by acting as an HTTP proxy between the browser and the HTTP server or converting HAR (HTTP Archive) files. In either case, the recorder creates a rudimentary simulation that closely resembles your previously recorded navigation.

So, let’s take a look at how we can record using the HAR option.

Gatling Recorder Prerequisites

1) Gatling should be installed.

2) The JAVA_HOME path should be set.

3) The GATLING_HOME path must be defined.

Gatling Recorder using the HAR File Converter

A HAR (HTTP Archive) file can be imported into the Recorder and converted into a Gatling simulation. HAR files can be obtained with Chrome Developer Tools, or with Firebug and its NetExport add-on. Follow the steps below to convert HAR files to Gatling simulations.

Step 1:

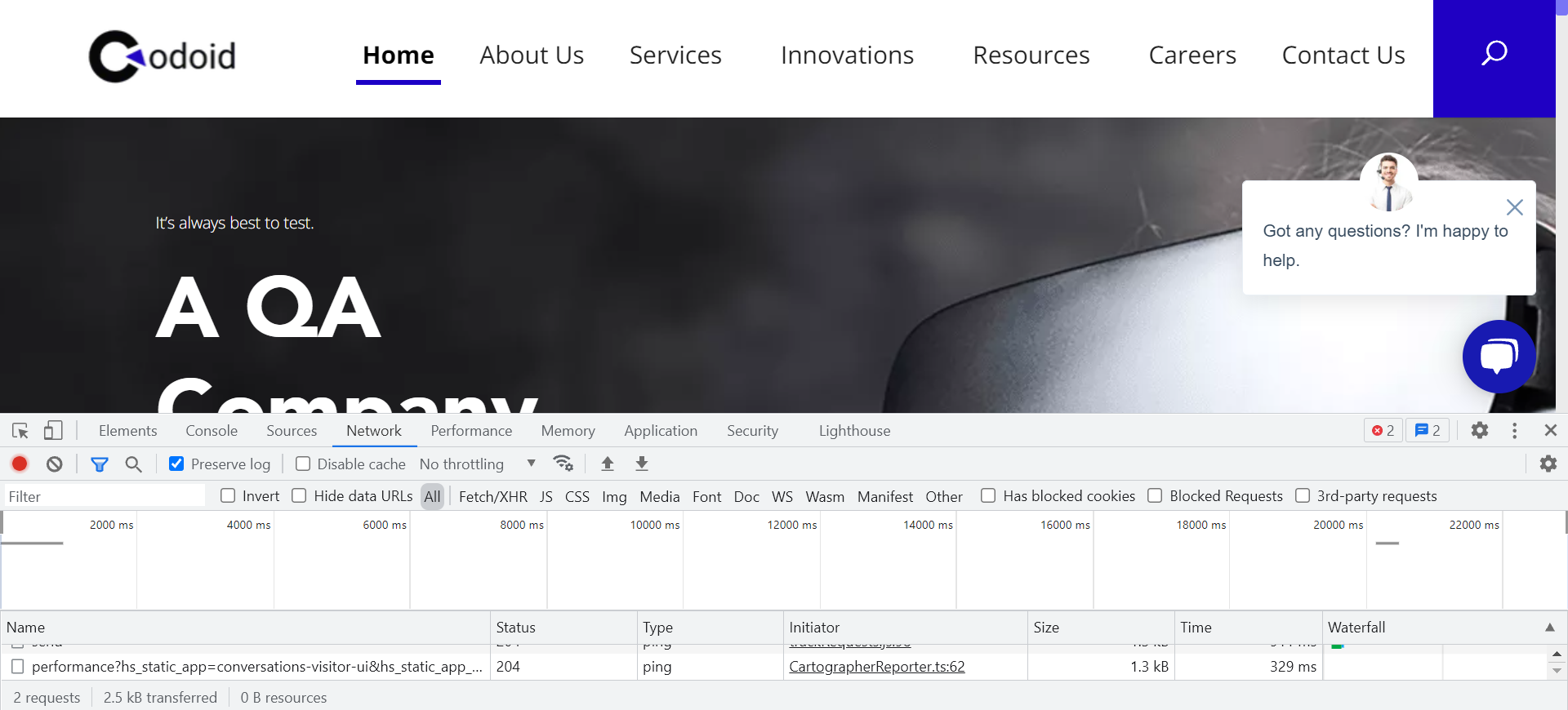

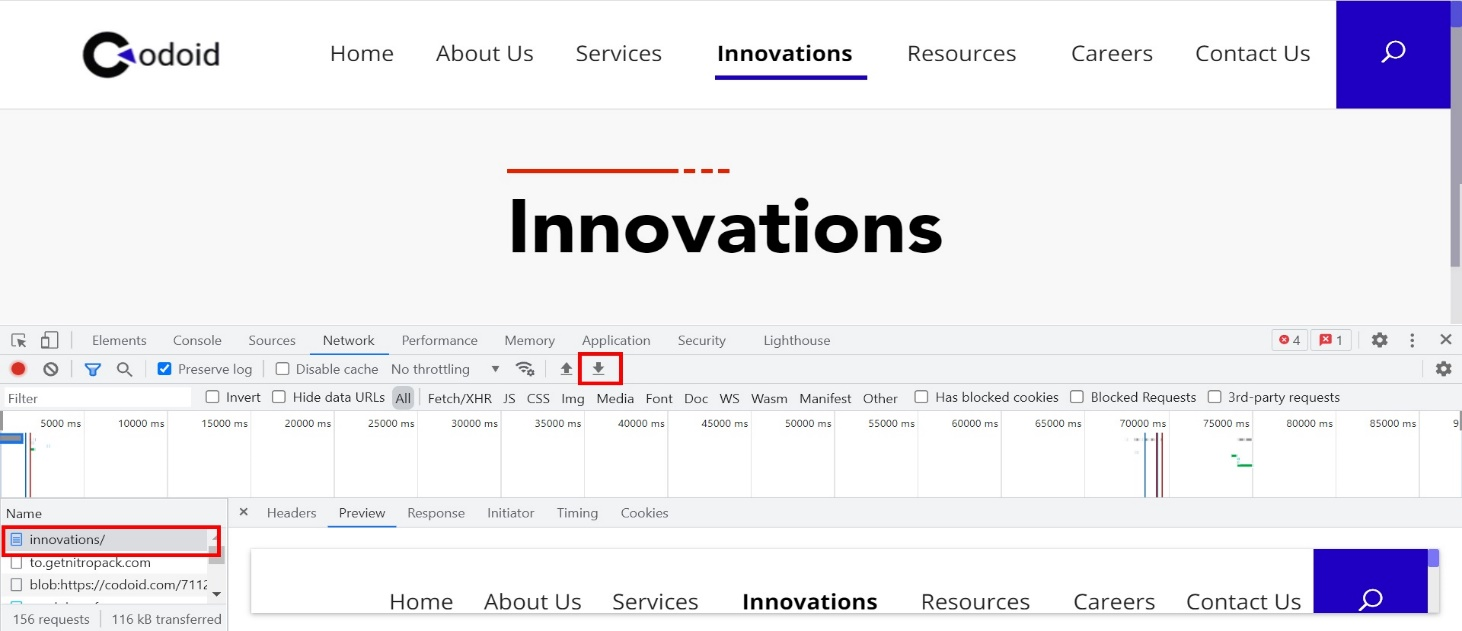

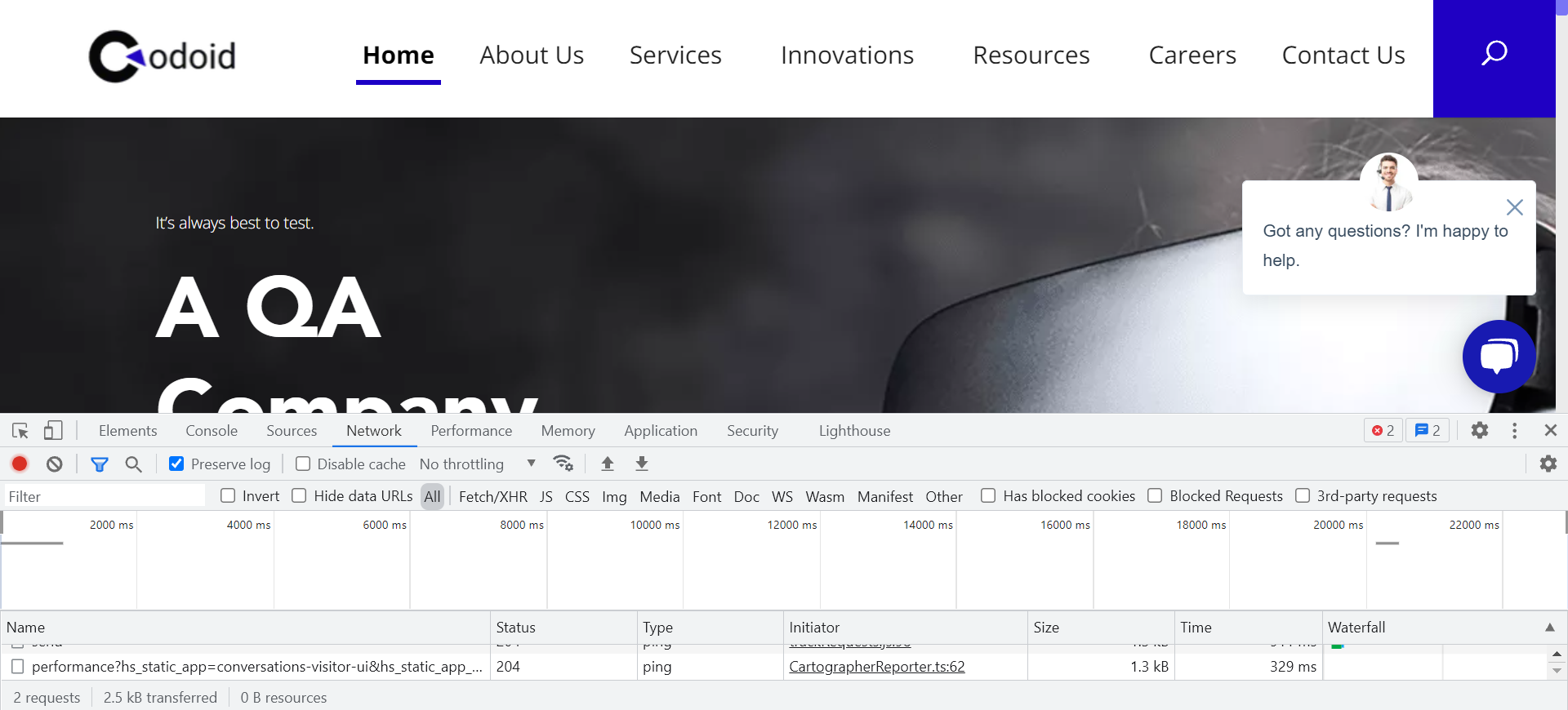

Right-click on the page and click on Inspect to open Developer Tools. Make sure the Preserve log option is checked under the ‘Network’ tab.

Step 2:

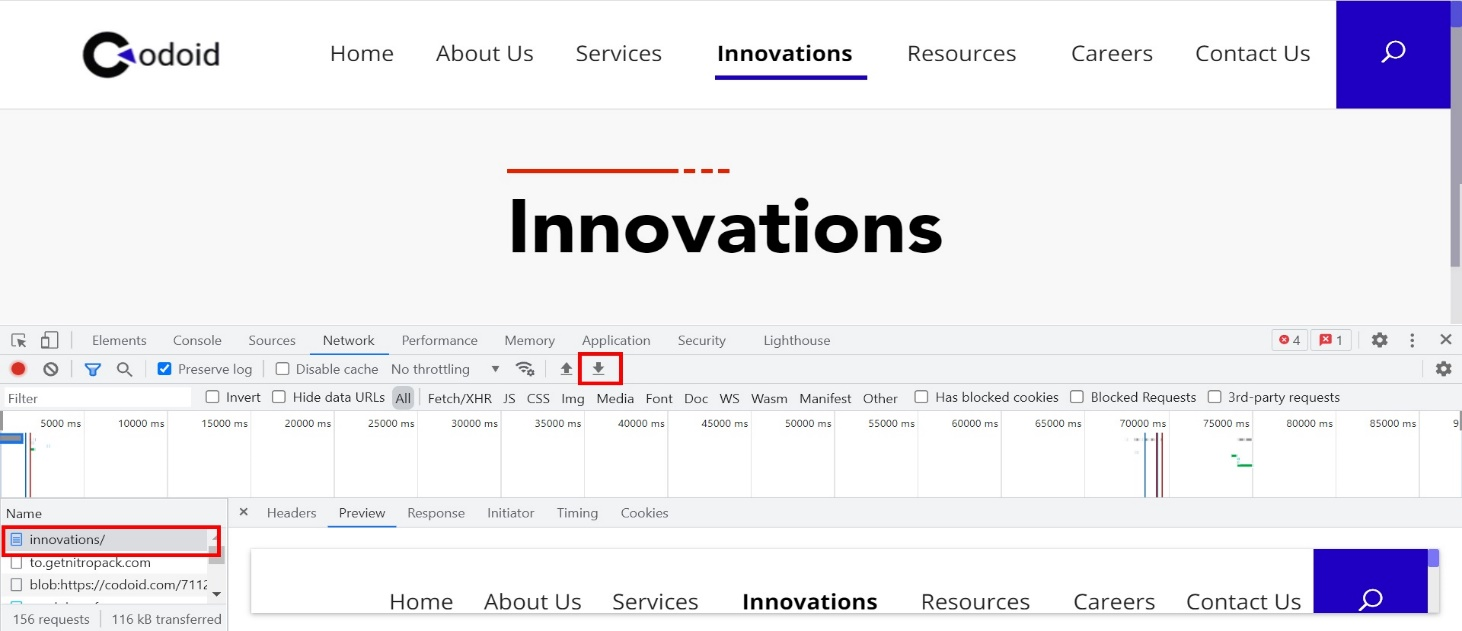

Once you finish navigating the website, right-click on the requests you want to export and click on the ‘Export’ option as shown in the image.

Step 3:

Launch Gatling recorder

Run the command below in the command prompt to launch the recorder from the Gatling bin directory.

C:\Gatling\gatling-charts-highcharts-bundle-3.6.1\bin>recorder.bat

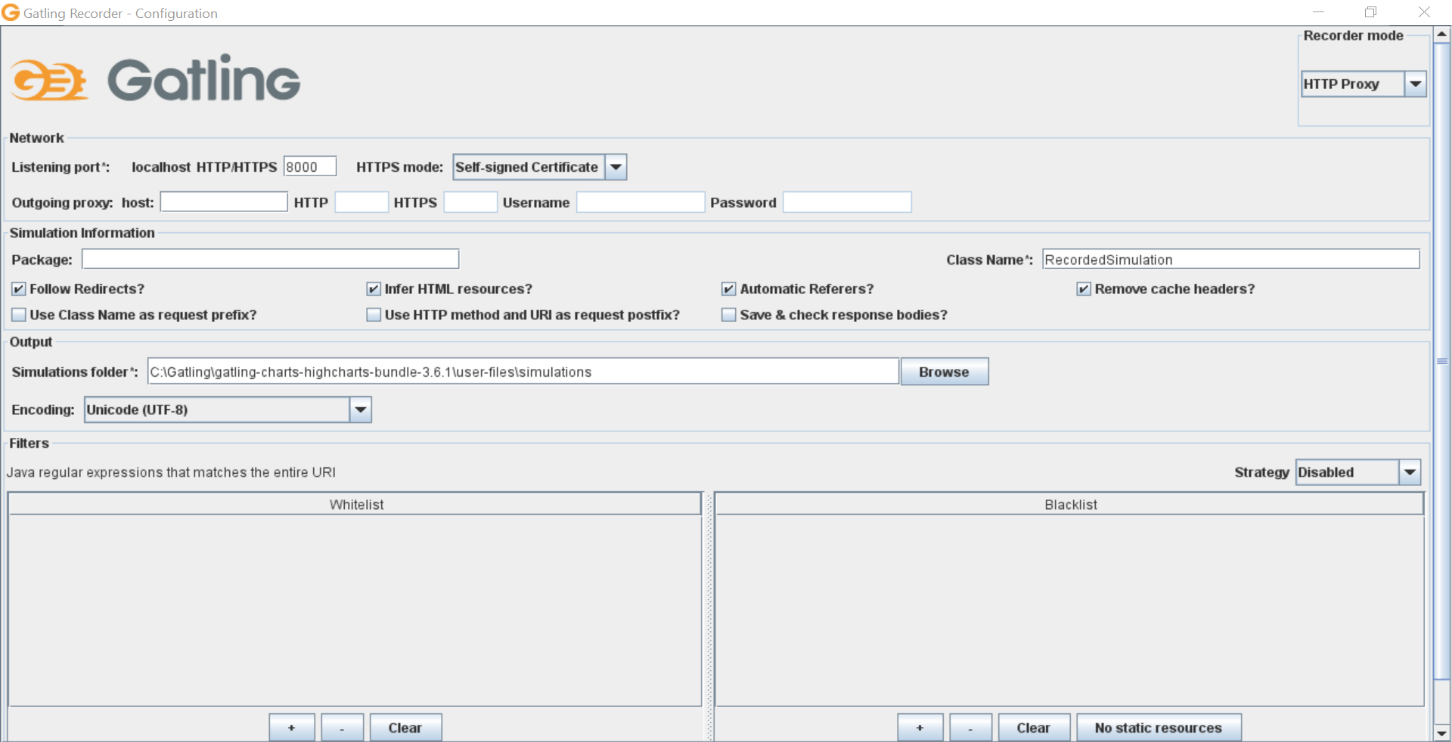

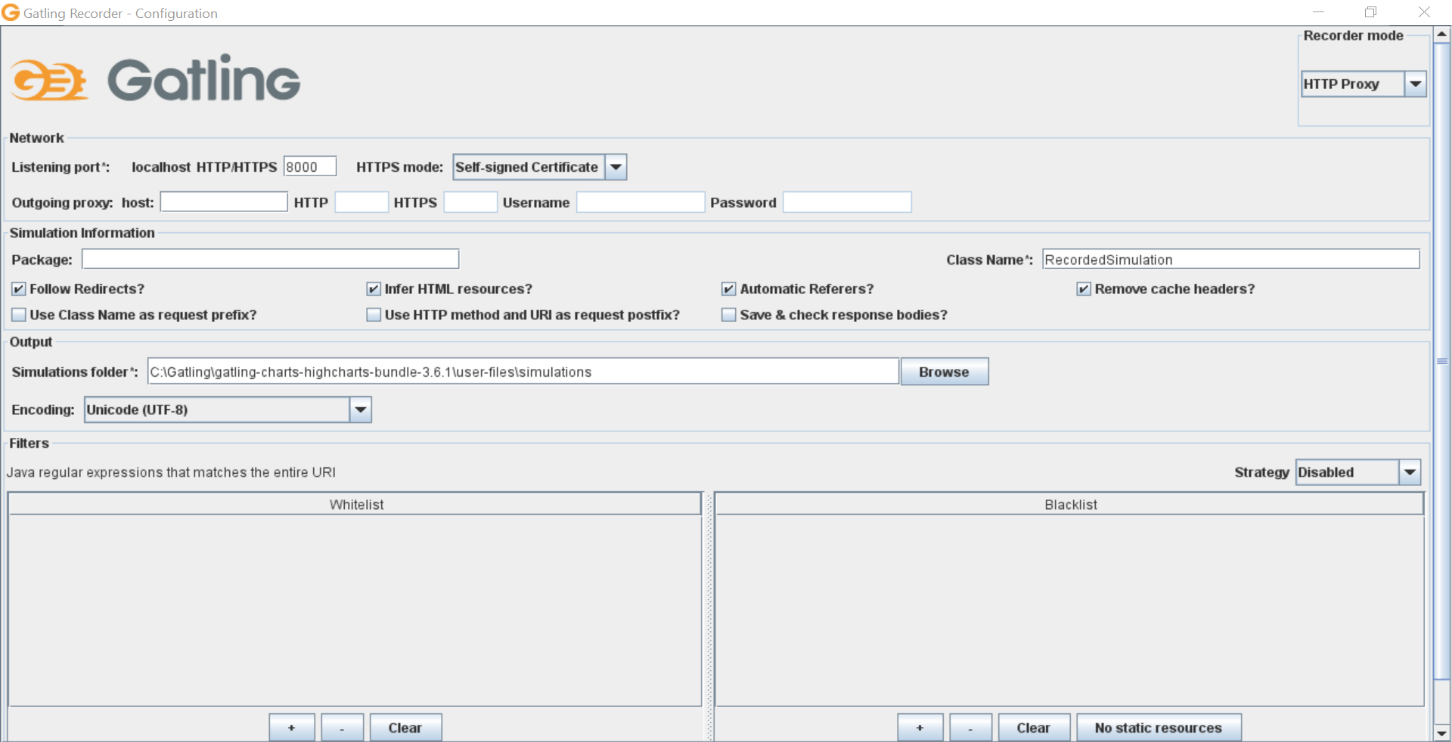

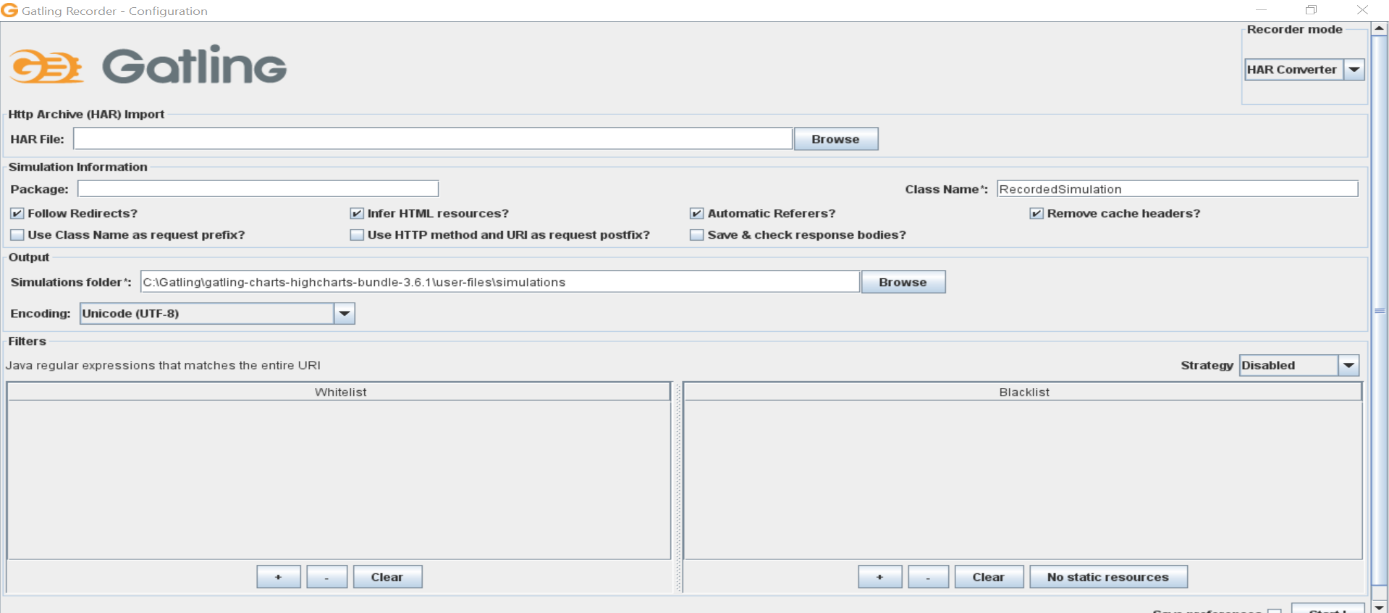

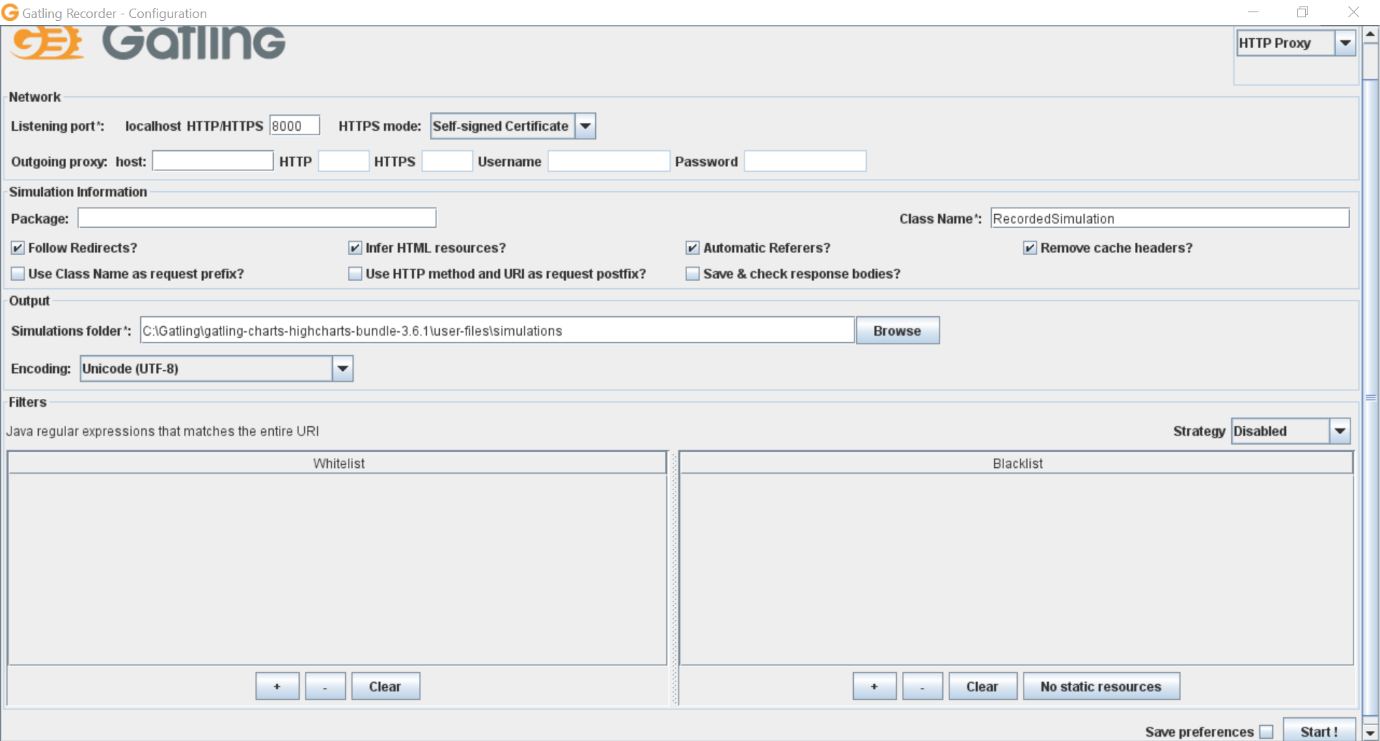

Once you’ve run the above command, the Gatling Recorder will start and you will be able to see the following page.

Step 4:

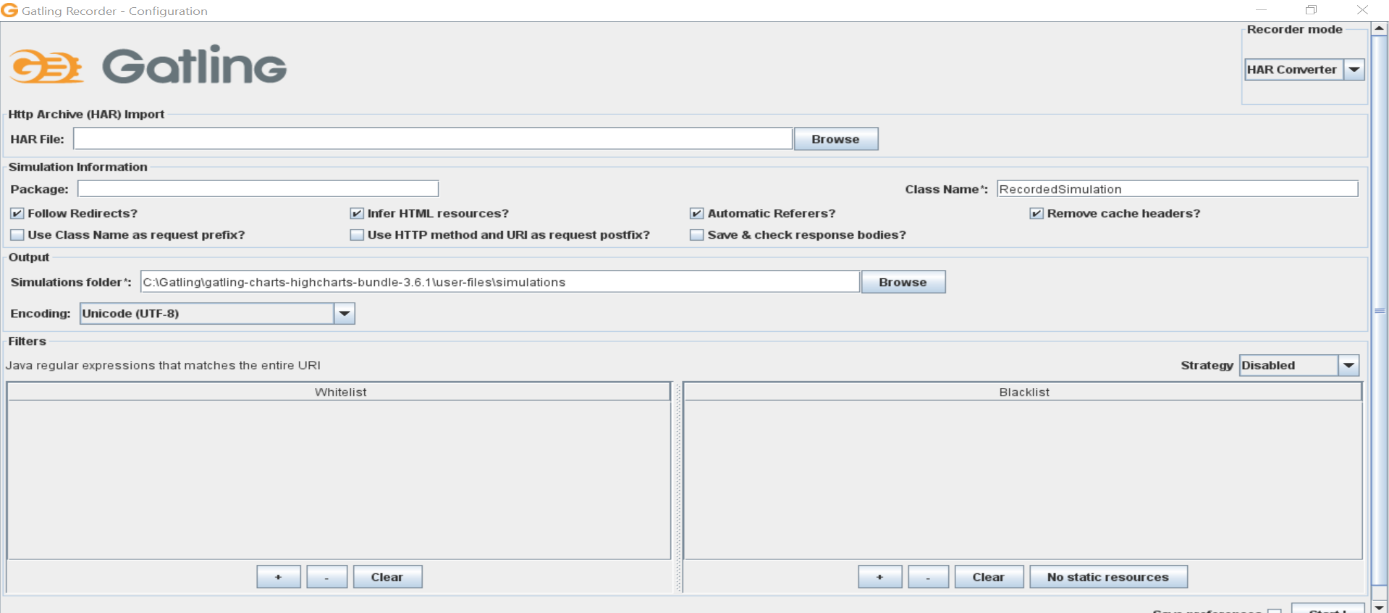

Check the Recorder mode and make sure that the HAR Converter mode is selected. In this scenario, it is set as ‘HTTP proxy’ by default and so let’s change it to HAR Converter. We will also be seeing how to record using the ‘HTTP Proxy’ mode in the next stage of the blog.

Step 5:

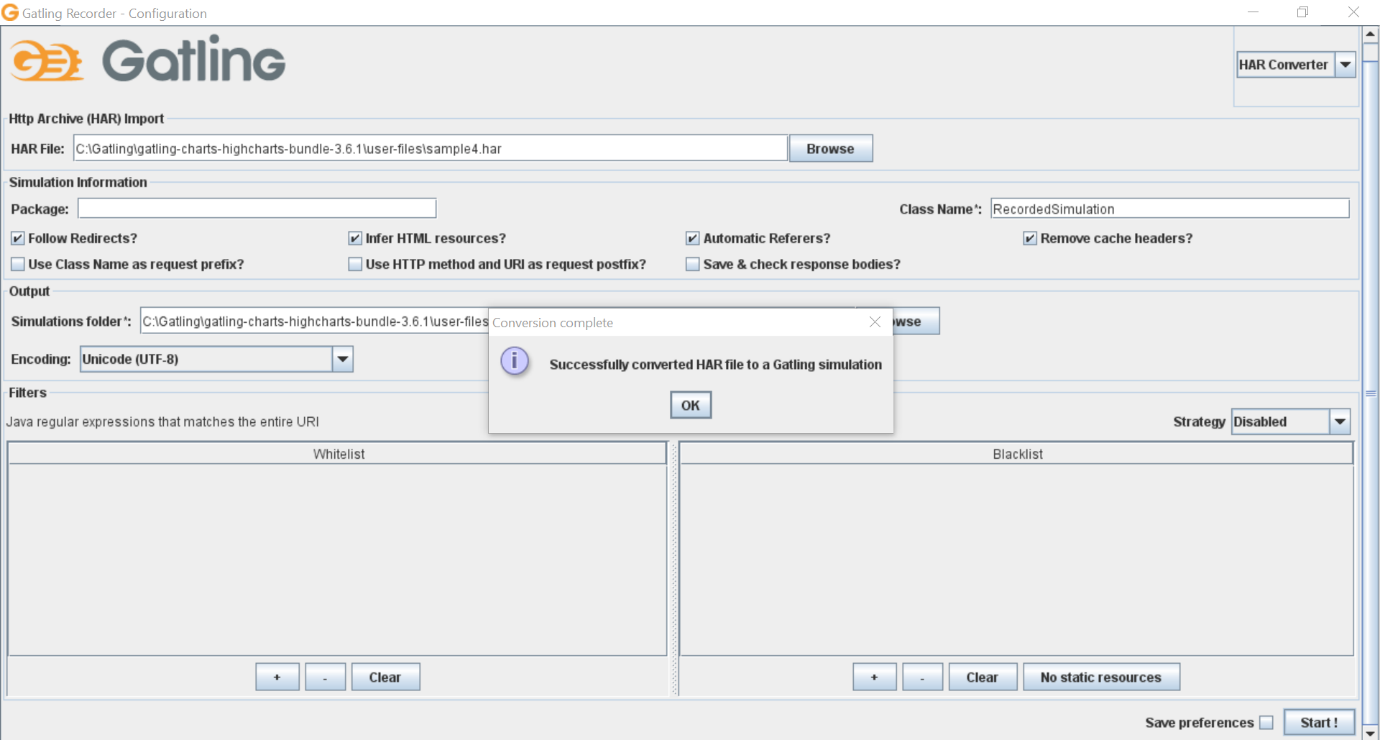

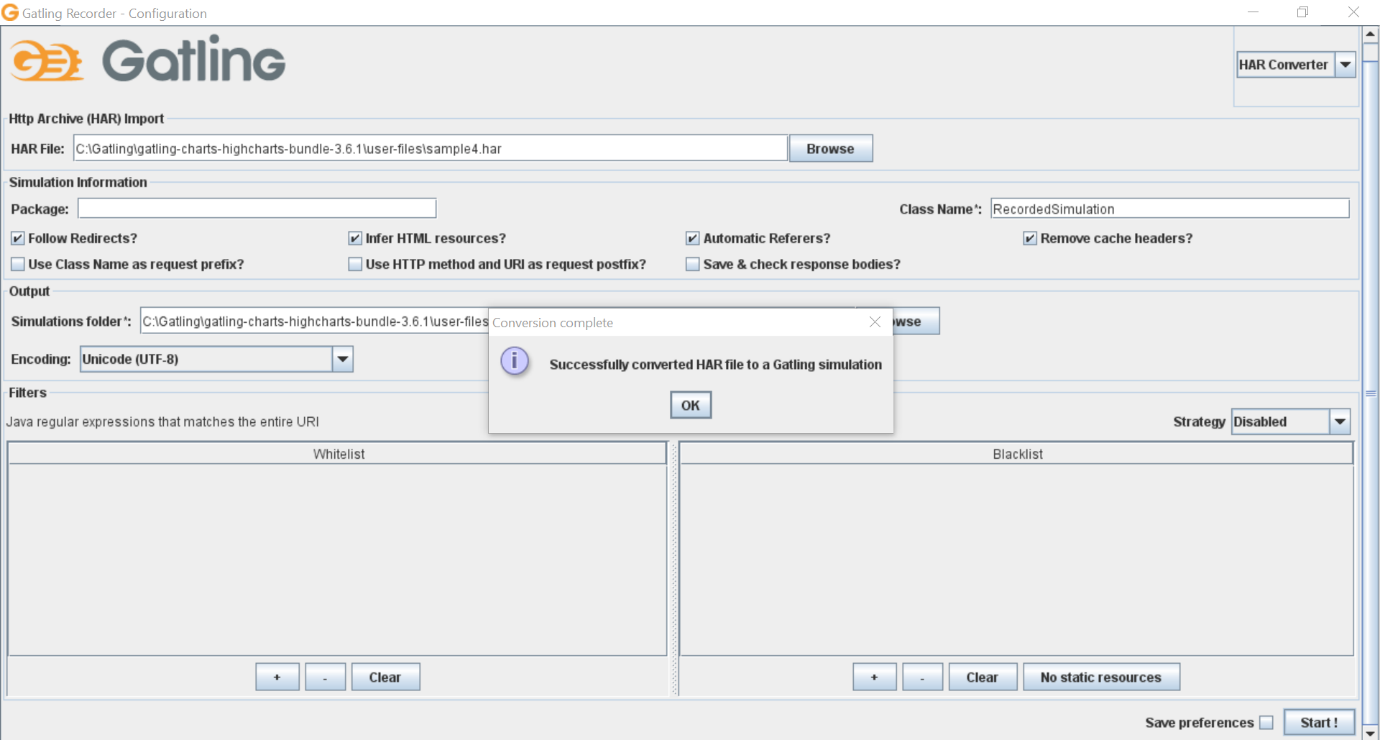

Import the saved HAR file into the recorder and click on the ‘Start’ button.

Step 6:

Once the HAR file has been successfully imported, you can run the generated script using the command below.

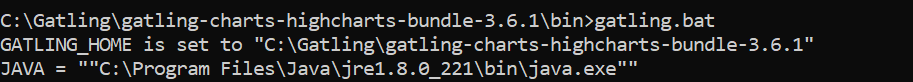

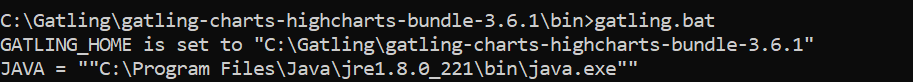

C:\Gatling\gatling-charts-highcharts-bundle-3.6.1\bin>gatling.bat

Gatling Recorder using HTTP Proxy

So as stated above, we will now be seeing the steps you’ll need to follow to use the recorder using HTTP Proxy.

Step 1:

Configure the Browser

In order to use Gatling to capture our scenario, we must first configure our browser’s proxy settings. The following instructions will help you to set up Chrome for Gatling recording.

- Open the Chrome Browser.

- In the top right corner, click on the three dots to get a drop-down menu.

- Click on the ‘Settings’ option.

- Scroll down to the bottom of the settings page and click on the ‘Advanced’ drop down option.

- Click on System from the list of options that appear.

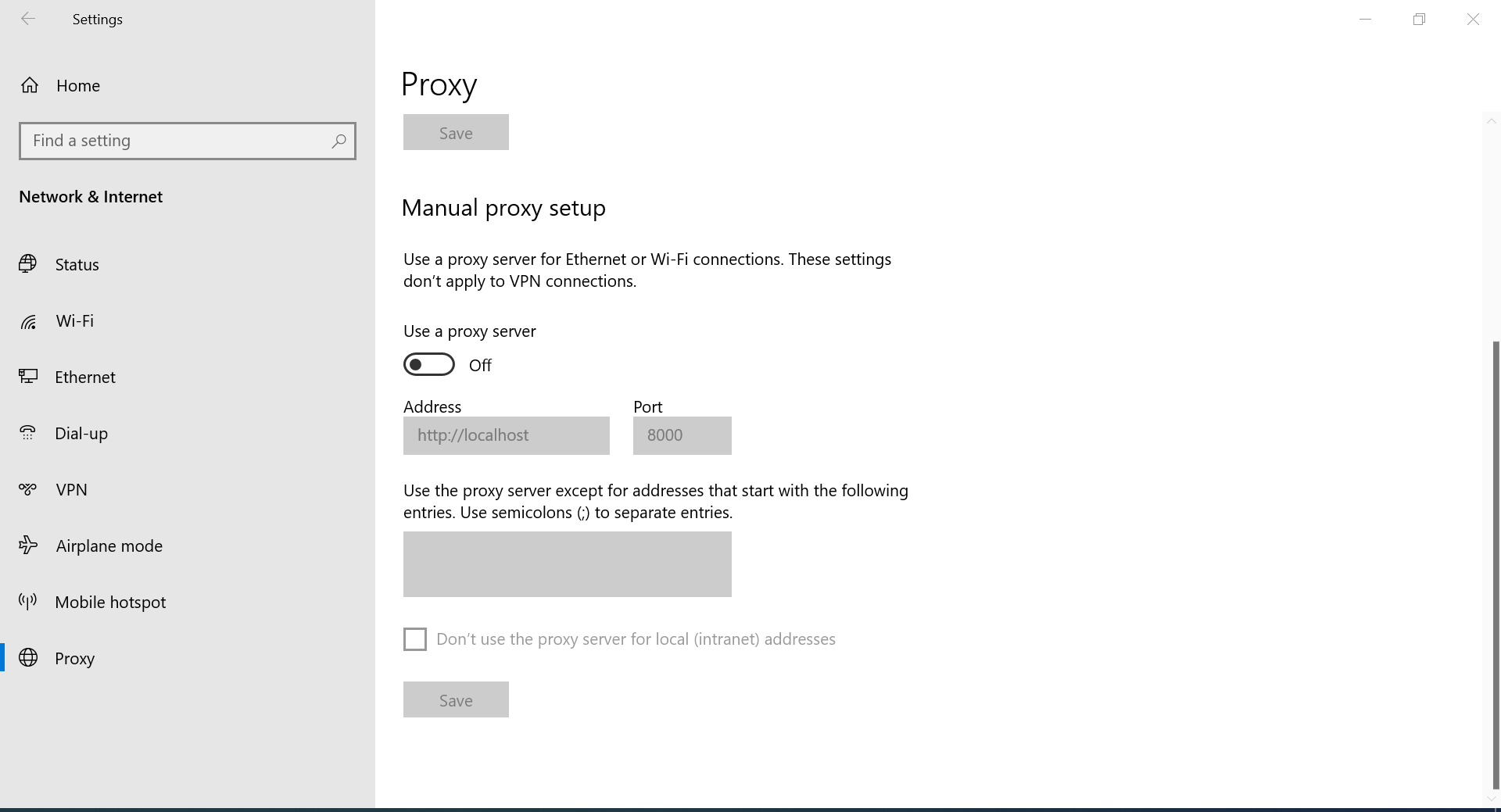

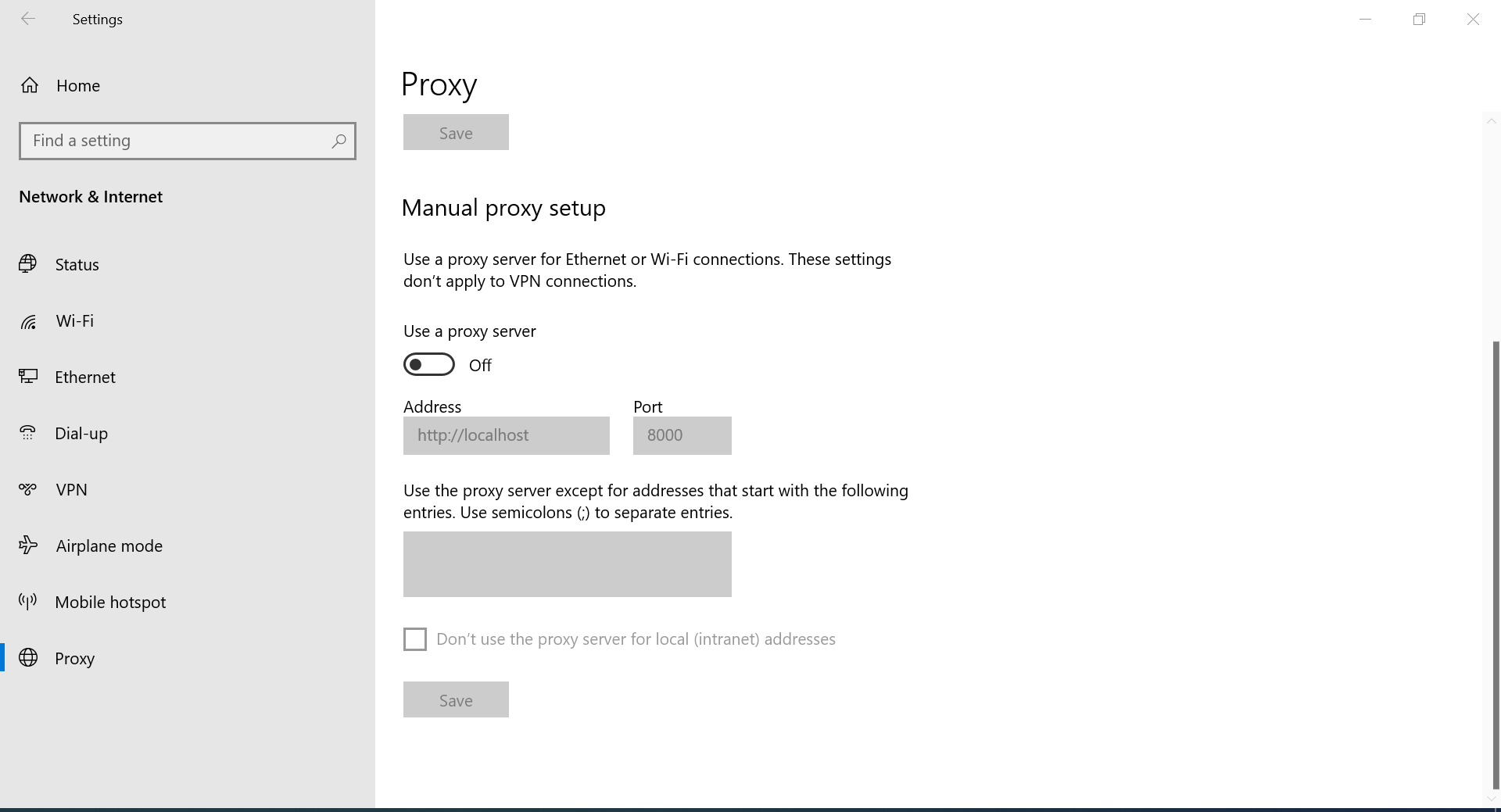

- Once you click on ‘Open your Computer Proxy Setting’, the proxy page will be shown.

- Uncheck the ‘Automatically detect settings’ option.

- Check the ‘Use the proxy server for your LAN’ option.

- The address should be “localhost” and the port should be “8000”.

- Click on the ‘Save’ Button.

Step 2:

Recording the Scenario

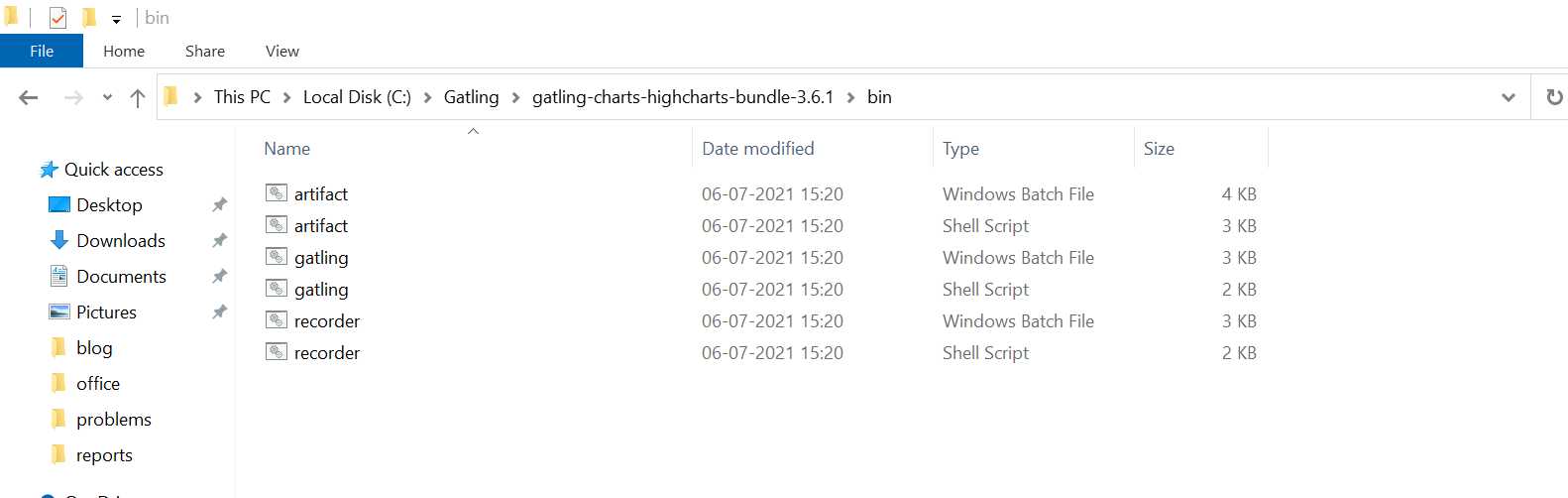

- First, you have to go to the bin folder of the Gatling bundle. (In our case: C:\Gatling\gatling-charts-highcharts-bundle-3.6.1\bin)

- Double click the “recorder.bat” file or run it using command prompt. Follow the same procedure as you did when using the HAR converter.

- The recorder window will be displayed.

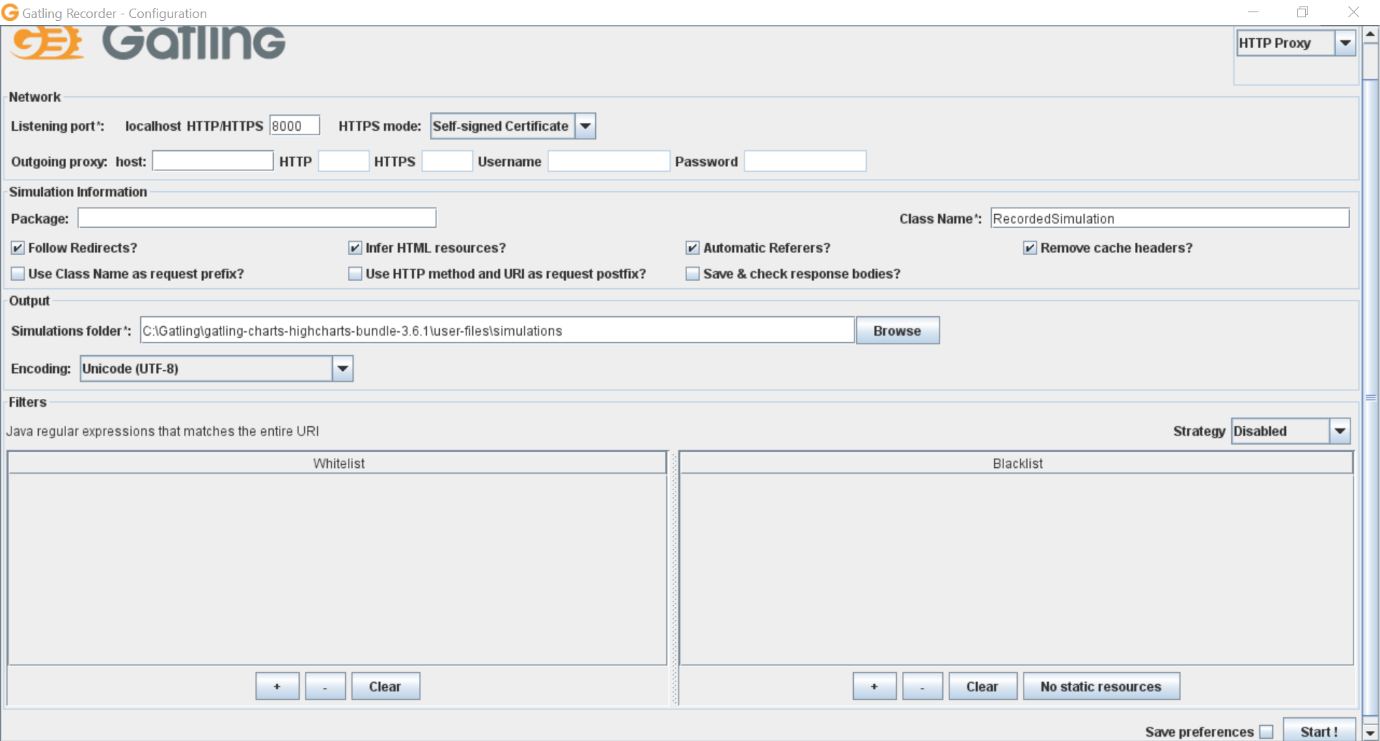

- Enter the port number of your choice in the localhost box. (In our case, we have used 8000.)

- Enter the package name.

- Enter the class name.

- Select the following options: “Follow Redirects,” “Infer HTML resources,” “Remove Cache Headers,” and “Automatic Referrers.”

- Choose the location of the output files. We have used C:\Gatling\gatling-charts-highcharts-bundle-3.6.1\user-files\simulations.

- Note: It’s a good idea to keep the default simulations folder as the output location so that you won’t have to copy the recorded file before load testing.

- Leave all the other options at their existing values.

- Click on the ‘Start’ button.

- Open Chrome browser and navigate the flow you wish to record.

- Once you are done, click the “Stop & Save” button to close the Gatling recording window.

- The recorded file will be saved and ready to run. The file will be stored in the directory that you specified in the previous step.

Step 3:

Executing load testing using Gatling

After the recording has been saved, you can run the script using the command given below.

C:\Gatling\gatling-charts-highcharts-bundle-3.6.1\bin>gatling.bat

Creation of First Maven Project Using Scala for Gatling

Before creating a Maven project using Scala, let’s look at the prerequisites you will need.

1) JDK 1.8 or a higher version should be installed on your system

2) The following environment variables have to be set

a. JAVA_HOME environment variable

b. MAVEN_HOME environment variable

c. GATLING_HOME environment variable

If you want to check whether you have all the prerequisites, follow the step below.

Step 1:

Right Click on ‘This PC’ > Properties > Advanced System Settings > Environment Variables > System Variables

Now, you will find all the environment variables available in your system. If you have all the required prerequisites, you can start with the creation of your first Maven Project.

Creating Your First Project

The easiest way to create a new project is by using an “archetype”. An archetype is a general skeleton structure or template for a project.

To create the project we need to follow a few steps:

Step 1:

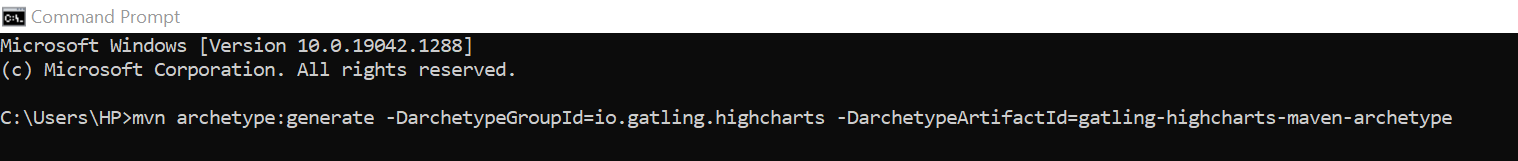

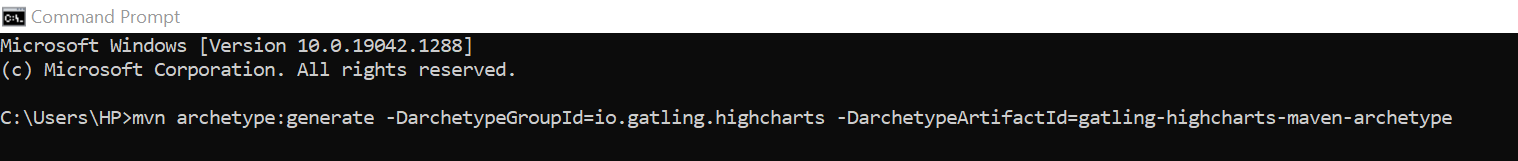

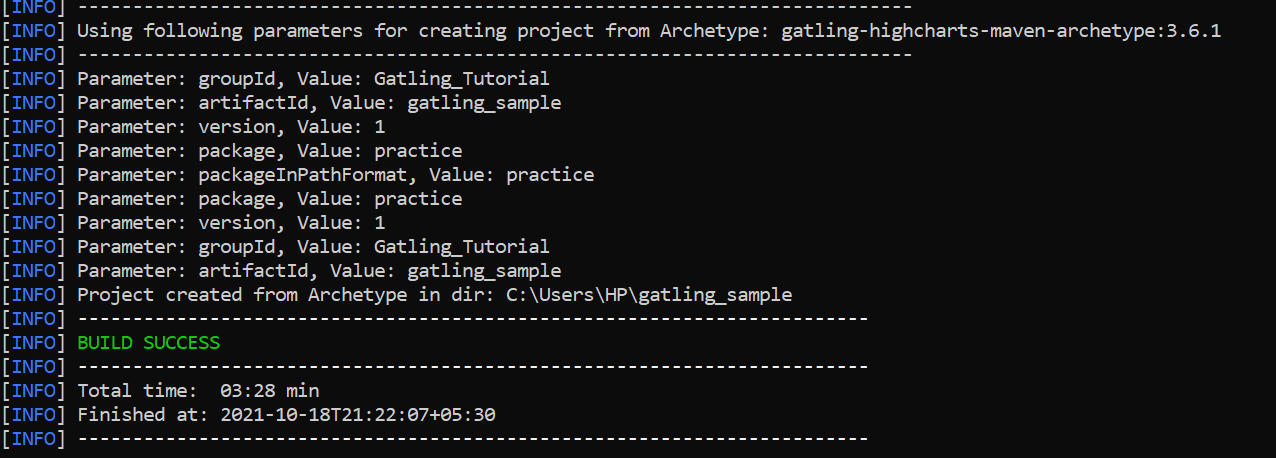

Run the archetype plugin using command prompt by using the following command.

mvn archetype:generate -DarchetypeGroupId=io.gatling.highcharts -DarchetypeArtifactId=gatling-highcharts-maven-archetype

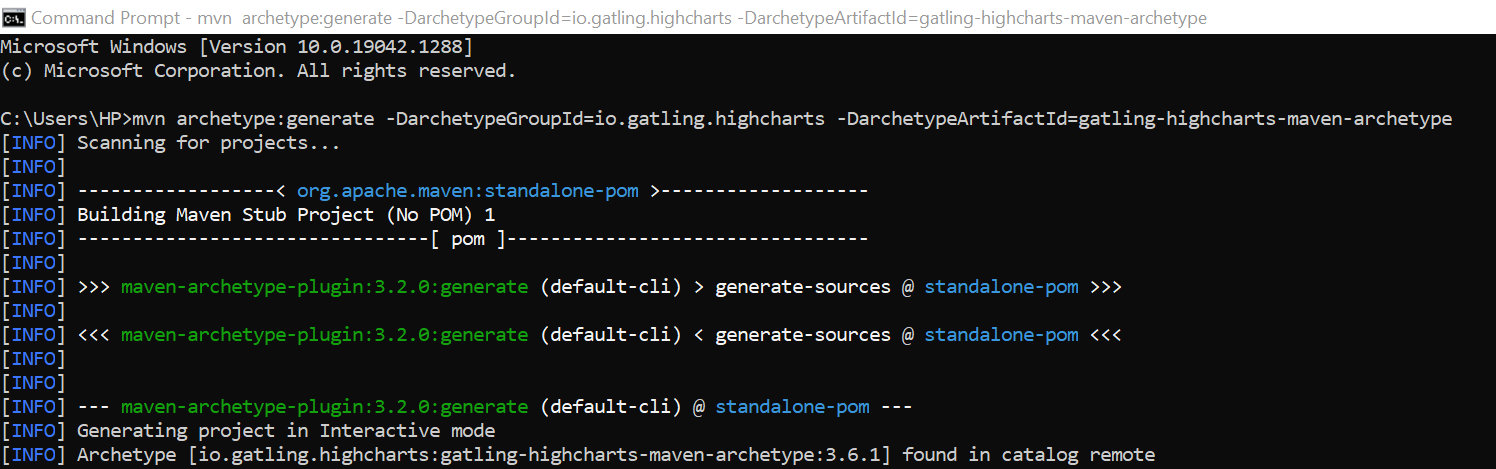

Once you press Enter, you will see Maven resolve the dependencies and download many JAR files as needed. This happens only the first time.

Step 2:

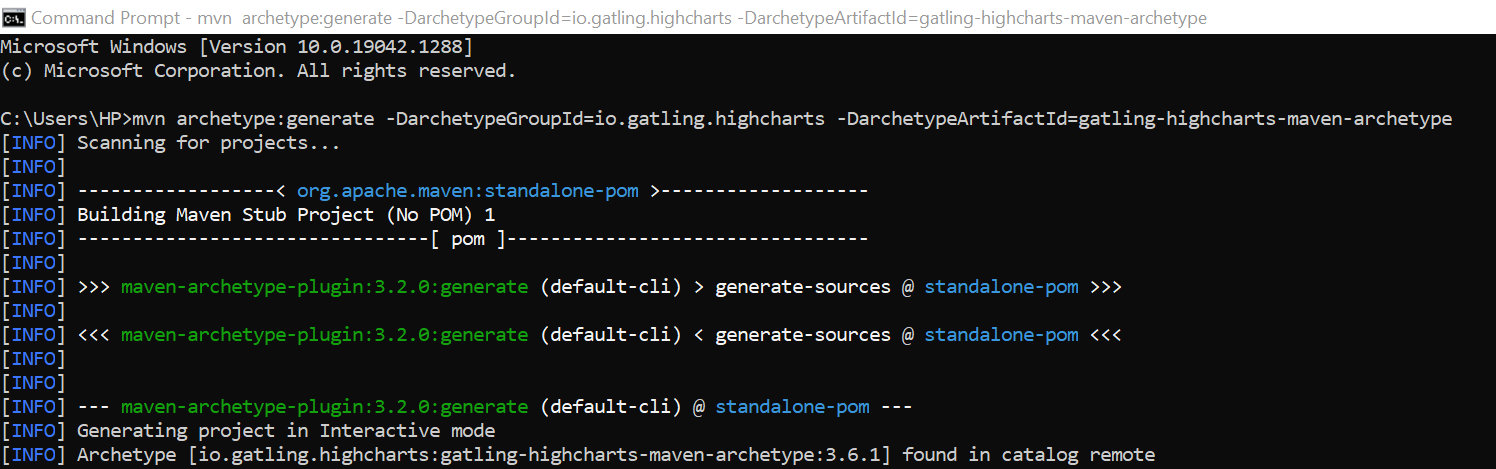

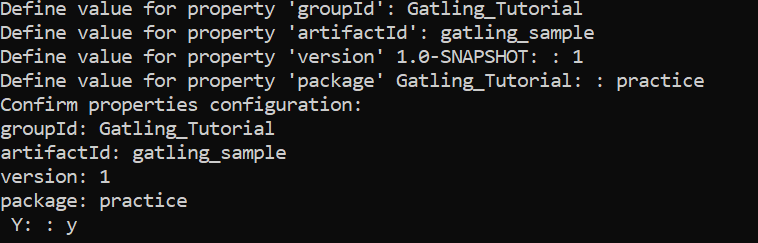

Once all the jar files have been downloaded, assign the groupId, artifactId, and the package name for your classes before confirming the archetype creation.

Step 3:

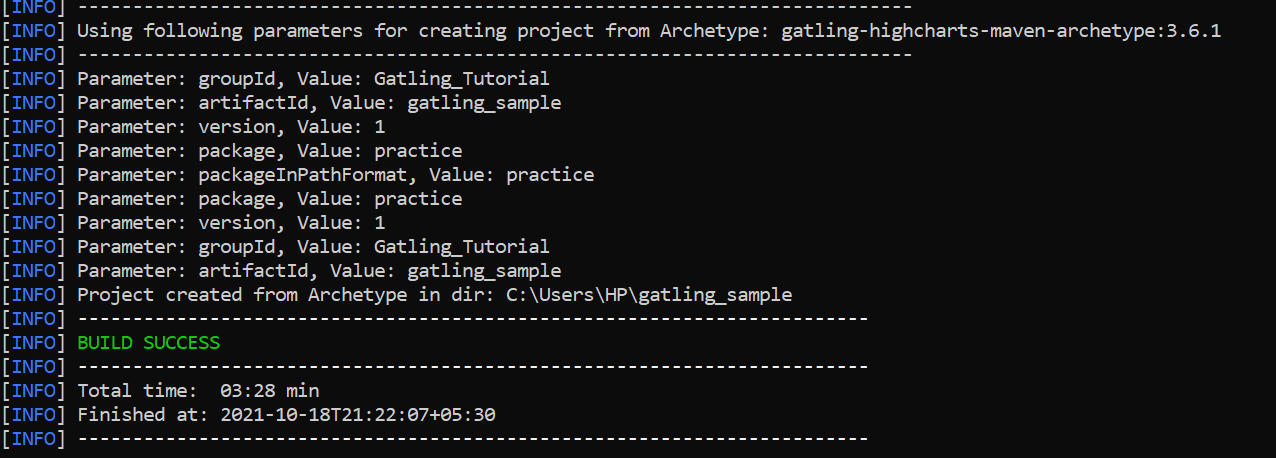

Once you’ve provided all the required details, you will see a build success message along with the location of the project, which confirms that your project has been successfully created.

Step 4:

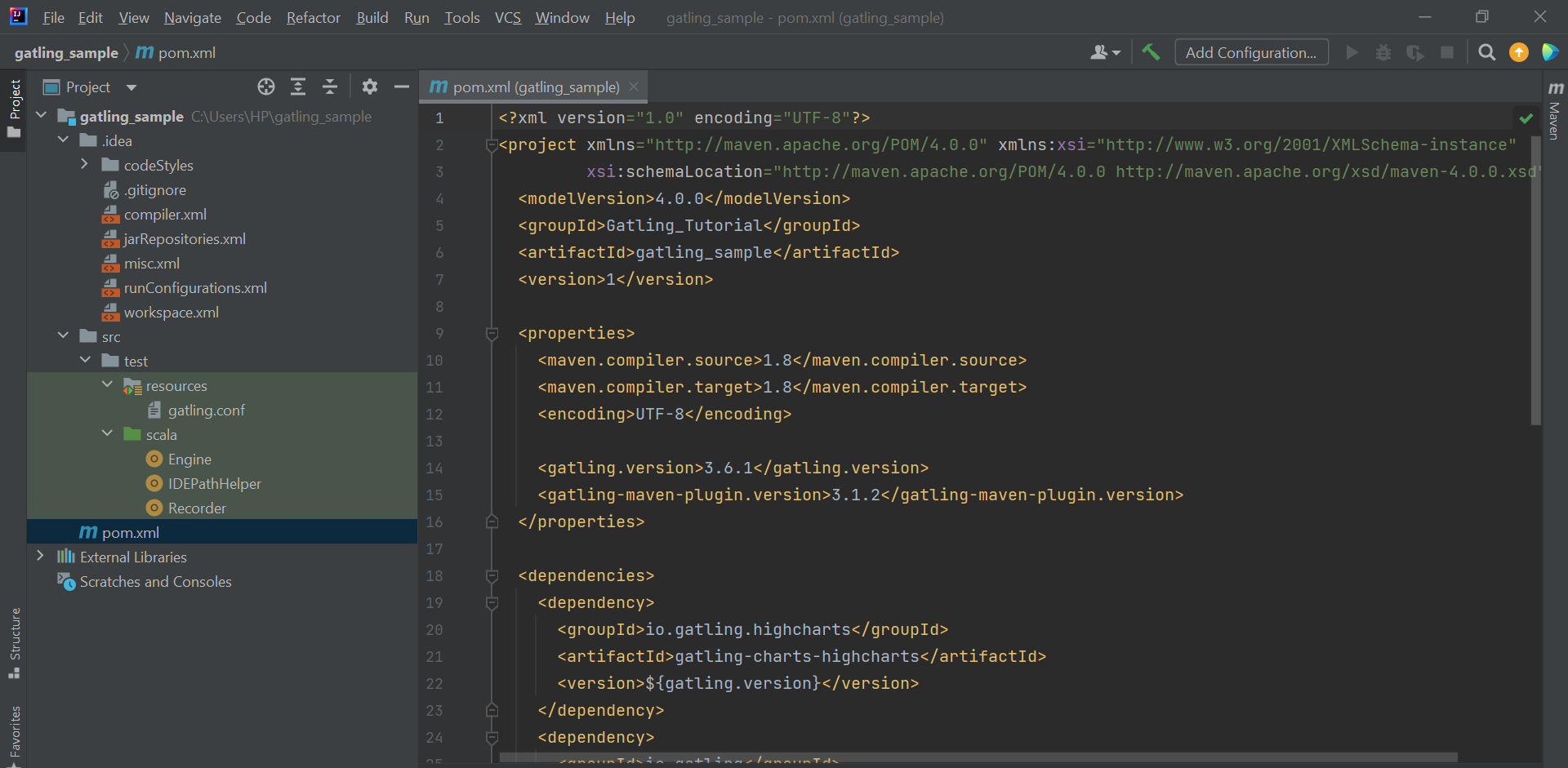

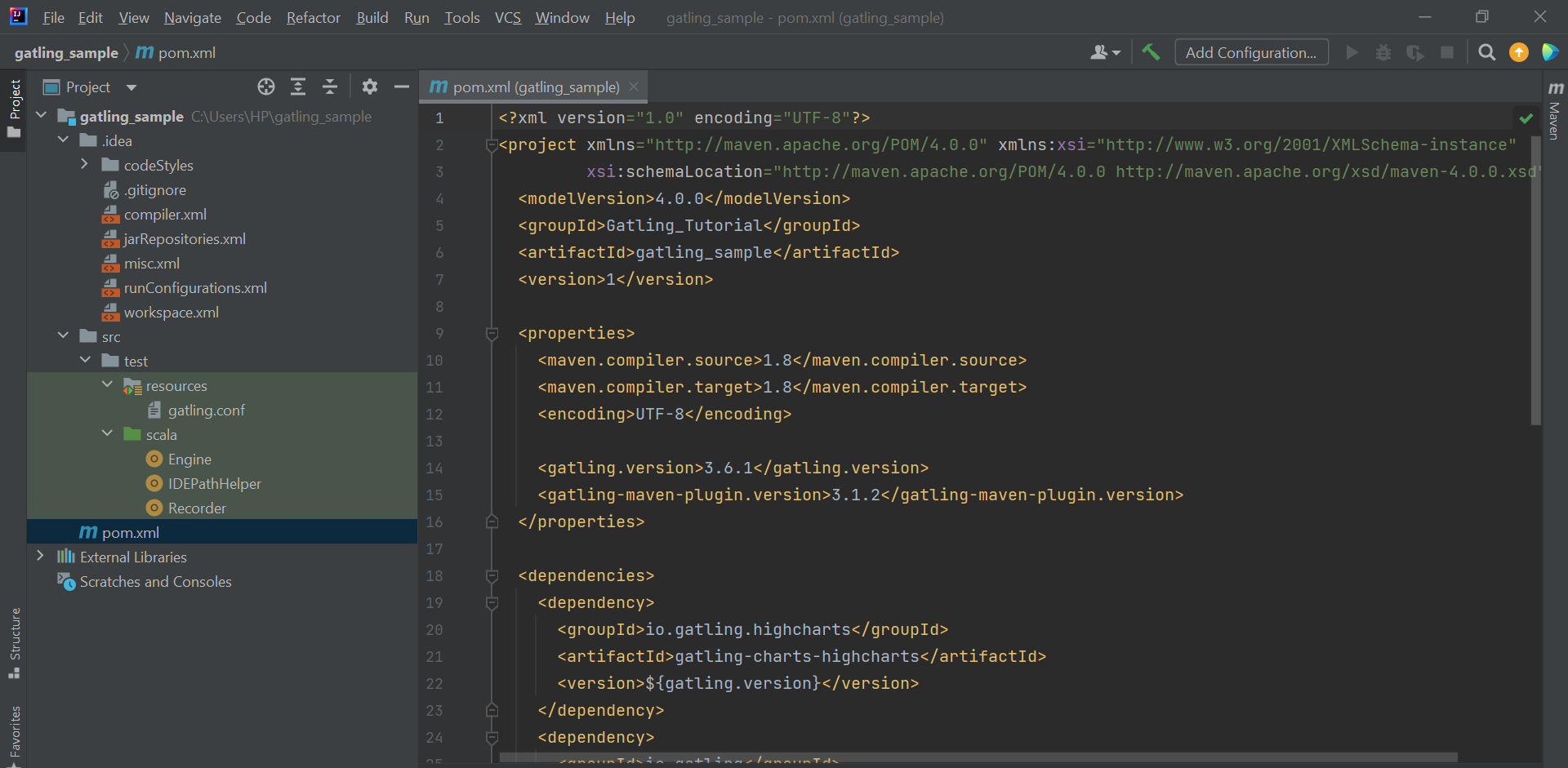

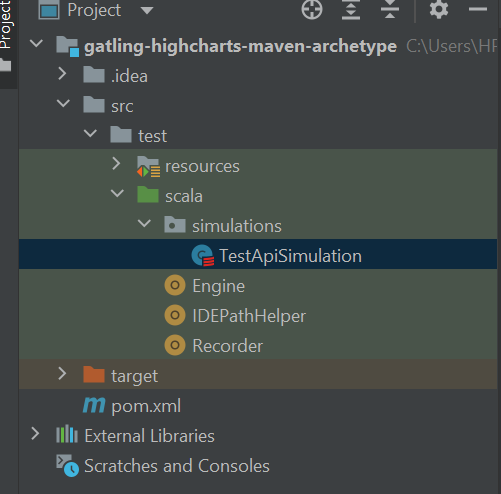

Open the generated project in IntelliJ IDEA by following the steps below.

File > Open > Select the directory for the generated project > Click on the pom.xml file

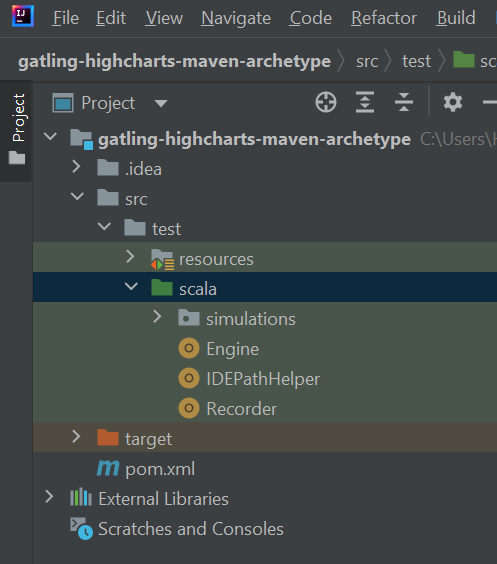

Once you open the pom.xml, you will notice that it takes a little time to load all the dependencies and the project structure. The project will also contain the following launchers.

- Gatling Engine

- Gatling Recorder

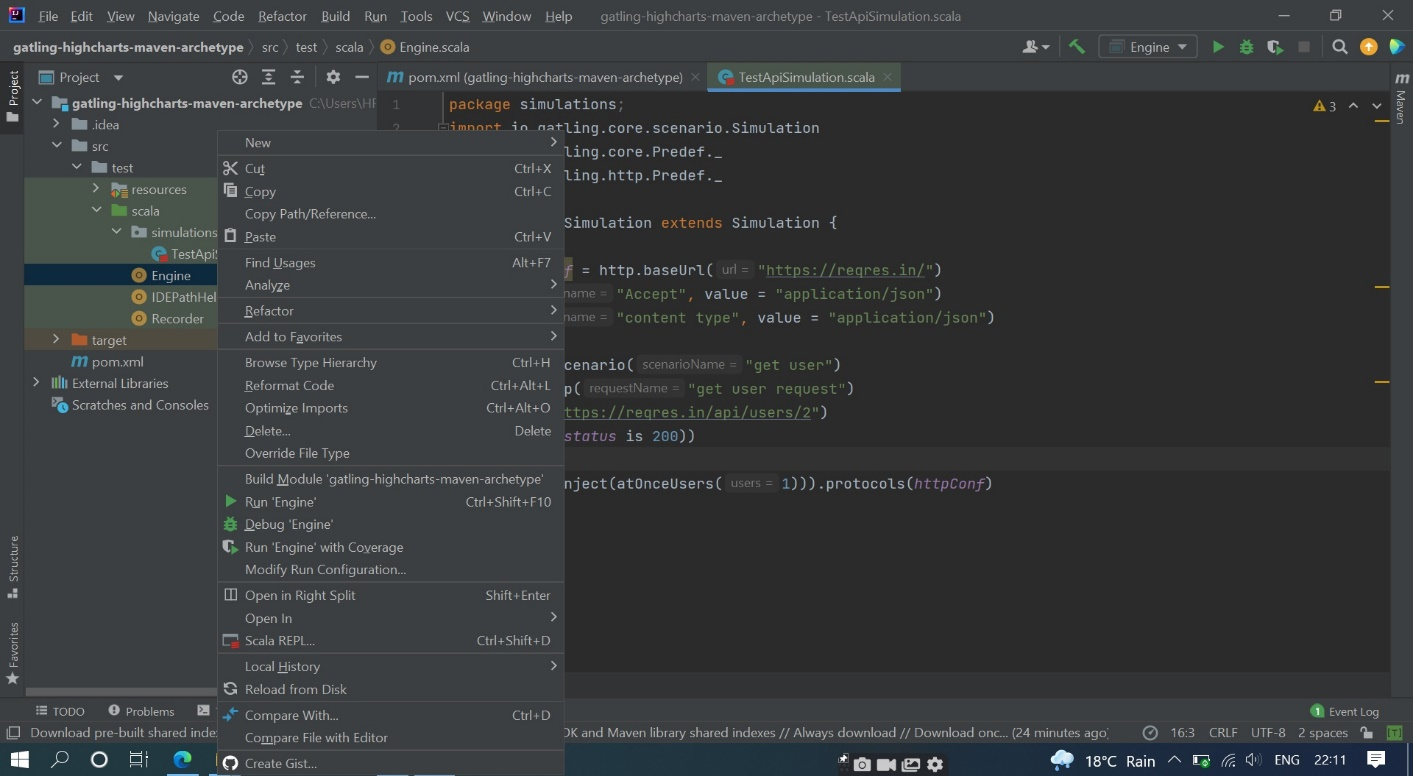

The Gatling load test engine can be launched by right-clicking on the Engine class in your IDE. The simulation reports will be stored in the target/gatling directory.

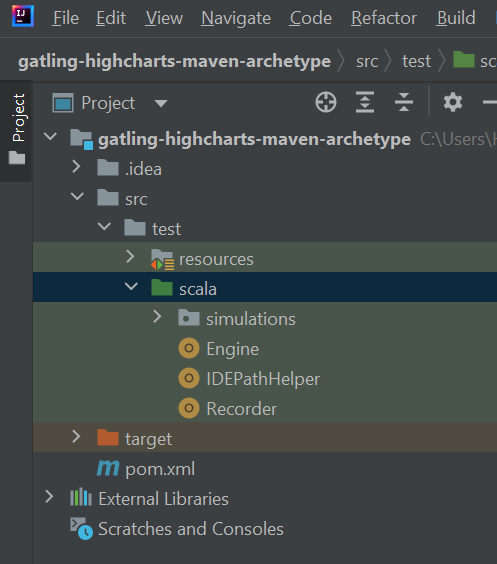

You can easily start the Recorder by right-clicking on the Recorder class in your IDE. The recorded simulations will be generated in the src/test/scala directory. We have attached an image reference to show how it’ll look once everything has been loaded.

Now, you are all set and ready to write your first Gatling script.

The pom.xml file will contain the following:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>io.gatling.highcharts</groupId>
      <artifactId>gatling-highcharts-maven-archetype</artifactId>
      <version>1</version>
      <properties>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <encoding>UTF-8</encoding>
        <gatling.version>3.6.1</gatling.version>
        <gatling-maven-plugin.version>3.1.2</gatling-maven-plugin.version>
      </properties>
      <dependencies>
        <dependency>
          <groupId>io.gatling.highcharts</groupId>
          <artifactId>gatling-charts-highcharts</artifactId>
          <version>${gatling.version}</version>
        </dependency>
        <dependency>
          <groupId>io.gatling</groupId>
          <artifactId>gatling-app</artifactId>
          <version>${gatling.version}</version>
        </dependency>
        <dependency>
          <groupId>io.gatling</groupId>
          <artifactId>gatling-recorder</artifactId>
          <version>${gatling.version}</version>
        </dependency>
      </dependencies>
      <build>
        <testSourceDirectory>src/test/scala</testSourceDirectory>
        <plugins>
          <plugin>
            <groupId>io.gatling</groupId>
            <artifactId>gatling-maven-plugin</artifactId>
            <version>${gatling-maven-plugin.version}</version>
          </plugin>
        </plugins>
      </build>
    </project>

Writing First Gatling Script:

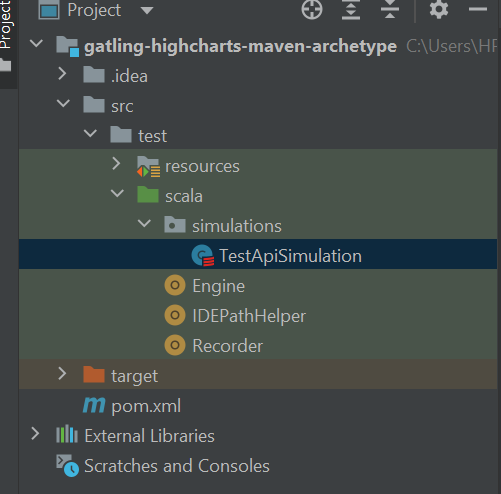

To start writing your first Gatling script, we first need to create a package named simulations under src > test > scala as shown below.

Once you have created the simulations package, create a class inside it and name it as you wish.

Now you can start writing your script in that class. If you’re not aware of the general procedure to follow while writing your script, don’t worry, as we’ve got you covered.

The conventions to follow when writing the scripts:

- The package name should be mentioned

- Make sure to import all the important Gatling classes

- The class should extend the Simulation class

- The script should consist of the below three integral parts

- HTTP Configuration

- Scenario

- Setup

- In the HTTP configuration part, we provide the base URL and pass the headers and their values.

- In the scenario part, we give the scenario a name and execute the request by providing the request URL and a check on the response.

- In the setup part, we inject the number of concurrent users using the setUp method and pass the protocols.

The Script should be as follows:

    package simulations

    import io.gatling.core.scenario.Simulation
    import io.gatling.core.Predef._
    import io.gatling.http.Predef._

    class TestApiSimulation extends Simulation {

      // HTTP configuration: base URL and the headers sent with every request
      val httpConf = http.baseUrl("https://reqres.in")
        .header("Accept", "application/json")
        .header("Content-Type", "application/json")

      // Scenario: a named scenario that sends one GET request and checks the status code
      val scn = scenario("get user")
        .exec(http("get user request")
          .get("/api/users/2")
          .check(status.is(200)))

      // Setup: inject a single user at once and attach the HTTP configuration
      setUp(scn.inject(atOnceUsers(1))).protocols(httpConf)
    }
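As a variation on the setup part, the sketch below ramps users up gradually and adds run-level assertions. It is a minimal sketch, not part of the original project: the class name `RampedApiSimulation`, the user count, the ramp duration, and the thresholds are all illustrative assumptions, and it assumes the Gatling 3.6.x Scala DSL used in this blog.

```scala
package simulations

import scala.concurrent.duration._

import io.gatling.core.Predef._
import io.gatling.http.Predef._

// Hypothetical class name; the endpoint is the same one used in the script above.
class RampedApiSimulation extends Simulation {

  // HTTP configuration: base URL plus a header shared by every request
  val httpConf = http
    .baseUrl("https://reqres.in")
    .header("Accept", "application/json")

  // Scenario: one GET request with a status check
  val scn = scenario("get user")
    .exec(
      http("get user request")
        .get("/api/users/2")
        .check(status.is(200))
    )

  // Setup: ramp 10 users over 10 seconds (illustrative numbers) and fail the run
  // if any response takes 2000 ms or more, or if 95% or fewer of the requests succeed
  setUp(scn.inject(rampUsers(10).during(10.seconds)))
    .protocols(httpConf)
    .assertions(
      global.responseTime.max.lt(2000),
      global.successfulRequests.percent.gt(95)
    )
}
```

Assertions are evaluated at the end of the run, so a failed threshold shows up in the console summary rather than stopping the test midway.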

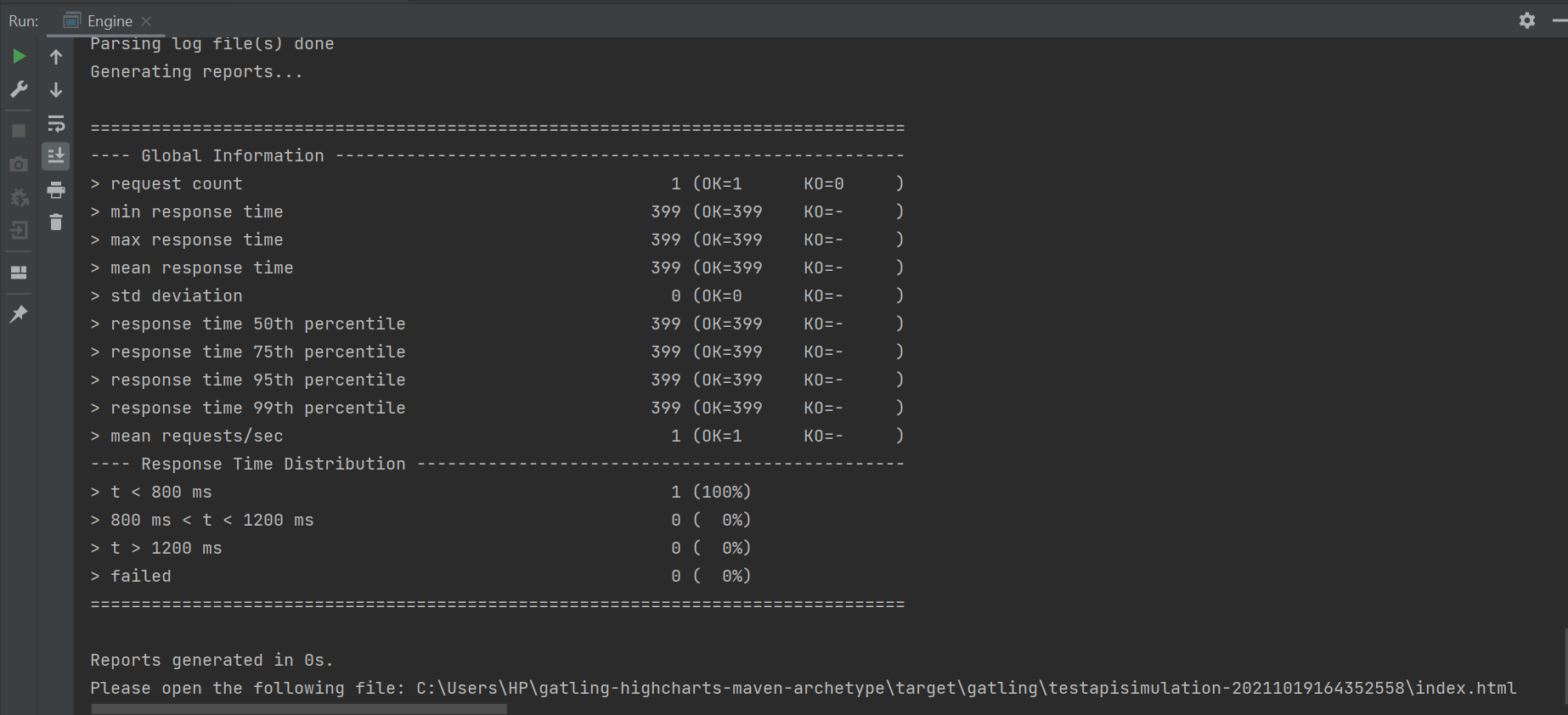

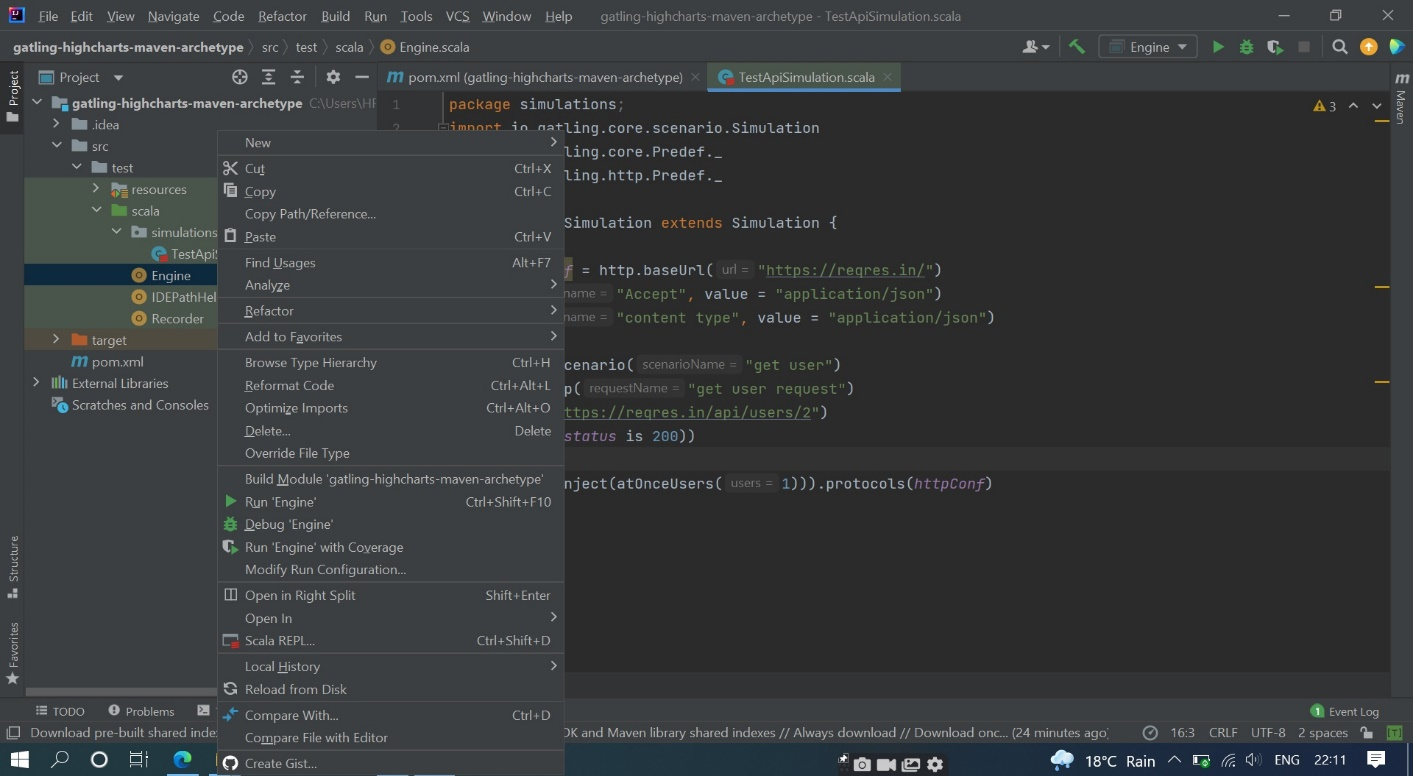

Once the script is ready, you can run it by

- Right-clicking on the ‘Engine’ class

- Clicking ‘Run Engine’

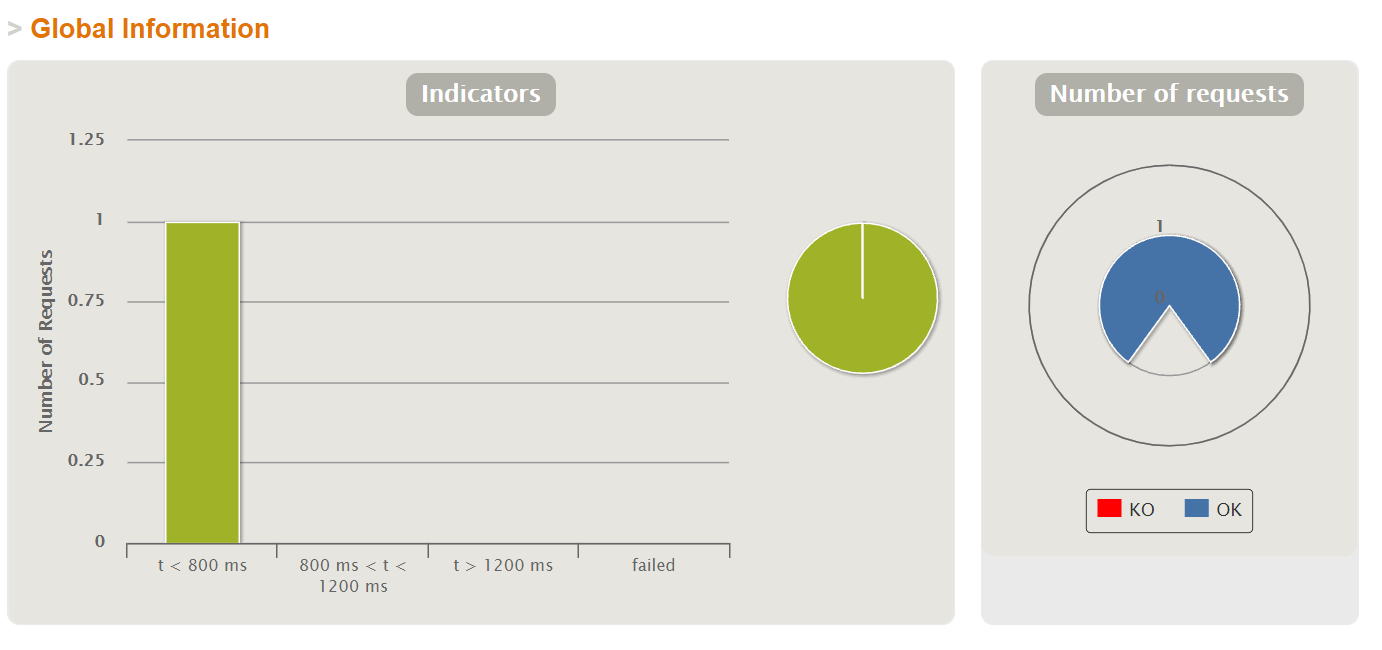

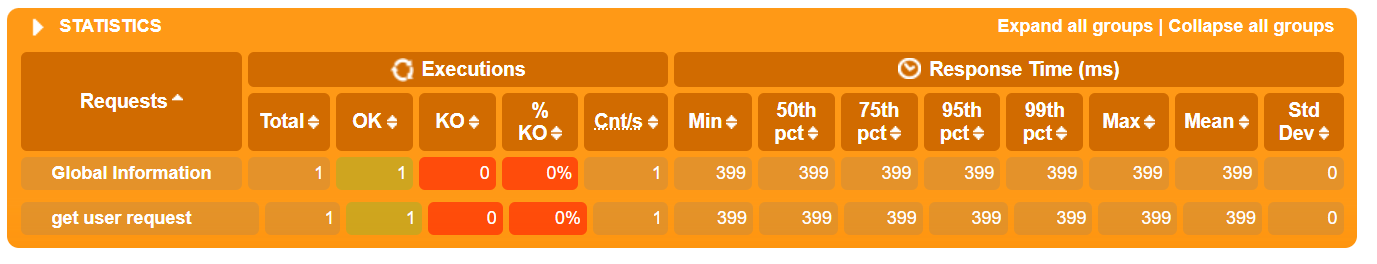

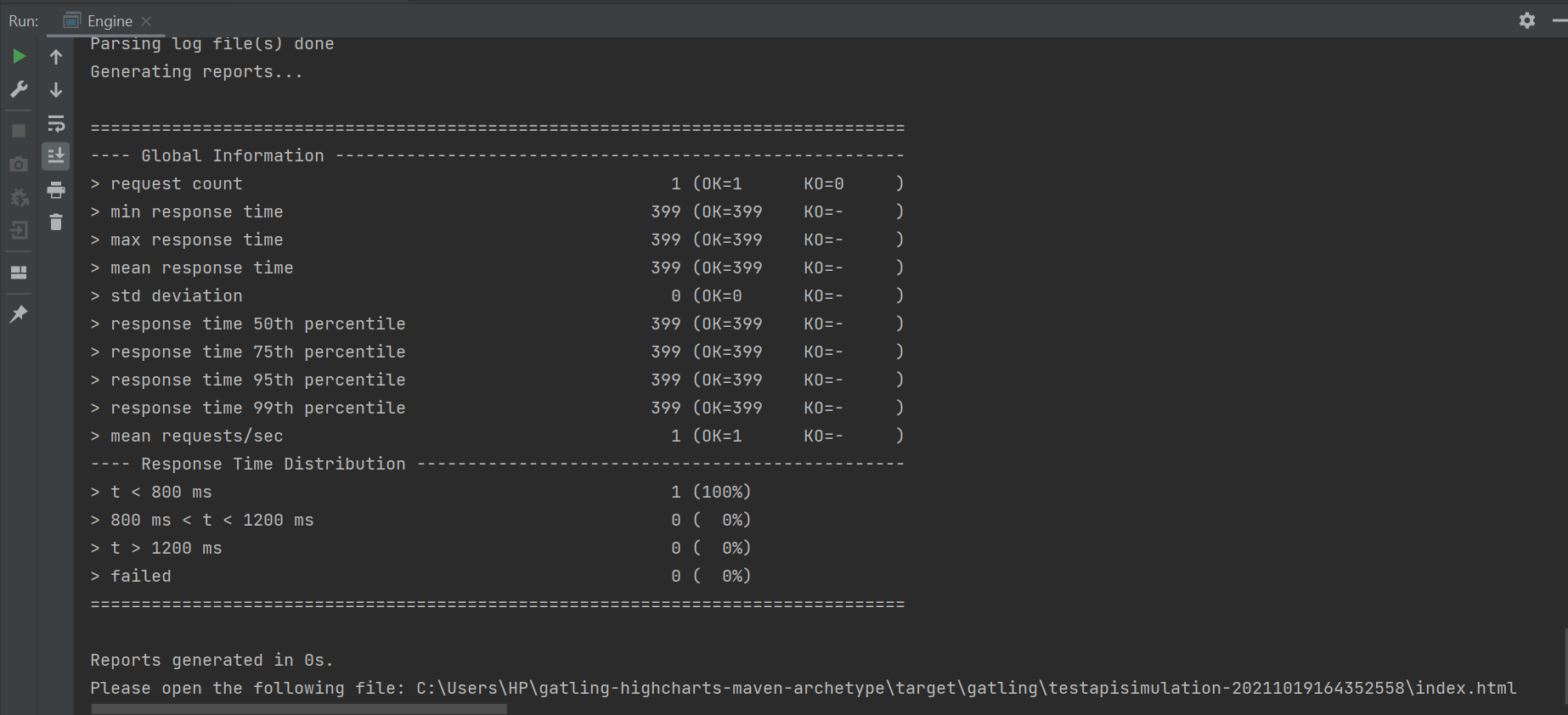

After you click on ‘Run Engine’, you will see the script run and you will be asked to enter a description of the run in the console. Once you have entered the description, you will get the output in the console along with the report link. To view the report, just copy the report link and paste it into any browser.

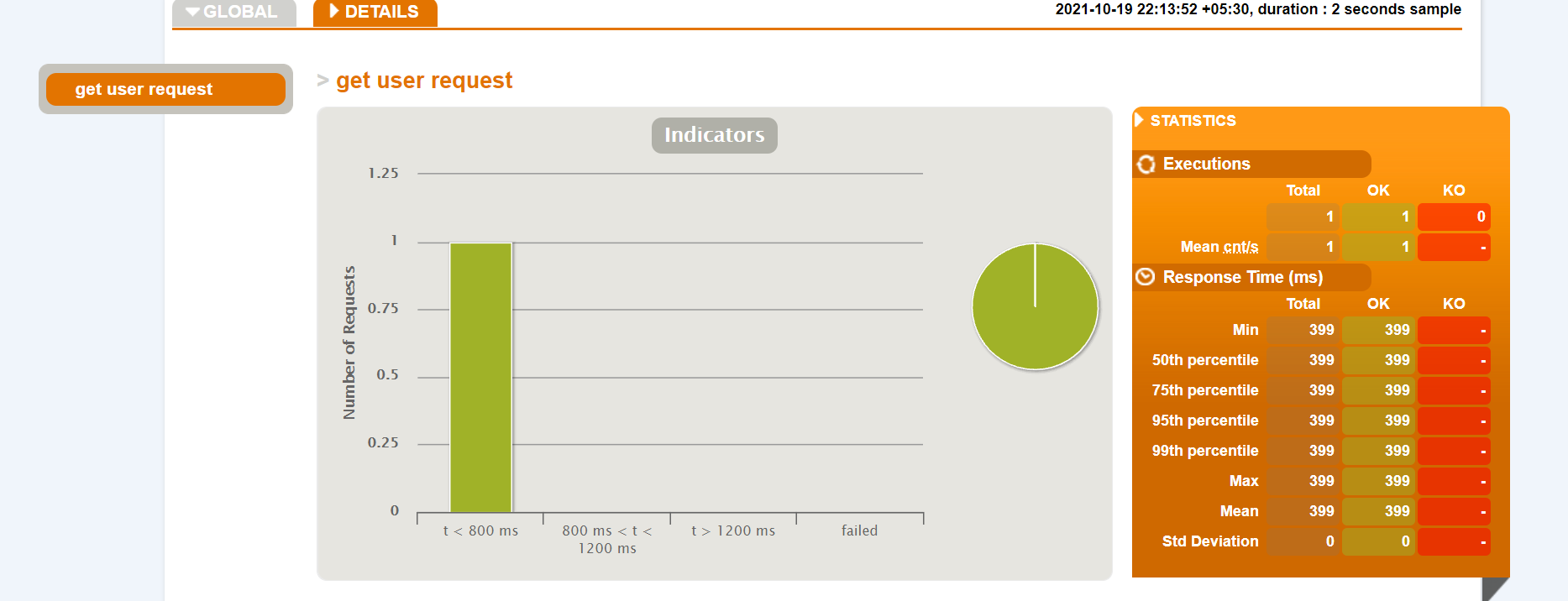

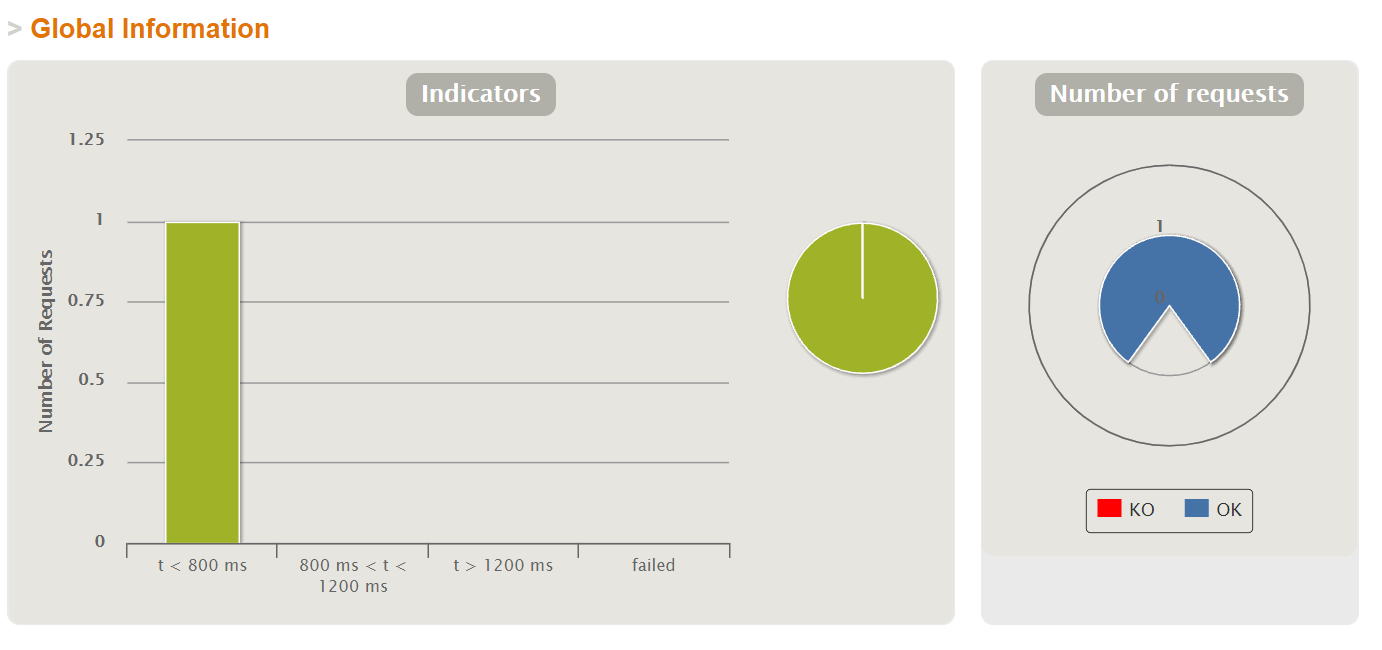

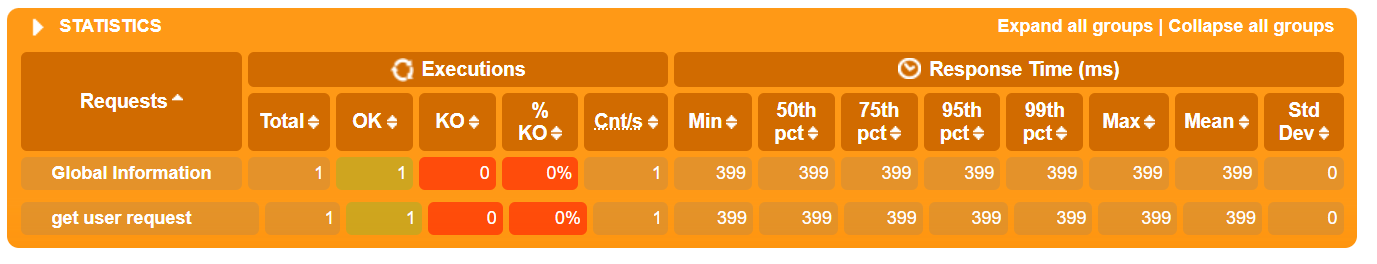

Global Information Results

Statistics Report

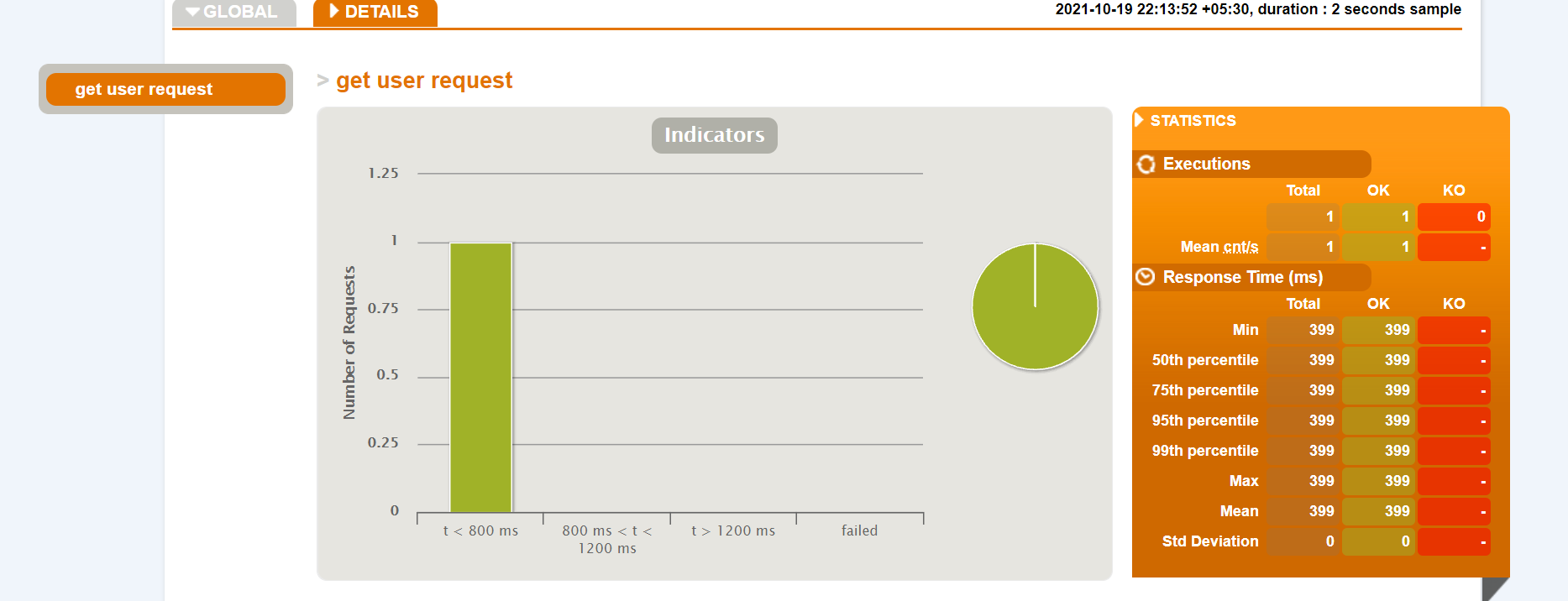

Detail Report

Conclusion:

We hope you enjoyed reading our blog while learning how to install & use Gatling to load test an HTTP server and also how to create your own maven project. Using a graphical user interface, we can record a simulation based on a defined scenario, and once the recording is complete, we’ll be good to start our test.

by admin | Nov 26, 2020 | Performance Testing, Fixed, Blog |

Load testing is one type of performance testing. The typical load testing process is: design the test, execute it, analyze the report, fix the performance bottlenecks, and re-run the scripts. To identify responsiveness and stability issues, you need to use different kinds of loads.

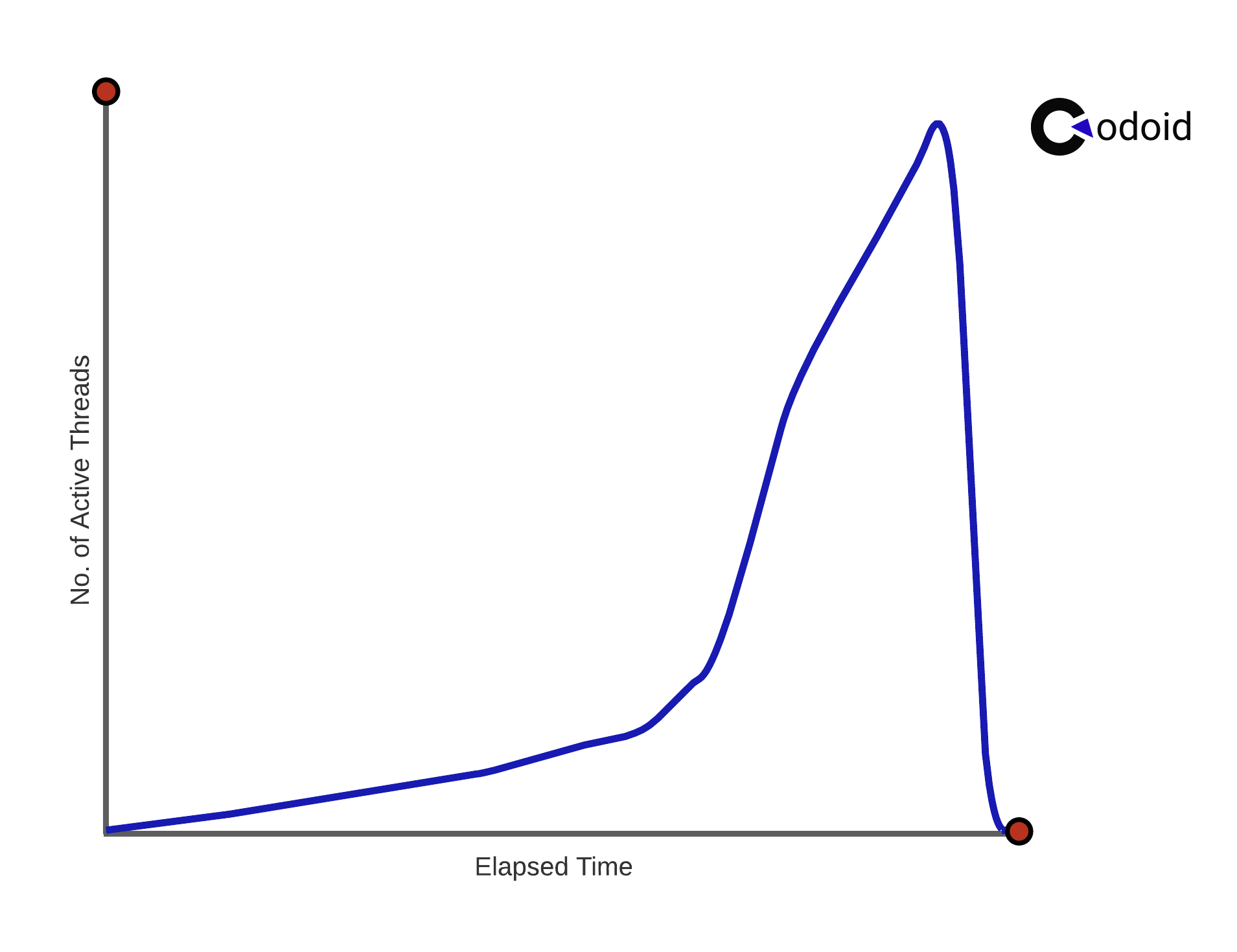

Every load test involves two parameters: the number of virtual users and the test duration. The goal is to load the targeted number of users within the stipulated time.

In this blog article, you will learn how to ramp up users using different load test strategies.

Basic Load

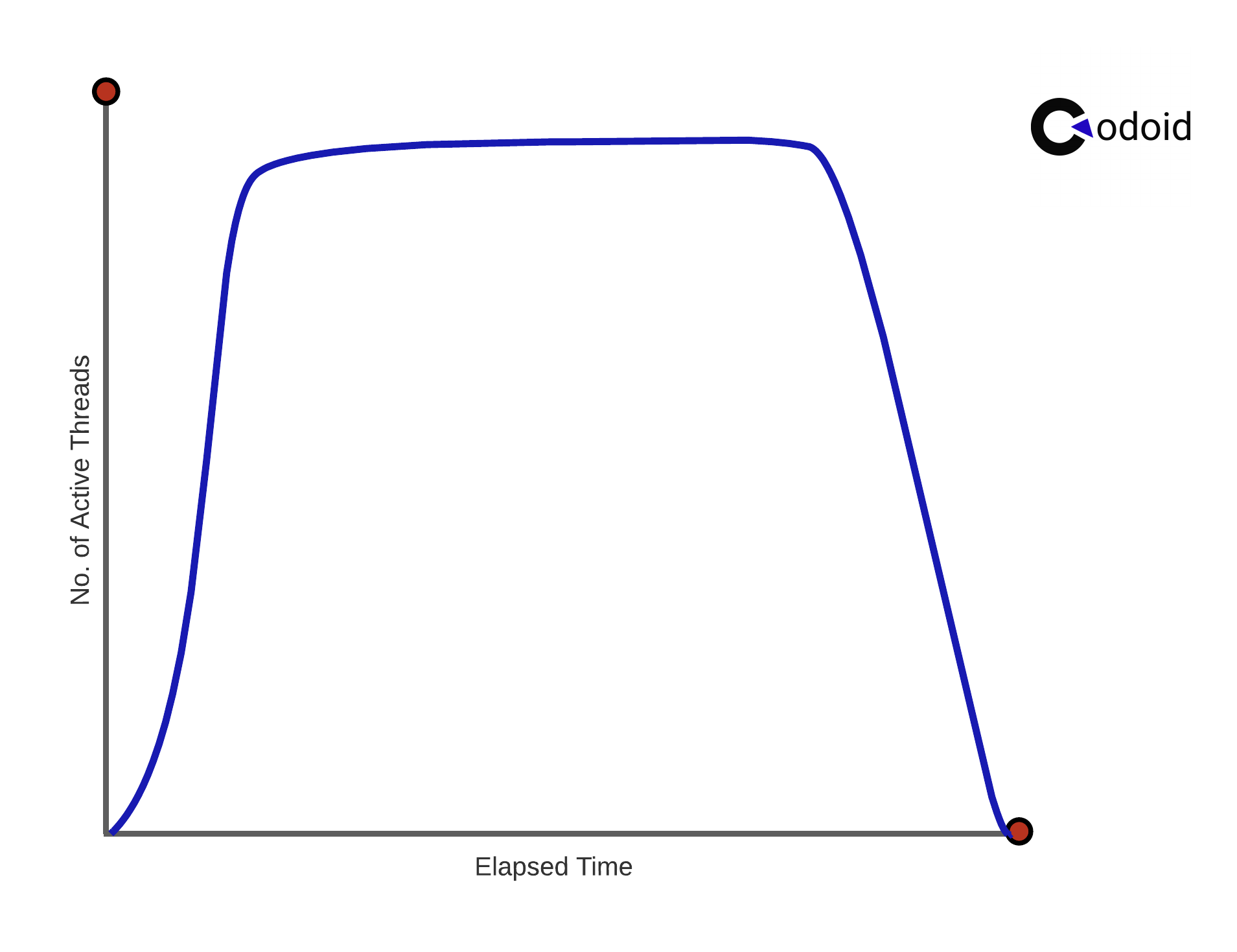

In this type, you ramp up all the users quickly and see how the application behaves under the concurrent user load.

For example: Let’s say you have planned for 2000 users and a two-hour test duration. All 2000 users will be ramped up in 120 seconds, and the test will run for 2 hours with a load of 2000 concurrent users.
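The arithmetic of this example can be sketched in a few lines of Scala. This is only an illustration of the load shape, not a load generator: the object and function names are hypothetical, the ramp is assumed to be linear, and the two-hour hold is assumed to start after the ramp-up finishes.

```scala
// Basic load: ramp all users up quickly, then hold the full load.
// Figures come from the example above: 2000 users, 120 s ramp-up, 2 h hold.
object BasicLoad {
  val targetUsers = 2000
  val rampUpSeconds = 120
  val holdSeconds = 2 * 60 * 60

  // Planned number of concurrent users at second t (linear ramp assumed).
  def usersAt(t: Int): Int =
    if (t < 0 || t > rampUpSeconds + holdSeconds) 0
    else if (t < rampUpSeconds) targetUsers * t / rampUpSeconds
    else targetUsers
}
```

Halfway through the ramp, `BasicLoad.usersAt(60)` gives 1000 users, and from second 120 onward the plan stays at 2000 until the test ends.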

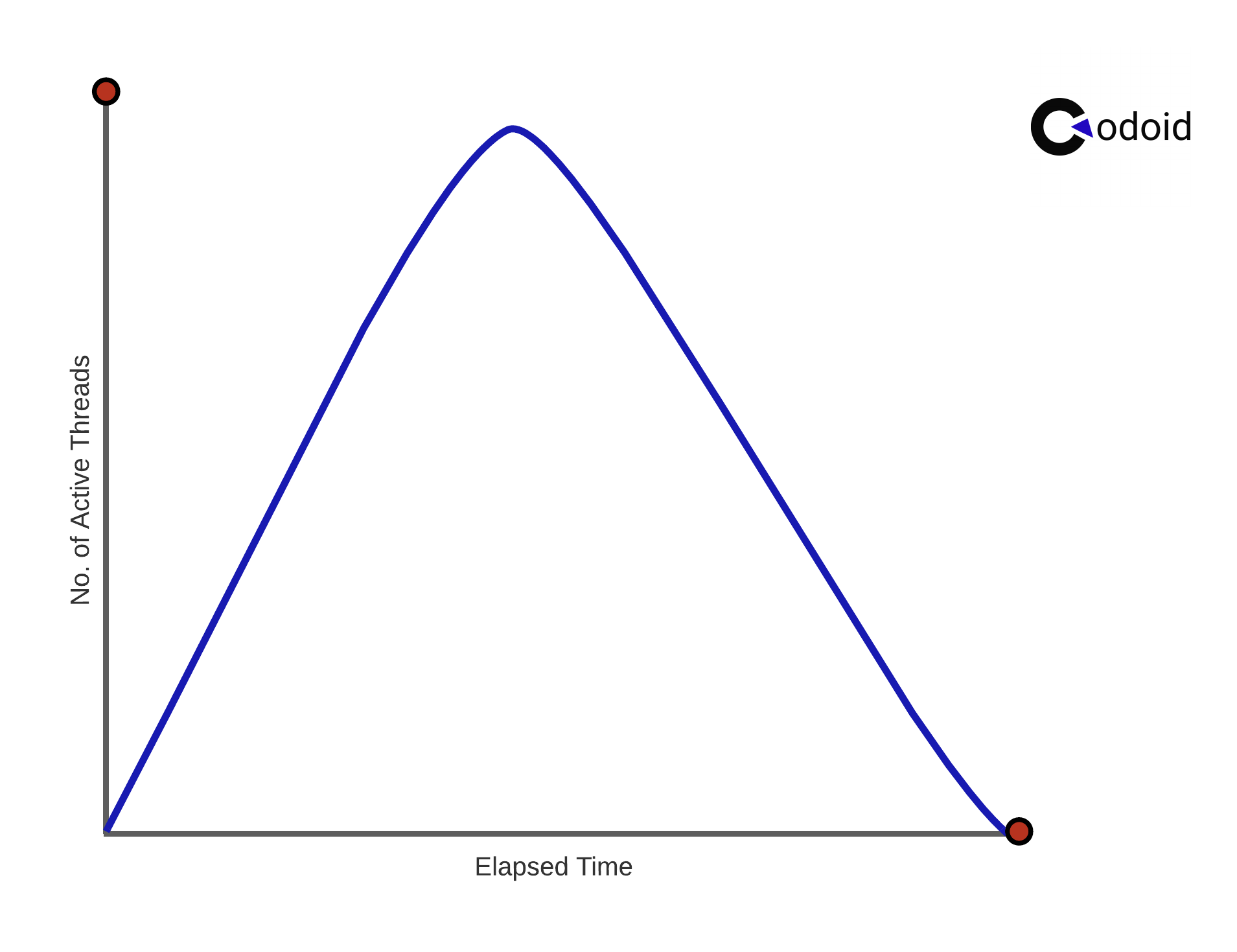

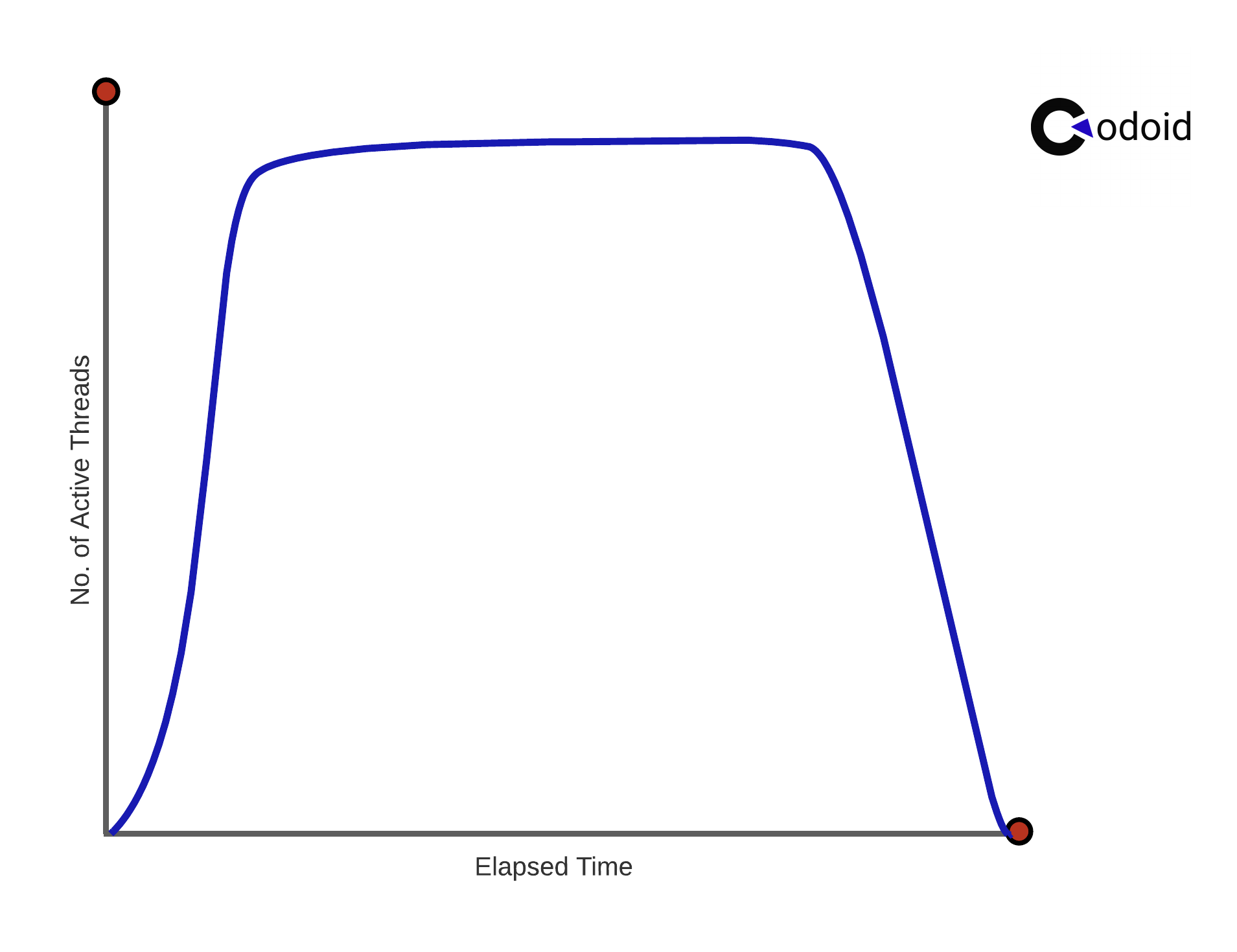

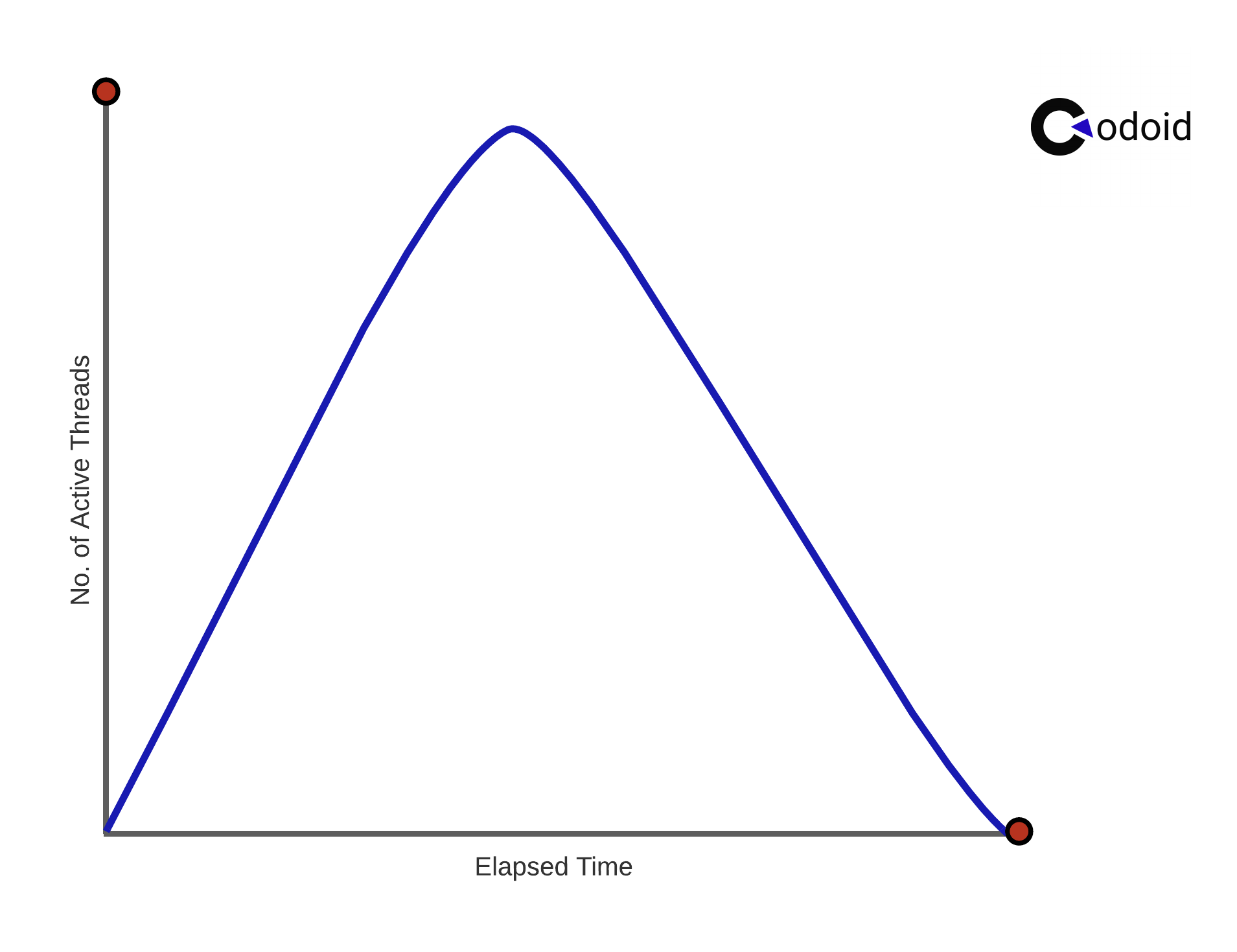

Ramp-up and Ramp-down Load

In this load type, you ramp up and ramp down gradually. Instead of loading all the users quickly, you load them slowly and see how resources are utilized.
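A gradual ramp-up and ramp-down forms a trapezoid when plotted over time. The sketch below computes that shape; every figure in it (1000 peak users, 10-minute ramps, 30-minute hold) is a hypothetical value chosen for illustration, and linear ramps are assumed.

```scala
// Ramp-up and ramp-down load: a trapezoid-shaped profile.
object RampUpDownLoad {
  // Hypothetical figures for illustration only.
  val peakUsers = 1000
  val rampUpSeconds = 600
  val holdSeconds = 1800
  val rampDownSeconds = 600

  // Planned number of concurrent users at second t.
  def usersAt(t: Int): Int = {
    val holdEnd = rampUpSeconds + holdSeconds
    val end = holdEnd + rampDownSeconds
    if (t <= 0 || t >= end) 0
    else if (t < rampUpSeconds) peakUsers * t / rampUpSeconds   // climbing
    else if (t <= holdEnd) peakUsers                            // steady state
    else peakUsers * (end - t) / rampDownSeconds                // descending
  }
}
```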

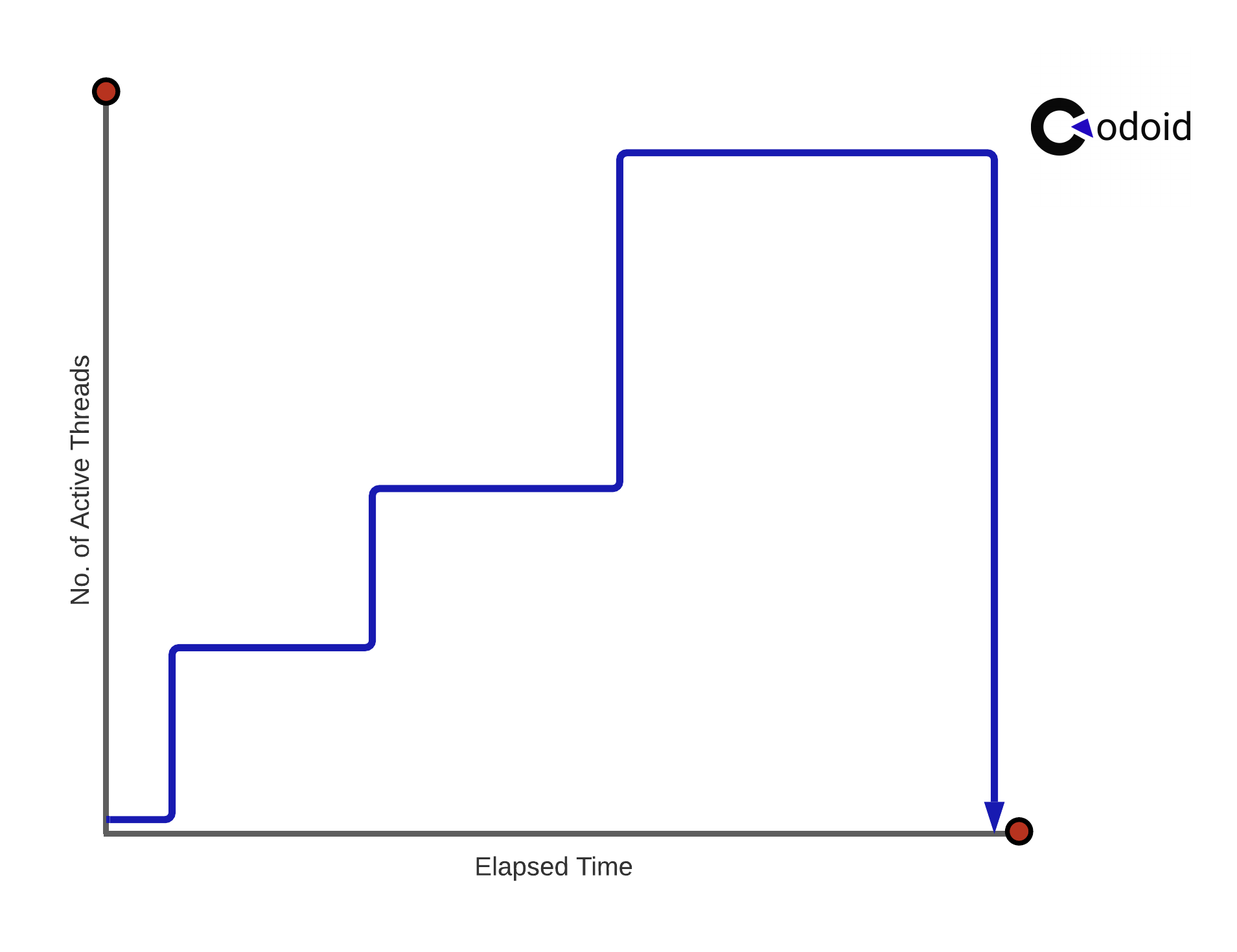

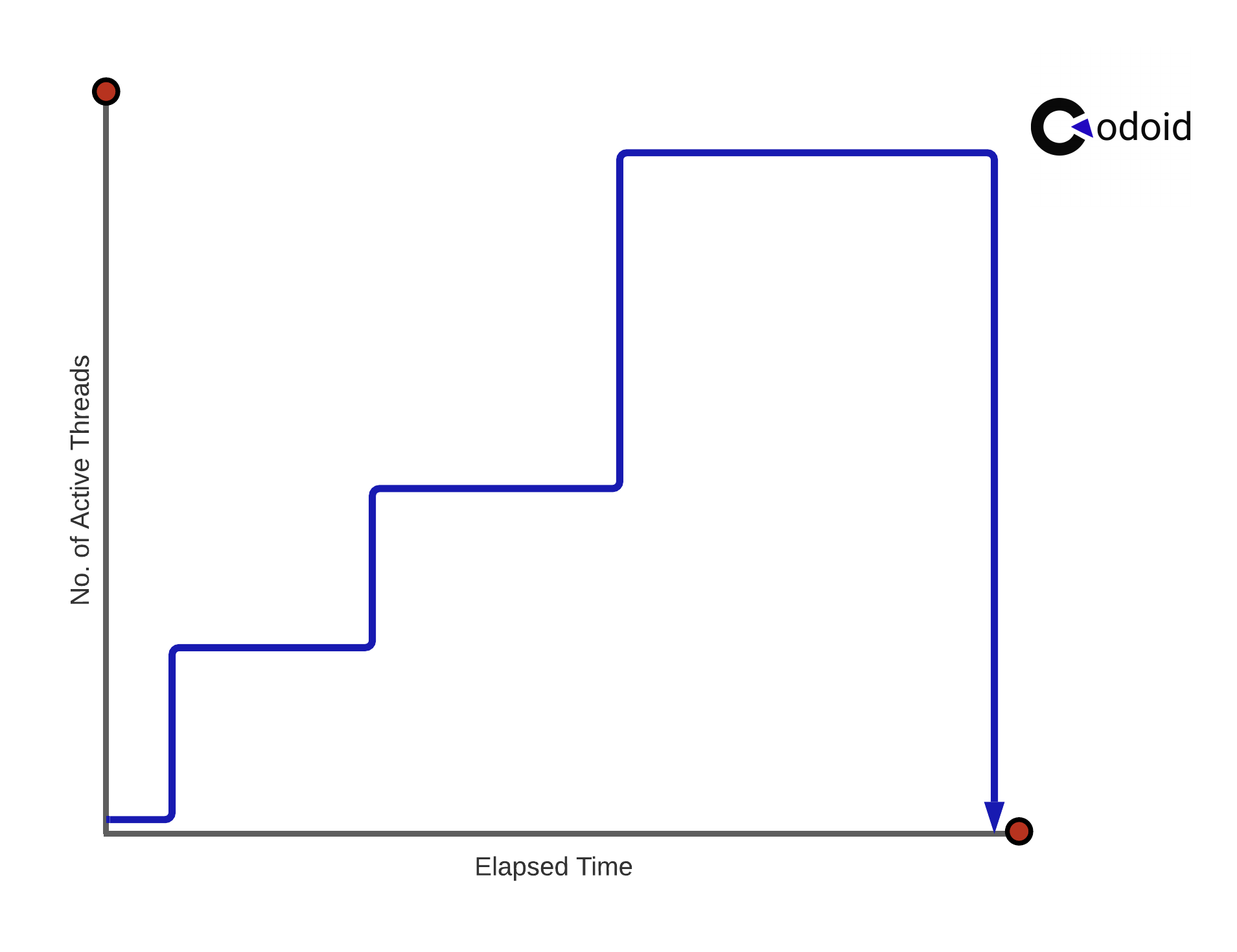

Multi-Step Load

The multi-step load is well suited to cases where end users visit the web application from different time zones. A sudden increase in load may not help you identify the performance bottlenecks. A multi-step load adds a set of users at a given interval instead of loading all the users in one go.
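The step schedule can be written down as simple arithmetic. In the sketch below the step size, interval, and target (100 users every 5 minutes up to 500) are hypothetical values, and the first step is assumed to start at second 0.

```scala
// Multi-step load: add a fixed batch of users at a fixed interval.
object MultiStepLoad {
  // Hypothetical figures: add 100 users every 5 minutes until 500 are running.
  val usersPerStep = 100
  val stepIntervalSeconds = 300
  val targetUsers = 500

  // Planned number of concurrent users at second t.
  def usersAt(t: Int): Int =
    if (t < 0) 0
    else math.min(targetUsers, usersPerStep * (t / stepIntervalSeconds + 1))

  // Start time in seconds and cumulative user count of each step.
  def steps: Seq[(Int, Int)] =
    (1 to targetUsers / usersPerStep).map(i => ((i - 1) * stepIntervalSeconds, i * usersPerStep))
}
```

Listing the steps up front makes it easy to line up a response-time graph against the moments when each batch of users joined.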

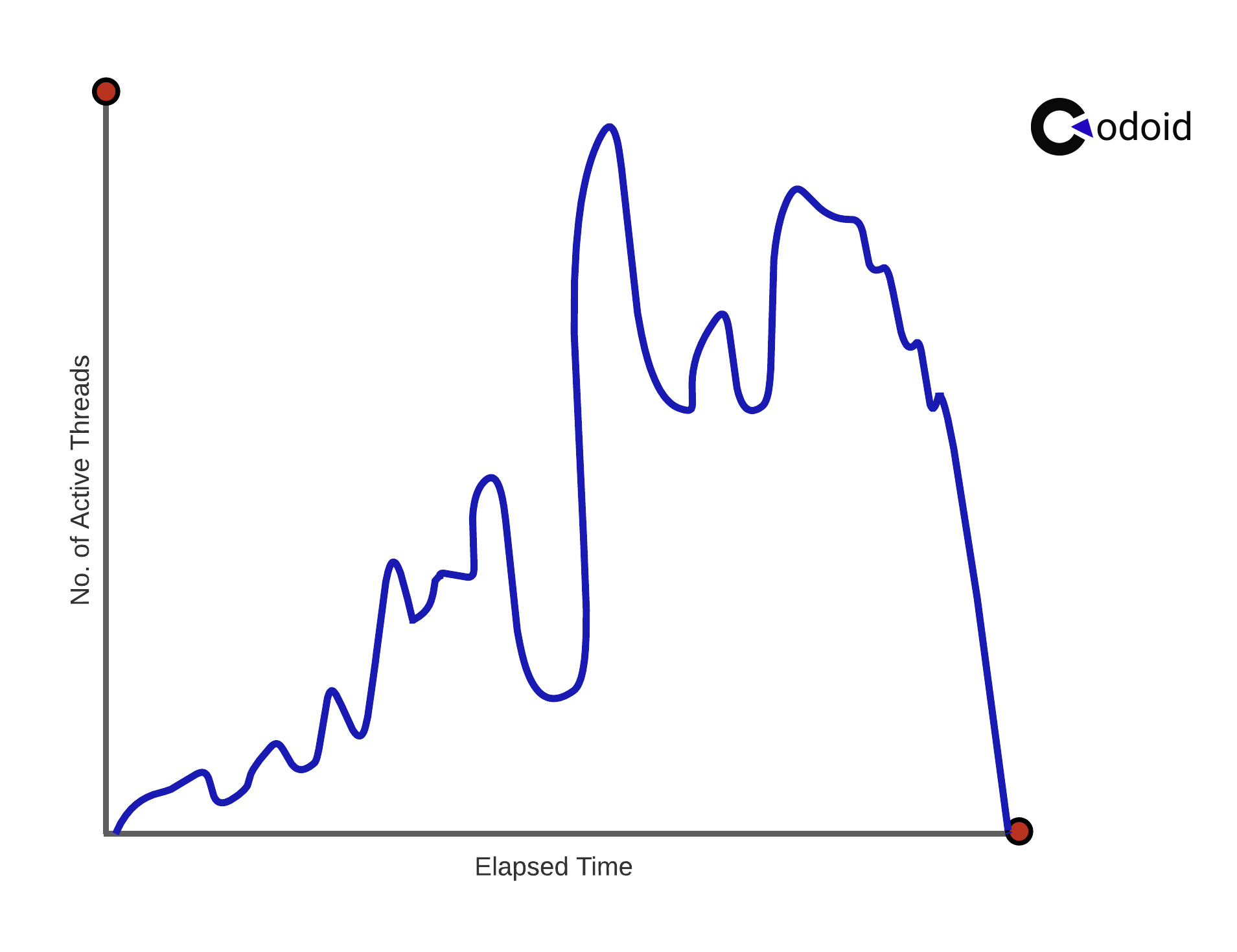

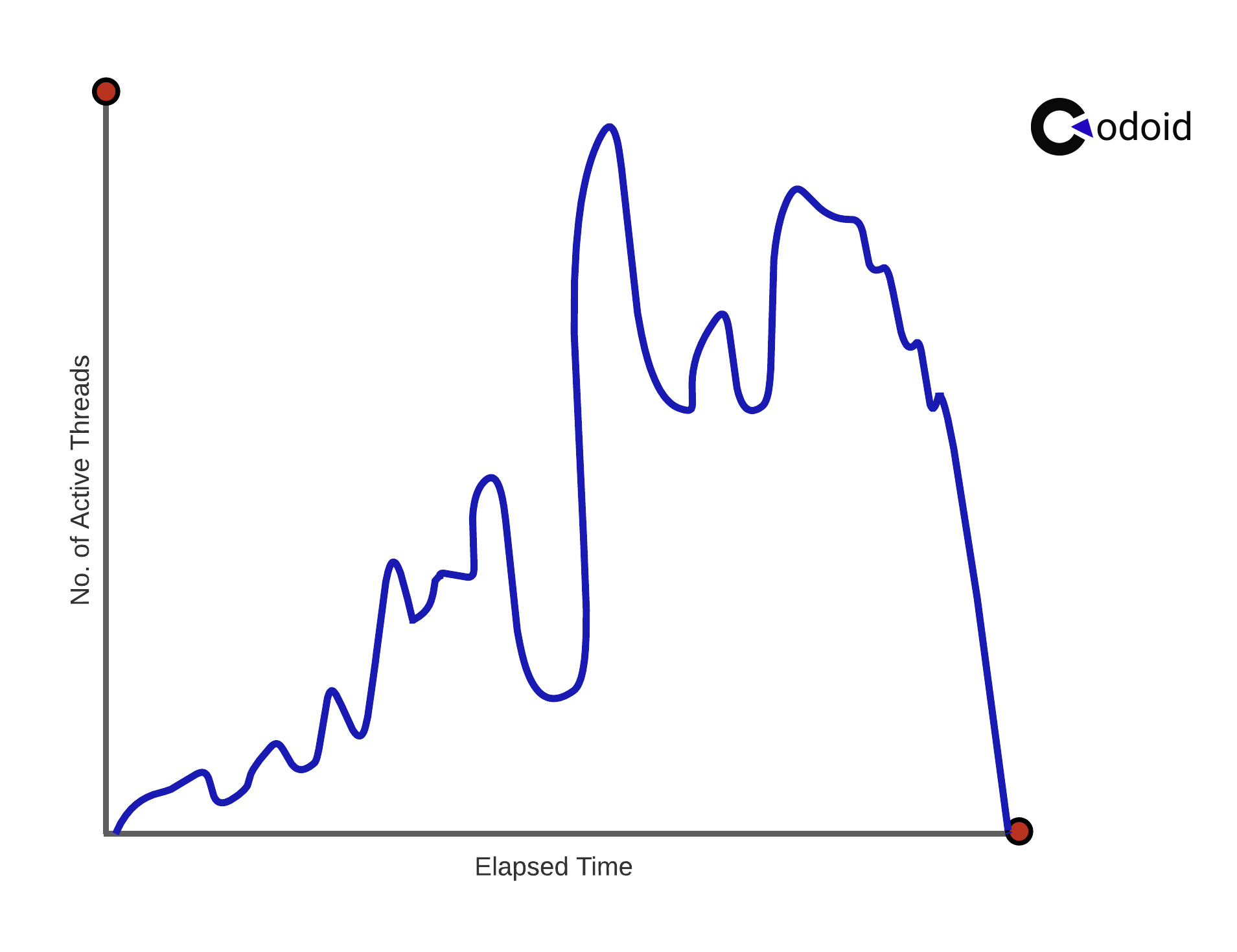

Random Load

Sometimes it is impossible to predict the user load. In that case, we recommend adding a random load test so that you can analyze how the application reacts to a fluctuating load.
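One way to plan a random load is to draw a user count for each interval from a seeded random generator, so that the "random" profile can be replayed after a fix. The sketch below assumes that approach; the function name and parameters are hypothetical.

```scala
import scala.util.Random

// Random load: one user count per interval, drawn between two bounds.
object RandomLoad {
  // A fixed seed keeps the profile repeatable between runs.
  def profile(intervals: Int, minUsers: Int, maxUsers: Int, seed: Long): Seq[Int] = {
    require(intervals >= 0, "intervals must not be negative")
    require(minUsers >= 0 && maxUsers >= minUsers, "invalid user bounds")
    val rng = new Random(seed)
    // nextInt(n) returns a value in [0, n), so adding 1 makes maxUsers reachable.
    Seq.fill(intervals)(minUsers + rng.nextInt(maxUsers - minUsers + 1))
  }
}
```

For example, `RandomLoad.profile(12, 50, 500, 42L)` yields twelve user counts between 50 and 500, and calling it again with the same seed returns the same sequence.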

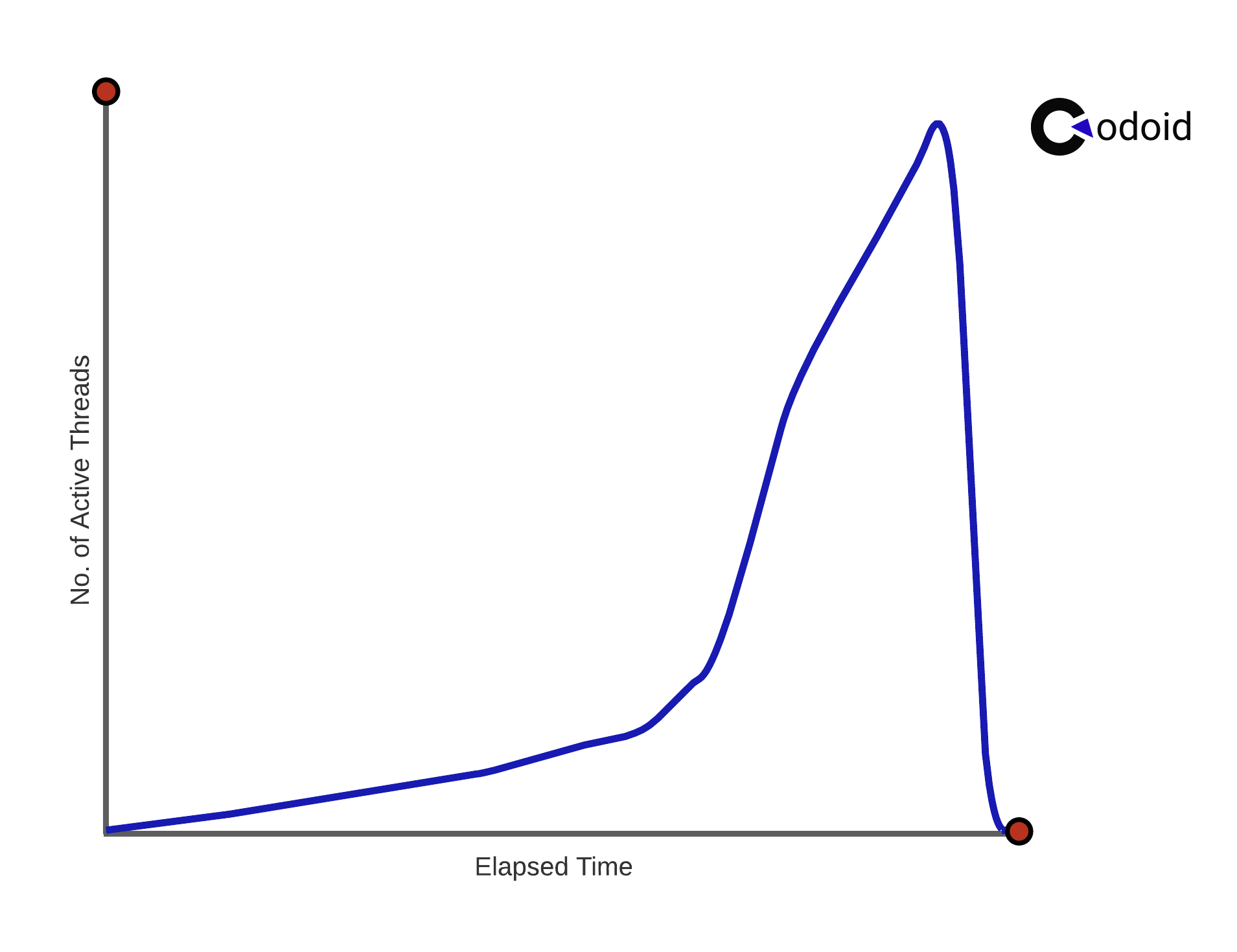

Exponential Load

In this test, you load a small set of users for a while and then suddenly increase the load exponentially to test how the system scales when the load grows very quickly.
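An exponential profile can be sketched as a baseline followed by repeated doubling. The figures below (10 users for 5 minutes, then doubling every minute up to a cap of 2000) are hypothetical, and doubling is just one possible growth factor.

```scala
// Exponential load: hold a small baseline, then double at a fixed interval.
object ExponentialLoad {
  // Hypothetical figures for illustration only.
  val baselineUsers = 10
  val baselineSeconds = 300
  val doublingIntervalSeconds = 60
  val maxUsers = 2000

  // Planned number of concurrent users at second t.
  def usersAt(t: Int): Int =
    if (t < 0) 0
    else if (t < baselineSeconds) baselineUsers
    else {
      val doublings = (t - baselineSeconds) / doublingIntervalSeconds + 1
      // Cap the shift so the intermediate value cannot overflow.
      math.min(maxUsers.toLong, baselineUsers.toLong << math.min(doublings, 30)).toInt
    }
}
```

With these numbers the plan goes 10, 20, 40, 80 and so on, reaching the 2000-user cap seven minutes after the baseline ends.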

In Conclusion

Choosing the right load strategy will help you to identify the performance issues before releasing the system/app to the end-users. As one of the best QA companies, we create a concrete performance testing plan after gathering requirements from our clients.

Testing the responsiveness and stability of your application is vital to avoid unexpected issues. If your application is not responding, the user may never visit again. Performance testing is expensive. You need an expert team to accomplish the goals accurately. Contact us for your Load Testing needs.

by admin | Feb 27, 2020 | Performance Testing, Fixed, Blog |

This blog mainly covers performance testing and its strategy. Performance testing helps the business understand the stability and reliability of an application under stringent conditions.

What is Performance Testing?

In layman’s terms, a performance test is conducted mainly to observe the behavior of an application hosted on a server under various loads. The goal is not to validate the functional flow and find bugs that way, but to test a mature application under critical load, volume, and stress conditions to verify that the set benchmark parameters are satisfied before the application goes live.

Types of Performance Testing

Among the levels of testing, performance testing is classified under non-functional testing, given the nature of the test. It is further categorized into the types listed below.

Load testing

This test determines the application’s behavior under a certain load. Load can be expressed, for example, as the number of user hits per second or the number of transactions. The goal is to verify how the application performs when there is a load on the server.

Stress Testing

Unlike load testing, this is done by injecting a load higher than the threshold to see how the application behaves. It can tell us the breaking point of the application and how frequently it goes down.

Volume Testing

This test is also called flood testing, as we inject a very large amount of data into the application to see how a queue or any other interface withstands the inflow.

Endurance Testing

This testing is done by subjecting the application to a nominal load but making it operate for much longer than it would in typical field usage. The test indicates the reliability of the application, since we measure how long the application lasts before it fails.

Spike testing

This test is performed by applying a sudden, steep increase in load to see how well the application withstands it.

Scalability testing

This testing is done to verify how the application behaves as the load keeps increasing through the addition of more users and data volume.

Why is performance testing needed?

Whereas functional testing validates what the application does, a performance test is more useful for understanding its reliability parameters. The intention of the test is not just to find defects but to examine the application’s behavior under load. The specification is defined in such a way that it mainly answers the following:

How many concurrent users can log in to the application?

How many transactions should the application process at peak load?

What response time must the application achieve?

There are a few other parameters which are gathered as part of Non-Functional Requirements (NFR) gathering and tested accordingly.
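These targets become easier to verify once they are written down as data. The sketch below compares measured results with a set of NFR targets and lists every violation; the case classes, field names, and message texts are hypothetical, and real NFRs usually carry more parameters than these three.

```scala
// Hypothetical NFR targets and measured results; all names are illustrative.
final case class Nfr(minConcurrentUsers: Int, minPeakTps: Double, maxResponseTimeMs: Long)
final case class Measured(concurrentUsers: Int, peakTps: Double, responseTimeMs: Long)

object NfrCheck {
  // Returns one message per violated requirement; an empty list means the run met the NFRs.
  def violations(nfr: Nfr, m: Measured): List[String] =
    List(
      if (m.concurrentUsers < nfr.minConcurrentUsers)
        Some(s"concurrent users: ${m.concurrentUsers} < ${nfr.minConcurrentUsers}")
      else None,
      if (m.peakTps < nfr.minPeakTps)
        Some(s"peak TPS: ${m.peakTps} < ${nfr.minPeakTps}")
      else None,
      if (m.responseTimeMs > nfr.maxResponseTimeMs)
        Some(s"response time: ${m.responseTimeMs} ms > ${nfr.maxResponseTimeMs} ms")
      else None
    ).flatten
}
```

Collecting all violations, rather than stopping at the first one, gives stakeholders the full picture in a single report.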

Without performance testing, we would not be able to understand the capacity, flexibility, and critical parameters of an application under load, and there is a high chance of failure in the field due to an improper understanding of the benchmark parameters.

Parameters that are validated during test

When any test is conducted, we look for certain parameters as validation elements. In performance testing, these parameters fall into two groups.

Server-side parameters:

CPU utilization

Memory utilization

Disk utilization

Garbage collection

Heap dump / thread pooling

Client-side parameters:

Load

Hits per second

Transactions per second

Response times
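The client-side figures are usually produced by the testing tool, but the underlying arithmetic is simple. The sketch below is a minimal illustration with hypothetical function names: it derives a per-second rate from a count and a duration, and a response-time percentile using the nearest-rank method, which is only one of several percentile definitions in use.

```scala
object ClientMetrics {
  // Hits or transactions per second over the measured window.
  def perSecond(count: Long, durationSeconds: Long): Double = {
    require(durationSeconds > 0, "duration must be positive")
    count.toDouble / durationSeconds
  }

  // Nearest-rank percentile of response times in milliseconds (p between 1 and 100).
  def percentile(responseTimesMs: Seq[Long], p: Int): Long = {
    require(responseTimesMs.nonEmpty, "no samples")
    require(p >= 1 && p <= 100, "p must be between 1 and 100")
    val sorted = responseTimesMs.sorted
    val rank = math.ceil(p * sorted.size / 100.0).toInt
    sorted(rank - 1)
  }
}
```

For ten samples of 100 ms, 200 ms, up to 1000 ms, the 90th percentile by this method is 900 ms, and 1200 transactions in 60 seconds works out to 20 transactions per second.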

Entry & Exit criteria

Like every other type of test, a performance test defines its own entry and exit criteria.

Entry criteria

These define when the application is eligible to undergo a performance test. The following are the key prerequisites:

Functional testing must have been thoroughly conducted

The application must be stable

No major showstoppers may be in an open state

A dedicated non-functional testing (NFT) environment must be available

The required test data should be ready

Exit criteria

These define how and when to wind down the test. The following activities must be completed to give a proper sign-off from a performance test perspective:

The application must have been tested against all the non-functional requirements (NFRs)

No major defects may remain open and no customer use cases may be in a failing state

All the test results should be properly analyzed

Reports and results must be published to all stakeholders for sign-off

Having covered the parameters and basics of performance testing, we will now discuss the performance testing strategy in detail.

Performance Testing Strategy

Below are the key pointers to consider in defining the Performance Testing strategy

Understanding Organization Requirement

Assess the Application nature and category

Take stock of last year’s performance of existing live applications

Defining Scope

Tool identification

Environment readiness

Risk assessment

Results analysis and report preparation

Understanding Organization Requirement

Every organization provides certain services to its customers, and the nature of those services and how customers access them determine how important performance testing is. For example, an e-commerce company relies entirely on customer hit rates and successful transactions. More than 1,000 customers may log in at the same time to buy products, and during an offer sale this can peak at a million concurrent logins. This type of organization needs strong performance testing before going live, so spending on a performance testing tool is unavoidable.

Assess the Application nature and category

Identify the number of applications that are live in that year and assess each application based on its nature: whether it is customer-facing, accessed by back-end users, or accessed by the operations team.

This determines how critical the application is for performance testing. Also, understand the technology it is built with, the number of releases planned, and the criticality of each release. Prepare the list based on the above pointers.

Take stock of last year’s performance of existing live applications

A few applications will have been live for the past few years. Understand the performance challenges they faced and do an impact assessment based on that history. This exercise gives us concrete performance testing requirements for each application.

Defining Scope

Assessing the nature of the application and taking stock of its history tells us what to test. Prepare the non-functional requirements (NFRs); this is the process of coming up with a well-documented test plan for the application. This phase essentially includes identifying non-functional requirements such as those stated below:

Whether the application is intended for many users or for a limited set of users

Identification of key business transactions that are needed to test

Identify key blockers in the past from performance perspective and target to include in the testing scope

Forecasting the future load on certain services and conducting testing as needed

Tool identification

This is essential in the business; the right choice of tool helps manage cost and makes test execution hassle-free. A proper analysis must be done before selecting the tool that best suits the assignment:

Whether the tool is open source or licensed

The selected tool should support any test activity with minimal configuration

The tool should provide good reporting

The tool should have a decent community so that solutions to problems can be found

To expand on the first point, the tool need not always be free. It is a business decision that weighs the high-end features a licensed tool offers and its lower risk of security breaches. However, if the test engineer finds that a free alternative supports the same operations as well as a licensed one, the open-source tool is preferable because it saves cost. Some tools are listed below.

Popular Performance Testing tools are:

JMeter (open source)

LoadRunner (commercial)

Silk Performer (commercial)

NeoLoad (commercial)

Application performance management tools:

AppDynamics

Wily Introscope

PerfMon

nmon

Environment Readiness

This is one of the major considerations as far as the performance test is concerned. Unless there is an isolated environment for non-functional testing (NFT), we cannot rely on the test results and the application’s critical parameters (response time, think time, throughput, etc.). So a dedicated environment is highly recommended, as the tasks mostly relate to the following:

Insert a huge number of transactions in DB through API hit

Perform concurrent login scenario

Observe the response time while navigating between the pages

The environment must be maintained properly and should be highly stable

Risk assessment

On any task, one or more hurdles are likely to appear down the line. We should foresee them during the initial planning discussions; proactively calling them out to all stakeholders helps us come up with a stringent plan and reduce the negative impact on the business.

Risk assessment helps us learn the impact on the business in situations such as:

Depending on the development and environment teams for the support required when the application is broken or down

Going live with a defect that may occur at the customer site

Being unable to test a particular scenario because the infrastructure is unavailable in the lower environments

Testing a feature by taking some deviation

Results analysis and report preparation

For any execution we make, test results are the key as they are the outcome of the effort that is being spent.

After execution, the results generated by the performance testing tool have to be analyzed, the various parameters assessed, and an easily understandable report on the outcome shared with all stakeholders. Tools that capture the results of a test run make it straightforward to extract this information.

In a few instances, performance engineering is also done, going one step deeper to understand where the blockers are and what the solution could be. This activity requires architectural understanding and finding the error-prone areas.

by admin | Dec 8, 2019 | Performance Testing, Fixed, Blog |

Performance testing falls within the wide and interesting gamut of software testing, and the aim of this form of testing is to ascertain how the system functions under defined load conditions. This form of testing focuses on the performance of the system based on standards and benchmarks, and is not about uncovering defects / bugs. As a leading Performance Testing Services Company we understand the importance of this type of testing in providing problem solving information to help with removing system blocks. Test execution and result analysis are the two sub-phases within the performance test plan, which encompasses comprehensive information of all the tests that would be executed (as mentioned below) within performance testing.

Defining Test Execution within Performance Testing

Test execution within performance testing denotes testing with the help of performance testing tools, and includes tests such as load test, soak test, stress test, spike test and others. Several activities are performed within test execution: implementing the pre-determined performance test, evaluating the test result, verifying the result against the predefined non-functional requirements (NFRs), preparing a provisional performance test report, and finally deciding whether to end or repeat the test cycle based on the provisional report.

Our team has years of experience in performance testing services, and includes experts who consistently execute tests within the timelines specified for test completion. A structured approach is quintessential to the success of test execution within performance testing. Once the tests are underway, the testers must consistently study the stats and graphs that figure on the live monitors of the testing tool being used.

It is important that the testers pay close attention to performance metrics such as the number of active users, transactions, and hits per second. Metrics such as throughput and error count and type also need to be closely monitored, while examining the ‘behavior’ of users against a pre-determined workload. Finally, the test must halt properly and the test results should be collated in the designated location. After test execution completes, testers gather the results and commence result analysis, a post-execution task. Testers need to understand the importance of a structured analysis of the test result, the second sub-phase of the performance test plan.

Methodology for Result Analysis

Result analysis is an important and more technical portion of performance testing. This is the task of experts who would need to assess the bottlenecks and the best options for alleviating the problems. The solutions would need to be applied at the appropriate level of the software to optimize the effect. It is also essential for testers to check certain points before starting the test result analysis: ensure the tests ran for the pre-determined period, remove the ramp-up and ramp-down durations, confirm that no errors specific to the tool are present (load generator, memory issues, and others), ensure that there are no network-related issues, and verify that the testing tool collected the results from all load generators and that a consolidated test report is prepared. Further, the data must be granular enough to identify the right peaks and lows, and the testers must make a note of the utilization percentage of the CPU and memory before, during, and after the test. Filter options must be used and any transactions that are not required must be eliminated.
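Removing the ramp-up and ramp-down durations can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the output format of any particular tool: the `Sample` record, its field names, and the `steady_state` helper are hypothetical, and timestamps are assumed to be seconds since the start of the test.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    timestamp: float   # seconds since the test started (assumed unit)
    elapsed_ms: float  # response time of the request
    success: bool      # whether the request passed


def steady_state(samples, test_duration, ramp_up, ramp_down):
    """Keep only the samples recorded between ramp-up and ramp-down."""
    start = ramp_up
    end = test_duration - ramp_down
    if start >= end:
        raise ValueError("ramp periods leave no steady-state window")
    return [s for s in samples if start <= s.timestamp <= end]


if __name__ == "__main__":
    raw = [Sample(t, 120.0, True) for t in (0, 30, 60, 300, 570, 590)]
    kept = steady_state(raw, test_duration=600, ramp_up=60, ramp_down=60)
    print([s.timestamp for s in kept])
```

Running the analysis only on the filtered samples keeps the averages from being skewed by the periods in which users are still being added or removed.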

Testers must use some basic metrics to begin the result analysis: number of users, response time, transactions per second, throughput, error count, and the count of passed transactions (for the first and last transactions). Testers must also analyze the graphs and other reports in order to ensure accurate result analysis.

As leaders in performance testing within the realm of software testing services, we believe there are some tips and practices that, when implemented, enhance the quality of test execution and result analysis.

- Generate individual test reports for each test

- Use a pre-determined and set template for the test report and for report generation

- Accurate observations (including root cause) and listing of the defects (description and ID) must be part of the test report

- All relevant reports (AWR, heap dump analysis and others) must be attached with the provisional test report

- Conclude the result with a Pass or Fail status
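Concluding with a Pass or Fail status amounts to comparing the measured values with the predefined NFRs. The sketch below shows one way to do that in Python; the metric names, the threshold values, and the `evaluate` helper are illustrative assumptions rather than a standard format.

```python
def evaluate(measured, nfrs):
    """Compare measured metrics with NFR limits and return (status, failures).

    `nfrs` maps a metric name to ("max", limit) or ("min", limit).
    """
    failures = []
    for name, (kind, limit) in nfrs.items():
        if kind not in ("max", "min"):
            raise ValueError(f"unknown limit kind: {kind}")
        value = measured[name]
        ok = value <= limit if kind == "max" else value >= limit
        if not ok:
            failures.append(f"{name}: measured {value}, required {kind} {limit}")
    return ("Pass" if not failures else "Fail", failures)


if __name__ == "__main__":
    # Hypothetical NFRs for illustration only.
    nfrs = {
        "avg_response_ms": ("max", 2000),
        "error_rate_pct": ("max", 1.0),
        "throughput_tps": ("min", 50),
    }
    measured = {"avg_response_ms": 2500, "error_rate_pct": 0.2, "throughput_tps": 75}
    status, failures = evaluate(measured, nfrs)
    print(status)
    for line in failures:
        print(" ", line)
```

Listing each violated requirement next to the status gives the report the observations it needs alongside the verdict.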

A successful digital strategy is one where there is speedy and reliable software that is released fast into the market. Being able to create such software with the help of a structured performance testing process is the edge that a business would gain. We at Codoid are leaders in this realm and more, since our testing methods are designed by experts who understand the importance of consistently superior load and performance testing. Connect with us to accelerate the cause of your business through highest quality software – we ensure it.

by admin | Oct 8, 2019 | Performance Testing, Fixed, Blog |

When customers walk into a brick-and-mortar store, they expect to be greeted warmly and to enter clean and well-stocked premises. Most businesses also have an online presence, and the website serves as the store in the digital world: it is the face of the business. A good website is one that allows speedy and easy navigation and ensures that customers remain happy with their experience. Customer happiness is directly tied to the ROI of any business, and there is a well-established connection between the loading speed of a website and gaining new customers and retaining existing ones. Fast-loading pages will keep visitors and customers coming back, and they would be more likely to conduct business on the site. However, as a company excelling at Performance Testing Services, we know the converse of this truth as well. A slow-loading site is one of the top reasons for customers and visitors to skip a website and abandon shopping carts midway. Customers expect websites to load within a few seconds, and if a business expects them to remain engaged, that expectation must be met.

We at Codoid consistently help clients keep a strict watch on how their website functions and performs, and ensure that all functionalities and features run optimally, even under load conditions. Every business experiences peak loads at times, such as during holidays, major announcements or events. It is necessary for a business to know the peak load its website can withstand; otherwise the site may go down, leaving customers furious. When existing and new customers are unable to access services and products, a slump in revenue and reputation is the expected outcome. Keep your business safe from these risks – speak with our experts.

The Importance and Best Practices of Load Testing

Load testing is a critical method that helps to alleviate risk. A fast-loading site is a great way to ensure customer happiness, bring peace of mind for the stakeholders, and add significantly to the bottom line of a business. We, therefore, put forth some of the best practices that we as a leading performance testing company follow, and apply to the projects entrusted to us.

Understanding Business Goals: We help businesses understand the correlation between their goals and the testing environment, such that the right aspects of the application are tested to ensure performance under load. Businesses must pay attention to the user experiences that would be the drivers for engagement, revenue, advertising, and other such metrics, and know what expectations users have that would accelerate these metrics.

Understanding Web and Application Key Performance Indicators: Knowing business objectives would not suffice without establishing some indicators of performance, so that real-time performance can be compared with expected performance. Some of the KPIs would be response time, the number of requests the system processes per second, and utilization of resources (memory, CPU consumption and other resources).

Selecting the Right Tool for Load Testing: Since the success of load testing depends on the kind of tool and methodology, your business would need the best-in-class partner to deliver proactive service. It is important to understand the possible issues even before they affect performance. This will ensure an optimally functioning website and, ultimately, happy customers who consistently do business and spread the word to others, which would create more customers.

Putting Together the Best Test Case: A test case that simulates user behavior in the real world will be best equipped to predict the performance of the website under a similar load. Experts create these test cases with virtual users on emulators or even physical devices.

Ascertaining the Load Environment: The premise of load testing is a close replication of the production environment. We understand that a test environment can never exactly duplicate the production environment, but it is important to get as close as possible. It is necessary for a business to understand the limits of the hardware and through that ascertain the possible defects before they occur.

Load Tests to be Run Gradually: It is never wise or feasible to test everything at once. Starting with a smaller number of distributed users is a better way to identify any problems and the breakpoint of the system. After running the first set of tests, it is necessary to check the results and remedy any defects or obstacles before moving to the next set of testing scenarios.

Load Testing is Best Done from the End User Perspective: The entire objective of load testing is to ensure that the end user or customer is happy with their experience when using the website or application of a company. Load testing must revolve around the aim of elevating these experiences, such that customers remain engaged and continue to do business with the company, thereby maintaining a good bottom line.
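The practice of running load tests gradually can be expressed as a stepped plan, where each step adds users and is held long enough to review the results before the next one. The following Python sketch is illustrative only; the `stepped_load` helper and the numbers used are assumptions, not recommended values.

```python
def stepped_load(start_users, step_users, max_users, step_duration_s):
    """Return a list of (start_time_s, users) steps that grow the load gradually."""
    if min(start_users, step_users, max_users, step_duration_s) <= 0:
        raise ValueError("all arguments must be positive")
    plan = []
    users, start_time = start_users, 0
    while users <= max_users:
        plan.append((start_time, users))
        users += step_users
        start_time += step_duration_s
    return plan


if __name__ == "__main__":
    # Start with 10 users, add 20 every 5 minutes, stop at 70 (hypothetical values).
    for start_time, users in stepped_load(10, 20, 70, 300):
        print(f"t={start_time:>4}s  users={users}")
```

Reviewing the results after each step makes it easier to see at which user count response times or error counts begin to degrade.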

In Conclusion

As experts, we know that there are several things that must be avoided when running load tests, for instance running tests in a live production environment. Many factors, including real network traffic, affect a live website, and running tests in such a scenario is sure to skew the results. Another thing that must not be done is trying to bring down the tested server. That is not the objective of load testing, which is to check the performance of a website under varying load conditions. The best practices mentioned do not constitute an exhaustive list but do provide a clear indication of how to ensure meticulous load testing. Connect with our load testing champions and leave the worrying to them.

by admin | Nov 3, 2019 | Performance Testing, Fixed, Blog |

Performance Testing checks the ‘performance’ of an application or software under workload conditions, in order to determine stability and responsiveness. The objective of performance testing is also to discover and eliminate any hindrances that may lower performance. As an expert Performance Testing Services Company we conduct this type of testing using contemporary methods and tools to ensure that software meets all the expected requirements such as speed (response time of the application), stability (steady under varying loads), and scalability (load capacity of the software). Performance testing falls within the larger realm of performance engineering.

Why is Performance Testing Required?

Most importantly, performance testing uncovers areas that may need to be improved and enhanced before launch, and ensures that the business is aware of the speed, stability, and scalability of its software or application. As an experienced software testing company, we understand that without this type of testing, software could contain several issues such as slow performance when being used by several users, inconsistent ‘behavior’ across varying operating systems and devices, and other such issues.

If a software product or application were released to the market with issues, not only would it fail to meet its sales goals, the company would also gain a bad reputation and attract customer ire. Performance testing is especially necessary for applications that are critical to the mission of a company, since these applications need to run uninterrupted and without variations or divergent behavior.

Types of Performance Testing

There are different types of performance tests within the realm of software testing. They fall under non-functional testing (which determines the readiness of the software), as opposed to functional testing (which focuses on individual functions of the software). It is important for companies to partner with a professional and experienced service provider to undertake this type of testing, amongst others, for enhanced ROI in business.

Load Testing: As a highly proficient load testing services company, we have helped several businesses measure the performance of their software under increasing workload, meaning a number of users or transactions simultaneously on the system. Load testing is about measuring the response time and stability of the system as the workload increases while staying within the bounds of normal working conditions.

Stress Testing: This is also referred to as ‘fatigue testing’ and measures the performance of software beyond the bounds of normal working conditions. The software is loaded with users or transactions beyond its capacity, and the aim of this testing is to check the stability of the software under such ‘stress’ conditions. It helps to determine the point at which the software would crash and how soon it would be able to ‘recover’ from the crash.

Spike Testing: This is a type of testing within the realm of stress testing and tests the performance of software when there are quick, repeated, and substantial increases in workload. The workloads are beyond the normally expected loads and arrive in shorter timeframes.

Endurance Testing: This is also referred to as soak testing and evaluates the performance of software under normal work conditions but over an extended timeframe. The aim of endurance testing is to detect problems such as memory leaks in the system (a memory leak happens when the system fails to release memory it no longer needs, and this can significantly damage the performance of the system).

Scalability Testing: This testing determines whether or not the software is able to successfully handle increases in workload, and is done by gradually increasing data volume or user load while monitoring the performance of the system. In addition, resources such as the CPU and memory could be changed while the workload remains constant, and the system should still perform well.

Volume Testing: This is also referred to as flood testing and is conducted to determine the efficiency of the software with projected large amounts of data, that is, ‘flooding’ the system with data.
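The difference between load, stress, spike, and endurance tests shows up mostly in how the number of virtual users changes over time. The Python sketch below models that for these four types; scalability and volume testing are not covered because they vary data volume or resources rather than only the user curve. The `target_users` helper, the multipliers (3x for stress, 5x for spikes), and the burst timing are assumptions chosen for illustration.

```python
def target_users(kind, t, normal_users=100, duration_s=3600):
    """Return the target number of virtual users at second t of the test."""
    if not 0 <= t <= duration_s:
        raise ValueError("t must lie within the test duration")
    if kind in ("load", "endurance"):
        # Ramp up over the first 10% of the test, then hold the normal load.
        # An endurance (soak) test uses the same shape with a much longer duration_s.
        ramp_s = duration_s * 0.1
        return round(normal_users * min(1.0, t / ramp_s))
    if kind == "stress":
        # Keep climbing past the normal load, up to three times that level.
        return round(3 * normal_users * t / duration_s)
    if kind == "spike":
        # Hold the normal load, with a 30-second burst at the end of every 10 minutes.
        in_burst = (t % 600) >= 570
        return normal_users * (5 if in_burst else 1)
    raise ValueError(f"unknown test type: {kind}")


if __name__ == "__main__":
    for kind in ("load", "stress", "spike"):
        print(kind, [target_users(kind, t) for t in (0, 180, 580, 1800, 3600)])
```

Plotting these curves before a test run is a quick way to confirm that the planned workload matches the type of test that is intended.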

Common Problems Occurring During Performance Testing

Apart from slow responses and load times, testers usually encounter several other problems while conducting performance testing.

Blockages: Data flow is interrupted or stopped when the system does not have the capacity to handle the workload.

Reduced Scalability: When the software is unable to handle the required number of simultaneous tasks, the results may not be as expected, and there could be an increase in errors and other unexpected behavior.

Issues with Software Configuration: The settings may not be sufficient to handle workload.

Inadequate Hardware: There could be poorly performing CPUs or insufficient physical memory in the system.

Steps within Performance Testing

Experienced testers use what is known as a ‘test bed’ or the testing environment, wherein the software, hardware, and networks are set up to run performance tests. The following are the steps to conduct performance testing within the test bed.

Ascertain the Testing Environment: By ascertaining and putting together the required tools, hardware, software, and network configurations, testers would be better equipped to design the tests and also assess the possible testing challenges early on in the process.

Determine Performance Metrics: Testers must determine performance metrics such as response time, throughput and constraints, while also defining the success criteria for performance testing.

Meticulous Planning and Designing of Tests: Experienced testers would take into account target metrics, test data, and variability of users, which would constitute test scenarios. In addition, testers would need to put together the elements of the test environment and monitor resources.

Put Together Test Design and Execute Tests: The next step would be to develop the tests based on the above, followed by the structured execution of the tests. This would include monitoring and capturing all the generated data.

Lastly, Analysis, Reporting, and Retesting: For successful completion of the testing, it is necessary to analyze and report the findings. After analysis and reporting, a rerun of the tests with some parameters kept the same and some changed is essential.

Performance Testing Metrics Measured

Not every round of performance testing would use all the metrics available to measure speed, scalability, and stability. Some of the metrics for performance testing are:

Response Time – The total time spent to send and receive a response from the system

Wait Time – Developers are able to determine the time elapsed before they receive the first byte after sending a request – this is also known as average latency.

Average Load Time – From the user perspective one of the top indicators of quality is the average time the system takes to deliver their request.

Peak Response Time – This measures the longest time taken to complete a request. A peak response time that is well above the average could indicate a variance that is likely to pose a problem later.

Error Rate – When compared to all requests, this would be a percentage of requests that end in errors, and usually occurs when the load goes beyond the capacity of the system.

Concurrent Users – Also referred to as load size or the number of active users at any point of time.

Requests per Second and Number of Passed / Failed Transactions – The number of requests handled and the measurement of the total successful and unsuccessful requests.

Throughput, CPU and Memory Utilization – Throughput shows the bandwidth utilized during testing, CPU utilization is the time that the CPU needs to process requests, and memory utilization is the amount of memory required to process each request.
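Several of the metrics listed above can be derived directly from the raw request samples. The following Python sketch computes a few of them; the input format (a list of `(elapsed_ms, success)` tuples) and the `summarize` helper are assumptions for illustration and do not correspond to the output of a specific testing tool.

```python
from statistics import mean


def summarize(samples, duration_s):
    """Summarize a list of (elapsed_ms, success) samples collected over duration_s seconds."""
    if not samples or duration_s <= 0:
        raise ValueError("need at least one sample and a positive duration")
    times = [elapsed for elapsed, _ in samples]
    failed = sum(1 for _, ok in samples if not ok)
    return {
        "avg_response_ms": mean(times),
        "peak_response_ms": max(times),
        "error_rate_pct": 100.0 * failed / len(samples),
        "requests_per_second": len(samples) / duration_s,
        "passed": len(samples) - failed,
        "failed": failed,
    }


if __name__ == "__main__":
    samples = [(100, True), (200, True), (300, False), (400, True)]
    for name, value in summarize(samples, duration_s=2).items():
        print(f"{name}: {value}")
```

Comparing `peak_response_ms` with `avg_response_ms` gives a first indication of the variance that the peak response time metric is meant to expose.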

Right time to Conduct Performance Testing

There are two phases in the lifecycle of an application, the development and deployment phases, and this is true for both mobile and web applications. Performance testing during development focuses on components, and the sooner these components are tested, the earlier anomalies would be detected, lowering the time and cost required to rectify the errors. As the application is developed, performance tests must become more comprehensive, and some should be carried out in the deployment phase.

In Conclusion

Performance Testing is an absolute necessity in the realm of Performance Engineering – and must be conducted prior to the release of the software. By ensuring that the software/application is working well, a business would be able to gain customer satisfaction and long-term engagement. Costs of poor performance of an application can be extremely high and would include damaged reputation, customer ire, bad publicity, and huge losses in sales. To protect your company from flawed products, connect with our experts and leave the worrying to us.