by Rajesh K | Jun 1, 2025 | Artificial Intelligence, Blog, Latest Post |

Automated UI testing has long been a critical part of software development, helping ensure reliability and consistency across web applications. However, traditional automation tools like Selenium, Playwright, and Cypress often require extensive scripting knowledge, complex framework setups, and time-consuming maintenance. Enter Operator GPT, an intelligent AI agent that radically simplifies UI testing by allowing testers to write tests in plain English. Built on top of large language models like GPT-4, it can understand natural language instructions, perform UI interactions, validate outcomes, and even adapt tests when the UI changes. In this blog, we’ll explore how Operator GPT works, how it compares to traditional testing methods, when to use it, and how it integrates with modern QA stacks. We’ll also explore platforms adopting this technology and provide real-world examples to showcase its power.

What is Operator GPT?

Operator GPT is a conversational AI testing agent that performs UI automation tasks by interpreting natural language instructions. Rather than writing scripts in JavaScript, Python, or Java, testers communicate with Operator GPT using plain language. The system parses the instruction, identifies relevant UI elements, performs interactions, and returns test results with screenshots and logs.

Key Capabilities of Operator GPT:

- Natural language-driven testing

- Self-healing test flows using AI vision and DOM inference

- No-code or low-code test creation

- Works across browsers and devices

- Integrates with CI/CD pipelines and tools like Slack, TestRail, and JIRA

Traditional UI Testing vs Operator GPT

| S. No | Feature | Traditional Automation Tools (Selenium, Playwright) | Operator GPT |
| --- | --- | --- | --- |
| 1 | Language | Code (Java, JS, Python) | Natural Language |
| 2 | Setup | Heavy framework, locator setup | Minimal, cloud-based |
| 3 | Maintenance | High (selectors break easily) | Self-healing |
| 4 | Skill Requirement | High coding knowledge | Low, great for manual testers |
| 5 | Test Creation Time | Slow | Fast & AI-assisted |
| 6 | Visual Recognition | Limited | Built-in AI/vision mapping |

How Operator GPT Works for UI Testing

- Input Instructions: You give Operator GPT a prompt like:

“Test the login functionality by entering valid credentials and verifying the dashboard.”

- Web/App Interaction: It opens a browser, navigates to the target app, locates elements, interacts (like typing or clicking), and performs validation.

- Result Logging: Operator GPT provides logs, screenshots, and test statuses.

- Feedback Loop: You can refine instructions conversationally:

“Now check what happens if password is left blank.”
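The first stage of that flow, turning a plain-English instruction into something executable, can be sketched as follows. This is a minimal illustration under stated assumptions, not how Operator GPT or any specific product works: the `Step` class, the `PATTERNS` table, and `parse_step` are hypothetical names, and a real agent would rely on a language model rather than regular expressions.

```python
import re
from dataclasses import dataclass


@dataclass
class Step:
    action: str       # "navigate", "type", "click", or "verify"
    target: str = ""  # URL, field name, or element name
    value: str = ""   # text to type, or text expected on the page


# Hypothetical patterns (an assumption for illustration only).
# Both straight and curly quotes are accepted around quoted values.
PATTERNS = [
    (re.compile(r'^go to (?P<target>\S+?)\.?$', re.I), "navigate"),
    (re.compile(r'^enter (?P<target>\w+) as ["“](?P<value>[^"”]+)["”]\.?$', re.I), "type"),
    (re.compile(r'^click the (?P<target>.+?) button\.?$', re.I), "click"),
    (re.compile(r'^verify .*text ["“](?P<value>[^"”]+)["”]\.?$', re.I), "verify"),
]


def parse_step(line: str) -> Step:
    """Map one plain-English instruction to a structured Step."""
    text = line.strip()
    for pattern, action in PATTERNS:
        match = pattern.match(text)
        if match:
            groups = match.groupdict()
            return Step(action, groups.get("target", ""), groups.get("value", ""))
    raise ValueError(f"Unrecognized instruction: {line!r}")


PROMPT = [
    "Go to https://practicetestautomation.com/practice-test-login/.",
    "Enter username as “student”.",
    "Click the login button.",
]
STEPS = [parse_step(line) for line in PROMPT]
```

Each resulting `Step` could then be handed to whatever browser driver the team already uses; that execution layer is deliberately left out of this sketch.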

Example: Login Flow Test with Operator GPT

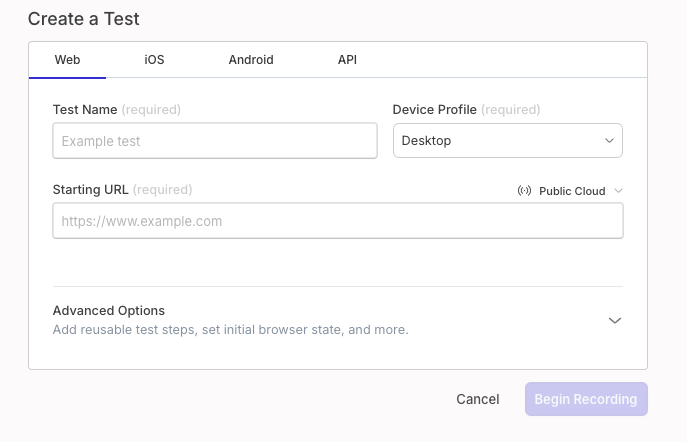

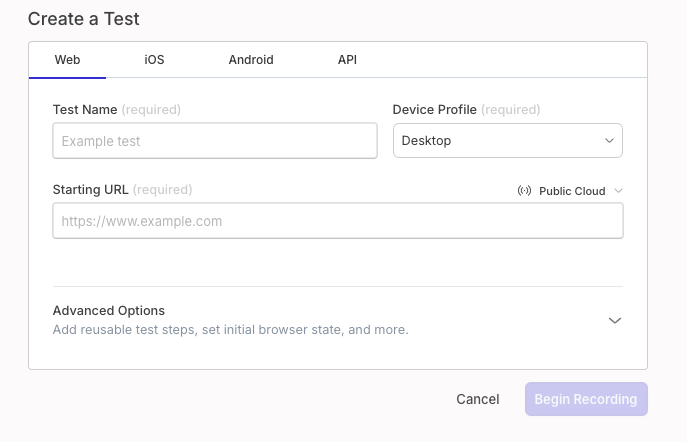

Let’s walk through a real-world example using Reflect.run or a similar GPT-powered testing tool.

Test Scenario:

Goal: Test the login functionality of a demo site

URL: https://practicetestautomation.com/practice-test-login/

Credentials:

- Username: student

- Password: Password123

Natural Language Test Prompt:

- Go to https://practicetestautomation.com/practice-test-login/.

- Enter username as “student”.

- Enter password as “Password123”.

- Click the login button.

- Verify that the page navigates to a welcome screen with the text “Logged In Successfully”.

{
  "status": "PASS",
  "stepResults": [
    "Navigated to login page",
    "Entered username: student",
    "Entered password: *****",
    "Clicked login",
    "Found text: Logged In Successfully"
  ],
  "screenshot": "screenshot-logged-in.png"
}

This test was created and executed in under a minute, without writing a single line of code.
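If a tool returns a result shaped like the JSON above, it can be checked programmatically. The sketch below assumes exactly that shape (`status`, `stepResults`, `screenshot`); real platforms may use different field names, and `summarize` and `passed` are hypothetical helpers.

```python
import json

# Sample payload copied from the example result above.
RESULT_JSON = """
{
  "status": "PASS",
  "stepResults": [
    "Navigated to login page",
    "Entered username: student",
    "Entered password: *****",
    "Clicked login",
    "Found text: Logged In Successfully"
  ],
  "screenshot": "screenshot-logged-in.png"
}
"""


def passed(result_text: str) -> bool:
    """True when the payload reports an overall PASS."""
    return json.loads(result_text).get("status") == "PASS"


def summarize(result_text: str) -> str:
    """Return a one-line summary of a result payload."""
    result = json.loads(result_text)
    steps = result.get("stepResults", [])
    return f'{result["status"]}: {len(steps)} steps, screenshot={result.get("screenshot")}'


print(summarize(RESULT_JSON))
```

A check like `passed(...)` is the kind of hook a pipeline could use to fail a build when a natural-language test does not pass.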

Key Benefits of Operator GPT

The real strength of Operator GPT lies in its ability to simplify, accelerate, and scale UI testing.

1. Reduced Time to Test

Natural language eliminates the need to write boilerplate code or configure complex test runners.

2. Democratized Automation

Manual testers, product managers, and designers can all participate in test creation.

3. Self-Healing Capability

Unlike static locators in Selenium, Operator GPT uses vision AI and adaptive learning to handle UI changes.

4. Enhanced Feedback Loops

Faster test execution means earlier bug detection in the development cycle, supporting true continuous testing.
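To make the self-healing idea in point 3 more concrete, here is a deliberately simplified sketch. It swaps in a much simpler technique than the vision AI described above: an ordered list of fallback locators. The `Page` type is a plain dictionary standing in for a real DOM, and all names and locator strings are assumptions for illustration.

```python
from typing import Dict, Sequence, Tuple

# Stand-in for a real page: maps locator strings to elements.
Page = Dict[str, str]


def find_with_fallback(page: Page, locators: Sequence[str]) -> Tuple[str, str]:
    """Try locators in priority order; return (element, locator_used)."""
    for locator in locators:
        element = page.get(locator)
        if element is not None:
            return element, locator
    raise LookupError(f"No locator matched: {list(locators)}")


# The button's id changes between releases, but its visible text does not.
old_page = {"#login-btn": "<button>", "text=Log in": "<button>"}
new_page = {"#submit": "<button>", "text=Log in": "<button>"}

LOGIN_LOCATORS = ["#login-btn", "text=Log in"]

print(find_with_fallback(old_page, LOGIN_LOCATORS))  # matches by id
print(find_with_fallback(new_page, LOGIN_LOCATORS))  # falls back to visible text
```

A static script with only the first locator would break after the rename; the fallback keeps the test running and records which locator actually matched, so the change can be reviewed.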

Popular Platforms Supporting GPT-Based UI Testing

- Reflect.run – Offers no-code, natural language-based UI testing in the browser

- Testim by Tricentis – Uses AI Copilot to accelerate test creation

- AgentHub – Enables test workflows powered by GPT agents

- Cogniflow – Combines AI with automation for natural instruction execution

- QA-GPT (Open Source) – A developer-friendly project using LLMs for test generation

These tools are ideal for fast-paced teams that need to test frequently without a steep technical barrier.

When to Use Operator GPT (And When Not To)

Ideal Use Cases:

- Smoke and regression tests

- Agile sprints with rapid UI changes

- Early prototyping environments

- Teams with limited engineering resources

Limitations:

- Not built for load or performance testing

- May struggle with advanced DOM scenarios like Shadow DOM

- Best paired with visual consistency for accurate element detection

Integrating Operator GPT into Your Workflow

Operator GPT is not a standalone tool; it’s designed to integrate seamlessly into your ecosystem.

You can:

- Trigger tests via CLI or REST APIs in CI/CD pipelines

- Export results to TestRail, Xray, or JIRA

- Monitor results directly in Slack with chatbot integrations

- Use version control for prompt-driven test cases

This makes it easy to blend natural-language testing into agile and DevOps workflows without disruption.
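One way to feed results into a pipeline is to convert them into a report format that CI tools commonly read. The sketch below builds a JUnit-style XML document with Python's standard library; the choice of JUnit-style XML, the `to_junit_xml` helper, and the test names are assumptions, not something the platforms above are confirmed to produce.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def to_junit_xml(suite_name: str, results: Dict[str, str]) -> str:
    """Build a JUnit-style XML report from {test_name: "PASS" | "FAIL"}."""
    failures = sum(1 for status in results.values() if status != "PASS")
    suite = ET.Element(
        "testsuite",
        name=suite_name,
        tests=str(len(results)),
        failures=str(failures),
    )
    for name, status in results.items():
        case = ET.SubElement(suite, "testcase", name=name)
        if status != "PASS":
            # A <failure> child marks the test case as failed.
            ET.SubElement(case, "failure", message=f"status was {status}")
    return ET.tostring(suite, encoding="unicode")


# Hypothetical results from two prompt-driven tests.
REPORT = to_junit_xml("login-smoke", {"valid login": "PASS", "blank password": "FAIL"})
print(REPORT)
```

Because the prompts themselves are plain text, they can live in version control next to a script like this, so a change to a test and a change to its reporting are reviewed together.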

Limitations to Consider

- It relies on UI stability; drastic layout changes can reduce accuracy.

- Complex dynamic behaviors (like real-time graphs) may require manual checks.

- Self-healing doesn’t always substitute for code-based assertions.

That said, combining Operator GPT with traditional test suites offers the best of both worlds.

The Future of Testing:

Operator GPT is not just another automation tool; it represents a shift in how we think about testing. Instead of focusing on how something is tested (scripts, locators, frameworks), Operator GPT focuses on what needs to be validated from a user or business perspective. As GPT models grow more contextual, they’ll understand product requirements, user stories, and even past defect patterns, making intent-based automation not just viable but preferable.

Frequently Asked Questions

- What is Operator GPT?

  Operator GPT is a GPT-powered AI agent for automating UI testing using natural language instead of code.

- Who can use Operator GPT?

  It’s designed for QA engineers, product managers, designers, and anyone else involved in software testing; no coding skills are required.

- Does it replace Selenium or Playwright?

  Not entirely. Operator GPT complements these tools by enabling faster prototyping and natural language-driven testing for common flows.

- Is it suitable for enterprise testing?

  Yes. It integrates with CI/CD tools, reporting dashboards, and test management platforms, making it enterprise-ready.

- How do I get started?

  Choose a platform (e.g., Reflect.run), connect your app, type your first test, and watch it run live.

by Rajesh K | May 30, 2025 | Artificial Intelligence, Blog, Latest Post |

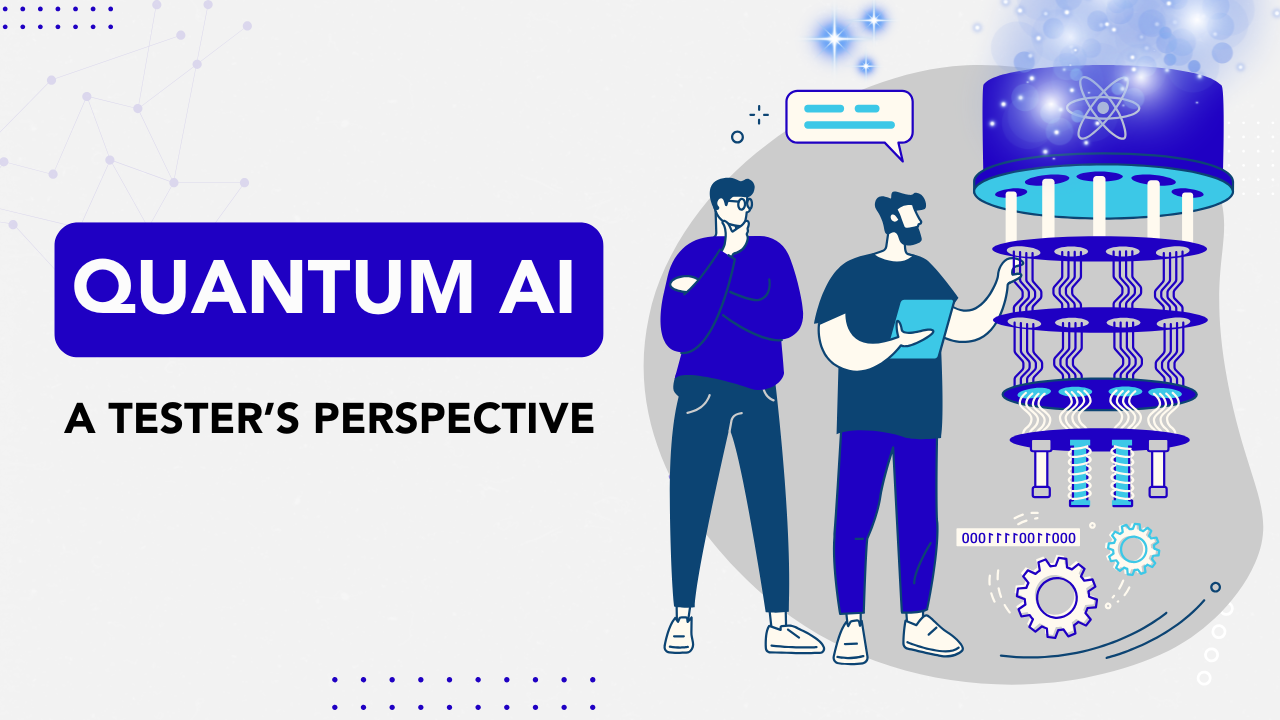

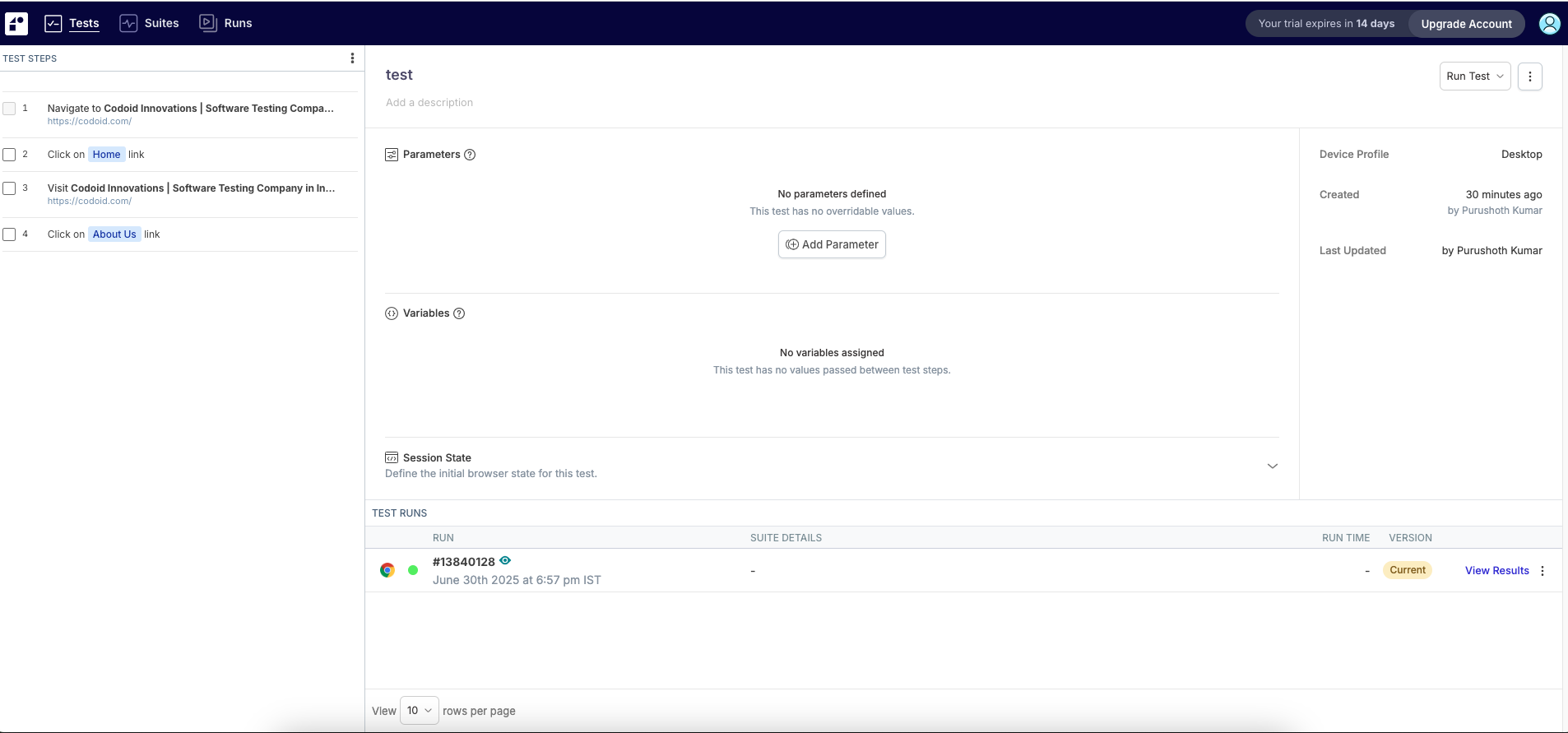

AI agents are everywhere, from Siri answering your voice commands to self-driving cars making split-second decisions on the road. These autonomous programs are transforming the way businesses operate by handling tasks, improving efficiency, and enhancing decision-making across industries. But what exactly is an AI agent? In simple terms, an AI agent is an intelligent system that can process data, learn from interactions, and take action without constant human supervision. Unlike traditional software, AI agents often work 24/7 and can tackle many processes simultaneously, delivering instant responses and never needing a break. This means companies can provide round-the-clock support and analyze vast amounts of data faster than ever before. In this article, we’ll explore AI agent examples across various domains to see how these systems are transforming technology and everyday life. We’ll also compare different types of AI agents (reactive, deliberative, hybrid, and learning-based) and discuss why AI agents are so important. By the end, you’ll understand not only what AI agents are, but also why they’re a game-changer for industries and individuals alike.

Types of AI Agents: Reactive, Deliberative, Hybrid, and Learning-Based

Not all AI agents work in the same way. Depending on their design and capabilities, AI agents generally fall into a few categories. Here’s a quick comparison of the main types of AI agents and how they function:

- Reactive Agents: These are the simplest AI agents. They react to the current situation based on predefined rules or stimuli, without recalling any past events. A reactive agent does not learn or draw on experience; it just responds with pre-programmed actions to specific inputs. This makes them fast and useful for straightforward tasks or predictable environments. Example: a basic chatbot that answers FAQs with fixed responses, or a motion-sensor light that switches on when movement is detected and off shortly after. Both follow simple if-then rules without learning over time.

- Deliberative Agents: Deliberative (or goal-based) agents are more advanced. They maintain an internal model of the world and can reason and plan to achieve their goals. In other words, a deliberative agent considers various possible actions and their outcomes before deciding what to do. These agents can handle more complex, adaptive tasks than reactive agents. Example: a route finding GPS AI that plans the best path by evaluating traffic data, or a robot that plans a sequence of moves to assemble a product. Such an agent thinks ahead rather than just reacting, using its knowledge to make decisions.

- Hybrid Agents: As the name suggests, hybrid agents combine reactive and deliberative approaches. This design gives them the best of both worlds: they can react quickly to immediate events when needed, while also planning and reasoning for long-term objectives. Hybrid agents are often layered systems: a low-level reactive layer handles fast, simple responses, and a higher deliberative layer handles strategic planning. Example: an autonomous car is a hybrid agent. It plans a route to your destination and also reacts in real time to sudden obstacles or changes (like a pedestrian stepping into the road). By blending reflexive reactions with strategic planning, hybrid AI agents operate effectively in complex, changing environments.

- Learning Agents: Learning agents are AI agents that improve themselves over time. They have components that allow them to learn from feedback and experience, for example, a learning element to update their knowledge or strategies, and a critic that evaluates their actions to inform future decisions. Because they adapt, learning agents are suited for dynamic, ever-changing tasks. They start with some initial behavior and get better as they go. Example: recommendation systems on e-commerce or streaming platforms are learning agents: they analyze your behavior and learn your preferences to suggest relevant products or movies (as seen with platforms like eBay or Netflix). Similarly, some modern chatbots use machine learning to refine their responses after interacting with users. Over time, a learning agent becomes more accurate and effective as it gains more experience.
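The four agent types above can be contrasted in a few lines of code. This is a toy sketch under stated assumptions: the rule table, the `ROADS` graph, and every function and class name are hypothetical, and real agents are far more elaborate.

```python
from collections import deque

# Reactive: a fixed stimulus -> response table, with no memory or planning.
REFLEX_RULES = {"motion": "light_on", "no_motion": "light_off"}


def reactive_agent(percept: str) -> str:
    return REFLEX_RULES.get(percept, "do_nothing")


# Deliberative: search a world model (a small road graph) before acting.
ROADS = {"home": ["a", "b"], "a": ["office"], "b": ["c"], "c": ["office"], "office": []}


def plan_route(start: str, goal: str, roads: dict) -> list:
    """Breadth-first search; returns a path with the fewest hops, or []."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in roads.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return []


# Hybrid: follow the plan, but let a reflex override it when an obstacle appears.
def hybrid_agent(percept: str, plan: list) -> str:
    if percept == "obstacle":
        return "brake"
    return f"drive_to:{plan[1]}" if len(plan) > 1 else "arrived"


# Learning: accumulate feedback per item and recommend the best one so far.
class LearningAgent:
    def __init__(self):
        self.scores = {}

    def feedback(self, item: str, reward: float) -> None:
        self.scores[item] = self.scores.get(item, 0.0) + reward

    def recommend(self) -> str:
        if not self.scores:
            return ""
        return max(self.scores, key=self.scores.get)


route = plan_route("home", "office", ROADS)
print(reactive_agent("motion"), route, hybrid_agent("obstacle", route))
```

The reactive agent never looks beyond the current percept, the deliberative one searches before acting, the hybrid one does both, and the learning agent changes its answer as feedback accumulates.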

Understanding these agent types helps explain how different AI systems are built. Many real-world AI agents are hybrid or learning-based, combining multiple approaches. Next, let’s look at how these agents are actually used in real life, from helping customers to guarding against cyber threats.

AI Agents in Customer Service

One of the most visible applications of AI agents is in customer service. Companies today deploy AI chatbots and virtual agents on websites, messaging apps, and phone lines to assist customers at any hour. These AI agents can greet users, answer frequently asked questions, help track orders, and even resolve basic issues, all without needing a human operator on the line. By automating routine inquiries, AI agents ensure customers get instant, round-the-clock support, while human support staff are freed up to handle more complex problems. This not only improves response times but also enhances the overall customer experience.

Examples of AI agents in customer support include:

- ChatGPT-Powered Support Bots: Many businesses now use conversational AI models like ChatGPT to power their customer service chatbots. ChatGPT-based agents can understand natural language questions and respond with helpful answers in a very human-like way. For example, companies have built ChatGPT-based customer service bots to handle common questions without human intervention, significantly improving response times. These bots can field inquiries such as “Where is my order?” or “How do I reset my password?” and provide immediate, accurate answers. By leveraging ChatGPT’s advanced language understanding, support bots can handle nuanced customer requests and even escalate to a human agent if they detect a question is too complex. This results in faster service and happier customers.

- Drift’s Conversational Chatbots: Drift is a platform known for its AI-driven chatbots that specialize in marketing and sales conversations. Drift’s AI chat agents engage website visitors in real time, greeting them, answering questions about products, and even helping schedule sales calls. Unlike static rule-based bots, Drift’s AI agents carry dynamic, personalized conversations, effectively transforming a website chatbot into an intelligent digital sales assistant. For instance, if a potential customer visits a software company’s pricing page, a Drift bot can automatically pop up to ask if they need help, provide information, or book a meeting with sales. These AI agents work 24/7, qualifying leads and guiding customers through the sales funnel, which ultimately drives business growth. They act like tireless team members who never sleep, ensuring every website visitor gets attention.

By deploying AI agents in customer service, businesses can provide fast and consistent support. Customer service AI agents don’t get tired or frustrated by repetitive questions – they answer the hundredth query with the same patience as the first. This leads to quicker resolutions and improved customer satisfaction. At the same time, human support teams benefit because they can focus on high-priority or complex issues while routine FAQs are handled automatically. In short, AI agents are revolutionizing customer service by making it more responsive, scalable, and cost-effective.

AI Agents in Healthcare

Beyond answering customer queries, AI agents are making a profound impact in healthcare. In hospitals and clinics, AI agents serve as intelligent assistants to doctors, nurses, and patients. They can analyze large volumes of medical data, help in diagnosing conditions, suggest treatments, and even communicate with patients for basic health inquiries. By doing so, AI agents in healthcare help medical professionals make more informed decisions faster and improve patient outcomes. They also automate administrative tasks like scheduling or record-keeping, allowing healthcare staff to spend more time on direct patient care.

Let’s look at two powerful AI agent examples in healthcare:

- IBM Watson for Healthcare: IBM’s Watson is a famous AI system that has been applied in medical settings to support decision-making. An AI agent like IBM Watson can analyze medical records and vast research literature to help doctors make informed diagnoses and treatment plans. For example, Watson can scan through millions of oncology research papers and a patient’s health history to suggest potential cancer therapies that a physician might want to consider. It essentially acts as an expert assistant with an encyclopedic memory, something no single human doctor can match. By cross-referencing symptoms, test results, and medical knowledge, this AI agent provides recommendations (for instance, which diagnostic tests to run or which treatments have worked for similar cases) that aid doctors in their clinical decision-making. The result is a more data-driven healthcare approach, where practitioners have AI-curated insights at their fingertips.

- Google’s Med-PaLM: One of the latest advances in AI for healthcare is Med-PaLM, a medical domain large language model developed by Google. Med-PaLM is essentially “a doctor’s version of ChatGPT,” capable of analyzing symptoms, medical imaging like X-rays, and other data to provide diagnostic suggestions and answer health-related questions. In trials, Med-PaLM has demonstrated impressive accuracy on medical exam questions and even the ability to explain its reasoning. Imagine a patient could describe their symptoms to an AI agent, and the system could respond with possible causes or advise whether they should seek urgent care – that’s the promise of models like Med-PaLM. Hospitals are exploring such AI agents to assist clinicians: for example, by summarizing a patient’s medical history and flagging relevant information, or by providing a second opinion on a difficult case. While AI will not replace doctors, agents like Med-PaLM are poised to become trusted co-pilots in healthcare, handling information overload and providing data-driven insights so that care can be more accurate and personalized.

AI agents in healthcare illustrate how autonomy and intelligence can be life-saving. They reduce the time needed to interpret tests and research, they help catch errors or oversights by always staying up-to-date on the latest medical findings, and they can extend healthcare access (think of a chatbot that gives preliminary medical advice to someone in a remote area). As these agents become more advanced, we can expect earlier disease detection, more efficient patient management, and generally a higher quality of care driven by data. In short, doctors plus AI agents make a powerful team in healing and saving lives.

AI Agents in Cybersecurity

In the digital realm, cybersecurity has become a critical area where AI agents shine. Modern cyber threats, from hacking attempts to malware outbreaks, move at incredible speed and volume, far beyond what human teams can handle alone. AI agents act as tireless sentinels in cybersecurity, continuously monitoring networks, servers, and devices for signs of trouble. They analyze system logs and traffic patterns in real time, detect anomalies or suspicious behavior, and can even take action to neutralize threats, all autonomously. By leveraging AI agents, organizations can respond to security incidents in seconds and often prevent breaches automatically, before security staff are even aware of an issue.

Key examples of AI agents in cybersecurity include:

- Darktrace: Darktrace is a leader in autonomous cyber defense and a prime example of an AI agent at work in security. Darktrace’s AI agents continuously learn what “normal” behavior looks like inside a company’s network and then autonomously identify and respond to previously unseen cyber-attacks in real time. The system is often described as being like an “immune system” for the enterprise: it uses advanced machine learning algorithms modeled on the human immune response to detect intruders and unusual activity. For instance, if a user’s account suddenly starts downloading large amounts of data at 3 AM, the Darktrace agent will flag it as abnormal and can automatically lock out the account or isolate that part of the network. All of this can happen within moments, without waiting for human intervention. By hunting down anomalies and deciding the best course of action on the fly, Darktrace’s agent frees up human security teams to focus on high-level strategy and critical investigations rather than endless monitoring. It’s easy to see why this approach has been referred to as the “cybersecurity of the future”: it’s a shift from reactive defense to proactive, autonomous defense.

- Autonomous Threat Monitoring Tools: Darktrace is not alone; many cybersecurity platforms now include autonomous monitoring AI agents. These tools use machine learning to sift through vast streams of security data (logins, network traffic, user behavior, etc.) and can spot the needle in the haystack – that one malicious pattern hidden among millions of normal events. For example, an AI security agent might notice that a normally low-traffic server just started communicating with an unusual external IP address, or that an employee’s account is performing actions they never did before. The AI will raise an alert or even execute a predefined response (like blocking a suspicious IP or quarantining a workstation) in real time. Such agents essentially act as digital guards that never sleep. They drastically cut down the time it takes to detect intrusions (often from days or weeks, down to minutes or seconds) and can prevent minor incidents from snowballing into major breaches. By automating threat detection and first response, AI agents in cybersecurity help organizations stay one step ahead of hackers and reduce the workload on human analysts who face an overwhelming number of alerts each day.
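The "learn what normal looks like, then flag deviations" idea running through both examples can be illustrated with a simple statistical baseline. This is a minimal sketch using a z-score check, not Darktrace's or any vendor's algorithm; the `BaselineMonitor` class, the threshold of 3.0, and the sample traffic figures are assumptions.

```python
from statistics import mean, stdev


class BaselineMonitor:
    """Flags values far from a learned baseline (simple z-score check)."""

    def __init__(self, threshold: float = 3.0, min_samples: int = 5):
        self.history = []
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to past observations."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu, sigma = mean(self.history), stdev(self.history)
            # With zero spread there is no meaningful z-score, so nothing is flagged.
            anomalous = sigma > 0 and abs(value - mu) / sigma > self.threshold
        if not anomalous:
            self.history.append(value)  # only normal values update the baseline
        return anomalous


monitor = BaselineMonitor()
# Hypothetical nightly download volumes in MB for one account.
normal_nights = [10, 12, 11, 9, 10, 11]
flags = [monitor.observe(mb) for mb in normal_nights]
spike_flag = monitor.observe(500)  # a sudden large download
print(flags, spike_flag)
```

In a real deployment, a flagged value would trigger one of the predefined responses mentioned above, such as raising an alert or isolating the account.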

In summary, AI agents are transforming cybersecurity by making it more proactive and adaptive. They handle the heavy lifting of monitoring and can execute instant countermeasures to contain threats. This means stronger protection for data and systems, with fewer gaps for attackers to exploit. As cyber attacks continue to evolve, having AI agents on the digital front lines is becoming essential for any robust security strategy.

AI Agents as Personal Assistants

AI agents aren’t just found in business and industry – many of us interact with AI agents in our personal lives every day. The most familiar examples are virtual personal assistants on our phones and smart devices. Whether you say “Hey Siri” on an iPhone or “OK Google” on an Android phone, you’re engaging with an AI agent designed to make your life easier. These assistants use natural language processing to understand your voice commands and queries, and they connect with various services to fulfill your requests. In essence, they serve as personal AI agents that can manage a variety of daily tasks.

Examples of personal AI agents include:

- Smartphone Virtual Assistants (Siri & Google Assistant): Apple’s Siri and Google Assistant are prime AI agents that help users with everyday tasks through voice interaction. With a simple spoken command, these agents can do things like send messages, set reminders, check the weather, play music, manage your calendar, or control smart home devices. For instance, you can ask Google Assistant “What’s on my schedule today?” or tell Siri “Remind me to call Mom at 7 PM,” and the AI will understand and execute the task. These assistants are context-aware to a degree as well: if you ask a follow-up question like “What about tomorrow?”, they remember the context (your schedule) from the previous query. Over time, virtual assistants learn your preferences and speech patterns, providing more personalized responses. They might learn frequently used contacts or apps, for example, so when you say “text Dad,” the AI knows who you mean. They can even anticipate needs (for example, alerting you “Time to leave for the airport” based on traffic and your flight info). In short, Siri, Google Assistant, and similar AI agents serve as handy digital butlers, adapting to their users’ behavior to offer useful, personalized help.

- Home AI Devices (Amazon Alexa and Others): Devices like Amazon’s Alexa, which powers the Echo smart speakers, are also AI agents functioning as personal assistants. You can ask Alexa to order groceries, turn off the lights, or answer trivia questions. These home assistant AI agents integrate with a wide range of apps and smart home gadgets, essentially becoming the voice-activated hub of your household. They illustrate another facet of personal AI agents: ubiquitous availability. Without lifting a finger, you can get information or perform actions just by speaking, which is especially convenient when multitasking.

Personal assistant AI agents have quickly moved from novelty to necessity for many users. They demonstrate how AI can make technology more natural and convenient to use – you interact with them just by talking, as you would with a human assistant. As these agents get smarter (through improvements in AI and access to more data), they are becoming more proactive. For example, an assistant might suggest a departure time for a meeting based on traffic, without being asked. They essentially extend our memory and capabilities, helping us handle the small details of daily life so we can focus on bigger things. In the future, personal AI agents are likely to become even more integral, coordinating between our devices and services to act on our behalf in a truly seamless way.

AI Agents for Workflow Automation

Another powerful application of AI agents is in workflow automation – that is, using AI to automate complex sequences of tasks, especially in business or development environments. Instead of performing a rigid set of instructions like traditional software, an AI agent can intelligently decide what steps to take and in what order to achieve a goal, often by interacting with multiple systems or tools. This is a big leap in automation: workflows that normally require human judgment or glue code can be handled by an AI agent figuring things out on the fly. Tech enthusiasts and developers are leveraging such agents to offload tedious multi-step processes onto AI and streamline operations.

A notable example in this space is LangChain, an open-source framework that developers use to create advanced AI agents and workflows.

- LangChain AI Agents: LangChain provides the building blocks for connecting large language models (like GPT-4) with various tools, APIs, and data sources in a sequence. In other words, it’s a framework that helps automate AI workflows by connecting different components seamlessly. With LangChain, you can build an AI agent that not only converses in natural language but also performs actions like database queries, web searches, or calling other APIs as needed to fulfill a task. For example, imagine a workflow for customer support: a LangChain-based AI agent could receive a support question, automatically look up the answer in a knowledge base, summarize it, and then draft a response to the customer, all without human help. Or consider a data analysis scenario: an AI agent could fetch data from multiple sources, run calculations, and generate a report or visualization. LangChain makes such scenarios possible by giving the agent access to “tools” (functions it can call) and guiding its decision-making on when to use which tool. Essentially, the agent can reason, “I need information from a web search, then I need to use a calculator tool, then I need to format an email,” and it will execute those steps in order. This ability to orchestrate different tasks is what sets AI workflow automation apart from simpler, single-task bots.
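The tool-calling pattern described above can be shown without any framework. The sketch below is plain Python and does not use LangChain's API; the two tools, the `TOOLS` registry, and `choose_plan` (a stand-in for the language model's decision about which tools to call) are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical tools: plain functions the agent is allowed to call.
def search_knowledge_base(query: str) -> str:
    articles = {"reset password": "Open Settings > Security and choose Reset Password."}
    lowered = query.lower()
    for key, body in articles.items():
        # Match when every keyword of the article title appears in the question.
        if all(word in lowered for word in key.split()):
            return body
    return "No article found."


def draft_reply(answer: str) -> str:
    return f"Hello, thanks for reaching out. {answer}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "search_knowledge_base": search_knowledge_base,
    "draft_reply": draft_reply,
}


def choose_plan(question: str) -> List[str]:
    """Stand-in for the model's reasoning: here the plan is simply fixed."""
    return ["search_knowledge_base", "draft_reply"]


def run_agent(question: str) -> Tuple[str, List[str]]:
    """Run each planned tool on the previous tool's output; return result and trace."""
    data, trace = question, []
    for tool_name in choose_plan(question):
        data = TOOLS[tool_name](data)
        trace.append(tool_name)
    return data, trace


reply, trace = run_agent("How do I reset my password?")
print(reply)
print(trace)
```

In a framework-based agent, the fixed plan would be replaced by a model deciding at each step which tool to call next, but the registry-and-dispatch structure stays much the same.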

Using frameworks like LangChain, developers have created AI agents for a variety of workflow automation use cases. Some real-world examples include automated research assistants that gather and summarize information, sales and marketing agents that update CRM entries and personalize outreach, and IT assistants that can detect an issue and open a ticket or even attempt a fix. AI workflow agents can handle tasks like data extraction, transformation, and report generation all in one go, acting as an intelligent glue between systems. The benefit is a huge boost in productivity: repetitive multi-step processes that used to take hours of human effort can be done in minutes by an AI agent. Moreover, because the agent can adapt to different inputs, the automation is more flexible than a hard-coded script. Businesses embracing these AI-driven workflows are finding that they can scale operations and respond faster to events, since their AI agents are tirelessly working in the background on complex tasks.

It’s worth noting that workflow automation agents often incorporate one or more of the agent types discussed earlier. For instance, many are learning agents that improve as they process more tasks, and they may have hybrid characteristics (some decisions are reactive, others are deliberative planning). By chaining together tasks and decisions, these AI agents truly act like autonomous coworkers, handling the busywork and letting people focus on higher-level planning and creativity.

Conclusion

From the examples above, it’s clear that AI agents are transforming technology and industry in profound ways. Each AI agent example we explored – whether it’s a customer service chatbot, a medical diagnosis assistant, a network security monitor, a virtual assistant on your phone, or an automation agent in a business workflow – showcases the benefits of autonomy and intelligence in software. AI agents can operate continuously and make decisions at lightning speed, handling tasks that range from the mundane to the highly complex. They bring a level of efficiency and scalability that traditional methods simply cannot match, like providing instant 24/7 support or analyzing data far beyond human capacity.

The transformative impact of AI agents comes down to augmented capability. Businesses see higher productivity and lower costs as AI agents take over repetitive work and optimize processes. Customers enjoy better experiences, getting faster and more personalized service. Professionals in fields like healthcare and cybersecurity gain new decision-support tools that improve accuracy and outcomes, potentially saving lives or preventing disasters. And in our personal lives, AI agents simplify daily chores and information access, effectively giving us more free time and convenience.

Crucially, AI agents also unlock possibilities for innovation. When routine tasks are automated, human creativity can be redirected to new challenges and ideas. Entirely new services and products become feasible with AI agents at the helm (for example, consider how self-driving car agents could revolutionize transportation, or how smart home agents could manage energy usage to save costs and the environment). In essence, AI agents act as a force multiplier for human effort across the board.

In summary, AI agents are ushering in a new era of technology. They learn, adapt, and work alongside us as capable partners. The examples discussed in this post underscore that this isn’t science fiction or the distant future; it’s happening now. Companies and individuals who embrace AI agents stand to gain efficiency, insight, and a competitive edge. As AI continues to advance, we can expect even more sophisticated agents that further transform how we live and work, truly making technology more autonomous, intelligent, and empowering for everyone. The age of AI agents has arrived, and it’s transforming technology one task at a time.

Frequently Asked Questions

- What Are the Most Common Types of AI Agents?

  AI agents take many forms. Some are chatbots that handle customer service. Others are recommendation systems that suggest what to watch or buy, or predictive tools that forecast outcomes in areas such as finance. Each type has a specific job: speeding up work, improving customer satisfaction, or helping teams use data to make better decisions.

- How Do AI Agents Learn and Improve Over Time?

  AI agents improve by continually processing new data. Using machine learning, they analyse what people do and say, adjust their responses based on feedback, and repeat the cycle. Over many iterations, their results become more accurate.

- Can AI Agents Make Decisions Independently?

  AI agents can make some decisions on their own, using algorithms and data. However, people set the rules and limits they must follow. These guardrails keep AI agents aligned with good values and the goals of the business, and reduce the risk of harm when they act autonomously.

- What Are the Future Trends in AI Agent Development?

  Future AI agent development is expected to bring more personalized experiences through better algorithms. Wider use of edge computing should speed up processing, and developers are adopting more ethical AI practices to reduce bias. AI agents are also likely to work better across different fields, taking on more complex tasks.

- What are examples of AI agents in daily life?

  AI agents are already part of daily life for many people. Virtual assistants like Siri and Alexa, recommendation systems on sites like Netflix and Amazon, smart home devices that learn the habits of each person in the house, and AI chatbots in customer service are all examples. They make everyday tasks easier and improve the user experience.

- Is ChatGPT an AI agent?

  Yes, ChatGPT can be regarded as a conversational AI agent. It uses natural language processing to read and write in a way that sounds human, which makes it useful for tasks such as customer support and drafting new content.

- What are the challenges of using AI agents?

  The use of AI agents brings significant challenges: protecting privacy and keeping data safe, preventing bias in automated decisions, operating transparently, and making sure the underlying data is accurate. Addressing them requires ongoing human oversight and attention to fairness, so that AI agents work well for all kinds of people and businesses.

- What are some popular examples of AI agents in use today?

  Popular examples today include virtual shopping assistants that improve online shopping, customer service chatbots, diagnostic tools in healthcare, and precision agriculture systems that help farmers grow more crops. Together they show how AI agents can change a wide range of industries.

- How can AWS help with your AI agent requirements?

  AWS offers a broad set of tools and services for building AI agents, including computing power that scales with your needs, machine learning tools, and durable data storage. Together, these help businesses build and run AI agents more quickly.

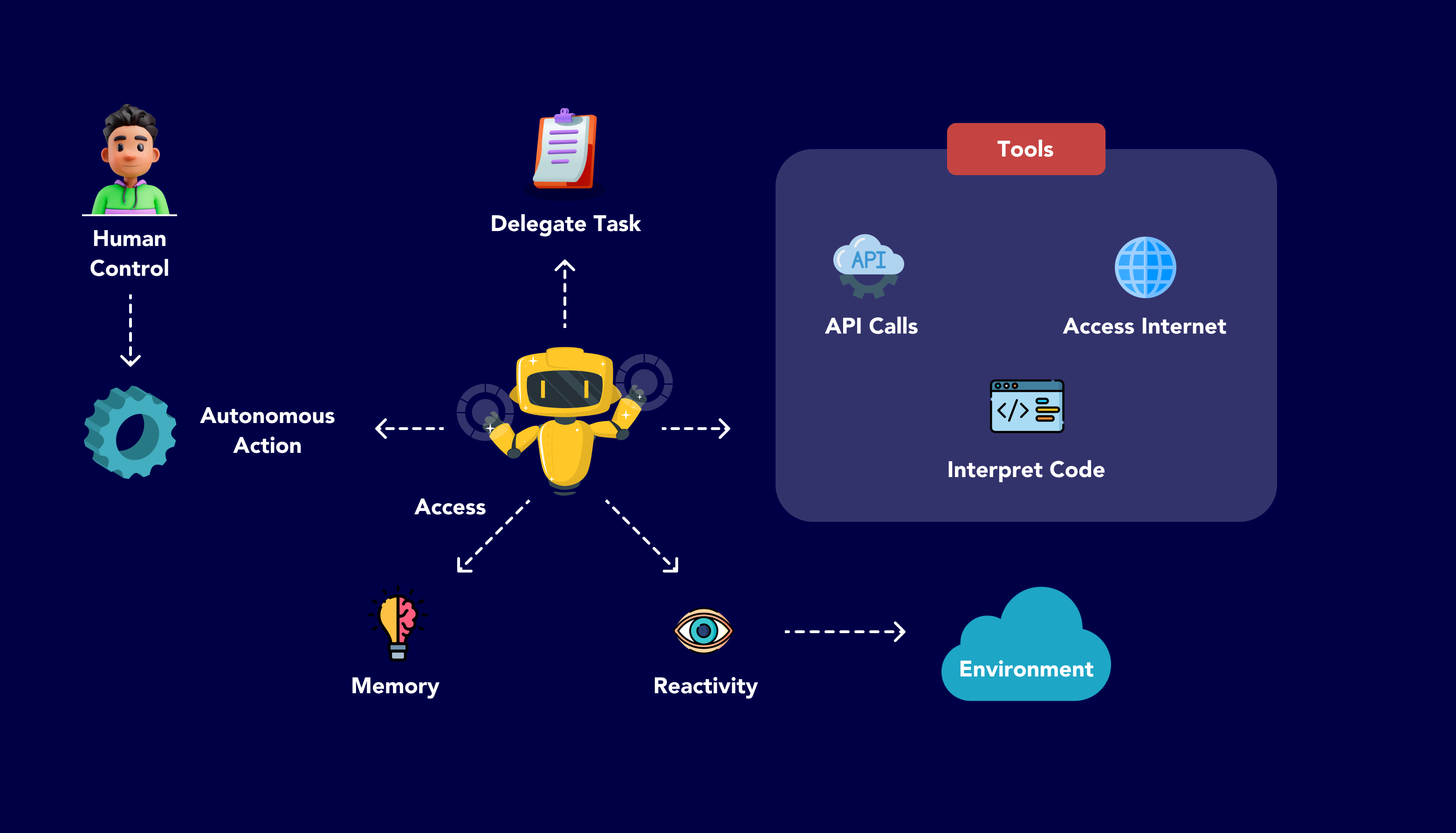

by Rajesh K | May 27, 2025 | Artificial Intelligence, Blog, Latest Post |

Software development has always been about solving complex problems, but the speed at which we’re now expected to deliver solutions is faster than ever. With agile methodologies and DevOps practices becoming the norm, teams are under constant pressure to ship high-quality code in increasingly shorter cycles. This demand for speed and quality pushes both developers and testers to find smarter, more efficient ways to work. Enter GitHub Copilot, an AI-powered code completion tool developed by GitHub and OpenAI. Initially viewed as a coding assistant for developers, Copilot is now gaining traction across multiple functions, including QA engineering, DevOps, and documentation, thanks to its versatility and power. By interpreting natural language prompts and understanding code context, Copilot enables teams to generate, review, and enhance code much faster. Whether you’re a full-stack engineer looking to speed up backend logic, a QA tester creating robust automation scripts, or a DevOps engineer maintaining YAML pipelines, GitHub Copilot helps reduce manual effort and boost productivity. It integrates into popular IDEs and workflows, enabling users to remain focused on logic and innovation rather than boilerplate and syntax. This guide explores how GitHub Copilot is reshaping software development and testing through practical use cases, expert opinions, and competitive comparisons. You’ll also learn how to set it up, maximize its utility, and responsibly use AI coding tools in modern engineering environments.

What is GitHub Copilot?

GitHub Copilot is an AI-powered code assistant that suggests code in real time based on your input. Originally powered by OpenAI’s Codex model, it draws on models trained on billions of lines of publicly available code across languages like JavaScript, Python, Java, C#, TypeScript, and Ruby.

A Brief History

- June 2021: GitHub Copilot launched in technical preview.

- June 2022: It became generally available with subscription pricing.

- 2023–2024: Expanded with features like Copilot Chat (natural language prompts in the IDE) and Copilot for CLI.

With Copilot, you can write code faster, discover APIs quickly, and even understand legacy systems using natural language prompts. For example, typing a comment like // create a function to sort users by signup date can instantly generate the full implementation.
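
As a rough illustration of what such a comment might produce, here is a minimal sketch. The `sortUsersBySignupDate` name and the `signupDate` field are assumptions made for this example; real Copilot output depends on the surrounding code and will vary.

```javascript
// create a function to sort users by signup date
// Hypothetical result: assumes each user has an ISO-8601 `signupDate` string.
function sortUsersBySignupDate(users) {
  // Copy first so the caller's array is not mutated.
  return [...users].sort(
    (a, b) => new Date(a.signupDate) - new Date(b.signupDate)
  );
}

// Sample data invented for the example.
const users = [
  { name: 'Asha', signupDate: '2024-03-10' },
  { name: 'Ben', signupDate: '2023-11-02' },
  { name: 'Chen', signupDate: '2024-01-15' },
];

console.log(sortUsersBySignupDate(users).map((u) => u.name));
```

Sorting a copy rather than the input is a design choice worth checking in any generated version, since `Array.prototype.sort` mutates in place.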

Core Features of GitHub Copilot

Copilot brings a blend of productivity and intelligence to your workflow. It suggests context-aware code, converts comments to code, and supports a wide range of languages. It helps reduce repetitive work, offers relevant API examples, and can even summarize or document unfamiliar functions.

A typical example:

    // Fetch user data from the API and log it
    fetch('https://api.example.com/users')
      .then(response => response.json())
      .then(data => console.log(data))
      .catch(error => console.error('Request failed:', error));

This saves time and encourages consistent syntax, though each suggestion should still be checked against your project’s own best practices.

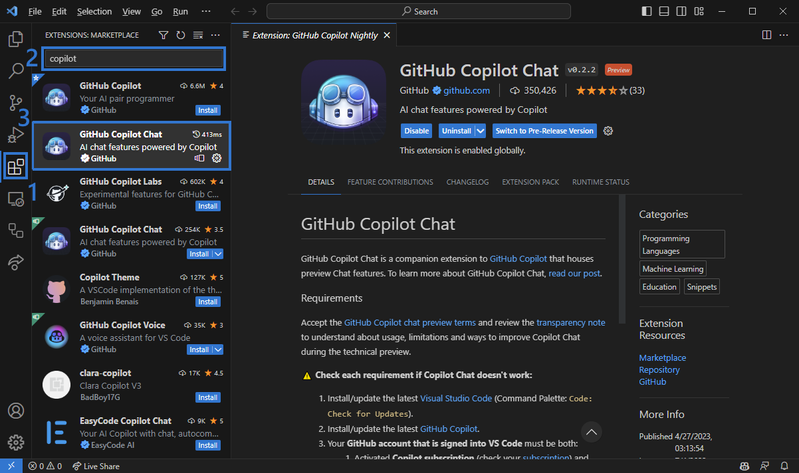

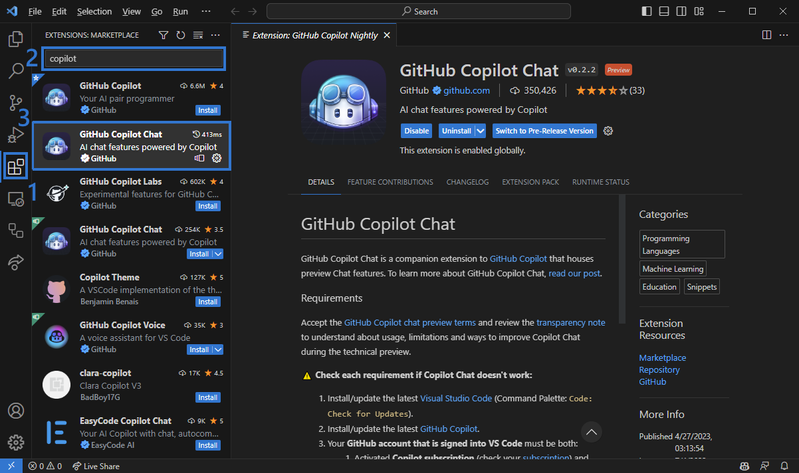

Setting Up GitHub Copilot and Copilot Chat in VS Code

To get started with GitHub Copilot, you’ll need a GitHub account and Visual Studio Code installed on your machine. Here are the steps to enable Copilot and Copilot Chat:

- Open Visual Studio Code and click on the Extensions icon on the sidebar.

- In the search bar, type “GitHub Copilot” and select it from the list. Click Install.

- Repeat this step for “GitHub Copilot Chat”.

- Sign in with your GitHub account when prompted.

- Authorize the extensions by clicking Allow in the GitHub authentication dialog.

- A browser window will open for authentication. Click Continue and then Open Visual Studio Code.app when prompted.

- Return to VS Code, confirm the URI access pop-up by clicking Open.

- Restart VS Code to complete the setup.

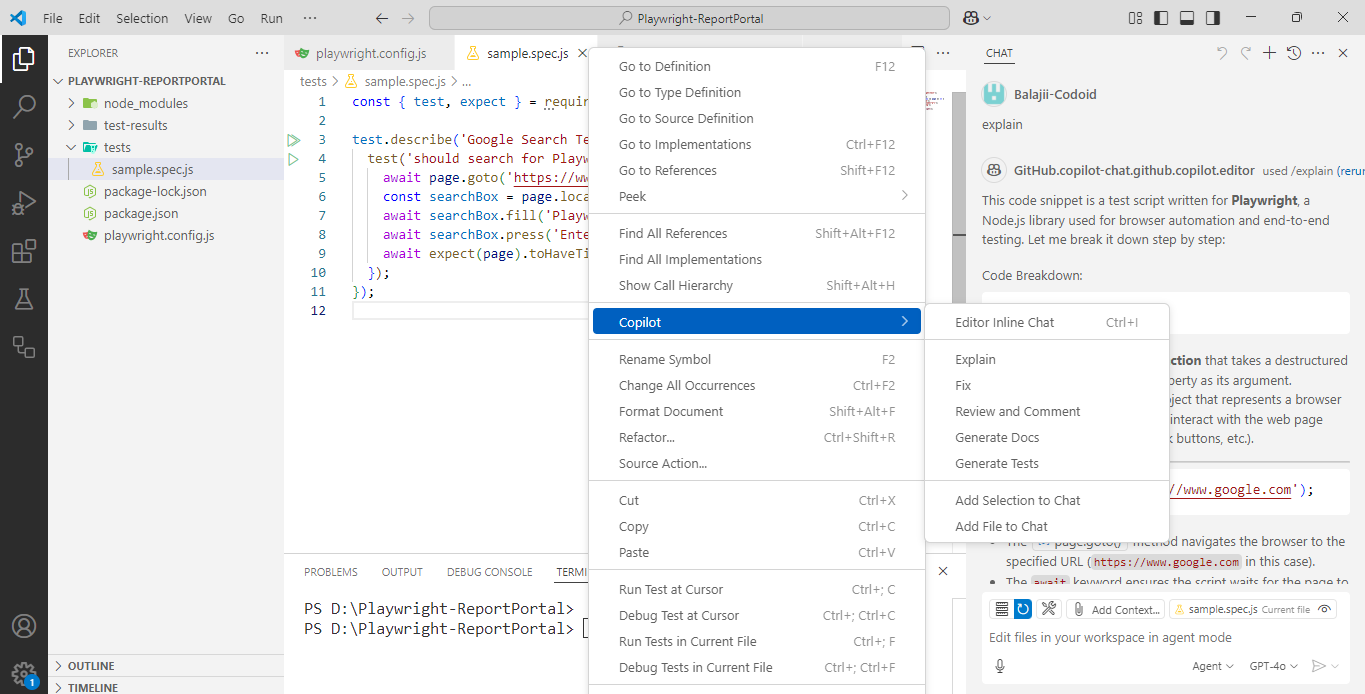

- Open any .js, .py, or similar file. Right-click and select Editor Inline Chat to use Copilot Chat.

- Type your query (e.g., “explain this function”) and hit Send. Copilot will generate a response below the query.

Copilot Chat enhances interactivity by enabling natural language conversation directly in your editor, which is especially useful for debugging and code walkthroughs.

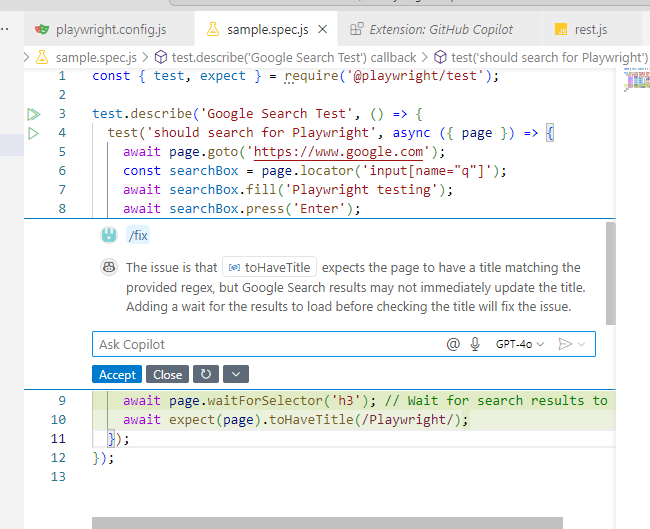

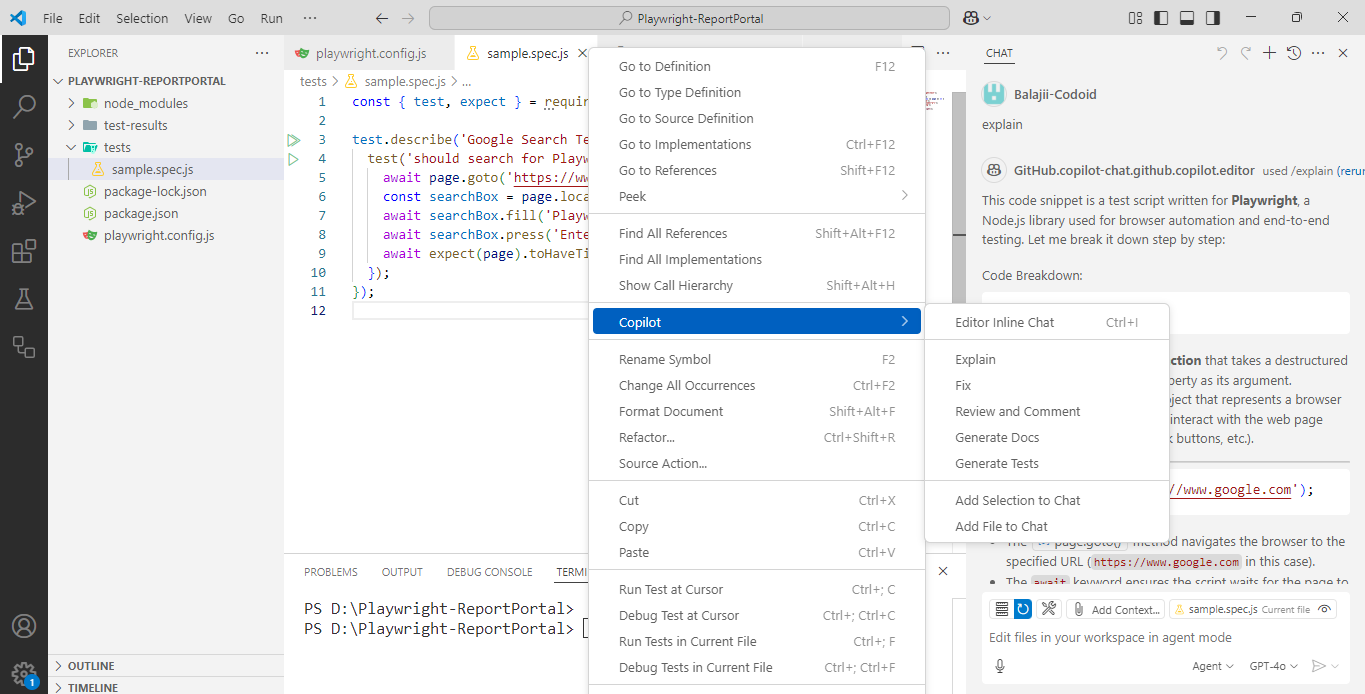

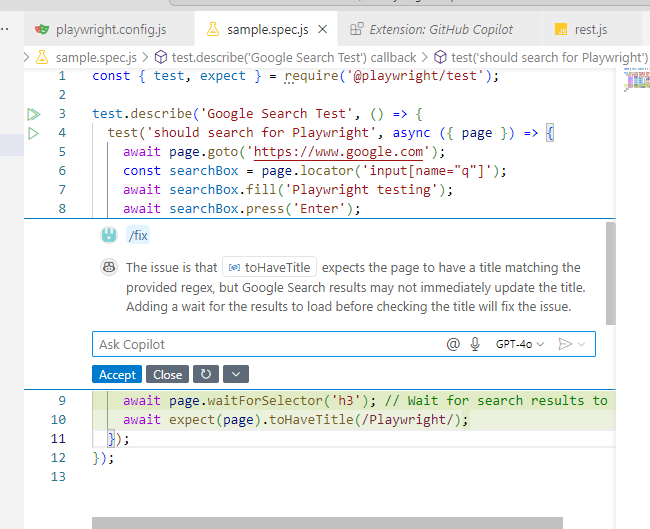

How Testers Benefit from GitHub Copilot

Testers can leverage GitHub Copilot to boost automation efficiency, create robust test scripts, and reduce repetitive manual coding. Below are several high-impact use cases:

- Unit Test Generation: Writing a comment like // write a unit test for calculateTotal leads to an auto-generated Jest test case.

- API Testing: Prompting // test POST /login with invalid data generates a structured HTTP test.

- BDD Support: Copilot helps build Given-When-Then scenarios based on feature files or comments.

- Debugging: Highlight a broken test, ask “Why is this failing?” in Copilot Chat, and receive explanations.

- Legacy Code Insight: Copilot Chat explains old test suites, helping new team members onboard faster.
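
To make the unit-test use case concrete, here is a sketch of the kind of checks such a prompt might yield. `calculateTotal` and its item shape are hypothetical, and the checks use Node’s built-in `assert` module instead of Jest so that the snippet runs on its own.

```javascript
const { strictEqual, throws } = require('node:assert');

// Hypothetical function under test: sums price * quantity for each item.
function calculateTotal(items) {
  if (!Array.isArray(items)) {
    throw new TypeError('items must be an array');
  }
  return items.reduce((sum, item) => sum + item.price * item.quantity, 0);
}

// write a unit test for calculateTotal
strictEqual(calculateTotal([]), 0); // empty cart
strictEqual(
  calculateTotal([
    { price: 10, quantity: 2 },
    { price: 5, quantity: 1 },
  ]),
  25
); // typical cart
throws(() => calculateTotal(null), TypeError); // invalid input
console.log('calculateTotal checks passed');
```

In a Jest project, the same three cases would map onto `test` blocks with `expect(...).toBe(...)` and `expect(...).toThrow(...)`.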

Example Prompts and Outputs for Testers

Prompt: // Write a Playwright test for login functionality

Output

    import { test, expect } from '@playwright/test';

    test('Login to dashboard', async ({ page }) => {
      await page.goto('https://example.com/login');
      await page.fill('#username', 'user123');
      await page.fill('#password', 'securePassword');
      await page.click('button[type="submit"]');
      await expect(page).toHaveURL('https://example.com/dashboard');
    });

Prompt: // Test GET /users API with query parameters

Output

    import axios from 'axios';

    test('should fetch users with filters', async () => {
      const response = await axios.get('https://api.example.com/users?status=active');
      expect(response.status).toBe(200);
      expect(response.data).toBeDefined();
    });

These examples illustrate how natural language prompts can be turned into executable test code, which should still be reviewed before it is relied on.

GitHub Copilot vs. Other AI Code Tools (Competitor Comparison)

| S. No | Feature | GitHub Copilot | Amazon CodeWhisperer | Tabnine |
| --- | --- | --- | --- | --- |
| 1 | Language Support | Broad (Python, JavaScript, etc.) | Focused on AWS-related languages | Broad |
| 2 | Integration | VS Code, JetBrains, Neovim | AWS Cloud9, VS Code | VS Code, JetBrains, IntelliJ |
| 3 | Customization | Limited | Tailored for AWS services | High (train on your codebase) |
| 4 | Privacy | Sends code to GitHub servers | Data may be used for AWS model training | Offers on-premises deployment |
| 5 | Best For | General-purpose coding and testing | AWS-centric development | Teams needing private, customizable AI |

Note: Always review the latest documentation and privacy policies of each tool before integration.

Limitations & Ethical Considerations

While GitHub Copilot offers significant productivity gains, it’s essential to be aware of its limitations and ethical considerations:

- Code Quality: Copilot may generate code that is syntactically correct but logically flawed. Always review and test AI-generated code thoroughly.

- Security Risks: Suggested code might introduce vulnerabilities if not carefully vetted.

- Intellectual Property: Copilot is trained on publicly available code, raising concerns about code originality and potential licensing issues. Developers should ensure compliance with licensing terms when incorporating AI-generated code.

- Bias and Representation: AI models can inadvertently perpetuate biases present in their training data. It’s crucial to remain vigilant and ensure that generated code aligns with inclusive and ethical standards.

For a deeper understanding of these concerns, refer to discussions on the ethical and legal challenges of GitHub Copilot.

The Future of Test Automation with AI Tools

The integration of AI into test automation is poised to revolutionize the software testing landscape:

- Adaptive Testing: AI-driven tools can adjust test cases in real-time based on application changes, reducing maintenance overhead.

- Predictive Analytics: Leveraging historical data, AI can predict potential failure points, allowing proactive issue resolution.

- Enhanced Test Coverage: AI can identify untested code paths, ensuring more comprehensive testing.

- Self-Healing Tests: Automated tests can autonomously update themselves in response to UI changes, minimizing manual intervention.
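
One simple way to approximate self-healing is to keep several candidate locators per element and fall back when the primary one stops matching. The sketch below is a toy under stated assumptions: the page is modelled as a plain set of selector strings, and `resolveLocator` is a hypothetical helper, not an API of any testing tool.

```javascript
// Toy model: the "page" is just the set of selectors that currently match.
function resolveLocator(page, candidates) {
  for (const selector of candidates) {
    if (page.has(selector)) {
      // `healed` is true when anything other than the primary locator was used.
      return { selector, healed: selector !== candidates[0] };
    }
  }
  throw new Error(`No candidate matched: ${candidates.join(', ')}`);
}

// Simulated UI change: the submit button's id was renamed, so '#submit' is gone.
const pageAfterUiChange = new Set([
  '[data-testid="login-submit"]',
  'button[type="submit"]',
]);

const result = resolveLocator(pageAfterUiChange, [
  '#submit', // primary locator (now stale)
  '[data-testid="login-submit"]', // first fallback
  'button[type="submit"]', // second fallback
]);

console.log(result);
```

Reporting `healed: true` instead of silently passing lets a tester decide whether the primary locator should be updated.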

As AI continues to evolve, testers will transition from manual script writing to strategic oversight, focusing on test strategy, analysis, and continuous improvement.

Expert Insights on GitHub Copilot in QA

Industry professionals have shared their experiences with GitHub Copilot:

- Shallabh Dixit, a QA Automation Engineer, noted that Copilot significantly streamlined his Selenium automation tasks, allowing for quicker test script generation and reduced manual coding.

- Bhabani Prasad Swain emphasized that while Copilot accelerates test case creation, it’s essential to review and validate the generated code to ensure it aligns with the application’s requirements.

These insights underscore the importance of combining AI tools with human expertise to achieve optimal results in QA processes.

Conclusion

GitHub Copilot isn’t just a productivity boost; it represents a significant shift in how software is written, tested, and maintained. By combining the speed and scale of AI with human creativity and critical thinking, Copilot empowers teams to focus on innovation, quality, and strategy. For software developers, it removes the friction of boilerplate coding and shortens the learning curve with intuitive suggestions. For testers, it automates test case generation, accelerates debugging, and works well alongside tools like Selenium and Playwright. For managers and technical leads, it supports faster delivery cycles without compromising on quality. As AI tools continue to mature, GitHub Copilot will likely evolve into an indispensable assistant across the entire software development lifecycle. Whether you’re building features, verifying functionality, or writing infrastructure code, Copilot can serve as a useful partner in your engineering toolkit.

Frequently Asked Questions

- Is GitHub Copilot free?

  GitHub Copilot offers a 30-day free trial of its paid plan, and GitHub has also introduced a limited free tier. Beyond those limits, a subscription is required.

- Can I use it offline?

  No, Copilot requires an internet connection to function.

- Is Copilot secure for enterprise?

  Yes, especially with proper configuration and access policies.

- Can testers use it without deep coding experience?

  Yes. It’s excellent for generating boilerplate and learning syntax.

- What frameworks does it support?

  Copilot understands Playwright, Cypress, Selenium, and many other test frameworks.

by Rajesh K | May 2, 2025 | Artificial Intelligence, Blog, Latest Post |

As engineering teams scale and AI adoption accelerates, MLOps and DevOps have emerged as foundational practices for delivering robust software and machine learning solutions efficiently. While DevOps has long served as the cornerstone of streamlined software development and deployment, MLOps is rapidly gaining momentum as organizations operationalize machine learning models at scale. Both aim to improve collaboration, automate workflows, and ensure reliability in production, but each addresses different challenges: DevOps focuses on application lifecycle management, whereas MLOps tackles the complexities of data, model training, and continuous ML integration. This blog explores the distinctions and synergies between the two, highlighting core principles, tooling ecosystems, and real-world use cases to help you understand how DevOps and MLOps can intersect to drive innovation in modern engineering environments.

What is DevOps?

DevOps, a portmanteau of “Development” and “Operations,” is a set of practices that bridges the gap between software development and IT operations. It emphasizes collaboration, automation, and continuous delivery to enable faster and more reliable software releases. DevOps emerged in the late 2000s as a response to the inefficiencies of siloed development and operations teams, where miscommunication often led to delays and errors.

Core Principles of DevOps

DevOps is built on the CALMS framework:

- Culture: Foster collaboration and shared responsibility across teams.

- Automation: Automate repetitive tasks like testing, deployment, and monitoring.

- Lean: Minimize waste and optimize processes for efficiency.

- Measurement: Track performance metrics to drive continuous improvement.

- Sharing: Encourage knowledge sharing to break down silos.

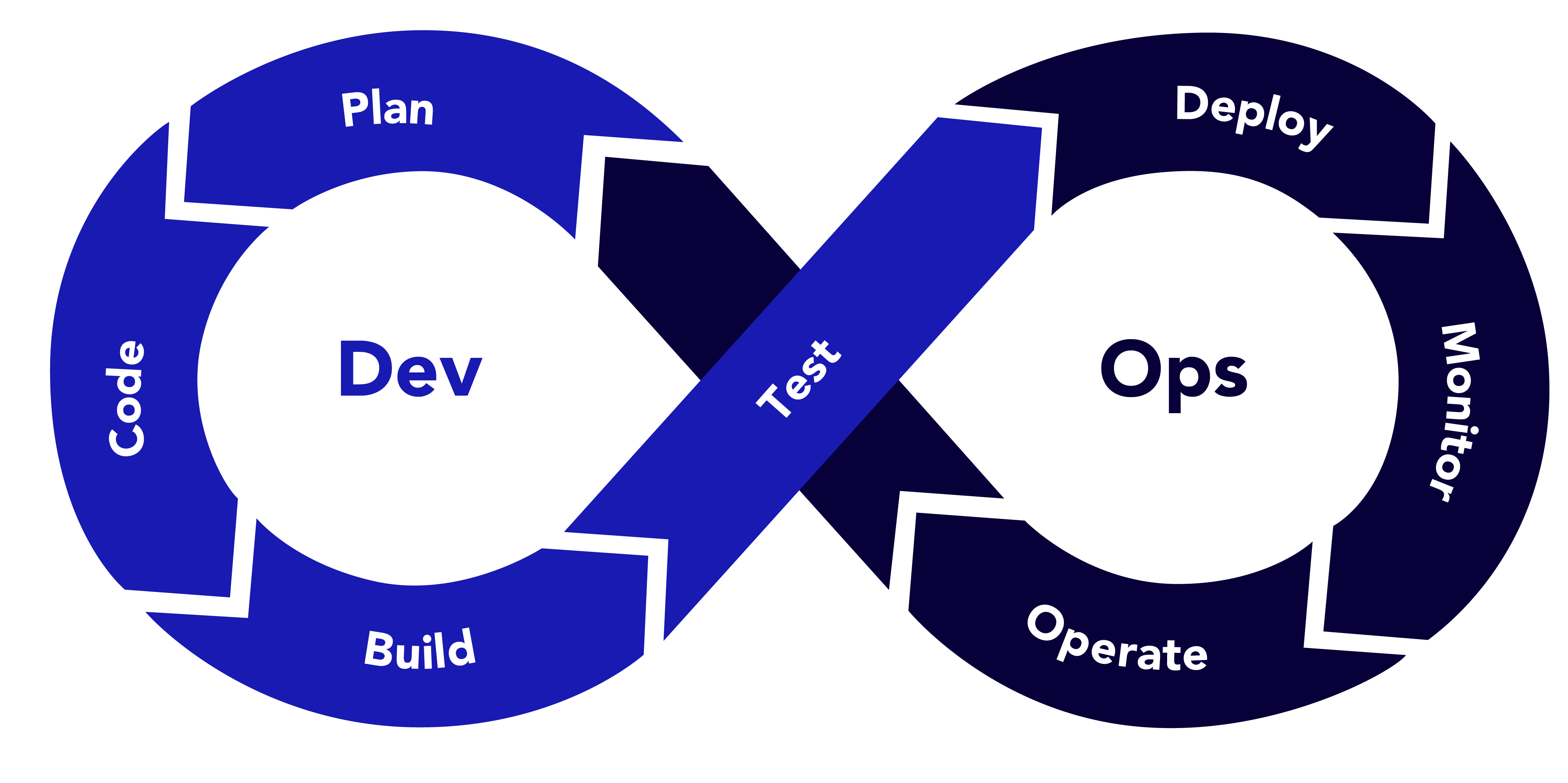

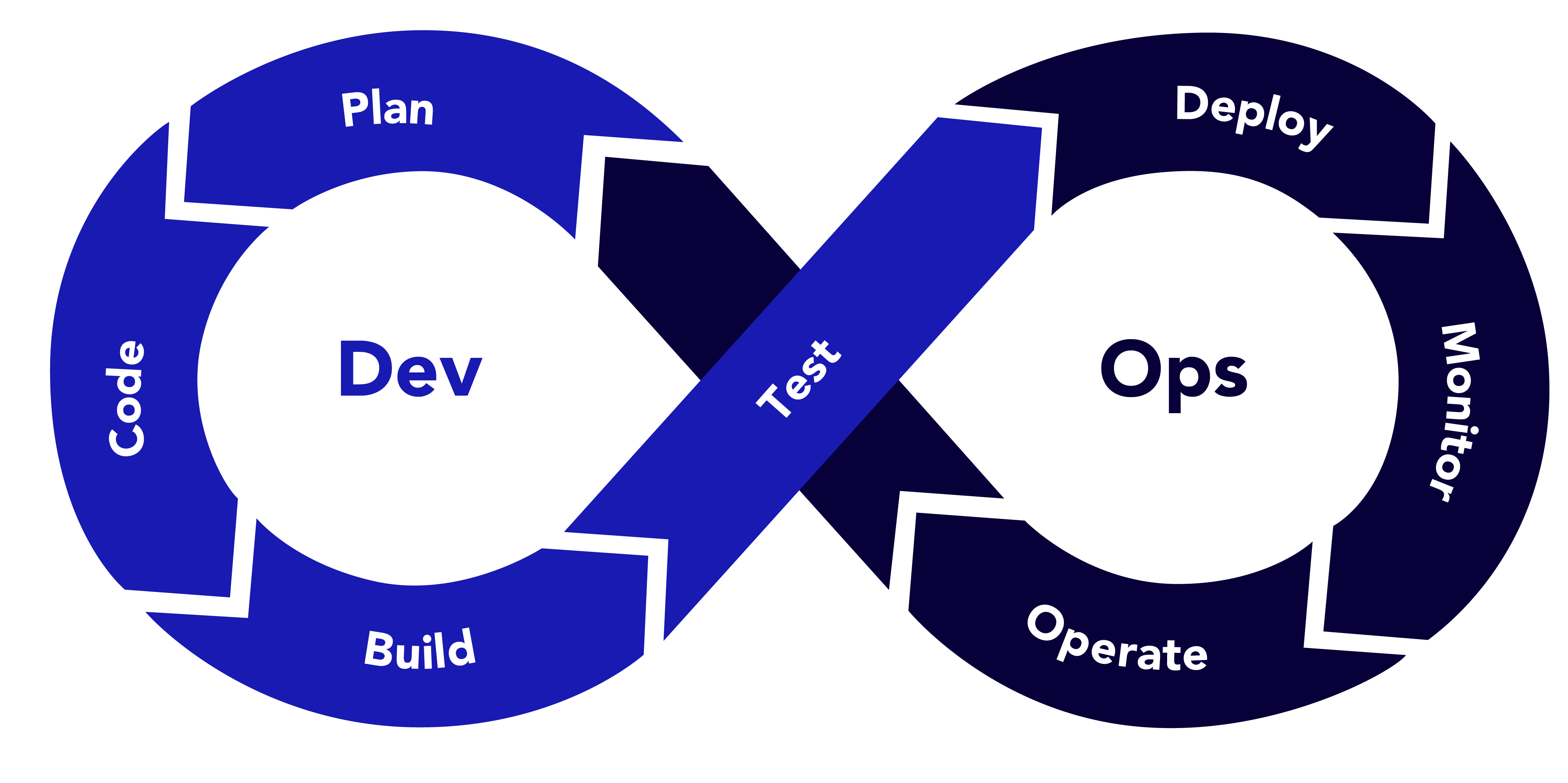

DevOps Workflow

The DevOps lifecycle revolves around the CI/CD pipeline (Continuous Integration/Continuous Deployment):

1. Plan: Define requirements and plan features.

2. Code: Write and commit code to a version control system (e.g., Git).

3. Build: Compile code and create artefacts.

4. Test: Run automated tests to ensure code quality.

5. Deploy: Release code to production or staging environments.

6. Monitor: Track application performance and user feedback.

7. Operate: Maintain and scale infrastructure.
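
The ordering of these stages matters mostly because a failure should stop everything downstream. A minimal sketch of that gating logic follows; the stage functions are stand-ins invented for illustration and do no real work.

```javascript
// Each stage returns true on success; real stages would invoke build or test tools.
const stages = [
  ['build', () => true],
  ['test', () => false], // simulate a failing test run
  ['deploy', () => true],
];

// Run stages in order and stop at the first failure.
function runPipeline(stageList) {
  const completed = [];
  for (const [name, run] of stageList) {
    if (!run()) {
      return { ok: false, failedAt: name, completed };
    }
    completed.push(name);
  }
  return { ok: true, failedAt: null, completed };
}

const outcome = runPipeline(stages);
console.log(outcome);
```

Because the simulated test stage fails, the deploy stage never runs, which is the behaviour a CI/CD server enforces between jobs.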

Example: DevOps in Action

Imagine a team developing a web application for an e-commerce platform. Developers commit code to a Git repository, triggering a CI/CD pipeline in Jenkins. The pipeline runs unit tests, builds a Docker container, and deploys it to a Kubernetes cluster on AWS. Monitoring tools like Prometheus and Grafana track performance, and any issues trigger alerts for the operations team. This streamlined process ensures rapid feature releases with minimal downtime.

What is MLOps?

MLOps, short for “Machine Learning Operations,” is a specialised framework that adapts DevOps principles to the unique challenges of machine learning workflows. ML models are not static pieces of code; they require data preprocessing, model training, validation, deployment, and continuous monitoring to maintain performance. MLOps aims to automate and standardize these processes to ensure scalable and reproducible ML systems.

Core Principles of MLOps

MLOps extends DevOps with ML-specific considerations:

- Data-Centric: Prioritise data quality, versioning, and governance.

- Model Lifecycle Management: Automate training, evaluation, and deployment.

- Continuous Monitoring: Track model performance and data drift.

- Collaboration: Align data scientists, ML engineers, and operations teams.

- Reproducibility: Ensure experiments can be replicated with consistent results.

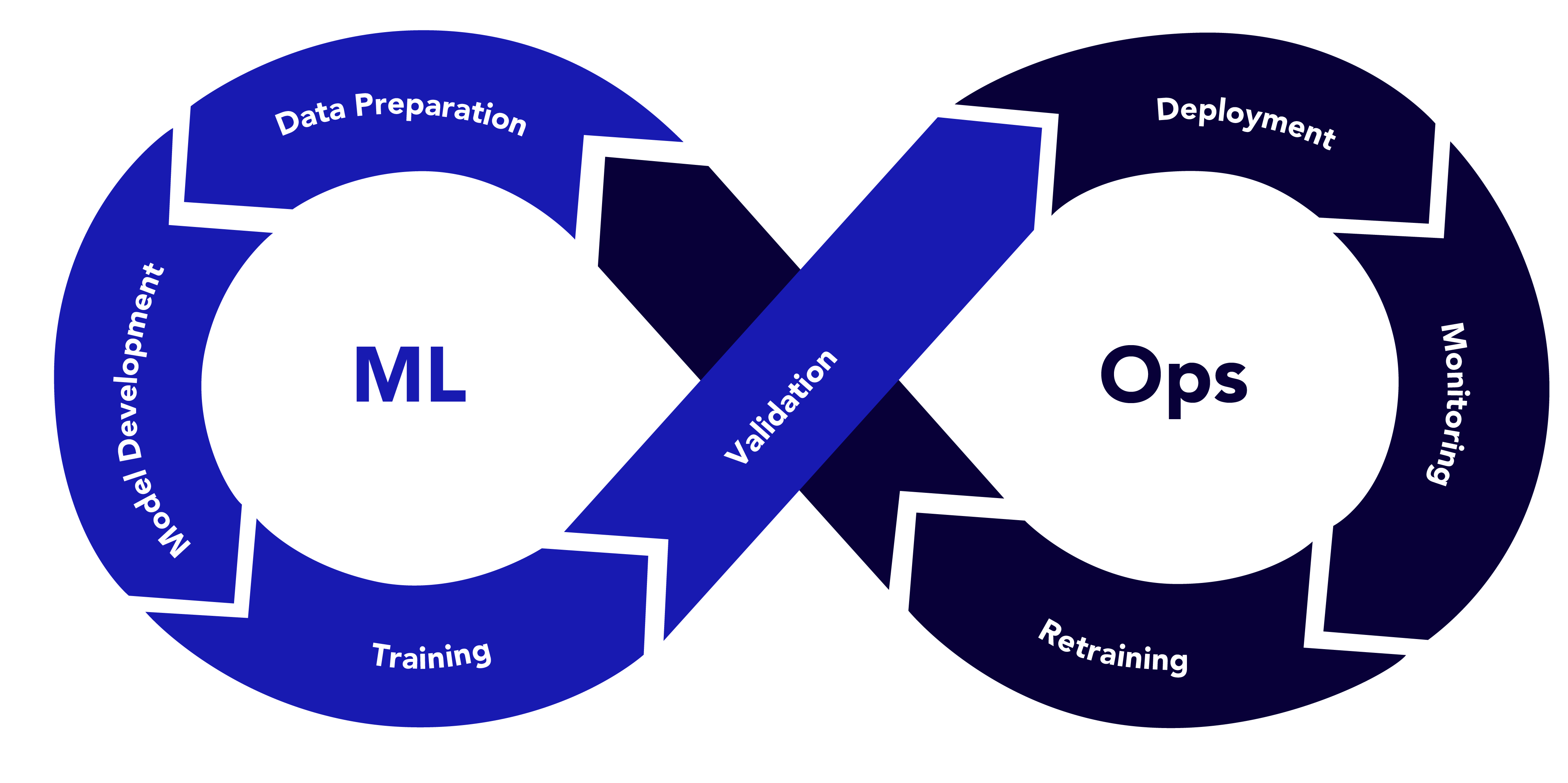

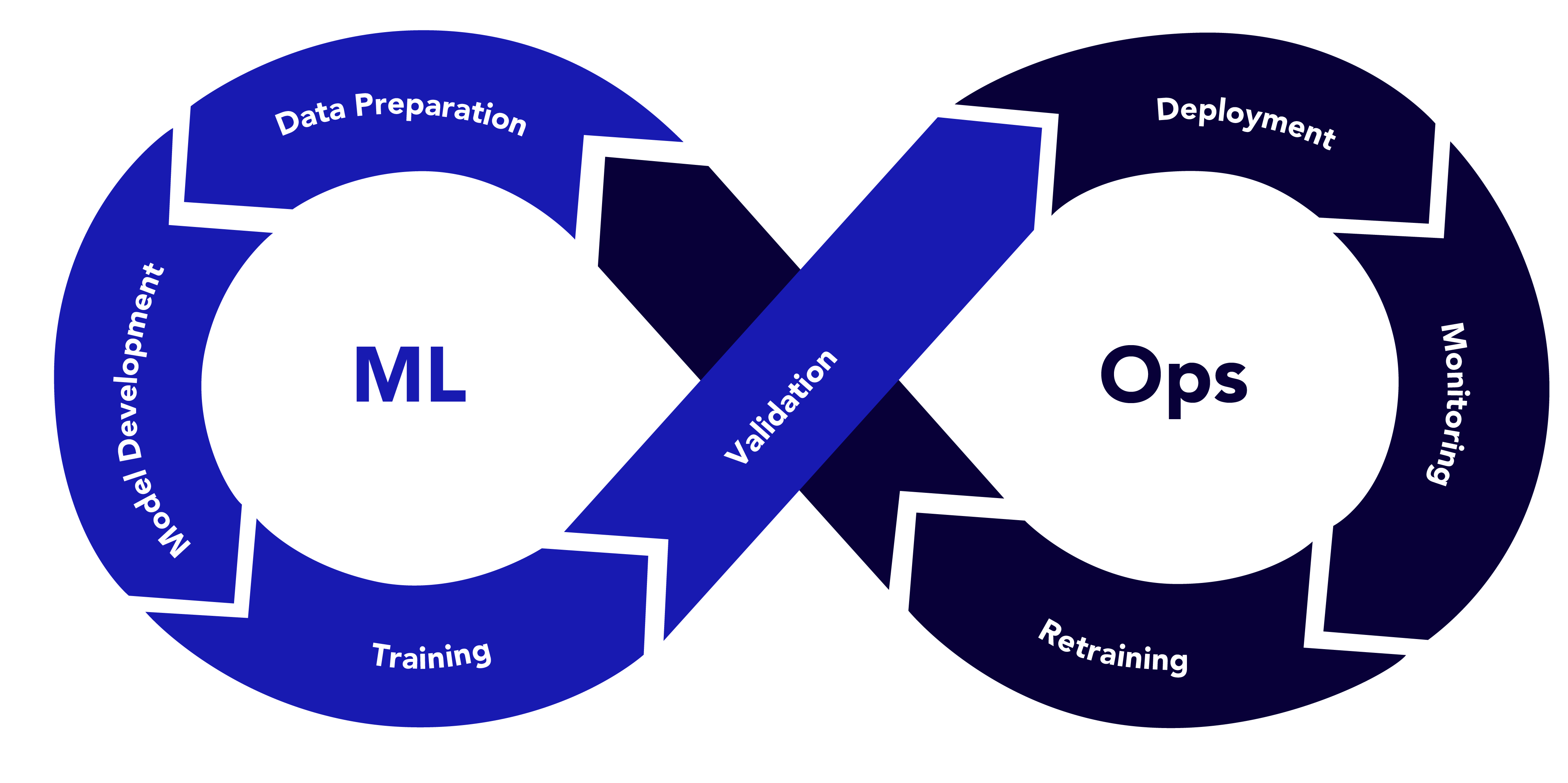

MLOps Workflow

The MLOps lifecycle includes:

1. Data Preparation: Collect, clean, and version data.

2. Model Development: Experiment with algorithms and hyperparameters.

3. Training: Train models on large datasets, often using GPUs.

4. Validation: Evaluate model performance using metrics like accuracy or F1 score.

5. Deployment: Deploy models as APIs or embedded systems.

6. Monitoring: Track model predictions, data drift, and performance degradation.

7. Retraining: Update models with new data to maintain accuracy.
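
For the validation step, it can help to see how accuracy and F1 are derived from the same confusion counts. The labels below are made up for the example, and the `evaluate` helper is a sketch, not part of any ML library.

```javascript
// Compute accuracy, precision, recall, and F1 for binary labels (1 = positive).
// Sketch only: it does not guard against division by zero when there are
// no predicted or no actual positives.
function evaluate(actual, predicted) {
  let tp = 0, fp = 0, fn = 0, tn = 0;
  actual.forEach((label, i) => {
    if (predicted[i] === 1 && label === 1) tp++;
    else if (predicted[i] === 1 && label === 0) fp++;
    else if (predicted[i] === 0 && label === 1) fn++;
    else tn++;
  });
  const precision = tp / (tp + fp);
  const recall = tp / (tp + fn);
  return {
    accuracy: (tp + tn) / actual.length,
    precision,
    recall,
    f1: (2 * precision * recall) / (precision + recall),
  };
}

// Invented ground truth and model predictions.
const actual = [1, 0, 1, 1, 0, 0, 1, 0];
const predicted = [1, 0, 0, 1, 0, 1, 1, 0];

const metrics = evaluate(actual, predicted);
console.log(metrics);
```

Here there are 3 true positives, 1 false positive, 1 false negative, and 3 true negatives, so accuracy, precision, recall, and F1 all come out to 0.75. On imbalanced data the metrics diverge, which is why F1 is often reported alongside accuracy.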

Example: MLOps in Action

Consider a company building a recommendation system for a streaming service. Data scientists preprocess user interaction data and store it in a data lake. They use MLflow to track experiments, training a collaborative filtering model with TensorFlow. The model is containerized with Docker and deployed as a REST API using Kubernetes. A monitoring system detects a drop in recommendation accuracy due to changing user preferences (data drift), triggering an automated retraining pipeline. This ensures the model remains relevant and effective.
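
The drift-then-retrain loop in this example can be sketched with a very simple check: compare the mean of a live feature against its training-time mean, measured in baseline standard deviations. The feature, the data, and the threshold of 2 are assumptions for illustration; production monitoring tools typically use richer statistical tests.

```javascript
const mean = (xs) => xs.reduce((a, b) => a + b, 0) / xs.length;
const stdDev = (xs) => {
  const m = mean(xs);
  return Math.sqrt(mean(xs.map((x) => (x - m) ** 2)));
};

// Flag drift when the live mean moves more than `threshold` baseline
// standard deviations away from the training-time mean.
function detectDrift(baseline, live, threshold = 2) {
  const shift = Math.abs(mean(live) - mean(baseline)) / stdDev(baseline);
  return { shift, drifted: shift > threshold };
}

// Hypothetical feature: average minutes watched per session.
const trainingWindow = [30, 32, 29, 31, 30, 28, 33, 31];
const lastWeek = [44, 47, 45, 46, 43, 48, 45, 46];

const report = detectDrift(trainingWindow, lastWeek);
if (report.drifted) {
  console.log(`Drift detected (shift=${report.shift.toFixed(1)}): trigger retraining`);
}
```

In a real pipeline, the `console.log` would be replaced by whatever starts the retraining job, and the check would run on a schedule.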

Comparing MLOps vs DevOps

While MLOps and DevOps share the goal of streamlining development and deployment, their focus areas, challenges, and tools differ significantly. Below is a detailed comparison across key dimensions.

| S. No | Aspect | DevOps | MLOps | Example |
| --- | --- | --- | --- | --- |
| 1 | Scope and Objectives | Focuses on building, testing, and deploying software applications. Goal: reliable, scalable software with minimal latency. | Centres on developing, deploying, and maintaining ML models. Goal: accurate models that adapt to changing data. | DevOps: Output is a web application. MLOps: Output is a model needing ongoing validation. |
| 2 | Data Dependency | Software behaviour is deterministic and code-driven. Data is used mainly for testing. | ML models are data-driven. Data quality, volume, and drift heavily impact performance. | DevOps: Login feature tested with predefined inputs. MLOps: Fraud detection model trained on real-world data and monitored for anomalies. |
| 3 | Lifecycle Complexity | Linear lifecycle: code → build → test → deploy → monitor. Changes are predictable. | Iterative lifecycle with feedback loops for retraining and revalidation. Models degrade over time due to data drift. | DevOps: UI updated with new features. MLOps: Demand forecasting model retrained as sales patterns change. |
| 4 | Testing and Validation | Tests for functional correctness (unit, integration) and performance (load). | Tests include model evaluation (precision, recall), data validation (bias, missing values), and robustness. | DevOps: Tests ensure payment processing. MLOps: Tests ensure the credit model avoids discrimination. |
| 5 | Monitoring | Monitors uptime, latency, and error rates. | Monitors model accuracy, data drift, fairness, and prediction latency. | DevOps: Alerts for server downtime. MLOps: Alerts for accuracy drop due to new user demographics. |
| 6 | Tools and Technologies | Git, GitHub, GitLab; Jenkins, CircleCI, GitHub Actions; Docker, Kubernetes; Prometheus, Grafana, ELK; Terraform, Ansible | DVC, Delta Lake; MLflow, Weights & Biases; TensorFlow, PyTorch, Scikit-learn; Seldon, TFX, KServe; Evidently AI, Arize AI | DevOps: Jenkins + Terraform. MLOps: MLflow + TFX. |
| 7 | Team Composition | Developers, QA engineers, operations specialists | Data scientists, ML engineers, data engineers, ops teams. Complex collaboration. | DevOps: Team handles code reviews. MLOps: Aligns model builders, data pipeline owners, and deployment teams. |

Aligning MLOps and DevOps

While MLOps and DevOps have distinct focuses, they are not mutually exclusive. Organisations can align them to create a unified pipeline that supports both software and ML development. Below are strategies to achieve this alignment.

1. Unified CI/CD Pipelines

Integrate ML workflows into existing CI/CD systems. For example, use Jenkins or GitLab to trigger data preprocessing, model training, and deployment alongside software builds.

Example: A retail company uses GitLab to manage both its e-commerce platform (DevOps) and recommendation engine (MLOps). Commits to the codebase trigger software builds, while updates to the model repository trigger training pipelines.
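
The routing idea in this example, where different changes trigger different pipelines, can be sketched as a path-prefix lookup. The repository layout and pipeline names below are hypothetical; GitLab CI expresses a similar idea declaratively with `rules:changes`.

```javascript
// Hypothetical repo layout: app code under src/, model code and config under models/.
const rules = [
  { prefix: 'src/', pipeline: 'software-build' },
  { prefix: 'models/', pipeline: 'model-training' },
];

// Return the distinct pipelines that a set of changed files should trigger.
function pipelinesFor(changedFiles) {
  const triggered = new Set();
  for (const file of changedFiles) {
    for (const { prefix, pipeline } of rules) {
      if (file.startsWith(prefix)) triggered.add(pipeline);
    }
  }
  return [...triggered].sort();
}

console.log(pipelinesFor(['src/cart/checkout.js']));
console.log(pipelinesFor(['models/recommender/train.py', 'src/api/server.js']));
```

A commit touching only application code starts just the software build, while a commit touching both areas starts both pipelines.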

2. Shared Infrastructure

Leverage containerization (Docker, Kubernetes) and cloud platforms (AWS, Azure, GCP) for both software and ML workloads. This reduces overhead and ensures consistency.

Example: A healthcare company deploys a patient management system (DevOps) and a diagnostic model (MLOps) on the same Kubernetes cluster, using shared monitoring tools like Prometheus.

3. Cross-Functional Teams

Foster collaboration between MLOps and DevOps teams through cross-training and shared goals. Data scientists can learn CI/CD basics, while DevOps engineers can learn how ML models are deployed.

Example: A fintech firm organises workshops where DevOps engineers learn about model drift, and data scientists learn about Kubernetes. This reduces friction during deployments.

4. Standardised Monitoring

Use a unified monitoring framework to track both application and model performance. Tools like Grafana can visualise metrics from software (e.g., latency) and models (e.g., accuracy).

Example: A logistics company uses Grafana to monitor its delivery tracking app (DevOps) and demand forecasting model (MLOps), with dashboards showing both system uptime and prediction errors.
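
A unified framework usually means software and model metrics share one rule format. The sketch below illustrates that with made-up metric names and thresholds; it is not Grafana configuration, only the underlying idea.

```javascript
// One rule format for both software and model metrics (thresholds are invented).
const alertRules = [
  { metric: 'p95_latency_ms', source: 'app', breached: (v) => v > 500 },
  { metric: 'error_rate', source: 'app', breached: (v) => v > 0.01 },
  { metric: 'forecast_mape', source: 'model', breached: (v) => v > 0.15 },
];

// Return the rules that fire for a snapshot of current metric values.
function evaluateAlerts(snapshot) {
  return alertRules
    .filter((rule) => rule.metric in snapshot && rule.breached(snapshot[rule.metric]))
    .map((rule) => `${rule.source}:${rule.metric}`);
}

const alerts = evaluateAlerts({
  p95_latency_ms: 320,
  error_rate: 0.002,
  forecast_mape: 0.22,
});
console.log(alerts);
```

In this snapshot the application is healthy but the forecasting error exceeds its threshold, so only the model alert fires.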

5. Governance and Compliance

Align on governance practices, especially for regulated industries. Both DevOps and MLOps must ensure security, data privacy, and auditability.

Example: A bank implements role-based access control (RBAC) for its trading platform (DevOps) and credit risk model (MLOps), ensuring compliance with GDPR and financial regulations.
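
At its core, role-based access control is a lookup from role to permitted actions. The roles and permission strings below are invented for this sketch; a real deployment would rely on the access-control features of its platform rather than hand-rolled checks.

```javascript
// Hypothetical roles and permissions covering both app and model resources.
const rolePermissions = new Map([
  ['devops-engineer', ['trading-platform:deploy', 'credit-model:deploy']],
  ['data-scientist', ['credit-model:train', 'credit-model:read-metrics']],
  ['auditor', ['trading-platform:read-logs', 'credit-model:read-metrics']],
]);

// Unknown roles get no permissions (deny by default).
function can(role, permission) {
  return (rolePermissions.get(role) ?? []).includes(permission);
}

console.log(can('data-scientist', 'credit-model:train')); // true
console.log(can('data-scientist', 'credit-model:deploy')); // false
console.log(can('intern', 'credit-model:train')); // false
```

Keeping application and model permissions in one table makes it easier to audit who can change what across both systems.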

Real-World Case Studies

Case Study 1: Netflix (MLOps and DevOps Integration)

Netflix uses DevOps to manage its streaming platform and MLOps for its recommendation engine. The DevOps team leverages Spinnaker for CI/CD and AWS for infrastructure. The MLOps team uses custom pipelines to train personalisation models, with data stored in S3 and models deployed via SageMaker. Both teams share Kubernetes for deployment and Prometheus for monitoring, ensuring seamless delivery of features and recommendations.

Key Takeaway: Shared infrastructure and monitoring enable Netflix to scale both software and ML workloads efficiently.

Case Study 2: Uber (MLOps for Autonomous Driving)

Uber’s autonomous driving division relies heavily on MLOps to develop and deploy perception models. Data from sensors is versioned using DVC, and models are trained with TensorFlow. The MLOps pipeline integrates with Uber’s DevOps infrastructure, using Docker and Kubernetes for deployment. Continuous monitoring detects model drift due to new road conditions, triggering retraining.

Key Takeaway: MLOps extends DevOps to handle the iterative nature of ML, with a focus on data and model management.

Challenges and Solutions

DevOps Challenges

Siloed Teams: Miscommunication between developers and operations.

- Solution: Adopt a DevOps culture with shared tools and goals.

Legacy Systems: Older infrastructure may not support automation.

- Solution: Gradually migrate to cloud-native solutions like Kubernetes.

MLOps Challenges

Data Drift: Models degrade when input data changes.

- Solution: Implement monitoring tools like Evidently AI to detect drift and trigger retraining.

Reproducibility: Experiments are hard to replicate without proper versioning.

- Solution: Use tools like MLflow and DVC for experimentation and data versioning.
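
The principle behind reproducibility tooling is that a run should be identified by everything that determines its result: code, data, and parameters. The sketch below illustrates that principle with a hash; it is not how MLflow or DVC work internally, and the `runFingerprint` helper and its field names are assumptions.

```javascript
const { createHash } = require('node:crypto');

// Build a stable fingerprint from everything that should define a run.
function runFingerprint({ codeCommit, dataVersion, params }) {
  // Sort keys so that parameter order does not change the hash.
  const sortedParams = Object.keys(params)
    .sort()
    .map((key) => [key, params[key]]);
  const payload = JSON.stringify({ codeCommit, dataVersion, params: sortedParams });
  return createHash('sha256').update(payload).digest('hex').slice(0, 12);
}

const runA = runFingerprint({ codeCommit: 'abc123', dataVersion: 'v7', params: { lr: 0.01, epochs: 5 } });
const runB = runFingerprint({ codeCommit: 'abc123', dataVersion: 'v7', params: { epochs: 5, lr: 0.01 } });
const runC = runFingerprint({ codeCommit: 'abc123', dataVersion: 'v8', params: { lr: 0.01, epochs: 5 } });

console.log(runA === runB); // true: same inputs, different key order
console.log(runA === runC); // false: the data version changed
```

If two runs share a fingerprint but produce different metrics, something outside the tracked inputs (for example, an unpinned dependency or an unseeded random generator) is affecting the result.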

Future Trends

- AIOps: Integrating AI into DevOps for predictive analytics and automated incident resolution.

- AutoML in MLOps: Automating model selection and hyperparameter tuning to streamline MLOps pipelines.

- Serverless ML: Deploying models using serverless architectures (e.g., AWS Lambda) for cost efficiency.

- Federated Learning: Training models across distributed devices, requiring new MLOps workflows.

Conclusion

MLOps and DevOps are complementary frameworks that address the distinct needs of software and machine learning development. While DevOps focuses on delivering reliable software through CI/CD, MLOps tackles the complexities of data-driven ML models with iterative training and monitoring. By aligning their tools, processes, and teams, organisations can build robust pipelines that support both traditional applications and AI-driven solutions. Whether you’re deploying a web app or a recommendation system, understanding the interplay between DevOps and MLOps is key to staying competitive.

Start by assessing your organisation’s needs: Are you building software, ML models, or both? Then, adopt the right tools and practices to create a seamless workflow. With MLOps and DevOps working in harmony, teams have far more room to innovate.

Frequently Asked Questions

- Can DevOps and MLOps be used together?

  Yes, integrating MLOps into existing DevOps pipelines helps organizations build unified systems that support both software and ML workflows, improving collaboration, efficiency, and scalability.

- Why is MLOps necessary for machine learning projects?

  MLOps addresses ML-specific challenges like data drift, reproducibility, and model degradation, ensuring that models remain accurate, reliable, and maintainable over time.

- What tools are commonly used in MLOps and DevOps?

  DevOps tools include Jenkins, Docker, Kubernetes, and Prometheus. MLOps tools include MLflow, DVC, TFX, TensorFlow, and monitoring tools like Evidently AI and Arize AI.

- What industries benefit most from MLOps and DevOps integration?

  Industries like healthcare, finance, e-commerce, and autonomous vehicles greatly benefit from integrating DevOps and MLOps due to their reliance on both scalable software systems and data-driven models.

- What is the future of MLOps and DevOps?

  Trends like AIOps, AutoML, serverless ML, and federated learning are shaping the future, pushing toward more automation, distributed learning, and intelligent monitoring across pipelines.

by Rajesh K | Apr 22, 2025 | Artificial Intelligence, Blog, Latest Post |

Picture this: you describe your dream app in plain English, and within minutes, it’s a working product: no coding, no setup, just your vision brought to life. This is Vibe Coding, the AI-powered approach redefining software development in 2025. By turning natural language prompts into working applications, Vibe Coding lets developers, designers, and even non-technical teams create faster and more freely. In this blog, we’ll dive into what Vibe Coding is, its transformative impact, the latest tools driving it, its benefits for QA teams, emerging trends, and how you can leverage it to stay ahead. This guide is your roadmap to mastering Vibe Coding in today’s fast-evolving tech landscape.

What Is Vibe Coding?

Vibe Coding is a groundbreaking approach to software development where you craft applications using natural language prompts instead of traditional code. Powered by advanced AI models, it translates your ideas into functional software, from user interfaces to backend logic, with minimal effort.

Instead of writing:

    const fetchData = async (url) => {
      const response = await fetch(url);
      return response.json();
    };

You simply say:

"Create a function to fetch and parse JSON data from a URL."

The AI generates the code, tests, and even documentation instantly.

Vibe Coding shifts the focus from syntax to intent, making development faster, more accessible, and collaborative. It’s not just coding; it’s creating with clarity.

Why Vibe Coding Matters in 2025

As AI technologies like large language models (LLMs) evolve, Vibe Coding has become a game-changer. Here’s why it’s critical today:

- Democratized Development: Non-coders, including designers and product managers, can now build apps using plain language.

- Accelerated Innovation: Rapid prototyping and iteration mean products hit the market faster.

- Cross-Team Collaboration: Teams align through shared prompts, reducing miscommunication.

- Scalability: AI handles repetitive tasks, letting developers focus on high-value work.

Key Features of Vibe Coding

1. Natural Language as Code

Write prompts in plain English, Spanish, or any language. AI interprets and converts them into production-ready code, bridging the gap between ideas and execution.

2. Full-Stack Automation

A single prompt can generate:

- Responsive frontends (e.g., React, Vue)

- Robust backend APIs (e.g., Node.js, Python Flask)

- Unit tests and integration tests

- CI/CD pipelines

- API documentation (e.g., OpenAPI/Swagger)

3. Rapid Iteration

Not happy with the output? Tweak the prompt and regenerate. This iterative process cuts development time significantly.

4. Cross-Functional Empowerment

Non-technical roles like QA, UX designers, and business analysts can contribute directly by writing prompts, fostering inclusivity.

5. Intelligent Debugging

AI not only generates code but also suggests fixes for errors, optimizes performance, and ensures best practices.

Vibe Coding vs. Traditional AI-Assisted Coding

| S. No | Feature | Traditional AI-Assisted Coding | Vibe Coding |
| --- | --- | --- | --- |
| 1 | Primary Input | Code with AI suggestions | Natural language prompts |
| 2 | Output Scope | Code snippets, autocomplete | Full features or applications |
| 3 | Skill Requirement | Coding knowledge | Clear communication |
| 4 | QA Role | Post-coding validation | Prompt review and testing |
| 5 | Example Tools | GitHub Copilot, Tabnine | Cursor, Devika AI, Claude |
| 6 | Development Speed | Moderate | Extremely fast |

Mastering Prompt Engineering: The Heart of Vibe Coding

The secret to Vibe Coding success lies in Prompt Engineering: the art of crafting precise, context-rich prompts that yield accurate AI outputs. A well-written prompt saves time and improves quality.

Tips for Effective Prompts:

- Be Specific: “Build a responsive e-commerce homepage with a product carousel using React and Tailwind CSS.”

- Include Context: “The app targets mobile users and must support dark mode.”

- Define Constraints: “Use TypeScript and ensure WCAG 2.1 accessibility compliance.”

- Iterate: If the output isn’t perfect, refine the prompt with more details.

Example Prompt:

"Create a React-based to-do list app with drag-and-drop functionality, local storage, and Tailwind CSS styling. Include unit tests with Jest and ensure the app is optimized for mobile devices."

Result: A fully functional app with clean code, tests, and responsive design.
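The tips above can be sketched as a small prompt builder. This is a minimal illustration, not part of any tool's API: `PromptSpec` and `build_prompt` are hypothetical names, and the three fields simply mirror the "Be Specific", "Include Context", and "Define Constraints" tips.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt parts named in the tips."""
    task: str                                             # Be Specific
    context: list[str] = field(default_factory=list)      # Include Context
    constraints: list[str] = field(default_factory=list)  # Define Constraints


def build_prompt(spec: PromptSpec) -> str:
    """Join the parts into one plain-text prompt, skipping empty sections."""
    lines = [spec.task.strip()]
    if spec.context:
        lines.append("Context: " + " ".join(spec.context))
    if spec.constraints:
        lines.append("Constraints: " + " ".join(spec.constraints))
    return "\n".join(lines)


spec = PromptSpec(
    task="Build a responsive e-commerce homepage with a product carousel "
         "using React and Tailwind CSS.",
    context=["The app targets mobile users and must support dark mode."],
    constraints=["Use TypeScript and ensure WCAG 2.1 accessibility compliance."],
)
print(build_prompt(spec))
```

Keeping the parts separate makes the "Iterate" tip cheap: you can append one more constraint and regenerate without rewriting the whole prompt.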

Real-World Vibe Coding in Action

Case Study: Building a Dashboard

Prompt:

"Develop a dashboard in Vue.js with a bar chart displaying sales data, a filterable table, and a dark/light theme toggle. Use Chart.js for visuals and Tailwind for styling. Include API integration and error handling."

Output:

- A Vue.js dashboard with interactive charts

- A responsive, filterable table

- Theme toggle with persistent user preferences

- API fetch logic with loading states and error alerts

- Unit tests for core components

Bonus Prompt:

"Generate Cypress tests to verify the dashboard’s filtering and theme toggle."

Result: End-to-end tests ensuring functionality and reliability.

This process, completed in under an hour, showcases Vibe Coding’s power to deliver production-ready solutions swiftly.

The Evolution of Vibe Coding: From 2023 to 2025

The practices behind Vibe Coding took shape around 2023 with tools like GitHub Copilot and early LLMs, although the term itself was only coined in early 2025. By 2024, advanced models like GPT-4o, Claude 3.5, and Gemini 2.0 supercharged its capabilities. In 2025, Vibe Coding is mainstream, driven by:

- Sophisticated LLMs: Models now understand complex requirements and generate scalable architectures.

- Integrated IDEs: Tools like Cursor and Replit offer real-time AI collaboration.

- Voice-Based Coding: Voice prompts are gaining traction, enabling hands-free development.

- AI Agents: Tools like Devika AI act as virtual engineers, managing entire projects.

Top Tools Powering Vibe Coding in 2025

| S. No | Tool | Key Features | Best For |
| --- | --- | --- | --- |
| 1 | Cursor IDE | Real-time AI chat, code diffing | Full-stack development |
| 2 | Claude (Anthropic) | Context-aware code generation | Complex, multi-file projects |
| 3 | Devika AI | End-to-end app creation from prompts | Prototyping, solo developers |
| 4 | GitHub Copilot | Autocomplete, multi-language support | Traditional + Vibe Coding hybrid |
| 5 | Replit + Ghostwriter | Browser-based coding with AI | Education, quick experiments |
| 6 | Framer AI | Prompt-based UI/UX design | Designers, front-end developers |

These tools are continuously updated, ensuring compatibility with the latest frameworks and standards.

Benefits of Vibe Coding

1. Unmatched Speed: Build features in minutes, not days, accelerating time-to-market.

2. Enhanced Productivity: Eliminate boilerplate code and focus on innovation.

3. Inclusivity: Empower non-technical team members to contribute to development.

4. Cost Efficiency: Reduce development hours, lowering project costs.

5. Scalable Creativity: Experiment with ideas without committing to lengthy coding cycles.

QA in the Vibe Coding Era

QA teams play a pivotal role in ensuring AI-generated code meets quality standards. Here’s how QA adapts:

QA Responsibilities:

- Prompt Validation: Ensure prompts align with requirements.

- Logic Verification: Check AI-generated code for business rule accuracy.

- Security Audits: Identify vulnerabilities like SQL injection or XSS.

- Accessibility Testing: Verify compliance with WCAG standards.

- Performance Testing: Ensure apps load quickly and scale well.

- Test Automation: Use AI to generate and maintain test scripts.

Sample QA Checklist:

- Does the prompt reflect user requirements?

- Are edge cases handled (e.g., invalid inputs)?

- Is the UI accessible (e.g., screen reader support)?

- Are security headers implemented?

- Do automated tests cover critical paths?

QA is now a co-creator, shaping prompts and validating outputs from the start.
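One checklist item, "Are security headers implemented?", is easy to automate. The sketch below assumes you already have the response headers as a dictionary. The header names are standard HTTP headers, but treating exactly these three as "required" is an assumption for illustration, and `missing_security_headers` is a hypothetical helper name.

```python
# Standard HTTP security headers; which ones your project actually
# requires is a policy decision (this particular set is an assumption).
REQUIRED_HEADERS = (
    "Content-Security-Policy",
    "Strict-Transport-Security",
    "X-Content-Type-Options",
)


def missing_security_headers(headers: dict[str, str]) -> list[str]:
    """Return the required headers absent from a response (case-insensitive)."""
    present = {name.lower() for name in headers}
    return [h for h in REQUIRED_HEADERS if h.lower() not in present]


sample_response_headers = {
    "content-security-policy": "default-src 'self'",
    "X-Content-Type-Options": "nosniff",
}
print(missing_security_headers(sample_response_headers))
# ['Strict-Transport-Security']
```

A check like this only confirms that the headers are present. Whether their values are strict enough still needs a human or a dedicated scanner.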

Challenges and How to Overcome Them

AI Hallucinations:

- Issue: AI may generate non-functional code or fake APIs.

- Solution: Validate outputs with unit tests and manual reviews.

Security Risks:

- Issue: AI might overlook secure coding practices.

- Solution: Run static code analysis and penetration tests.

Code Maintainability:

- Issue: AI-generated code can be complex or poorly structured.

- Solution: Use prompts to enforce modular, documented code.

Prompt Ambiguity:

- Issue: Vague prompts lead to incorrect outputs.

- Solution: Train teams in prompt engineering best practices.
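For the "fake APIs" form of hallucination, a cheap first check is to confirm that every function the generated code calls actually exists before running anything else. The sketch below uses Python's standard `importlib`. `api_exists` is a hypothetical helper name, and `json.to_yaml` stands in for an invented API.

```python
import importlib


def api_exists(module_name: str, attr_path: str) -> bool:
    """Return True if module_name imports and attr_path resolves on it."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False  # the module itself does not exist
    for part in attr_path.split("."):
        if not hasattr(obj, part):
            return False  # the module exists, but this attribute does not
        obj = getattr(obj, part)
    return True


print(api_exists("json", "dumps"))         # True
print(api_exists("json", "to_yaml"))       # False (no such function)
print(api_exists("no_such_pkg_xyz", "x"))  # False (no such module)
```

This catches invented names only. It says nothing about whether a real function is called with the right arguments, which is where unit tests and manual review still come in.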

The Future of Vibe Coding: What’s Next?

By 2026, Vibe Coding will evolve further:

- AI-Driven Requirements Gathering: LLMs will interview stakeholders to refine prompts.

- Self-Healing Code: AI will detect and fix bugs in real time.

- Voice and AR Integration: Develop apps using voice commands or augmented reality interfaces.

- Enterprise Adoption: Large organizations will integrate Vibe Coding into DevOps pipelines.

The line between human and AI development is blurring, paving the way for a new era of creativity.

How to Get Started with Vibe Coding

1. Choose a Tool: Start with Cursor IDE or Claude for robust features.

2. Learn Prompt Engineering: Practice writing clear, specific prompts.

3. Experiment: Build a small project, like a to-do app, using a single prompt.

4. Collaborate: Involve QA and design teams early to refine prompts.

5. Stay Updated: Follow AI advancements on platforms like X to leverage new tools.

Final Thoughts

Vibe Coding is a mindset shift, empowering everyone to create software with ease. By focusing on ideas over syntax, it unlocks creativity, fosters collaboration, and accelerates innovation. Whether you’re a developer, QA professional, or product manager, Vibe Coding is your ticket to shaping the future.

The next big app won’t be coded line by line—it’ll be crafted prompt by prompt.

Frequently Asked Questions

- What is the best way for a beginner to start with Vibe Coding?

  Start by setting up a workspace with an AI-enabled editor. Then learn some basic coding practices and explore AI tools that can support your learning. Finally, run simple generated code to see how prompts translate into working output.

- How do I troubleshoot common issues in Vibe Coding?

  Begin by reading the error messages for hints. Check the code for syntax errors and make sure all dependencies are installed correctly. Use debugging tools to step through the code. If you are still stuck, ask for support in online forums.

- Can Vibe Coding be used for professional development?

  Yes. Vibe Coding can strengthen your coding skills and creativity, and AI tools can help you work more efficiently. Applying these ideas to real projects boosts your productivity and makes you more adaptable in a changing tech world.

- What role does QA play in Vibe Coding?

  QA plays a critical role in validating AI-generated code. With the help of AI testing services, testers ensure functionality, security, and quality, from prompt review through to deployment.

- Is Vibe Coding only for developers?

  No, it’s designed to be accessible. Designers, project managers, and even non-technical users can create functional software using AI by simply describing what they need.

by Arthur Williams | Feb 13, 2025 | Artificial Intelligence, Blog, Latest Post |

Software testing has always been a critical part of development, ensuring that applications function smoothly before reaching users. Traditional testing methods struggle to keep up with the need for speed and accuracy. Manual testing, while thorough, can be slow and prone to human error. Automated testing helps but comes with its own challenges: scripts need frequent updates, and maintaining them can be time-consuming. This is where AI-driven testing is making a difference. Instead of relying on static test scripts, AI can analyze code, understand changes, and automatically update test cases without requiring constant human intervention. Both DeepSeek and Gemini offer advanced capabilities that can be applied to software testing, making it more efficient and adaptive. While these AI models serve broader purposes like data processing, automation, and natural language understanding, they also bring valuable improvements to testing workflows. By incorporating AI, teams can catch issues earlier, reduce manual effort, and improve overall software quality.

DeepSeek AI & Google Gemini – How They Help in Software Testing

DeepSeek AI and Google Gemini both use advanced AI technologies to improve different aspects of software testing. These technologies automate repetitive tasks, enhance accuracy, and optimize testing efforts. Below is a breakdown of the key AI components they use and their impact on software testing.

Natural Language Processing (NLP) – Automating Test Case Creation

NLP enables AI to read and interpret software requirements, user stories, and bug reports. It processes text-based inputs and converts them into structured test cases, reducing manual effort in test case writing.
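To make "structured test case" concrete, here is a deliberately simple sketch. It is a rule-based stand-in, not NLP and not how DeepSeek or Gemini work internally: it assumes the user story is already written in Given/When/Then form. `DraftTestCase` and `parse_story` are hypothetical names.

```python
import re
from dataclasses import dataclass, field


@dataclass
class DraftTestCase:
    """Hypothetical structured form of a Given/When/Then story."""
    preconditions: list[str] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)
    expected: list[str] = field(default_factory=list)


_SECTION_FOR_KEYWORD = {"given": "preconditions", "when": "steps", "then": "expected"}
_LINE = re.compile(r"^(given|when|then|and)\b\s*(.*)$", re.IGNORECASE)


def parse_story(text: str) -> DraftTestCase:
    """Sort each line into a section; 'And' continues the previous section."""
    case = DraftTestCase()
    current = None
    for raw in text.splitlines():
        match = _LINE.match(raw.strip())
        if not match:
            continue  # ignore lines that do not start with a keyword
        keyword, rest = match.group(1).lower(), match.group(2)
        if keyword != "and":
            current = _SECTION_FOR_KEYWORD[keyword]
        if current and rest:
            getattr(case, current).append(rest)
    return case


story = """Given a registered user
When they submit valid credentials
And they tick "remember me"
Then the dashboard is shown"""
print(parse_story(story))
```

An LLM earns its keep on free-form requirements that do not follow a fixed template, but the output it aims for has roughly this shape.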

Machine Learning (ML) – Predicting Defects & Optimizing Test Execution

ML analyzes past test data, defect trends, and code changes to identify high-risk areas in an application. It helps prioritize test cases by focusing on the functionalities most likely to fail, reducing unnecessary test executions and improving test efficiency.
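The prioritization idea can be illustrated with a hand-written heuristic. This is a sketch under stated assumptions, not a trained model: the score is simply the historical failure rate plus a fixed bonus when a test covers a changed file. `CaseHistory`, `risk_score`, and the 0.5 weight are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseHistory:
    """Hypothetical record of one test's past results and coverage."""
    name: str
    runs: int
    failures: int
    covers: frozenset[str]  # source files this test exercises


def risk_score(test: CaseHistory, changed_files: set[str],
               change_weight: float = 0.5) -> float:
    """Failure rate plus a bonus if the test touches a changed file."""
    failure_rate = test.failures / test.runs if test.runs else 0.0
    touches_change = 1.0 if test.covers & changed_files else 0.0
    return failure_rate + change_weight * touches_change


def prioritize(tests: list[CaseHistory], changed_files: set[str]) -> list[CaseHistory]:
    """Order tests from highest to lowest risk."""
    return sorted(tests, key=lambda t: risk_score(t, changed_files), reverse=True)


history = [
    CaseHistory("test_login", runs=100, failures=2, covers=frozenset({"auth.py"})),
    CaseHistory("test_checkout", runs=100, failures=20, covers=frozenset({"cart.py"})),
    CaseHistory("test_search", runs=100, failures=5, covers=frozenset({"search.py"})),
]
print([t.name for t in prioritize(history, changed_files={"auth.py"})])
# ['test_login', 'test_checkout', 'test_search']
```

Here `test_login` rarely fails but jumps to the front because `auth.py` changed. A real ML approach would learn such weights from data rather than fixing them by hand.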

Deep Learning – Self-Healing Automation & Adaptability

Deep learning enables AI to recognize patterns and adapt test scripts to changes in an application. It detects UI modifications, updates test locators, and ensures automated tests continue running without manual intervention.
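The simplest form of self-healing is an ordered list of fallback locators, sketched below. This is a rule-based illustration rather than deep learning, and it models the page as a plain list of dictionaries instead of driving a real browser. `find_element` and the sample attributes are hypothetical.

```python
from typing import Optional

Element = dict[str, str]
Locator = tuple[str, str]  # (attribute name, expected value)


def find_element(page: list[Element],
                 locators: list[Locator]) -> Optional[tuple[Element, Locator]]:
    """Try locators in order; return the first match and the locator that hit."""
    for attr, value in locators:
        for element in page:
            if element.get(attr) == value:
                return element, (attr, value)
    return None  # nothing matched: the test genuinely needs attention


# The button's id changed in a new release, but its visible text did not.
page_after_release = [
    {"id": "btn-submit-v2", "text": "Sign in", "role": "button"},
]
locators = [("id", "btn-submit"), ("text", "Sign in")]

match = find_element(page_after_release, locators)
if match:
    element, used = match
    print(f"Found via {used[0]}={used[1]!r}; element id is now {element['id']!r}")
# Found via text='Sign in'; element id is now 'btn-submit-v2'
```

Returning the locator that succeeded matters: a tool can log it and propose updating the primary locator, so the "healing" remains visible to the team instead of silently masking UI changes.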

Code Generation AI – Automating Test Script Writing

AI-powered code generation assists in writing test scripts for automation frameworks like Selenium, as well as for API and performance testing. This reduces the effort required to create and maintain test scripts.

Multimodal AI – Enhancing UI & Visual Testing

Multimodal AI processes both text and images, making it useful for UI and visual regression testing. It helps in detecting changes in graphical elements, verifying image placements, and ensuring consistency in application design.

Large Language Models (LLMs) – Assisting in Test Documentation & Debugging

LLMs process large amounts of test data to summarize test execution reports, explain failures, and suggest debugging steps. This improves troubleshooting efficiency and helps teams understand test results more effectively.

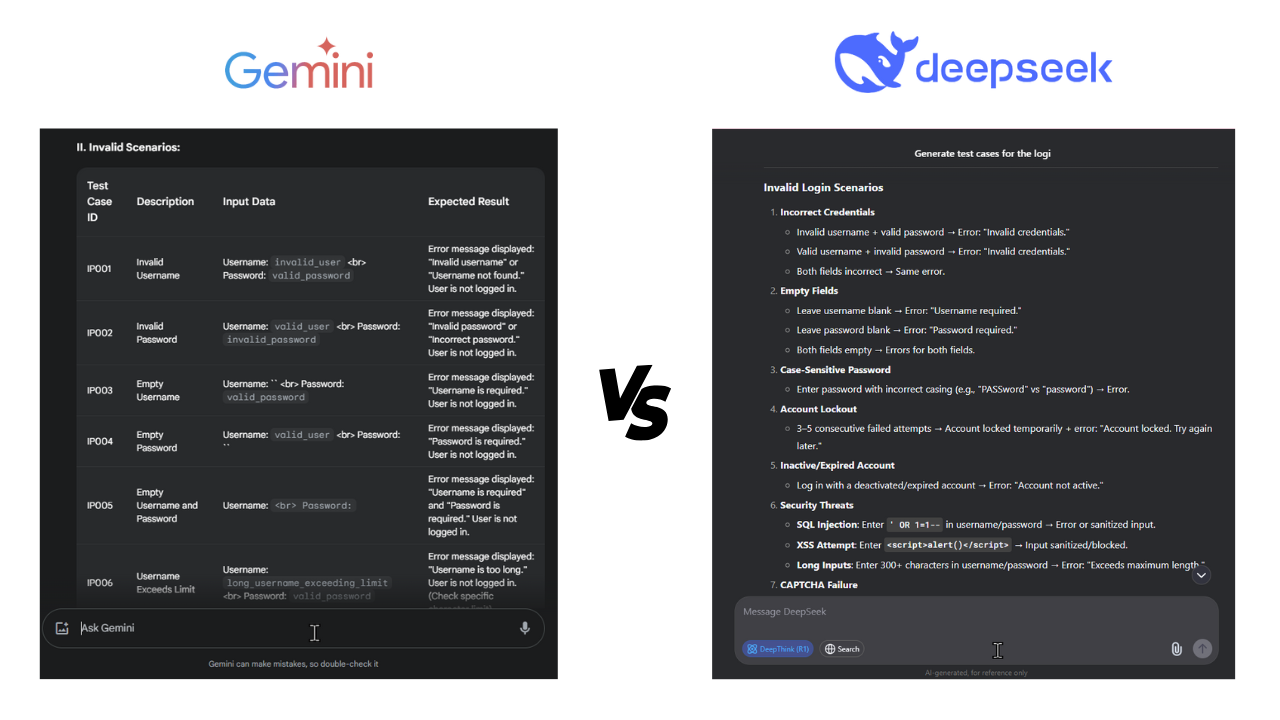

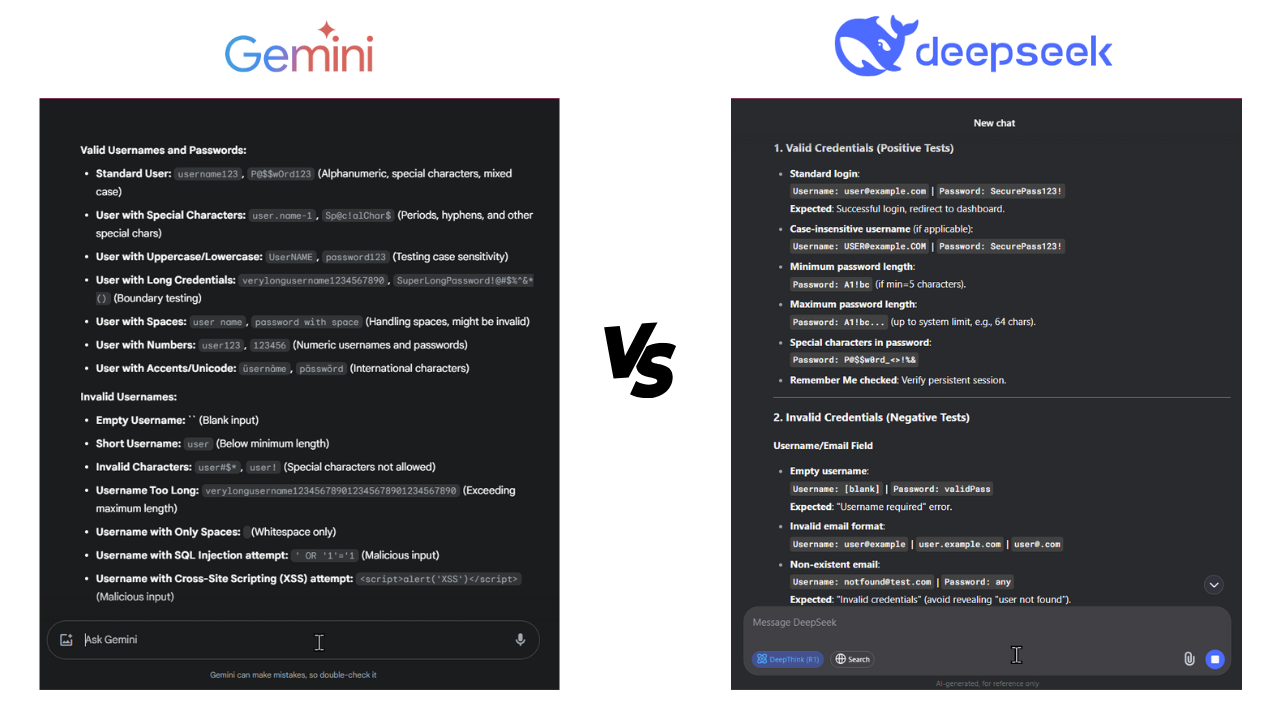

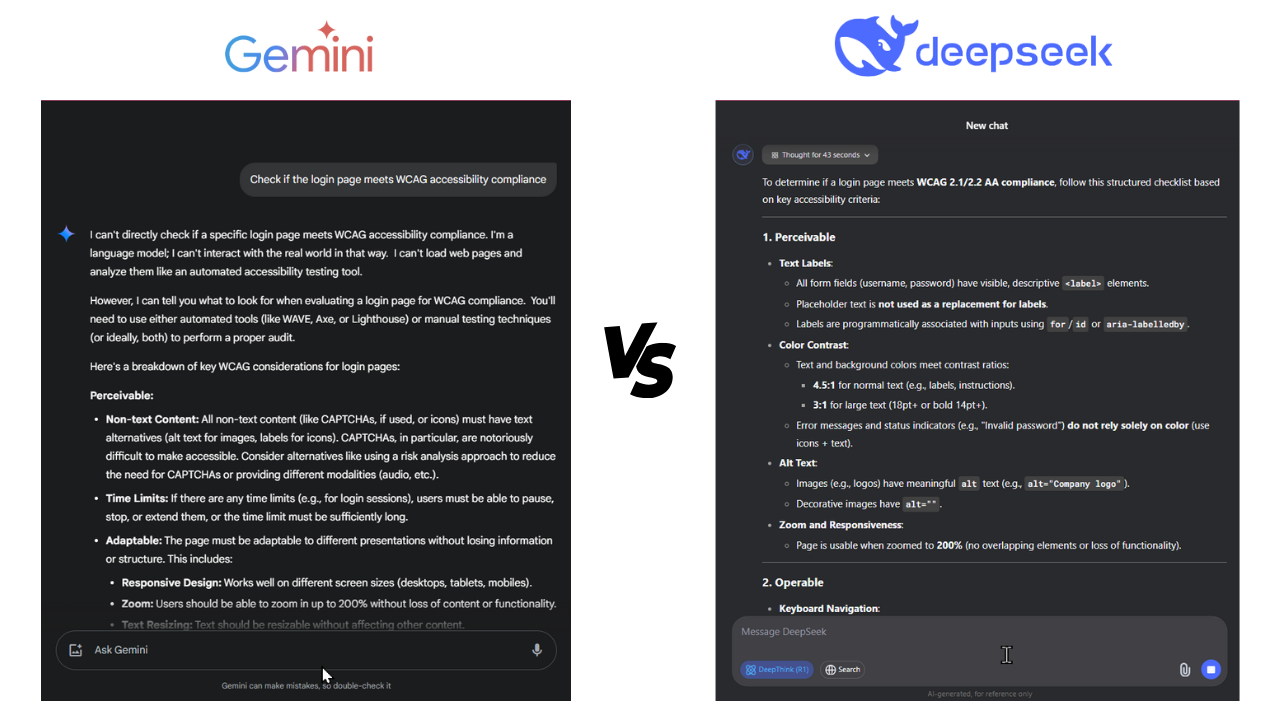

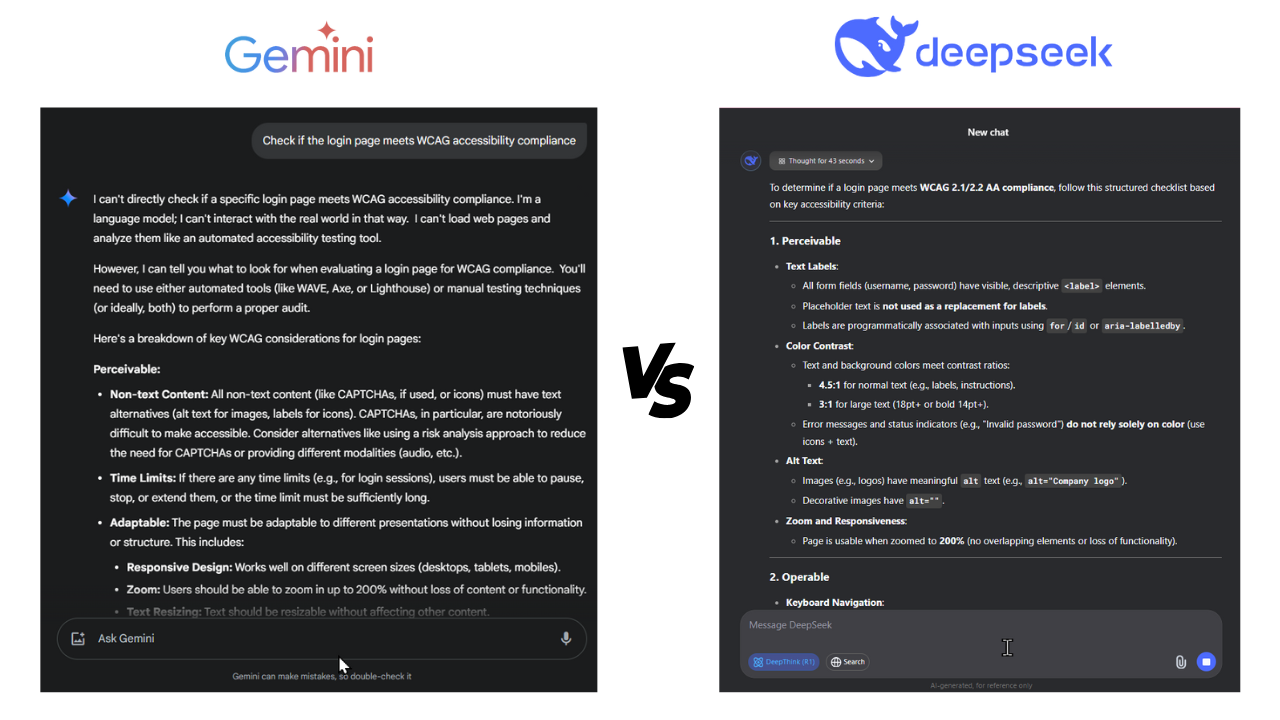

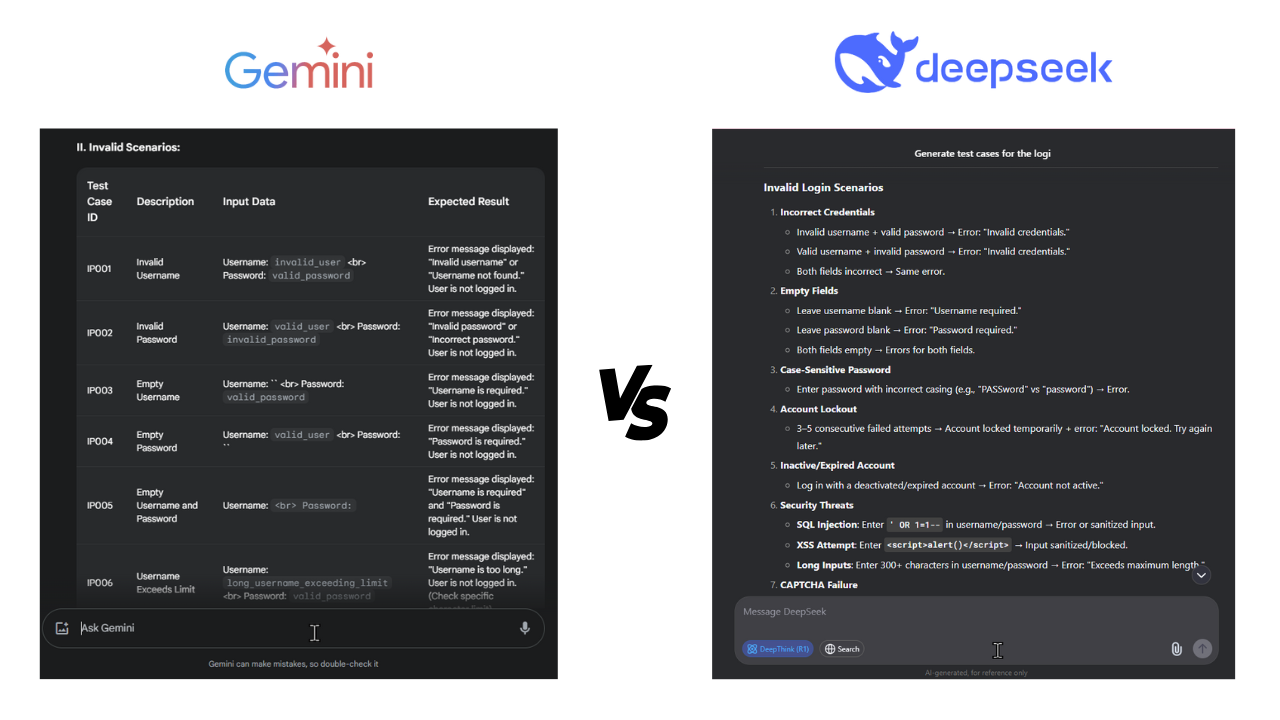

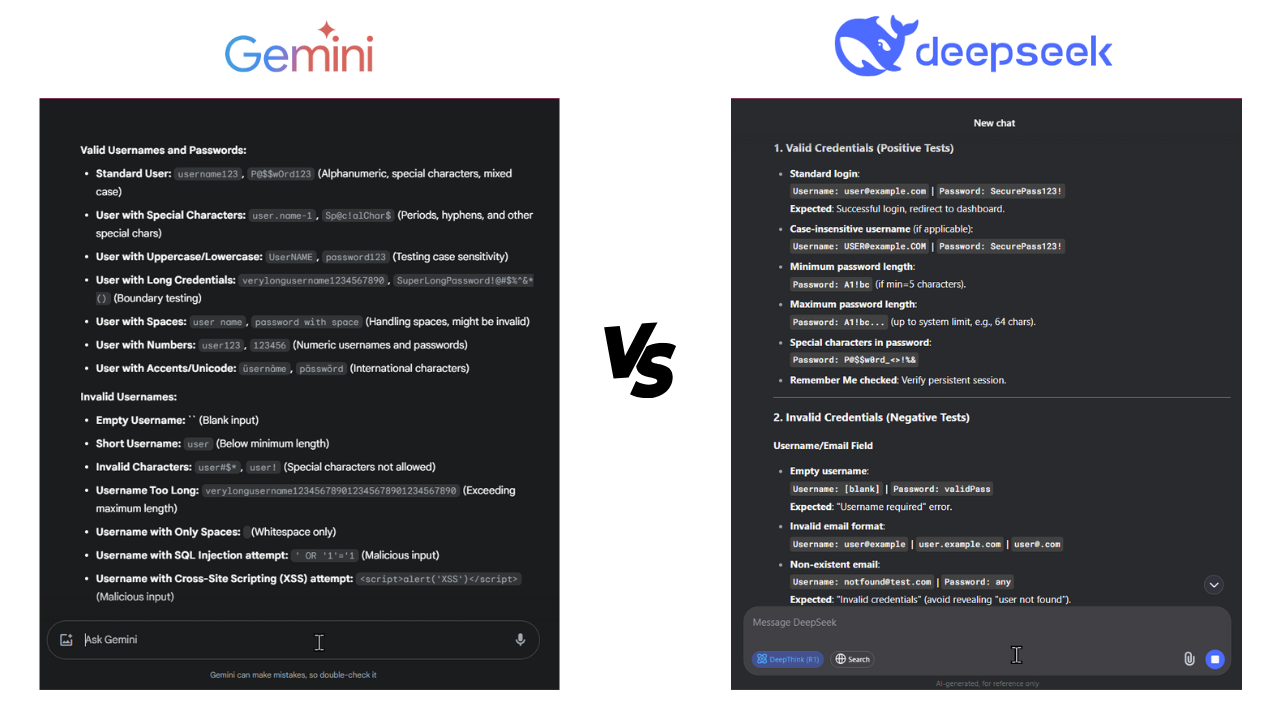

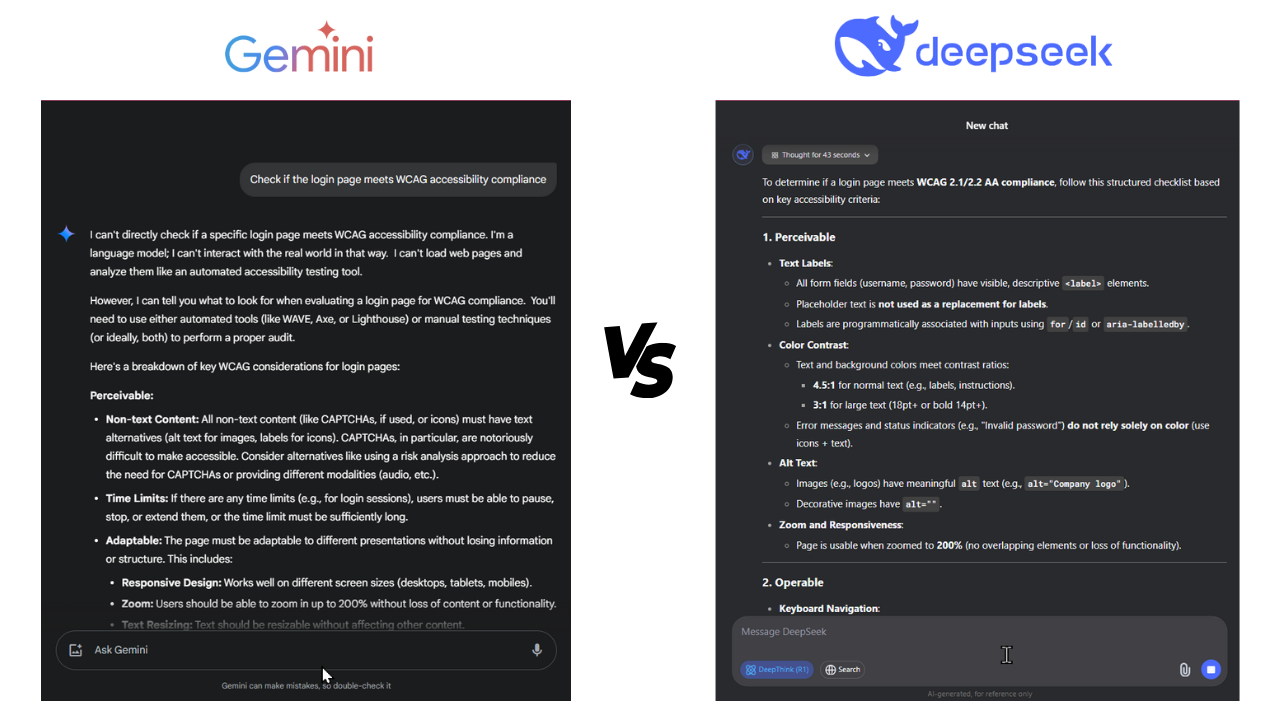

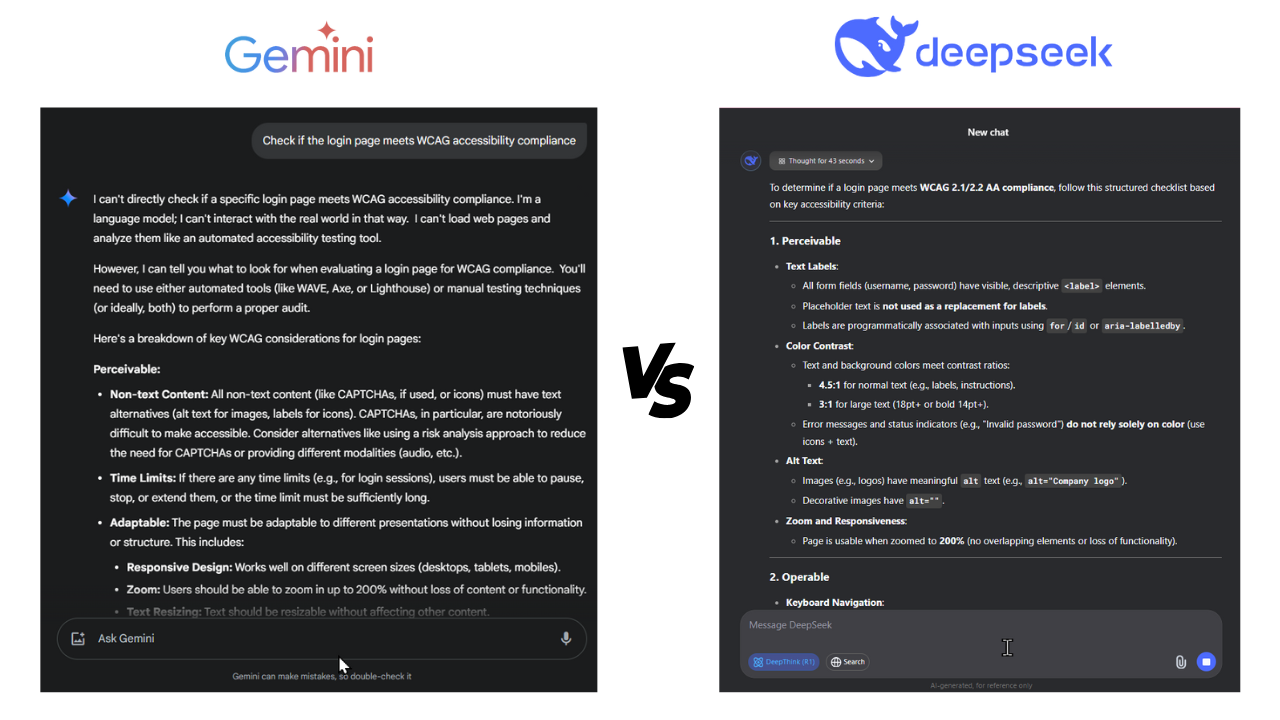

Feature Comparison of DeepSeek vs Gemini: A Detailed Look

| S. No | Feature | DeepSeek AI | Google Gemini |
| --- | --- | --- | --- |
| 1 | Test Case Generation | Structured, detailed test cases | Generates test cases but may need further refinement |
| 2 | Test Data Generation | Diverse datasets, including edge cases | Produces test data but may require manual fine-tuning |
| 3 | Automated Test Script Suggestions | Generates Selenium & API test scripts | Assists in script creation but often needs better prompt engineering |
| 4 | Accessibility Testing | Identifies WCAG compliance issues | Provides accessibility insights but lacks in-depth testing capabilities |
| 5 | API Testing Assistance | Generates Postman requests & API tests | Helps with request generation but may require additional structuring |
| 6 | Code Generation | Strong for generating code snippets | Capable of generating code but might need further optimization |
| 7 | Test Plan Generation | Generates basic test plans | Assists in test plan creation but depends on detailed input |

How Tester Prompts Influence AI Responses