by Arthur Williams | Nov 26, 2024 | Software Development, Blog, Latest Post |

With mobile devices now a primary way people get online, it is more important than ever that your website and its interface elements are accessible and functional on every screen size. Responsive web design (RWD) allows your website to adapt its layout to the screen it is being viewed on, whether that’s a smartphone, tablet, or desktop computer. This adaptability improves user experience (UX), which in turn supports your website’s performance, SEO rankings, and overall success.

Our Software Development Services ensure that your website is fully responsive, delivering seamless performance across all devices. From design to implementation, we focus on creating functional, user-friendly, and visually appealing websites tailored to meet your business needs. Let us help you stay ahead in today’s mobile-first world.

This blog will guide you through the best practices for responsive web design, helping you ensure that your website delivers an optimal viewing experience across all devices.

Key Highlights

- Responsive Web Design is essential for creating a mobile-friendly website that adapts to various screen sizes.

- Mobile-first design is a core concept of responsive web design, ensuring the smallest screen version is prioritized.

- Fluid grid layouts and flexible images allow for smoother scaling across devices.

- Using media queries ensures your content looks great on any device.

- Performance optimization plays a crucial role in responsiveness, reducing load times and improving user experience.

What is Responsive Web Design?

Responsive web design is a design strategy that makes a website work well across a variety of devices, screen sizes, and browser window widths. It relies on flexible layouts, flexible images, and CSS media queries to ensure your site looks good and functions properly whether it’s viewed on a desktop, tablet, or smartphone.

With mobile traffic accounting for over half of global web traffic, ensuring your website is responsive is not just a design trend but a necessity for a positive user experience.

Why is Responsive Web Design Important?

- Enhanced User Experience: A responsive website automatically adjusts to fit the user’s screen size, which improves usability. This means less zooming, scrolling, and resizing for users.

- SEO Benefits: Google primarily crawls and indexes the mobile version of a site (mobile-first indexing), so a site that works well on phones is better positioned in search results. A responsive site also provides a seamless experience across devices.

- Cost-Effective: Building a responsive website saves you the cost of developing a separate mobile version of the site. Maintaining one website that works across all devices is more efficient.

- Faster Load Times: Proper responsive design helps optimize loading speeds, which is critical for keeping users engaged and reducing bounce rates.

- Future-Proofing: With the increasing number of devices, including smartwatches, TVs, and tablets, a responsive design ensures that your website will continue to perform well on new devices as they emerge.

Best Practices for Responsive Web Design

1. Mobile-First Design Approach

Mobile-first design means designing for the smallest screen size first and progressively enhancing the layout for larger screens, up to the desktop version. By starting with mobile, you ensure your website is fully optimized for the devices most likely to face performance challenges: smartphones.

Why Mobile-First?

- Higher Mobile Traffic: More people browse the web using mobile devices than desktops.

- Prioritize Content: Designing for mobile first forces you to prioritize the most important content.

- Improved Load Times: Mobile-first designs are lighter and often load faster, especially on mobile networks.

Example: Mobile-First Media Query

/* Mobile-first design - default styles */
body {
  font-size: 14px;
}

header {
  padding: 10px;
}

/* Larger screens (tablets and desktops) */
@media screen and (min-width: 768px) {
  body {
    font-size: 16px;
  }

  header {
    padding: 20px;
  }
}

2. Use Fluid Grid Layouts

Fluid grid layouts use percentages rather than fixed units like pixels to define the width of elements. This ensures that all content can resize proportionally, making it perfect for responsiveness.

Why Use Fluid Grids?

- Flexibility: Fluid grids adapt to different screen sizes.

- Better Visual Balance: Fluid grids help maintain the proportionality of elements even as screen sizes change.

Example: Fluid Grid Layout

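.container {
  width: 100%;
  max-width: 1200px;
  margin: 0 auto;
}

.column {
  box-sizing: border-box; /* keep the 20px padding inside the 30% width */
  width: 30%;
  float: left;
  padding: 20px;
}

/* Stack the columns on small screens */
@media screen and (max-width: 768px) {
  .column {
    width: 100%;
  }
}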
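For comparison, the same multi-column idea can be sketched with CSS Grid, which avoids floats altogether. This is a minimal sketch rather than a drop-in replacement: the class name `.grid` and the 250px minimum column width are assumptions chosen for illustration.

```css
/* Hypothetical .grid wrapper: columns fill the row and wrap automatically */
.grid {
  display: grid;
  /* As many equal columns as fit, each at least 250px wide (assumed value),
     but never wider than the container on very narrow screens */
  grid-template-columns: repeat(auto-fit, minmax(min(250px, 100%), 1fr));
  gap: 20px;
  max-width: 1200px;
  margin: 0 auto;
}
```

Because the columns wrap on their own once they can no longer stay 250px wide, this layout collapses to a single column on narrow screens without a media query.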

3. Flexible Images

For a website to be fully responsive, the images within it must also scale according to the screen size. By using flexible images, you ensure that they resize appropriately, preventing them from appearing too large or too small.

Why Use Flexible Images?

- Prevents Overflow: Images won’t break the layout by becoming too large for smaller screens.

- Improves Load Times: Combined with the srcset attribute, flexible images let the browser download a file sized for the current screen instead of the largest version, so pages load faster.

Example: Flexible Images

<img src="image.jpg" alt="Image" style="max-width: 100%; height: auto;">
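The inline style above can also live in a stylesheet so that every image gets the same behavior. The rule below is a small sketch of that approach; including `video` in the selector is an assumption about what other media the page contains.

```css
/* Apply flexible sizing to all images and embedded video at once */
img,
video {
  max-width: 100%; /* never wider than the containing element */
  height: auto;    /* preserve the aspect ratio while scaling */
}
```

Keeping the rule in CSS rather than in each tag means the markup stays clean and the behavior can be adjusted in one place.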

4. Use of Media Queries

Media queries are a CSS technique used to apply different styles based on the device’s characteristics such as screen size, resolution, and orientation. They are essential in adjusting the layout, font sizes, and other styles for various devices.

Why Use Media Queries?

- Tailored User Experience: Different devices require different styles, and media queries help provide that.

- Targeting: They let you target ranges of viewport sizes and device characteristics, so mobile, tablet, and desktop screens each get suitable styles.

Example: Media Queries for Various Devices

/* Default styles for small screens (smartphones) */
body {
  font-size: 14px;
}

/* For tablets and larger devices */
@media screen and (min-width: 768px) {
  body {
    font-size: 16px;
  }
}

/* For desktops */
@media screen and (min-width: 1024px) {
  body {
    font-size: 18px;
  }
}
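Media queries are not limited to width. As a rough sketch, the orientation and resolution characteristics mentioned earlier could be queried as shown below; the `.hero` and `.logo` class names and the `logo@2x.png` file are hypothetical, and some older browsers only understand a prefixed device-pixel-ratio feature instead of `min-resolution`.

```css
/* Landscape orientation: the viewport is wider than it is tall */
@media screen and (orientation: landscape) {
  .hero {
    min-height: 60vh;
  }
}

/* High-density displays (about 2 device pixels per CSS pixel or more) */
@media screen and (min-resolution: 2dppx) {
  .logo {
    background-image: url("logo@2x.png"); /* hypothetical sharper asset */
  }
}
```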

5. Optimizing Performance

Performance is critical when it comes to responsive web design. A slow-loading site can lead to higher bounce rates and poor user experience. Optimization techniques can include:

- Lazy Loading: Images and videos load only when they are visible in the viewport.

- Image Compression: Reduce the size of images without sacrificing quality.

- Minification of CSS and JavaScript: Remove unnecessary spaces and comments in code files.

Example: Lazy Loading

<img src="image.jpg" alt="Description" loading="lazy">

6. Typography and Readability

Typography plays a large role in the user experience of any website, and it matters most on mobile devices, where screen space is limited. Make sure the page includes a viewport meta tag so that text is laid out at the device’s width, and use scalable fonts that adjust to different screen sizes.

Why Prioritize Typography?

- User Engagement: Readable text keeps users engaged.

- Mobile Optimization: Proper font sizes and spacing ensure content is legible on smaller devices.

Best Practices for Typography

- Use em or rem units instead of px for font sizes.

- Adjust line heights and letter spacing for better readability on smaller screens.

- Keep the text contrast high to improve readability in different lighting conditions.

Example: Responsive Typography

body {
  font-size: 1rem;
  line-height: 1.5;
}

h1 {
  font-size: 2rem;
}

@media screen and (min-width: 768px) {
  body {
    font-size: 1.25rem;
  }
}
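Instead of stepping the font size at a breakpoint, the size can also scale smoothly with the viewport. The sketch below uses the CSS `clamp()` function; the specific minimum, preferred, and maximum values are assumptions picked for illustration, not recommendations from this article.

```css
/* Fluid type: grows with viewport width, bounded below and above */
body {
  /* Never smaller than 1rem, never larger than 1.25rem (assumed bounds) */
  font-size: clamp(1rem, 0.9rem + 0.5vw, 1.25rem);
  line-height: 1.5;
}

h1 {
  font-size: clamp(2rem, 1.5rem + 2vw, 3rem);
}
```

Mixing a `rem` term into the preferred value keeps the text responsive to the user’s own font-size settings, which a pure `vw` value would ignore.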

7. Test Responsiveness Across Devices

After implementing the above best practices, it’s crucial to test your website’s responsiveness across different devices. Use browser developer tools or online testing tools to simulate various devices and screen sizes.

Why Is Testing Essential?

- Catch Issues Early: Testing ensures that potential issues are addressed before the website goes live.

- Ensure Consistency: It ensures the design maintains its integrity across different devices.

8. Focus on Touch Events for Mobile

Touch interactions are fundamental to mobile devices. Ensure that your website is optimized for touch events, such as tap, swipe, and pinch-to-zoom.

Best Practices for Touch Events

- Use Larger Clickable Areas: Buttons and links should be large enough to be easily tapped on small screens.

- Avoid Relying on Hover Effects: Hover-based interactions don’t work reliably on touch devices, so provide alternatives such as tap events.
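Both practices can be sketched in CSS. The example below is illustrative only: the `.button` class, the 44px minimum size, and the color value are assumptions, not requirements stated in this article.

```css
/* Comfortable tap targets; 44px is an assumed minimum size */
.button,
nav a {
  display: inline-block;
  min-width: 44px;
  min-height: 44px;
  padding: 12px 16px;
}

/* Apply hover styling only where the primary input can actually hover */
@media (hover: hover) and (pointer: fine) {
  .button:hover {
    background-color: #0055aa;
  }
}

/* Give touch and keyboard users equivalent feedback */
.button:active,
.button:focus-visible {
  background-color: #0055aa;
}
```

Scoping the hover rule to devices that report hover support keeps hover styles from being triggered unintentionally by taps on touch screens.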

Conclusion

Implementing the best practices for responsive web design ensures that your website delivers a superior user experience across a wide range of devices. By focusing on mobile-first design, fluid layouts, flexible images, and performance optimization, you can create a site that is both functional and user-friendly.

Responsive web design is not just about making your website look good on smaller screens; it’s about making sure it performs well across all devices, improving engagement, and ultimately contributing to your website’s success.

By following these best practices, you’re setting your website up for future growth, whether it’s on a new device or on evolving web standards.

Frequently Asked Questions

- How Do Fluid Grids Work in Responsive Design?

Fluid grids, also known as flexible grids, are important in web design because they make websites look good on different devices. Instead of using fixed pixel measurements like older designs, fluid grids use percentages for the width of columns. This allows the layout to change based on the available space. As a result, fluid grids work well on various screen sizes, unlike a fixed-width version of the same content.

- Why is the Mobile-First Approach Recommended for Responsive Design?

The mobile-first approach focuses on mobile users first, taking into account the limited screen real estate and varying screen width. You begin by designing for small screens. Afterwards, you change the design for larger screens. This creates a good layout for your content. It also helps your site load faster and improves search results for responsive websites. Search engines like sites that work well on mobile devices.

- What is the responsiveness principle?

The responsiveness principle holds that a design should adapt to the device and screen size it is displayed on rather than assume a fixed canvas. The aim is to be flexible. This way, it ensures a good user experience on any platform.

- What are the principles of responsive architecture?

Responsive architecture builds on ideas from responsive design. It uses design thinking to create spaces that can change when needed. This type of architecture is flexible and adapts easily. Its main goal is to improve user experience. It helps buildings act in tune with their surroundings and the people using them.

- What are the fundamentals of responsive web design?

Responsive web design makes websites look good on all kinds of devices. It makes sure your site works well on smartphones, tablets, and computers. The major principles of responsive design include fluid grid systems, flexible images, and media queries. These elements allow you to change from desktop to mobile web design easily. This greatly improves the user experience.

- What is the main goal of responsive design?

The goal of responsive design is to provide a good user experience on different devices. It changes the layout and content so they fit well on any screen size. This way, responsive design makes websites easy to read and use, no matter what device you are using.

by Chris Adams | Nov 22, 2024 | E-Learning Testing, Blog, Recent, Latest Post |

In today’s digital world, eLearning services and Learning Management Systems (LMS) are crucial for schools and organizations. Testing these systems ensures users have a seamless learning experience. It focuses on evaluating usability, performance, security, and accessibility while ensuring the system meets diverse learning needs. This comprehensive testing is essential to guarantee that the LMS functions smoothly, providing effective and enjoyable educational opportunities for all users.

Key Highlights

- LMS testing looks at the quality, function, and usability of learning management systems.

- It uses a clear plan to find and fix technical issues, errors in content, and problems with user experience.

- Good LMS testing includes several tests, like checking functionality, usability, performance, and security, while also ensuring easy access.

- Using the right tools and methods can make testing simpler and more effective.

- Real-life examples and best practices offer helpful ideas for successful LMS testing and setting up these systems.

Understanding LMS Testing

LMS testing is an important process. It makes sure that a learning management system (LMS) works well. This helps to give users a good learning experience. The testing checks every part of the system carefully. It looks at everything, from simple tasks to more advanced features.

The goal is to find and fix problems, bugs, or issues with usability. These problems can stop the platform from working well or make it hard for users to feel satisfied. By doing thorough LMS testing, organizations can launch a strong and reliable platform. This will meet the needs of both learners and administrators.

The Role of LMS in Education and Training

- Centralizes content delivery and organization for easy access by learners.

- Enables personalized learning paths to cater to individual needs and pace.

- Supports tracking and reporting to monitor learner progress and outcomes.

- Facilitates interactive and engaging learning through quizzes, videos, and discussions.

- Streamlines administrative tasks like scheduling, grading, and communication.

- Enhances accessibility, allowing learners to engage with content anytime, anywhere.

- Promotes collaboration through forums, group projects, and peer reviews.

Key Components of an Effective LMS

An effective learning management system (LMS) needs key features that create a fun and helpful learning environment. First, it should have a user-friendly interface. This makes it easy for learners to navigate the platform. They should access course content and connect with instructors and classmates without any problems.

The LMS should be easy to use for managing content. Admins must be able to upload, organize, and update learning materials without difficulty. Key LMS features include ways to assess learning and provide feedback. There should also be tools for communication, like discussion forums and messaging. Strong reporting and analytics options are very important as well.

These features help teachers and administrators watch how students are doing. They can find areas where students can improve. Then, they can change the learning experience to meet each person’s needs.

The Importance of Testing in LMS Implementation

Testing is important for showing that an LMS works properly. It checks if the system does what it is meant to do. It also makes sure it meets the needs of the users. Plus, it shows the LMS matches the organization’s learning objectives.

A good testing plan helps reduce risks. It stops expensive problems from happening after the launch. A strong plan also gives people confidence in using the LMS platform.

Why Testing Matters for Successful LMS Deployment

Testing is key to using a learning management system (LMS) effectively. It ensures that the LMS meets an organization’s needs and works well with their technology. A solid testing plan checks several aspects. It looks at how well the LMS functions, its ease of use, performance, security, and how it integrates with existing systems.

By testing the LMS carefully, organizations can find and solve issues before it goes live. This helps avoid problems that could harm the user experience. A good user experience means more people will use the system. This leads to better returns on investment. When testing is done well, it greatly helps in launching the LMS successfully and reaching learning objectives.

Common Challenges in LMS Testing

LMS testing can come with several challenges that organizations need to tackle for a successful implementation. A main issue is finding unexpected technical problems. This might mean having compatibility issues with different browsers or operating systems. These problems can affect the user experience and slow down learning progress.

Another challenge is checking user management. This means looking at registration and enrollment. We also need to check access based on roles and reporting. If we do not test these areas well, we can have data errors, security problems, and bad support for users.

Connecting the LMS software with current systems, such as HR management or student information systems, requires careful testing. This ensures that data stays consistent and helps communication between the systems to run smoothly.

Strategies for Effective LMS Testing

To have good LMS testing, you need a simple plan. This plan should explain what you want to test, how you will do it, when it will happen, and who is in charge of each task.

Organizations can make their LMS testing better and more successful. They can achieve this by following best practices. It is also important to include everyone who is involved.

Planning Your LMS Testing Process

A strong test plan is key for effective LMS testing. This plan must list what will be tested, the goals of the tests, and how the LMS solution will be evaluated. It should also explain how to set up the testing environment. This means including information about the hardware, software, and network that will be used.

- Include people from various teams, like IT, training, and users. They can help collect important needs and ideas.

- A clear test plan must have a detailed schedule that shows when each part of the testing will happen.

- It should also include all the test cases, which must cover the main functions and features of the LMS.

The plan needs to explain how to write down and keep an eye on any problems found. It should also say how to report test results and share what we learned with the right people.

Types of LMS Tests to Conduct

Many tests are required to fully check an LMS.

Below are the main types of tests:

- Functionality Testing: This test checks if all the features work well. It looks at tasks like creating courses, managing them, doing assessments, grading, and reporting.

- Usability Testing: This test examines user experience. It checks how easy it is to use the platform, how friendly it feels, and how accessible and simple it is to navigate.

- Performance Testing: This test checks how well the system works when many users are on it and when there is a lot of data. It ensures the system stays fast and does not crash due to high demand.

- Security Testing: This test looks at the platform’s security. It checks user authentication, data encryption, and how it protects against unauthorized access.

- Compatibility Testing: This test makes sure that the LMS works with different web browsers, operating systems, and mobile devices.

By doing these tests, organizations can find problems early and fix them. This reduces risks. It can also help lead to a successful LMS implementation.

Best Practices for Conducting LMS Testing

Following best practices is important for good LMS testing. One useful practice is to set up a testing area that resembles the real system. This can help you get better results. Testers need to have clear test data that shows real-life situations. This data should cover various user roles, course sign-ups, and learning activities.

It is a smart choice to let end-users take part in testing. This way, you can see how easy the platform is to use and find ways to improve it. A clear feedback loop is important too. With a feedback loop, testers can report problems, suggest changes, and share what they find easily.

It’s really important to stay connected and work together. This includes everyone, such as developers, testers, and instructional designers. When they talk to each other well, they can solve problems more quickly. This helps to create a good learning environment.

Tools and Techniques for LMS Testing

Many tools and methods can improve LMS testing. This makes it easier for companies to complete their work. These tools include free testing frameworks and special LMS testing software.

Using these tools can improve testing work. They can make tests more accurate and extend coverage to more areas. They can also take over the repetitive parts, which saves time and resources.

Essential Tools for Efficient LMS Testing

Organizations can use tools to save time and resources. These tools can make LMS testing more precise, assist with automation, and give detailed reports.

- Test Management Tools: These tools keep all test cases, test data, and bug reports together in one place. They can connect easily with other project management and communication tools, which makes working as a team a lot easier.

- Performance Testing Tools: These tools can mimic many users at once. They help find slow spots and possible issues, and they give live data on response times, system use, and server load.

- Security Testing Tools: These tools find weak points and check security. They make sure the LMS platform is safe and keep the data secure.

By choosing tools that fit what an organization needs and what its team can use, testing will be easier and quicker.

Automation in LMS Testing: Benefits and Considerations

Automation makes repetitive tasks easier. It helps increase test coverage. It also saves time and energy during testing. Test automation tools can perform actions like real users. They can log in, go through courses, submit assignments, and participate in discussion forums. This allows testers to focus on more challenging testing situations.

Organizations should carefully decide which tests to automate. Tests that run frequently, take a lot of time, or are prone to human error, such as regression and performance tests, are good candidates. It’s also key to ensure that your test automation framework is robust enough to handle changes in your LMS or its responsive design.

Regular care and updates for automated test scripts are very important. These updates help keep everything up-to-date with software updates and new features.

Case Studies: Successful LMS Testing Scenarios

Seeing real-world examples helps us understand how to test Learning Management Systems (LMS) in different areas. In higher education, schools tested LMS with success. They made sure it works well with student information systems. This helps make the online learning experience better for students.

Companies use LMS testing to improve employee training. This process helps workers recall what they have learned. It also reviews how effective the training is. As a result, employee performance and productivity can increase.

Lessons Learned from Implementing LMS in Higher Education

Higher education institutions that use LMS platforms like Canvas LMS can offer useful lessons. A main lesson is to involve both teachers and students in testing. This way, we can gather feedback on the system’s ease of use. It also helps identify any problems that may come up in the school setting.

Institutions now see that proper training and support for teachers and students are essential for starting the system. Clear guides, FAQs, and support channels can help fix problems. This helps everyone adjust to the new platform more easily.

Regular communication and teamwork among the IT department, instructional designers, and faculty is very important. This teamwork helps fix technical problems. It also improves course content. Plus, it makes sure the LMS works well to meet learning objectives.

Corporate Training Success Stories: LMS Testing Insights

Corporate organizations gain a lot from having strong plans for testing their Learning Management Systems (LMS) for employee training. Online studies show that connecting the LMS to clear business goals and learning results is important. Companies that use an LMS stress the need for training content that is interesting and meets the different needs of their employees.

They found out that using data and analytics from the LMS helps keep track of how learners are doing. It shows where things need to get better and checks how effective the training programs are. By using these data insights, organizations can keep improving their training efforts and make sure they get a good return on their investment. A successful LMS setup shows how organizations can help their employees through development programs.

LMS Testing for Various Learning Environments

LMS testing methods often need changes based on where they are used. For example, educational institutions might focus more on accessibility features. In contrast, companies might find it useful to connect with performance management systems.

It is important to adjust test cases to match the users, the types of content, and the learning objectives of the learning environment you are testing.

Adapting LMS Testing for K-12 Education

Adapting LMS testing for K-12 education needs careful thought. You should think about what works best for each age group. Student privacy is important, so keep that in mind. Also, think about how parents can get involved. The tests should look at how well the platform supports different ways of learning and paces. Additionally, it should offer personalized learning experiences.

It’s important to see if the LMS meets the learning objectives and standards for each grade. We should also test how well the platform provides detailed reports and analytics. This helps teachers to track student performance. They can then notice where students might struggle and make good decisions about teaching.

Working with teachers, school leaders, and parents is important during tests. Their thoughts are valuable. They help solve problems and ensure that the LMS meets the unique needs of the K-12 learning environment.

Customizing Tests for Corporate Learning Platforms

Corporate learning platforms need tests that fit their unique needs. Here are some important things to think about: they should link up with current HR and talent management systems. This helps with a smooth data flow and creates good reports. The tests should also see if the platform can provide timely training content. This content should meet the changing needs of the business and follow current industry trends.

The evaluation process should look at how well the platform can expand as we gain more users and their course needs change. Usability testing plays a key role in this. It must ensure that the platform is easy to use and engaging for people in all kinds of roles and departments. We also need to keep updating and maintaining the testing tools. This will help us keep up with changes in training content, new features, and feedback from users.

Special Considerations for Non-Profit and Government Training Programs

Non-profit and government groups often need special tests for LMS. It is important to check for accessibility features. This can help all learners, and especially those with disabilities.

It is very important to follow government rules for data security and privacy. We must look closely at these areas to keep sensitive information safe. The testing should also check how well the platform works with different training methods. This includes online training, in-person training, and blended training. By doing this, we can support different learning styles and handle any issues that arise.

Working with experts in the organization is vital. This helps make sure the training content meets specific goals. It also makes certain it fits the needs of the learners.

Ensuring Accessibility and Inclusivity in LMS Testing

Accessibility and inclusivity are important for good LMS testing. Every learner, with any ability or disability, should have a chance to access and enjoy the learning content.

- Testing should follow accessibility standards, chiefly the Web Content Accessibility Guidelines (WCAG).

- This ensures that everyone can learn equally.

Guidelines for Accessible LMS Design and Testing

When choosing an LMS, look for platforms that follow the Web Content Accessibility Guidelines (WCAG). This helps everyone access your content, and it is especially helpful for learners with disabilities, who will be able to perceive, use, and understand the learning environment and participate fully.

- Check that images have descriptive alternative text.

- Check that the platform can be navigated using the keyboard alone.

- Make sure it works with screen readers.

- Make sure videos have captions.

- Font sizes should be easy to change.

- Test using assistive technologies.

- Get feedback from users with disabilities.

- Make changes based on what they suggest.

You can create a learning experience that benefits everyone by using a simple design and thorough testing.

Measuring the Success of Your LMS Testing

To understand how effective an LMS testing strategy is, we need specific numbers and important points to evaluate. This includes counting how many problems we find and how long it takes to solve them. We should also look at user satisfaction, how many people are using the system, and the results of their learning.

By looking at these KPIs regularly, we can gather useful information. This will help us see how well our testing is going. It will also give us the facts we need to improve our processes.

Key Performance Indicators (KPIs) for LMS Testing

Key performance indicators, or KPIs, for checking learning management system (LMS) software show how good the testing is. You should pay attention to important numbers like test coverage, defect density, and how long tests take. These numbers help check the quality of LMS software. Other factors, such as user acceptance rates, system downtime, and pass rates of regression tests, also help provide a full view of the testing results. By using these KPIs, organizations can improve their testing and ensure their LMS works well.

Analyzing Feedback and Making Iterative Improvements

To improve your LMS testing, it’s key to create a smooth feedback loop. You need to gather input from several people. This includes learners, instructors, and administrators. Ask for their opinions at different times. This will help you find useful insights and identify what needs fixing. Use tools like surveys, focus groups, and one-on-one interviews. These can help you learn about the platform’s ease of use, content quality, and overall learning experience.

Check the feedback you gathered. Look for patterns, trends, and areas that need quick fixes or future updates. With this data, you can keep improving the LMS. This will help it meet the changing needs of users over time.

| Feedback Source | Feedback Collection Method | Key Insights |
| --- | --- | --- |
| Learners | Surveys, focus groups | Usability, content relevance, learning experience |
| Instructors | Individual interviews, feedback forms | Platform functionality, ease of use, support materials |
| Administrators | Data analytics, system performance reports | System efficiency, data accuracy, reporting capabilities |

This method fosters a culture of continuous improvement. It ensures that the LMS is helpful and functions effectively for all users.

Conclusion

In summary, testing Learning Management Systems (LMS) is crucial. This helps to ensure that these systems work well and effectively. By having strong testing plans, resolving common problems, and using the right tools, companies can enhance the learning experience for students and professionals.

The success of an LMS depends on good testing. This testing should look at accessibility, inclusivity, and how engaged the users are. By following best practices and checking important performance indicators, you can make your LMS testing better. This way, you can keep improving over time.

- Be active in finding and fixing technical problems.

- Get stakeholders involved and pay attention to user feedback.

Together, these steps will help you make a better educational platform.

Frequently Asked Questions

- What is LMS Testing and Why is It Important?

LMS testing is an important part of educational technology. It checks how well learning management systems work and if they are effective. This testing helps online training go smoothly. It also ensures that users have a good experience on any mobile device. Finally, it confirms that the training meets the learning objectives.

- How Often Should LMS Testing Occur?

LMS testing should happen all the time. It must be included in every step of the LMS lifecycle. This starts when we choose the best LMS. It continues while we use it and goes on even later. We need to test after major software updates, user feedback, or any system changes. This helps keep everything working well and makes sure users are happy.

- Can LMS Testing Improve User Engagement?

Testing an LMS can really boost user engagement. It helps us find and fix usability problems. By looking at the LMS features for online courses and making sure they are easy to access, we can improve mobile learning. This all leads to a better learning experience and a more engaging learning environment.

by Arthur Williams | Nov 21, 2024 | Software Development, Blog, Latest Post |

In modern web application development, building RESTful APIs is crucial for effective client-server communication. These APIs enable seamless data exchange between the client and server, making them an essential component of modern web development. This blog post will show you how to build strong and scalable RESTful APIs with Node.js, a powerful JavaScript runtime environment. As part of our API Service offerings, we specialize in developing robust, high-performance REST APIs tailored to your business needs. Whether you are just starting or have some experience, this guide will provide useful information and tips for creating great APIs with Node.js.

Key Highlights

- This blog post is a full guide on making RESTful APIs with Node.js and Express.

- You will learn the key ideas behind REST APIs, their benefits, and how to create your first API.

- We will explore how to use Node.js as our server-side JavaScript runtime environment.

- We will use Express.js, a popular Node.js framework that makes routing, middleware, and handling requests easier.

- The blog will also share best practices and security tips for building strong APIs.

Understanding RESTful APIs with Node.js and Their Importance

Before diving into the details, let’s start with the basics of RESTful APIs with Node.js and their significance in web development. REST, or Representational State Transfer, is an architectural style for building networked applications. It uses the HTTP protocol to facilitate communication between clients and servers.

RESTful APIs with Node.js are popular because of their simplicity, scalability, and flexibility. By adhering to REST principles, developers can build APIs that are stateless, cacheable, and easy to maintain—key traits for modern, high-performance web applications.

Definition and Principles of RESTful Services in Node.js

REST stands for Representational State Transfer. It is not a set protocol or a strict standard. Instead, it is a way to design applications that use the client-server model. A key part of REST is the use of a common set of stateless operations and standard HTTP methods. These methods are GET, POST, PUT, and DELETE, and they are used to handle resources.

A main feature of RESTful services is a uniform interface. This means you will connect to the server in the same way each time, even if the client works differently. This makes REST APIs easy to use and mix into other systems.

REST is now one of the most widely used architectural styles for web services. This is because it makes it easy for different systems to communicate and share data.

Benefits of Using RESTful APIs with Node.js for Web Development

REST architecture is popular in web development because it has many benefits. These benefits make it easier to build flexible and fast applications. REST APIs are good at managing lots of requests. Their stateless design and ability to cache responses help lower server load and improve performance.
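To make the caching benefit concrete, here is a minimal sketch of how a server might label a response as cacheable and recognize when a client already holds the current copy. It uses only Node's built-in crypto module. The helper names (cacheHeaders, clientCopyIsCurrent) and the 60-second lifetime are assumptions made for illustration.

```javascript
const crypto = require('crypto');

// Build caching headers for a response body (hypothetical helper).
function cacheHeaders(body, maxAgeSeconds) {
  const hash = crypto.createHash('sha256').update(body).digest('hex');
  return {
    'Cache-Control': `public, max-age=${maxAgeSeconds}`,
    ETag: `"${hash.slice(0, 16)}"`, // short fingerprint of the body
  };
}

// True when the client's cached copy (sent in If-None-Match) matches the current ETag.
// In that case a server can answer 304 Not Modified and skip sending the body.
function clientCopyIsCurrent(requestHeaders, etag) {
  return requestHeaders['if-none-match'] === etag;
}

const body = JSON.stringify([{ id: 1, title: '1984' }]);
const headers = cacheHeaders(body, 60);
console.log(headers['Cache-Control']);
console.log(clientCopyIsCurrent({ 'if-none-match': headers.ETag }, headers.ETag));
```

Because each request carries everything the server needs, any server instance can answer it, which is what lets caches and load balancers sit in front of a REST API.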

The best thing about REST is that it works with any programming language. You can easily connect JavaScript, Python, or other technologies to a REST API. This flexibility makes REST a great choice for linking different systems. Here are some good benefits of using REST APIs in web development:

- Scaling Up: Handle more requests fast without delay.

- Easy Connections: Quickly link with any system, no matter the tech.

- Easy to Use: A simple format for requests and responses makes APIs straightforward and clear.

Introduction to Node.js and Express for Building RESTful APIs

Now, let’s talk about the tools we will use to create our RESTful APIs. We will use Node.js and Express. Many people use these tools in web development right now. Node.js allows us to run JavaScript code outside the browser. This gives us several options for server-side applications.

Express.js is a framework that runs on Node.js. It offers a simple and efficient way to build web applications and APIs. With its easy-to-use API and helpful middleware, developers can concentrate on creating the app’s features. They do not need to worry about too much extra code.

Overview of Node.js: The JavaScript Runtime

Node.js is a JavaScript runtime environment. It lets developers run JavaScript without a web browser. This means they can create server-side applications using a language they already know. Node.js uses an event-driven and non-blocking I/O model. This helps Node.js manage many requests at the same time in an effective way.
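The non-blocking model can be seen in a few lines. In this sketch, setTimeout stands in for a slow I/O operation such as a database query (an assumption for illustration). The handler returns immediately, and Node is free to do other work while the operation is pending.

```javascript
// Records the order in which things happen.
const events = [];

function handleRequest() {
  events.push('request received');
  // setTimeout stands in for slow I/O; its callback runs later, without blocking.
  setTimeout(() => events.push('I/O finished'), 10);
  events.push('handler returned');
}

handleRequest();
// The "I/O" is still pending, yet this line runs right away.
events.push('other work');

console.log(events);
```

The callback that records 'I/O finished' only runs after the current synchronous code has completed, which is why a single Node.js process can keep many requests in flight at once.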

One key part of Node.js is npm. It stands for Node Package Manager. Npm provides many free packages for developers. These packages include libraries and tools that make their work simpler. For example, they can help with managing HTTP requests or handling databases. Developers can add these ready-made modules to their projects. This helps them save time during development.

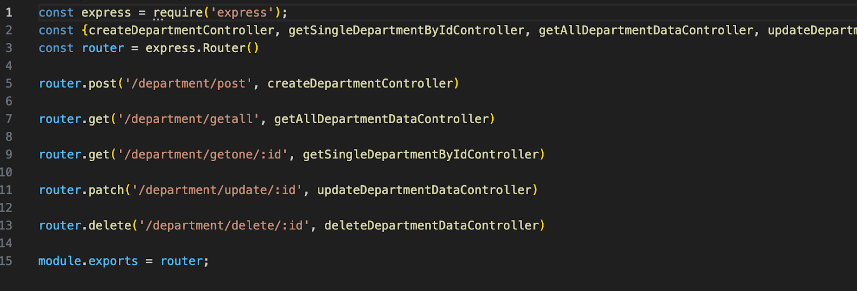

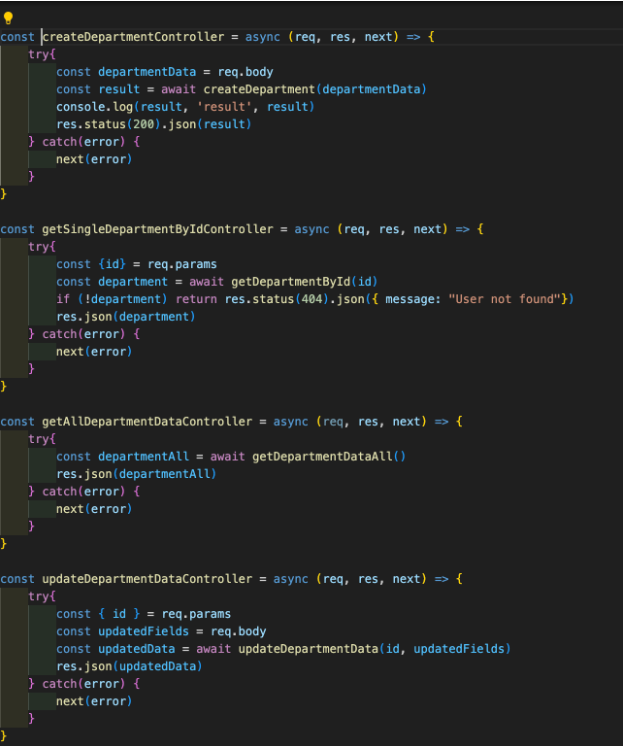

Express Framework: The Backbone of Node API Development

Node.js is the base for making apps. Express.js is a framework that helps improve API development. Express works on top of Node.js and simplifies tasks. It offers a clear way to build web apps and APIs. The Express framework has strong routing features. Developers can set up routes for certain URLs and HTTP methods. This makes it easy to manage different API endpoints.

Middleware is an important concept in Express. It allows developers to add functions that run during requests and responses. These functions can check if a user is logged in, log actions, or change data, including within the user’s module routes. This improves the features of our API. Express helps developers manage each request to the app easily, making it a good choice.
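A middleware function is just a function that takes the request, the response, and a next callback. The sketch below shows two of them, a logger and a hypothetical login check, plus a tiny runChain helper that imitates how a framework walks the chain so the example runs without Express installed. The names requestLogger, requireLogin, and runChain are illustrative.

```javascript
// Logs each request, then hands control to the next function in the chain.
function requestLogger(req, res, next) {
  console.log(`${req.method} ${req.url}`);
  next();
}

// Hypothetical login check: stops the request unless a user was attached earlier.
function requireLogin(req, res, next) {
  if (!req.user) {
    res.statusCode = 401;
    res.end('Login required');
    return; // next() is not called, so later handlers never run
  }
  next();
}

// Minimal stand-in for a framework's middleware dispatcher (not Express itself).
function runChain(middlewares, req, res) {
  let index = 0;
  const next = () => {
    const fn = middlewares[index++];
    if (fn) fn(req, res, next);
  };
  next();
}

// Fake response object so the sketch can run on its own.
const fakeRes = { statusCode: 200, body: '', end(text) { this.body = text; } };
runChain([requestLogger, requireLogin], { method: 'GET', url: '/books' }, fakeRes);
console.log(fakeRes.statusCode, fakeRes.body);
```

In an Express app, functions with this shape would be registered with app.use(requestLogger) and Express would take care of calling them in order.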

Getting Started with Node.js and Express

Now that we know why we use Node.js and Express, let’s look at how to set up our development environment for an Express application. Before we start writing any code, we need the right tools and resources. The good news is that getting started with Node.js and Express is easy. There is a lot of useful information about these technologies.

We will start by installing Node.js and npm. Next, we will create a new Node.js project. When our project is set up, we can install Express.js. Then, we can begin building our RESTful API.

Essential Tools and Resources for Beginners

To start, the first thing you should do is make sure you have Node.js and its package manager, npm, on your computer. Node.js is important, and npm makes it easy to install packages. You can get them from the official Node.js website. After you install them, use the command line to check if they are working. Just type “node -v” and “npm -v” in the command line.

Next, you should make a project directory. This will keep everything organized as your API gets bigger. Start by creating a new folder for your project. Then, use the command line to open that folder with the command cd. In this project directory, we will use npm to set up a new Node.js project, which will create a package.json file with default settings.

The package.json file has important information about your project. It includes details like dependencies and scripts. This helps us stay organized as we build our API.

Setting Up Your Development Environment

A good development setup is important for better coding. First, let’s make a new directory for our project. This helps us stay organized and avoids clutter. Next, we need to start a Node.js project in this new directory. Open your command prompt or terminal. Then, use the cd command to go into the project directory.

Now, type npm init -y. This command creates a package.json file with default settings. This file is very important for any Node.js project because it holds key details about the project. After you set up your Node.js project, it’s time to get Express.js. Use the command npm install express --save to add Express to your project (on npm 5 and later, npm install express does the same thing).

Building RESTful APIs with Node.js and Express

We’ll walk through each step, from setting up your environment to handling CRUD operations and adding error handling. Let’s get started!

1. Setting Up the Environment

To start, you’ll need to have Node.js installed. You can download it from nodejs.org. Once installed, follow these steps:

- Create a Project Folder: Create a directory for the project and move into it.

mkdir rest-api-example

cd rest-api-example

- Initialize npm: Initialize a Node project to create a package.json file.

npm init -y

- Install Express: Install Express as a dependency and nodemon (a tool to restart the server automatically on changes) as a development dependency.

npm install express

npm install --save-dev nodemon

- Configure Package.json: Open package.json and add a script for nodemon:

"scripts": {

"start": "nodemon index.js"

}

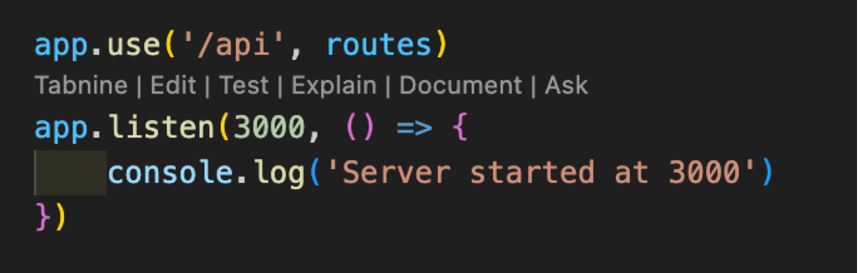

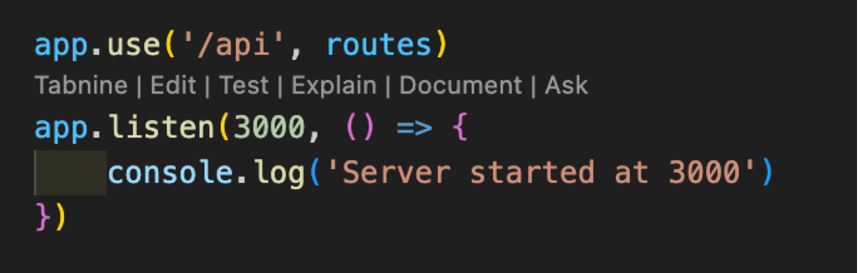

2. Creating the Basic Express Server

- Create index.js: Create an index.js file in the project root. This will be the main server file.

- Set Up Express: In index.js, require and set up Express to listen for requests.

const express = require('express');

const app = express();

app.use(express.json()); // Enable JSON parsing

// Define a simple route

app.get('/', (req, res) => {

res.send('Welcome to the API!');

});

// Start the server

const PORT = 3000;

app.listen(PORT, () => {

console.log(`Server running on port ${PORT}`);

});

- Run the Server: Start the server by running npm start, which uses the nodemon script added to package.json.

- You should see Server running on port 3000 in the console. Go to http://localhost:3000 in your browser, and you’ll see “Welcome to the API!”

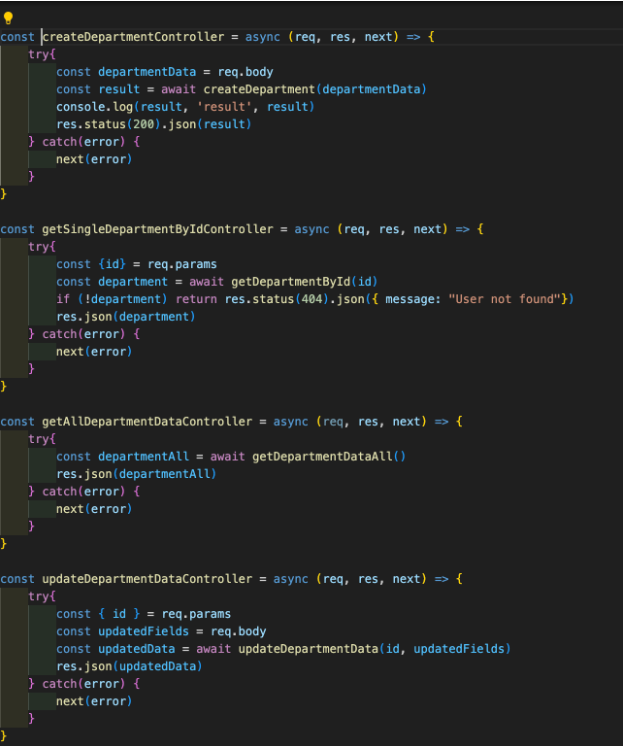

3. Defining API Routes for CRUD Operations

For our RESTful API, let’s create routes for a resource (e.g., “books”). Each route will represent a CRUD operation:

- Set Up the Basic CRUD Routes: Add these routes to index.js.

let books = [];

// CREATE: Add a new book

app.post('/books', (req, res) => {

const book = req.body;

books.push(book);

res.status(201).send(book);

});

// READ: Get all books

app.get('/books', (req, res) => {

res.send(books);

});

// READ: Get a book by ID

app.get('/books/:id', (req, res) => {

const book = books.find(b => b.id === parseInt(req.params.id));

if (!book) return res.status(404).send('Book not found');

res.send(book);

});

// UPDATE: Update a book by ID

app.put('/books/:id', (req, res) => {

const book = books.find(b => b.id === parseInt(req.params.id));

if (!book) return res.status(404).send('Book not found');

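// Only overwrite the fields that were actually sent

if (req.body.title !== undefined) book.title = req.body.title;

if (req.body.author !== undefined) book.author = req.body.author;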

res.send(book);

});

// DELETE: Remove a book by ID

app.delete('/books/:id', (req, res) => {

const bookIndex = books.findIndex(b => b.id === parseInt(req.params.id));

if (bookIndex === -1) return res.status(404).send('Book not found');

const [deletedBook] = books.splice(bookIndex, 1); // splice returns an array of removed items

res.send(deletedBook);

});

4. Testing Your API

You can use Postman or curl to test the endpoints:

- POST /books: Add a new book by providing JSON data:

{

"id": 1,

"title": "1984",

"author": "George Orwell"

}

- GET /books: Retrieve a list of all books.

- GET /books/:id: Retrieve a single book by its ID.

- PUT /books/:id: Update the title or author of a book.

- DELETE /books/:id: Delete a book by its ID.

5. Adding Basic Error Handling

Error handling ensures the API provides clear error messages. Here’s how to add error handling to the routes:

- Check for Missing Fields: For the POST and PUT routes, check that required fields are included.

app.post('/books', (req, res) => {

const { id, title, author } = req.body;

if (!id || !title || !author) {

return res.status(400).send("ID, title, and author are required.");

}

// Add book to the array

books.push({ id, title, author });

res.status(201).send({ id, title, author });

});

- Handle Invalid IDs: Check if an ID is missing or doesn’t match any book, and return a 404 status if so.
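One way to sketch the ID check is to keep the decision in a small function that a route handler can call. The helper names parseId and lookupBook are hypothetical. The sketch returns 404 when no book matches, as described above, and additionally returns 400 for IDs that are not positive integers. That second status is an assumption on top of the original text.

```javascript
// Parse a route parameter into a positive integer ID, or return null if it is not one.
function parseId(raw) {
  const text = String(raw);
  if (!/^\d+$/.test(text)) return null; // rejects '', 'abc', '1.5', '-3'
  const id = Number.parseInt(text, 10);
  return id > 0 ? id : null;
}

// Decide which status code and body a lookup should produce.
function lookupBook(books, rawId) {
  const id = parseId(rawId);
  if (id === null) return { status: 400, body: 'Invalid book ID' };
  const book = books.find(b => b.id === id);
  if (!book) return { status: 404, body: 'Book not found' };
  return { status: 200, body: book };
}

const sampleBooks = [{ id: 1, title: '1984', author: 'George Orwell' }];
console.log(lookupBook(sampleBooks, '1').status);
console.log(lookupBook(sampleBooks, 'abc').status);
console.log(lookupBook(sampleBooks, '7').status);
```

Inside an Express route, the result could be passed straight through with res.status(result.status).send(result.body), using req.params.id as the raw ID.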

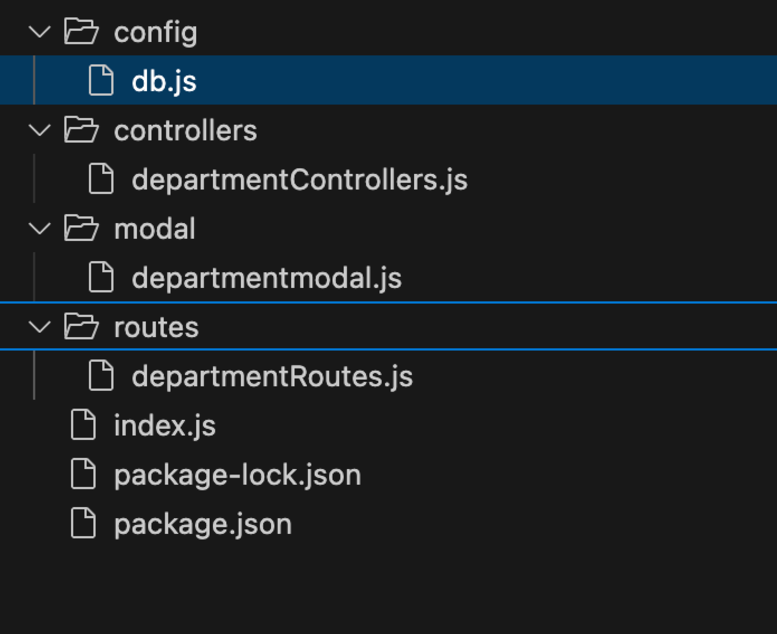

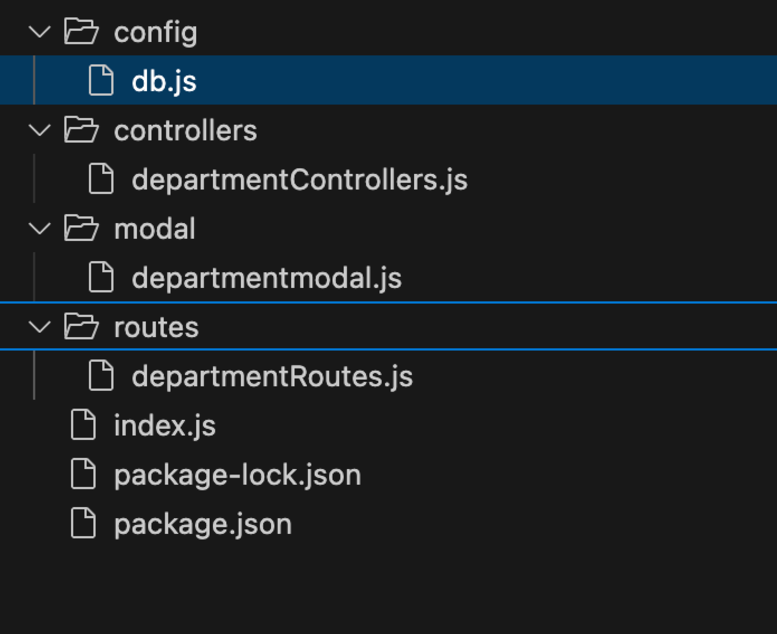

6. Structuring and Modularizing the Code

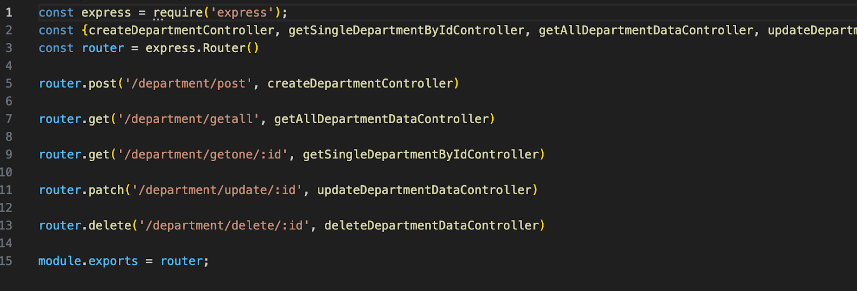

As the application grows, consider separating concerns by moving routes to a dedicated file:

- Create a Router: Create a routes folder with a file books.js.

const express = require('express');

const router = express.Router();

// Add all book routes here

module.exports = router;

- Use the Router in index.js:

const bookRoutes = require('./routes/books');

app.use('/books', bookRoutes);

7. Finalizing and Testing

With everything set up, test the API routes again to ensure they work as expected. Consider adding more advanced error handling or validation with packages like Joi for better production-ready APIs.
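For a sense of what schema validation involves, here is a dependency-free sketch written by hand. It is not Joi's API. It only mirrors the idea of checking every field and collecting all errors before responding. The function name validateBook and the specific rules are assumptions.

```javascript
// Hand-written validation sketch; this is NOT Joi, just the same idea without dependencies.
function validateBook(input) {
  const data = input || {};
  const errors = [];

  if (!Number.isInteger(data.id) || data.id <= 0) {
    errors.push('id must be a positive integer');
  }
  for (const field of ['title', 'author']) {
    if (typeof data[field] !== 'string' || data[field].trim() === '') {
      errors.push(`${field} must be a non-empty string`);
    }
  }
  // Report every problem at once so the client can fix them in one round trip.
  return { valid: errors.length === 0, errors };
}

console.log(validateBook({ id: 1, title: '1984', author: 'George Orwell' }));
console.log(validateBook({ id: 'one', title: '' }));
```

A route handler could return a 400 status with the errors array whenever valid is false, and only touch the data store when it is true.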

Best Practices for RESTful API Development with Node.js

As you start making more complex RESTful APIs with Node.js, it’s key to follow best practices. This helps keep your code easy to manage and expand. What feels optional in small projects turns critical as your application grows. By using these practices from the start, you set a strong base for creating good and scalable APIs that can handle increased traffic and complexity over time. Following these guidelines helps keep your APIs efficient, secure, and maintainable as your project evolves.

One important tip is to keep your code tidy. Don’t cram everything into one file. Instead, split your code into different parts and folders. This will help you use your code again and make it easier to find mistakes.

Structuring Your Project and Code Organization

Keeping your code organized matters a lot, especially as your project grows. A clear structure makes it easier to navigate, understand, and update your code. Instead of placing all your code in one file, it’s better to use a modular design. For instance, you can create specific files for different tasks.

This is just the start. As your API gets bigger, think about using several layers. This means breaking your code into different parts, like controllers, services, and data access. It keeps the different sections of the code apart. This makes it simpler to handle.
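The layering idea can be sketched in plain JavaScript. Each layer below only talks to the one beneath it, so storage or HTTP details can change without touching the business rules. The factory names and the in-memory array are assumptions for illustration. A real project would usually put each layer in its own file.

```javascript
// Data access layer: the only code that touches storage (an in-memory array here).
function createBookRepository() {
  const books = [];
  return {
    insert(book) { books.push(book); return book; },
    findById(id) { return books.find(b => b.id === id) || null; },
  };
}

// Service layer: business rules, with no knowledge of HTTP.
function createBookService(repository) {
  return {
    addBook(book) {
      if (repository.findById(book.id)) {
        throw new Error(`Book ${book.id} already exists`);
      }
      return repository.insert(book);
    },
    getBook(id) { return repository.findById(id); },
  };
}

// Controller layer: translates HTTP-shaped input into service calls and back.
function createBookController(service) {
  return {
    getBook(params) {
      const book = service.getBook(Number.parseInt(params.id, 10));
      return book
        ? { status: 200, body: book }
        : { status: 404, body: 'Book not found' };
    },
  };
}

const service = createBookService(createBookRepository());
const controller = createBookController(service);
service.addBook({ id: 1, title: '1984', author: 'George Orwell' });
console.log(controller.getBook({ id: '1' }));
console.log(controller.getBook({ id: '2' }));
```

Because the service receives its repository as an argument, a test can hand it a fake repository, and a later move to a real database only changes the data access layer.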

Security Considerations: Authentication and Authorization

Security matters a lot when building any app, including RESTful APIs with Node.js. We must keep our users’ information safe from those who should not see it. Authentication helps us verify who a user is, and authorization decides what that user is allowed to access.

| Mechanism | Description |
| --- | --- |
| JSON Web Tokens (JWTs) | JWTs are an industry-standard method for securely transmitting information between parties. |
| API Keys | API Keys are unique identifiers used to authenticate an application or user. |
| OAuth 2.0 | OAuth 2.0 is a more complex but robust authorization framework. |

You can add an authorization header to HTTP requests to prove your identity with a token. Middleware helps to manage authentication easily. By using authentication middleware for specific routes, you can decide which parts of your API are for authorized users only.
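A minimal sketch of such authentication middleware follows. It reads a token from an Authorization: Bearer header and rejects the request when the token is missing or unknown. The hard-coded VALID_TOKENS set and the function names are assumptions that keep the example self-contained. A real API would verify the token properly instead of comparing against a fixed list.

```javascript
// Hypothetical list of accepted tokens, for illustration only.
const VALID_TOKENS = new Set(['demo-token-123']);

// Pull the token out of an "Authorization: Bearer <token>" header value, or return null.
function extractBearerToken(headerValue) {
  if (typeof headerValue !== 'string') return null;
  const [scheme, token] = headerValue.split(' ');
  return scheme === 'Bearer' && token ? token : null;
}

// Middleware: lets the request through only when it carries a known token.
function requireAuth(req, res, next) {
  const token = extractBearerToken(req.headers.authorization);
  if (!token || !VALID_TOKENS.has(token)) {
    res.statusCode = 401;
    res.end('Unauthorized');
    return;
  }
  next();
}

// Fake request/response objects so the sketch runs without a server.
const denied = { statusCode: 200, body: '', end(text) { this.body = text; } };
requireAuth({ headers: {} }, denied, () => {});
console.log(denied.statusCode, denied.body);
```

In Express, protecting only some routes could look like app.use('/books', requireAuth, bookRoutes), which leaves public routes such as the welcome page untouched.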

Conclusion

In conclusion, creating RESTful APIs with Node.js and Express is a good way to build web services. By using RESTful methods and the tools in Node.js and Express, developers can make fast and scalable APIs. It is key to organize projects well, protect endpoints with authentication, and keep the code clean. This guide is aimed at beginners who want to build their first RESTful API. Follow these tips and tools to improve your API development skills and build strong applications.

Frequently Asked Questions

- How Do I Secure My Node.js RESTful API?

To protect your APIs, you should use authentication. This checks who the users are. Next, use authorization to decide who can access different resources. You can create access tokens using JSON Web Tokens (JWTs). With this method, only users who have a valid token can reach secure endpoints.
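To show what sits inside a JWT, the sketch below signs and verifies an HS256-style token by hand using Node's built-in crypto module. It is a teaching aid under stated assumptions: the function names signToken and verifyToken are made up, the secret is a placeholder, and only the signature and the exp claim are checked. For production, a maintained JWT library is the safer choice.

```javascript
const crypto = require('crypto');

// Encode a JSON-serializable value as base64url text.
const encode = (value) => Buffer.from(JSON.stringify(value)).toString('base64url');

// HMAC-SHA256 signature of the given text, as base64url.
const sign = (text, secret) =>
  crypto.createHmac('sha256', secret).update(text).digest().toString('base64url');

// Create a token of the form header.payload.signature.
function signToken(payload, secret) {
  const unsigned = `${encode({ alg: 'HS256', typ: 'JWT' })}.${encode(payload)}`;
  return `${unsigned}.${sign(unsigned, secret)}`;
}

// Return the payload if the signature is valid and the token has not expired, else null.
function verifyToken(token, secret, nowSeconds = Math.floor(Date.now() / 1000)) {
  const parts = String(token).split('.');
  if (parts.length !== 3) return null;
  const [header, body, signature] = parts;
  const actual = Buffer.from(signature);
  const expected = Buffer.from(sign(`${header}.${body}`, secret));
  // timingSafeEqual requires equal lengths, so check that first.
  if (actual.length !== expected.length || !crypto.timingSafeEqual(actual, expected)) {
    return null;
  }
  const payload = JSON.parse(Buffer.from(body, 'base64url').toString('utf8'));
  if (typeof payload.exp === 'number' && payload.exp <= nowSeconds) return null;
  return payload;
}

const secret = 'replace-with-a-long-random-secret'; // placeholder value
const token = signToken({ sub: 'user-1', exp: Math.floor(Date.now() / 1000) + 3600 }, secret);
console.log(verifyToken(token, secret));
```

Only a holder of the secret can produce a signature that verifies, which is why a secure endpoint can trust the claims in a valid token without a database lookup.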

by Jacob | Nov 20, 2024 | Security Testing, Blog, Latest Post |

In today’s digital world, a strong cybersecurity plan is very important. A key part of this plan is the penetration test, an essential security testing service. This test evaluates how secure an organization truly is. Unlike regular checks for weaknesses, a penetration test digs deeper. It identifies vulnerabilities and simulates real-world attacks to exploit them. This approach helps organizations understand their defense capabilities and resilience against cyber threats. By using this security testing service, businesses can address vulnerabilities proactively, strengthening their systems before malicious actors have a chance to exploit them.

Understanding Penetration Testing

A penetration test, or pen test, is an authorized, simulated cyber attack. Its goal is to find weak spots in a company’s systems and networks. This testing helps organizations see how safe they are. It also guides them to make better choices about their resources and improve security. In simple terms, it helps them spot problems before someone with bad intentions does.

There are two main kinds of penetration tests: internal and external. An internal penetration test checks for dangers that come from within the organization. These threats could come from a bad employee or a hacked insider account. On the other hand, an external penetration test targets dangers from outside the organization. It acts like hackers do when they attempt to access the company’s public systems and networks.

The Purpose of Penetration Testing

Vulnerability scanning helps find weak spots in your system. However, it does not show how bad these problems might be. That is why we need penetration testing. This type of testing acts like real cyber attacks. It helps us understand how strong our security really is.

The main goal of a penetration test is to find weaknesses before someone with bad intentions can. By pretending to be an attacker, organizations can:

- Check current security controls: Review the security measures to see if they are effective at lowering risks.

- Find hidden vulnerabilities: Look for weak areas that scanners or manual checks might miss.

- Understand the potential impact: Be aware of the possible damage an attacker could cause and what data they could take.

Diving Deep into Internal Penetration Testing

Internal penetration testing checks how someone inside your company, like an unhappy employee or a hacker who is already inside, might behave. It helps to find weak spots in your internal network, apps, and data storage. This testing shows how a person could navigate within your system and reach sensitive information.

Internal penetration testing shows how insider threats can work. It helps you find weak points in your security rules, employee training, and technology protections. This testing is important to see how damaging insider attacks can be. Often, these attacks can harm your company more than outside threats. This is because insiders already have trust and access.

Defining Internal Penetration Testing

Internal penetration testing checks for weak spots in your organization’s network. It’s a thorough security check by someone who is already inside. They look for ways to get initial access, find sensitive data, and disrupt normal operations.

This testing is very important. It helps us see if the main safety measures, like perimeter security, have been broken. A few things can cause this to happen. A phishing attack could work, or someone might steal a worker’s login details. Sometimes, a simple mistake in the firewall settings can also cause problems. Internal testing shows us how strong your internal systems and data are if a breach happens.

The main goal is to understand how a hacker can move through your network to find their target system. They might use weak security controls to gain unauthorized access to sensitive information. By spotting these weak areas, you can set up strong security measures. This will help lessen the damage from someone already inside your system or from an outside attacker who has bypassed the first line of defense.

Methodologies of Internal Pen Testing

Internal pen testing uses different ways to see how well your organization can keep its network safe from security threats.

- Social Engineering: Testers may send fake emails or act as someone else. This can trick employees into sharing private information or allowing unauthorized people in.

- Exploiting Weak Passwords: Testers try to guess simple passwords on internal systems. This highlights how bad password choices can lead to issues.

- Leveraging Misconfigured Systems: Testers look for servers, apps, or network devices that are set up incorrectly. These problems can cause unauthorized access or give more control to others.

Internal pen testing helps you check how well your company identifies and manages insider threats. It shows how effective your security controls are. It also highlights where you can improve employee training, awareness programs, and rules for access management.
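As a small illustration of the weak-password check, the sketch below flags passwords that a tester would likely guess quickly. The five-entry wordlist, the 12-character threshold, and the function name are all assumptions for the example. Real audits use far larger wordlists and the organization's own password policy.

```javascript
// Tiny illustrative wordlist; real audits use much larger ones.
const COMMON_PASSWORDS = new Set(['123456', 'password', 'qwerty', 'letmein', 'admin']);

// Return the reasons a password would be flagged as weak (empty array means no flags).
function weakPasswordReasons(password, username = '') {
  const reasons = [];
  const lower = password.toLowerCase();
  if (password.length < 12) reasons.push('shorter than 12 characters');
  if (COMMON_PASSWORDS.has(lower)) reasons.push('appears in common-password list');
  if (username && lower.includes(username.toLowerCase())) {
    reasons.push('contains the username');
  }
  return reasons;
}

console.log(weakPasswordReasons('Password', 'jsmith'));
console.log(weakPasswordReasons('jsmith2024', 'jsmith'));
console.log(weakPasswordReasons('correct-horse-battery-staple', 'jsmith'));
```

A check like this should only be run against accounts the tester is authorized to assess, and its findings feed directly into the password policy and training improvements mentioned above.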

Exploring External Penetration Testing

External penetration testing checks the network and public areas of an organization from the outside. This practice helps to see what attacks could occur from outside. The main aim is to find issues that attackers might use to get into your systems and data without permission.

External penetration testing checks how strong your defenses are against outside threats. Every organization, big or small, has some areas that could be exposed to these risks. This testing helps discover how safe your organization seems to anyone looking for weak spots in your systems that are available to the public.

What Constitutes External Penetration Testing?

External penetration testing checks the strength of your outside defenses. It seeks out weak points that attackers may exploit to get inside. You can think of it as a practice run. Ethical hackers act like real attackers. They use similar tools and methods to attempt to break into your network from the outside.

An external pentest usually covers:

- Web Applications: Looking for issues like SQL injection, cross-site scripting (XSS), and unsafe login methods on your sites and apps.

- Network Infrastructure: Checking that your firewalls, routers, switches, and other Internet-connected devices are secure.

- Wireless Networks: Testing your WiFi networks to find weak spots that could allow outsiders to reach your internal systems.

The information from an external penetration test is very useful. It reveals how exposed your organization is to outside threats. This helps you target issues to fix and improve your defenses. By doing this, you can stop real attackers.

Techniques Employed in External Pen Tests

External pen testers use various ways that mimic how real hackers work. These methods can include:

- Network Scanning and Enumeration: This means checking your organization’s IP addresses. You look for open ports and see what services are running. This helps you find any weak spots.

- Vulnerability Exploitation: This is about using known weaknesses in software or hardware. The goal is to gain unauthorized access to systems or data.

- Password Attacks: This means trying to guess weak passwords or bypass login security. Testers might use methods like brute-force guessing or credential stuffing.

- Social Engineering: This includes tricks like phishing emails, spear-phishing, or harmful posts on social media. The aim is to fool employees into sharing sensitive information or clicking on harmful links.

These methods help you see your security posture. When you know how an attacker could try to get into your systems, you can build better defenses. This will make it much harder for them to succeed.

Comparing and Contrasting: Internal vs External

Both internal and external penetration testing help find and fix weaknesses. They use different methods and focus on different areas. This can lead to different results. Understanding these key differences is important. It helps you choose the best type of pen test for your organization’s needs.

Here’s a breakdown of the key differences:

| Feature | Internal Penetration Testing | External Penetration Testing |
| --- | --- | --- |
| Point of Origin | Simulates threats from within the organization, such as a disgruntled employee or an attacker with internal access | Simulates threats from outside the organization, such as a cybercriminal attempting to breach external defenses |
| Focus | Identifies risks related to internal access, including weak passwords, poorly configured systems, and insider threats | Targets external-facing vulnerabilities in websites, servers, and network defenses |
| Methodology | Employs techniques like insider privilege escalation, lateral movement testing, and evaluating physical security measures | Utilizes methods such as network scanning, vulnerability exploitation, brute force attacks, and phishing campaigns |
| Goal | Strengthen internal defenses, refine access controls, and improve employee security awareness | Fortify perimeter security, remediate external vulnerabilities, and protect against unauthorized access |
| Key Threats Simulated | Malicious insiders, compromised credentials, and accidental exposure of sensitive data | Hackers, organized cyberattacks, and exploitation of publicly available information |
| Scope | Focuses on internal systems, devices, file-sharing networks, and applications accessed by employees | Concentrates on external-facing systems like web applications, cloud environments, and public APIs |
| Common Techniques | Social engineering, phishing attempts, rogue device setups, and testing internal policy compliance | Port scanning, domain footprinting, web application testing, and denial-of-service attack simulation |
| Required Access | Typically requires insider-level access or simulated insider privileges | Simulates an outsider with no prior access to the network |
| Outcomes | Identifies potential breaches post-infiltration, improves internal security posture, and enhances incident response readiness | Provides insights into how well perimeter defenses prevent unauthorized access and pinpoints external weaknesses |
| Compliance and Standards | Often necessary for compliance with internal policies and standards, such as ISO 27001 and NIST | Critical for meeting external regulatory requirements, such as PCI DSS, GDPR, and HIPAA |
| Testing Frequency | Performed periodically to address insider risks and evaluate new systems or policy updates | Conducted more frequently for organizations with a high exposure to public-facing systems |
| Challenges | Requires detailed knowledge of internal architecture and may face resistance from employees who feel targeted by the process | Often limited by the organization’s firewall configurations or network obfuscation strategies |
| Employee Involvement | Involves training employees to recognize and mitigate insider threats | Educates employees on best practices to avoid social engineering attacks from external sources |

Differentiating the Objectives

The main purpose of an internal penetration test is to see how secure an organization is from the inside. This can include a worker trying to create issues, a contractor who is unhappy, or a trusted user whose login information has been stolen.

External network penetration testing looks at risks from outside your organization. This test simulates how a hacker might try to enter your network. It finds weak spots in your public systems. It also checks for ways someone could get unauthorized access to your information.

Organizations can improve their security posture by looking for both internal and external threats. This practice helps them to understand their security better. They can identify weak spots in their internal systems and external defenses.

Analyzing the Scope and Approach

A key difference is what each test examines. External penetration testing looks at the external network of an organization. It checks parts that anyone can reach. This usually includes websites, web apps, email servers, firewalls, and anything else on the internet. The main goal is to see how a threat actor could break into your network from the outside.

Internal penetration testing happens inside the firewall. This test checks how someone who has already gotten in can move around your network. Testers act like bad guys from the inside. Their aim is to gain more access, find sensitive information, or disrupt important services.

The ways we do external and internal penetration testing are different. They each have their own focus. Each type needs specific tools and skills that match the goals, environment, and needs of the test.

Conclusion

In conclusion, knowing the differences between internal and external penetration testing is very important. This knowledge helps improve your organization’s network security. Internal testing looks for weaknesses inside your network. External testing, on the other hand, simulates real-world threats from outside. When you understand how each type works and what it focuses on, you can protect your systems from attacks more effectively. It is important to regularly do both types of pen tests. This practice keeps your cybersecurity strong against bad actors. Stay informed, stay prepared, and prioritize the security of your digital assets.

Frequently Asked Questions

- What Are the Primary Benefits of Each Testing Type?

Regular penetration testing helps a business discover and enhance its security measures. This practice ensures the business meets industry standards. There are different methods for penetration testing, such as internal, external, and continuous testing. Each method looks at specific security concerns. Over time, these tests create stronger defenses against possible cyber attacks.

- How Often Should Businesses Conduct Pen Tests?

The number of pen tests you need varies based on several factors. These factors include your business's security posture, industry standards, and the type of testing you will do. It is important to regularly perform a mix of external pen testing, internal testing, and vulnerability assessments.

- Can Internal Pen Testing Help Prevent External Threats?

Internal pen testing looks for issues within the organization. It can also help reduce risks from outside threats. When pen testers find security gaps that allow unauthorized access, they point out weaknesses that an external threat actor could exploit. A penetration tester may work like an insider, but their efforts still uncover these problems. They provide valuable insights from inside the organization.

What Are Common Misconceptions About Pen Testing?

Many people think external tests matter more than internal tests, or the reverse. In reality, both are very important. External tests can help prevent data breaches that start from outside, while internal tests uncover security flaws in internal systems that attackers could exploit once they are inside.

by Anika Chakraborty | Nov 19, 2024 | Artificial Intelligence, Blog, Latest Post |

Artificial Intelligence (AI) has transformed how we live, work, and interact with technology. From virtual assistants to advanced robotics, AI is all about speed, logic, and efficiency. Yet, one thing it lacks is the ability to connect emotionally.

Enter Artificial Empathy: a groundbreaking idea that teaches machines to understand human emotions and respond in ways that feel more personal and caring. Imagine a healthcare bot that notices your anxiety before a procedure, or a customer service chatbot that understands your frustration and adapts its tone.

While both AI and Artificial Empathy involve advanced algorithms, they differ in purpose, functionality, and potential impact. Let’s explore what sets them apart and how they complement each other.

Key Highlights:

- AI excels in data-driven tasks but often misses the emotional depth humans bring.

- Artificial Empathy enables machines to recognize and respond to emotions, making interactions more human-like.

- Applications of empathetic AI include healthcare, customer service, education, and more.

- Ethical concerns like privacy and bias must be addressed for responsible development.

- A balanced approach can unlock the full potential of AI and Artificial Empathy.

What Is Artificial Intelligence (AI)?

AI refers to computer systems that can perform tasks requiring human-like intelligence. These tasks include decision-making, problem-solving, and pattern recognition. AI uses various techniques, such as:

- Natural Language Processing (NLP): Understanding and generating human language.

- Machine Learning: Learning from data to make predictions or decisions.

- Computer Vision: Recognizing and interpreting visual information.

Examples of AI in action include Google Maps predicting traffic, Netflix recommending shows, and facial recognition unlocking your smartphone.

However, AI’s logical approach often feels cold and detached, especially in scenarios requiring emotional sensitivity, like customer support or therapy.

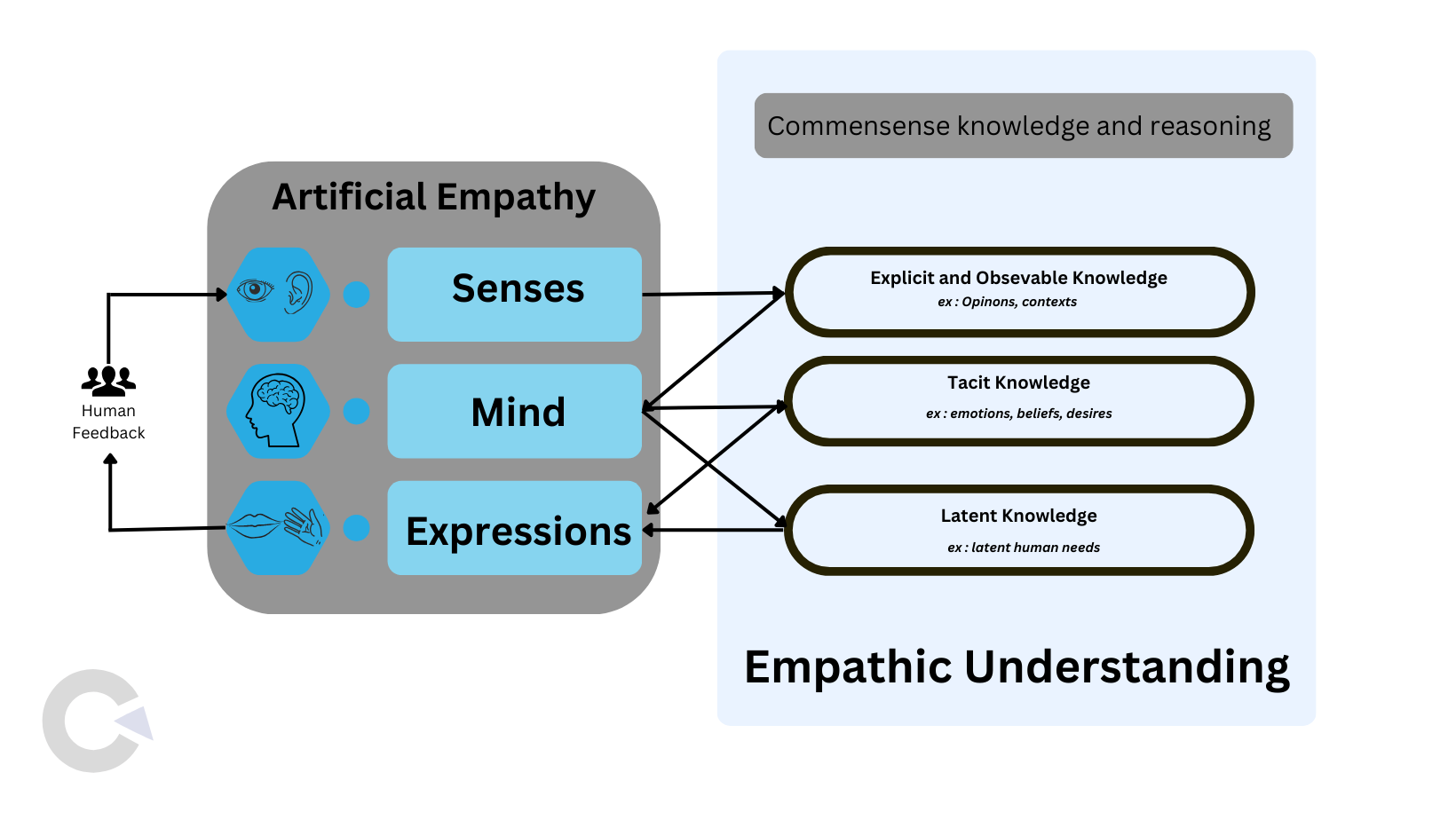

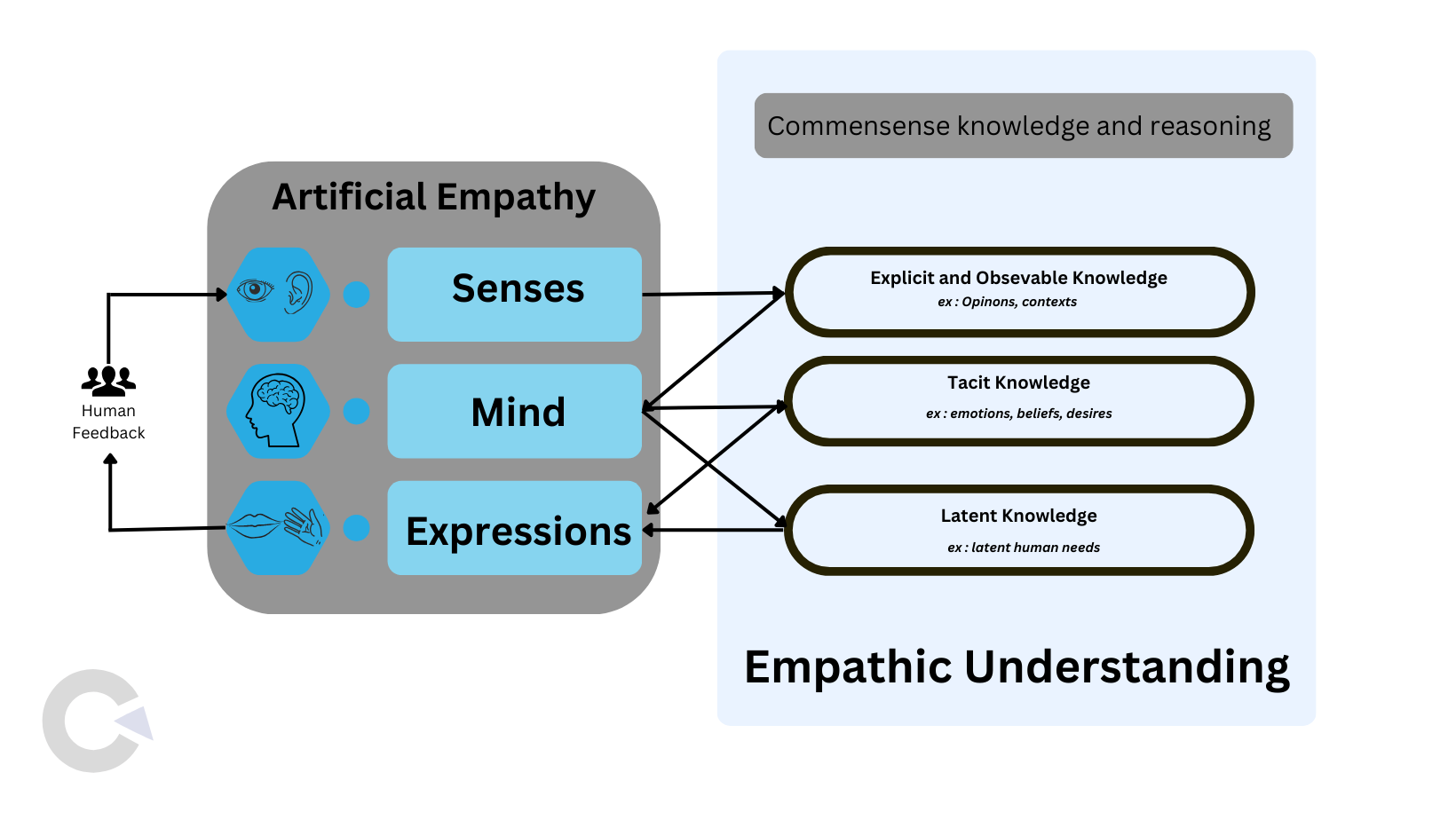

What Is Artificial Empathy?

Artificial Empathy aims to bridge the emotional gap in human-machine interactions. By using techniques like tone analysis, facial expression recognition, and sentiment analysis, AI systems can detect and simulate emotional understanding.

For example:

- Healthcare: A virtual assistant notices stress in a patient’s voice and offers calming suggestions.

- Customer Service: A chatbot detects frustration and responds with empathy, saying, “I understand this is frustrating; let me help you right away.”

While Artificial Empathy doesn’t replicate genuine human emotions, it mimics them well enough to make interactions smoother and more human-like.
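As a rough illustration of the chatbot example above, the sketch below scores a message for frustration and picks a reply to match. It is a deliberately simple, keyword-based stand-in for real sentiment analysis: the cue list, the threshold, and the function names `frustration_score` and `choose_reply` are all assumptions made for this example, and a production system would more likely rely on a trained model.

```python
import re

# Hypothetical cue words for this sketch; a real system would use a trained
# sentiment model rather than a fixed keyword list.
FRUSTRATION_CUES = {
    "frustrated", "frustrating", "annoyed", "angry",
    "useless", "ridiculous", "terrible",
}


def frustration_score(message: str) -> float:
    """Return the fraction of words in the message that are frustration cues."""
    words = re.findall(r"[a-z']+", message.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in FRUSTRATION_CUES)
    return hits / len(words)


def choose_reply(message: str, threshold: float = 0.1) -> str:
    """Pick an empathetic reply when the score reaches the (assumed) threshold."""
    if frustration_score(message) >= threshold:
        return "I understand this is frustrating; let me help you right away."
    return "Thanks for reaching out. How can I help?"


if __name__ == "__main__":
    print(choose_reply("This is ridiculous, I am so annoyed"))
    print(choose_reply("Where can I find my invoice?"))
```

The first message contains two cue words out of seven, so it crosses the threshold and gets the empathetic reply; the second contains none and gets the neutral one. Keyword matching like this misses sarcasm and context, which is one reason real systems combine text with tone and other signals.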

Key Differences Between Artificial Intelligence and Artificial Empathy

| Feature | Artificial Intelligence | Artificial Empathy |
| --- | --- | --- |
| Purpose | Solves logical problems and performs tasks. | Enhances emotional understanding in interactions. |
| Core Functionality | Data-driven decision-making and problem-solving. | Emotion-driven responses using pattern recognition. |
| Applications | Autonomous cars, predictive analytics, etc. | Therapy bots, empathetic chatbots, etc. |
| Human Connection | Minimal emotional engagement. | Focused on improving emotional engagement. |
| Learning Source | Large datasets of facts and logic. | Emotional cues from voice, text, and expressions. |
| Depth of Understanding | Lacks emotional depth. | Mimics emotions but doesn’t truly feel them. |

The Evolution of Artificial Empathy

AI started as a rule-based system focused purely on logic. Over time, researchers realized that true human-AI collaboration required more than just efficiency—it needed emotional intelligence.

Here’s how empathy in AI has evolved:

- Rule-Based Systems: Early AI followed strict commands and couldn’t adapt to emotions.

- Introduction of NLP: Natural Language Processing enabled AI to interpret human language and tone.

- Deep Learning Revolution: With deep learning, AI started recognizing complex patterns in emotions.

- Modern Artificial Empathy: Today, systems can simulate empathetic responses based on facial expressions, voice tone, and text sentiment.

Applications of Artificial Empathy

1. Healthcare: Personalized Patient Support

Empathetic AI can revolutionize patient care by detecting emotional states and offering tailored support.

- Example: A virtual assistant notices a patient is anxious before surgery and offers calming words or distraction techniques.

- Impact: Builds trust, reduces stress, and enhances patient satisfaction.

2. Customer Service: Resolving Issues with Care

Empathetic chatbots improve customer interactions by detecting frustration or confusion.

- Example: A bot senses irritation in a customer’s voice and adjusts its tone to sound more understanding.

- Impact: Shorter resolution times and better customer loyalty.

3. Education: Supporting Student Needs

AI tutors with empathetic capabilities can identify when a student is struggling and offer encouragement or personalized explanations.

- Example: A virtual tutor notices hesitation in a student’s voice and slows down its teaching pace.

- Impact: Boosts engagement and learning outcomes.

4. Social Robotics: Enhancing Human Interaction

Robots designed with empathetic AI can serve as companions for elderly people or individuals with disabilities, offering emotional support.

Ethical Challenges in Artificial Empathy

1. Privacy Concerns

Empathetic AI relies on sensitive data, such as emotional cues from voice or facial expressions. Ensuring this data is collected and stored responsibly is crucial.

Solution: Implement strict data encryption and transparent consent policies.

2. Bias in Emotion Recognition

AI may misinterpret emotions if trained on biased datasets. For example, cultural differences in expressions can lead to inaccuracies.

Solution: Train AI on diverse datasets and conduct regular bias audits.
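One simple form a bias audit can take is comparing how accurate an emotion classifier is for different groups of users. The sketch below assumes you already have labeled evaluation records of the form (group, true emotion, predicted emotion); the record format, the group labels, and the function names are illustrative assumptions, not part of any specific tool.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute classifier accuracy per group.

    records: iterable of (group, true_label, predicted_label) tuples.
    Returns a dict mapping each group to its accuracy between 0 and 1.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Return the difference between the best and worst group accuracy."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Made-up evaluation records, for illustration only.
    sample = [
        ("group_a", "happy", "happy"),
        ("group_a", "sad", "sad"),
        ("group_b", "happy", "sad"),
        ("group_b", "sad", "sad"),
    ]
    per_group = accuracy_by_group(sample)
    print(per_group, accuracy_gap(per_group))
```

A large gap between groups suggests the training data may under-represent how some groups express emotion. Accuracy is only one possible metric; an actual audit would likely look at several.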

3. Manipulation Risks

There’s potential for misuse, where AI might manipulate emotions for commercial or political gain.

Solution: Establish ethical guidelines to prevent exploitation.

Comparing Artificial Empathy and Human Empathy

| Aspect | Human Empathy | Artificial Empathy |
| --- | --- | --- |
| Source | Based on biology, emotions, and experiences. | Derived from algorithms and data patterns. |
| Emotional Depth | Genuine and nuanced understanding. | Mimics understanding; lacks authenticity. |
| Adaptability | Intuitive and flexible in new situations. | Limited to pre-programmed responses. |
| Ethical Judgment | Can evaluate actions based on moral values. | Lacks inherent morality. |
| Response Creativity | Innovative and context-aware. | Relies on existing data; struggles with novel scenarios. |

The Future of Artificial Empathy

Artificial Empathy holds immense potential but also faces limitations. To unlock its benefits:

- Collaboration: Combine human empathy with AI’s efficiency.

- Continuous Learning: Use real-world feedback to improve AI’s emotional accuracy.

- Ethical Standards: Develop global guidelines for responsible AI development.

Future possibilities include empathetic AI therapists, social robots for companionship, and even AI tools for emotional self-awareness training.

Conclusion

Artificial Intelligence and Artificial Empathy are transforming the way humans interact with machines. While AI focuses on logic and efficiency, Artificial Empathy brings a human touch to these interactions.

By understanding the differences and applications of these technologies, we can leverage their strengths to improve healthcare, education, customer service, and beyond. However, as we integrate empathetic AI into our lives, addressing ethical concerns like privacy and bias will be crucial.

The ultimate goal? To create a harmonious future where intelligence and empathy work hand in hand, enhancing human experiences while respecting our values.

Frequently Asked Questions

Can AI truly understand human emotions?

AI systems can learn to spot patterns and signs related to human emotions. However, they do not feel emotions like people do. AI uses algorithms and data analysis, such as sentiment analysis, to act like it understands. Still, it lacks the cognitive processes and real-life experiences that people use to understand feelings.

Are there risks associated with artificial empathy in AI?

Yes, there are risks to consider. A key issue is ethics, particularly privacy: we must consider how emotional data is gathered and used. AI might also influence human emotions or exploit them for gain, which amounts to emotional manipulation. In addition, AI systems could make existing biases even worse.

What is AI empathy?

Artificial empathy is when an AI system can detect and interpret human emotions and respond as if it cares. It does this by using techniques such as natural language processing to read emotional cues, then adjusting how it talks to the user. You can see this kind of empathy in AI chatbots designed to sound understanding.

Is ChatGPT more empathetic than humans?

ChatGPT is good at using NLP. However, it does not have human empathy. It can create text that looks human-like. It works by analyzing patterns in data to mimic emotional understanding. Still, it misses the real emotional depth and life experiences that come with true empathy.

Can robots show empathy?

Robots can be designed to display feelings based on their actions and responses. Using artificial emotional intelligence, they can talk in a more human way. This helps create a feeling of empathy. However, it's important to remember that this is just a copy of empathy, not true emotional understanding.

by Charlotte Johnson | Nov 15, 2024 | Software Development, Blog, Latest Post |

Serverless computing is changing how we see cloud computing and Software Development Services. It takes away the tough job of managing servers, allowing developers to focus on creating new apps without worrying about the infrastructure needed to run them. This shift gives businesses many benefits: they become more flexible, can scale more easily, and can save money on technology costs.

Key Highlights

- Serverless computing means the cloud provider manages the servers. This allows developers to focus on their work without needing to worry about the servers.

- This method has many benefits. It offers scalability, saves money, and helps speed up deployments. These advantages make it an attractive option for modern apps.

- However, serverless architecture can cause problems. These include issues like vendor lock-in, security risks, and cold start performance issues.

- Choosing the right serverless provider is important. Knowing their strengths can help you get the best results.

- By making sure the organization is prepared and training the staff, businesses can benefit from serverless computing. This leads to better agility and more innovation.

Understanding Serverless Architecture

In the past, creating and running applications took a lot of money. Teams had to pay for hardware and software up front, which often meant paying for capacity that went unused, even though needs could change quickly. A better option is serverless architecture, in which a cloud provider takes care of the servers, databases, and operating systems for you.

This changes the way apps are built, released, and managed, and it provides a quicker and simpler method for developing software.

Serverless Architecture:

- Serverless architecture does not mean the absence of servers.

- A cloud provider manages the server setup, allowing developers to focus on code.

- Code runs as serverless functions, which are small and specific to tasks.
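To make the idea of a small, task-specific function concrete, here is a minimal sketch of what one might look like in Python. The `handler(event, context)` signature follows the convention used by AWS Lambda's Python runtime, and the `statusCode`/`body` response shape mirrors what HTTP gateways commonly expect; the `name` field in the event is a made-up example, and other providers use different signatures.

```python
import json


def handler(event, context=None):
    """Handle a single event and return an HTTP-style response.

    event:   dict describing what triggered the function (assumed here to
             carry an optional "name" field; this shape is hypothetical).
    context: runtime metadata supplied by the platform; unused in this sketch.
    """
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Locally, the function is just a plain Python callable.
    print(handler({"name": "Ada"}))
```

Notice that the code contains no server setup, port binding, or process management. The provider is responsible for starting the function when an event arrives and for running as many copies as the workload needs.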

Serverless Functions:

- Functions are triggered by events, like user requests, database updates, or messages.