by Hannah Rivera | Mar 26, 2022 | QA Outsourcing, Blog, Top Picks |

Companies outsource their QA for a variety of reasons specific to their situation. But the primary reason to consider outsourcing is that QA is extremely important to your product’s success, and a well-equipped QA team can make a huge difference in how successful your app is. You might have the most innovative idea or concept that seems like a sure-shot success on paper. But execution matters, and that is where QA enters the picture, as it ensures that your product stays on track every step of the way.

A Model QA Outsourcing Company

There are numerous factors that one must consider before choosing the right QA outsourcing company, and we will be discussing those factors in the latter part of this blog. Before that, we will illustrate how a QA Outsourcing company performs various types of testing to help you understand the impact they could have on your project. Though not all QA companies will offer the options we generally provide, we wanted to use our approach as an example to establish the standards you should be looking for.

We’ll start with the onboarding process, as it is the first stage and happens before the partnership begins. We will then cover our approaches to different types of testing so that you can foresee what a partnership could look like for your specific needs.

The Onboarding Process

Once you have found the team for your needs, the onboarding process will be carried out to reassure you that you have made the right decision.

The Initial Call

The initial call is when the QA team is introduced to your project. Once you have expressed your interest through any medium, our sales team will understand your basic expectations and schedule the initial call with our highly talented technical team.

Free POC & Demo

Once we have clearly understood your need from the initial call, we will start prepping for a no-cost Proof of Concept and Demo that will help us assure you of our expertise. Though not all QA companies provide a free POC, we do it because we have the utmost confidence in our capabilities. So here’s how it works.

Tailored to Your Needs

Being one of the top QA companies in the market, we have a wide range of clients from different domains and industries. Our vast experience enables us to focus on your needs and create demos with the appropriate device and platform combinations. Let’s look into the various proofs of concept we offer as an outsourcing QA company.

Automation Testing POC

- We automate 3 test cases with complete Jenkins integration.

- Our POCs are equipped with a fully functional ReportPortal integration.

- Upon receiving special requests, we even create specific test cases based on our client’s needs.

- Known for having quick turnaround times, we don’t take forever to work our magic as we will have your demo up and running in just 10 to 14 days from the date of the first call.

Such an approach gives you an idea of the level of control you will have over the automation tests during the partnership, as the Jenkins integration enables you to run them anytime and anywhere as per your needs. You will also be able to witness how effortless bug tracking can be with our ReportPortal integration. So by the end of our demo, you will have a clear picture of how effective a partnership with us can be, which will help you make the right decision.

Manual Testing POC

- We create both positive and negative test cases for 3 scenarios.

- We also make sure to show a demo on both web and mobile platforms.

Proposal Submission

We do not follow a one-size-fits-all approach, as we are focused on delivering tailor-made services that satisfy all our clients’ needs. We first understand our client’s needs and then suggest an engagement model that we believe will match them, or proceed as per the client’s preference. Thanks to our years of experience in servicing clients on a global scale, we can assure you that we will deliver no matter what the engagement model is. So you can count on us to be a part of your product’s success.

Turnkey

A cost-conservative working model with defined work plans, timeframes, and deliverables based on the scope of the project. So there is no need to worry that your requirements are too small or that the budget will go overboard, as this model ensures that the work will be completed as expected.

Time & Material

Our highly flexible working model is great if the entire scope of the project is unknown or for long-term partnerships. Our 100% transparency throughout every step of the way ensures that you create a winning product.

B.O.T Model

If you are looking to fulfill both your current and future needs, B.O.T is the way to go as we will build a team that fits your needs, operate for a period of time to ensure that your expectations are met, and then transfer the team to you.

Our Automation Testing Approach

We have performed automation testing for numerous needs across various industries and can assure you that the approach has not been the same for every single scenario. But there is a basic outline we lay before we start optimizing the process to meet our client’s needs.

Feasibility analysis

It is a well-known fact that 100% automation is not possible. But it is also important to keep in mind that not every test case that can be automated should be automated. So we follow certain guidelines when choosing which test cases to automate. After analyzing all the test cases, we identify:

- High Risk or Business Critical test cases.

- Test cases that require repeated execution.

- Tedious test cases that require a lot of manual effort or are subject to human error.

- Test cases that are time-consuming.

- Scenarios that require a significant amount of data.

- Consistent test cases that do not undergo constant changes.

- Functionalities that are shared across applications.

- Test cases that have a lot of downtime between steps.

- Test scenarios that can be reused for cross-browser testing.

Likewise, we prefer not to automate test cases that meet any of the criteria below:

- Test cases that require exploratory or ad-hoc testing.

- Newly designed test cases that haven’t been executed at least once manually.

- Test cases that require subjective validation.

- Test cases that have low ROI.
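To make the criteria above concrete, here is a minimal Python sketch of how such a triage could be expressed. The `CandidateCase` fields, the `should_automate` function, and the `min_runs` threshold are hypothetical names and values chosen for illustration, and a real analysis would weigh more of the listed factors.

```python
from dataclasses import dataclass


@dataclass
class CandidateCase:
    """Hypothetical description of a test case being considered for automation."""
    name: str
    business_critical: bool = False
    runs_per_release: int = 1
    stable: bool = True                  # does not undergo constant changes
    executed_manually_once: bool = True
    exploratory: bool = False
    subjective_validation: bool = False


def should_automate(case: CandidateCase, min_runs: int = 3) -> bool:
    # Exclusion criteria take priority over everything else.
    if case.exploratory or case.subjective_validation:
        return False
    if not case.executed_manually_once or not case.stable:
        return False
    # Otherwise automate what is critical or repeated often enough (assumed threshold).
    return case.business_critical or case.runs_per_release >= min_runs


cases = [
    CandidateCase("checkout payment", business_critical=True),
    CandidateCase("visual look and feel", subjective_validation=True),
    CandidateCase("nightly regression login", runs_per_release=20),
]
for case in cases:
    print(case.name, "->", "automate" if should_automate(case) else "keep manual")
```

Putting the exclusion checks first mirrors the idea that a test case can be automatable and still not be worth automating.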

Automation Test Tool Selection

Selecting the right automation testing tool for your project needs can be a tricky task. Though the automation tool selection largely depends on the technology the Application Under Test (AUT) is built on, the following criteria will help you select the best tool for your requirement.

- Environment Support

- Ease of use

- Testing of Database

- Object Identification Capabilities

- Image Testing Provision

- Error Recovery Testing

- Object Mapping

- The Required Scripting Language

- Support for the different types of tests, test management features, and so on.

- Support for multiple testing frameworks

- Ease of debugging the automation software scripts

- Ability to recognize objects in any environment

- The comprehensiveness of test reports & results

- Training cost to use the tool

- Licensing & maintenance cost of the tool

- The tool’s performance and stability

- The tool’s compatibility with the different devices and operating systems. (Windows, web, mobile, and native mobile apps).

- The features of the tool you will benefit from.
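One common way to compare tools against criteria like these is a weighted scoring matrix. The sketch below is only an illustration: the tool names, the three criteria picked, the weights, and the 1 to 5 scores are all made-up assumptions, not an evaluation of any real product.

```python
def rank_tools(scores, weights):
    """Return (tool, weighted average score) pairs sorted from best to worst."""
    total_weight = sum(weights.values())
    ranked = []
    for tool, tool_scores in scores.items():
        # A criterion that was not scored for a tool counts as 0.
        weighted = sum(weights[c] * tool_scores.get(c, 0) for c in weights)
        ranked.append((tool, round(weighted / total_weight, 2)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights (higher = more important) and 1-5 scores.
weights = {"environment_support": 3, "ease_of_use": 2, "licensing_cost": 1}
scores = {
    "Tool A": {"environment_support": 5, "ease_of_use": 3, "licensing_cost": 2},
    "Tool B": {"environment_support": 3, "ease_of_use": 5, "licensing_cost": 5},
}
print(rank_tools(scores, weights))
```

The weights are where the project-specific judgment lives, so they should be agreed on before any tool is scored.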

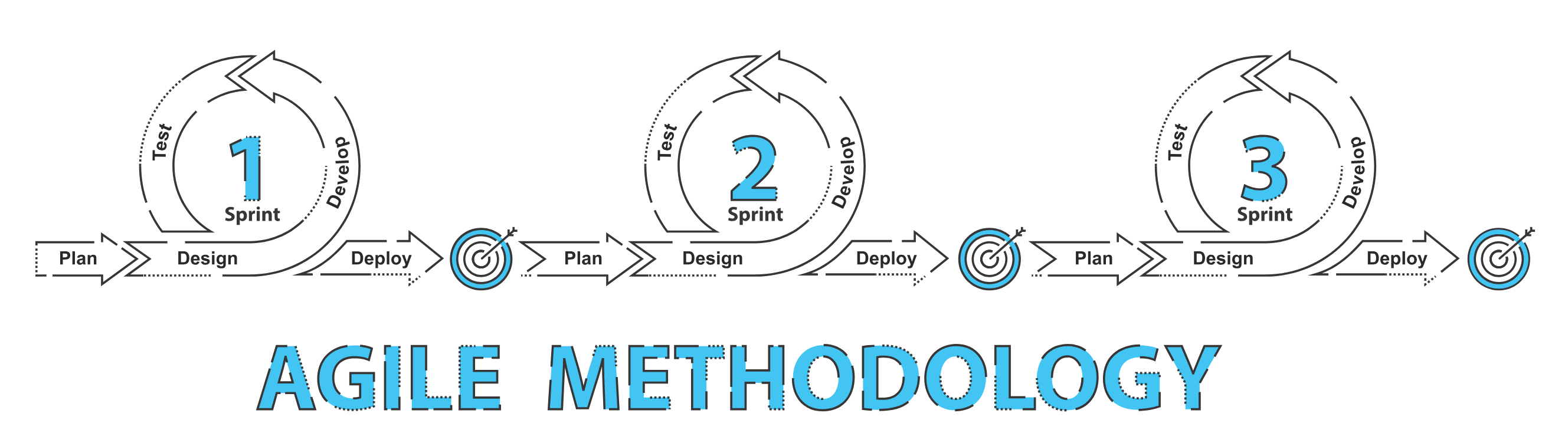

Planning, Design, and Development

Planning, design, and development are important aspects of any automation project. During this phase, we establish an automation strategy and plan that includes the following details:

1. The automation tools that have been chosen

2. The design of the framework and its features

3. The items that are in scope and out of scope for automation

4. Scripting and execution schedules and timelines

5. Automation Testing Deliverables

Test Execution

During this phase, the automation scripts are run. The scripts require input test data before they can be set to run, and they generate extensive test reports once they have been run.

The automation tool can be used directly or through the Test Management tool, which will invoke the automation tool.

Note: The execution can be done during the night, to save time.

Maintenance

The test automation maintenance approach is an automation testing phase that is used to see if the new features added to the software are functioning properly. When new automation scripts are added, they must be reviewed and maintained in order to improve the effectiveness of the automation scripts with each release cycle.

How we develop our Framework

- Define Goals

- Identify Tool

- Framework Design

- Framework Development

Choosing the right tool for your automation testing is very crucial as there are so many tools available in the market. In order to select the tools that best fit your needs, you should understand the requirements clearly and shortlist the tools that satisfy your needs. Since automation is possible for different types of testing, we have specific tools that we use based on the need.

Programming language: JAVA, C#, Python

Web Automation: Selenium

Mobile Automation: Appium, Java-Client

Desktop Automation: FlaUI, TestStack White, Coded UI, WinAppDriver…

BDD Tool: Cucumber, SpecFlow, Behave

Reporting Tool: ReportPortal, Extent Report, Allure Report

Continuous Integration tool: Jenkins

IDE for script development:

How do we Guarantee Success

Not all QA companies that claim to do automated testing will be able to attain all the benefits it has to offer. But by being experts in the field, we ensure that we follow all the standards and the best practices to attain the best results.

- 1. Use a Proper Framework structure

- 2. We Avoid Flaky Tests by

    - Tracking Failures

    - Using Appropriate Object Locators

    - Limiting Execution Duration

    - Quarantining the Flaky Tests

- 3. Follow Naming Standards

- 4. Coding Standards

- 5. Environment Standards

- 6. Bug identification

- 7. Proper report
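As an illustration of the failure tracking and quarantining mentioned in point 2, here is a minimal sketch. It assumes the run history comes from repeated executions against the same unchanged build, so a test that both passes and fails in that history is treated as flaky. The function name, the `min_runs` threshold, and the test names are hypothetical.

```python
def find_flaky_tests(history, min_runs=5):
    """history maps a test name to a list of booleans (True = passed)."""
    flaky = []
    for name, results in history.items():
        if len(results) < min_runs:
            continue  # not enough evidence yet
        # Mixed outcomes on an unchanged build suggest flakiness,
        # while a test that always fails points to a real defect.
        if any(results) and not all(results):
            flaky.append(name)
    return sorted(flaky)


history = {
    "test_login": [True, False, True, True, True],
    "test_search": [True, True, True, True, True],
    "test_checkout": [False, False, False, False, False],
}
quarantined = find_flaky_tests(history)
print("Quarantine candidates:", quarantined)
```

Tests on the quarantine list can be excluded from the gating pipeline and fixed separately, so that they do not erode trust in the rest of the suite.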

We don’t just stop once we have successfully automated various test cases as we understand that automated testing is not a replacement for manual testing. So now with the time available in our hands, we expand the test coverage by performing exploratory testing to unearth the various bugs that would usually go unnoticed.

Our Mobile-App Testing Approach

Forbes reported that there are a whopping 8.9 million mobile applications in the global market. But an average user is reported to use just 10 applications every day and a total of 30 apps every month. Calculating the odds of your app’s success in such a highly competitive market might be startling. But expecting low competition in an industry that is projected to reach $900 Billion by 2023 is not fair. Even if you are targeting a niche market, a low-quality mobile app with poor user experience has no chance of becoming a success. But with the right idea and the right people on board, you could definitely be successful. With our comprehensive mobile testing solutions, we ensure that your app doesn’t get lost in the shuffle.

The Different Types of Mobile App Testing We Do

Our approach is all about testing the mobile app in different real-world scenarios because if we fail to do that, the chances of the end-user having a good user experience while using the app become slim. So we have identified the focus points that directly impact the user experience and prioritize the tests that can help prevent such issues.

The Compatibility Issue

An application might work fine on a few devices but still struggle on other devices due to the various hardware and software differences. Back in the day, we used to have mobile phones within fixed price slabs. There were either the basic phones or the flagship smartphones, and the number of smartphone manufacturers wasn’t this high. But now the competition is higher, the number of smartphones under each price bracket has rapidly increased, and the number of smartphones that get released every year is also reaching record highs. So here is the list of tests one has to cover.

- Version Testing

- Hardware Testing

- Software Testing

- Network Testing

- Operating System Testing

- Device Testing

Owning and maintaining all the real devices to perform such tests is an impossible task. But there is a workaround.

The Real Device Solution

We prefer not to test on emulators, as they are not as reliable as real devices, and testing on real devices is the best way to go about mobile app testing. So we employ cloud-based mobile app testing solutions like BrowserStack to get access to the large variety of real devices available in the market. Getting access to real devices solves only a part of the problem, as we still cannot test the app on every device manually. So we automate the process where possible and perform product analysis to identify the critical device combinations that the target audience uses, and then test those manually.
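One simple way to pick the critical device combinations is to sort them by usage share and keep adding devices until a target coverage is reached. The sketch below assumes usage shares are available as whole percentages (for example from product analytics). The device labels, the figures, and the 80% target are made up for illustration.

```python
def critical_devices(usage_share, target_coverage=80):
    """Pick the most-used device combinations until target_coverage (%) is reached."""
    selected, covered = [], 0
    ranked = sorted(usage_share.items(), key=lambda item: item[1], reverse=True)
    for device, share in ranked:
        if covered >= target_coverage:
            break
        selected.append(device)
        covered += share
    return selected, covered


# Hypothetical usage shares in percent of the target audience.
usage = {
    "Android 12 / mid-range": 40,
    "iOS 15 / flagship": 25,
    "Android 11 / budget": 20,
    "Android 10 / budget": 10,
    "iOS 14 / older model": 5,
}
devices, coverage = critical_devices(usage)
print(devices, f"{coverage}% of users covered")
```

The selected combinations become the manual testing matrix, while the long tail is left to automated runs on the device cloud.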

Beating the competition with Performance

Though the flagship phones are the most talked-about models, mid-range and budget devices are the models most widely used by the public. So ensuring that your mobile app performs efficiently is an important step in mobile app testing. Even if your target audience uses flagship phones, it doesn’t mean your app can chew up the device’s resources. That will eventually lead to uninstallation, as users like neither an app that is slow nor an app that makes their device slow. That is why we will test your mobile app against the following KPIs.

- Load Time

- Render Time

- Frame Dropping

- Latency & Jitter

- CPU Choking

- Battery Utilization

Identifying Usability Issues

Even a small misstep in usability testing can prove to be costly, as usability issues are one of the biggest contributors to negative reviews. Any mobile app with negative reviews will face a lot of business problems, as the way users perceive the app is heavily impacted. So to avoid such issues that have lasting effects, we perform our usability tests after obtaining a lot of relevant granular data. Most importantly, our mobile app testers will test from an end-user’s point of view to get accurate results.

Knowing Your Audience

Understanding your target audience plays a big role in making the app usable. So we add a lot of context to our testing process by identifying the age demographic, geography, and needs of your target audience. We then take it a notch further by analyzing the possible usage frequency, load levels, competitors, and so on. With such data, we develop effective test cases that will definitely identify bugs prior to deployment.

Shift-Right Testing

But usability testing doesn’t end with deployment as we have to identify the features that are most-used and least-used by the end-user. By doing so, we will be able to identify if the user faces any hardship in the most widely used features and prioritize that. Though we can predict the usage scenarios and levels prior to release, it will not be enough to satisfy all the needs.

As we use Selenium and Java for our web application automation testing, we have chosen Appium as our primary mobile app automation framework. But we do not limit ourselves to just one tool or combination, as the needs of our clients vary from one client to the next. So our R&D team is always on the lookout for emerging technologies in the field to help us stay on top of all the trends. Because of the wide range of our clients, we have also worked with all the popular tools.

- Appium

- TestComplete

- Robotium

- Xamarin.UITest

- Calabash

- Ranorex Studio

- SeeTest

- EggPlant

- Apptim

- Perfecto

- Frank

- Espresso

Our API Testing Approach

A typical app has three separate layers: the user interface layer that the user interacts with, the database layer for modeling and manipulating data, and the business layer where the logical processing happens to enable all transactions between the UI and the DB. API testing is performed at the most critical layer, i.e., the business layer. So a malfunctioning API could invalidate both the UI and the DB even though they are working properly. That is why API testing is crucial when it comes to making your product successful. So let’s take a look at the different types of API testing we perform to ensure maximum quality.

Validation Testing

Before we start testing the functionalities of the API, we prefer to validate the product by performing validation testing during the final stages of the development process. It plays a crucial role as it helps us validate if the product has been developed as expected, behaves or performs the required actions in the expected fashion, and also validates the efficiency of the product. Once we have verified the product’s ability to deliver the expected results by adhering to the defined standards within the expected conditions, we go ahead and perform functional testing.

Functional testing

Functional testing is very different from validation testing: the latter only verifies that the product was developed properly, whereas functional testing involves testing specific functions in the codebase. So we check if the product functions properly within the expected parameters and also check how it handles issues when functioning beyond the expected parameters. We get the best results here by making sure that the edge cases are also covered.

Load testing

Since we have tested how functional the product is in the previous stage, we will test to see if the product performs as expected in different load conditions as well. It is usually performed after a specific unit or the entire codebase has been tested. We first establish a baseline with the number of requests to expect on a typical day and then test the product with that traffic. We then take it to the next level by testing with both the maximum expected traffic and overload traffic to ensure the functionality is not affected. It will also help in monitoring the product’s performance at both normal and peak conditions.
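The baseline, peak, and overload idea can be sketched with a small load driver. In the example below, `send_request` is a stand-in stub rather than a real HTTP call, and the request counts and concurrency are arbitrary assumptions. In practice a dedicated tool such as Apache JMeter would generate the traffic.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(send_request, total_requests, concurrency=10):
    """Fire total_requests calls concurrently and summarize failures and latency."""

    def timed_call(i):
        start = time.perf_counter()
        ok = send_request(i)
        return ok, time.perf_counter() - start

    latencies, failures = [], 0
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        for ok, elapsed in pool.map(timed_call, range(total_requests)):
            latencies.append(elapsed)
            if not ok:
                failures += 1

    latencies.sort()
    p95 = latencies[int(0.95 * (len(latencies) - 1))]
    return {"requests": total_requests, "failures": failures, "p95_seconds": p95}


def send_request(i):
    # Stub standing in for a real API call; always reports success.
    return True


# Hypothetical traffic levels: typical day, expected peak, and overload.
for label, volume in [("baseline", 100), ("peak", 500), ("overload", 1000)]:
    print(label, run_load(send_request, volume))
```

Comparing the failure count and the 95th percentile latency across the three levels shows whether functionality degrades as traffic grows.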

Runtime and error detection

The previous tests were mainly focused on the results, whereas we would test the execution of the API at this stage.

- We would monitor the runtime of the compiled code for different errors and failures.

- The code will be tested with known failure scenarios to check if the errors are detected, handled, and routed correctly.

- In addition to that, we would also look for resource leaks by providing invalid requests, or dumping an overload of commands.

Security testing

Since APIs are prone to imminent external threats, security testing is an unavoidable phase of testing that has to be done effectively. But we don’t just stop there, as we also validate the encryption methodologies, user rights management, and authorization. We also follow it up with penetration and fuzz testing to minimize the possibility of any kind of security vulnerability remaining in the product.
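Fuzz testing feeds large numbers of random or malformed inputs to the API and checks that every one of them is rejected cleanly. Here is a minimal sketch of the idea. `parse_quantity` is a hypothetical input handler invented for this example, and the convention that `ValueError` is the only acceptable rejection is an assumption.

```python
import random
import string


def parse_quantity(raw):
    """Hypothetical API input handler: accepts '1' to '1000', rejects the rest."""
    if not isinstance(raw, str) or not raw.isascii() or not raw.isdigit():
        raise ValueError("quantity must be a positive integer string")
    value = int(raw)
    if not 1 <= value <= 1000:
        raise ValueError("quantity out of range")
    return value


def fuzz(target, iterations=1000, seed=0):
    """Call target with random printable strings and collect unexpected errors."""
    rng = random.Random(seed)  # seeded so that a finding can be reproduced
    findings = []
    for _ in range(iterations):
        length = rng.randint(0, 20)
        payload = "".join(rng.choice(string.printable) for _ in range(length))
        try:
            target(payload)
        except ValueError:
            pass  # clean, expected rejection
        except Exception as exc:  # anything else is an unhandled failure
            findings.append((payload, repr(exc)))
    return findings


print("Unexpected failures:", fuzz(parse_quantity))
```

Each finding keeps the payload that triggered it, which makes the failure easy to replay while debugging.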

UI testing

Though the tests mentioned above cover the primary focus points of API testing, we always feel that testing the UI that ties into the API gives us an overview of the usability and efficiency of the front and back ends. By doing so, we make our API testing approach a holistic process that covers every factor that would contribute to the product’s overall quality.

Now that we have seen the various tests we would conduct to ensure maximum quality, let’s take a look at our API testing toolkit.

Postman – An API platform, originally released as a Google Chrome app, used for verifying and automating API testing.

- It eases the process of creating, sharing, testing, and documenting APIs for developers by allowing the users to create and save simple & complex HTTP/s requests, and read their responses as well.

- It reduces the amount of tedious work as it is extremely efficient.

REST Assured – An open-source Java library that provides a domain-specific language to facilitate and ease the testing of REST APIs.

- It is open-source, Java-based, and supports both the XML & JSON formats.

- The support for Given/When/Then notations makes the tests more readable.

- No separate code is needed for basic steps such as HTTP method, sending a request, receiving & analyzing the response, and so on.

- Since it is a Java library, it can be easily integrated with testing frameworks like JUnit & TestNG and run as part of a CI/CD pipeline.

SoapUI – The tool focuses on testing an API’s functionality in SOAP and REST APIs and web services.

Apache JMeter – An open-source tool for performing load and functional API testing.

Apigee – A cloud API testing tool from Google that can be used to perform API performance testing.

Swagger UI – An open-source tool that can be used to create a webpage that documents the defined APIs.

Katalon – An application that helps with automated UI and API testing.

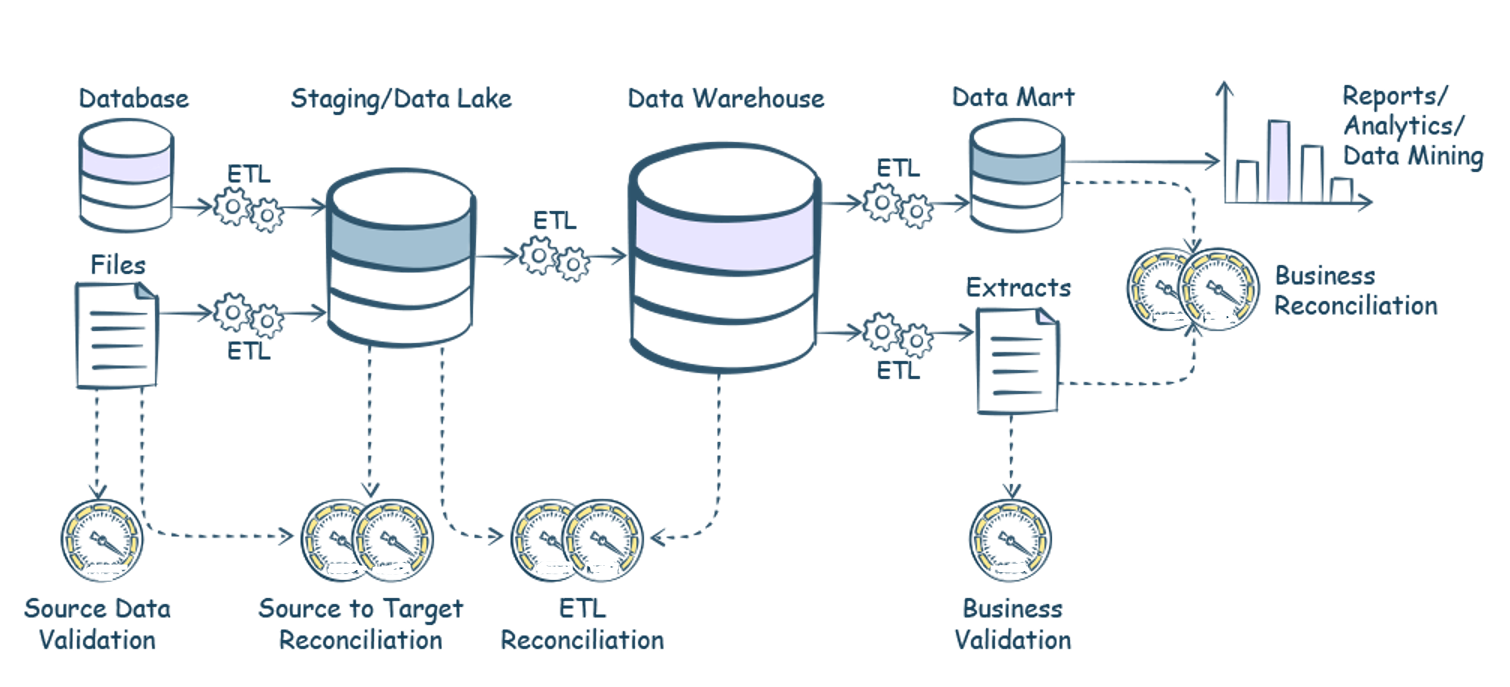

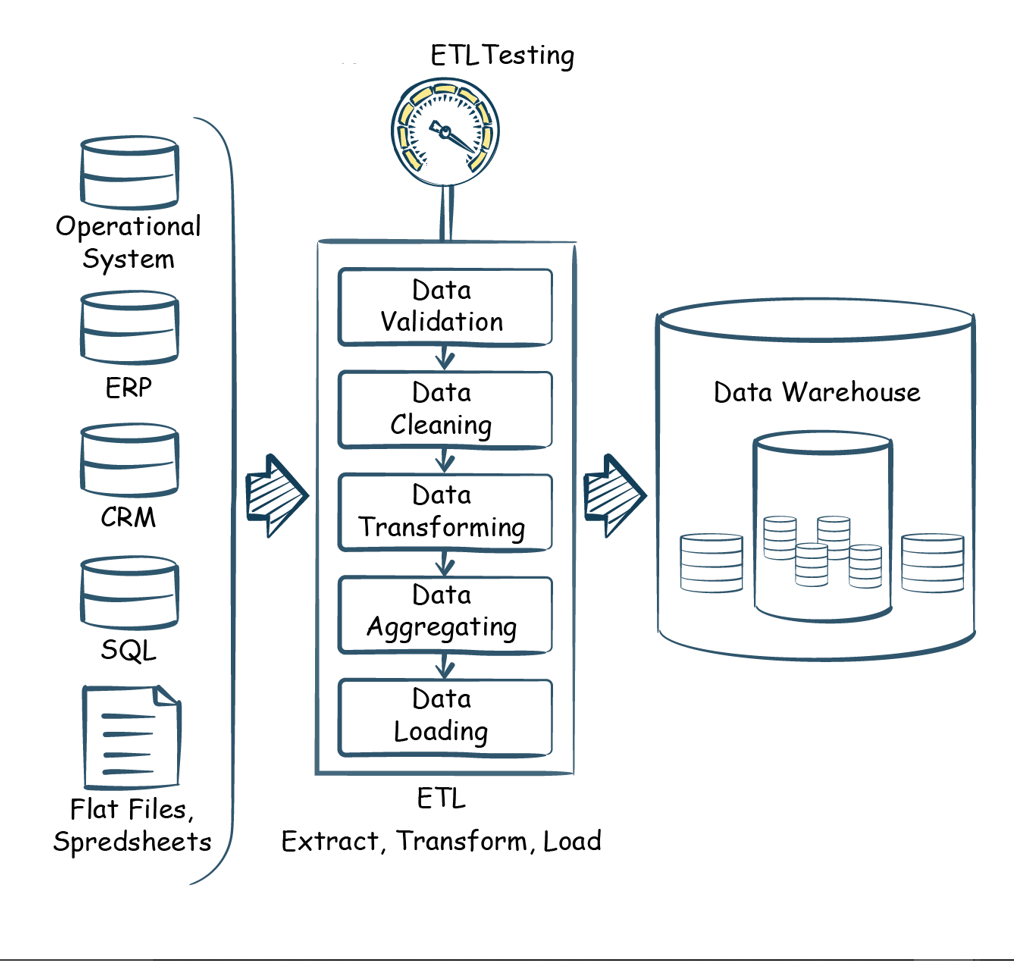

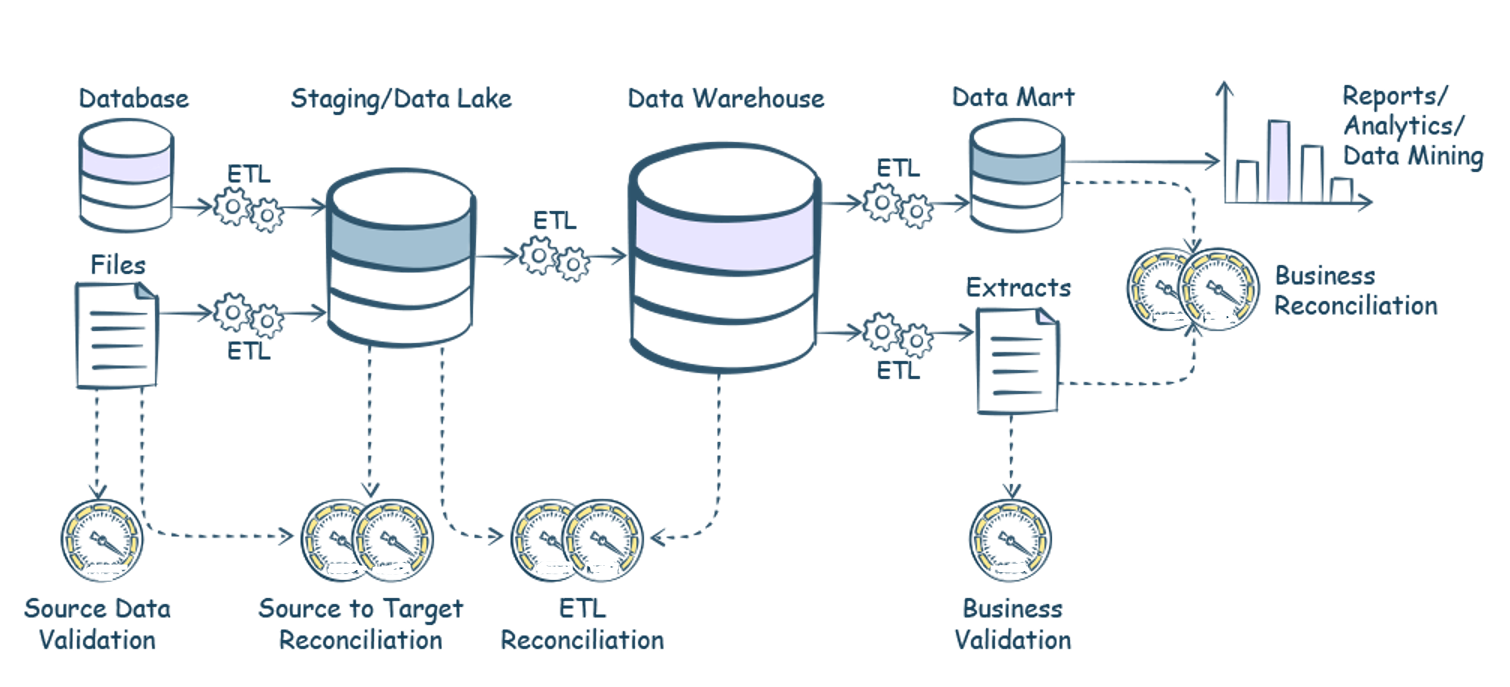

Our Data warehouse Testing Approach

Data warehouses are data management systems that generally contain large amounts of historical data that are centralized and consolidated from various sources such as applications’ log files and transactions. They even perform different queries and analyses to enable and support business intelligence activities and analytics. Since major business decisions are made based on the data from a data warehouse, data warehouse testing is of paramount importance when it comes to ensuring that the data is reliable, consistent, and accurate. According to a report by Gartner, 60% of the respondents weren’t aware of the financial loss their business faces due to bad data as they don’t even measure it. The size of the loss could be unimaginable as back in 2016, IBM reported that bad data quality is responsible for cutting $3.1 Trillion from America’s GDP.

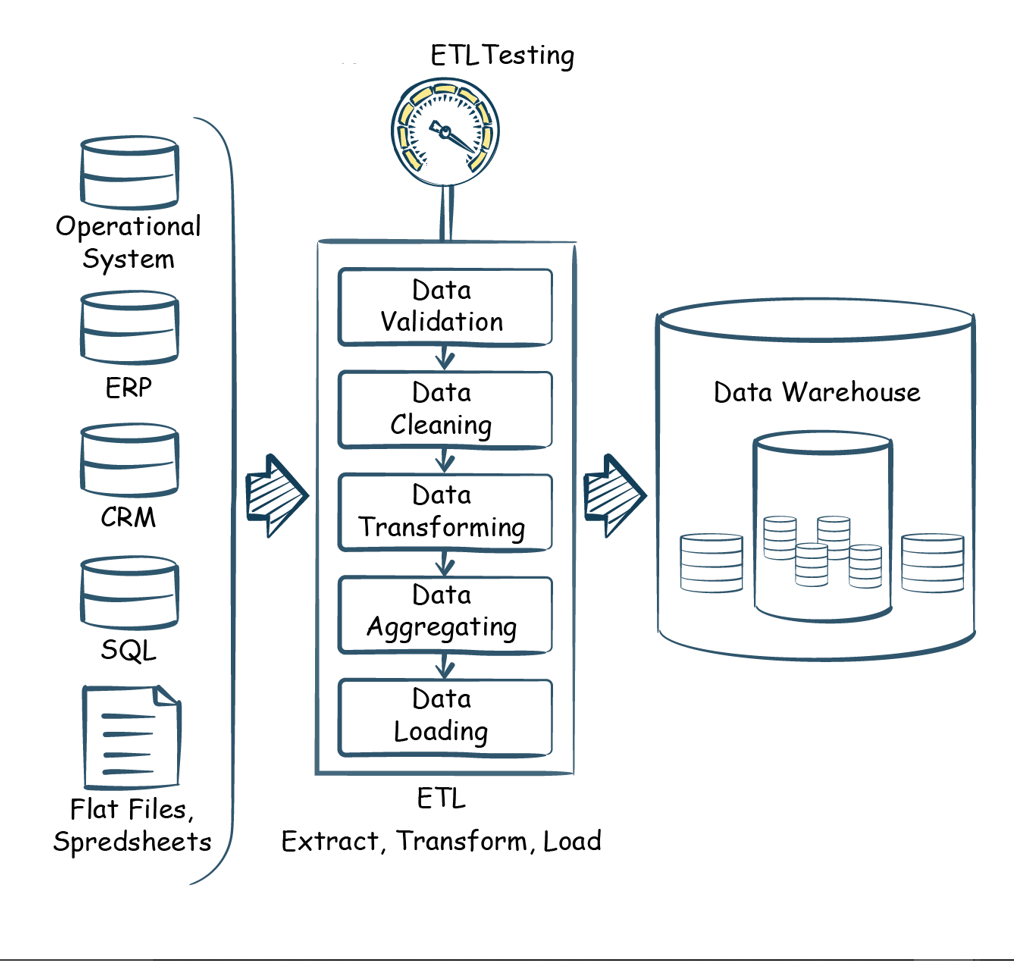

But the process of data warehouse testing is a much more intricate process than simply testing the data that land inside the warehouse from multiple sources. That is why we ensure that our data warehouse testing process addresses the complete data pipeline when the data is in flight during extract, transform, and load (ETL) operations. So instead of searching for a needle in a haystack, we validate the data at intermediate stages and identify, isolate, & resolve problem areas quickly. Our data warehouse testing also covers the equally important business intelligence (BI) reports and dashboards that run using the consolidated data as its source.

Two major concepts are relevant to data warehouses:

- OLAP – Online Analytical Processing (e.g., analytical processing like forecasting and reporting)

- OLTP – Online Transactional Processing (e.g., applications like an ATM)

The Different Types of Data Warehouse Testing we do:

As a leading QA company, we have years of experience delivering comprehensive solutions to all our clients without compromising on efficiency. So let’s take a look at the most common challenges that a QA company would have to overcome with their testing process and then move forward to see the different types of testing techniques we employ.

Challenges:

- Loss of data during the ETL process.

- Incorrect, incomplete, or duplicate data may occur while transferring data.

- Since the data warehouse system contains historical data, the data volume is generally very large and extremely complex making it hard to perform ETL testing in the target system.

- It is also difficult to generate and build test cases.

- It is tricky to perform data completeness checks for the transformed columns.

- The sample data used in production is not a true representation of all the possible business processes.

So apart from the specific data warehouse tests we will be exploring, we also perform the standard testing that almost every product needs. It includes:

- Smoke testing to determine whether the deployed build is stable.

- Integration testing for upstream & downstream processes, which includes Data Vault, Data Mart, and Data Lake testing at each stage.

- Regression testing to see if the ETL provides the same output for a defined input.

- Finally, we employ automation to speed things up.

Duplication Issues – As the name suggests, duplicate data is any record that accidentally shares the same data with another record in a database. The most common type of duplicate data is a complete carbon copy of another record. They are usually created while moving data between systems. Since a large number of files have to be checked, we employ automation scripts to perform the duplicate check.
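A duplicate check of this kind can be sketched in a few lines of Python. The example assumes the records have already been loaded as tuples, and the column layout and sample values are made up.

```python
from collections import Counter


def find_duplicates(rows):
    """Return each fully identical record that appears more than once, with its count."""
    counts = Counter(tuple(row) for row in rows)
    return {row: n for row, n in counts.items() if n > 1}


rows = [
    (1, "alice@example.com", "NY"),
    (2, "bob@example.com", "CA"),
    (1, "alice@example.com", "NY"),  # carbon copy of the first record
]
print(find_duplicates(rows))
```

To detect duplicates on a business key rather than on the whole record, the same approach works if only the key columns are placed in the tuple.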

Null values check – A null value is assigned when a value in a column is unknown or missing. It is important to keep in mind that a null value is not the same as an empty string or a zero value. So by using a NOT NULL constraint on a column, we can ensure that some value is present, and this can be verified using a null check.
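Here is a minimal sketch of a null check, assuming each record is available as a Python dictionary in which a missing value is represented by `None`. The column names and sample rows are hypothetical.

```python
def null_counts(rows, required_columns):
    """Count how many rows have a null (None) in each required column."""
    counts = {column: 0 for column in required_columns}
    for row in rows:
        for column in required_columns:
            # Only None counts as null: an empty string and 0 are real values.
            if row.get(column) is None:
                counts[column] += 1
    return counts


rows = [
    {"id": 1, "email": "alice@example.com", "balance": 0},
    {"id": 2, "email": "", "balance": None},
    {"id": 3, "email": None, "balance": 25},
]
print(null_counts(rows, ["id", "email", "balance"]))
```

Any non-zero count for a column that is supposed to be mandatory is reported as a failure.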

Pattern validation – We then test if the data in a specific column is in the defined format. For example, if the column is supposed to hold an email address, we test if the data has an ‘@’ followed by a domain name to ensure it is a valid input.
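As a sketch of pattern validation, the check below uses a regular expression. The pattern is deliberately simple: it only verifies that there is an ‘@’ followed by something that looks like a domain name, and it does not attempt to implement the full email address specification.

```python
import re

# Simplified pattern: local part, '@', then a domain containing at least one dot.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def invalid_emails(values):
    """Return the values that do not match the expected email format."""
    return [v for v in values if not (isinstance(v, str) and EMAIL_PATTERN.fullmatch(v))]


values = ["alice@example.com", "bob.example.com", "carol@example", "dave@mail.example.org"]
print(invalid_emails(values))
```

The same function shape works for any column format by swapping in a different pattern.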

Length validation – Similar to the above process, we also test if the length of the data in a particular column is as expected. For example, if the data is supposed to be a US mobile number, then we will test if it has 10 digits (excluding the country code). It should be neither shorter nor longer than that for the data to be valid.
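A length check can be kept generic by passing the expected length in as a parameter. The sketch below uses a hypothetical column of five-character ZIP codes as sample data, and the function name is our own.

```python
def invalid_lengths(values, expected_length):
    """Return the values whose length differs from expected_length (None is invalid)."""
    return [v for v in values if v is None or len(str(v)) != expected_length]


zip_codes = ["10001", "9410", "606011", None, "73301"]
print(invalid_lengths(zip_codes, expected_length=5))
```

Values are converted with `str` before measuring, so numeric columns need to be read as text if leading zeros matter.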

Data completeness check – As mentioned earlier, making crucial business decisions with incomplete data can prove to be very costly. So with our data warehouse testing, we ensure that the data is complete without any gaps or missing information. We can identify such missing data by comparing the source and target tables.
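Comparing source and target can be sketched as a set difference on the record keys. The example assumes each table exposes a unique key per record, and the key values shown are made up.

```python
def completeness_report(source_keys, target_keys):
    """Compare record keys between the source and target tables."""
    source, target = set(source_keys), set(target_keys)
    return {
        "missing_in_target": sorted(source - target),
        "unexpected_in_target": sorted(target - source),
    }


source_ids = [101, 102, 103, 104]
target_ids = [101, 103, 104, 999]
print(completeness_report(source_ids, target_ids))
```

Keys missing in the target indicate data lost during the load, while unexpected keys in the target usually point to stale or wrongly routed records.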

Table & Column availability check – We also validate if tables and columns are present and available after any changes have been made in the build.
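The sketch below shows the idea with an in-memory SQLite database standing in for the warehouse, which is an assumption made purely to keep the example self-contained. The table and column names are hypothetical, and on a real warehouse the same check would typically query its metadata catalog instead.

```python
import sqlite3


def missing_columns(conn, expected):
    """Return {table: [absent columns]} for every expected table that is incomplete."""
    missing = {}
    for table, columns in expected.items():
        exists = conn.execute(
            "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
        ).fetchone()
        if exists is None:
            missing[table] = sorted(columns)  # the whole table is absent
            continue
        # PRAGMA does not accept bound parameters; the name was just confirmed
        # to exist in sqlite_master, so interpolating it is acceptable here.
        present = {row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')}
        absent = sorted(set(columns) - present)
        if absent:
            missing[table] = absent
    return missing


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
expected = {"customers": ["id", "email", "phone"], "orders": ["id", "customer_id"]}
print(missing_columns(conn, expected))
```

Running this right after each build catches schema drift before any data-level check is attempted.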

Fill Rate check – Fill rate can be calculated by dividing the total number of filled entries in a column by the total number of rows in the table. It is instrumental in determining the completion level: if a form requires 10 different pieces of data to be complete, the fill rate tells us how much of it has been completed.
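The fill rate calculation translates directly into code. This sketch assumes rows are dictionaries and treats both `None` and an empty string as unfilled, which is a choice made for the example. The column names are hypothetical.

```python
def fill_rate(rows, column):
    """Fraction of rows in which the given column holds a value."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(column) not in (None, ""))
    return filled / len(rows)


rows = [
    {"name": "Alice", "phone": "5550100"},
    {"name": "Bob", "phone": None},
    {"name": "Carol", "phone": "5550102"},
    {"name": "Dave", "phone": "5550103"},
]
for column in ("name", "phone"):
    print(column, f"{fill_rate(rows, column):.0%}")
```

Tracking this figure per column across loads makes a sudden drop in completeness easy to spot.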

Referential integrity check – Referential integrity is primarily about the relationship between tables as each table in a database will have a primary key, and this primary key can appear in other tables because of its relationship to the data within those tables. When a primary key from one table appears in another table, it is called a foreign key. Primarily, we test if the data in the child are present in the parent table and vice versa. In addition to that, we check for deviations and if there are any, we identify how much data is missing.
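A referential integrity check in both directions can be sketched with two set differences. The table contents and the `id` and `customer_id` column names below are hypothetical.

```python
def referential_integrity_report(parent_rows, child_rows, primary_key, foreign_key):
    """Find child keys with no parent and parent keys never referenced by a child."""
    parent_keys = {row[primary_key] for row in parent_rows}
    child_keys = {row[foreign_key] for row in child_rows}
    return {
        "orphaned_children": sorted(child_keys - parent_keys),
        "parents_without_children": sorted(parent_keys - child_keys),
    }


customers = [{"id": 1}, {"id": 2}, {"id": 3}]
orders = [{"order_id": 10, "customer_id": 1}, {"order_id": 11, "customer_id": 4}]
print(referential_integrity_report(customers, orders, "id", "customer_id"))
```

The size of each list also gives the deviation count, that is, how much data is missing on either side of the relationship.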

We primarily use Python for creating the automation scripts that perform the above-mentioned checks, as its built-in data structures, dynamic typing, and dynamic binding make it a great choice. We use the Behave and Lemoncheesecake (LCC) frameworks for our testing. There are numerous tools in the market for this kind of testing, and we use the following among them:

- Data Build Tool (dbt) – An automation tool that is very effective when it comes to reducing dependencies. It is our trusted choice when it comes to transforming data in the warehouse.

- Jenkins – An open-source tool that is instrumental in helping us achieve true automation by scheduling test execution and report generation.

- Looker – It aids in creating customized applications that help us with our workflow optimization.

- Snowflake – A SaaS option that enables data storage and processing to create faster, flexible, and easy-to-use analytical solutions.

- JIRA – Since we follow Agile methodology, JIRA is our go-to project management tool.

- Airflow – A platform that helps us create and run workflows to identify dependencies.

- AWS services (S3 bucket, Lambda) – We use AWS to run our code, manage data, and integrate applications by avoiding the hassle of managing servers.

- GCP (Google Cloud Platform) – We use BigQuery, Google Tag Manager, and Google Analytics to manage data, determine measurement codes, and identify the latest data trends.

- Git Stash – It temporarily shelves uncommitted local changes, which lets us revert to the prior commit state if needed. The stashed changes also stay local, so they are not seen by the other developers who share the same Git repository.

Our Accessibility Testing Approach

Before we proceed to see how, let’s first focus on the why. Accessibility Testing is a commonly overlooked type of testing that has a lot of importance. According to a recent report from WHO, about 15% of the entire world population has at least one form of disability. That adds up to more than 1 billion people. So if your product isn’t accessible, you’re not just alienating such a large group of people, you are also denying their right to information. All this makes Accessibility testing the need of the hour.

The Guidelines we Follow

Apart from the various advantages it has to offer, did you know that lack of accessibility compliance can result in you getting sued? Though WCAG is the universally recognized guideline, there are certain variations depending on the region you are from or the region your website is accessed from.

Our conclusive accessibility testing services ensure that our client’s products are safe across the globe as we are experts in the various web accessibility guidelines such as

- WCAG (Web Content Accessibility Guidelines) – Published by the World Wide Web Consortium (W3C) and globally recognized.

- Section 508 – Followed in the United States of America for federal government agencies and services.

- The Web Accessibility Directive – Created by the European Union based on WCAG 2.0 with a few additional provisions as well.

The Different WCAG Compliance Levels

The level of compliance you target to achieve will also be an important factor as it determines the impact it will have on your product’s design and structure. Since partial compliance is not accepted by WCAG, it will also be helpful in planning out the work that has to be done.

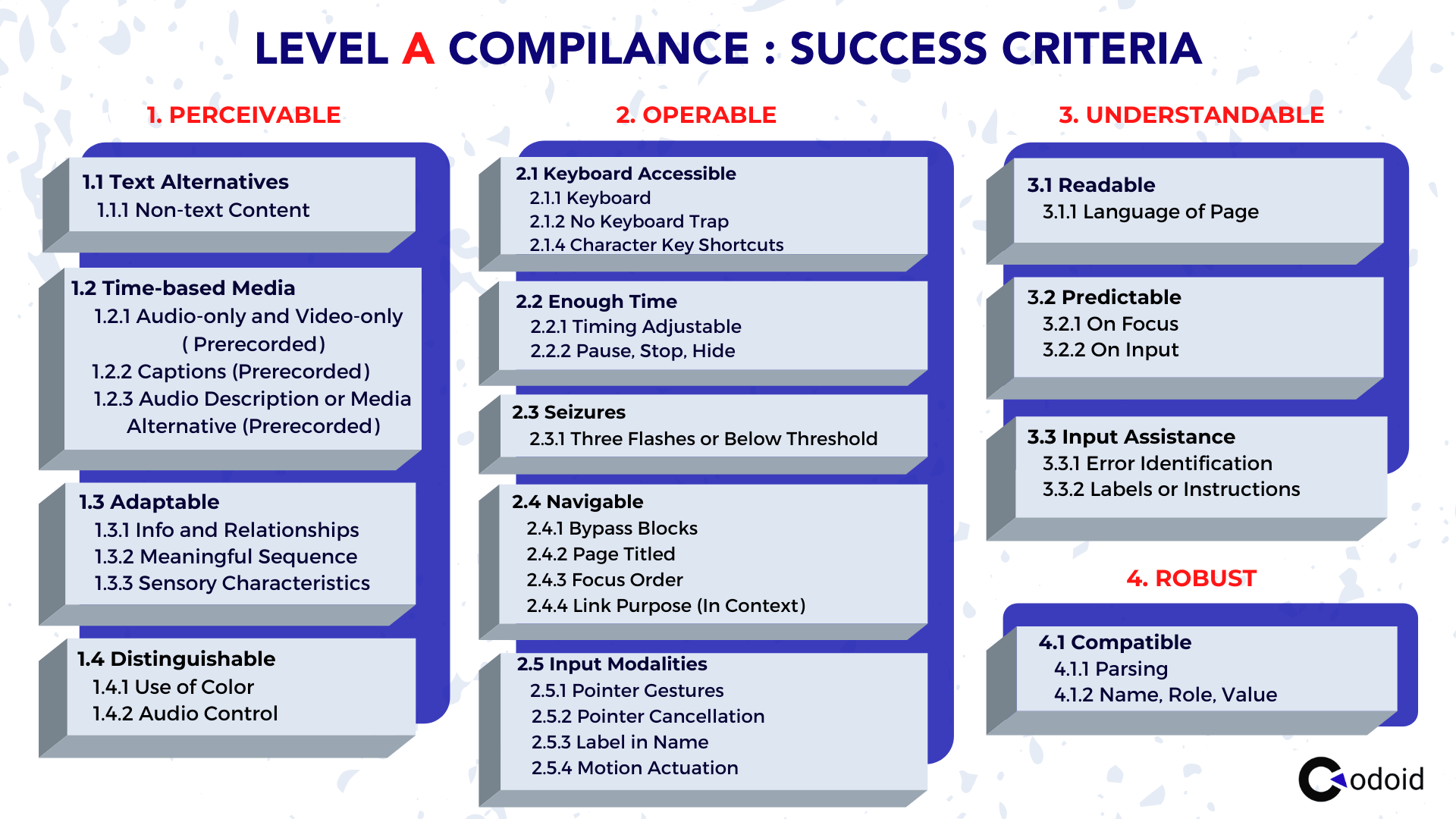

A – This is the most basic level of compliance and has a total of 30 success criteria in it. The primary objective of this level of compliance is to help people with disabilities understand and access the content. If this level of compliance is met, screen readers will have enough information to function at a minimal level, the content will be safer for people prone to seizures, and so on. It is worth mentioning that it will also enhance the user experience of people without any disabilities. We have listed a few focus points from this level of compliance to help you get a better understanding.

- Alternative text for the image

- No Keyboard Traps

- Keyboard Navigations

- Captions and Audio Descriptions for videos
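The first focus point, alternative text for images, lends itself to a simple automated check. Here is a minimal sketch using Python’s standard `html.parser` module. It flags only images with no `alt` attribute at all, since an empty `alt=""` is a legitimate way to mark a decorative image. The class name, function name, and sample markup are our own.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            self.missing.append(attributes.get("src", "<no src>"))


def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


page = (
    '<img src="logo.png" alt="Company logo">'
    '<img src="chart.png">'
    '<img src="divider.png" alt="">'
)
print(images_missing_alt(page))
```

An automated check like this only proves that alternative text exists. Whether the text is meaningful still has to be judged by a tester.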

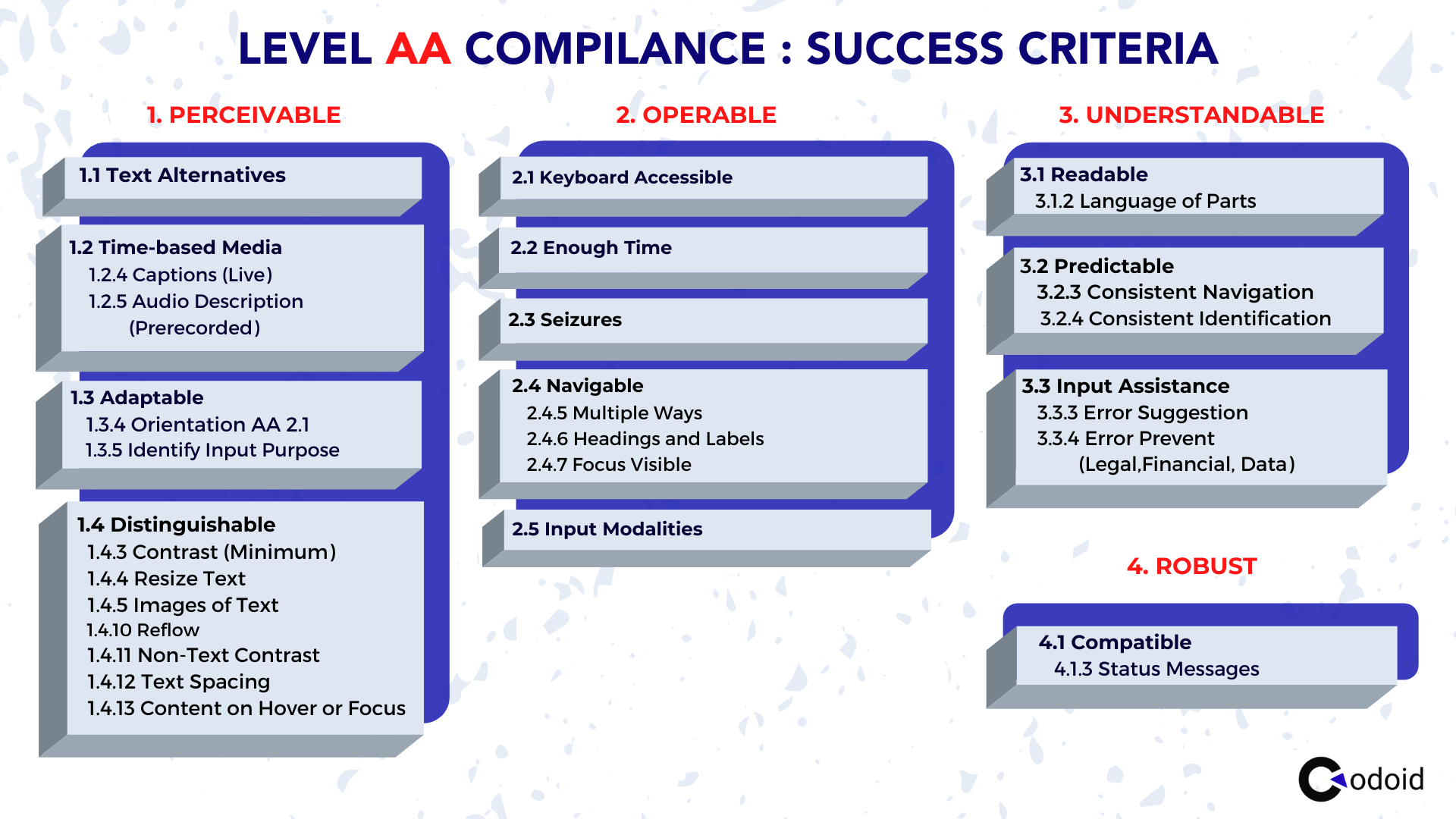

AA – Since each lower level of compliance is a subset of the next higher level, the product would have to meet 20 additional criteria once the first 30 success criteria of the Level A compliance are met. This is the level of compliance that most businesses tend to target, as it makes the product compatible with most of the assistive technology that helps people with disabilities access your content. Though this level of compliance would not make much of an impact for regular users, it will be very helpful for users with disabilities, as the basic level of user experience provided in the previous level will be enhanced. It goes beyond complete blindness to make the product accessible to people with other disabilities such as partial blindness. Here are a few focus points from this level that will help you get a clear picture.

- Heading order

- Color contrast

- Resize and Reflow

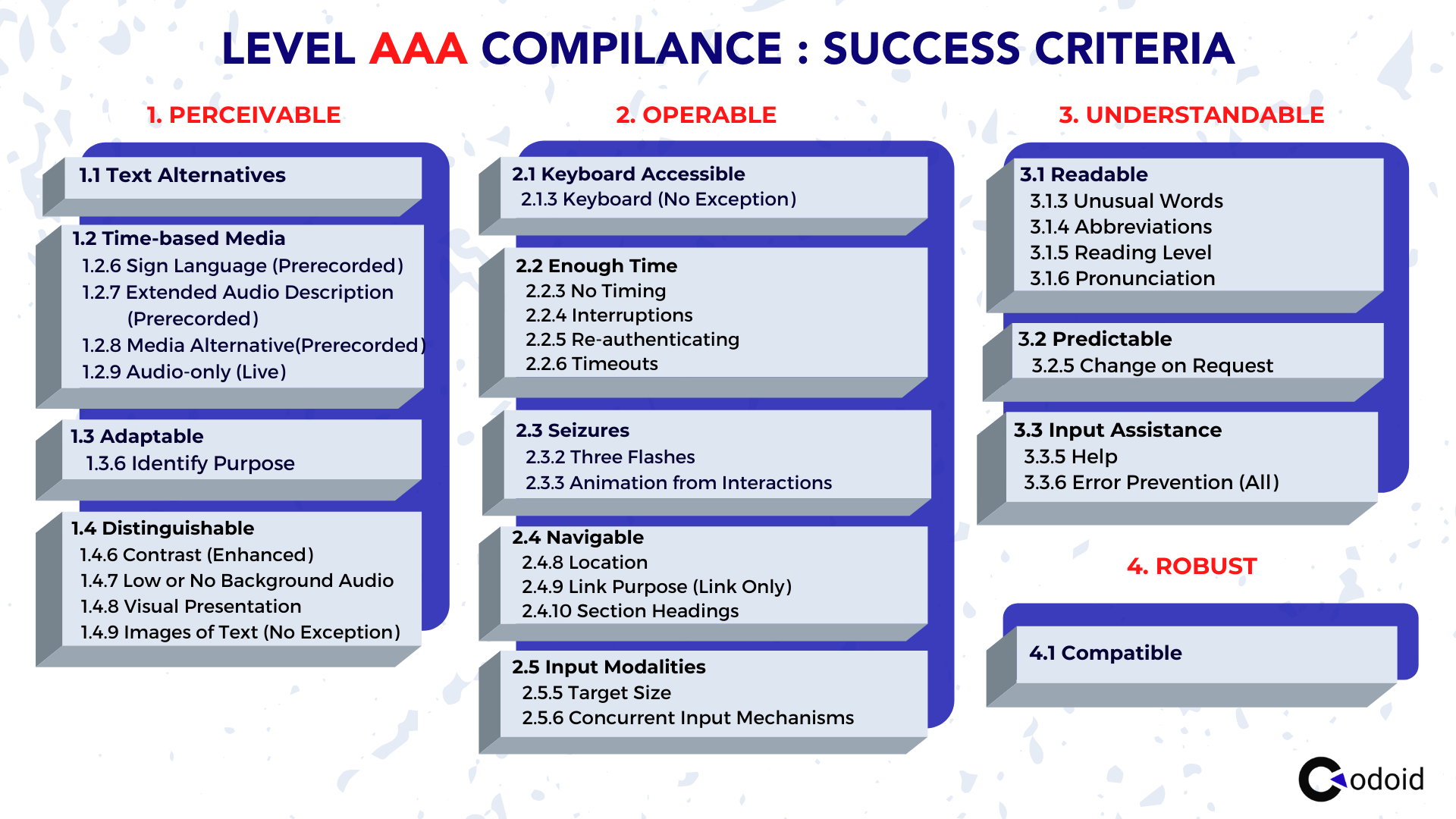

AAA – If you have the time and resources to make your product the best it can be, AAA level of compliance will be the way to go. The product would have to meet 28 additional success criteria after the first 50 criteria have been successfully met. For example, AAA compliance will be a great choice if you are looking to develop a specialist-level website. A few focus points for this level of compliance are as follows:

- Sign language Implementation

- Abbreviation explanations

- Pronunciation of words.

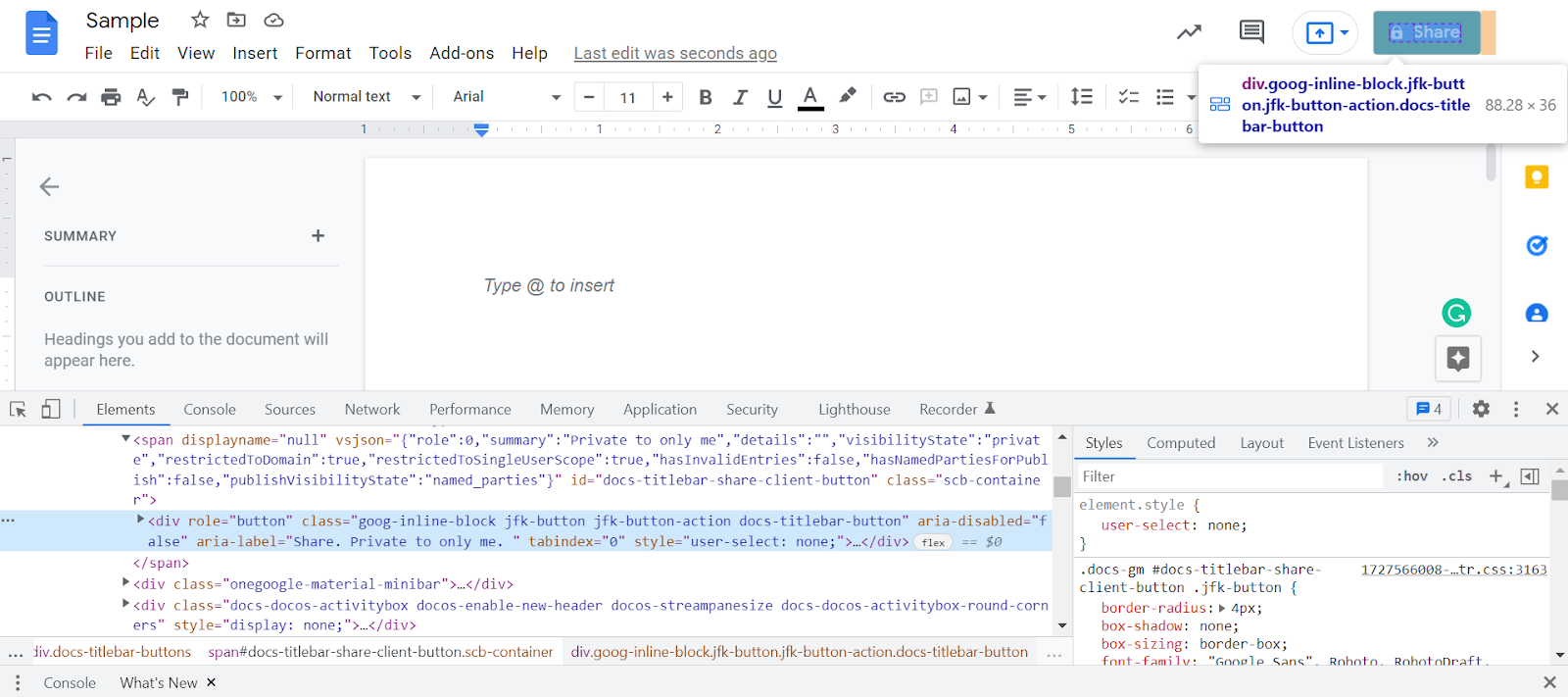

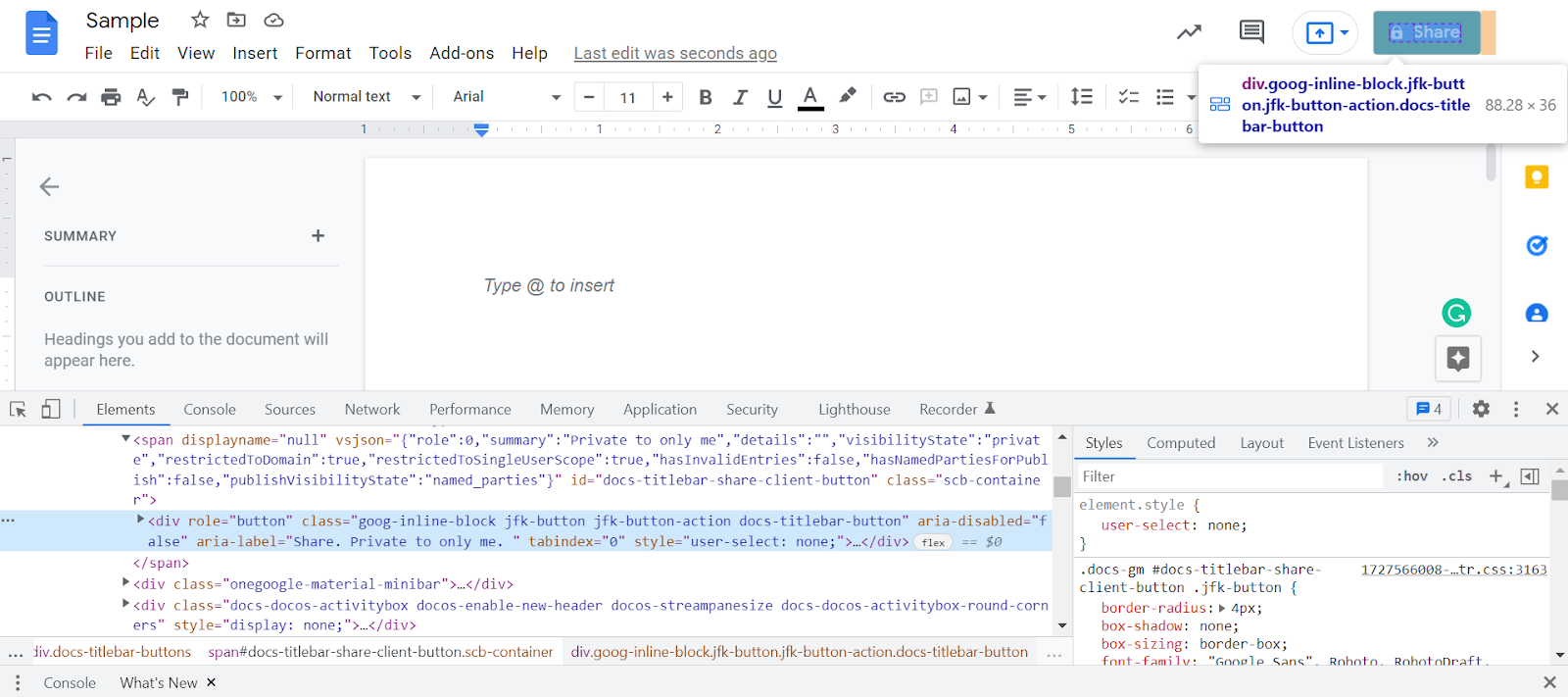

Accessibility Testing with ARIA

Being true to its name, ARIA (Accessible Rich Internet Applications) plays a very important role in making web pages accessible. For rich, interactive web pages it is very hard to achieve accessibility without ARIA, as it adds vital additional information to elements that a screen reader will need to function smoothly. But the presence of ARIA alone does not guarantee accessibility, as the correct roles also have to be assigned to the respective web elements.

For example, if you take a look at the above screenshot of a sample document in Google Docs, it is clear that it is the ARIA label that provides critical information to the screenreader beyond just the share button’s functionality. It even includes the information of who can access it. In this case, it is a document shared only with the user. So if you decide to share the document with only a specific set of people, then that information will be listed in the ARIA label to give a better user experience for people with disabilities. That is why we have made sure our accessibility testers are highly knowledgeable in all the concepts of ARIA.

Accessibility Testing Using Appropriate Personas

Our accessibility testers have been trained with different disability personas so that they will be able to effectively understand the difficulties and pain points a person with any form of disability might face. We achieved this by having our accessibility testers create different personas for different disabilities. Once we had about 50 different personas, we made them exchange personas with each other and had them use the application or web page we wanted to test by using that persona. Here’s a sample persona we created during one of our projects.

Sample Persona

Riya is a visually impaired child in the 4th grade. Despite the disability, she is highly motivated and eager to learn mathematical calculations. Since Riya can hear well, she uses a screen reader to access information on the web in audio form. So her teacher has provided an online learning portal that Riya can use to learn mathematical calculations with the aid of a screen reader. In this situation, if we were to test the online learning portal, we would follow the checklist below.

Keyboard Accessibility

Visually challenged people such as Riya can only navigate the page using the keyboard as using the mouse requires visual feedback. So we ensure that the pages with interactive elements are accessible using tab, and plain text & image contents are accessible using the down arrow key.

Labels

The links should have descriptive anchor text or labels, as a user who relies on a screen reader will not have enough context to decide whether to follow a link when vague anchor text such as ‘Click Here’ is used.

Alt text

We test if all the images have descriptive alternative texts. If the alt text isn’t sufficient to convey the entire context of the image, we check if long descriptions have been used properly. When a term like ‘Apple’ could be understood as either the tech company or the fruit, it is vital to check that the context has been made clear to avoid any misconceptions.

Headings

The page should have proper headings in the correct order as people like Riya will generally navigate through the webpage using headings. Apart from navigational issues, improper headings might even confuse her when being read out by the screen reader.

Titles for every page

The title of each module will be tested as it will be helpful for Riya to bookmark them, and navigate across modules in different tabs.

Audio description for Video

Like alt text for images, videos can use closed captions to convey the verbal context of the video. But what about the non-verbal visual aspects of the video that might play a vital role? That is why we ensure the audio description covers every action, activity, silence, animation, expression, and facial expression. While the audio description is read out, the paused screen should be clearly visible and no text, image, or element should appear blurred.

Though there are automation options for accessibility testing, we find that their cons outweigh the pros when we compare automated accessibility testing with manual accessibility testing. So let’s take a look at the various tools that our teams employ for various accessibility testing purposes.

The tools we select to perform accessibility testing differ from the regular tool selection process, as some of these tools are also used by people with disabilities to access the content. For example, screen readers are used by visually challenged people. So we have trained our employees on the prominent screen readers used across different platforms to deliver conclusive solutions.

- NVDA and JAWS for Windows.

- VoiceOver for macOS and iOS.

- TalkBack for Android.

- Orca for Linux.

There are also other tools that we use to ensure that all guidelines are met during accessibility testing.

Color Contrast Analyzer – One of the most widely recognized color contrast analyzing tools that can be used to check the contrast level between the used color schemes for elements such as background and foreground.

ARC Toolkit – A browser extension used to identify accessibility issues such as heading order, forms, ARIA, links, and so on that come under WCAG 2.0, WCAG 2.1, EN 301 549, and Section 508.

axe DevTools – A browser extension similar to the ARC Toolkit that can be used to identify the above-mentioned accessibility issues. But it is usually faster and more accurate in comparison.

WAVE – A highly reputable web accessibility evaluation tool developed by WebAIM to provide visual feedback about the accessibility issues of your web content with the help of indicators.

Bookmarklets – We also use various JavaScript-based Bookmarklets if we wish to highlight any specific roles, states, or properties of the accessibility elements on the page. They are also visual feedback tools like WAVE, but we can use different options based on our needs.
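The contrast check performed by a tool like the Color Contrast Analyzer can be sketched in a few lines. The snippet below is a minimal Python illustration, not the tool’s actual implementation. It assumes colors are given as six-digit hex strings and uses the relative luminance and contrast ratio formulas defined in WCAG 2.x, together with the Level AA thresholds of 4.5:1 for normal text and 3:1 for large text. All function names are hypothetical.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Level AA asks for at least 4.5:1, or 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)
```

For instance, black text on a white background reaches the maximum ratio of 21:1, while `#777777` text on white falls just short of the 4.5:1 threshold for normal text.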

Our Case Study

We were tasked with a mammoth job of testing over 25,000 pages across 150+ assessments in an LMS for one of our Accessibility testing projects. Since we had a very short deadline to meet, the conventional accessibility testing approach wouldn’t have been enough. So we came up with the solution of testing the LMS platform features to identify the bugs that will be common in all the 25,000 pages of the assessment and have them fixed before starting our one-by-one approach. By doing so, we saved a lot of time by avoiding redundancy in the testing and bug reporting processes.

For example, if one radio button in one assessment did not meet the accessibility guidelines we were testing it against, then it is obvious that the other radio buttons in the other tests will also fail. But we wouldn’t have to raise the repeating issue over and over again as the initial phase of platform testing would have helped identify such issues. Fixing those issues was also easy as there was an existing template for the radio button component that was used throughout the assessment. So once the template was fixed, all its instances were also fixed. That is how we won the race against time and impressed our clients.
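The idea of reporting a template-level defect once, instead of once per page, can be illustrated with a small grouping step. This is only a sketch of the principle, not the tooling used in the project. The record fields (`page`, `component`, `rule`) and the sample values in the usage note are hypothetical.

```python
from collections import defaultdict


def group_findings(findings):
    """Group raw findings by (component, rule) so each defect is reported once.

    findings: iterable of dicts with 'page', 'component', and 'rule' keys.
    Returns {(component, rule): sorted list of affected pages}.
    """
    grouped = defaultdict(set)
    for finding in findings:
        grouped[(finding["component"], finding["rule"])].add(finding["page"])
    return {key: sorted(pages) for key, pages in grouped.items()}
```

Two findings for the same radio button component failing the same rule on different pages collapse into a single entry that lists both pages, which is the entry a tester would raise against the shared template.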

Benefits of QA outsourcing

Stay Focused

Having a dedicated team for Quality Assurance enables you to focus more on the core business needs and lets the quality assurance team ensure that your product meets the defined expectations without any deviations.

A Wider Talent Pool

You will be able to surpass boundaries and truly find the best of the best to test your product. So with that much expertise at your disposal, you can ensure that your app reaches the market in no time.

Fast and Successful Rollout

Yes, it is important to reach the market as early as possible. But it is also vital to have a winning product in your hands as a bad first impression can severely impact the perception of your product.

Reduced Costs and Better ROI

Offshore outsourcing can offer great returns on investment as labor costs are significantly lower in the popular outsourcing countries than in countries like the USA. Apart from the better ROI in the long run, you can reduce the costs of the infrastructure, resources, and talent that you would otherwise need to perform software testing.

Hassle-free

You can hire teams based on your needs without having to maintain your own in-house team and all the resources that they will need to ensure optimum quality. Be it a one-time requirement or a long-term partnership, you can get what you want without any hassle or risk.

by Arthur Williams | Apr 29, 2022 | Accessibility Testing, Blog, Top Picks |

As of 2022, the internet has a whopping 4.95 billion users globally, i.e., 62.5% of the entire global population is on the internet. There are various reasons for us to believe that the internet is one of the best innovations of this century as it has reshaped the course of our lives by providing us with access to information at our fingertips. But the question to be asked here is if this access is universal to all of us. Truth be told, no. There are about 1 billion people with at least one form of disability. Web accessibility is the way to ensure that the basic right to information is fulfilled for these 1 billion people, and accessibility testing is the key to ensuring it.

Accessibility testing is definitely very different from regular testing types. Its unique nature might even instill fear in various testers to even try to learn about accessibility testing. Being a leading web-accessibility testing service provider, we have years of experience in this domain. Our dedicated team of testers has mastered the accessibility testing process to not only comply with the various guidelines but to also ensure access and a great user experience for people with disabilities. So in this blog, we will be extensively covering the concepts you will need to know to perform accessibility testing, how web accessibility is measured, the various steps involved in accessibility testing, and much more. Before we head to all that, let’s first clear the air from any and all misconceptions by debunking the myths surrounding accessibility testing.

Debunking the Popular Accessibility Myths

The most common accessibility myth is that web accessibility testing is expensive, time-consuming, hard to implement, and that it is not necessary as only a few people suffer from disabilities. But the reality is very different from these assumptions. So let’s take a look at other such accessibility myths and debunk them.

Accessibility is beneficial only to a very few people

15% of the entire world population suffers from at least one form of disability. So assuming that over a billion people need not access your website or app is definitely not a logical decision, even from a business perspective.

Accessibility is time-consuming & hard to implement

Talking about the business perspective, we can say without any doubt that accessibility testing is not time-consuming or hard to implement. If you have a capable accessibility testing team such as us, you will be able to ensure that your product can reach and be used by a wider audience.

Accessibility makes sites ugly

This started out as a real challenge during the early days of the internet. It is now an accessibility myth as many of the limitations of the past are no longer a problem. So this should no longer be an excuse to keep your site inaccessible, as no WCAG guideline prevents us from using multimedia options such as images and videos. Moreover, there are different compliance levels that one can target. So attaining even the lowest level of compliance will at least ensure most users get access to your content.

Accessibility is only optional

Legally, accessibility is not optional for a website or app in many parts of the world. Since we primarily build our products to work on a global scale, it is highly recommended to keep your product accessible so you can stay out of legal trouble.

Accessibility provides no other added value

This accessibility myth is far from reality. The efforts we take to make the site accessible can greatly enhance the overall user experience for all users and even improve your SEO performance, as descriptive anchor text, alt text, and titles make it easier for Google to crawl your site.

Accessibility Laws Around the World

| S. No | COUNTRY / REGION | LAW NAME | YEAR OF IMPLEMENTATION | SCOPE | WCAG VERSION |
| --- | --- | --- | --- | --- | --- |
| 1 | AUSTRALIA | Disability Discrimination Act 1992 | 1992 | Public Sector, Private Sector | WCAG 2.0 |
| 2 | CANADA | Canadian Human Rights Act | 1985 | Public Sector, Private Sector | WCAG 2.0 |
| 3 | CHINA | Law On The Protection Of Persons With Disabilities 1990 | 2008 | Public Sector, Private Sector | None |
| 4 | DENMARK | Agreement On The Use Of Open Standards For Software In The Public Sector, October 2007 (Danish) | 2007 | Public Sector | WCAG 2.0 |
| 5 | EUROPEAN UNION | Web And Mobile Accessibility Directive | 2016 | Public Sector | WCAG 2.0 |
| 6 | FINLAND | Act On Electronic Services And Communication In The Public Sector | 2003 | Government | None |
| 7 | FRANCE | Law N° 2005-102 Of February 11, 2005 For Equal Rights And Opportunities, Participation And Citizenship Of People With Disabilities (French) | 2005 | Public Sector | None |
| 8 | GERMANY | Act On Equal Opportunities For Persons With Disabilities (Disability Equality Act – BGG) (German) | 2002 | Public Sector, Private Sector | None |
| 9 | HONG KONG (HKSAR) | Guidelines On Dissemination Of Information Through Government Websites | 1999 | Government | WCAG 2.0 |
| 10 | INDIA | Rights Of Persons With Disabilities Act, 2016 (RPD) | 2016 | Public Sector, Private Sector | None |
| 11 | IRELAND | The Disability Act, 2005 | 2005 | Public Sector | WCAG 2.0 |
| 12 | ISRAEL | Equal Rights Of Persons With Disabilities Act, As Amended | 1998 | Public Sector, Private Sector | WCAG 2.0 |
| 13 | ITALY | Law 9 January 2004, N. 4 “Provisions To Facilitate The Access Of Disabled People To IT Tools” (Stanca Law) (Italian) | 2004 | Public Sector, Government | WCAG 2.0 |
| 14 | JAPAN | Basic Act On The Formation Of An Advanced Information And Telecommunications Network Society | 2000 | Public Sector, Private Sector | None |
| 15 | NETHERLANDS | Procurement Act 2012 (Dutch) | 2016 | Government | WCAG 2.0 |
| 16 | NORWAY | Regulations On Universal Design Of Information And Communication Technology (ICT) Solutions (Norwegian) | 2013 | Public Sector, Private Sector | WCAG 2.0 |
| 17 | REPUBLIC OF KOREA | Act On Prohibition Of Discrimination Against Persons With Disabilities And Remedy For Rights, Etc. (Korean) | 2008 | Public Sector, Private Sector | WCAG 2.0 |
| 18 | SWEDEN | Discrimination Act (2008:567) | 2008 | Public Sector, Private Sector | None |
| 19 | SWITZERLAND | Federal Law On The Elimination Of Inequalities Affecting People With Disabilities (French) | 2002 | Public Sector, Private Sector | WCAG 2.0 |
| 20 | TAIWAN | Website Accessibility Guidelines Version 2.0 (Chinese) | 2017 | Public Sector | WCAG 2.0 |
| 21 | UNITED KINGDOM | Equality Act 2010 | 2010 | Public Sector, Private Sector | WCAG 2.0 |
| 22 | UNITED STATES | Section 508 Of The US Rehabilitation Act Of 1973, As Amended | 1998 | Government | WCAG 2.0 |

Accessibility Metrics: How is Web Accessibility Measured?

Though there are different laws that are followed around the world, a majority of them are based on WCAG (Web Content Accessibility Guidelines). W3C (World Wide Web Consortium) developed WCAG and published it for the first time in 1999 in an effort to ensure accessibility on the internet. When it comes to accessibility metrics, web accessibility can be measured using three conformance levels, namely Level A, Level AA, and Level AAA. These 3 WCAG Compliance Levels have numerous success criteria that ensure accessibility to people with various disabilities.

Understanding the 3 WCAG Compliance Levels:

W3C developed these compliance levels with 4 primary guiding principles in mind. These 4 principles are commonly referred to with the acronym POUR.

- Perceivable

- Operable

- Understandable

- Robust

The perceivable, operable, and understandable principles are pretty self-explanatory as every user should be able to perceive the content, operate or navigate through it, and understand it as well. Robust focuses on ensuring that the content can be interpreted reliably by a wide variety of browsers, devices, and assistive technologies, so that users can do all three regardless of the technology they use. WCAG was first introduced in 1999 and has been upgraded over time by adding success criteria to meet various accessibility needs. As of writing this blog, the most recent version is WCAG 2.1, which was officially released in June 2018.

The 3 WCAG compliance levels are built around these guiding principles, and each level builds on the previous one. To put it in simple terms, if you want to attain level AAA compliance, you should also satisfy all the success criteria of levels A and AA. Likewise, if you wish to comply with level AA, you should have satisfied all the success criteria of level A. So level A compliance is the most basic of the 3 available WCAG compliance levels. Since there is no partial WCAG compliance, your website will be termed non-compliant if it fails even one of the required success criteria.
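The all-or-nothing nature of the levels can be expressed as a short rule. The following Python sketch is an illustration only. It assumes that every applicable success criterion has already been tested and recorded as a `(criterion_id, level, passed)` tuple, and the function name is hypothetical.

```python
LEVELS = ("A", "AA", "AAA")  # ordered from lowest to highest


def conformance_level(results):
    """Return the highest level met, or None if even level A is not met.

    results: iterable of (criterion_id, level, passed) tuples.
    """
    failed_levels = {level for _, level, passed in results if not passed}
    achieved = None
    for level in LEVELS:
        if level in failed_levels:
            break  # one failure blocks this level and every level above it
        achieved = level
    return achieved
```

With this rule, a site that passes every A and AA criterion but fails a single AAA criterion is rated AA, and a site that fails a single level A criterion gets no level at all, however many higher-level criteria it passes.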

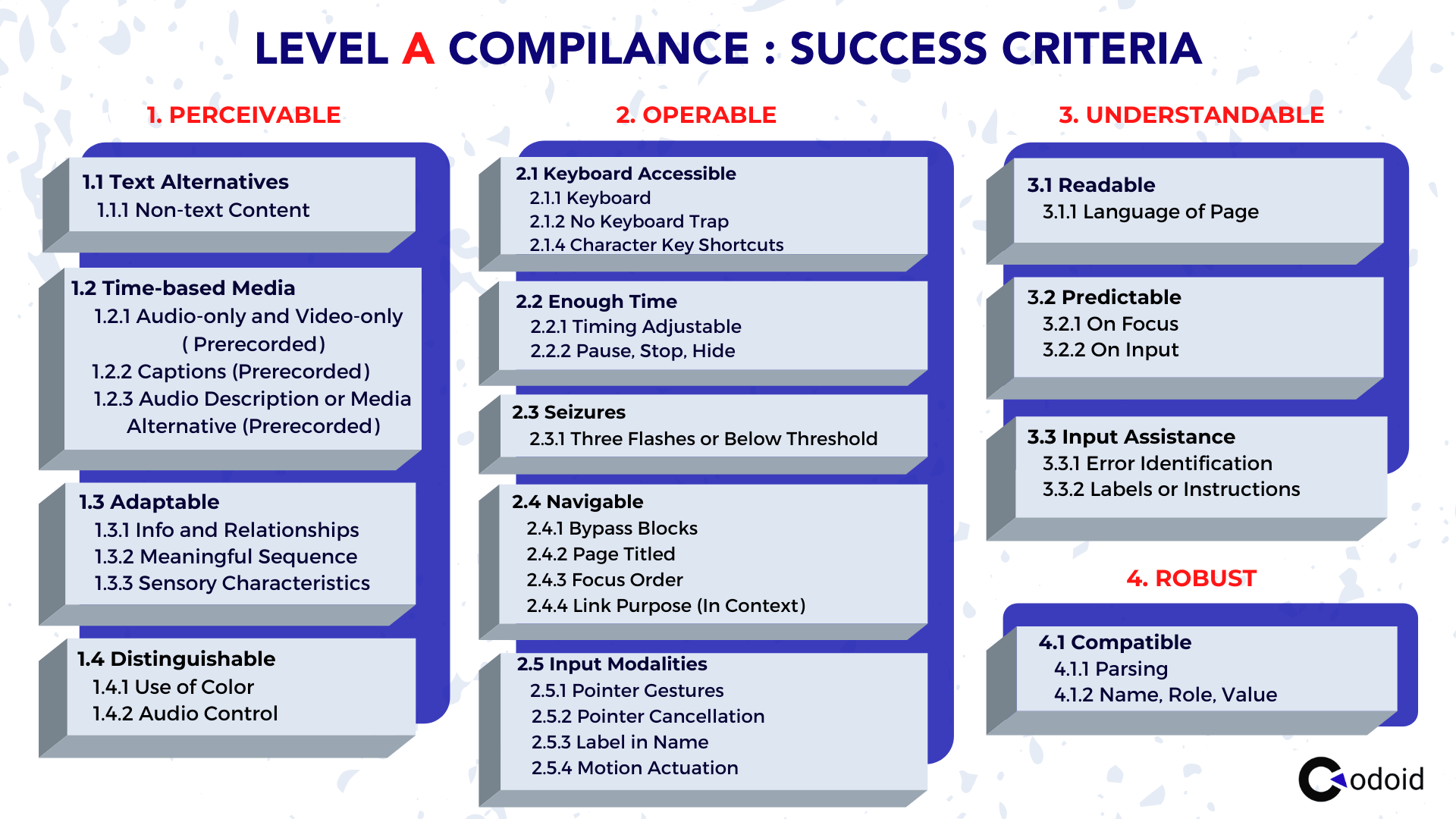

Level A Compliance: Minimum Level

Level A is the most basic level of accessibility that every website should aim to comply with. It solves the primary problem of people with disabilities finding your website unusable. Since each compliance level is built on its preceding levels, level A compliance lays the foundation for everything. Now let’s take a look at the 30 success criteria at this level.

If you are very new to accessibility, you may not be able to comprehend everything mentioned in the list. But this level of compliance ensures that people with visual impairments will be able to use screen readers effectively with the help of proper page structure and media alternatives such as alt-text for images, subtitles for videos, and so on. But it definitely doesn’t solve all the problems. So let’s see what level AA compliance has to offer.

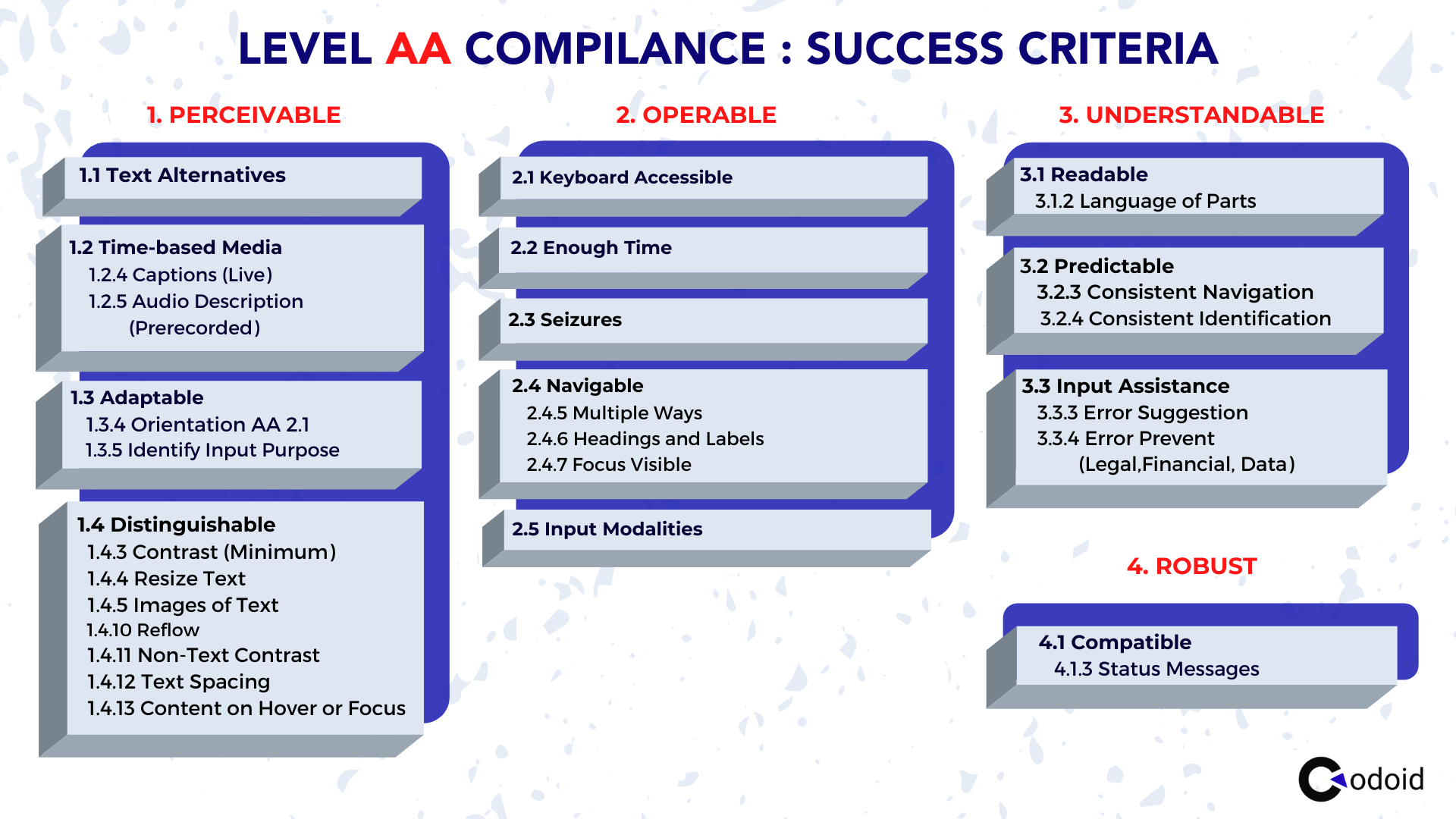

Level AA Compliance: Acceptable Level

If level A is the bare basics, then level AA is the acceptable level of compliance a website can look to achieve to enhance the user experience for people with disabilities. In addition to the 30 success criteria that have to be met in level A compliance, an additional 20 success criteria also have to be satisfied. Of the 50 total success criteria at level AA, let’s take a look at the success criteria exclusive to this level in a categorized way.

We can see the additional focus on notable aspects like color contrast levels, well-defined roles and labels in forms, status messages, and so on.

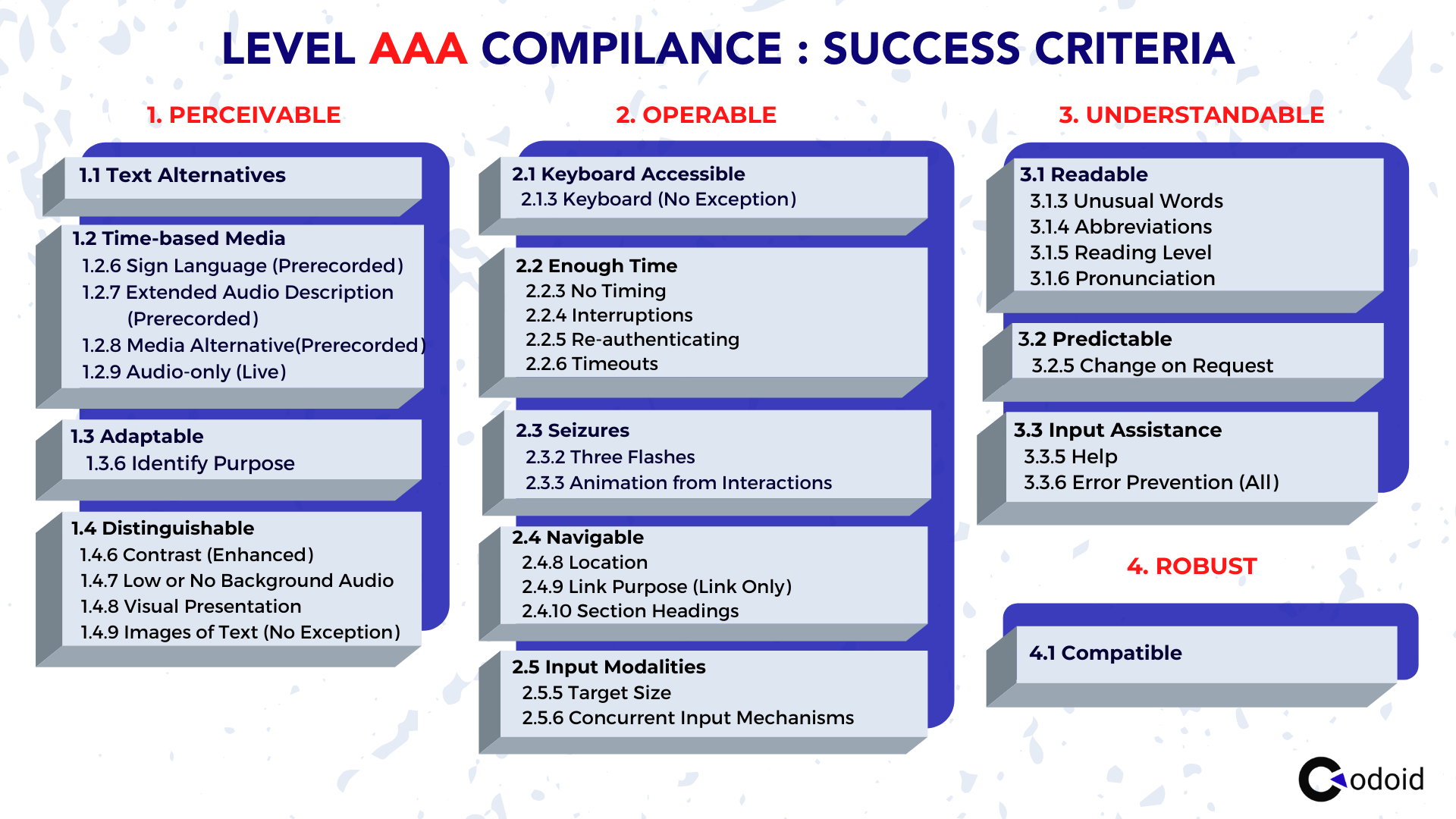

Level AAA Compliance: Optimal Level

Level AAA is the highest level of accessibility compliance that has an additional 28 success criteria that have to be satisfied. So the grand total of success criteria to be met for this level of compliance is 78 as of writing this blog. It is also the most difficult level of compliance to attain. Though achieving this level of compliance is hard, it has to be done if you are looking to build a specialist site for accessibility. Here is a list of the 28 success criteria that are exclusive to level AAA.

Each of these success criteria is definitely hard to meet. For example, if you have pre-recorded audio or video on your site, it has to have sign language support. Since most videos on websites are pre-recorded rather than live, the amount of work needed to achieve this is high. There are also focus areas such as mentioning the expanded form of abbreviations, providing context-sensitive help, and so on.

Which Disabilities Are Supported by WCAG?

Though there are numerous types of disabilities, not all of them are covered under the Web Content Accessibility Guidelines. Though new guidelines are being added over time, as of now WCAG addresses visual impairments, auditory disabilities, cognitive disabilities, and motor disabilities. Users with these disabilities are supported through assistive technologies such as

- Screen readers

- Braille Displays

- Text-to-speech systems

- Magnifiers

- Talking Devices

- Captioning

ARIA

Out of these assistive technologies, screen readers are the most used and so understanding what ARIA is and how it is used is an integral part of accessibility testing.

What is ARIA?

ARIA, which stands for Accessible Rich Internet Applications, is a set of attributes that can be added to HTML to develop more accessible web content and applications. It is a W3C specification that fills in the gaps and makes up for the accessibility voids in regular HTML. It can be used to

- Help screen readers and other assistive technologies perform better by improving the accessibility of interactive web elements.

- Enhance the page structure by defining helpful landmarks.

- Improve keyboard accessibility & interactivity.

Now let’s find out how ARIA improves accessibility by taking a look at the most commonly used ARIA attributes with the help of an example.

The most commonly used ARIA attributes:

- 1. aria-checked

- 2. aria-disabled

- 3. aria-expanded

- 4. aria-haspopup

- 5. aria-hidden

- 6. aria-labelledby

- 7. aria-describedby

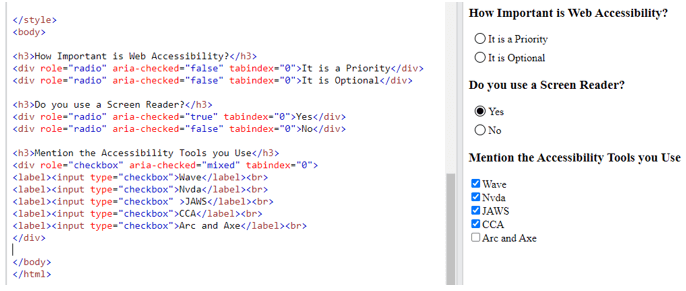

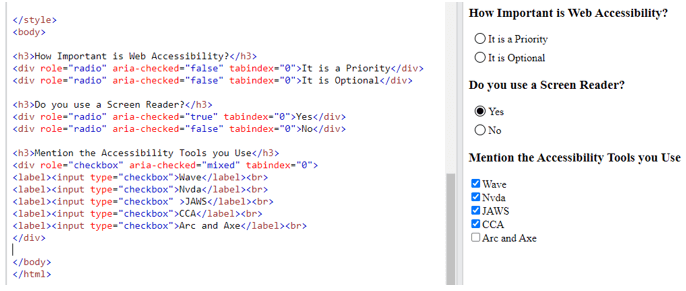

ARIA Attribute Example: aria-checked

The aria-checked attribute can be used to denote the current state of checkboxes and radio buttons. It indicates whether an element is checked or unchecked through the values true and false. You can use JavaScript to ensure that the attribute’s value is updated whenever the element is checked or unchecked.

- aria-checked="true" indicates the element is checked.

- aria-checked="false" indicates the element is in an unchecked state.

- aria-checked="mixed" indicates a partially checked state for elements that support three states.

As the name of all the other commonly used attributes suggests, they can be used to indicate if a list is expanded, if there is a pop-up, and so on.
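A tester can quickly confirm that a page only uses the three aria-checked values listed above. The snippet below is a minimal Python sketch built on the standard library `html.parser` module. The class and function names are hypothetical, and the check only knows about the values true, false, and mixed described in this section.

```python
from html.parser import HTMLParser

VALID_ARIA_CHECKED = {"true", "false", "mixed"}


class AriaCheckedAudit(HTMLParser):
    """Collects elements whose aria-checked value is not true, false, or mixed."""

    def __init__(self):
        super().__init__()
        self.problems = []  # (tag, offending value) pairs

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "aria-checked" and value not in VALID_ARIA_CHECKED:
                self.problems.append((tag, value))


def audit_aria_checked(html):
    """Return a list of (tag, value) pairs for invalid aria-checked values."""
    parser = AriaCheckedAudit()
    parser.feed(html)
    return parser.problems
```

A static check like this cannot tell whether the value actually changes when the user toggles the control, so that part still has to be verified by hand with a screen reader.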

Web-Accessibility Testing Tutorial

Accessibility testing is very much different from the regular types of software testing and might seem daunting to many. As seen in the ‘How Accessibility is Measured’ section, there are 3 different WCAG compliance levels. So we will now be focusing on the various success criteria from the A-level compliance. To make it even easier for you to perform these tests, we have broken down the entire accessibility testing process based on the different web elements and content types we will generally add to a website. Once we have covered all that, we will then explore how to ensure general accessibility.

Page Title

The page title is one of the most basic properties of a web page that helps us differentiate the numerous webpages that appear in the search results on Google or when multiple webpages are opened in a browser. We will easily be able to see and identify the difference. But if you’re using a screen reader, it will not read out the domain name. And if the Page Titles are similar, it’ll become hard for the user to know the difference. So make sure to test if the titles clearly convey what the webpage is about.

Best Practice:

<html>

<head>

<title>How to do accessibility testing</title>

</head>

</html>

Bad Practice:

<html>

<head>...</head>

<body>...</body>

</html>

Places to Check:

- Browser Tab.

- Search Engine results page.

- Bookmarks.

- Title of other pages within the same website. (Eg. Blog Articles)
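The title checks can be partly scripted when many pages have to be compared. The following Python sketch assumes the HTML source of each page is already available as a string keyed by its URL. The names `extract_title` and `audit_titles` are hypothetical.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collects the text inside the <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_title(html):
    parser = TitleExtractor()
    parser.feed(html)
    return parser.title.strip()


def audit_titles(pages):
    """pages maps a URL to its HTML source. Returns (missing, duplicates)."""
    by_title = defaultdict(list)
    missing = []
    for url, html in pages.items():
        title = extract_title(html)
        if title:
            by_title[title.lower()].append(url)
        else:
            missing.append(url)
    duplicates = {t: urls for t, urls in by_title.items() if len(urls) > 1}
    return missing, duplicates
```

The script only finds missing and repeated titles. Whether a title clearly conveys what the page is about remains a judgment call for the tester.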

Structure check

Now that we have seen how the title can impact a disabled person’s usability, let’s shift to the structure of the webpage. A complicated structure can confuse even a regular end-user. So using a well-planned and clean structure will make it easier for regular users to scan through and read your content while making it accessible for people with cognitive and visual disabilities.

Checklist:

- 1. Check if the page has a defined header and footer.

- 2. Validate the main landmark and the complementary landmarks by navigating to specific sections.

- 3. Test the flow of the Heading structure.

- 4. If there are numbered lists, bullet lists, or tables, check if they are read properly by the screenreader.

- 5. Test if the bypass blocks work using a screen reader.

- 6. Check if the human language of the webpage is declared in the code (for example, with the lang attribute on the html element) so that it can be programmatically determined by assistive technologies.

- 7. Check if the basic structural elements like sections, paragraphs, quotes (blockquotes), etc. have been defined properly.

- 8. Check for hyperlinks on the page and ensure that the anchor text is descriptive enough to convey the change of context to the user.
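The last item of the checklist, descriptive anchor text, lends itself to a simple first pass. The Python sketch below flags links whose visible text is empty or matches a short list of vague phrases. The phrase list and all names are illustrative assumptions, and links that contain only an image are flagged as empty because the sketch does not read alt text.

```python
from html.parser import HTMLParser

VAGUE_PHRASES = {"click here", "here", "read more", "more", "link"}  # illustrative


class LinkTextAudit(HTMLParser):
    """Collects links whose text is empty or too vague to stand alone."""

    def __init__(self):
        super().__init__()
        self._href = None  # href of the link currently being read
        self._text = ""
        self.vague_links = []  # (href, normalized text) pairs

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = ""

    def handle_data(self, data):
        if self._href is not None:
            self._text += data

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join(self._text.split()).lower()
            if not text or text in VAGUE_PHRASES:
                self.vague_links.append((self._href, text))
            self._href = None


def audit_links(html):
    parser = LinkTextAudit()
    parser.feed(html)
    return parser.vague_links
```

Anything the script flags should still be reviewed in context, since a screen reader user may reach the link from a list of links with no surrounding text.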

Headings

Headings in a webpage are crucial as they outline the main content of the page and help convey which parts are important. So if your webpage has more than one Heading 1, doesn’t have any headings, or doesn’t follow a proper hierarchy, it will definitely cause confusion. That is why it is recommended that the heading structure flows from H1 to H6 without skipping levels, even though it is not mandatory.

Best Practice:

- Heading level 1 <h1>
  - Heading level 2 <h2>
    - Heading level 3 <h3>
      - Heading level 4 <h4>
        - Heading level 5 <h5>
          - Heading level 6 <h6>
    - Heading level 3 <h3>
      - Heading level 4 <h4>
        - Heading level 5 <h5>
          - Heading level 6 <h6>
  - Heading level 2 <h2>
    - Heading level 3 <h3>
      - Heading level 4 <h4>

Bad Practice:

- Heading level 1 <h1>
  - Heading level 2 <h2>
    - Heading level 3 <h3>
- Heading level 1 <h1>, a second h1 on the same page
  - Heading level 4 <h4>, skipping h2 and h3
    - Heading level 5 <h5>
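The two problems shown in the bad practice outline, a repeated h1 and a skipped level, can be detected automatically. Below is a minimal Python sketch using the standard library `html.parser` module. The names are hypothetical, and the rules encoded here are the recommendations from this section rather than hard WCAG requirements.

```python
import re
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level of every h1 to h6 element in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self.levels.append(int(tag[1]))


def audit_headings(html):
    """Return a list of human-readable problems with the heading outline."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    if not levels:
        return ["page has no headings"]
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected exactly one h1, found {levels.count(1)}")
    for previous, current in zip(levels, levels[1:]):
        # Moving back up the outline is fine; jumping down by 2 or more is not.
        if current > previous + 1:
            problems.append(f"h{previous} is followed by h{current}, skipping a level")
    return problems
```

Run against the best practice outline above, the function reports nothing, while the bad practice outline produces one finding for the second h1 and one for the jump from h1 to h4.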

Tables

Tables are used to present data in an organized way and in a logical order. The table should have a table header, with the header cells marked up with ‘th’ and the data cells marked up with ‘td’. If there are any highly complex tables, check if the scope, headers, and id attributes have been provided.

Good Practice:

<table>

<tr>

<th>Types of Disabilities</th>

<th>Description</th>

</tr>

<tr>

<td>Vision</td>

<td>People who have low vision, complete blindness and partial blindness</td>

</tr>

</table>

Bad Practice:

<table>

<tr>

<td>Vision</td>

<td>People who have low vision, complete blindness and partial blindness</td>

</tr>

</table>

Checklist:

- Verify if all the column or row data are read out along with the respective column or row headers.

- Ensure that the total number of rows and columns is properly read out.

- Check if the row and column numbers are correctly read out when the focus shifts to them.
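Before listening to a table with a screen reader, it can help to confirm that header cells exist at all. The Python sketch below counts the tables on a page and how many of them contain no ‘th’ cell. The names are hypothetical, and the check says nothing about whether scope, headers, and id are used correctly in complex tables.

```python
from html.parser import HTMLParser


class TableHeaderAudit(HTMLParser):
    """Counts tables and the tables that contain no <th> cell."""

    def __init__(self):
        super().__init__()
        self._open_tables = []  # one <th> counter per open table, to cope with nesting
        self.tables_seen = 0
        self.tables_without_headers = 0

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self._open_tables.append(0)
            self.tables_seen += 1
        elif tag == "th" and self._open_tables:
            self._open_tables[-1] += 1

    def handle_endtag(self, tag):
        if tag == "table" and self._open_tables:
            if self._open_tables.pop() == 0:
                self.tables_without_headers += 1


def audit_tables(html):
    """Return (tables seen, tables without any header cell)."""
    parser = TableHeaderAudit()
    parser.feed(html)
    return parser.tables_seen, parser.tables_without_headers
```

The good practice markup above yields one table with headers, and the bad practice markup yields one table without any, which is the cue to raise a defect before moving on to the manual checks.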

Lists

Typing out overly long paragraphs and not using lists in pages can greatly reduce readability even for regular users. The right usage of lists can make content much easier to follow for people with visual impairments using screen readers and for people with conditions like dyslexia. But if proper HTML coding isn’t used for the lists, screen readers might just read all the words together like a sentence and confuse the user. Since there are 3 types of lists (Unordered list, Ordered list, and Description list), we have shown what the proper code structure will look like.

Good Practice

<ul>

<li>Apple</li>

<li>Orange</li>

</ul>

<ol>

<li>Vision</li>

<li>Speech</li>

</ol>

<dl>

<dt>Disabilities</dt>

<dd>Vision</dd>

<dd>Speech</dd>

</dl>

Other common components seen in various websites are text boxes, buttons, dropdowns, and so on. Since the user should be able to interact with these web elements, it is important for them to have accessible names and proper ARIA labels for the screen reader to read them properly.

Checklist:

- 1. Check if proper attributes are used for all required fields as the screen reader should be able to announce to the user that it is a required field.

- 2. If there are any radio buttons, check if they are grouped together.

- 3. Check if all interactive elements receive focus when navigating using the tab key.

- 4. If there are any errors in an input field, check if the error is mentioned and read out by the screen reader.

- 5. Check if all the dropdowns use the “aria-expanded” attribute, set to “true” when the options are expanded and “false” when they are collapsed.

- 6. Verify that the element that opens a pop-up has the “aria-haspopup” attribute.

- 7. Check if all buttons have proper ARIA labels that help the user understand their usage.

- 8. Verify that the aria attributes for alert contents are implemented correctly.
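As one example from this checklist, buttons without any accessible name can be found with a short script. The Python sketch below counts button elements that have neither text content nor an aria-label or aria-labelledby attribute. The names are hypothetical, and the check is deliberately simplified: it ignores names that come from an image’s alt text inside the button and does not confirm that an aria-labelledby target exists.

```python
from html.parser import HTMLParser


class ButtonNameAudit(HTMLParser):
    """Counts <button> elements with no text and no ARIA label."""

    def __init__(self):
        super().__init__()
        self._open = None  # [has_aria_name, collected text] for the current button
        self.unnamed = 0

    def handle_starttag(self, tag, attrs):
        if tag == "button":
            attributes = dict(attrs)
            has_aria_name = bool(
                (attributes.get("aria-label") or "").strip()
                or attributes.get("aria-labelledby")
            )
            self._open = [has_aria_name, ""]

    def handle_data(self, data):
        if self._open is not None:
            self._open[1] += data

    def handle_endtag(self, tag):
        if tag == "button" and self._open is not None:
            has_aria_name, text = self._open
            if not has_aria_name and not text.strip():
                self.unnamed += 1
            self._open = None


def count_unnamed_buttons(html):
    parser = ButtonNameAudit()
    parser.feed(html)
    return parser.unnamed
```

A button that passes this check may still have an unhelpful label, so the wording itself has to be reviewed with a screen reader.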

Multimedia Content

Multimedia content such as images, audio, and videos will not be accessible to people with various disabilities by default. But there are steps developers can take to make them accessible to all by

- Adding alt text or long descriptions to images.

- Adding closed captions, transcripts, and audio descriptions for video and audio content.

Images – Alt text

Alt text is nothing but the alternative text that explains the purpose or content of images, charts, and flowcharts for people who will not be able to see them. So when an image is brought into focus, the screen reader will be able to read out the provided alternative text. If it is a very complex visual that requires more than 140 characters, then we could opt to use long descriptions over alt text.

<div>

<img style="" src="" alt="" width="">

</div>

The job doesn’t end with checking if the alt text is present. We should also ensure that the alt text fulfills its purpose by carrying out the checks from the checklist below.

Checklist

- 1. Check if the alt text is descriptive enough to convey the intent or info from the image.

- 2. Be clear when using terms prone to misconception like ‘Apple’. Users might get confused if we are talking about the company named Apple or the fruit named Apple.

- 3. Make sure the alt text is not used as a holder of keywords for the various SEO needs.

- 4. Text alternatives are not needed for decorative images as they will not affect the user in understanding the main content of the page. For example, consider a tick mark near the text that says “Checkpoint”. Here it is not mandatory to mention the tick mark.
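The mechanical part of these checks can be scripted so that the tester’s time goes into judging the wording. The Python sketch below flags images with no alt attribute, images with an empty alt (valid only for decorative images), and alt text longer than the 140 characters mentioned earlier. All names are hypothetical, and the messages are suggestions for manual review rather than verdicts.

```python
from html.parser import HTMLParser

MAX_ALT_LENGTH = 140  # character limit mentioned in the text above


class AltTextAudit(HTMLParser):
    """Flags <img> elements whose alt text needs a closer look."""

    def __init__(self):
        super().__init__()
        self.findings = []  # (src, note) pairs

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        if "alt" not in attributes:
            self.findings.append((src, "missing alt attribute"))
            return
        alt = (attributes["alt"] or "").strip()
        if not alt:
            # An empty alt is valid for decorative images, so ask a human to confirm.
            self.findings.append((src, "empty alt, confirm the image is decorative"))
        elif len(alt) > MAX_ALT_LENGTH:
            self.findings.append((src, "alt is long, consider a long description"))


def audit_alt_text(html):
    parser = AltTextAudit()
    parser.feed(html)
    return parser.findings
```

Ambiguity such as the ‘Apple’ example, or alt text stuffed with keywords, cannot be caught this way and still needs a human reader.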

Videos & Audios

Things get a little more complicated when other multimedia content such as video and audio has to be added. We would have to verify if this content is supported by closed captions, audio descriptions, or transcripts. Let’s take a look at each of these alternatives.

Closed captions & Transcripts

A visually impaired person will be able to understand the context of videos with dialogues or conversations. But what if a person with a hearing disability watches the same video? Though there are auto-generated captions, they are not that reliable. That is why it is important to include closed captions or transcripts for all the videos/audio you add. Though transcripts are not mandatory, they will be effective when used for podcasts, interviews, or conversations. So a person with hearing impairment can read the transcript if they wish to.

Checklist:

- 1. Check if the closed captions are in sync with the video.

- 2. Validate if the subtitles have been mapped word-by-word.

- 3. Make sure the closed captions are visible within the player.

- 4. If it is a conversational video, make sure the name of the speaker is also mentioned.

- 5. Check if all the numeric values are mentioned properly.

- 6. Make sure the closed captions mention important background audio elements such as applause, silence, background score, footsteps, and so on.

Audio Description

But what if the video doesn’t have much dialogue or conversation? What if the visuals are key to understanding the video? Then subtitles alone will never be enough. That is where audio descriptions come into play, as they describe the actions happening on screen and help users understand the video clearly.

Checklist

- 1. Ensure the description makes it possible for visually impaired users to create a clear mental image in their minds.

- 2. Make sure the audio description covers every action, activity, silence, animation, facial expression, and so on.

- 3. The paused screen when the audio description is playing should be clearly visible and no text, image, or element should be blurred or misaligned.

- 4. Check if all the on-screen text is available correctly in the audio description.

- 5. Validate if all the activities and on-screen elements are in sync with the Audio description.

- 6. The screen should be paused at the same instant that is being described in the audio description.

Animations, Moving and blinking Contents

Animations, scroll effects, and similar dynamic content are widely used on websites. As we clarified earlier, accessibility guidelines don’t prevent us from using them. Rather, they guide us in implementing them in the right and usable way.

Checklist

- 1. Ensure all animations that are over 5 seconds in length have the provision to play, pause, or stop them.

- 2. If there is any blinking or flashing content, ensure that it doesn’t flash more than 3 times in one second as it might affect people prone to seizures.
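The flash limit in the checklist above can be sketched as a small check. This is only an illustration: it assumes we already have a list of timestamps (in seconds) at which flashes occur, and how those timestamps are captured from the page is outside its scope. The function names are our own.

```python
def max_flashes_per_second(flash_times):
    """Return the largest number of flashes found in any one-second window."""
    times = sorted(flash_times)
    worst = 0
    start = 0
    for end, current in enumerate(times):
        # Slide the window start forward until the window spans less than 1 second.
        while current - times[start] >= 1.0:
            start += 1
        worst = max(worst, end - start + 1)
    return worst


def passes_flash_limit(flash_times, limit=3):
    """True when no one-second window contains more than `limit` flashes."""
    return max_flashes_per_second(flash_times) <= limit


if __name__ == "__main__":
    # Hypothetical timestamps: four flashes packed into under a second.
    print(passes_flash_limit([0.0, 0.3, 0.6, 0.9]))  # False
```

A manual review is still needed to confirm what actually counts as a flash on the screen.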

Color Contrast

A general rule to keep in mind when developing or adding such content is to ensure there is proper color contrast between the background color and foreground elements such as text, buttons, graphs, and so on. Be it a table, image, video, form, or any content type we have seen, poor color usage can make it hard for even regular users to view the content. So people with low vision or color blindness will find it nearly impossible to access the content. The preferred combination is a white background with a black foreground.
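Color contrast can also be measured rather than judged by eye. The sketch below follows the contrast ratio formula published in WCAG 2.x, and the 4.5:1 threshold it mentions is the WCAG Level AA minimum for normal-sized text. The function names are our own, and it assumes colors are given as six-digit hex strings.

```python
def _linear_channel(value_8bit):
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = value_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color such as '#1A2B3C'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _linear_channel(r)
            + 0.7152 * _linear_channel(g)
            + 0.0722 * _linear_channel(b))


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1 (none) to 21 (black on white)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


if __name__ == "__main__":
    print(round(contrast_ratio("#000000", "#FFFFFF"), 2))  # black on white
    print(contrast_ratio("#777777", "#FFFFFF") >= 4.5)     # mid-gray on white
```

Black text on a white background gives the maximum possible ratio, which is why that combination is the safest default.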

Now that we have covered all the aspects of adding different types and categories of content, let’s progress to the usage aspects that you must know when it comes to accessibility testing.

Keyboard Control & Interaction

People with visual impairments and motor disabilities will not be able to use a mouse in the same way a regular user can. So using a braille keyboard or a regular keyboard will be the only option they have. The most important key on the keyboard will be the ‘Tab’ key as it helps navigate to all the interactive web elements. You can use the ‘Shift+Tab’ key combination to move in the backward direction.

- Test if all the web elements receive focus when you hit the tab key. Also, check if the focus shifts from that element when the tab key is pressed again.

- The element in focus will be highlighted with a gray outline. So, test if it is visible as it will be helpful for people with motor disabilities.

- Ensure that the focus order is from left to right or right to left based on the language in use.

- For web elements such as drop-down lists, ensure that all the options are read out by the screen reader when navigating using the arrow keys.

- If there is any video or a carousel, the user must be able to control (Pause/Play/Volume Control) it using the keyboard actions alone.

- Likewise, if there are any auto-play functionalities, check if the playback stops in 3 seconds or provides an option to control the playback.

- If an interactive element does not receive the tab focus, then the element should be coded with tabindex="0".
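Part of the tab focus check above can be pre-screened with a script before the manual keyboard walkthrough. The sketch below uses Python’s standard `html.parser` module and makes simplifying assumptions: it treats any element with an inline `onclick` attribute as interactive, and it uses a short, hypothetical list of natively focusable tags. Handlers attached from JavaScript will not be detected.

```python
from html.parser import HTMLParser

# Simplified assumption: these tags can receive tab focus without extra markup.
NATIVELY_FOCUSABLE = {"button", "input", "select", "textarea"}


def in_tab_order(tag, attrs):
    """Rough guess at whether an element can be reached with the Tab key."""
    tabindex = attrs.get("tabindex")
    if tabindex is not None:
        try:
            return int(tabindex) >= 0  # negative values remove it from the tab order
        except ValueError:
            return False
    if tag == "a":
        return "href" in attrs  # links are only focusable when they have an href
    return tag in NATIVELY_FOCUSABLE


class FocusAudit(HTMLParser):
    """Collects clickable elements that the Tab key would probably skip."""

    def __init__(self):
        super().__init__()
        self.unreachable = []  # (tag, line number) pairs

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "onclick" in attrs and not in_tab_order(tag, attrs):
            self.unreachable.append((tag, self.getpos()[0]))


def find_unreachable(html):
    audit = FocusAudit()
    audit.feed(html)
    return audit.unreachable


if __name__ == "__main__":
    sample = '<button onclick="go()">OK</button>\n<div onclick="go()">Open</div>'
    print(find_unreachable(sample))  # [('div', 2)]
```

Anything the script reports still has to be confirmed by tabbing through the page, since the real focus behavior depends on the browser and the scripts in use.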

Users should also be able to access the web page with other input options, such as the touchscreens on mobiles and tablets, without using a keyboard. When checking the web page without keyboard keys, we can look at pointer cancellation, pointer gestures, and motion actuation.

Zooming

Since there are various types of visual impairments, people with low vision might be able to access the content on the webpage without a screen reader by zooming in. So we have to view the page at 200% and 400% zoom levels and check for:

- Text Overlaps

- Truncating issues

Other Checks

From all the points we have discussed above, it is obvious that any instructions or key elements that are crucial for understanding and operating the content should not rely only on sensory characteristics. For example, if there is a form to be completed, then the completion of the form shouldn’t be denoted with a bell sound alone as people who have hearing impairments will not be able to access it. Likewise, showing just a visual or color change to denote completion will also not be accessible to all.

If there are any defined time limits, make sure that people with disabilities will be able to complete the task within them. Taking session expiration as an example:

- Check if the user is able to turn off or adjust the time limit before it starts.

- The adjustable limit should be at least 10 times the default setting.

- Make sure there are accessible warning messages before expiration and that there is at least a 20-second time gap for the user to extend the session with a simple action such as pressing the space key.

- It is important to keep in mind that there are exceptions for expirations such as an online test as the ability to extend the provided time limit would invalidate the existence of a time limit. Likewise, these criteria can be exempted if the time limit is more than 20 hours or if a real-time event such as an auction is being conducted.
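The session expiration checks above can be written down as a small rule check. This is a sketch of one reading of the checklist, not a complete conformance test. The `TimeoutPolicy` fields and the `timeout_issues` function are hypothetical names for settings we would collect from the application under test.

```python
from dataclasses import dataclass

TWENTY_HOURS = 20 * 60 * 60  # seconds


@dataclass
class TimeoutPolicy:
    default_seconds: float         # default session length
    can_turn_off: bool             # user can disable the limit before it starts
    max_adjustable_seconds: float  # longest limit the user can choose
    warning_seconds: float         # time given to extend the session after the warning
    essential: bool = False        # e.g. an online test or a real-time auction


def timeout_issues(policy):
    """Return a list of checklist violations for the given policy."""
    # Exemptions: essential time limits and limits longer than 20 hours.
    if policy.essential or policy.default_seconds > TWENTY_HOURS:
        return []
    issues = []
    adjustable_enough = policy.max_adjustable_seconds >= 10 * policy.default_seconds
    if not policy.can_turn_off and not adjustable_enough:
        issues.append("limit cannot be turned off or adjusted to 10x the default")
    if policy.warning_seconds < 20:
        issues.append("less than 20 seconds to extend the session")
    return issues


if __name__ == "__main__":
    # Hypothetical 15-minute session that can only be stretched to 30 minutes.
    print(timeout_issues(TimeoutPolicy(900, False, 1800, 10)))
```

The check only covers the numbers. Whether the warning message itself is announced by a screen reader has to be verified manually.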

Most common Accessibility issues

So what kind of issues can you expect when following the above-mentioned checklist? Here’s a list of the most common accessibility issues that will give you a heads up about the various issues you will encounter when performing accessibility testing. Please note that this is not a comprehensive list and that you have to keep your eyes open for any unexpected accessibility issues that might come your way.

- 1. Heading level issues

- 2. Incorrect implementation of list tags

- 3. Missing alt-text for images

- 4. Incorrect focus order

- 5. Missing tab focus for interactive web elements

- 6. Poor Color Contrast

- 7. Incorrect reading order

- 8. Missing ARIA labels

- 9. State not announced.

- 10. Improper table implementation

- 11. Inability to access the contents using keyboard keys

- 12. Content is not visible at 200% and 400% zoom levels

- 13. Screen reader not notifying alert messages

- 14. Screen reader not reading the dropdown list

- 15. Lack of expanded and collapsed state implementation

- 16. Missing closed captions, audio descriptions, or video transcripts for videos

Challenges of Accessibility Testing

No task is without challenges, and accessibility testing comes with its own share of them.

Dynamic Websites

Dynamic websites tend to have hundreds or thousands of changes every minute or every hour. So implementing web accessibility for dynamic content inside such web pages is considered to be very hard due to the presence of third-party content like ads and other such factors.

Large Websites

Though static content is easier to work with in comparison, it is still a mammoth challenge if accessibility has to be implemented retrospectively across more than 1000 pages. So the next major challenge in implementing accessibility is the scale of the website: the more pages there are, the greater the variety of content.

Limited Automation Scope

The scope for automating web accessibility tests is very low as even if we write scripts to detect the images with or without alt-text, it only solves half the problem. What if the alt-text is present but not descriptive enough or relevant to that image? We would once again need a manual tester to validate it. Lack of conformance verification is what makes scaling web accessibility tests with automation a challenge.
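To illustrate how far such a script can go, here is a minimal sketch that uses Python’s standard `html.parser` module. The class and function names are our own. It sorts images into three groups: those with no alt attribute, those with an empty one, and those that have alt text which a manual tester still has to review for relevance.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Sorts <img> tags by the state of their alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []       # no alt attribute at all
        self.empty = []         # alt="" (acceptable only for decorative images)
        self.needs_review = []  # alt present: a human must judge its quality

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src", "")
        if "alt" not in attrs:
            self.missing.append(src)
        elif not (attrs["alt"] or "").strip():
            self.empty.append(src)
        else:
            self.needs_review.append((src, attrs["alt"]))


def audit_alt_text(html):
    audit = AltTextAudit()
    audit.feed(html)
    return audit


if __name__ == "__main__":
    page = '<img src="logo.png"><img src="chart.png" alt="Monthly sales chart">'
    result = audit_alt_text(page)
    print(result.missing)       # ['logo.png']
    print(result.needs_review)  # [('chart.png', 'Monthly sales chart')]
```

The script can only report presence or absence. Everything in the `needs_review` group is exactly the half of the problem that automation cannot solve.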

Test Artifacts over the User Experience

The focus of WCAG and its success criteria is primarily on technical artifacts and not on the user’s journey and goals. To put it in simple terms, the guidelines are focused on the webpage, as seen earlier, and not on the user experience. The gap between accessibility and the user experience can be bridged if the accessibility testing team focuses on the user experience while performing testing. And that is why we make sure to train our accessibility testers to test with the user experience in mind.

Web Accessibility Testing Tools

Since people with disabilities use various assistive technologies, as a tester you should know how to use those technologies too. That’s just the first step, as you should also use the assistive technology in the way a real person with disabilities would use it. Putting yourself in their position is the key to maximizing test coverage. There are dedicated screen readers that come built into various devices and platforms. Though these screen readers have many similarities in usage, you should know how to use each of them individually.

List of Inbuilt Screen Readers

- Narrator for Windows

- Orca for Linux

- VoiceOver for macOS and iOS

- TalkBack for Android

- ChromeVox for Chromebooks

Other Popular Screen Readers

- JAWS for Windows (Commercial)

- NVDA for Windows (Free)

Apart from these assistive technologies, we also employ a set of tools to ease the accessibility testing process. While no tool will work without human validation, it is still handy to have such options to speed up the evaluation process. To speed things up even more, we also use various browser extensions and bookmarklets such as:

- WAVE

- Color Contrast Analyzer

- arc & axe

Conclusion

So that pretty much sums up everything you will need to know to get started with accessibility testing. We hope you found our guide easier to understand than the conventional options, as we have broken down the checks based on the different web elements, which are more tangible. Make sure to subscribe to our newsletter as we will be posting such highly useful content on a weekly basis. Have any doubts? Ask them in the comments section and we will try our best to answer them.

by Arthur Williams | Jun 20, 2022 | OTT Testing, Blog, Top Picks |

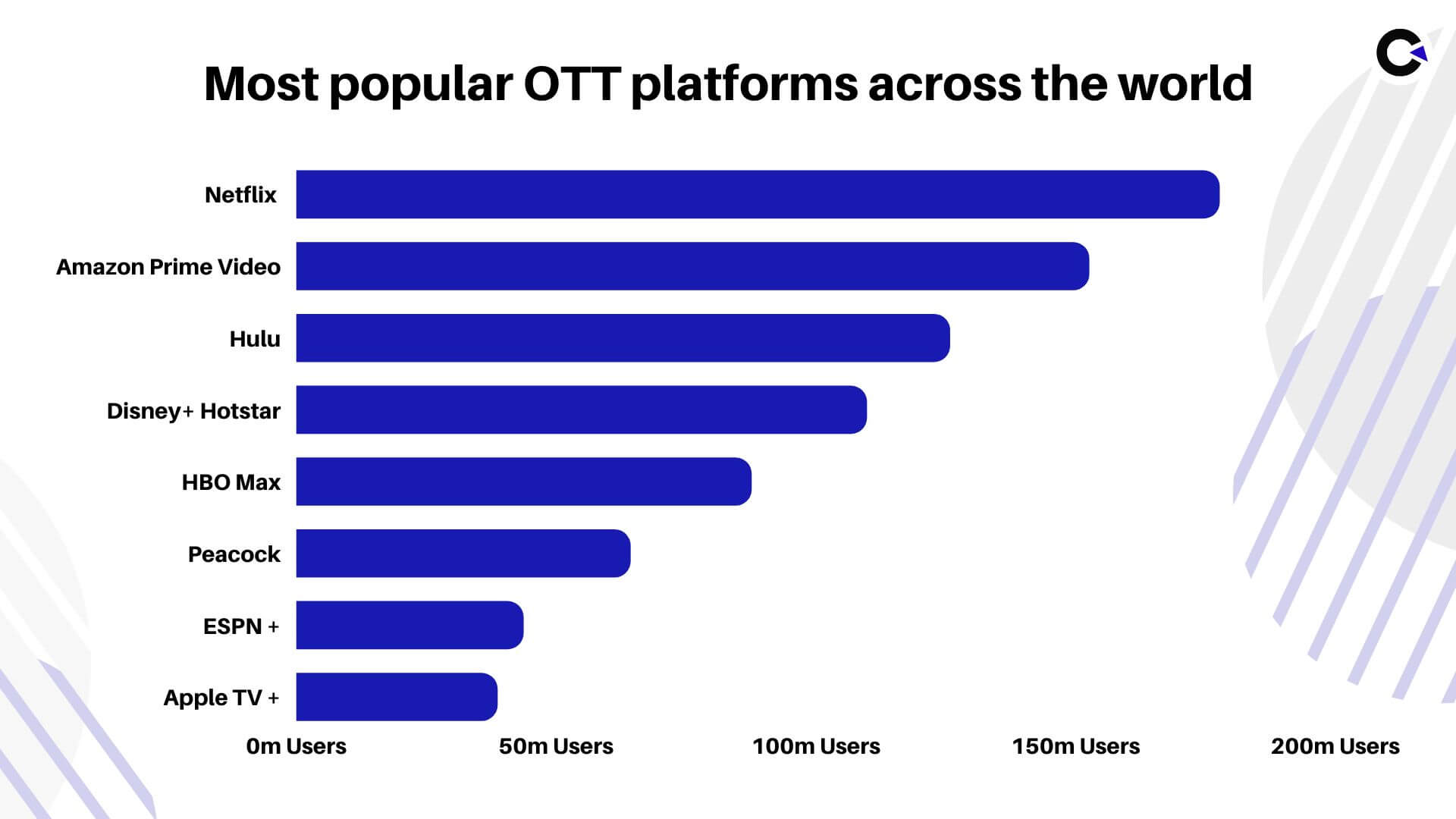

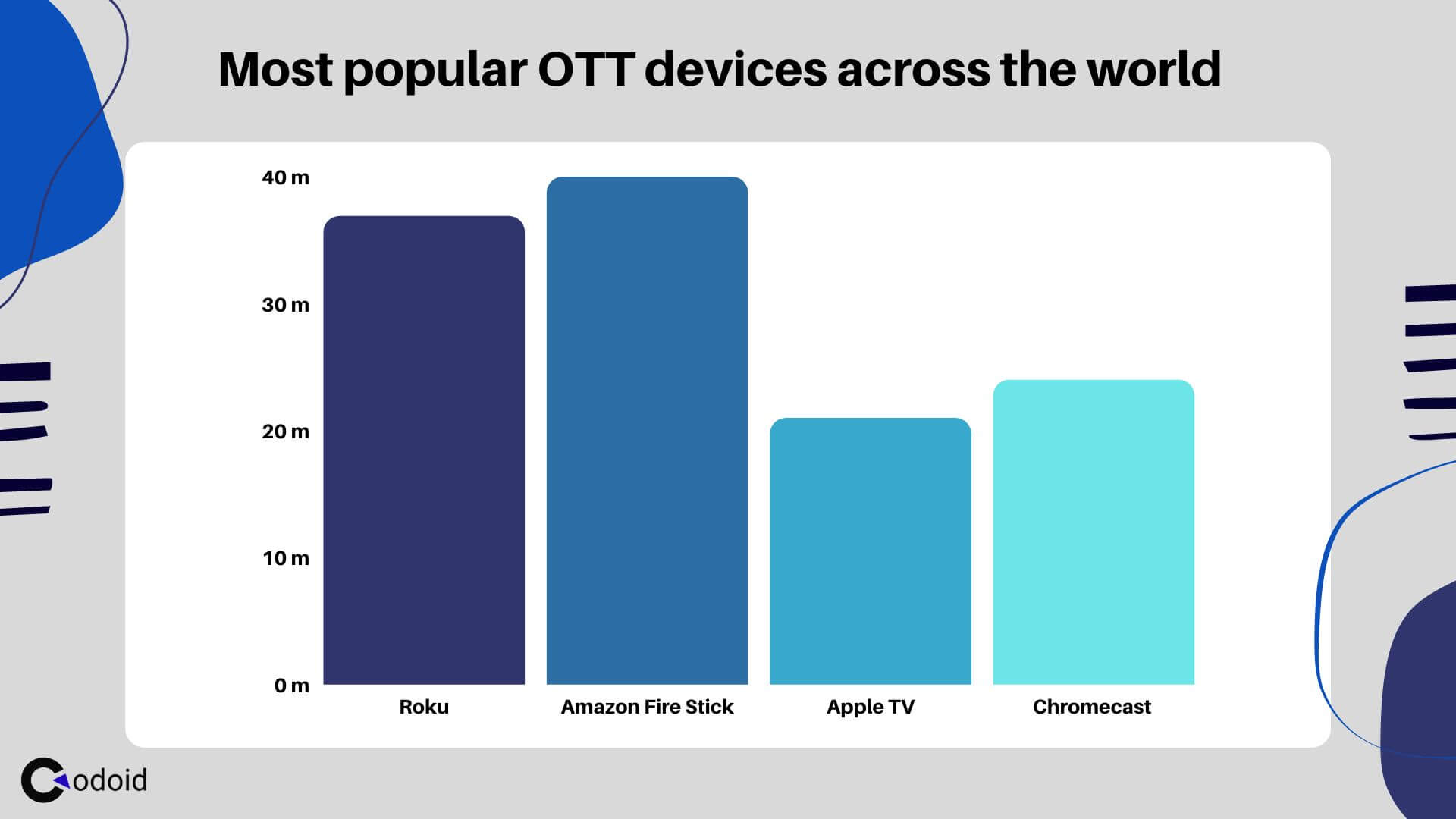

“Content is King” is a famous quote that we all have heard on many occasions. Though it applies to OTT platforms, there is no denying that user experience is also an integral part of an OTT platform’s success. The technical aspects of the platform also have to be strong, as nobody will be willing to watch even the greatest content if it is constantly buffering and taking forever to respond to their actions. The only way to ensure that the platform delivers the best user experience is by performing OTT testing. So in this blog, we will see how to perform OTT automation testing across major devices and go through a comprehensive checklist as well.

What is OTT Testing?

An OTT (Over the Top) service is any type of service that delivers content over the internet. Though there is a wide range of OTT services such as WhatsApp, Skype, Zoom, and so on, our focus will be entirely on OTT media services such as Netflix, Amazon Prime, etc. So OTT testing can be defined as the process of performing robust tests to ensure the successful delivery of video content such as films or TV shows with the best user experience.

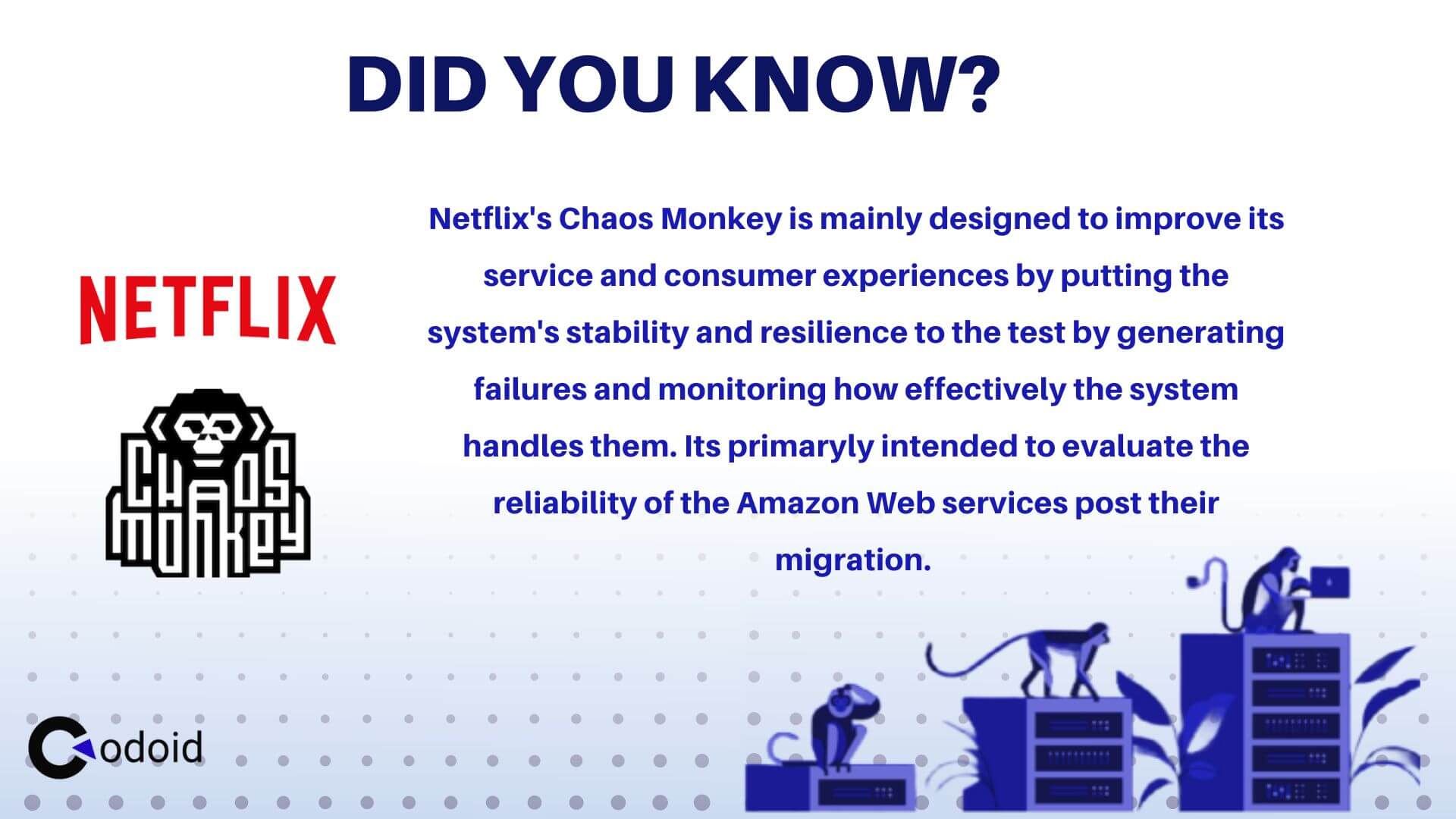

Did You Know?

A fascinating aspect of OTT testing is that Netflix developed its own testing tool called Chaos Monkey to test the resilience of its infrastructure on Amazon Web Services. The concept behind Chaos Monkey is that it intentionally disrupts the service by terminating instances in production to see how well the remaining systems cope.

But why is the OTT giant so focused on testing? To answer this question, we would have to take a look back in time to find out how Netflix and other OTT services emerged.

The Emergence of OTT