by Rajesh K | Apr 1, 2025 | Selenium Testing, Blog, Latest Post |

Automation testing is an integral part of modern software development, enabling faster releases and more reliable applications. As web applications continue to evolve, the demand for robust testing solutions has increased significantly. Selenium, a powerful and widely used open-source testing framework, plays a crucial role in automating web browser interactions, allowing testing teams to validate application functionality efficiently across different platforms and environments. However, while Selenium test automation offers numerous advantages, it also comes with inherent challenges that can impact the efficiency, stability, and maintainability of test suites. These challenges range from handling flaky tests and synchronization issues to managing complex test suites and ensuring seamless integration with CI/CD pipelines. Overcoming these obstacles requires strategic planning, best practices, and the right set of tools.

This blog post explores the most common challenges faced during Selenium test automation and provides practical solutions to address them. By understanding these difficulties and implementing effective strategies, teams can minimize risks, enhance test reliability, and improve the overall success of their Selenium automation efforts.

1. Flaky Tests and Instability

Flaky tests are those that can pass or fail unpredictably without any changes made to the application. This inconsistency makes testing unreliable and can lead to confusion in the development process.

Example Scenario:

Consider testing the “Proceed to Checkout” button on an e-commerce site. Occasionally, the test passes; at other times, it fails without any modifications to the test script or application.

Possible Causes:

1. Slow Page Load: The checkout page loads slowly, causing the test to attempt clicking the button before it’s fully rendered.

2. Rapid Test Execution: The test script executes actions faster than the page loads its elements, leading to interactions with elements that aren’t ready.

3. Network or Server Delays: Variations in internet speed or server response times can cause inconsistent page load durations.

Solutions to Reduce Flaky Tests

1. Identify & Quarantine Flaky Tests:

- Isolate unreliable tests from the main test suite to prevent them from affecting overall test stability. Document and analyze them separately for debugging.

2. Analyze and Address Root Causes:

- Investigate the underlying issues (e.g., synchronization problems, environment instability) and implement targeted fixes.

3. Introduce Retry Mechanisms:

- Automatically re-run failed tests to determine whether the failure is temporary or a real issue.

4. Optimize Page Load Priorities:

- Adjust the application’s loading sequence to prioritize critical elements like buttons and forms.

5. Regular Test Execution:

- Run tests frequently to identify patterns and potential flaky behavior.
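The retry idea from solution 3 can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a replacement for a test runner's own rerun feature; the function name `checkout_button_is_clickable` and the simulated failure are hypothetical stand-ins for a real Selenium check.

```python
import functools
import time


def retry(times=3, delay=0.0, exceptions=(AssertionError,)):
    """Re-run a flaky callable up to `times` attempts before giving up."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            last_error = None
            for attempt in range(1, times + 1):
                # Record the attempt number so flaky tests stay visible in reports.
                wrapper.attempts = attempt
                try:
                    return func(*args, **kwargs)
                except exceptions as error:
                    last_error = error
                    if attempt < times:
                        time.sleep(delay)
            # Every attempt failed: surface the last error as a real failure.
            raise last_error
        wrapper.attempts = 0
        return wrapper
    return decorator


# Hypothetical flaky check: fails on the first two calls, then passes.
calls = {"count": 0}


@retry(times=3, delay=0.0)
def checkout_button_is_clickable():
    calls["count"] += 1
    assert calls["count"] >= 3, "button not ready yet"
    return True
```

A test that only passes on a later attempt is still a signal worth logging, which is why the sketch keeps the attempt count. In practice, runner-level options such as the pytest-rerunfailures plugin or a TestNG retry analyzer usually serve the same purpose.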

2. Complex Synchronization Handling

In automated testing, synchronization ensures that the test waits for elements to load or become ready before interacting with them. If synchronization is not handled properly, tests can fail randomly.

Common Challenges:

1. Dynamic Content:

Some web pages keep loading content, such as product images or lists, after the initial page load. If the test tries to click something before it’s ready, it will fail.

2. JavaScript Changes:

Modern apps often use JavaScript to change the page without fully reloading it. This can make elements appear or disappear unexpectedly, causing timing issues for the test.

3. Network Delays:

Sometimes, slow network connections or server delays can cause elements to load at different speeds. If the test clicks something before it’s ready, it can fail.

How to Handle Synchronization:

Explicit Waits:

- This is the best way to make sure the test waits until an element is ready before interacting with it. You set a condition, like “wait until the button is visible” before clicking it.

Implicit Waits:

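- This tells WebDriver to keep polling for up to a set amount of time whenever it looks up any element. It’s less specific than explicit waits but can be useful for general delays. Selenium’s documentation advises against mixing implicit and explicit waits, because the combination can produce unpredictable wait times.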

Retry Logic:

- Sometimes, tests fail due to temporary delays (e.g., slow page load). A retry mechanism can automatically try the test again a few times before marking it as a failure.

Polling for Changes:

- For elements that change over time (like loading spinners), you can set up the test to repeatedly check until the element is ready.

Wait for JavaScript to Finish:

- In JavaScript-heavy sites, make sure to wait until all scripts finish running before interacting with the page.
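Explicit waits and polling both come down to the same loop: check a condition, sleep briefly, and give up after a timeout. The sketch below shows that loop in plain Python so it runs without a browser. `wait_until`, `WaitTimeout`, and `spinner_is_gone` are hypothetical names chosen for illustration; Selenium ships its own implementation of this pattern as `WebDriverWait`.

```python
import time


class WaitTimeout(Exception):
    """Raised when the condition never becomes truthy within the timeout."""


def wait_until(condition, timeout=10.0, interval=0.5, ignored=(Exception,)):
    """Poll `condition()` until it returns a truthy value or `timeout` elapses."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            value = condition()
        except ignored:
            # This sketch swallows any exception by default; Selenium's
            # WebDriverWait ignores only NoSuchElementException unless told otherwise.
            value = None
        if value:
            return value
        if time.monotonic() >= deadline:
            raise WaitTimeout(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# Hypothetical stand-in for "is the loading spinner gone?": true on the third poll.
polls = {"count": 0}


def spinner_is_gone():
    polls["count"] += 1
    return polls["count"] >= 3
```

With a real driver, the same helper could wait for scripts to settle, for example `wait_until(lambda: driver.execute_script("return document.readyState") == "complete")`. For element conditions, the built-in `WebDriverWait(driver, 10).until(expected_conditions.element_to_be_clickable((By.ID, "checkout")))` is usually the better choice; the `checkout` ID here is an assumed example.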

3. Steep Learning Curve for Beginners

A steep learning curve means beginners find it hard to learn something because it’s difficult or overwhelming at first. In automated testing, this happens when you’re learning complex tools and concepts all at once.

How to Make It Easier:

- Learn Programming Basics (variables, loops, functions)

- Start with Simple Frameworks (JUnit for Java, PyTest for Python)

- Use Version Control (Git for managing test scripts)

- Understand How WebDriver Works (locating elements, handling dynamic content)

- Learn Debugging (using logs and breakpoints to fix errors)

- Use Waits for Synchronization (explicit waits for elements, implicit waits for delays)

- Run Tests in Parallel (speed up testing with multiple browsers)

- Integrate with CI/CD (automate tests with Jenkins or GitLab CI)

4. Maintaining Large Test Suites

As your test suite grows, it becomes harder to manage due to frequent UI changes and redundant code.

Common Issues:

- When the UI changes, many tests that rely on specific buttons or fields may fail.

- When locators (like XPaths or CSS selectors) change, you need to update multiple tests, which is time-consuming.

- Without automated reports, it’s harder to track test results and failures.

Solutions:

Use Modular Design Patterns:

- Break tests into smaller parts (e.g., Page Object Model). This way, if an element changes, you only update it in one place, not across all tests.

Use Version Control and CI/CD:

- Version Control (Git): Keep track of changes to your test code and collaborate more easily.

- CI/CD Pipelines: Automatically run tests when code changes, ensuring your tests are always up-to-date.

Integrate Reporting Tools:

- Use tools like Allure or Extent Reports to automatically generate easy-to-read test reports that show which tests passed or failed.

Run Tests in Parallel:

- Use tools like Selenium Grid to run tests across multiple browsers at once, speeding up test execution.
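To make the Page Object Model concrete, here is a small Python sketch. The page, its element IDs, and the `FakeDriver` stand-in are all hypothetical; the fake driver exists only so the example runs without a browser, and a real Selenium driver exposes the same `find_element(by, value)` call.

```python
class LoginPage:
    """Page object: locators live here, so a UI change is fixed in one place."""

    # Strategy strings match the values of selenium.webdriver.common.by.By
    # (By.ID == "id", By.CSS_SELECTOR == "css selector").
    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("css selector", "button[type='submit']")

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()


class FakeElement:
    """Records interactions instead of touching a real page."""

    def __init__(self, log, locator):
        self.log = log
        self.locator = locator

    def send_keys(self, text):
        self.log.append(("type", self.locator, text))

    def click(self):
        self.log.append(("click", self.locator))


class FakeDriver:
    """Stand-in for a WebDriver so the sketch runs without a browser."""

    def __init__(self):
        self.log = []

    def find_element(self, by, value):
        return FakeElement(self.log, (by, value))


driver = FakeDriver()
LoginPage(driver).log_in("demo", "secret")
```

If the submit button's selector changes, only the `SUBMIT` constant needs updating; every test that calls `log_in` keeps working unchanged.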

5. Limited Native Support for Modern Web Features

Modern web apps often use dynamic loading, Shadow DOM, and JavaScript-heavy components, which Selenium struggles to handle directly.

Challenges in Selenium with Modern Web Features:

1. Dynamic Loading:

Many web pages load content dynamically using JavaScript, which means elements might not be available when Selenium tries to interact with them.

2. Shadow DOM:

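The Shadow DOM encapsulates elements, so ordinary locators run against the main document cannot reach them. Selenium versions before 4 had no native way to enter a shadow root; Selenium 4 added a shadow root accessor (getShadowRoot() in Java, shadow_root in Python), though it depends on browser and driver support.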

3. JavaScript-Heavy Components:

Modern apps often rely heavily on JavaScript to manage interactive elements like buttons or dropdowns. Selenium can struggle with these components because it’s not built to handle complex JavaScript interactions.

4. Network Requests:

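Classic WebDriver commands offer no way to intercept or mock network requests and responses (e.g., mocking API responses or throttling network speed). Selenium 4 adds Chrome DevTools Protocol and WebDriver BiDi hooks for this in some language bindings, but support varies by browser.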

Solutions for Handling These Issues:

1. Use JavaScriptExecutor:

You can use JavaScriptExecutor in Selenium to run JavaScript code directly in the browser. This helps with handling dynamic or JavaScript-heavy components.

- Example: Trigger clicks or other actions that are hard to manage with Selenium alone.

2. Workaround for Shadow DOM:

Use JavaScriptExecutor to read an element’s shadowRoot property and query inside it. This works on any Selenium version; in Selenium 4, the built-in shadow root accessor is an alternative.

- Example: Retrieve the shadow root and then find elements inside it.

3. Use Puppeteer for Network Requests:

Puppeteer is a Node.js library that automates the browser and can intercept network requests. Teams sometimes run it alongside Selenium for scenarios that need control over network conditions or API responses.

4. Headless Browsing:

Running browsers in headless mode (without the graphical interface) speeds up tests, especially when dealing with complex JavaScript or dynamic web features.
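The Shadow DOM workaround can be sketched as a small helper that asks the browser, through JavaScript, to look inside a host element's shadow root. The helper name `find_in_shadow`, the selectors, and the `FakeDriver` are hypothetical; `find_element` and `execute_script` are the real driver methods the helper relies on.

```python
SHADOW_QUERY = "return arguments[0].shadowRoot.querySelector(arguments[1]);"


def find_in_shadow(driver, host_css, inner_css):
    """Locate an element inside a host element's open shadow root via JavaScript."""
    host = driver.find_element("css selector", host_css)
    element = driver.execute_script(SHADOW_QUERY, host, inner_css)
    if element is None:
        raise LookupError(f"{inner_css!r} not found inside {host_css!r}")
    return element


class FakeDriver:
    """Records calls so the sketch runs without a browser."""

    def __init__(self):
        self.scripts = []

    def find_element(self, by, value):
        return f"<host {value}>"

    def execute_script(self, script, *args):
        self.scripts.append((script, args))
        return f"<inner {args[1]}>"


driver = FakeDriver()
button = find_in_shadow(driver, "checkout-widget", "button.pay")
```

With a real driver, `execute_script` returns a regular element that can be clicked or inspected. The script assumes the host has an open shadow root; where the bindings offer a shadow root accessor (`shadow_root` in recent Python releases of Selenium), that may be the simpler route.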

6. Scalability and Parallel Execution Challenges

Running multiple test cases simultaneously can significantly improve efficiency, but setting up Selenium Grid for parallel execution can be complex.

Challenges:

1. Configuration Complexity: Setting up Selenium Grid requires configuring multiple machines (or nodes) that interact with a central hub. This setup can be complex, especially when working with multiple browsers or environments.

2. Network Latency Between Hub and Nodes: When tests are distributed across different machines or environments, network delays can slow down the test execution. Communication between the Selenium Hub and its nodes can introduce additional latency.

3. Cross-Environment Inconsistencies: Running tests on different environments (e.g., different browsers, operating systems) can lead to inconsistencies. The tests might behave differently depending on the environment, making it harder to ensure reliability across all scenarios.

Solutions:

1. Use Cloud-Based Services: Instead of setting up your own Selenium Grid, use cloud-based services like BrowserStack or Sauce Labs. These services provide ready-to-use grids that allow you to run tests on multiple browsers and devices without worrying about setting up and maintaining your own infrastructure.

- Example: BrowserStack and Sauce Labs offer cloud infrastructure that automatically handles network latency and environment management, making parallel test execution easier.

2. Optimize Test Execution Order: To reduce bottlenecks in parallel test execution, carefully plan the test execution order. Prioritize tests that can run independently of others and group them by their dependencies. This minimizes waiting time and ensures that tests don’t block each other.

- Example: Run tests that don’t depend on previous ones in parallel, and sequence dependent tests in a way that they don’t block others from executing.
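One way to picture the ordering advice is to group dependent tests into chains and run the chains side by side. The sketch below does this with Python's standard thread pool. The test functions are hypothetical placeholders, and a real suite would normally delegate this to its runner (for example pytest-xdist or TestNG's parallel settings) rather than hand-roll it.

```python
from concurrent.futures import ThreadPoolExecutor


def run_chain(chain):
    """Run dependent tests in order; stop the chain at the first failure."""
    results = []
    for name, test in chain:
        try:
            test()
            results.append((name, "passed"))
        except AssertionError:
            results.append((name, "failed"))
            break
    return results


def run_in_parallel(chains, workers=4):
    """Run independent chains concurrently; order inside a chain is preserved."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_chain, chains))


# Hypothetical tests: checkout depends on add_to_cart; search is independent.
order = []


def add_to_cart():
    order.append("add_to_cart")


def checkout():
    order.append("checkout")


def search():
    assert False, "search box missing"


chains = [
    [("add_to_cart", add_to_cart), ("checkout", checkout)],
    [("search", search)],
]
report = run_in_parallel(chains)
```

The failing `search` test does not hold up the cart chain, because each chain only blocks the tests that actually depend on it.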

7. Poor CI/CD Integration Without Additional Setup

Integrating Selenium into CI/CD pipelines is crucial for continuous testing, but it requires additional setup and configurations.

Challenges:

1. Managing Browser Drivers on CI Servers: In a CI/CD environment, managing browser drivers (e.g., ChromeDriver, GeckoDriver) on CI servers can be a hassle. Different versions of browsers may require specific driver versions, and updating or maintaining them manually adds complexity.

2. Handling Headless Execution: Headless browsers are essential for running tests in CI/CD pipelines without the need for a graphical interface. However, configuring headless browsers to run properly on CI servers, especially when dealing with different environments, can be tricky.

3. Integrating Test Reports with Build Pipelines: Without proper integration, test results might not be easy to access or analyze. It’s important to have a smooth flow where test reports (e.g., from Selenium tests) can be integrated into build pipelines for easy tracking and troubleshooting.

Solution:

- Use WebDriverManager to manage browser drivers on CI servers automatically.

- Run browsers in headless mode to speed up test execution.

- Integrate test reports like Allure or Extent Reports into the build pipeline for easy access to test results.

- Use Docker containers to ensure consistent test environments across different setups.
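As a rough illustration of the headless point, the helper below picks Chrome flags depending on whether the run is on a CI server. It assumes the CI service sets a `CI` environment variable, which many do but not all; the function name `chrome_arguments` is hypothetical, and the commented lines show how the flags might be applied with Selenium's Python bindings.

```python
import os


def chrome_arguments(env=None):
    """Return Chrome flags, adding headless-friendly ones when running on CI."""
    env = os.environ if env is None else env
    args = ["--window-size=1920,1080"]
    # Assumption: the CI service sets CI=true (or CI=1) for its jobs.
    if env.get("CI", "").lower() in ("1", "true"):
        args += ["--headless=new", "--no-sandbox", "--disable-dev-shm-usage"]
    return args


# With Selenium installed, the flags could be applied roughly like this:
#   from selenium import webdriver
#   options = webdriver.ChromeOptions()
#   for arg in chrome_arguments():
#       options.add_argument(arg)
#   driver = webdriver.Chrome(options=options)
```

Keeping the flag list in one function means local runs still open a visible browser for debugging, while pipeline runs stay headless. The sandbox and shared-memory flags are commonly needed inside Docker containers, but whether a given image needs them is something to verify.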

8. No Built-in Support for API Testing

Selenium is great for UI testing, but it doesn’t support API testing natively. Modern applications require both UI and API tests to ensure everything works properly, so you need to use additional tools for API testing, which can make the testing process more complicated.

Impact of the Limitation:

1. Separate Tools for API Testing:

- You need tools like Postman or RestAssured for API testing, which means using multiple tools for different types of tests. This can create extra work and confusion.

2. Fragmented Testing Process:

- Having to use separate tools for UI and API testing makes managing and tracking results harder. It can lead to inconsistent test strategies and longer debugging times.

Solutions:

1. Use Hybrid Frameworks for Both API and UI Testing:

Combine Selenium with an API testing tool like RestAssured in one testing framework. This allows you to test both the UI and the API together in the same test suite, making everything more streamlined and efficient.

2. Use Tools Like Cypress or Playwright:

Cypress and Playwright are modern testing tools that support both UI and API testing in one framework. These tools simplify testing by allowing you to test the front-end and back-end together, reducing the need for separate tools.

9. Handling Browser Compatibility Issues

Selenium supports various browsers, but browser compatibility issues can lead to discrepancies in how tests behave across different browsers. This is common because browsers like Chrome, Safari, and Edge may render pages differently or handle JavaScript in slightly different ways.

Issues:

1. Scripts Fail on Different Browsers:

- A script that works perfectly in Chrome might fail in Safari or Edge due to differences in how each browser handles certain features or rendering.

2. Cross-Browser Rendering Inconsistencies:

- The appearance of elements or the behavior of interactive features can differ from one browser to another, leading to inconsistent test results.

Solutions:

1. Test Regularly on Multiple Browsers Using Selenium Grid:

- Set up Selenium Grid to run tests across different browsers in parallel. This allows you to test your application in multiple browsers (Chrome, Firefox, Safari, Edge, etc.) and identify browser-specific issues early.

- Selenium Grid lets you distribute tests to different machines or environments, ensuring you cover all target browsers in your testing.

2. Implement Browser-Specific Handling:

- In cases where browsers behave very differently (e.g., handling certain JavaScript features), you can add browser-specific logic in your tests. This ensures that your tests work consistently across browsers, even if some need special handling.
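Browser-specific handling can be kept in one helper instead of being scattered across tests. The sketch below reads the browser name from the driver's capabilities and falls back to a JavaScript click for browsers on a list. The list contents, the helper name `safe_click`, and the fake classes are hypothetical; the rule itself is only an example, not a statement about how any particular browser behaves.

```python
# Hypothetical list; fill it from failures actually observed in your suite.
NEEDS_JS_CLICK = {"safari"}


def safe_click(driver, element):
    """Click natively, except on browsers listed as needing a JavaScript click."""
    browser = str(driver.capabilities.get("browserName", "")).lower()
    if browser in NEEDS_JS_CLICK:
        driver.execute_script("arguments[0].click();", element)
        return "javascript"
    element.click()
    return "native"


class FakeElement:
    def __init__(self):
        self.clicked = False

    def click(self):
        self.clicked = True


class FakeDriver:
    """Stand-in exposing the same `capabilities` dict a real driver provides."""

    def __init__(self, browser_name):
        self.capabilities = {"browserName": browser_name}
        self.scripts = []

    def execute_script(self, script, *args):
        self.scripts.append(script)
```

Because the decision lives in one function, removing a workaround later, once the underlying browser issue is fixed, is a one-line change.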

10. Dependency on Third-Party Tools

To achieve comprehensive automation with Selenium, you often need to integrate it with various third-party tools, which can increase the complexity of your test setup. These tools help extend Selenium’s functionality but also add dependencies that must be managed.

Dependencies Include:

1. Test Frameworks: Tools like TestNG, JUnit, and Pytest are needed to organize and execute tests.

2. Build Tools: Maven and Gradle are often required for dependency management and to build and run tests.

3. Reporting Libraries: Libraries like ExtentReports and Allure are used to generate and manage test reports.

4. Grid Setups or Cloud Services: For parallel execution and scaling tests, tools like Selenium Grid or cloud services like BrowserStack or Sauce Labs are used.

Conclusion

While Selenium remains a powerful automation tool, its limitations require teams to adopt best practices, integrate complementary tools, and continuously optimize their testing strategies. By addressing these challenges with structured approaches, Selenium can still be a valuable asset in modern automation testing workflows. Leading automation testing service providers like Codoid specialize in overcoming these challenges by offering advanced testing solutions, ensuring seamless test automation, and enhancing the overall efficiency of testing strategies.

Frequently Asked Questions

- What are the biggest challenges in Selenium automation testing?

Some of the key challenges include handling dynamic web elements, flaky tests, cross-browser compatibility, pop-ups and alerts, CAPTCHA and OTP handling, test data management, and integrating tests with CI/CD pipelines.

- How do you handle dynamic elements in Selenium automation?

Use dynamic XPath or CSS selectors, explicit waits (WebDriverWait), and JavaScript Executor to interact with elements that frequently change.

- What are the best practices for cross-browser testing in Selenium?

Use Selenium Grid or cloud-based platforms like BrowserStack or Sauce Labs to run tests on different browsers. Also, regularly update WebDriver versions to maintain compatibility.

- Can Selenium automate CAPTCHA and OTP verification?

Selenium cannot directly automate CAPTCHA or OTP, but workarounds include disabling CAPTCHA in test environments, using third-party API services, or fetching OTPs from the database.

- How do you manage test data in Selenium automation?

Use external data sources like CSV, Excel, or databases. Implement data-driven testing with frameworks like TestNG or JUnit, and ensure test data is refreshed periodically.

- What are the essential tools to enhance Selenium automation testing?

Popular tools that complement Selenium include TestNG, JUnit, Selenium Grid, BrowserStack, Sauce Labs, Jenkins, and Allure for test reporting and execution management.

by Rajesh K | Mar 28, 2025 | Performance Testing, Blog, Latest Post |

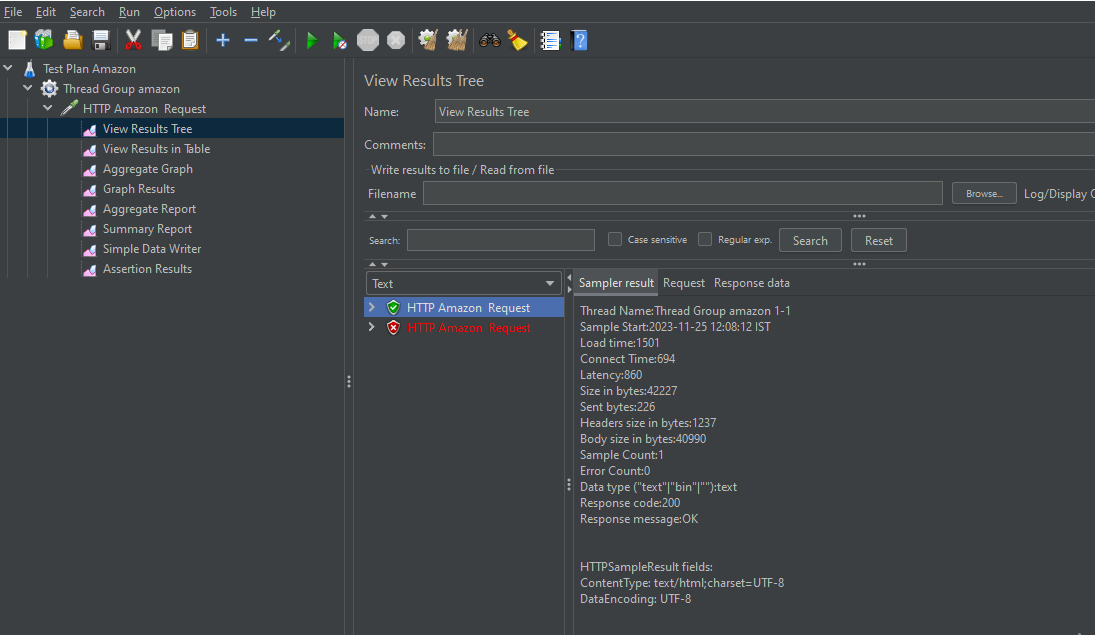

Before we talk about listeners in JMeter, let’s first understand what they are and why they’re important in performance testing. JMeter is a popular tool that helps you test how well a website or app works when lots of people are using it at the same time. For example, you can use JMeter to simulate hundreds or thousands of users using your application all at once. It sends requests to your site and keeps track of how it responds. But there’s one important thing to know. JMeter collects all this test data in the background, and you can’t see it directly. That’s where listeners come in. Listeners are like helpers that let you see and understand what happened during the test. They show the results in ways that are easy to read, like simple tables, graphs, or even just text. This makes it easier to analyze how your website performed, spot any issues, and improve things before real users face problems.

In this blog, we’ll look at how JMeter listeners work, how to use them effectively, and some tips to make your performance testing smoother even if you’re new to it. Let’s start by seeing the list of JMeter Listeners and what they show.

List of JMeter Listeners

Listeners display test results in various formats. Below is a list of commonly used listeners in JMeter:

- View Results Tree – Displays detailed request and response logs.

- View Results in Table – Shows response data in tabular format.

- Aggregate Graph – Visualizes aggregate data trends.

- Summary Report – Provides a consolidated summary table with one row per differently named request.

- View Results in Graph – Displays response times graphically.

- Graph Results – Presents statistical data in graphical format.

- Aggregate Report – Summarizes test results statistically.

- Backend Listener – Integrates with external monitoring tools.

- Comparison Assertion Visualizer – Compares response data against assertions.

- Generate Summary Results – Outputs summarized test data.

- JSR223 Listener – Allows advanced scripting for result processing.

- Response Time Graph – Displays response time variations over time.

- Save Responses to a file – Exports responses for further analysis.

- Assertion Results – Displays assertion pass/fail details.

- Simple Data Writer – Writes raw test results to a file.

- Mailer Visualizer – Sends email alerts when the number of failed responses passes a configured limit.

- BeanShell Listener – Enables custom script execution during testing.

Preparing the JMeter Test Script Before Using Listeners

Before adding listeners, it is crucial to have a properly structured JMeter test script. Follow these steps to prepare your test script:

1. Create a Test Plan – This serves as the foundation for your test execution.

2. Add a Thread Group – Defines the number of virtual users (threads), ramp-up period, and loop count.

3. Include Samplers – These define the actual requests (e.g., HTTP Request, JDBC Request) sent to the server.

4. Add Config Elements – Such as HTTP Header Manager, CSV Data Set Config, or User Defined Variables.

5. Insert Timers (if required) – Used to simulate real user behavior and avoid server overload.

6. Use Assertions – Validate the correctness of the response data.

Once the test script is ready and verified, we can proceed to add listeners to analyze the test results effectively.

Adding Listeners to a JMeter Test Script

Adding a listener to a test script is a simple process. Follow the steps below.

Steps to Add a Listener:

1. Open JMeter and load your test plan.

2. Right-click on the Thread Group (or any desired element) in the Test Plan.

3. Navigate to “Add” → “Listener”.

4. Select the desired listener from the list (e.g., “View Results Tree” or “Summary Report”).

5. The listener will now be added to the Test Plan and will collect test execution data.

6. Run the test and observe the results in the listener.

Key Point:

As stated earlier, a listener is an element in JMeter that collects, processes, and displays performance test results. It provides insights into how test scripts behave under load and helps identify performance bottlenecks.

The key point to note is that all listeners record the same raw performance data; they differ only in how they present it. Some display data in graphical formats, while others provide structured tables or raw logs. Now let’s take a more detailed look at the most commonly used JMeter Listeners.

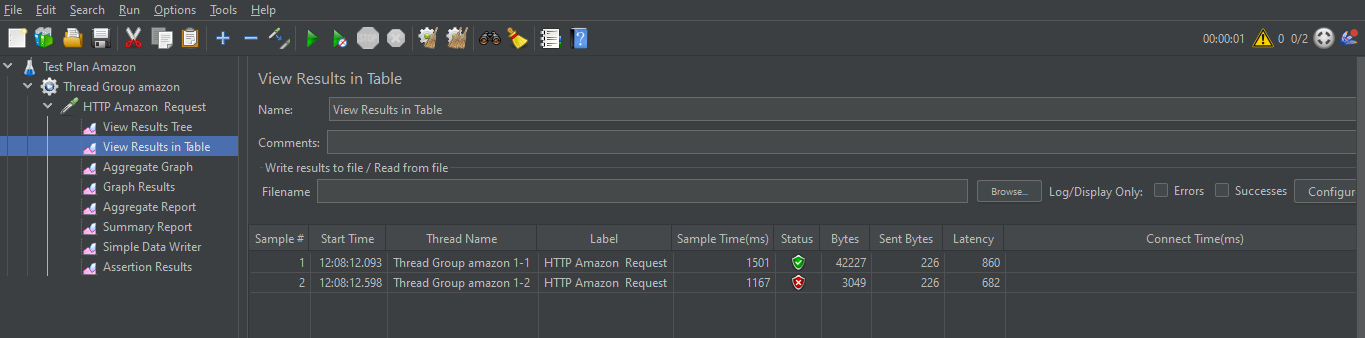

Commonly Used JMeter Listeners

Among all the JMeter listeners we mentioned earlier, we have picked out the most commonly used ones you’ll definitely have to know. We have chosen this based on our experience of delivering performance testing services addressing the needs of numerous clients. To make things easier for you, we have also specified the best use cases for these JMeter listeners so you can use them effectively.

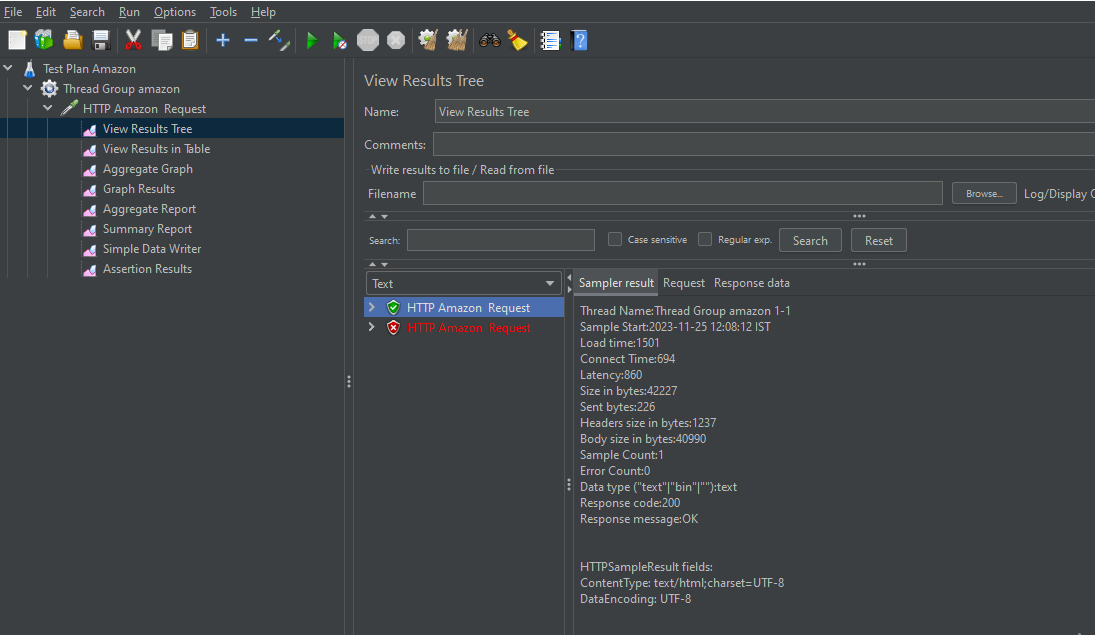

1. View Results Tree

The View Results Tree listener is one of the most valuable tools for debugging test scripts. It allows testers to inspect the request and response data in various formats, such as plain text, XML, JSON, and HTML. This listener provides detailed insights into response codes, headers, and bodies, making it ideal for debugging API requests and analyzing server responses. However, it consumes a significant amount of memory since it stores each response, which makes it unsuitable for large-scale performance testing.

Best Use Case:

- Debugging test scripts.

- Verifying response correctness before running large-scale tests.

Performance Impact:

- Consumes high memory if used during large-scale testing.

- Not recommended for high-load performance tests.

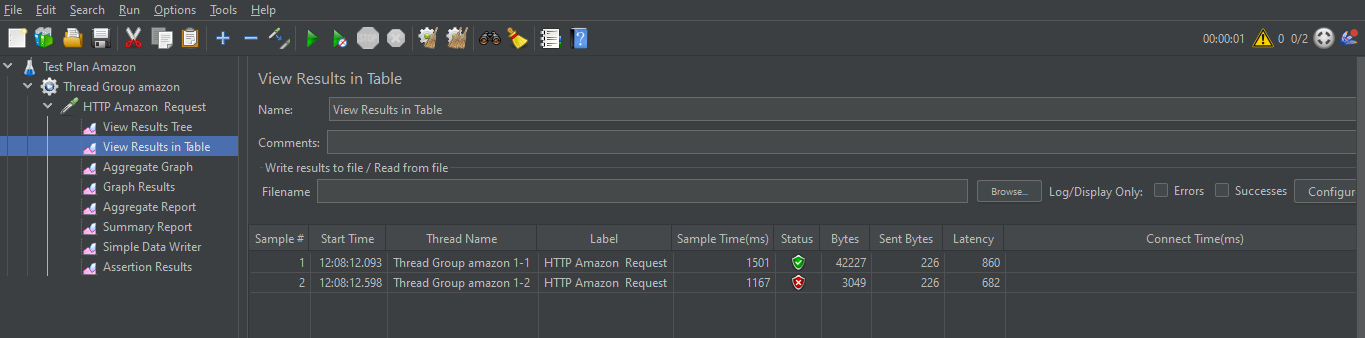

2. View Results in Table

The View Results in Table listener organizes response data in a structured tabular format. It captures essential metrics like elapsed time, latency, response code, and thread name, helping testers analyze the overall test performance. While this listener provides a quick overview of test executions, its reliance on memory storage limits its efficiency when dealing with high loads. Testers should use it selectively for small to medium test runs.

Best Use Case:

- Ideal for small-scale performance analysis.

- Useful for manually checking response trends.

Performance Impact:

- Moderate impact on system performance.

- Can be used in moderate-scale test executions.

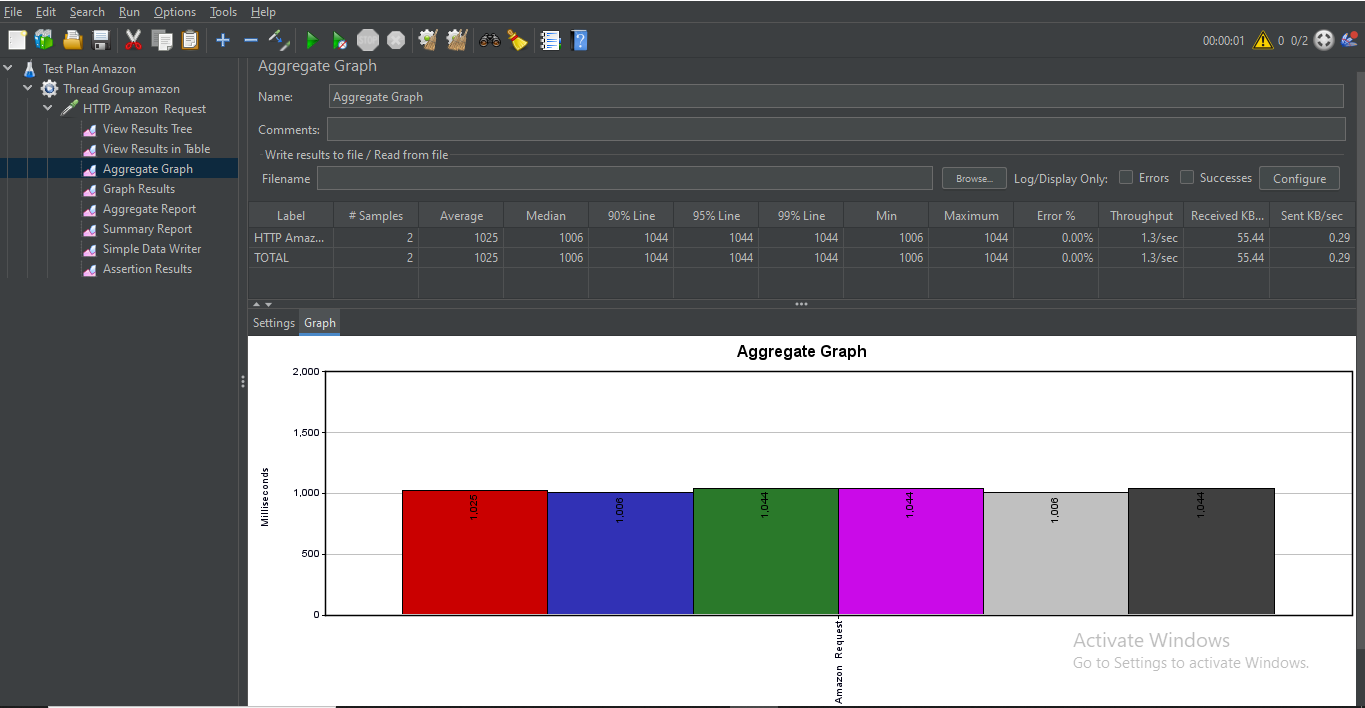

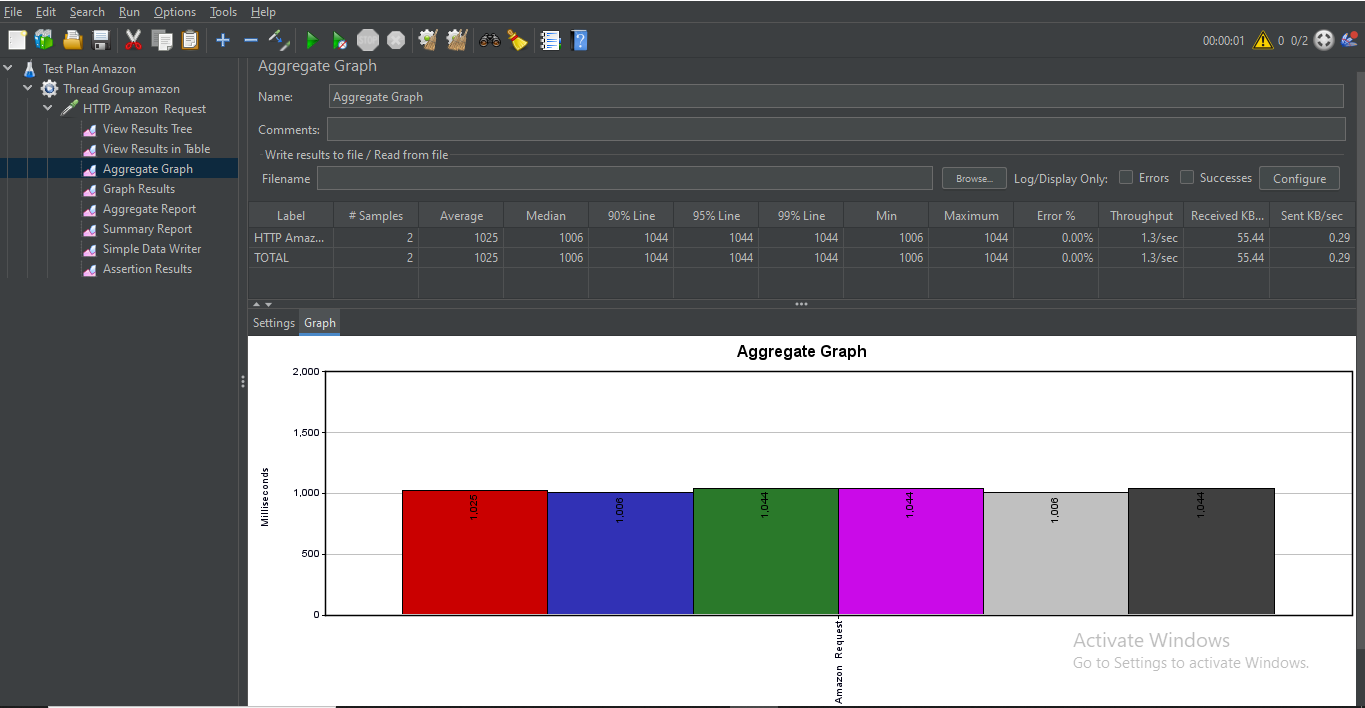

3. Aggregate Graph

The Aggregate Graph listener processes test data and generates statistical summaries, including average response time, median, 90th percentile, error rate, and throughput. This listener is useful for trend analysis as it provides visual representations of performance metrics. Although it uses buffered data processing to optimize memory usage, rendering graphical reports increases CPU usage, making it better suited for mid-range performance testing rather than large-scale tests.

Best Use Case:

- Useful for performance trend analysis.

- Ideal for reporting and visual representation of results.

Performance Impact:

- Graph rendering requires additional CPU resources.

- Suitable for medium-scale test executions.
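To show what the aggregate statistics mean, the sketch below computes them by hand for a handful of response times. The sample values are made up, and the percentile uses a simple nearest-rank rule, so the numbers JMeter reports for the same data may differ slightly.

```python
import math
import statistics


def percentile(values, percent):
    """Nearest-rank percentile: the value at or below which `percent`% of samples fall."""
    ordered = sorted(values)
    rank = max(1, math.ceil(percent * len(ordered) / 100))
    return ordered[rank - 1]


def aggregate(elapsed_ms, errors, duration_s):
    """Summarize one sampler's results into aggregate-style metrics."""
    return {
        "samples": len(elapsed_ms),
        "average": statistics.mean(elapsed_ms),
        "median": statistics.median(elapsed_ms),
        "p90": percentile(elapsed_ms, 90),
        "error_pct": 100 * errors / len(elapsed_ms),
        "throughput_per_s": len(elapsed_ms) / duration_s,
    }


# Hypothetical response times in milliseconds for ten requests over five seconds.
row = aggregate(
    [120, 130, 110, 400, 125, 135, 115, 140, 128, 900],
    errors=1,
    duration_s=5,
)
```

In this made-up data the average is pulled up by two slow responses while the median stays close to the typical request, which is why the median and 90th percentile are worth reading alongside the average.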

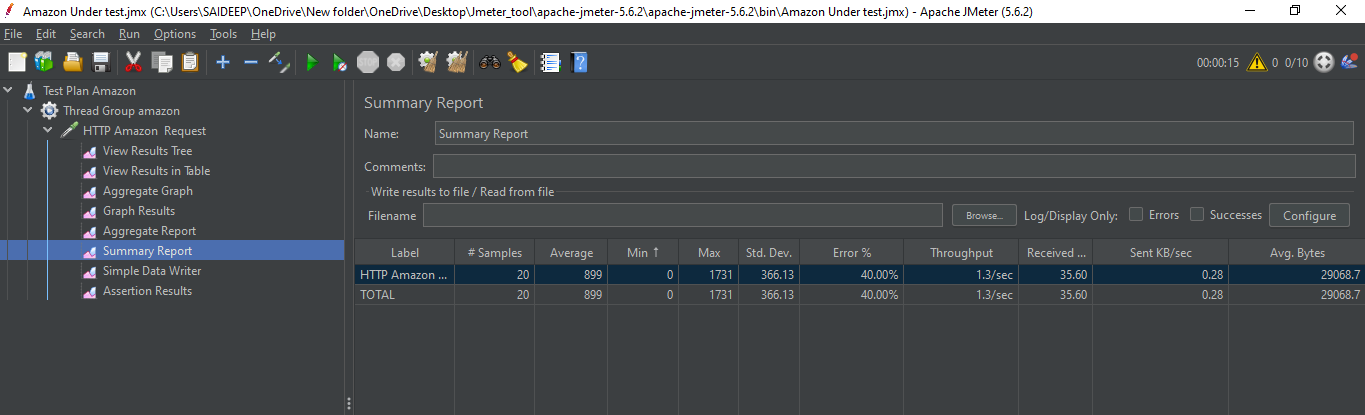

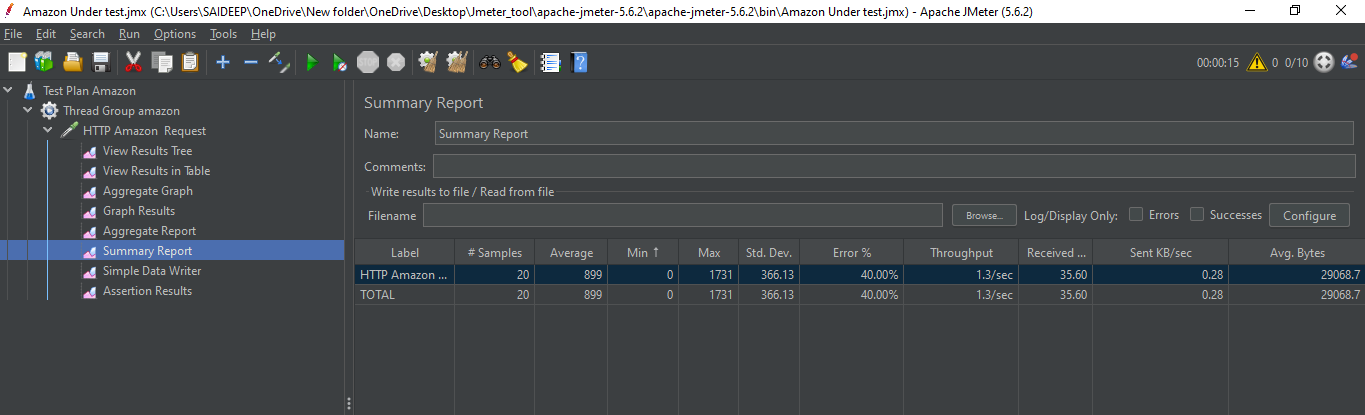

4. Summary Report

The Summary Report listener is lightweight and efficient, designed for analyzing test results without consuming excessive memory. It aggregates key performance metrics such as total requests, average response time, minimum and maximum response time, and error percentage. Since it does not store individual request-response data, it is an excellent choice for high-load performance testing, where minimal memory overhead is crucial for smooth test execution.

Best Use Case:

- Best suited for large-scale performance testing.

- Ideal for real-time monitoring of test execution.

Performance Impact:

- Minimal impact, suitable for large test executions.

- Preferred over View Results Tree for large test plans.
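The same summary metrics can be rebuilt outside JMeter from a saved results file, which is handy when a test runs without the GUI. The sketch below assumes results were saved as CSV with a header row and uses only a subset of the default columns (`elapsed`, `label`, `success`); the sample rows and the function name `summarize` are hypothetical.

```python
import csv
import io
from collections import defaultdict


def summarize(jtl_text):
    """Build Summary Report style rows (one per label) from JTL CSV text."""
    samples = defaultdict(list)
    failures = defaultdict(int)
    for row in csv.DictReader(io.StringIO(jtl_text)):
        samples[row["label"]].append(int(row["elapsed"]))
        if row["success"].strip().lower() != "true":
            failures[row["label"]] += 1
    return {
        label: {
            "samples": len(times),
            "average": sum(times) / len(times),
            "min": min(times),
            "max": max(times),
            "error_pct": 100 * failures[label] / len(times),
        }
        for label, times in samples.items()
    }


# Hypothetical results using a subset of JMeter's default CSV columns.
JTL = """timeStamp,elapsed,label,responseCode,success
1711600000000,120,Home,200,true
1711600000500,180,Home,200,true
1711600001000,300,Login,500,false
1711600001500,100,Login,200,true
"""
report = summarize(JTL)
```

Each label gets its own row, mirroring how the Summary Report groups samples by request name.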

Conclusion

JMeter listeners are essential for capturing and analyzing performance test data. Understanding their technical implementation helps testers choose the right listeners for their needs:

- For debugging: View Results Tree.

- For structured reporting: View Results in Table or Summary Report.

- For trend visualization: Graph Results and Aggregate Graph.

- For real-time monitoring: Backend Listener.

Choosing the right listener ensures efficient test execution, optimizes resource utilization, and provides meaningful performance insights.

Frequently Asked Questions

- Which listener should I use for large-scale load testing?

For large-scale load testing, use the Summary Report or Backend Listener since they consume less memory and efficiently handle high user loads.

- How do I save JMeter listener results?

You can save listener results by enabling the Save results to a file option in listeners like View Results Tree or by exporting reports from Summary Report in CSV/XML format.

- Can I customize JMeter listeners?

Yes, JMeter allows you to develop custom listeners using Java by extending the AbstractVisualizer or GraphListener classes to meet specific reporting needs.

- What are the limitations of JMeter listeners?

Some listeners, like View Results Tree, consume high memory, impacting performance. Additionally, listeners process test results within JMeter, making them unsuitable for extensive real-time reporting in high-load tests.

- How do I integrate JMeter listeners with third-party tools?

You can integrate JMeter with tools like Grafana, InfluxDB, and Prometheus using the Backend Listener, which sends test metrics to external monitoring systems for real-time visualization.

- How do JMeter Listeners help in performance testing?

JMeter Listeners help capture, process, and visualize test execution results, allowing testers to analyze response times, error rates, and system performance.

by Hannah Rivera | Mar 26, 2025 | Automation Testing, Blog, Latest Post |

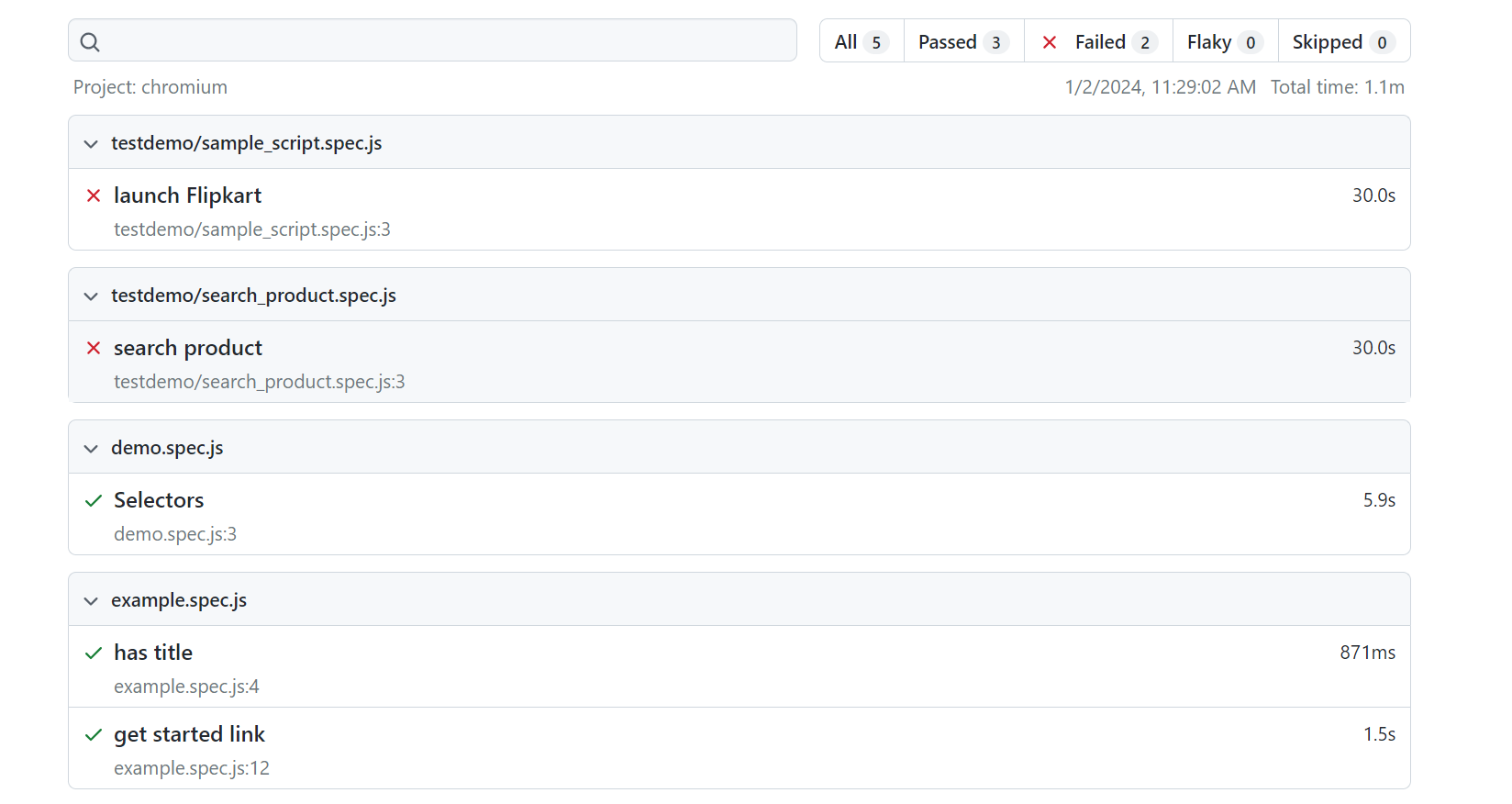

Reporting is a crucial aspect that helps QA engineers and developers analyze test execution, identify failures, and maintain software quality. If you are using Playwright as your automation testing tool, your test automation reports will play a significant role in providing detailed insights into test performance, making debugging more efficient and test management seamless. Playwright has gained popularity among tech professionals due to its robust reporting capabilities and powerful debugging tools. It offers built-in reporters such as List, Line, Dot, HTML, JSON, and JUnit, allowing users to generate reports based on their specific needs. Additionally, Playwright supports third-party integrations like Allure, enabling more interactive and visually appealing test reports. With features like Playwright Trace Viewer, testers can analyze step-by-step execution details, making troubleshooting faster and more effective.

So in this blog, we will be exploring how you can leverage Playwright’s reporting options to ensure a streamlined testing workflow, enhance debugging efficiency, and maintain high-quality software releases. Let’s start with the built-in reporting options in Playwright, and then move to the third-party integrations.

Built-In Reporting Options in Playwright

There are numerous built-in Playwright reporting options to help teams analyze test results efficiently. These reporters vary in their level of detail, from minimal console outputs to detailed HTML and JSON reports. Below is a breakdown of each built-in reporting format along with sample outputs.

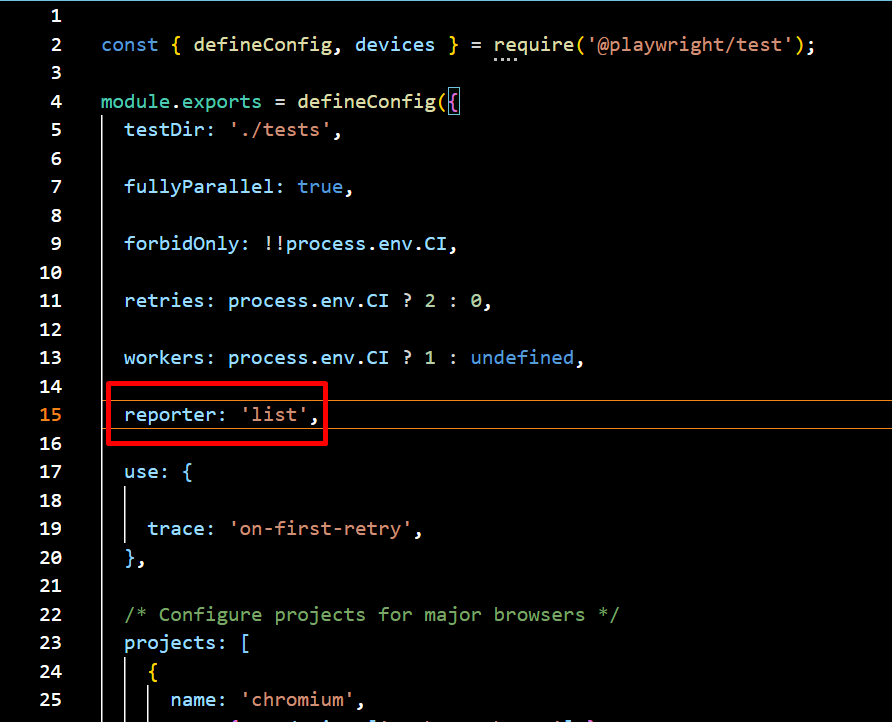

1. List Reporter

The List Reporter prints a line of output for each test, providing a clear and structured summary of test execution. It offers a readable format that helps quickly identify which tests have passed or failed. This makes it particularly useful for local debugging and development environments, allowing developers to analyze test results and troubleshoot issues efficiently.

npx playwright test --reporter=list

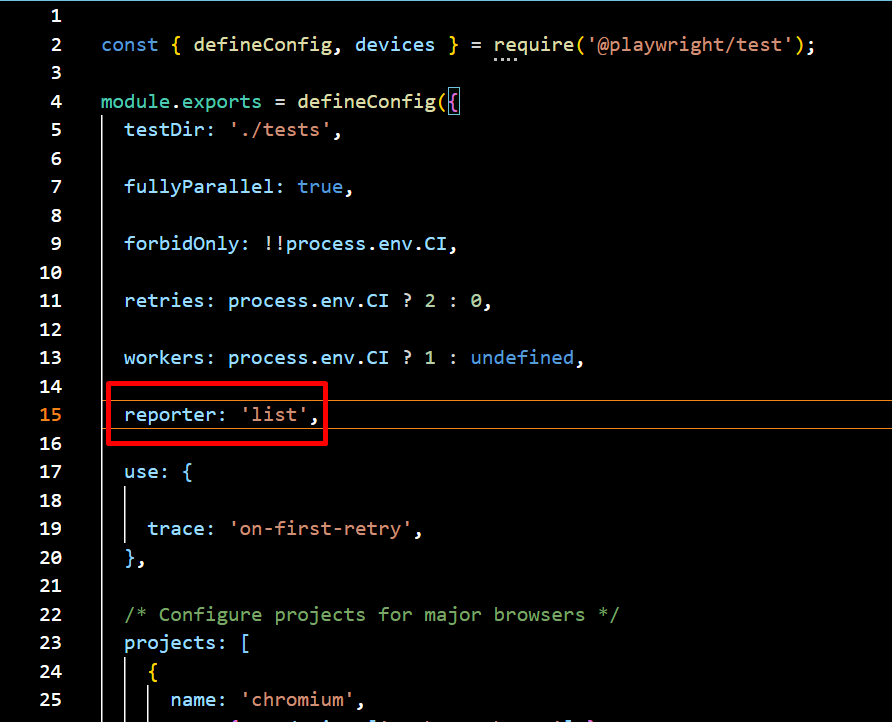

Alternatively, open the Playwright configuration file (playwright.config.js) and set the reporter option to list so that every run uses this detailed, per-test output.

Sample Output:

✓ test1.spec.js: Test A (Passed)

✗ test1.spec.js: Test B (Failed)

→ Expected value to be 'X', but received 'Y'

✓ test2.spec.js: Test C (Passed)

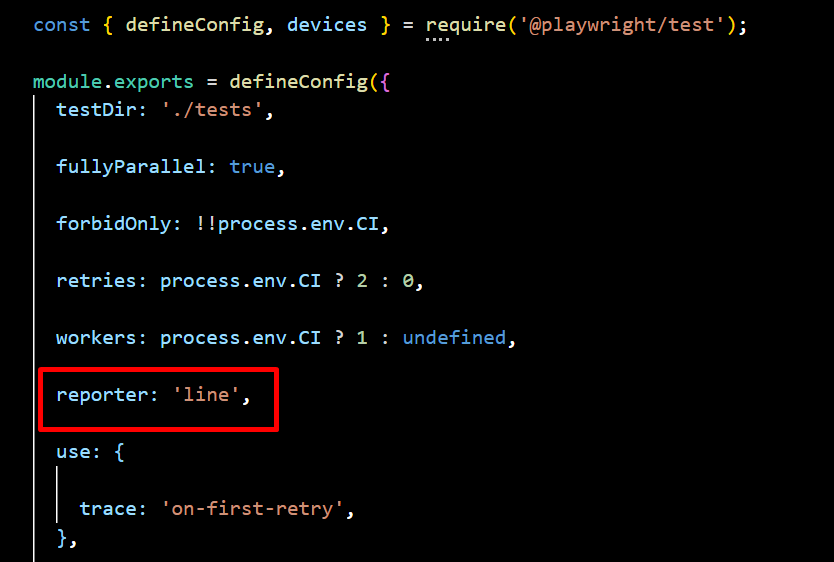

2. Line Reporter

The Line Reporter is a compact version of the List Reporter, displaying test execution results in a single line. Its minimal and concise output makes it ideal for large test suites, providing real-time updates without cluttering the terminal. This format is best suited for quick debugging and monitoring test execution progress efficiently.

npx playwright test --reporter=line

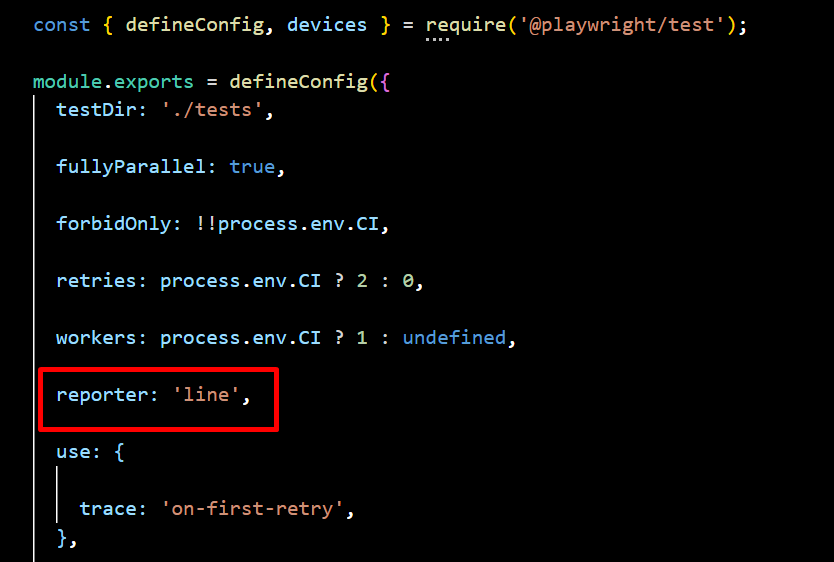

Alternatively, open the Playwright configuration file (playwright.config.js) and set the reporter option to line so that every run uses this compact output.

Sample Output:

3. Dot Reporter

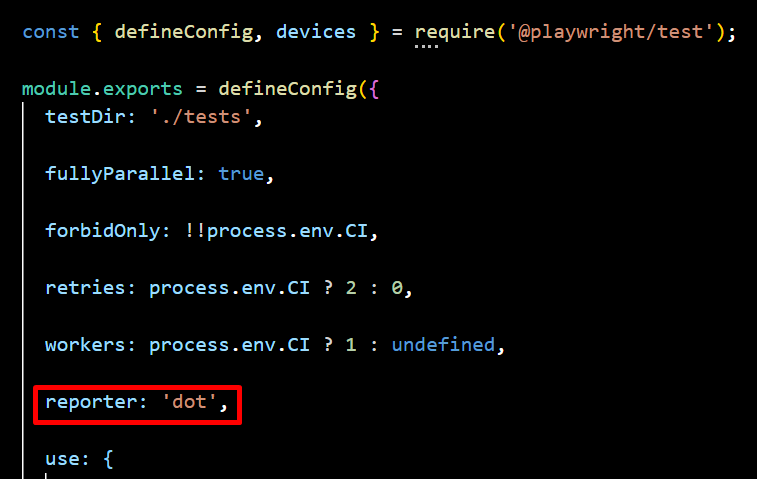

The Dot Reporter provides a minimalistic output, using a single character for each test—dots (.) for passing tests and “F” for failures. Its compact and simple format reduces log verbosity, making it ideal for CI/CD environments. This approach helps track execution progress efficiently without cluttering terminal logs, ensuring a clean and streamlined testing experience.

npx playwright test --reporter=dot

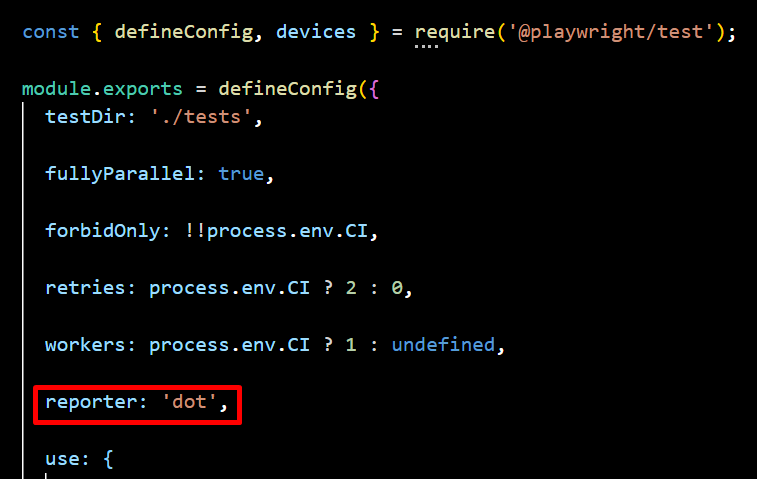

Alternatively, open the Playwright configuration file (playwright.config.js) and set the reporter option to dot so that every run uses this minimal output.

Sample Output:

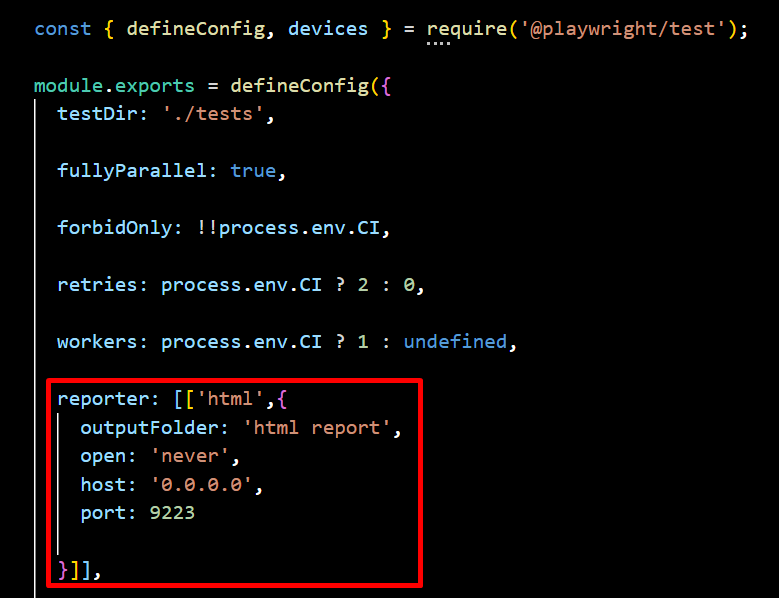

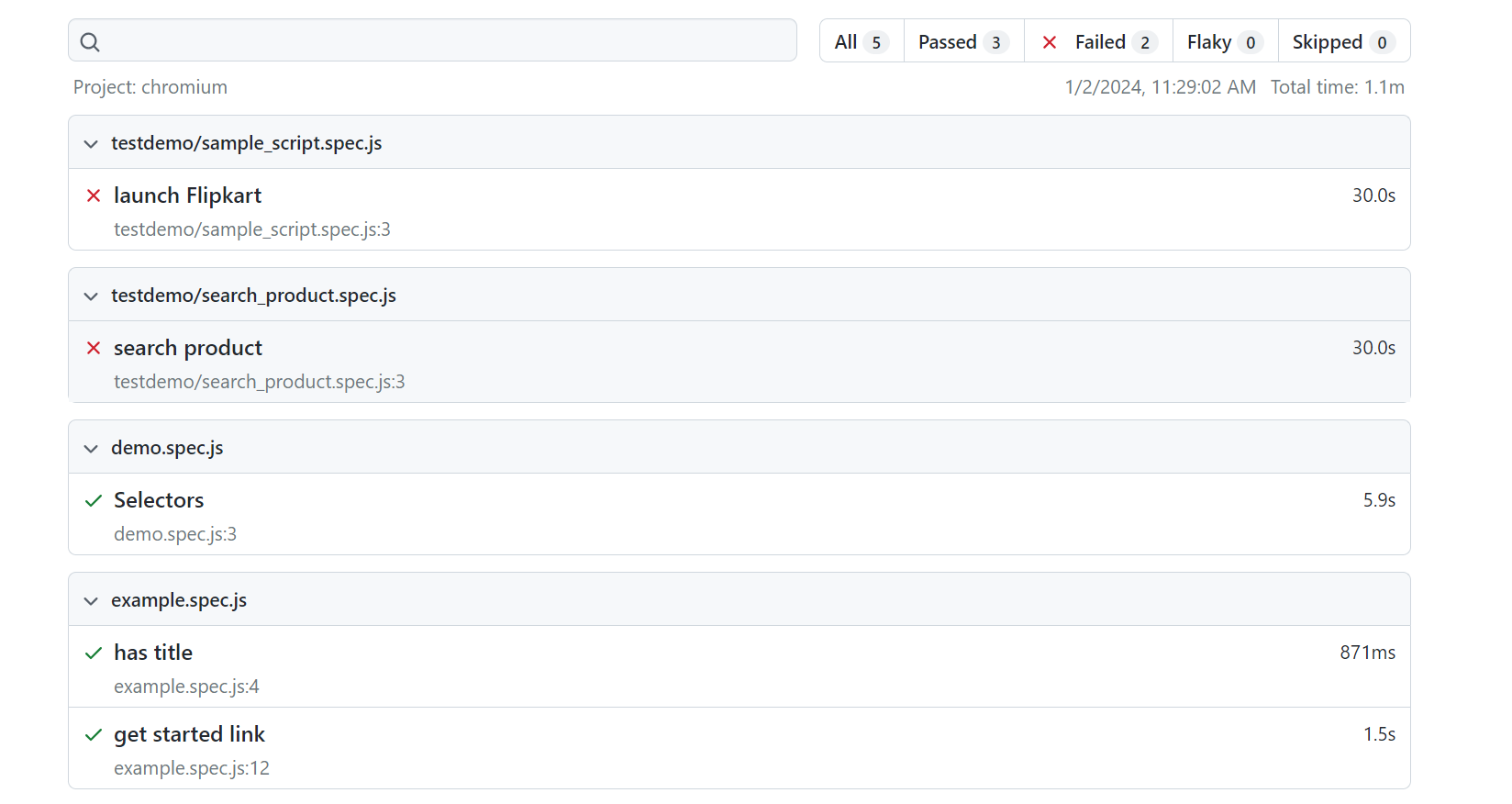

4. HTML Reporter

The HTML Reporter generates an interactive, web-based report that can be opened in a browser, providing a visually rich and easy-to-read summary of test execution. It includes detailed logs, screenshots, and test results, making analysis more intuitive. With built-in filtering and navigation features, it allows users to efficiently explore test outcomes. Additionally, its shareable format facilitates collaborative debugging across teams.

npx playwright test --reporter=html

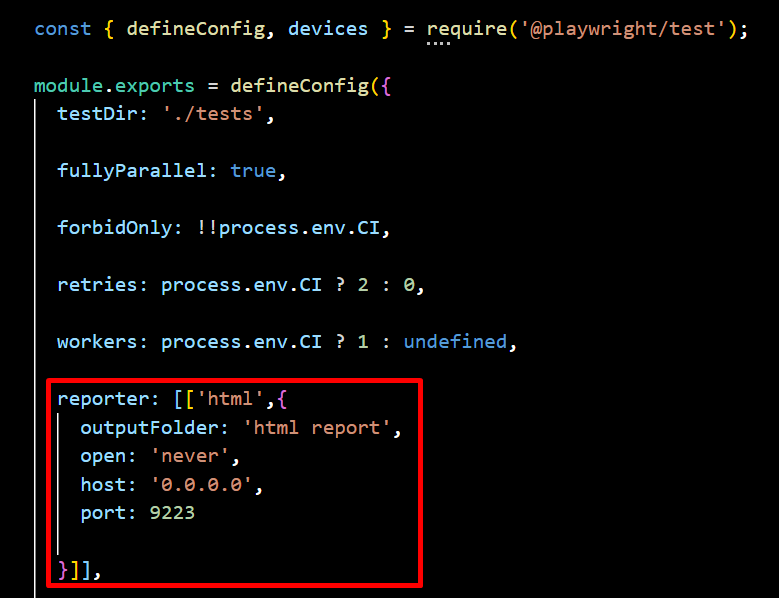

By default, the HTML report opens automatically in the browser only when some tests have failed. The open property in the Playwright configuration lets you change this behavior; the accepted values are always, never, and on-failure (the default). If set to never, the report will not open automatically.

To control where the report is served, use the host property to set the address to bind to; 0.0.0.0 makes the report reachable on all network interfaces rather than only on localhost. The port property sets the port number; Playwright’s documentation uses 9223 in its example, while the default is 9323.

You can also customize the HTML report’s output location in the Playwright configuration file by specifying the desired folder. For example, if you want to store the report in the html-report directory, you can set the output path accordingly.
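As a rough sketch, the HTML reporter options described above could be combined like this in playwright.config.js. The html-report folder name comes from the example in the text; the port value is an arbitrary illustration, not a recommended setting.

```javascript
// playwright.config.js (sketch, exported as a plain config object)
/** @type {import('@playwright/test').PlaywrightTestConfig} */
const config = {
  reporter: [
    ['html', {
      outputFolder: 'html-report', // where the report files are written
      open: 'never',               // do not open the report automatically
      host: '0.0.0.0',             // address used when serving the report
      port: 9400,                  // illustrative value; any free port works
    }],
  ],
};

module.exports = config;
```

With open set to never, the report can still be viewed on demand with npx playwright show-report html-report.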

Sample Output:

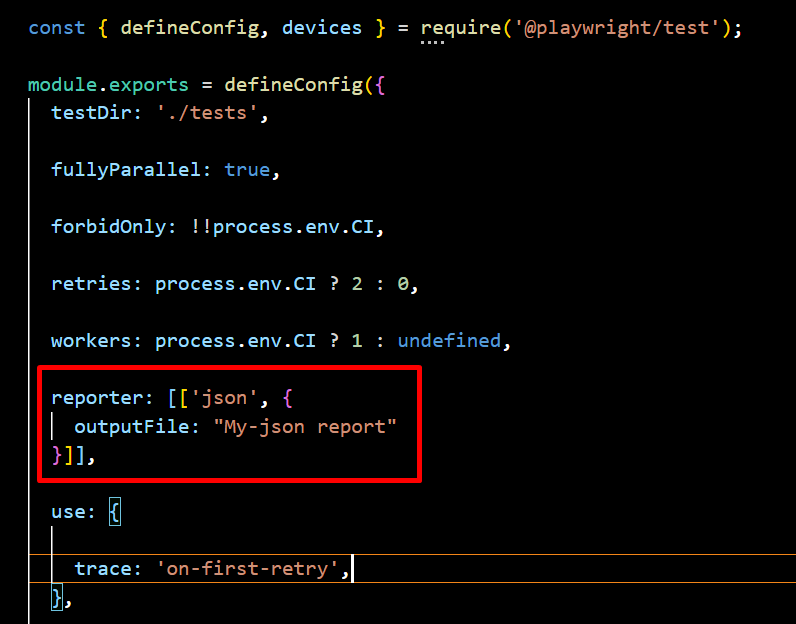

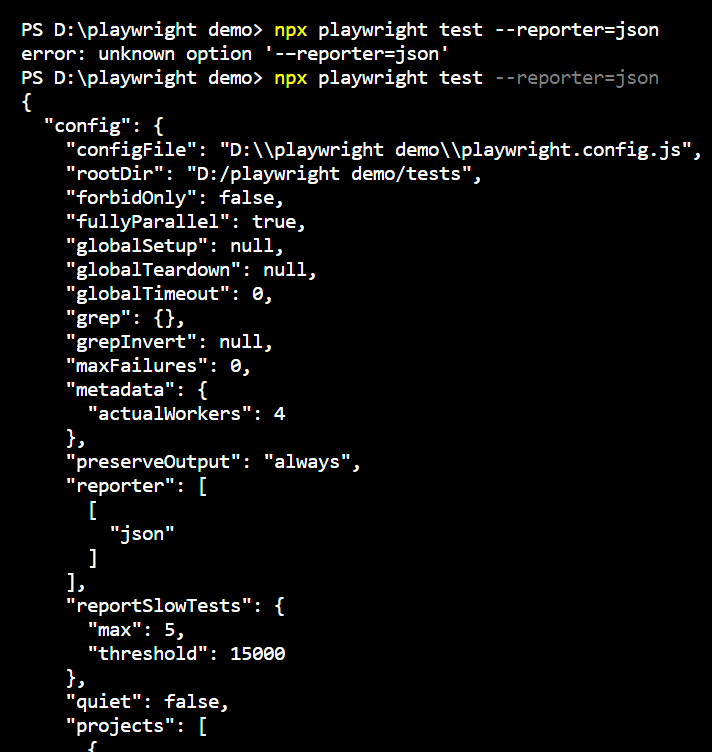

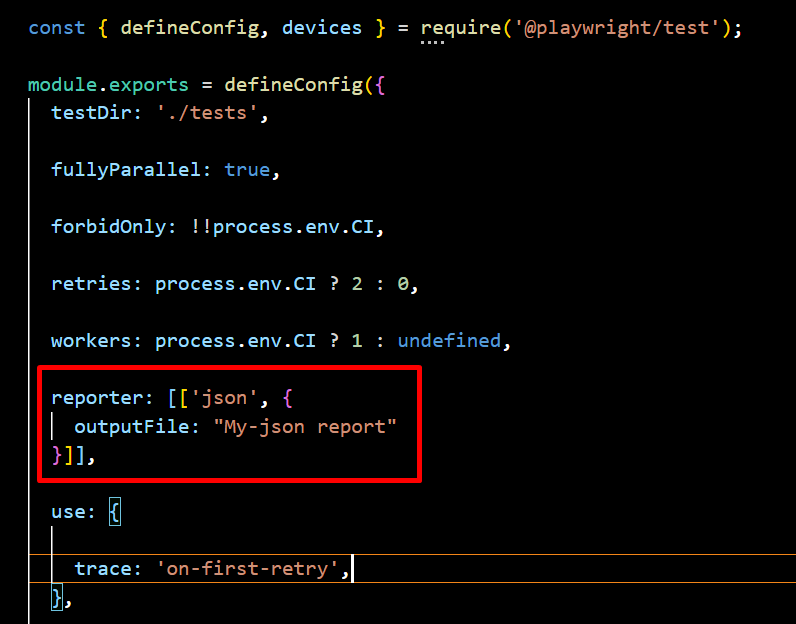

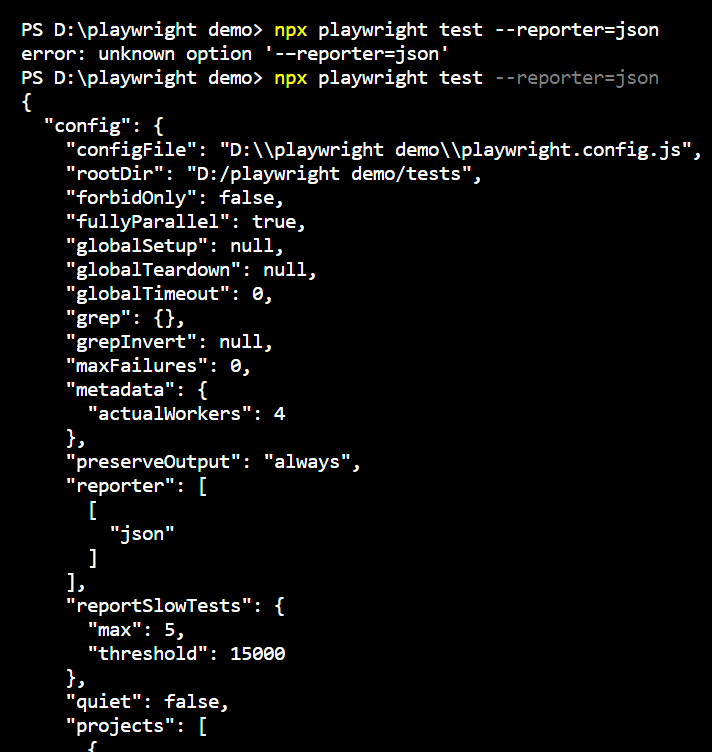

5. JSON Reporter

The JSON Reporter outputs test results in a machine-readable JSON format, making it ideal for data analysis, automation, and integration with dashboards or external analytics tools. It enables seamless test monitoring, log storage, and auditing, providing a structured way to track and analyze test execution.

npx playwright test --reporter=json

By default, the JSON is printed to the terminal. To write it to a file instead, open the playwright.config.js file and specify an output file for the JSON reporter.
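A minimal sketch of that setting, assuming a hypothetical output path of test-results/results.json:

```javascript
// playwright.config.js (sketch, exported as a plain config object)
/** @type {import('@playwright/test').PlaywrightTestConfig} */
const config = {
  reporter: [
    // Assumption: the output path is only an example; pick any location.
    ['json', { outputFile: 'test-results/results.json' }],
  ],
};

module.exports = config;
```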

Sample JSON Report Output:

{
  "status": "completed",
  "tests": [
    {
      "name": "Test A",
      "status": "passed",
      "duration": 120
    },
    {
      "name": "Test B",
      "status": "failed",
      "error": "Expected value to be 'X', but received 'Y'"
    }
  ]
}

You can generate and view the report in the terminal using this method.

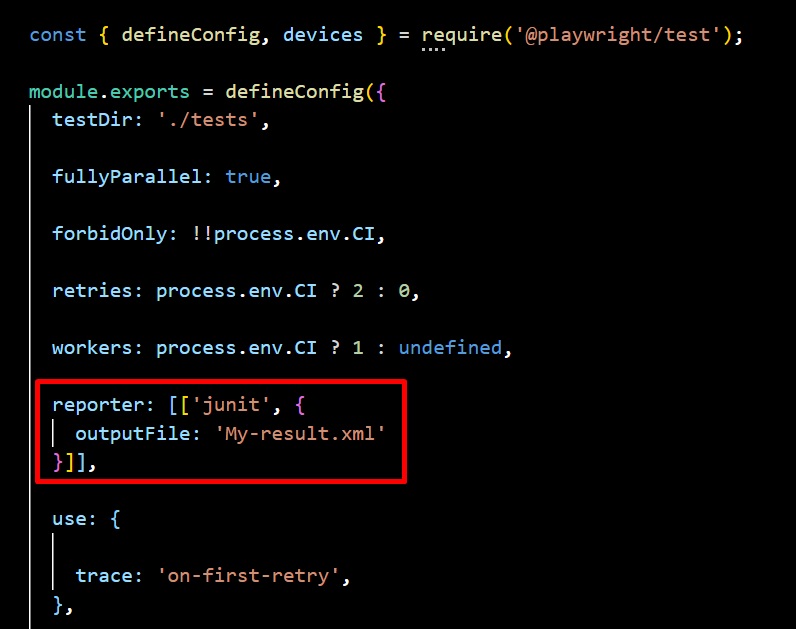

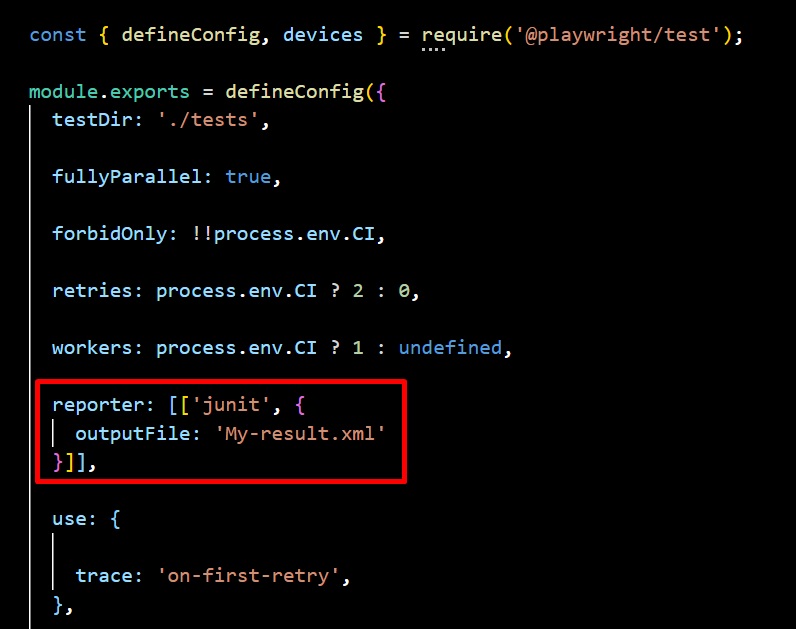
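To illustrate why a machine-readable report is useful, here is a small sketch that summarizes a report object. It assumes the simplified shape of the sample output above (a top-level tests array with name, status, and error fields); a real Playwright JSON report is nested more deeply, so the field access would need adapting. The summarize function name is hypothetical.

```javascript
// Hypothetical helper: counts tests per status and collects failure messages.
// Assumes the simplified report shape from the sample above.
function summarize(report) {
  const counts = {};
  for (const t of report.tests) {
    counts[t.status] = (counts[t.status] || 0) + 1;
  }
  const failures = report.tests
    .filter((t) => t.status === 'failed')
    .map((t) => `${t.name}: ${t.error}`);
  return { counts, failures };
}

// Same data as the sample report shown earlier.
const sample = {
  status: 'completed',
  tests: [
    { name: 'Test A', status: 'passed', duration: 120 },
    { name: 'Test B', status: 'failed', error: "Expected value to be 'X', but received 'Y'" },
  ],
};

console.log(summarize(sample));
```

A script like this could feed a dashboard or fail a build when the failures list is not empty.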

6. JUnit Reporter

The JUnit Reporter generates XML reports that seamlessly integrate with CI/CD tools like Jenkins and GitLab CI for tracking test results. It provides structured test execution data for automated reporting, ensuring efficient monitoring and analysis. This makes it ideal for enterprise testing pipelines, enabling smooth integration and streamlined test management.

npx playwright test --reporter=junit

By default, the XML is printed to the terminal. To write it to a file instead, open the playwright.config.js file and specify an output file for the JUnit reporter.
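Several reporters can be combined in one run, which is handy when a CI server consumes the XML while people read the terminal. The sketch below assumes a hypothetical output path of test-results/junit.xml.

```javascript
// playwright.config.js (sketch, exported as a plain config object)
/** @type {import('@playwright/test').PlaywrightTestConfig} */
const config = {
  reporter: [
    ['list'], // human-readable progress in the terminal
    // Assumption: the output path is only an example.
    ['junit', { outputFile: 'test-results/junit.xml' }],
  ],
};

module.exports = config;
```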

Sample JUnit XML Output:

<testsuite name="Playwright Tests">
  <testcase classname="test1.spec.js" name="Test A" time="0.12"/>
  <testcase classname="test1.spec.js" name="Test B">
    <failure message="Expected value to be 'X', but received 'Y'"/>
  </testcase>
</testsuite>

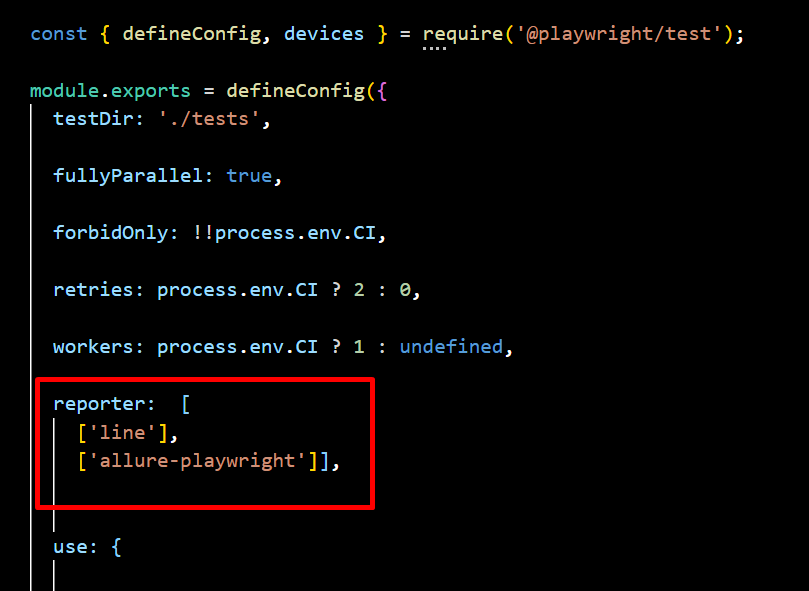

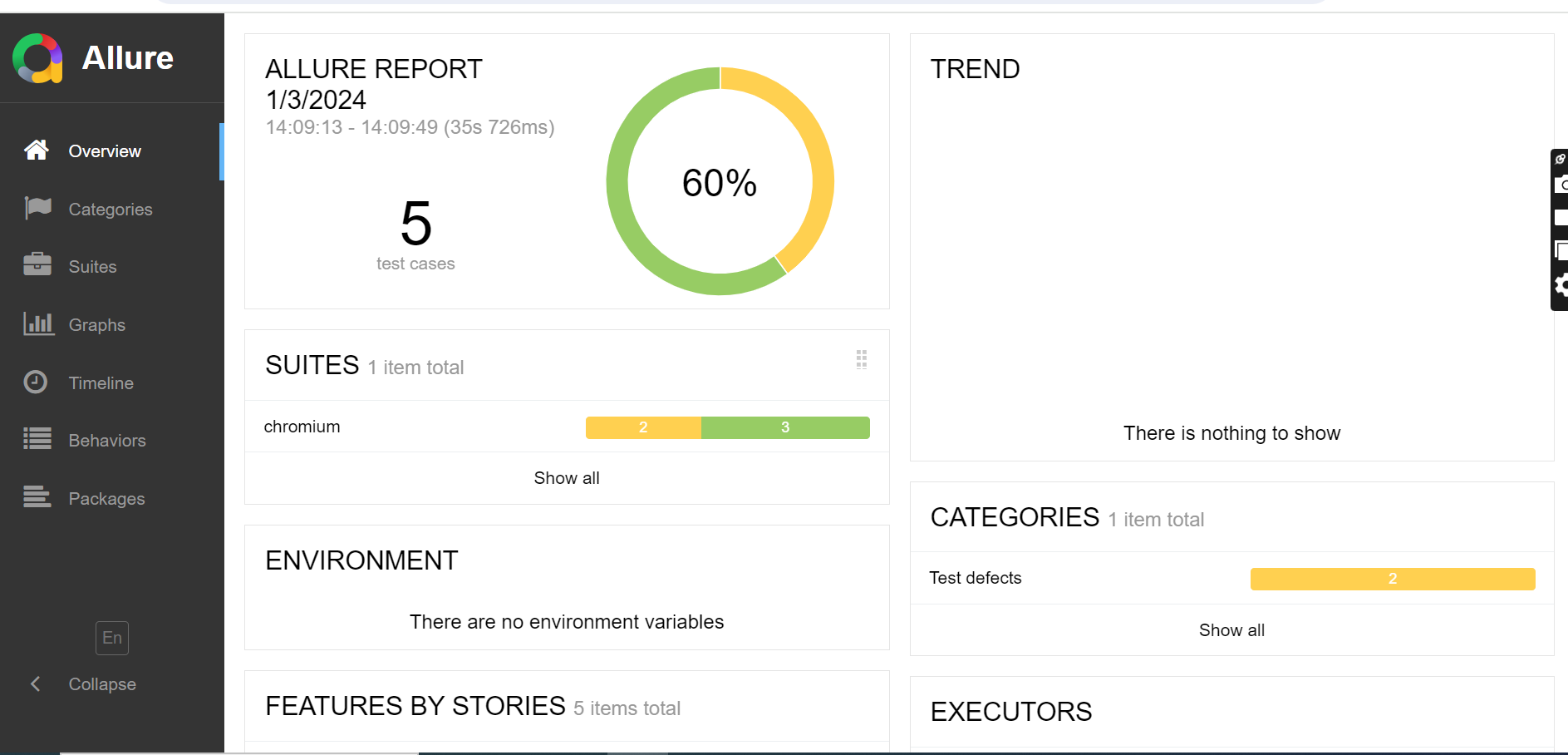

Playwright’s Third-Party Reports

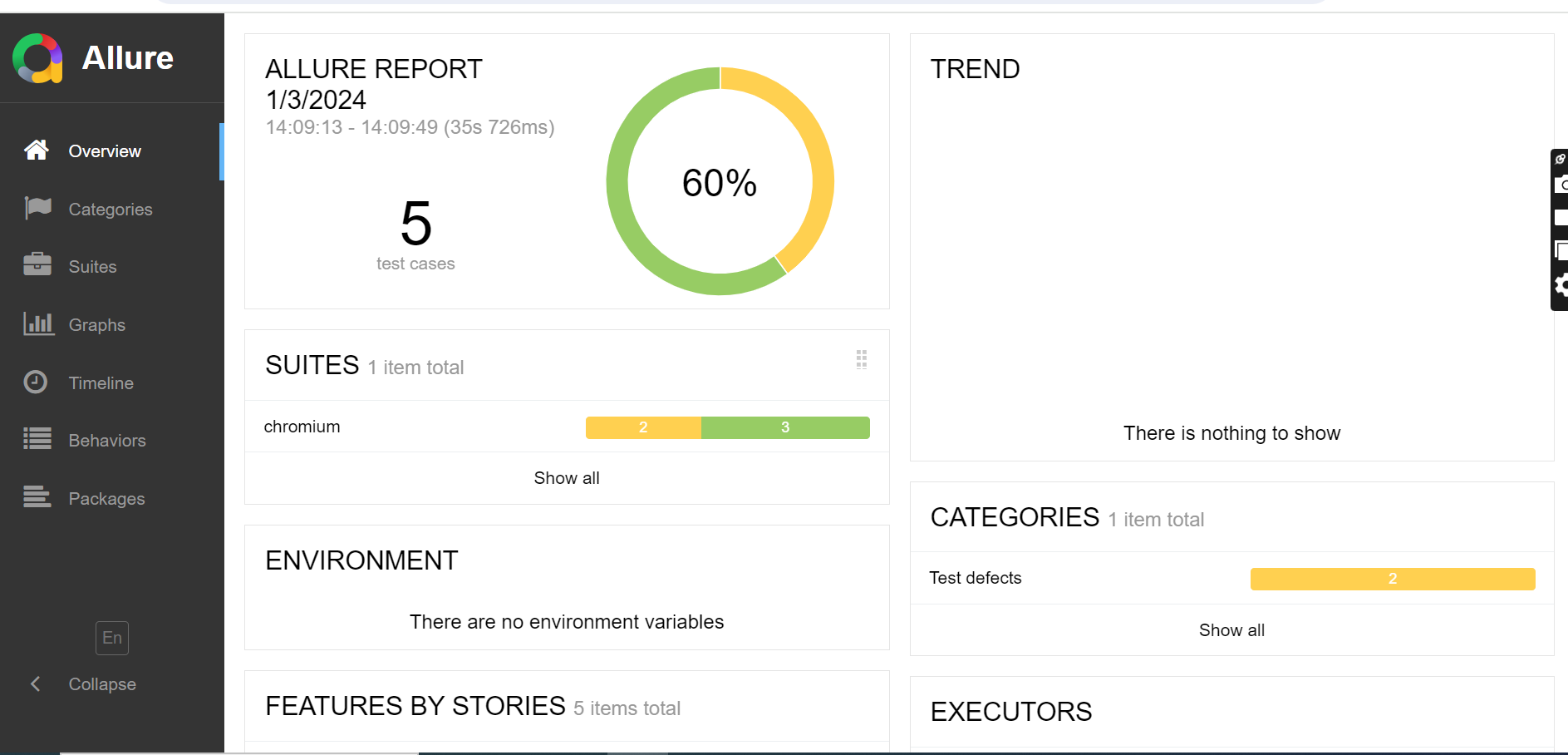

Playwright allows integration with various third-party reporting tools to enhance test result visualization and analysis. These reports provide detailed insights, making debugging and test management more efficient. One such integration is with Allure Report, which offers a comprehensive, interactive, and visually rich test reporting solution. By integrating Allure with Playwright, users can generate detailed HTML-based reports that include test statuses, execution times, logs, screenshots, and graphical representations like pie charts and histograms, improving test analysis and team collaboration.

Allure for Playwright

To generate an Allure report, install the Allure reporter for Playwright as a development dependency in your project using the following command:

npm install allure-playwright --save-dev

Allure Command Line Utility

To view the Allure report in a web browser, you must also install the Allure command-line utility as a project dependency:

npm install allure-commandline --save-dev

Command Line Execution

You can run Playwright with Allure reporting by specifying the Allure reporter in the configuration and executing the following command:

npx playwright test --reporter=line,allure-playwright

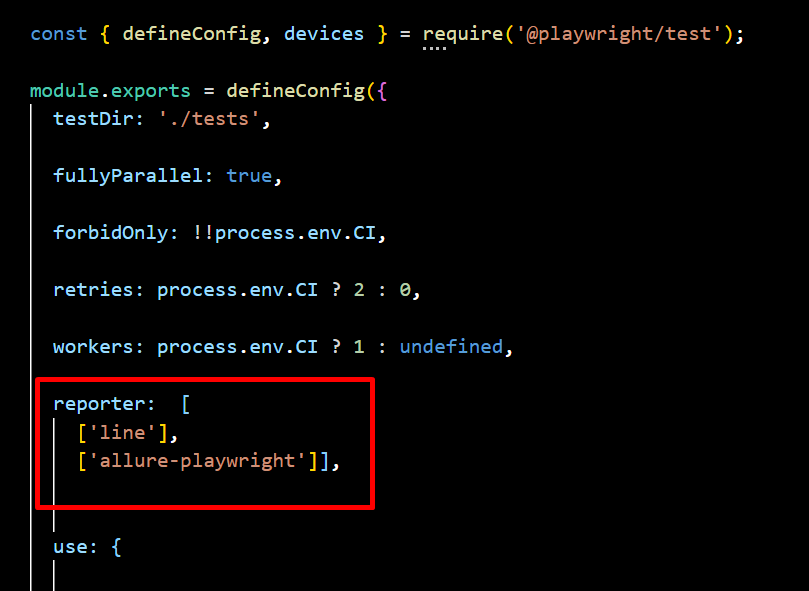

Playwright Config:

To run Playwright using a predefined configuration file, use the following command:

npx playwright test --config=playwright.allure.config.js
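The contents of playwright.allure.config.js are not shown in this post. As a minimal sketch, such a file might look like the following; the testDir value is an assumption and should match your project.

```javascript
// playwright.allure.config.js (sketch, exported as a plain config object)
/** @type {import('@playwright/test').PlaywrightTestConfig} */
const config = {
  testDir: './tests', // assumption: tests live in ./tests
  reporter: [
    ['line'],              // progress in the terminal
    ['allure-playwright'], // writes raw results for the Allure CLI
  ],
};

module.exports = config;
```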

Upon successful execution, this will generate an “allure-results” folder. You then need to use the Allure command line to generate an HTML report:

npx allure generate ./allure-results

If the command executes successfully, it will create an “allure-report” folder. You can open the Allure report in a browser using:

npx allure open ./allure-report

Conclusion

Choosing the right Playwright report depends on your team’s needs. Dot, Line, and List Reporters are perfect for developers who need quick feedback and real-time updates during local testing. If your team needs a more visual approach, the HTML Reporter is great for analyzing results and sharing detailed reports with others. For teams working with CI/CD pipelines, JSON and JUnit Reporters are the best choice as they provide structured data that integrates smoothly with automation tools. If you need deeper insights and visual trends, third-party tools like Allure Report offer advanced analytics and better failure tracking.

Additionally, testing companies like Codoid can help enhance your test reporting by integrating Playwright with custom dashboards and analytics. Your team can improve debugging, collaboration, and software quality by picking the right report.

Frequently Asked Questions

- Which Playwright reporter is best for debugging?

The HTML Reporter is best for debugging as it provides an interactive web-based report with detailed logs, screenshots, and failure traces. JSON and JUnit Reporters are also useful for storing structured test data for further analysis.

- Can I customize Playwright reports?

Yes, Playwright allows customization of reports by specifying reporters in the playwright.config.js file. Additionally, JSON and JUnit reports can be processed and visualized using external tools.

- How does Playwright’s reporting compare to Selenium?

Playwright provides more built-in reporting options than Selenium. While Selenium requires third-party integrations for generating reports, Playwright offers built-in HTML, JSON, and JUnit reports, making test analysis easier without additional plugins.

- How do I choose the right Playwright reporter for my project?

The best reporter depends on your use case:

For quick debugging → Use List or Line Reporter.

For minimal CI/CD logs → Use Dot Reporter.

For interactive analysis → Use HTML Reporter.

For data processing & automation → Use JSON Reporter.

For CI/CD integration → Use JUnit Reporter.

For advanced visual reporting → Use Allure or Codoid’s reporting solutions.

- How does Playwright Report compare to Selenium reporting?

Unlike Selenium, which requires third-party libraries for reporting, Playwright has built-in reporting capabilities. Playwright provides HTML, JSON, and JUnit reports natively, making it easier to analyze test results without additional setup.

by Arthur Williams | Mar 22, 2025 | Software Testing, Blog, Latest Post |

As applications shift from large, monolithic designs to smaller, flexible microservices, it becomes essential to ensure that each service works correctly and performs well. This guide looks at the details of microservices testing and explores the methods, strategies, and best practices that support a strong development process. Because these systems are independent and distributed, applications built on microservices need a clear testing strategy that addresses their unique problems. The strategy should include several types of testing, each focusing on a different aspect of how the system works and performs.

Testing must be a key part of the development process. It should be included in the CI/CD pipeline to check that changes are validated well before they go live. Automated testing is essential to handle the complexity and provide fast feedback. This helps teams find and fix issues quickly.

Key Challenges in Microservices Testing

Before diving into testing strategies, it’s important to understand the unique challenges of microservices testing:

- Service Independence: Each microservice runs as an independent unit, requiring isolated testing.

- Inter-Service Communication: Microservices communicate via REST, gRPC, or messaging queues, making API contract validation crucial.

- Data Consistency Issues: Multiple services access distributed databases, increasing the risk of data inconsistency.

- Deployment Variability: Different microservices may have different versions running, requiring backward compatibility checks.

- Fault Tolerance & Resilience: Failures in one service should not cascade to others, necessitating chaos and resilience testing.

To tackle these challenges, a layered testing strategy is necessary.

Microservices Testing Strategy:

Testing microservices presents unique challenges due to their distributed nature. To ensure seamless communication, data integrity, and system reliability, a well-structured testing strategy must be adopted.

1. Services Should Be Tested Both in Isolation and in Combination

Each microservice must be tested independently before being integrated with others. A well-balanced approach should include:

- Component testing, which verifies the correctness of individual services in isolation.

- Integration testing, which ensures seamless communication between microservices.

By implementing both strategies, issues can be detected early, preventing major failures in production.

2. Contract Testing Should Be Used to Prevent Integration Failures

Since microservices communicate through APIs, even minor changes may disrupt service dependencies. Contract testing plays a crucial role in ensuring proper interaction between services and reducing the risk of failures during updates.

- API contracts should be clearly defined and maintained to ensure compatibility.

- Tools such as Pact and Spring Cloud Contract should be used for contract validation.

- Contract testing should be integrated into CI/CD pipelines to prevent deployment issues.

3. Testing Should Begin Early (Shift-Left Approach)

Traditionally, testing has been performed at the final stages of development, leading to late-stage defects that are costly to fix. Instead, a shift-left testing approach should be followed, where testing is performed from the beginning of development.

- Unit and integration tests should be written as code is developed.

- Testers should be involved in requirement gathering and design discussions to identify potential issues early.

- Code reviews and pair programming should be encouraged to enhance quality and minimize defects.

4. Real-World Scenarios Should Be Simulated with E2E and Performance Testing

Since microservices work together as a complete system, they must be tested under real-world conditions. End-to-End (E2E) testing ensures that entire business processes function correctly, while performance testing checks if the system remains stable under different workloads.

- High traffic simulations should be conducted using appropriate tools to identify bottlenecks.

- Failures, latency, and scaling issues should be assessed before deployment.

This helps ensure that the application performs well under real user conditions and can handle unexpected loads without breaking down.

Example real-world conditions:

- E-Commerce Order Processing: Ensures seamless communication between shopping cart, inventory, payment, and order fulfillment services.

- Online Payments with Third-Party Services: Verifies secure and successful transactions between internal payment services and providers like PayPal or Stripe.

- Public API for Inventory Checking: Confirms real-time stock availability for external retailers while maintaining data security and system performance.

5. Security Testing Should Be Integrated from the Start

Security remains a significant concern in microservices architecture due to the multiple services that expose APIs. To minimize vulnerabilities, security testing must be incorporated throughout the development lifecycle.

- API security tests should be conducted to verify authentication and data protection mechanisms.

- Vulnerabilities such as SQL injection, XSS, and CSRF attacks should be identified and mitigated.

- Security tools like OWASP ZAP, Burp Suite, and Snyk should be used for automated testing.

6. Observability and Monitoring Should Be Implemented for Faster Debugging

Since microservices generate vast amounts of logs and metrics, observability and monitoring are essential for identifying failures and maintaining system health.

- Centralized logging should be implemented using ELK Stack or Loki.

- Distributed tracing with Jaeger or OpenTelemetry should be used to track service interactions.

- Real-time performance monitoring should be conducted using Prometheus and Grafana to detect potential issues before they affect users.

Identifying Types of Tests for Microservices

1. Unit Testing – Testing Small Parts of Code

Unit testing focuses on testing individual functions or methods within a microservice to ensure they work correctly. It isolates each piece of code and verifies its behavior without involving external dependencies like databases or APIs.

- Write test cases for small functions.

- Mock (replace) databases or external services to keep tests simple.

- Run tests automatically after every change.

Example:

A function calculates a discount on products. The tester writes tests to check if:

- A 10% discount is applied correctly.

- The function doesn’t crash with invalid inputs.

Tools: JUnit, PyTest, Jest, Mockito
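The discount example could be sketched as follows. The applyDiscount function and its validation rules are hypothetical, and the checks use Node's built-in assert module rather than one of the frameworks listed above, purely to keep the sketch self-contained.

```javascript
const { strictEqual, throws } = require('node:assert');

// Hypothetical function under test: applies a percentage discount to a price.
function applyDiscount(price, percent) {
  if (typeof price !== 'number' || typeof percent !== 'number' ||
      Number.isNaN(price) || Number.isNaN(percent)) {
    throw new TypeError('price and percent must be numbers');
  }
  if (price < 0 || percent < 0 || percent > 100) {
    throw new RangeError('price must be >= 0 and percent must be between 0 and 100');
  }
  return (price * (100 - percent)) / 100;
}

// A 10% discount is applied correctly.
strictEqual(applyDiscount(200, 10), 180);

// Invalid inputs fail in a controlled way instead of producing garbage.
throws(() => applyDiscount('abc', 10), TypeError);
throws(() => applyDiscount(200, 150), RangeError);

console.log('all discount checks passed');
```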

2. Component Testing – Testing One Microservice Alone

Component testing validates a single microservice in isolation, ensuring its APIs, business logic, and database interactions function correctly. It does not involve communication with other microservices but may use mock services or in-memory databases for testing.

- Use tools like Postman to send test requests to the microservice.

- Check if it returns correct data (e.g., user details when asked).

- Use fake databases to test without real data.

Example:

Testing a Login Service:

- The tester sends a request with a username and password.

- The system must return a success message if login is correct.

- It must block access if the password is wrong.

Tools: Postman, REST-assured, WireMock
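To make the Login Service example concrete, here is a sketch that tests a login handler against an in-memory user store instead of a real database. The users map, the login function, and the response shape are all hypothetical; a real component test would send HTTP requests to the running service.

```javascript
// Hypothetical in-memory store standing in for the real database.
// Plain-text passwords are used only for brevity; real services store hashes.
const users = new Map([['alice', 's3cret']]);

// Hypothetical login handler returning an HTTP-like response object.
function login({ username, password }) {
  const expected = users.get(username);
  if (expected !== undefined && expected === password) {
    return { status: 200, body: { message: 'Login successful' } };
  }
  return { status: 401, body: { message: 'Invalid credentials' } };
}

// Correct credentials return a success response.
console.log(login({ username: 'alice', password: 's3cret' }).status);

// A wrong password or an unknown user is rejected.
console.log(login({ username: 'alice', password: 'wrong' }).status);
console.log(login({ username: 'bob', password: 's3cret' }).status);
```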

3. Contract Testing – Making Sure Services Speak the Same Language

Contract testing ensures that microservices communicate correctly by validating the API agreement between a provider (the service that exposes an API or publishes data) and a consumer (the service that calls the API or reads the data). It prevents breaking changes when microservices evolve independently.

- The provider and the consumer agree on a contract (rules for communication, such as field names and types).

- Testers check if both follow the contract.

Example:

Order Service sends details to Payment Service.

If the contract says:

{
  "order_id": "12345",
  "amount": 100.0
}

The Payment Service must accept this format.

- If Payment Service changes its format, contract testing will catch the error before release.

Tools: Pact, Spring Cloud Contract
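The idea behind a contract check can be sketched without any tooling. The code below is not how Pact or Spring Cloud Contract work internally; it is a hand-rolled illustration in which the hypothetical orderContract maps each field of the example payload to its expected type.

```javascript
// Hypothetical contract for the Order -> Payment message: field -> expected type.
const orderContract = { order_id: 'string', amount: 'number' };

// Returns a list of human-readable violations; an empty list means the
// payload satisfies the contract.
function contractViolations(contract, payload) {
  const problems = [];
  for (const [field, type] of Object.entries(contract)) {
    if (!(field in payload)) {
      problems.push(`missing field: ${field}`);
    } else if (typeof payload[field] !== type) {
      problems.push(`${field}: expected ${type}, got ${typeof payload[field]}`);
    }
  }
  return problems;
}

// The payload from the example satisfies the contract.
console.log(contractViolations(orderContract, { order_id: '12345', amount: 100.0 }));

// A renamed field and a changed type are both reported before release.
console.log(contractViolations(orderContract, { orderId: '12345', amount: '100' }));
```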

4. Integration Testing – Checking If Microservices Work Together

Integration testing verifies how multiple microservices interact, ensuring smooth data flow and communication between services. It detects issues like incorrect API responses, broken dependencies, or failed database transactions.

- Set up a test environment where services can talk to each other.

- Send API requests and check if the response is correct.

- Use mock services if a real service isn’t available.

Example:

Order Service calls Inventory Service to check stock:

- Tester sends a request to place an order.

- The system must reduce stock in the Inventory Service.

Tools: Testcontainers, Postman, WireMock
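The order and inventory example could be sketched like this. Both classes are hypothetical in-process stand-ins; in a real integration test the two services would run separately (for example in containers) and talk over the network.

```javascript
// Hypothetical stand-in for the Inventory Service.
class InventoryService {
  constructor(stock) {
    this.stock = new Map(Object.entries(stock));
  }
  available(sku) {
    return this.stock.get(sku) ?? 0;
  }
  // Reserves stock if enough is available; returns whether it succeeded.
  reserve(sku, qty) {
    const current = this.available(sku);
    if (qty > current) return false;
    this.stock.set(sku, current - qty);
    return true;
  }
}

// Hypothetical stand-in for the Order Service, which depends on inventory.
class OrderService {
  constructor(inventory) {
    this.inventory = inventory;
  }
  placeOrder(sku, qty) {
    return this.inventory.reserve(sku, qty)
      ? { status: 'confirmed', sku, qty }
      : { status: 'rejected', reason: 'insufficient stock' };
  }
}

const inventory = new InventoryService({ 'sku-1': 5 });
const orders = new OrderService(inventory);

console.log(orders.placeOrder('sku-1', 2)); // order is confirmed
console.log(inventory.available('sku-1'));  // stock has been reduced
console.log(orders.placeOrder('sku-1', 10)); // order is rejected
```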

5. End-to-End (E2E) Testing – Testing the Whole System Together

End-to-End testing validates the entire business process by simulating real user interactions across multiple microservices. It ensures that all services work cohesively and that complete workflows function as expected.

- Test scenarios are created from a user’s perspective.

- Clicks and inputs are automated using UI testing tools.

- Data flow across all services is checked.

Example:

E-commerce checkout process:

- User adds items to cart.

- User completes payment.

- Order is confirmed, and inventory is updated.

- Tester ensures all steps work without errors.

Tools: Selenium, Cypress, Playwright

6. Performance & Load Testing – Checking Speed & Stability

Performance and load testing evaluate how well microservices handle different levels of user traffic. It helps identify bottlenecks, slow responses, and system crashes under stress conditions to ensure scalability and reliability.

- Thousands of fake users are created to send requests.

- System performance is monitored to find weak points.

- Slow API responses are identified, and fixes are suggested.

Example:

- An online shopping website expects 1,000 users at the same time.

- Testers simulate high traffic and see if the website slows down.

Tools: JMeter, Gatling, Locust
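Load tools typically report latency percentiles rather than averages, because a few very slow responses are easy to miss in a mean. As an illustration, the sketch below computes nearest-rank percentiles from a list of response times; the percentile helper and the sample latencies are made up for this example.

```javascript
// Nearest-rank percentile: the smallest sample such that at least p percent
// of all samples are less than or equal to it.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error('no samples');
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Made-up response times in milliseconds.
const latenciesMs = [120, 80, 200, 95, 150, 110, 90, 300, 105, 130];

console.log('p50:', percentile(latenciesMs, 50)); // 110
console.log('p95:', percentile(latenciesMs, 95)); // 300
```

Here the median looks healthy while the 95th percentile exposes the slow outlier.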

7. Chaos Engineering – Testing System Resilience

Chaos engineering deliberately introduces failures like server crashes or network disruptions to test how well microservices recover. It ensures that the system remains stable and continues functioning even in unpredictable conditions.

- Use tools to randomly shut down microservices.

- Monitor if the system can recover without breaking.

- Check if users get error messages instead of crashes.

Example:

- Tester disconnects the database from the Order Service.

- The system should retry the connection instead of crashing.

Tools: Chaos Monkey, Gremlin
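The "retry instead of crashing" behavior that such an experiment verifies might look like the sketch below. The withRetry helper, its option names, and the simulated connect function are hypothetical; production code would usually add jitter and a cap on the delay.

```javascript
// Retries an async operation with a linearly growing delay between attempts.
// Rethrows the last error if every attempt fails.
async function withRetry(operation, { attempts = 3, delayMs = 100 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await operation();
    } catch (err) {
      lastError = err;
      if (attempt < attempts) {
        await new Promise((resolve) => setTimeout(resolve, delayMs * attempt));
      }
    }
  }
  throw lastError;
}

// Simulated dependency: the first two connection attempts fail.
let calls = 0;
async function connect() {
  calls += 1;
  if (calls < 3) throw new Error('database unavailable');
  return 'connected';
}

withRetry(connect, { delayMs: 10 }).then((result) => console.log(result)); // prints "connected"
```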

8. Security Testing – Protecting Against Hackers

Security testing identifies vulnerabilities in microservices, ensuring they are protected against cyber threats like unauthorized access, data breaches, and API attacks. It checks authentication, encryption, and compliance with security best practices.

- Test login security (password encryption, token authentication).

- Check for common attacks (SQL Injection, Cross-Site Scripting).

- Run automated scans for security vulnerabilities.

Example:

- A tester tries to enter malicious code into a login form.

- If the system is secure, it should block the attempt.

Tools: OWASP ZAP, Burp Suite

9. Monitoring & Observability – Watching System Health

Monitoring and observability track real-time system performance, errors, and logs to detect potential issues before they impact users. It provides insights into system health, helping teams quickly identify and resolve failures.

- Use logging tools to track errors.

- Use tracing tools to see how requests travel through microservices.

- Set up alerts for slow or failing services.

Example:

If the Order Service stops working, an alert is sent to the team before users notice.

Tools: Prometheus, Grafana, ELK Stack

Conclusion

A structured microservices testing strategy ensures early issue detection, improved reliability, and faster software delivery. By adopting test automation, early testing (shift-left), contract validation, security assessments, and continuous monitoring, organizations can enhance the stability and performance of microservices-based applications. To maintain a seamless software development cycle, testing must be an ongoing process rather than a final step. A proactive approach ensures that microservices function as expected, providing a better user experience and higher system reliability.

Frequently Asked Questions

- Why is testing critical in microservices architecture?

Testing ensures each microservice works independently and together, preventing failures, maintaining system reliability, and ensuring smooth communication between services.

- What tools are commonly used for microservices testing?

Popular tools include JUnit, Pact, Postman, Selenium, Playwright, JMeter, OWASP ZAP, Prometheus, Grafana, and Chaos Monkey.

- How is microservices testing different from monolithic testing?

Microservices testing focuses on validating independent, distributed components and their interactions, whereas monolithic testing typically targets a single, unified application.

- Can microservices testing be automated?

Yes, automation is critical in microservices testing for unit tests, integration tests, API validations, and performance monitoring within CI/CD pipelines.

by Charlotte Johnson | Mar 21, 2025 | Automation Testing, Blog, Latest Post |

Although Cypress is a widely used tool for end-to-end testing, many QA engineers find it limiting due to flaky tests, slow CI/CD execution, and complex command patterns. Its lack of full async/await support and limited parallel execution make testing frustrating and time-consuming. Additionally, Cypress’s unique command chaining can be confusing, and running tests in parallel often requires workarounds, slowing down development. These challenges highlight the need for a faster, more reliable, and scalable testing solution, and this is where Playwright emerges as a better alternative. Whether you’re looking for improved test speed, better browser support, or a more efficient workflow, migrating from Cypress to Playwright will help you achieve a more effective testing strategy.

If you have not yet decided to migrate to Playwright, we will first cover the primary reasons why Playwright is better and then take a deep dive into the migration strategy you can use once you are convinced.

Why Playwright Emerges as a Superior Alternative to Cypress

When it comes to front-end testing, Cypress has long been a favorite among developers for its simplicity, powerful features, and strong community support. However, Playwright, a newer entrant developed by Microsoft, is quickly gaining traction as a superior alternative. But what makes Playwright stand out? Here are 6 aspects that we feel will make you want to migrate from Cypress to Playwright.

1. Cross-Browser Support

- Playwright supports Chromium, Firefox, and WebKit (Safari) natively, allowing you to test your application across all major browsers with minimal configuration.

- This is a significant advantage over Cypress, which primarily focuses on Chromium-based browsers and has limited support for Firefox and Safari.

Why It Matters:

- Cross-browser compatibility is critical for ensuring your application works seamlessly for all users.

- With Playwright, you can test your app in a real Safari environment (via WebKit) without needing additional tools or workarounds.

2. Superior Performance and Parallel Execution

- Playwright is designed for speed and efficiency. It runs tests in parallel by default, leveraging multiple browser contexts to execute tests faster.

- Additionally, Playwright operates outside the browser’s event loop, which reduces flakiness and improves reliability.

- Cypress, while it supports parallel execution, requires additional setup such as integrating with the Cypress Dashboard Service or configuring CI/CD for parallel runs.

Why It Matters:

- For large test suites, faster execution times mean quicker feedback loops and more efficient CI/CD pipelines.

- Playwright’s parallel execution capabilities can significantly reduce the time required to run your tests, making it ideal for teams with extensive testing needs.

3. Modern and Intuitive API

- Playwright’s API is built with modern JavaScript in mind, using async/await to handle asynchronous operations.

- This makes the code more readable and easier to maintain compared to Cypress’s chaining syntax.

- Playwright also provides a rich set of built-in utilities, such as automatic waiting, network interception, and mobile emulation.

Why It Matters:

- A modern API reduces the learning curve for new team members and makes it easier to write complex test scenarios.

- Playwright’s automatic waiting eliminates the need for manual timeouts, resulting in more reliable tests.

4. Advanced Debugging Tools

Playwright comes with a suite of advanced debugging tools, including:

- Trace Viewer: A visual tool to go through test execution and inspect actions, network requests, and more.

- Playwright Inspector: An interactive tool for debugging tests in real time.

- Screenshots and Videos: Automatic capture of screenshots and videos for failed tests.

Cypress also provides screenshots and videos, but Playwright offers deeper debugging with tools such as Trace Viewer and Playwright Inspector.

Why It Matters:

Debugging flaky or failing tests can be time-consuming. Playwright’s debugging tools make it easier to diagnose and fix issues, reducing the time spent on troubleshooting.

5. Built-In Support for Modern Web Features

- Playwright is designed to handle modern web technologies like shadow DOM, service workers, and Progressive Web Apps (PWAs).

- It provides first-class support for these features, making it easier to test cutting-edge web applications.

- Cypress has limited or workaround-based support for features like shadow DOM and service workers, often requiring custom plugins or additional effort.

Why It Matters:

- As web applications become more complex, testing tools need to keep up. Playwright’s built-in support for modern web features ensures that you can test your app thoroughly without needing a workaround.

6. Native Mobile Emulation

- Playwright offers native mobile emulation, allowing you to test your application on a variety of mobile devices and screen sizes.

- This is particularly useful for ensuring your app is responsive and functions correctly on different devices.

- Cypress does not provide true mobile emulation. While it supports viewport resizing, it lacks built-in device emulation capabilities such as touch events or mobile-specific user-agent simulation.

Why It Matters:

- With the increasing use of mobile devices, testing your app’s responsiveness is no longer optional.

- Playwright’s mobile emulation capabilities make it easier to catch issues early and ensure a consistent user experience across devices.

Strategy for Migrating Cypress to Playwright

Before any migration, including one from Cypress to Playwright, having a clear strategy is key. Start by assessing your Cypress test suite’s complexity and identifying custom commands, helper functions, and dependencies. If your tests are tightly coupled, adjustments may be needed for a smoother transition. Also, check for third-party plugins and find Playwright alternatives if necessary.

Creating a realistic timeline will make the transition easier. Set clear goals, break the migration into smaller steps, and move test files or modules gradually. Ensure your team has enough time to learn Playwright’s API and best practices. Proper planning will minimize issues and maximize efficiency, making the switch seamless.

Sample Timeline

| Phase | Timeline | Key Activities |
| --- | --- | --- |
| Pre-Migration | Week 1-2 | Evaluate test suite, set goals, set up Playwright, and train team. |
| Pilot Migration | Week 3-4 | Migrate critical tests, validate results, gather feedback. |
| Full Migration | Week 5-8 | Migrate remaining tests, replace Cypress features, optimize test suite. |
| Post-Migration | Week 9-10 | Run and monitor tests, conduct retrospective, train teams on best practices. |
| Ongoing Maintenance | Ongoing | Refactor tests, monitor metrics, stay updated with Playwright’s latest features. |

Migrating from Cypress to Playwright: Step-by-Step Process

Now that we have a timeline in place, let’s see what steps you need to follow to migrate from Cypress to Playwright. Although it can seem daunting, breaking the process into clear, actionable steps makes it manageable and less overwhelming.

Step 1: Evaluate Your Current Cypress Test Suite

Before starting the migration, it’s crucial to analyze existing Cypress tests to identify dependencies, custom commands, and third-party integrations. Categorizing tests based on their priority and complexity helps in deciding which ones to migrate first.

1. Inventory Your Tests:

- List all your Cypress tests, including their purpose and priority.

- Categorize tests as critical, high-priority, medium-priority, or low-priority.

2. Identify Dependencies:

- Note any Cypress-specific plugins, custom commands, or fixtures your tests rely on.

- Determine if Playwright has built-in alternatives or if you’ll need to implement custom solutions.

3. Assess Test Complexity:

- Identify simple tests (e.g., basic UI interactions) and complex tests (e.g., tests involving API calls, third-party integrations, or custom logic).

Step 2: Set Up Playwright in Your Project

Installing Playwright and configuring its test environment is the next step. Unlike Cypress, Playwright requires additional setup for managing multiple browsers, but this one-time effort results in greater flexibility for cross-browser testing.

1) Install Playwright:

Run the following command to install Playwright:

npm init playwright@latest

The installer then walks you through a few prompts:

- You’ll be asked to pick TypeScript (default) or JavaScript as your test language.

- Name your tests folder (default is tests or e2e if tests already exists).

- Optionally, Playwright will offer to add a GitHub Actions workflow so you can easily run your tests in Continuous Integration (CI).

- Finally, it will install the necessary Playwright browsers (this is enabled by default).

2) Configure Playwright:

The playwright.config.js file is where you configure Playwright, including which browsers you would like to run your tests on. After installation, the project contains the following files:

playwright.config.js
package.json
package-lock.json
tests/
  example.spec.js
tests-examples/
  demo-todo-app.spec.js

Step 3: Migrate Tests Incrementally

Instead of rewriting everything at once, tests should be migrated in phases. This involves replacing Cypress-specific commands with their Playwright equivalents and validating that each test runs successfully before proceeding further.

Update Basic Commands

| S. No | Cypress | Playwright Equivalent |
| --- | --- | --- |
| 1 | cy.get('selector') | page.locator('selector') |
| 2 | cy.visit('url') | await page.goto('url'); |
| 3 | cy.get('selector').click() | await page.click('selector'); |
| 4 | cy.get('selector').type('input') | await page.fill('selector', 'input'); |
| 5 | cy.wait(time) | await page.waitForTimeout(time); |

Step 4: Convert a Cypress Test to Playwright

A direct one-to-one mapping of test cases is necessary to ensure a smooth transition. This step involves modifying test syntax, replacing assertions, and adapting test structures to Playwright’s async/await model.

Cypress Example

describe('Login Test', () => {
  it('should log in successfully', () => {
    cy.visit('https://example.com');
    cy.get('#username').type('user123');
    cy.get('#password').type('password123');
    cy.get('#login-btn').click();
    cy.url().should('include', '/dashboard');
  });
});

Playwright Equivalent

const { test, expect } = require('@playwright/test');

test('Login Test', async ({ page }) => {
  await page.goto('https://example.com');
  await page.fill('#username', 'user123');
  await page.fill('#password', 'password123');
  await page.click('#login-btn');
  await expect(page).toHaveURL(/dashboard/);
});
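Cypress custom commands (for instance a hypothetical cy.login) have no direct counterpart in Playwright. One common option is a plain async helper that receives the page object. The sketch below reuses the selectors from the example above; the login function name and the helpers/login.js file name are assumptions.

```javascript
// helpers/login.js (hypothetical file name)
// Fills in the login form and submits it, using locator-based actions.
async function login(page, username, password) {
  await page.locator('#username').fill(username);
  await page.locator('#password').fill(password);
  await page.locator('#login-btn').click();
}

module.exports = { login };
```

A test can then call await login(page, 'user123', 'password123') before asserting on the dashboard URL, which keeps the form details in one place.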

Step 5: Handle API Requests

Since Cypress and Playwright have different approaches to API testing, existing Cypress API requests need to be converted using Playwright’s API request methods, ensuring compatibility.

Cypress API Request

cy.request('GET', 'https://api.example.com/data')
  .then((response) => {
    expect(response.status).to.eq(200);
  });

Playwright API Request

const response = await page.request.get('https://api.example.com/data');

expect(response.status()).toBe(200);

Step 6: Replace Cypress Fixtures with Playwright

Cypress’s fixture mechanism is replaced with Playwright’s direct JSON data loading approach, ensuring smooth integration of test data within the Playwright environment.

Cypress uses fixtures like this:

cy.fixture('data.json').then((data) => {
  cy.get('#name').type(data.name);
});

In Playwright, use:

const data = require('./data.json');

await page.fill('#name', data.name);

Step 7: Parallel & Headless Testing

One of Playwright’s biggest advantages is native parallel execution. This step involves configuring Playwright to run tests faster and more efficiently across different browsers and environments.

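Run Tests in Headed or Headless Mode

Playwright runs tests in headless mode by default:

npx playwright test

To watch the browser while the tests run, add the --headed flag:

npx playwright test --headed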

Run Tests in Multiple Browsers

Modify playwright.config.js:

    use: {
      browserName: 'chromium', // Change to 'firefox' or 'webkit'
    }
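Setting `browserName` selects a single browser. To run the same suite against several browsers in one invocation, Playwright's `projects` option can be used. A minimal sketch of a `playwright.config.js`, where the project names and the `fullyParallel` setting are illustrative choices:

```javascript
// playwright.config.js (sketch; adjust names and settings to your project)
const config = {
  // Also run the tests inside a single file in parallel, not just across files.
  fullyParallel: true,
  use: {
    headless: true, // the default; set to false to watch the browser
  },
  // Each project runs the whole suite with its own browser.
  projects: [
    { name: 'chromium', use: { browserName: 'chromium' } },
    { name: 'firefox', use: { browserName: 'firefox' } },
    { name: 'webkit', use: { browserName: 'webkit' } },
  ],
};

module.exports = config;
```

A single project can then be selected with `npx playwright test --project=firefox`.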

Step 8: Debugging & Playwright Inspector

Debugging in Playwright is enhanced through built-in tools like Trace Viewer and Playwright Inspector, making it easier to troubleshoot failing tests compared to Cypress’s traditional debugging.

Debugging Tools:

Run tests with UI inspector:

npx playwright test --debug

Slow down execution:
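One way to do this, assuming a `playwright.config.js` file, is the `slowMo` launch option, which delays each Playwright operation by the given number of milliseconds. The 500 ms value and the retry and trace settings below are illustrative:

```javascript
// playwright.config.js (sketch; values are illustrative)
const config = {
  // Retry a failed test once so that a trace can be captured on the retry.
  retries: 1,
  use: {
    // Record a trace when a failed test is retried, for use with Trace Viewer.
    trace: 'on-first-retry',
    launchOptions: {
      // Wait 500 ms between Playwright operations so actions are easy to follow.
      slowMo: 500,
    },
  },
};

module.exports = config;
```

A recorded trace can be opened with `npx playwright show-trace` followed by the path to the trace file.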

Step 9: CI/CD Integration

Integrating Playwright with CI/CD ensures that automated tests are executed consistently in development pipelines. Since Playwright supports multiple browsers, teams can run tests across different environments with minimal configuration.

    name: Playwright Tests
    on: [push, pull_request]
    jobs:
      test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Install dependencies
            run: npm install
          - name: Install Playwright Browsers
            run: npx playwright install --with-deps
          - name: Run tests
            run: npx playwright test

Each step in this migration process ensures a smooth and structured transition from Cypress to Playwright, minimizing risks and maintaining existing test coverage. Instead of migrating everything at once, an incremental approach helps teams adapt gradually without disrupting workflows.

By first evaluating the Cypress test suite, teams can identify complexities and dependencies, making migration more efficient. Setting up Playwright lays the groundwork, while migrating tests in phases helps catch and resolve issues early. Adapting API requests, fixtures, and debugging methods ensures a seamless shift without losing test functionality.

With parallel execution and headless testing, Playwright significantly improves test speed and scalability. Finally, integrating Playwright into CI/CD pipelines ensures automated testing remains stable and efficient across different environments. This approach allows teams to leverage Playwright’s advantages without disrupting development.

Conclusion:

Migrating from Cypress to Playwright enhances test automation efficiency with better performance, cross-browser compatibility, and advanced debugging tools. By carefully planning the migration, assessing test suite complexity, and following a step-by-step process, teams can ensure a smooth and successful transition. At Codoid, we specialize in automation testing and help teams seamlessly migrate to Playwright. Our expertise ensures optimized test execution, better coverage, and high-quality software testing, enabling organizations to stay ahead in the fast-evolving tech landscape.

Frequently Asked Questions

- How long does it take to migrate from Cypress to Playwright?

  The migration time depends on the complexity of your test suite, but with proper planning, most teams can transition in a few weeks without major disruptions.

- Is Playwright an open-source tool like Cypress?

  Yes, Playwright is an open-source automation framework developed by Microsoft, offering a free and powerful alternative to Cypress.

- Why is Playwright better for end-to-end testing?

  Playwright supports multiple browsers, parallel execution, full async/await support, and better automation capabilities, making it ideal for modern end-to-end testing.

- Do I need to rewrite all my Cypress tests in Playwright?

  Not necessarily. Many Cypress tests can be converted with minor adjustments, especially when replacing Cypress-specific commands with Playwright equivalents.

- What are the key differences between Cypress and Playwright?

  - Cypress runs tests in a single browser context and has limited parallel execution.

  - Playwright supports multiple browsers, headless mode, and parallel execution, making it more flexible and scalable.

- How difficult is it to migrate from Cypress to Playwright?

  The migration process is straightforward with proper planning. By assessing test complexity, refactoring commands, and leveraging Playwright’s API, teams can transition smoothly.

- Does Playwright support third-party integrations like Cypress?

  Yes, Playwright supports various plugins, API testing, visual testing, and integrations with tools like Jest, Mocha, and Testbeats for enhanced reporting.

by Jacob | Mar 20, 2025 | Automation Testing, Blog, Latest Post |

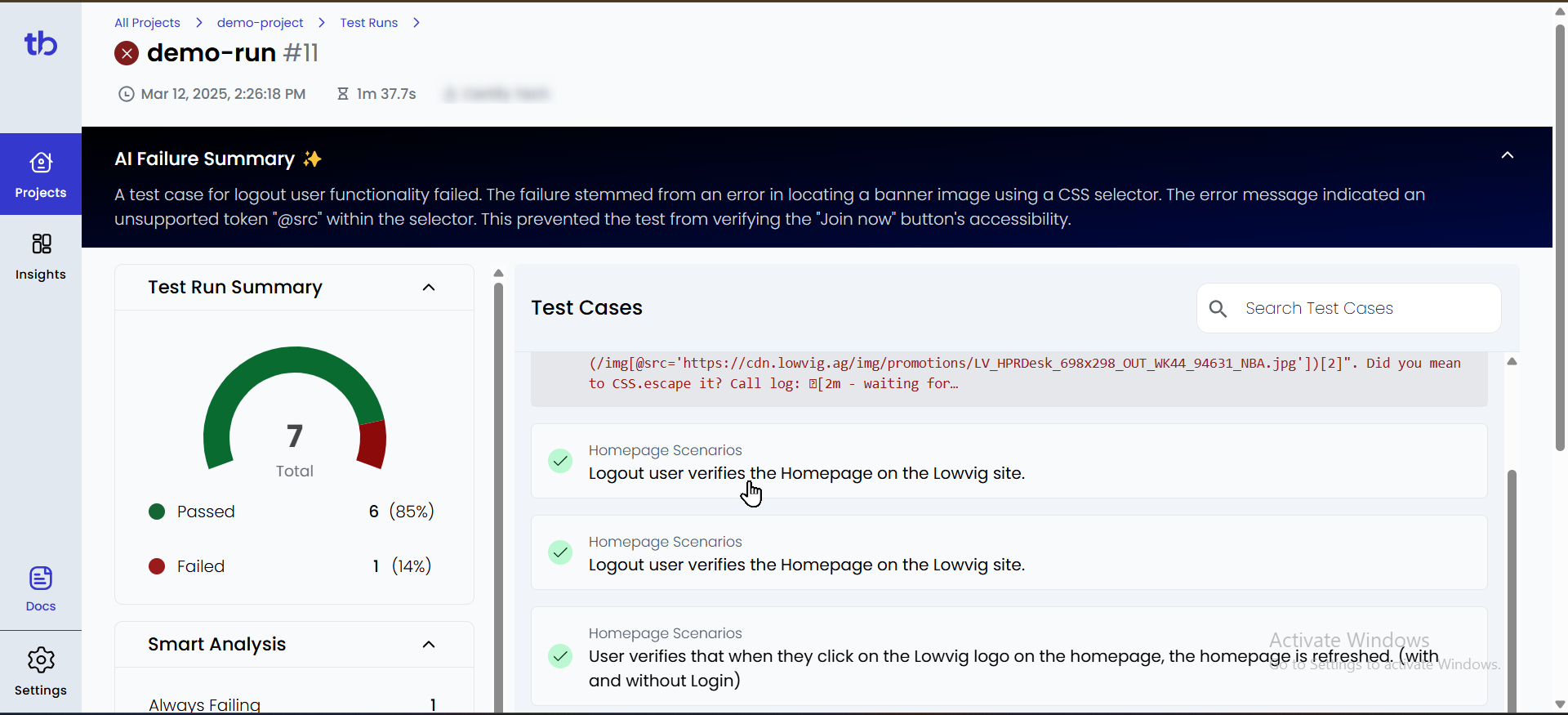

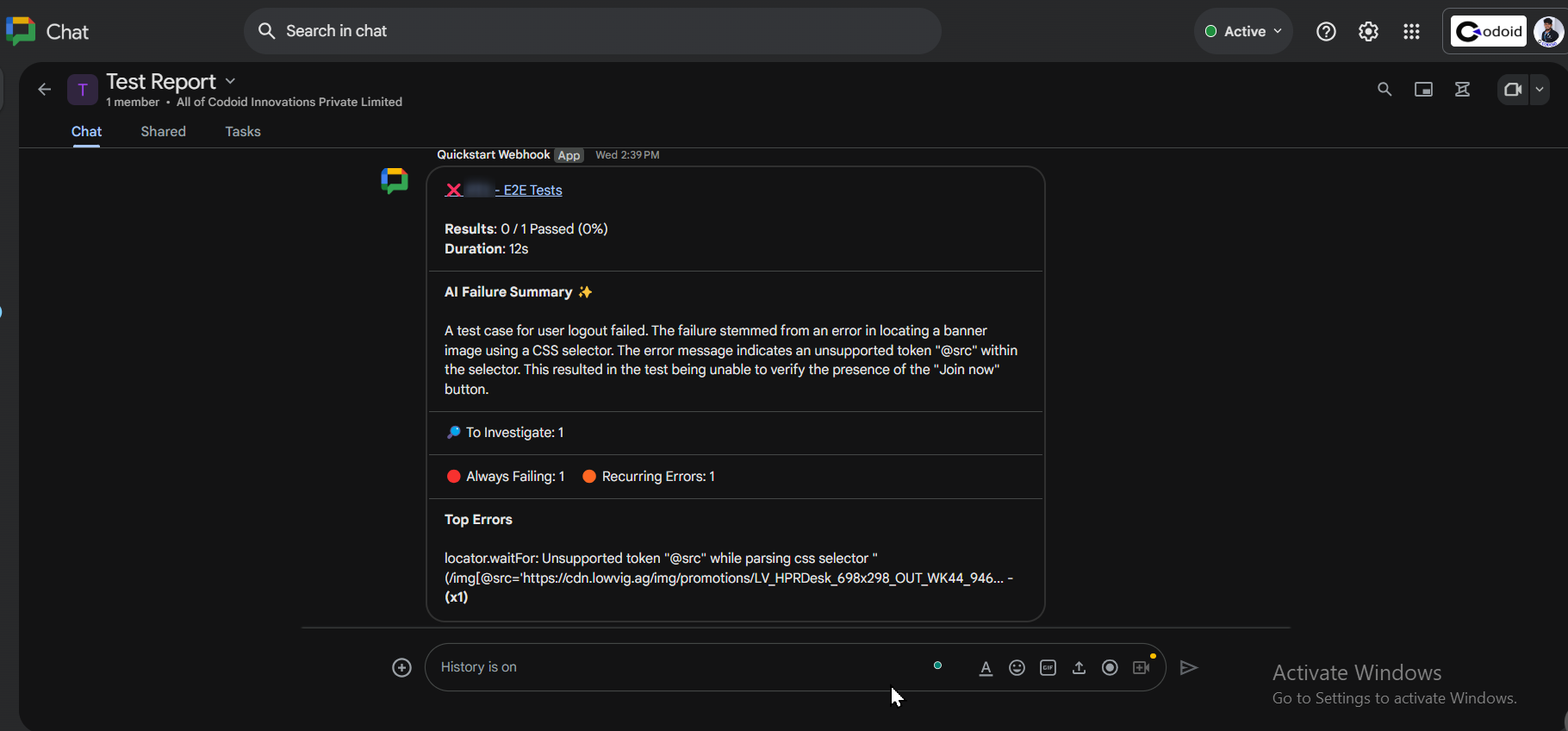

Testbeats is a powerful test reporting and analytics platform that enhances automation testing and test execution monitoring by providing detailed insights, real-time alerts, and seamless integration with automation frameworks. When integrated with Playwright, Testbeats simplifies test result publishing, ensures instant notifications via communication tools like Slack, Microsoft Teams, and Google Chat, and offers structured reports for better decision-making. One of the key advantages of Testbeats is its ability to work seamlessly with CucumberJS, a behavior-driven development (BDD) framework that runs on Node.js using the Gherkin syntax. This makes it an ideal solution for teams looking to combine Playwright’s automation capabilities with structured and collaborative test execution. By using Testbeats, QA teams and developers can streamline their workflows, minimize debugging time, and enhance visibility into test outcomes, ultimately improving software reliability in agile and CI/CD environments.

This blog explores the key features of Testbeats, highlights its benefits, and demonstrates how it enhances Playwright test automation with real-time alerts, streamlined reporting, and comprehensive test analytics.

Key Features of Testbeats

- Automated Test Execution Tracking – Captures and organizes test execution data from multiple automation frameworks, ensuring a structured and systematic approach to test result management.

- Multi-Platform Integration – Seamlessly connects with various test automation frameworks, making it a versatile solution for teams using different testing tools.

- Customizable Notifications – Allows users to configure notifications based on test outcomes, ensuring relevant stakeholders receive updates as needed.

- Advanced Test Result Filtering – Enables filtering of test reports based on status, execution time, and test categories, simplifying test analysis.

- Historical Data and Trend Analysis – Maintains test execution history, helping teams track performance trends over time for better decision-making.

- Security & Role-Based Access Control – Provides secure access management, ensuring only authorized users can view or modify test results.

- Exportable Reports – Allows exporting test execution reports in various formats (CSV, JSON, PDF), making it easier to share insights across teams.

Highlights of Testbeats

1. Streamlined Test Reporting – Simplifies publishing and managing test results from various frameworks, enhancing collaboration and accessibility.

2. Real-Time Alerts – Sends instant notifications to Google Chat, Slack, and Microsoft Teams, keeping teams informed about test execution status.

3. Comprehensive Reporting – Provides in-depth test execution reports on the Testbeats portal, offering actionable insights and analytics.

4. Seamless CucumberJS Integration – Supports behavior-driven development (BDD) with CucumberJS, enabling efficient execution and structured test reporting.

By leveraging these features and highlights, Testbeats enhances automation workflows, improves test visibility, and ensures seamless communication within development and QA teams. Now, let’s dive into the integration setup and execution process.

Guide to Integrating Testbeats with Playwright

Prerequisites

Before proceeding with the integration, ensure that you have the following essential components set up:

- Node.js installed (v14 or later)

- Playwright installed in your project

- A Testbeats account

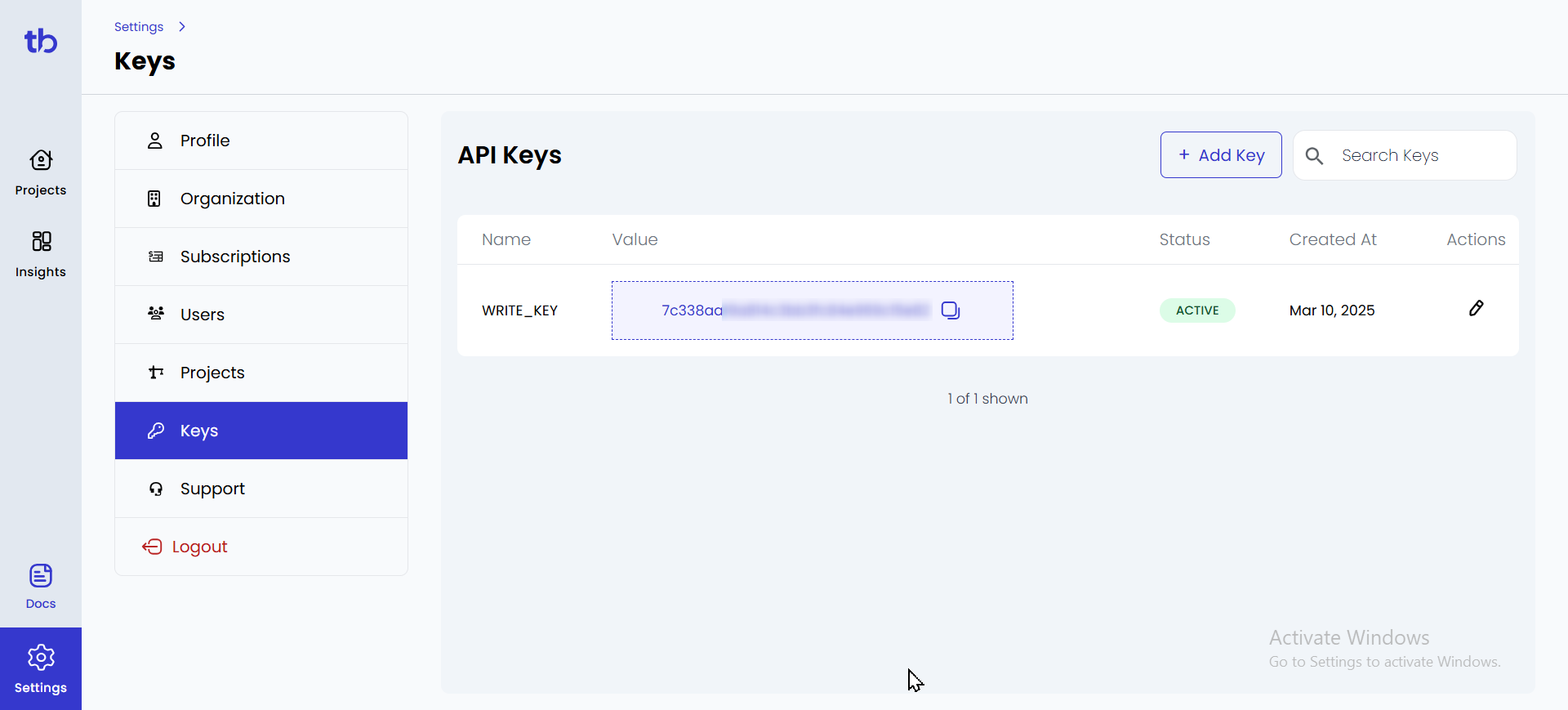

- API Key from Testbeats

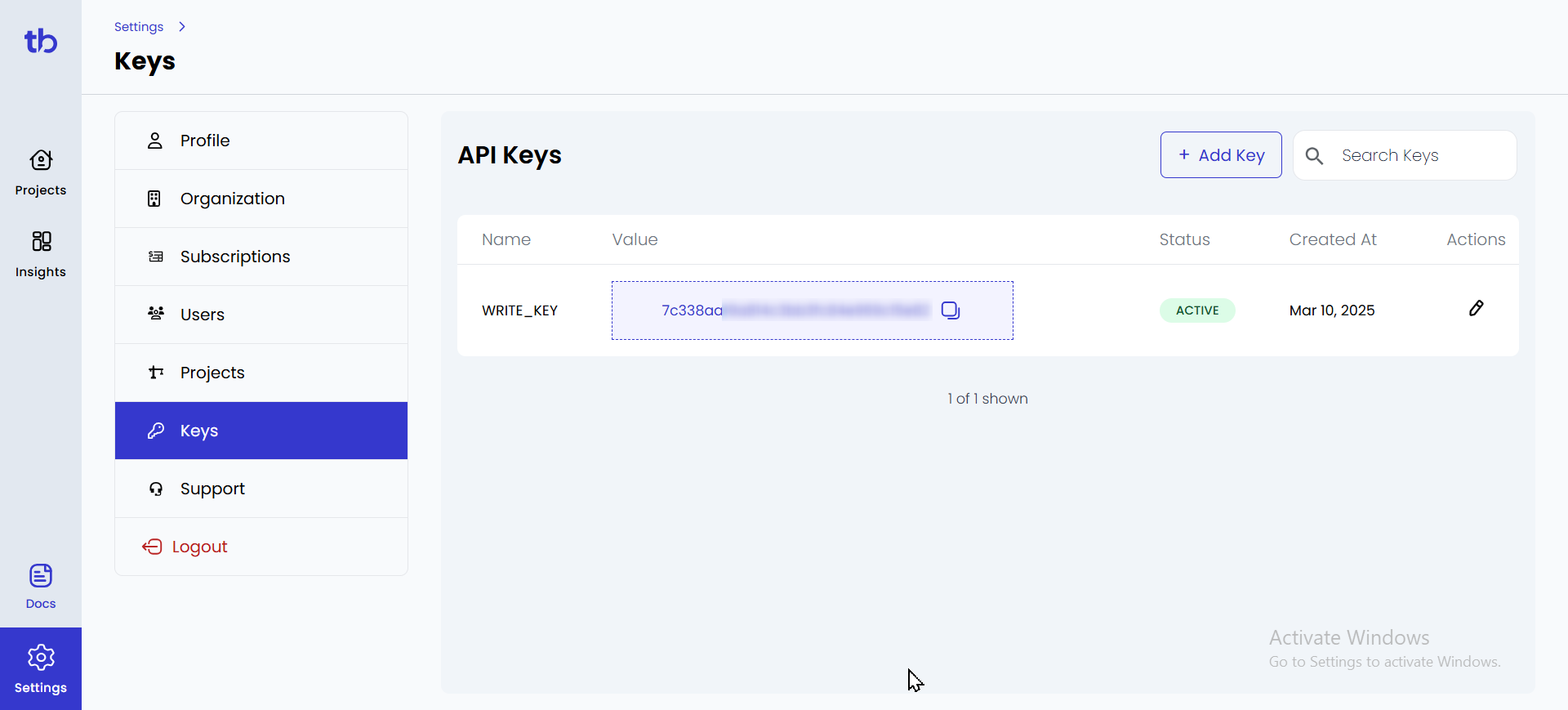

Step 1: Sign in to TestBeats

- Go to the TestBeats website and sign in with your credentials.

- Once signed in, create an organization.

- Navigate to Settings under the Profile section.

- In the Keys section, you will find your API Key — copy it for later use.

Step 2: Setting Up a Google Chat Webhook

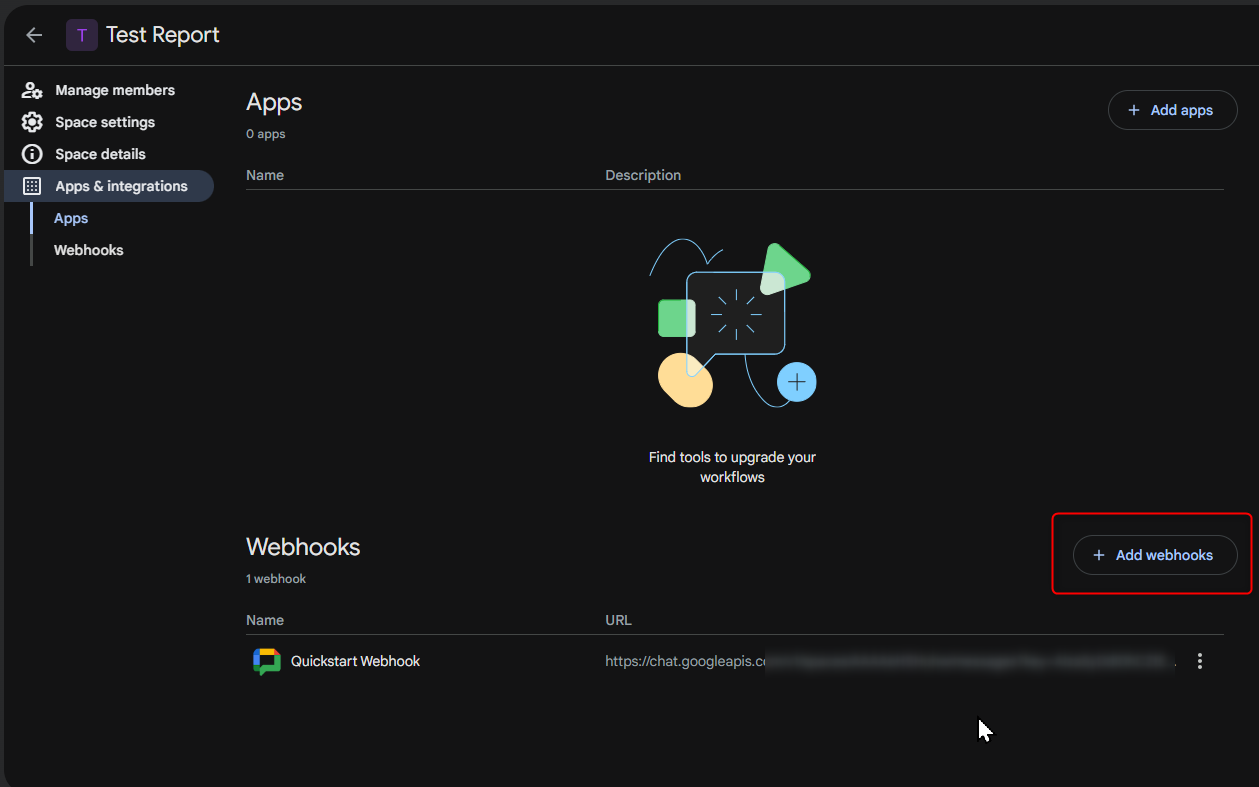

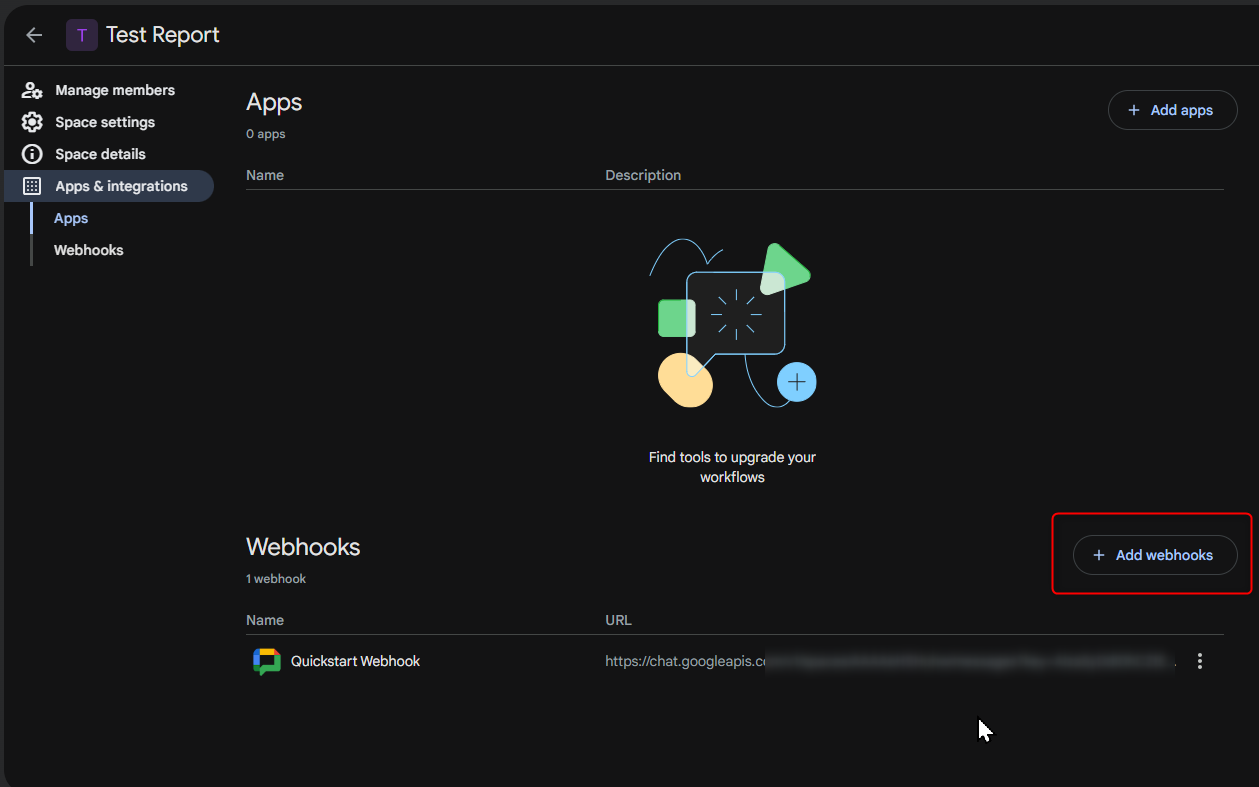

Google Chat webhooks allow TestBeats to send test execution updates directly to a chat space.

Create a Webhook in Google Chat

- Open Google Chat on the web and select the chat space where you want to receive notifications.

- Click on the space name and select Manage Webhooks.

- Click Add Webhook and provide a name (e.g., “Test Execution Alerts”).

- Google Chat will generate a Webhook URL. Copy this URL for later use.

Step 3: Create a TestBeats Configuration File

In your Playwright Cucumber framework, create a configuration file named testbeats.config.json in the root directory.

Sample Configuration for Google Chat Webhook

    {
      "api_key": "your_api_key",
      "targets": [
        {
          "name": "chat",
          "inputs": {
            "url": "your_google_chat_webhook_url",
            "title": "Test Execution Report",
            "only_failures": false
          }
        }
      ],
      "extensions": [
        {
          "name": "quick-chart-test-summary"
        },
        {
          "name": "ci-info"
        }
      ],
      "results": [
        {
          "type": "cucumber",
          "files": ["reports/cucumber-report.json"]
        }
      ]
    }

Key Configuration Details:

- “api_key” – Your TestBeats API Key.

- “url” – Paste the Google Chat webhook URL here.

- “only_failures” – If set to true, only failed tests trigger notifications.

- “files” – Path to your Cucumber JSON report.
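Because the API key and webhook URL are secrets, it may be preferable not to commit them to the repository. A minimal sketch that generates `testbeats.config.json` from environment variables before publishing; the variable names `TESTBEATS_API_KEY` and `GOOGLE_CHAT_WEBHOOK_URL` and the helper functions are assumptions, not TestBeats conventions:

```javascript
const fs = require('node:fs');

// Hypothetical helper: builds the same structure as the sample configuration,
// taking the secrets from the given environment object.
function buildConfig(env) {
  const required = ['TESTBEATS_API_KEY', 'GOOGLE_CHAT_WEBHOOK_URL'];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return {
    api_key: env.TESTBEATS_API_KEY,
    targets: [
      {
        name: 'chat',
        inputs: {
          url: env.GOOGLE_CHAT_WEBHOOK_URL,
          title: 'Test Execution Report',
          only_failures: false,
        },
      },
    ],
    extensions: [{ name: 'quick-chart-test-summary' }, { name: 'ci-info' }],
    results: [{ type: 'cucumber', files: ['reports/cucumber-report.json'] }],
  };
}

// Writes the generated configuration to the given path.
function writeConfig(env, filePath = 'testbeats.config.json') {
  fs.writeFileSync(filePath, JSON.stringify(buildConfig(env), null, 2));
}

// Usage, for example from a script run before publishing:
//   writeConfig(process.env);
```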

Step 4: Running and Publishing Test Results

1. Run Tests in Playwright

Execute your test scenarios with:

npx cucumber-js --tags "@smoke"

After execution, a cucumber-report.json file will be generated in the reports folder.
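The JSON report is only written if a JSON formatter is configured. A minimal sketch of a `cucumber.js` profile file, assuming a CucumberJS version that reads profiles from this file; the `progress` formatter is an optional extra:

```javascript
// cucumber.js (sketch; merge with any existing profile settings)
const profiles = {
  default: {
    format: [
      'progress', // console output while the tests run
      'json:reports/cucumber-report.json', // report consumed by TestBeats
    ],
  },
};

module.exports = profiles;
```

The same formatter can instead be passed on the command line with `--format json:reports/cucumber-report.json`.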

2. Publish Test Results to TestBeats

Send results to TestBeats and Google Chat using:

npx testbeats@latest publish -c testbeats.config.json

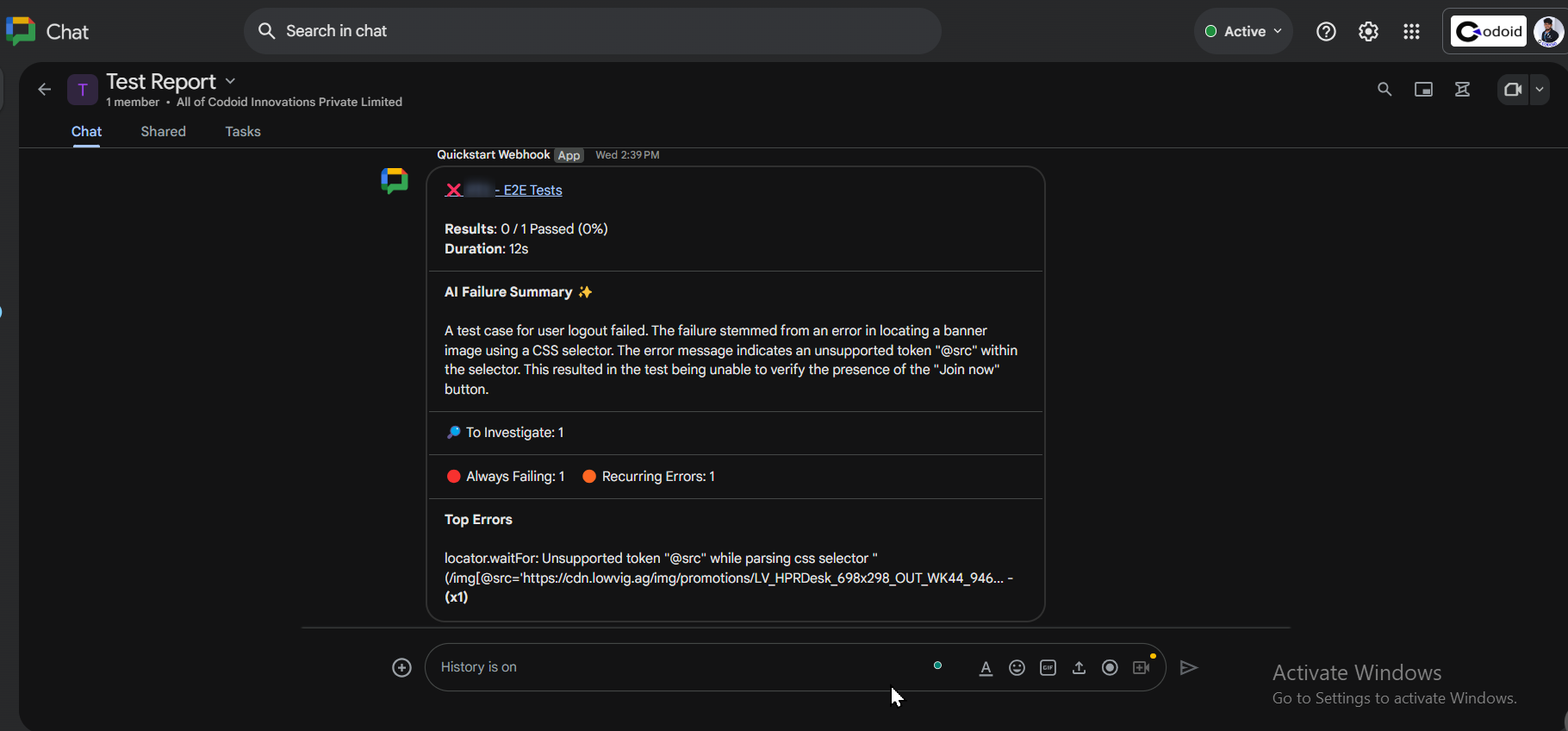

Now, your Google Chat space will receive real-time notifications about test execution!

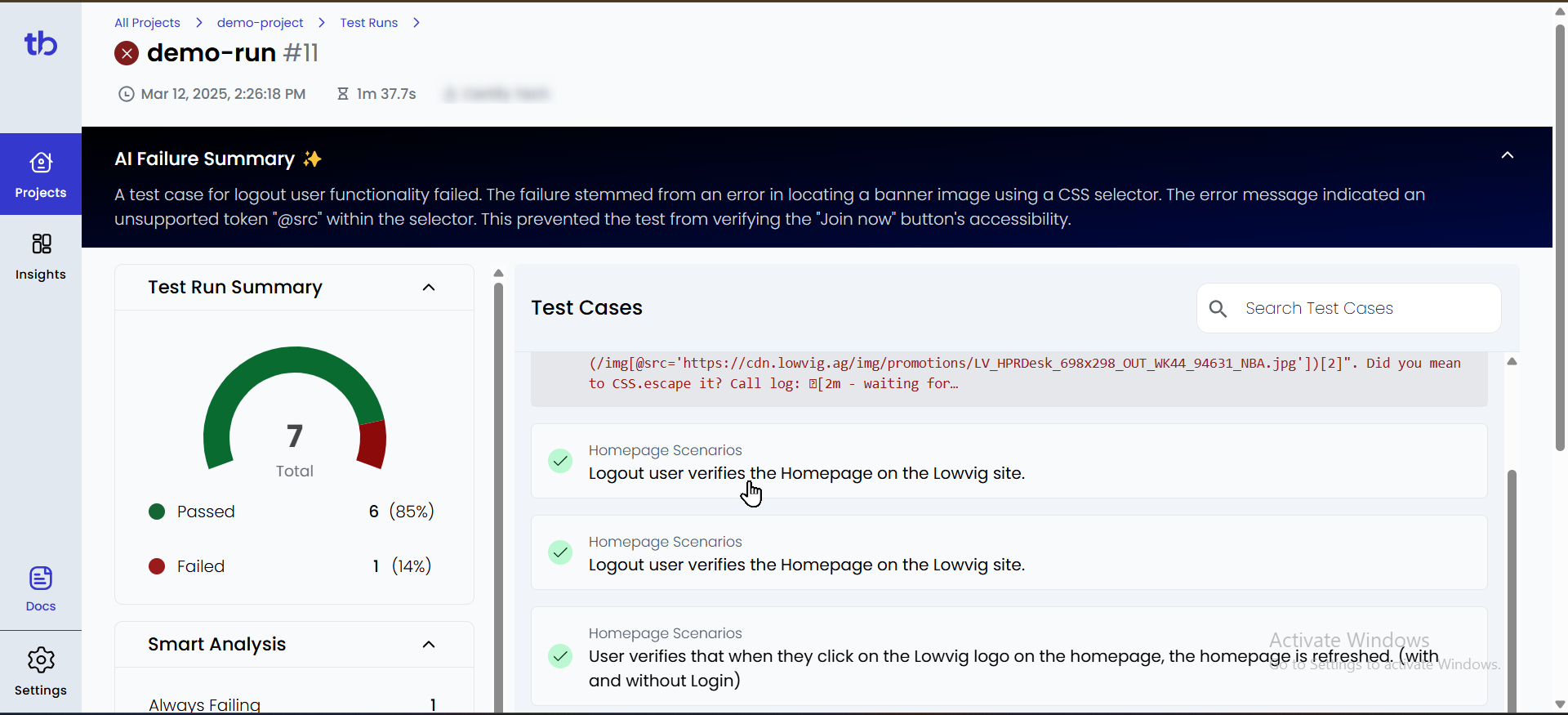
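In a pipeline, a failing test run exits with a non-zero code, which can stop the job before the publish command runs. A minimal sketch of a wrapper that always publishes and then reports the original test result; the function names are hypothetical, and the commands are the two used in this step:

```javascript
const { spawnSync } = require('node:child_process');

// Runs a command line through the shell and returns its exit code
// (1 if the process could not be started or was killed by a signal).
function run(commandLine) {
  const result = spawnSync(commandLine, { stdio: 'inherit', shell: true });
  return result.status ?? 1;
}

// Runs the tests, publishes regardless of the outcome, and returns
// the test exit code so that a failing suite still fails the build.
function runAndPublish(exec = run) {
  const testExit = exec('npx cucumber-js --tags "@smoke"');
  const publishExit = exec('npx testbeats@latest publish -c testbeats.config.json');
  return testExit !== 0 ? testExit : publishExit;
}

// Usage, for example as the last line of a script file:
//   process.exitCode = runAndPublish();
```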

Step 5: Verify Test Reports in TestBeats

- Log in to TestBeats.

- Click on the “Projects” tab on the left.

- Select your project to view test execution details.

- Passed and failed tests will be displayed in the report.

Example Notification in Google Chat

After execution, a message like this will appear in your Google Chat space:

Conclusion:

Integrating Playwright with Testbeats makes test automation more efficient by providing real-time alerts, structured test tracking, and detailed analytics. This setup improves collaboration, simplifies debugging, and helps teams quickly identify issues. Automated notifications via Google Chat or other tools keep stakeholders updated on test results, making it ideal for agile and CI/CD workflows. Codoid, a leading software testing company, specializes in automation, performance, and AI-driven testing. With expertise in Playwright, Selenium, and Cypress, Codoid offers end-to-end testing solutions, including API, mobile, and cloud-based testing, ensuring high-quality digital experiences.

Frequently Asked Questions

- What is Testbeats?

  Testbeats is a test reporting and analytics platform that helps teams track and analyze test execution results, providing real-time insights and automated notifications.

- What types of reports does Testbeats generate?

  Testbeats provides detailed test execution reports, including pass/fail rates, execution trends, failure analysis, and historical data for better decision-making.

- How does Testbeats improve collaboration?

  By integrating with communication tools like Google Chat, Slack, and Microsoft Teams, Testbeats ensures real-time test result updates, helping teams stay informed and react faster to issues.

- Does Testbeats support frameworks other than Playwright?

  Yes, Testbeats supports multiple testing frameworks, including Selenium, Cypress, and CucumberJS, making it a versatile reporting solution.

- Does Testbeats support CI/CD pipelines?

  Yes, Testbeats can be integrated into CI/CD workflows to automate test reporting and enable real-time monitoring of test executions.