by admin | Oct 21, 2021 | Software Testing, Blog, Latest Post |

User Acceptance Testing is one of the last phases of testing in the software development lifecycle that involves the product being used by the end-user to see if it meets the business requirements and if it can handle real-world scenarios. So it goes without saying that intricate planning and disciplined execution are required as it assures the stakeholders that the software is ready for rollout. As one of the best software testing companies in India, we have vast experience when it comes to performing user acceptance testing. So in this User Acceptance Testing Tutorial, we will be explaining the important factors to consider when performing User Acceptance Tests.

Prerequisites

There are various reasons why user acceptance testing happens at the very end. For starters, we can start only when the application code has been fully developed without any major errors. To ensure that there are no major errors, we should have completed unit testing, integration testing, system testing, and regression testing as well. The only acceptable errors are cosmetic errors, as they don’t affect further processing of the application. The business requirements should also be available so that the test cases can be designed accurately. Apart from that, the environment for performing UAT should also be ready. Now that we have covered all the prerequisites, let’s explore how to get the job done.

The Stages of User Acceptance Testing

For an easier understanding of how to efficiently execute UAT, we have split the main process into different stages to indicate the purpose.

Strategic Planning

As in every process, we should start by coming up with a plan to perform UAT. To develop the most effective plan for your project, you should analyze the business requirements and define the main objectives of UAT. This is when you will also be defining the exit criteria for UAT. Exit criteria are the minimum goals that should be achieved when performing the planned User Acceptance Tests. They can be defined by combining the system requirements with the user stories.
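One way to make exit criteria concrete is to express them as measurable thresholds that can be checked at the end of a test cycle. The sketch below is only an illustration: the criterion names and default values (`min_pass_rate`, `max_open_critical_defects`) are assumptions and should be replaced with whatever your own plan defines.

```python
from dataclasses import dataclass


@dataclass
class ExitCriteria:
    # Hypothetical thresholds; pick values that fit your project.
    min_pass_rate: float = 0.95
    max_open_critical_defects: int = 0


def uat_can_exit(passed: int, executed: int, open_critical: int,
                 criteria: ExitCriteria) -> bool:
    """Return True when the measured results meet every exit criterion."""
    if executed == 0:
        return False  # nothing was executed, so nothing has been proven
    pass_rate = passed / executed
    return (pass_rate >= criteria.min_pass_rate
            and open_critical <= criteria.max_open_critical_defects)
```

Writing the criteria down this way keeps the sign-off decision tied to numbers agreed on during planning rather than to a last-minute judgment call.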

Designing effective Test Cases

Once the plan is ready, you will have a clear vision as to what has to be achieved for the business. So we will be using that to design test cases that cover all the functions that need to be tested in real-world scenarios. You also have to be crystal clear about the expected result of each test and define the steps that have to be followed to get that result. This step doesn’t end here, as you will also need to prepare the test data required to perform such tests.
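The parts of a test case described above (steps, expected result, and test data) can be captured in a small structure. This is a minimal sketch; the class name `UatTestCase` and its field names are assumptions made for illustration, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class UatTestCase:
    case_id: str
    objective: str
    steps: list[str]
    expected_result: str
    test_data: dict[str, str] = field(default_factory=dict)

    def is_ready(self) -> bool:
        """A case can be run only when steps, expected result, and data exist."""
        return bool(self.steps and self.expected_result and self.test_data)


# Example case for a hypothetical checkout flow.
checkout = UatTestCase(
    case_id="UAT-001",
    objective="A registered user can complete a purchase",
    steps=["Log in", "Add an item to the cart", "Proceed to checkout", "Pay"],
    expected_result="An order confirmation is displayed",
    test_data={"username": "uat_user", "item": "sample-item"},
)
```

A check such as `is_ready()` makes it easy to spot cases that were designed but never given the data they need.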

Choosing the right team

There are primarily two ways to go about UAT. Either you can have end-users sign up to beta test your application by making it available to them or you can create your own team. You can either execute it using an in-house team or you can also employ outsourcing software testing options. Irrespective of which way you choose, you have to make sure the right people are on the task. If you are choosing people for your beta program, then make sure they are people from the same demographic as the targeted users of the application. Make sure to check their knowledge of the business and ability to detect bugs as well.

User Acceptance Testing

All the groundwork has been laid for you to start with the tests. So in this part of the User Acceptance Testing Tutorial, we will be focusing on how to perform UAT. Make sure to follow all the steps from your test cases without any deviation. If you wish to test something else in a random manner, do it separately and not while performing a particular test case. Once you start, you will also have to create meticulous records of the results, remarks, and so on from each test. Only then will it be possible for the development team to fix all the bugs and issues that were discovered during the process. Once the bugs are fixed, you can rerun those tests to see if the changes have been made without any issues.

Sign-Off

This step is the final step and the name makes it self-explanatory as well. So once you have verified that the application meets all the business requirements, you can indicate to the team that the software is ready for rollout by sending a sign-off email.

General Tip: Using the right tools is also pivotal in yielding the best results. As a leading software testing services company, we use the best tools to make the process more efficient. Test data management can become a nightmare if you don’t monitor and feed the test data frequently. We use data management tools to feed all the required test data and remove the hassle from this process. Similarly, we also use bug reporting systems to keep track of all the bugs that were discovered and make sure everything is resolved in the most efficient manner.

Conclusion

We hope that our User Acceptance Testing Tutorial has helped you get a clear picture of the UAT process. Based on your specific project demands, you could make some tweaks to this process. Also, make sure to choose the appropriate tools to get the best results.

by admin | Oct 7, 2021 | Software Testing, Blog, Latest Post |

Since software testing is an integral part of the software development lifecycle, the need for talented QAs is constantly on the rise. There are also many out there who aspire to become software testers and want to set themselves apart from the overwhelming competition that has a bachelor’s degree in computer science. Certifications are a great way to accomplish just that, but there is a catch, as not all certifications will be worth the effort and resources. So in this blog, we will be taking a look at the best QA testing certifications that will help a QA either kickstart their career or move higher in their career.

ISTQB – Foundation Level

The International Software Testing Qualifications Board (ISTQB) offers one of the most renowned and globally acclaimed QA testing certifications in the industry. The primary reason we are starting with this certification is that it can help you get a perfect start to your software testing career. It will help you understand all the fundamentals of software testing that are needed to fit into the various software testing roles such as test automation engineer, mobile-app tester, user-acceptance tester, and so on. The syllabi that they follow are constantly improved to keep up with the growing business needs. But the primary advantage of their syllabi is that they are open to the public, so you can go through the material before deciding to take the exam, which will cost you $229.

The foundation level certification isn’t the only one offered by ISTQB and we will be exploring the rest as we progress.

CAST – Certified Associate In Software Testing

CAST is yet another apt choice for the ones looking to start their software testing career. It is a foundation-level QA testing certification like the ISTQB certification we saw earlier and it will help you learn the important principles and best practices you would need to know. Let’s take a look at the prerequisites for this certification:

- Have a 3 or 4-year degree from an accredited college-level institution.

- If you have a 2-year degree at the same level, then a year of experience in testing will make you eligible.

- You could do the certification without a relevant degree if you have at least 3 years of experience in testing.

So it is evident here that you have the option to get this high-value QA testing certification just with your degree. So like ISTQB, it is a great option for freshers, and also a viable option for people with experience to certify themselves. CAST would cost you $100 to attempt and you should make sure that you attend the examination within 1 year of payment and get 70% to clear it.

CSTE — Certified Software Test Engineer

Now that we have seen two entry-level QA testing certifications to get started with, let’s move on to the next level. CSTE is more of a professional-level certification that helps you establish your competence and achieve better visibility in your team. So you will be able to present yourself as someone who can take up some additional responsibility and take a step forward in their career. The prerequisites also change accordingly:

- Must have a 4-year degree from an accredited college-level institution and 2 years of experience in testing.

- If you have a 3-year degree, you will need 3 years of experience in testing.

- Similarly, if you have a 2-year degree, then you will need 4 years of experience in testing.

- If you don’t have an appropriate degree, then you could compensate for that with 6 years of experience in testing.

Similar to CAST, you would have to score 70% to pass the test and it would cost you around $350 to $420.

CTM — Certified Test Manager

If CSTE was the first step towards a management role, CTM is the one that helps you get better at it. As the name suggests, this QA testing certification is targeted towards improving your management skills. It is a great option if you are currently in a leadership role as it can help you better your skills. You will be learning about various skills like how to manage test processes & test projects, how to measure a test process so that you can work on improving it, risk management, and so much more. Since it is management-level certification, you would also require experience in any leadership role.

The prerequisite is that you should have 3 years of experience in testing that includes 1 year of experience in a leadership role that is relevant to software testing. This requirement has to be met by the time the CTM is granted. You would require a lot of supporting documents for this one, so make sure to check the complete procedure on their website. Make sure you familiarise yourself with the Test Management Body of Knowledge book as it is the key to this certification.

CSSBB — Six Sigma Black Belt Certification

Another option to sharpen up your management skills is the National Commission for Certifying Agencies (NCCA) certified CSSBB. As with ISTQB, other levels (belts) are available as well, but the black belt certification helps you understand team dynamics and assign roles & responsibilities accordingly. You will be able to use the Six Sigma principles to optimize the DMAIC (Define, Measure, Analyze, Improve, and Control) model. In addition to that, you will also be equipped with the basics of lean management and how to identify non-value elements. The prerequisites are:

- You would require a minimum of 3 years of experience in one or more areas of the CSSBB Body of Knowledge.

- You would require a minimum of 1 or 2 completed projects with signed affidavits.

The exam would cost you $538 and would be an open-book test. If you are an ASQ member, then you would get a discount of $100 on the cost.

ISTQB – Advanced & Expert Level

The Advanced level of ISTQB is on par with the CTM certification that we saw earlier and the expert level is the one with the highest recognition. So starting with the foundation level certification, you should work yourself up the ladder and complete the advanced level first and then follow it up with the expert level. The expert level is the epitome of all these QA testing certifications as in addition to the in-depth concepts you will be learning about, you will also be handling practical exercises and discussions for almost half of the entire time. The prerequisites would also be very high for this certification.

- One must have at least 5 years of practical experience in testing.

- Of those 5 years, at least 2 years should be industry experience in the specific topic that you are looking to become an expert in.

Conclusion

As one of the top software testing companies in Chennai, we always concentrate on getting our employees certified with ISTQB. We are currently a Silver-level partner with ISTQB and on a path to become a Platinum-level partner in the near future. We are aware of how valuable these ISTQB certifications are, and that is why we have started and ended the list with them. So we hope you have found this list of our top QA testing certifications to be useful. Make sure to pick the courses best for you and also remember that even though certifications are important, it is the knowledge you build that matters. So pour your heart and soul into it and be on a path of continuous learning.

by admin | Oct 5, 2021 | Software Testing, Blog, Latest Post |

Achieving Continuous Delivery is no child’s play: deploying code into production on demand throughout the software delivery cycle is not easy, so your team should be equipped with certain technical capabilities. It is also important to acknowledge that technical practices alone will not help you achieve Continuous Delivery; they have to be coupled with good management practices as well. So as a first step, we will be focusing on the key technical capabilities that drive Continuous Delivery in this blog.

Version Control

Keeping the application code in version control alone will not help you achieve Continuous Delivery as you would have to do all the deployments manually when both the system and application configurations stay out of version control. Since manual tasks stall continuous delivery (CD), you have to automate the delivery pipeline to achieve CD.

Test Automation

Automated unit & acceptance tests should be kicked off as soon as developers commit the code in version control. The simple underlying logic here is that if the developers are provided with faster feedback, they will be able to work on the changes and fix the issues instantaneously. This advantage alone doesn’t make test automation a vital component in the Continuous Delivery pipeline. It is also very reliable in ensuring that only high-quality software gets delivered to the end-users.

But that doesn’t mean you can just use flaky test automation scripts as they would only produce false positives and negatives and not add any value to the Continuous Delivery pipeline. So if you want your software to be more reliable and resilient, writing and adding robust automation scripts to the pipeline is the best way to go about it. If you find any of the scripts to be unstable, you can move them to a quarantine suite and run them independently until they become stable.
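The quarantine idea can be sketched as a simple split over recent results. The example assumes that every result in `history` comes from repeated runs against the same unchanged build, so a test that both passes and fails is treated as unstable; the function name `split_suites` and the data layout are hypothetical.

```python
def split_suites(history: dict[str, list[bool]]) -> tuple[list[str], list[str]]:
    """Split tests into (pipeline, quarantine) based on repeated-run results.

    A test with mixed outcomes on the same build is considered flaky.
    A test that fails every time stays in the pipeline, because a
    consistent failure points to a real defect rather than an unstable script.
    """
    pipeline, quarantine = [], []
    for name, results in sorted(history.items()):
        is_flaky = len(set(results)) > 1  # both passes and failures were seen
        (quarantine if is_flaky else pipeline).append(name)
    return pipeline, quarantine
```

The pipeline suite then gates the build, while the quarantine suite runs on its own schedule until its scripts have been stabilized.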

Test Data Management (TDM)

As mentioned earlier, manual work delays continuous delivery. In the same way, if your automated test scripts have to be manually fed with test data at frequent intervals, it will cause delays in the delivery. This is a clear sign that your automated test suites are not fulfilling their purpose. You can overcome this roadblock by creating a test data management (TDM) tool to which you can upload all the data required for automated script execution. Your scripts will then call the TDM API to get the required data instead of getting it from an Excel or a YAML file.

In addition to that, once the TDM tool reaches its threshold, it should be able to pull and allocate more data automatically from the system DB. As an automation testing services company, we have a TDM for automation testing alone. We feed the data to it once every month and as a result, the automation test scripts no longer have to wait for the test data to be fed since the adequate data has already been allocated.
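To illustrate the threshold-based refill described above, here is a minimal in-memory sketch. It is not our actual TDM tool: the class `DataPool`, its parameters, and the `fetch_more` callable (which stands in for a query against the system DB) are all assumptions made for the example.

```python
from collections import deque
from typing import Callable


class DataPool:
    """In-memory stand-in for a TDM service (hypothetical)."""

    def __init__(self, fetch_more: Callable[[int], list[dict]],
                 threshold: int = 2, batch_size: int = 5) -> None:
        self._fetch_more = fetch_more    # pulls fresh records from the system DB
        self._threshold = threshold      # refill when this few records remain
        self._batch_size = batch_size
        self._records = deque()

    def allocate(self) -> dict:
        """Hand out one unused record, refilling first if the pool is low."""
        if len(self._records) <= self._threshold:
            self._records.extend(self._fetch_more(self._batch_size))
        if not self._records:
            raise LookupError("no test data available")
        return self._records.popleft()

    def available(self) -> int:
        """Number of records currently waiting to be allocated."""
        return len(self._records)
```

A test script would call `allocate()` at the start of a scenario, so it never has to wait for someone to top up a spreadsheet by hand.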

Trunk-Based Development

A long-lived branch leads your team towards reworking and merging issues that can’t be fixed immediately. So developers should make sure to create a feature branch, commit the changes within 24 hours into the trunk, and kill the feature branch. If a commit makes a build unstable, everyone on the team should jump in to resolve the issue and not push any changes into the trunk until the merge is fixed.

When a developer pushes the changes from a short-lived branch, your team will resolve the merge issues quickly as the push will be considered a small batch. Trunk-based development is a key enabler for Continuous Integration and Continuous Delivery. So, if the developers commit the changes into the trunk multiple times a day, and fix the merge issues then & there, then the trunk will be release-able.
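The 24-hour rule for feature branches can be checked automatically. The sketch below assumes you already have each branch's creation time from your version control system; the function name `stale_branches` and the input format are hypothetical.

```python
from datetime import datetime, timedelta, timezone

MAX_BRANCH_AGE = timedelta(hours=24)  # the limit suggested in the text above


def stale_branches(created_at: dict[str, datetime], now: datetime) -> list[str]:
    """Return the names of branches that have outlived the 24-hour limit."""
    return sorted(name for name, created in created_at.items()
                  if now - created > MAX_BRANCH_AGE)


# Example with fixed timestamps so the result is reproducible.
now = datetime(2021, 10, 5, 12, 0, tzinfo=timezone.utc)
branches = {
    "feature/login": now - timedelta(hours=30),
    "feature/cart": now - timedelta(hours=2),
}
overdue = stale_branches(branches, now)
```

Reporting overdue branches to the team every day is a gentle way to keep batches small without blocking anyone's work.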

Information Security

The information security process should not be carried out after releasing the software. Rather, it should be incorporated from the very beginning. Since fixing security-related issues is a time-consuming effort, integrating information security-related practices into the daily work plan of your teams will be instrumental in achieving Continuous Delivery.

Conclusion

Technical and Management practices are crucial for enabling Continuous Delivery. As a software testing company, we use these technical practices with our development team that works on developing automation testing tools. Following such practices alone is not a game-changer. Your team needs to learn and improve the process continuously. Continuous Improvement is pivotal in building a solid development pipeline for Continuous Delivery.

by Anika Chakraborty | Oct 4, 2021 | Software Testing, Blog, Latest Post |

Some still believe that the need for manual testing can be completely eliminated by automating all the test cases. However, in reality, you simply can’t eradicate manual testing as it plays an extremely important role in covering some edge cases from the user’s perspective. Since it is apparent that there is a need for both automation and manual testing, it is up to us to choose between the two and strike a perfect balance. So let’s pit Automation Testing vs Manual Testing and explore how it is possible to balance them both in the software delivery life cycle.

Stable Automated Tests

Stable test automation scripts help your team provide quick feedback at regular intervals. Automated tests boost your team’s confidence levels and help certify if the build is release-able or not. If you have a good talent pool to create robust automated scripts, then your testers can concentrate on other problem-solving activities (Exploratory & Usability Testing) which add value to the software.

Quarantined Automation Test Suites

Flaky automated tests kill the productivity of QA testers with their false positives and negatives. But you can’t just ignore the unstable automated test scripts as your team would have invested a lot of time & effort in developing the scripts. The workaround here is to quarantine the unstable automated test scripts instead of executing both the stable and unstable scripts in the delivery pipeline. So stabilizing & moving the quarantined test scripts to the delivery pipeline is also a problem-solving activity. As a leading automation testing company, we strongly recommend avoiding flaky automation test scripts from the very beginning. You can make that possible by following the best practices to create robust automated test scripts.

Tips to stabilize the unstable scripts

- Trouble-shoot and fix the script failures immediately instead of delaying them.

- Train the team to easily identify the web elements and mobile elements.

- Avoid boilerplate code.

- Implement design patterns to minimize script maintenance.

- Execute the scripts as much as possible to understand the repetitive failures.

- Run batch by batch instead of running everything in one test suite.

- Make the automated test report visible to everyone in the team.

- If your team is not able to understand the test results, then you need to make the reports more readable.
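To spot repetitive failures across many executions, the failures of each run can be tallied. This is a small sketch under the assumption that every run is summarized as a mapping from test name to error message; `top_failures` is a hypothetical helper, not part of any test framework.

```python
from collections import Counter


def top_failures(runs: list[dict[str, str]], limit: int = 3) -> list[tuple[str, int]]:
    """Count (test, error) pairs across runs and return the most frequent ones."""
    counts = Counter()
    for failures in runs:  # one dict per run: test name -> error message
        for test, error in failures.items():
            counts[f"{test}: {error}"] += 1
    return counts.most_common(limit)
```

A failure that shows up in most runs with the same error is usually the best place to start troubleshooting.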

Exploratory Testing

Test automation frees testers from repetitive scripted testing. Scripted automated testing does enable fast feedback loops, but your team will be able to unearth new product features & bugs, and identify additional test scenarios when performing exploratory testing.

Benefits of Exploratory Testing (ET)

- Scripted testing provides confidence, but ET helps your team to understand whether the new features make sense from the end user’s standpoint.

- ET is usually performed by testers who have good product knowledge. Every piece of feedback from ET is also an input for your automated test suites.

- Some test cases cannot be automated. So you can allocate those test cases in ET sessions.

- ET helps you to create additional tests which are not covered in automated test suites.

Usability Testing

Usability testing is an experiment to identify and rectify usability issues that exist in the product. Let’s say a functional tester is testing the product check-out scenario of an e-commerce app. Here, the tester has good knowledge about the product and knows where the important buttons (Add to Cart & Proceed to Checkout) are placed on the app. However, in real-world use, if the end-users struggle to find these buttons, they will definitely have second thoughts about using your app again. So it is evident that functional UI tests can’t help you identify usability issues.

Usability Testing Steps

1. Prepare the research questions and test objectives

2. Identify participants who represent the end-users

3. Set up an actual work environment

4. Interview the participants about the app experience

5. Prepare test reports with recommendations

Conclusion

We hope you’re able to understand that using only one of the two types of testing will lead to surface-level solutions. For robust solutions, you should use both manual and automation testing to test the features which are released continuously in the delivery pipeline. So compare Automation Testing vs Manual Testing and use the one that is more apt for the requirement. Testers should be performing exploratory testing continuously against the latest builds that come out of Continuous Integration, whereas scripted testing should be taken care of by automation.

by admin | Oct 1, 2021 | Software Testing, Blog |

There are different testing approaches available today: besides manual testing, automated testing is also possible. There are many test automation frameworks and tools on the market, though sometimes manual testing is still the better option. If you want to hire software testers and are choosing between manual testing services and automated testing, you should keep these points in mind.

Setting up a Manual Test Takes Less Time

Though the execution is faster with automated testing, setting up takes a lot of time. In many cases, it is faster to do a manual test. If you are starting with the project, you can create the infrastructure for an automated test.

If the project is already in full swing and has various interconnected parts, automation will take up time and resources. If it is too complex, it could even be impossible to create the proper environment for automation.

Manual Testing Simultaneously Improves UX

Automated tests only check for values and functionality, not aesthetics. However, it isn’t enough for end-users that software is functional—it should also be easy to use. If the user interface has plenty of interactive elements, it’s easier and more affordable to go for manual testing. You can automate SDK and API portions of the interface, but you need a human touch when reviewing the visual details.

Testing Requires High-Security Access

If you have a complex project, you likely have multiple access levels for people from different departments. In manual testing, you can provide access to the systems and environments that the tester will directly handle and prevent them from accessing others.

This isn’t the case in some automated test projects. Carefully weigh whether you should use automated testing—you cannot test systems where the engineers don’t have the required access level.

Changing Many Things? Choose Manual Testing

If you are still developing your application and have not reached a stable version, don’t waste resources on creating an automated test, because each version update would require you to update your tests as well. Automated test outcomes are likely to be misleading while the app is unstable; once it is stable, you will get reliable outcomes.

However, if you have the product out in the market and have a steady user base, automation could help you prevent regressions between updates. Software testing companies should help you determine the better option for your company at the moment.

Manual Tests Help Validate User Stories

Finally, you cannot validate user stories if you don’t do manual tests. You need to verify the functionality against the documentation, and only a test engineer can do this. If you add an automated test right away, you won’t be able to verify the functionality of the changes—automating could even raise defects.

Once you have the manual tests finished, the engineer can create test scenarios. Objective testing of functionality requires defects to be fixed—after that, it is possible to create automated tests.

Conclusion

Manual and automated testing have their advantages and disadvantages. While automated tests create a more efficient workflow, they aren’t always the best option to start with, especially for companies with ongoing and complex projects. It is up to the test engineer to determine the methods which suit the project and at what stage they should implement these.

Codoid is an industry-leading software testing company. Our manual testing services range from UX and usability testing to DB, GUI, localization, and more. Let’s talk about your project; contact us for inquiries!

by admin | Oct 1, 2021 | Software Testing, Blog, Latest Post |

A DevOps testing strategy is more of a team effort, as it sets out both the timing and the frequency of a developer’s testing practice in an automated fashion. Though such a radical transformation might not be easy for some testers, proper use of strategies can be a game-changer. As a leading QA company, we always make sure to implement the best DevOps Testing Strategy to streamline our testing process. So in this blog, we will be introducing you to the benefits of an effective DevOps Testing Strategy and the tips & tools you could use to implement that strategy for yourself.

Benefits of using an effective DevOps Testing Strategy:

A company can stay ahead of the pack in today’s competitive market by becoming more efficient with its process to offer the finest features to customers on time. Here are some of the major advantages that a firm may gain by implementing the DevOps methodology.

Higher Release Velocity:

DevOps practices help in increasing the release velocity which enables us to release the code to production at faster rates. But it is not just about the speed as we will be able to perform such releases with more confidence.

Shorter Development Cycle:

With the introduction of DevOps, the complete software development cycle starting right from the initial design up to the production deployment becomes shorter.

Earlier Defect Detection:

With the implementation of the DevOps approach, we will be able to identify defects much earlier and prevent them from getting released to production. So improved software quality becomes a natural byproduct of DevOps.

Easier Development Rollback:

In addition to earlier defect detection, possible failures due to a bug in the code or an issue in production are analyzed in DevOps, so we need not worry about the downtime for rollback. It either avoids the issue altogether or makes us better equipped to handle the situation. Even in the event of an unpredicted failure, a healthy DevOps process will make it easier for us to recover from it.

Curates a Collaborative Culture:

The core concept of DevOps itself is to enhance the collaboration between the Dev & Ops team so that they work together as one team with a common goal. The DevOps culture brings more than that to the table as it encourages employee engagement and increases employee satisfaction. So engineers will be more motivated to share their insight and continuously innovate, enabling continuous improvement.

Performance-Oriented Approach:

DevOps can ensure an increase in your team’s performance as it encourages a performance-oriented approach. Coupling that with the collaborative culture, the teams become more productive and more innovative.

More Responsibility:

Since DevOps engineers share responsibility for key deliverables and goals, we will be able to witness an enhanced perspective that prevents siloed thinking and helps guarantee success.

Better Hireability:

DevOps cultures require a variety of skill sets and specializations for successful implementation. Studying DevOps is a lucrative career path for development staff, operations staff, and also a great advantage for digital & IT spheres.

On the whole, DevOps focuses on removing siloed perspectives, which ensures that pipelines deliver as much business value as possible.

Tips to create an effective DevOps Testing Strategy:

Now that we have seen all the benefits that an effective DevOps Testing Strategy has to offer, let’s explore a few tips that one would have to follow to reap such benefits.

1. Deploy small changes as often as possible:

Deploy small changes frequently as it allows for a more stable and controllable production environment. Even in the case of a crucial bug, it will be easier to identify it and come up with a solution.

2. Infrastructure as code:

An incentive to adopt infrastructure as code is that it allows more governance of the deployment process across different environments. Deployments become faster, more efficient, and more reliable because they are managed by automation rather than by a manual process.

3. Git log commit messages:

Viewing the Git log can be very messy. A good way to make the Git log clear and understandable is to write meaningful commit messages. A good commit message consists of a clear title (first line) and a good description (body of the message).
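The title-plus-description structure can be checked with a few lines of code, for example in a commit hook. This is a sketch only: the 72-character title limit and the requirement of a blank line between title and body are common conventions that we are assuming here, not rules enforced by Git, and `check_commit_message` is a hypothetical helper.

```python
def check_commit_message(message: str, max_title_length: int = 72) -> list[str]:
    """Return a list of problems; an empty list means the message looks fine."""
    lines = message.strip().splitlines()
    if not lines:
        return ["message is empty"]
    problems = []
    if len(lines[0]) > max_title_length:  # assumed limit, adjust to taste
        problems.append("title is too long")
    has_body = (len(lines) >= 3
                and lines[1].strip() == ""
                and any(line.strip() for line in lines[2:]))
    if not has_body:
        problems.append("missing blank line followed by a description body")
    return problems
```

For instance, a message with only a title such as `Fix bug` would be reported as missing its description body.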

4. Good read – “DevOps Handbook”:

Books are always one of the best ways to learn and the DevOps Handbook can answer a lot of questions you might have, such as:

What is DevOps culture?

What are its origins?

How to evaluate DevOps work culture?

How to find the problems in your organization’s process and improve them?

5. Build Stuff:

Learn by doing: practice Linux, cloud, DevOps, and coding, take AWS training, try DevOps on the AWS free tier, experiment in Docker and Kubernetes (K8s) playgrounds, and publish your code on GitHub.

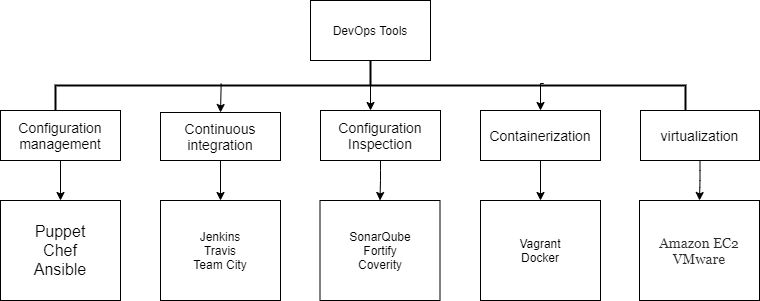

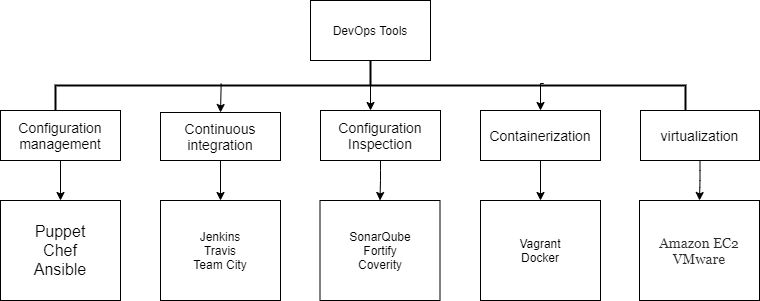

Tools to make an effective DevOps Testing Strategy:

We have already established that the DevOps movement encourages IT departments to improve developer, sysadmin, and tester teamwork. So it’s more about changing processes and streamlining workflows between departments than it is about using new tools when it comes to “doing DevOps.” As a result, there will never be an all-in-one DevOps tool.

However, if you have the correct tools, you can always benefit. DevOps tools are divided into five categories based on their intended use at each stage of the DevOps life cycle.

Configuration Management:

First off, we have configuration management, which is about managing the configurations of all the environments of a software application. To implement continuous delivery, keep every configuration in version control and apply all changes through an automated process rather than by hand. Changes are then deployed by automation from the scripts that have been checked in.

Configuration management is classified in two ways:

1. Infrastructure as code

2. Configuration as code

Infrastructure as code:

It means defining the entire environment as code or as a script. The environment definition generally includes setting up the servers, configuring the networks, and setting up other computing resources. Basically, they are part of the IT infrastructure setup. All these details are written out as a text file or in the form of code. They are then checked into a version control tool, where they become the single source for defining or updating the environments. This removes the need for a developer or tester to be a system admin expert to set up their servers for development or testing activity. So the infrastructure setup process in DevOps would be completely automated.
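The core idea, a versioned definition acting as the single source of truth, can be sketched as a comparison between the desired and the actual environment. This is a conceptual illustration only and does not reflect how any particular tool works; `plan_changes` and the dictionary layout are assumptions.

```python
def plan_changes(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare the versioned definition with the real environment.

    Returns the resources to create, update, and delete so that the
    environment matches the definition kept in version control.
    """
    return {
        "create": sorted(set(desired) - set(actual)),
        "update": sorted(name for name in set(desired) & set(actual)
                         if desired[name] != actual[name]),
        "delete": sorted(set(actual) - set(desired)),
    }


# Example: the definition asks for a bigger web server and a new database,
# and no longer mentions the cache server.
desired = {"web": {"cpu": 2}, "db": {"cpu": 4}}
actual = {"web": {"cpu": 1}, "cache": {"cpu": 1}}
plan = plan_changes(desired, actual)
```

Because the plan is derived from the definition, running it again after the changes have been applied produces an empty plan, which is what makes the setup repeatable.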

Configuration as code:

Configuration as code means defining the configuration of the servers and their components as a script and checking it into version control. It can include parameters that define the recommended settings for the software to run successfully, a set of commands to be run initially to set up the software applications, or the configuration of each individual component of the software. It could also cover specific user rules or user privileges.
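As a rough illustration, the Python sketch below keeps recommended settings in code and renders a configuration file from them, allowing per-environment overrides. The setting names and values are assumptions made up for this example, not the defaults of any real product.

```python
# Hypothetical recommended settings that would be checked into version control.
RECOMMENDED = {"max_connections": 100, "log_level": "info", "port": 8080}


def render_config(overrides=None):
    """Merge overrides into the recommended settings and render key=value lines."""
    settings = {**RECOMMENDED, **(overrides or {})}
    unknown = set(settings) - set(RECOMMENDED)
    if unknown:
        # Reject typos early instead of silently shipping a bad config file.
        raise KeyError(f"unknown settings: {sorted(unknown)}")
    return "\n".join(f"{key}={settings[key]}" for key in sorted(settings)) + "\n"


if __name__ == "__main__":
    # A test environment that only changes the port.
    print(render_config({"port": 9090}), end="")
```

Every environment gets its file from the same source, so differences between environments are explicit and reviewable.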

Puppet:

Puppet is a well-established Configuration Management platform. Configuration management matters most when you have a lot of servers, whether in a data center or in an in-house setup, and you want to keep all of them in a particular state. Since Puppet is also a deployment tool, simple code can be written and rolled out to the servers to deploy software on them automatically. Puppet implements infrastructure as code, and the policies and configurations are also written as code.

Chef:

Chef treats infrastructure as code. It ensures our configurations are applied consistently in every environment at any scale with the help of infrastructure automation. Chef is best suited for organizations that have a heterogeneous infrastructure and are looking for a mature solution that lets them do the following.

1. Programmatically provision and configure components.

2. Treat infrastructure like any other codebase.

3. Recreate the business from a code repository, data backup, and compute resources.

4. Reduce management complexity through abstraction.

5. Preserve the configuration of your infrastructure in version control.

Chef also ensures that each node complies with the policy, and the policy is determined by the configurations in each node’s run list.
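The run list idea can be sketched in a few lines of Python. This is not Chef's Ruby DSL or its API; the recipe names and the dictionary that stands in for a node are hypothetical and only show how an ordered list of recipes can bring a node to the same state however often it is applied.

```python
def install_nginx(node):
    # Recording the package in a set makes repeated runs harmless.
    node.setdefault("packages", set()).add("nginx")


def open_port_80(node):
    node.setdefault("open_ports", set()).add(80)


# Hypothetical recipe registry; real recipes would manage real resources.
RECIPES = {"install_nginx": install_nginx, "open_port_80": open_port_80}


def converge(node, run_list):
    """Apply every recipe in the run list, in order, to the node."""
    for recipe_name in run_list:
        RECIPES[recipe_name](node)
    return node


if __name__ == "__main__":
    node = converge({}, ["install_nginx", "open_port_80"])
    print(node)
```

Running `converge` a second time with the same run list leaves the node unchanged, which is the property that keeps nodes compliant with their policy.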

Ansible:

Ansible has a lot going for it, as it is a configuration management, deployment, and orchestration tool in one. It is designed to manage many machines at once, so we can configure them automatically and then use the same automation for deployment purposes such as rolling out Docker. Ansible is a “push-based” configuration management tool. That means when we want to apply changes on multiple servers, we can simply push those changes from one place instead of configuring each system or node by hand. It can automate your entire IT infrastructure, providing large productivity gains.
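The push model can be illustrated with a small Python simulation. The `Host` class, the host names, and the NTP setting are invented for the example and do not reflect how Ansible is implemented; in practice the changes would travel to real machines over a remote connection.

```python
class Host:
    """Stand-in for a managed server; only stores settings in memory."""

    def __init__(self, name, settings=None):
        self.name = name
        self.settings = dict(settings or {})

    def apply(self, change):
        """Apply only the keys that differ and report whether anything changed."""
        changed = False
        for key, value in change.items():
            if self.settings.get(key) != value:
                self.settings[key] = value
                changed = True
        return changed


def push(change, inventory):
    """Push one change from the control machine to every host in the inventory."""
    return {host.name: host.apply(change) for host in inventory}


if __name__ == "__main__":
    inventory = [Host("web-1"), Host("web-2", {"ntp_server": "pool.example.org"})]
    print(push({"ntp_server": "pool.example.org"}, inventory))
```

Only `web-1` reports a change here, because `web-2` already had the desired setting.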

Continuous integration (CI):

Continuous integration is a DevOps software development practice in which developers frequently merge their code changes into a central repository, after which automated builds and tests are run. Continuous integration is considered an important process for the following reasons.

1. It reduces merge conflicts when we sync our source code from our systems to the shared repository. It helps different developers integrate their source code into a single shared repository without any issues.

2. The time we spend on code review is something that we can easily decrease with the help of this continuous integration process.

3. Since the developers can easily collaborate with each other, it speeds up the development process.

4. We will also be able to reduce the project backlog, as we can make frequent changes in the repositories. So if any backlog item has been pending for a long time, it can be managed easily.
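The core loop of a CI server can be sketched as follows: run every stage in order after a merge and stop at the first failure so the team gets feedback quickly. The stage names and functions below are placeholders invented for this example; a real pipeline would call the project's actual build and test commands.

```python
def run_pipeline(stages):
    """Run (name, step) pairs in order and stop at the first failing stage."""
    results = []
    for name, step in stages:
        try:
            step()
        except Exception as exc:
            results.append((name, f"failed: {exc}"))
            break  # Later stages are skipped once something fails.
        results.append((name, "passed"))
    return results


# Placeholder stages standing in for real build and test commands.
def build():
    pass


def unit_tests():
    assert 1 + 1 == 2


def failing_lint():
    raise RuntimeError("unused import")


if __name__ == "__main__":
    for name, status in run_pipeline(
        [("build", build), ("lint", failing_lint), ("unit tests", unit_tests)]
    ):
        print(f"{name}: {status}")
```

In this run the unit tests never start, because the lint stage fails first.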

Jenkins:

Jenkins is a continuous integration tool that allows continuous development, testing, and deployment of newly created code. It is an open-source automation server and a web-based application that is completely developed in Java. Jenkins pipeline scripts are written in Groovy. It is used for integrating all DevOps stages with the help of plugins to enable continuous delivery. There are two ways to define a job: through the GUI or as a Groovy script. Which one to use basically depends on the project. In a few of our projects, we use a Jenkinsfile stored in the project directory. The job runs this Jenkinsfile, so the pipeline does not have to be written in the GUI. Some teams prefer to configure everything in the GUI instead.

Travis:

Travis CI is undoubtedly one of the most straightforward CI servers to use. Travis CI is an open-source, distributed continuous integration solution for building and testing GitHub projects. It can be set up to run tests on a variety of machines, depending on the software that is installed.

TeamCity:

TeamCity is a powerful, expandable, and all-in-one continuous integration server. The platform is provided by JetBrains and is written in Java. A total of 100 ready-to-use plugins extend the platform to support various frameworks and languages. The installation of TeamCity is very straightforward, and there are multiple installation packages for different operating systems.

Configuration Inspection:

Now let’s take a look at a few top tools used during Configuration Inspection.

SonarQube:

SonarQube is a central location for code quality management. It provides visual reporting on and across projects. It also has the ability to replay previous code in order to examine how metrics have evolved. Though it’s written in Java, it can analyze code in more than 20 different programming languages, making it a tool you cannot avoid.

Fortify:

The Fortify Static Code Analyzer (SCA) assists you in verifying the integrity of your program, lowering expenses, increasing productivity, and implementing best practices for secure coding. It examines the source code, determines the root cause of software security flaws, then correlates and prioritizes the findings. As a result, you’ll have line-of-code guidance for fixing security flaws.
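To show what static analysis means in principle, here is a toy Python checker built on the standard `ast` module. It is in no way how Fortify works internally; the single rule (flagging calls to `eval` and `exec`) and the sample source are assumptions chosen to keep the sketch short.

```python
import ast

# A deliberately tiny rule set for illustration only.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source):
    """Return (line number, function name) for each risky call in the source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


# Made-up code under inspection; it is parsed, never executed.
SAMPLE = "x = input()\nresult = eval(x)\nprint(result)\n"

if __name__ == "__main__":
    for line, name in find_risky_calls(SAMPLE):
        print(f"line {line}: call to {name}()")
```

The code is inspected without being run, and each finding points at a specific line, which is the kind of guidance a developer needs to fix a flaw.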

Coverity:

Coverity identifies flaws that are actionable and have a low false-positive rate. The team is encouraged to develop better, cleaner, and more robust code as a result of their use of the tool. Coverity is a static analysis (SAST) solution for development and security teams that helps them resolve security and quality flaws early in the software development life cycle (SDLC), track & manage risks throughout the application portfolio, and assure security & coding standards compliance. Coverity offers security and quality checking support for over 70 frameworks and 21 languages.

Containerization:

The practice of distributing and deploying applications in a portable and predictable manner is known as containerization. We can achieve this by packing application code and its dependencies into containers that are standardized, isolated, and lightweight process environments.

Docker:

Docker is an open platform used by DevOps teams to make it easier for developers and sysadmins to push code from development to production without having to deal with multiple conflicting environments throughout the application life cycle. Docker’s containerization technology makes apps portable by allowing them to run in self-contained units that can be moved around.

Vagrant:

Vagrant is a virtual machine manager that is available as an open-source project. It’s a fantastic tool that allows you to script and package the VM configuration and provisioning setup for several VMs, each with its own Puppet and/or Chef setups.

Virtualization:

Multiple independent services can be deployed on a single platform using virtualization. Virtualization refers to the simultaneous use of multiple operating systems on a single machine, and it is made feasible by a software layer known as a “hypervisor.” A virtual machine contains its own operating system along with dependencies, libraries, and configurations, and it allows one server to share its resources. Each virtual machine has its own virtual infrastructure and is isolated from the others. Virtual machines run applications on different operating systems without the need for additional hardware.

Amazon EC2:

The Amazon Elastic Compute Cloud (Amazon EC2) uses scalable processing capabilities in the Amazon Web Services (AWS) cloud to deliver virtualization. By reducing the initial cost of hardware, Amazon EC2 reduces capital expenditure. Virtual servers, security and networking configurations, and storage management are all available to businesses.

VMware:

Virtualization is provided by VMware through a variety of products. Its vSphere product virtualizes server resources and provides crucial capacity and performance control capabilities. Network virtualization and software-defined storage are provided by VMware’s NSX virtualization and Virtual SAN, respectively.

Conclusion:

So we have covered many of the benefits we can get from DevOps, the tips to follow while implementing DevOps, and the various tools you would need at every stage of the DevOps process. We hope you had an enjoyable read while learning how these tips & tools make an effective DevOps Testing Strategy. We have been able to make large strides and grow with DevOps to provide some of the best automation testing services to our clients, and we felt it was necessary to share some of the valuable information we have learned through experience.