by admin | Mar 1, 2020 | Automation Testing, Fixed, Blog |

In this blog, we discuss the software test automation life cycle, followed by the feasibility analysis it requires. A feasibility study is a must before starting any automation work, since it is the deciding factor for automation sign-off, based on the value automation will add to the business.

Life cycle of Software Test Automation

In layman's terms, a life cycle is the series of steps or changes that happen in a process to reach the desired result.

A life cycle denotes actions that follow one another in order. The test automation life cycle consists of the following phases:

Feasibility study

Tool identification

Framework identification

Proof of concept

Test scripts design

Test scripts execution

Test scripts review

Feasibility analysis & its significance

As discussed at the start, without a feasibility study we can neither judge the likely outcome of the automation effort nor identify the right candidates for automation. The study helps the test team answer the following questions:

Can the application be automated or not?

What type of tool/ test automation framework can be used?

How much automation is possible?

Given the effort involved, what value does automation add?

Below are the key parameters to consider during a feasibility study; let's look at each one briefly.

Feasibility study of the application and its functional flow

Before automating any application, the automation tester must understand it. If a test scenario is picked for automation without this understanding, we may run into several problems, and the task is likely to be left incomplete or delayed.

As an automation tester, the focus should be to gain sufficient functional knowledge, get the relevant test cases from the functional (manual) team, and then conduct a feasibility analysis to find out how many scenarios can be automated. If some cases can't be automated, there must be a valid rationale, and the stakeholders must agree to it.

The analysis helps the test team define its deliverables, because after a thorough examination the team knows what automation will cover and how long it will take. Skipping this study can lead to unpleasant surprises later.

Feasibility study in tool selection

We must conduct a careful study before finalizing the tool. The key points to consider are:

Can the tool detect all the objects of the application?

The automation tool must be able to locate every element of the application, since locators are how the script interacts with those elements.

In the case of Selenium, we rely on XPath, CSS selectors, name, ID, etc.

If the task is to automate a Windows desktop application, choose a tool that can interact with Windows controls.

E.g., Coded UI, which locates Windows elements much better.

Is the tool compatible with the application's technologies?

Conduct a sample proof of concept (POC) with each proposed tool, then decide which one is the best match, considering the technologies used to build the application and their compatibility with the tool.

E.g., while Selenium is used to automate web applications built with Java, .NET, or PHP, it can be preferable to opt for Protractor when the front end is built mainly with AngularJS, because Protractor adds Angular-specific locators (such as by.model and by.binding) and automatically waits for Angular to finish its pending work, which plain Selenium does not do out of the box.

While choosing the tool, consider the investment it requires. If a lower-cost tool does the job without any loss of quality, we can reasonably go with it, as the savings will eventually yield a better ROI.

The community around a tool also matters, as it helps in troubleshooting the issues we run into day to day.

The larger the community, the more preferable the tool.

Feasibility study on framework development

Once the tool has been chosen, the next step is to study the framework. The framework should be supported by the tool, be reasonably generic, and produce good reports for analyzing the results.

Conduct a POC, and after observing the results, design more reusable components. Initially, building the framework may take many more hours than manual validation would. In the long run, however, as the project moves through different phases, the suite adds a lot of value because execution time drops significantly.

The regression scripts should also be reviewed and baselined, as this provides a stable foundation for adding more coverage.

Feasibility study on the test strategy

When it comes to the test approach, the business should decide which of the following strategies to follow.

Test driven approach

In this approach, the tests are written first, and then the code is written to make them pass. It is a sophisticated approach, and the resulting tests are not easy for non-technical stakeholders to read. Adopting it is entirely the business's call, based on budget and other parameters that make sense for the project.

Behavior driven approach

This approach has gained popularity recently. Behavior is first documented in plain English and then mapped to technical actions, which gives more insight into why a particular test case was written and what validations it performs. It also produces reports that are easier for the business to read than those of the test-driven approach.

E.g., Cucumber, SpecFlow, Gauge, etc.

Feasibility study on infrastructure availability

For hassle-free automation, the test team must not face any issues at the infrastructure level. Possible issues in this category include:

Not having access to create a project/branch in the chosen configuration management tool

Not having access to the application/DB servers to work independently

Software license procurement by the business

Network availability and the type of access to the application, which can delay execution and sometimes cause failures

Not holding admin rights to perform certain tasks

The problems listed above will block automation activities at various points. The test team must raise access requests for everything it needs in the project and obtain that access.

Stable environment and a major part of development should be completed

As a test team, we should know when to automate a particular application. If the build is still immature and even the basic smoke tests fail, there is no point in automating the test cases at that stage.

If we automate while major development is still in progress, we add a lot of maintenance to the test suite: UI locators may change often, and functional changes may force us to rewrite the scripts to match the new design, which is tedious.

Assessing how much automation can be achieved

100% automation is a fallacy, given the technical and non-technical complexities that arise during automation development.

For an automation tester, this exercise helps the business understand the expected cost and effort savings. Typically, it is done by comparing the functional test cases with the automated test cases. The resulting metric shows what percentage of the tests has been automated, and the savings can be estimated accordingly.
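The coverage comparison described above can be sketched as a small Python function that counts how many functional test cases have an automated counterpart. This is a minimal sketch only: the test case IDs are hypothetical, and it assumes both suites use the same IDs.

```python
def automation_coverage(functional_cases, automated_cases):
    """Return the percentage of functional test cases that are automated.

    Both arguments are collections of test case IDs (hypothetical naming).
    Automated IDs without a functional counterpart are ignored.
    """
    functional = set(functional_cases)
    if not functional:
        return 0.0
    covered = functional & set(automated_cases)
    return 100.0 * len(covered) / len(functional)


functional = ["TC01", "TC02", "TC03", "TC04", "TC05"]
automated = ["TC01", "TC02", "TC04"]
print(f"Automation coverage: {automation_coverage(functional, automated):.1f}%")
```

Here three of the five functional cases are automated, so the coverage is 60%.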

Calculating the ROI metrics

Metrics are key numbers that describe status, progress, and outcomes. As test or development engineers, we care about completing tasks, but management looks at the numbers extracted as metrics. Metrics are a short, explainable way of presenting data to show where we are and what we have achieved.

Likewise, in the automation world, certain metrics are calculated to project the savings to the business. Here are a couple of quick examples:

Automation savings

This shows how many man-hours of effort are saved by bringing in automation.

Savings = Manual test efforts – Automation test efforts
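A quick worked example of the savings formula, assuming effort is measured in man-hours per execution cycle and the suite is run over several cycles. All figures are hypothetical.

```python
def automation_savings(manual_hours, automated_hours, cycles=1):
    """Savings = Manual test effort - Automation test effort, per cycle,
    multiplied by the number of execution cycles."""
    return (manual_hours - automated_hours) * cycles


# Hypothetical: a regression cycle takes 40 h manually and 6 h with automation
# (script execution plus result analysis), repeated over 12 cycles.
per_cycle = automation_savings(40, 6)
total = automation_savings(40, 6, cycles=12)
print(per_cycle, total)
```

The savings grow with every cycle, which is why frequently executed suites such as regression benefit most.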

Return on investment

Automation requires investment in script development, skilled talent, and the infrastructure it needs.

Cost of automation investments = Cost of talents + Cost of script development and maintenance + Cost of infrastructure.
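The investment formula above can be combined with the savings to estimate ROI. The blog does not spell out an ROI formula, so the sketch below assumes the common definition ROI = (gain - investment) / investment, expressed as a percentage. The cost figures are hypothetical and in a single currency unit.

```python
def automation_investment(talent_cost, script_cost, infra_cost):
    """Cost of automation investments = talent + script development and
    maintenance + infrastructure, as defined above."""
    return talent_cost + script_cost + infra_cost


def roi_percent(savings_value, investment):
    """ROI = (gain - investment) / investment * 100.

    This is an assumed, commonly used definition; savings_value is the
    monetary value of the effort saved by automation.
    """
    return 100.0 * (savings_value - investment) / investment


investment = automation_investment(talent_cost=20000, script_cost=8000, infra_cost=2000)
savings_value = 45000  # hypothetical value of the effort saved
print(investment, roi_percent(savings_value, investment))
```

A negative result would mean the automation has not yet paid for itself.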

Conclusion

The higher the ROI, the greater the profit, which is what the business needs. To achieve a better ROI, we should focus on the following:

Try to bring in more automation

Focus on in-sprint automation development

The right choice of tools, keeping the cost as low as possible

Develop a generic framework with reusable components to avoid extra and frequent maintenance

Frequently Asked Questions


What is Automation Feasibility in Software Testing?

Automation feasibility analysis is the process of determining which test cases should be automated, based on factors such as the required tools or frameworks, infrastructure availability, ROI, and so on.


Which factors should be considered to justify the feasibility of automation?

Major factors to be considered during feasibility analysis are ROI, available infrastructure, test strategy, tool & framework being used, availability of time, repetitive nature & complexity of the test cases, and so on.


Why is Automation Feasibility Analysis important?

100% automation is not possible, and even among the test cases that can be automated, not all should be, due to factors such as ROI, application stability, and how often the tests need to run. Starting automation testing without a feasibility analysis therefore makes the process inefficient.


What is an RPA Feasibility study?

An RPA feasibility study is the process of analyzing the technical feasibility of automating a process after examining it step by step, down to the smallest detail.


Why do Automation Projects Fail?

Most automation testing projects fail due to a lack of clarity on which test cases to automate, the use of the wrong tools, a lack of automation goals, and a lack of the skills needed to achieve those goals. An automation feasibility study helps your team overcome such issues.

by admin | Feb 27, 2020 | Performance Testing, Fixed, Blog |

This blog covers performance testing and its strategy. Performance testing helps the business understand the stability and reliability of an application under demanding conditions.

What is Performance Testing?

In layman's terms, a performance test observes the behavior of an application hosted on a server under various loads. The goal is not to validate the functional flow and find functional bugs, but to test a mature application under critical load, volume, and stress conditions and verify that the agreed benchmark parameters are met before the application goes live.

Types of Performance Testing

Among the levels of testing, performance testing falls under non-functional testing, given its nature. It is further divided into the following types:

Load testing

This test determines the application's behavior under a specific load. Load can be expressed, for example, as the number of user hits per second or the number of transactions. The goal is to verify how the application performs when the server is under that load.

Stress Testing

Unlike load testing, stress testing injects a load higher than the threshold to see how the application behaves. It can tell us the breaking point of the application and how often it goes down.

Volume Testing

This test is also called flood testing, as it injects a large volume of data into the application to see how the queues and other interfaces withstand the inflow.

Endurance Testing

This testing subjects the application to a nominal load but keeps it running much longer than it typically runs in the field. It measures reliability, since it considers how long the application lasts before it fails.

Spike testing

This test applies a sudden, steep rise in load to the application to see how well it withstands the spike.

Scalability testing

This testing verifies how the application behaves as the load keeps increasing, for example by adding more users and more data volume.
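To make the differences between these test types concrete, the sketch below expresses each one as a load profile: the number of virtual users to run in each minute of the test. The shapes and numbers are hypothetical and only illustrate the idea; performance tools configure this through their own schedules. Volume testing is left out because it varies the amount of data rather than the number of users.

```python
def load_profile(kind, minutes, normal_users=100, threshold_users=500):
    """Return the number of virtual users to run for each minute of a test.

    normal_users and threshold_users are hypothetical capacity figures.
    """
    if kind == "load":
        # Ramp up to the expected load over the first fifth, then hold it.
        ramp = max(1, minutes // 5)
        return [min(normal_users, normal_users * (m + 1) // ramp)
                for m in range(minutes)]
    if kind == "stress":
        # Keep climbing to roughly twice the threshold to find the breaking point.
        step = max(1, 2 * threshold_users // minutes)
        return [step * (m + 1) for m in range(minutes)]
    if kind == "spike":
        # Normal load with one sudden, steep burst in the middle minute.
        mid = minutes // 2
        return [2 * threshold_users if m == mid else normal_users
                for m in range(minutes)]
    if kind == "endurance":
        # Nominal load held constant for a long run.
        return [normal_users] * minutes
    if kind == "scalability":
        # Add another normal_users worth of users every 10 minutes.
        return [normal_users * (1 + m // 10) for m in range(minutes)]
    raise ValueError(f"unknown profile: {kind}")


print(load_profile("spike", 10))
```

Comparing the lists side by side shows the key distinction: load and endurance tests stay at the expected level (for different durations), while stress, spike, and scalability tests deliberately go beyond it.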

Why is performance testing needed?

Compared with functional testing, a performance test is more useful for understanding reliability parameters. The intention is not just to find defects but to examine the application's behavior under load. The specification typically defines the following:

How many concurrent users can log in to the application?

How many transactions should the application be processing upon peak load?

What is the maximum acceptable response time?

A few other parameters are gathered as part of Non-Functional Requirements (NFR) gathering and tested accordingly.

Without performance testing, we would not understand the capacity, flexibility, and critical parameters of an application under load, and there is a high chance of failure in the field due to a poor understanding of the benchmark parameters.

Parameters that are validated during test

Every test validates certain parameters. In performance testing, these parameters fall into two groups:

Server-side parameters:

CPU utilization

Memory utilization

Disk utilization

Garbage collection

Heap dumps/thread pooling

Client-side parameters:

Load

Hits per second

Transactions per second

Response times
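As a hedged sketch, the client-side figures can be derived from raw request samples recorded during a test window. The sample format below (elapsed milliseconds plus a success flag per request) is an assumption for illustration, not any particular tool's log format; applying the same calculation to completed business transactions instead of individual requests gives transactions per second.

```python
import statistics


def summarize(samples, duration_s):
    """Summarize request samples collected over a test window.

    samples: hypothetical list of (elapsed_ms, ok) tuples, one per request.
    duration_s: length of the measurement window in seconds.
    """
    elapsed = sorted(ms for ms, _ in samples)
    n = len(elapsed)
    # Nearest-rank 90th percentile: smallest value covering 90% of samples.
    rank = (90 * n + 99) // 100
    return {
        "hits_per_sec": n / duration_s,
        "error_rate_pct": 100.0 * sum(1 for _, ok in samples if not ok) / n,
        "avg_ms": statistics.mean(elapsed),
        "p90_ms": elapsed[rank - 1],
    }


samples = [(120, True), (180, True), (150, True), (900, False), (130, True),
           (170, True), (160, True), (140, True), (200, True), (110, True)]
print(summarize(samples, duration_s=5))
```

In this sample, one slow failed request pulls the average (226 ms) above the 90th percentile (200 ms), which is why response times are usually reported as percentiles as well as averages.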

Entry & Exit criteria

Like every other type of test, performance test also defines its own entry and exit criteria.

Entry criteria

Entry criteria define when the application is eligible to undergo a performance test. The key prerequisites are:

Functional testing must have been thoroughly conducted

Application must be stable

No major showstoppers should be open

A dedicated NFT (non-functional testing) environment must be available

The required test data should be ready

Exit criteria

Exit criteria define when the test can be concluded. The following activities must be completed before a proper sign-off from a performance test perspective:

The application must have been tested against all the NFRs

No major defects should remain open, and no customer use cases should be failing

All the test results should be properly analyzed

Reports and results must be published to all stakeholders for sign-off

Having covered the basics and parameters of performance testing, let's now discuss the performance testing strategy in detail.

Performance Testing Strategy

Below are the key pointers to consider in defining the performance testing strategy:

Understanding Organization Requirement

Assess the Application nature and category

Take stock of last year’s performance of existing live applications

Defining Scope

Tool identification

Environment readiness

Risk assessment

Results analysis and report preparation

Understanding Organization Requirement

Every organization provides certain services to its customers, and the importance of performance testing depends on those services and how customers access them. For example, an e-commerce company relies entirely on customer hit rates and successful transactions. More than 1,000 customers may log in at a time to buy products, and during a sale this can peak at a million customers logged in at once. Such an organization needs strong performance testing before going live, and spending on a performance testing tool is unavoidable.

Assess the Application nature and category

Identify the number of applications that are live in a given year and assess each one based on its nature: whether it is customer-facing or accessed by back-end users or the operations team.

This determines how critical each application is for performance testing. Also, understand the technology each application is built with and the number of releases planned, along with the criticality of each release. Prepare a list based on these pointers.

Take stock of last year’s performance of existing live applications

Some applications will have been live for a few years. Understand the performance challenges they faced and assess the impact based on that history. This exercise gives us concrete performance testing requirements for each application.

Defining Scope

Assessing the nature of the application and taking stock of its history tells us what to test. Next, prepare the NFRs, which essentially means producing a well-documented test plan for the application. This phase includes identifying non-functional requirements such as:

Whether the application serves many users or a limited set of users

Identification of the key business transactions to be tested

Identification of past performance blockers, to be included in the testing scope

Forecasting the future load on certain services and conducting testing as needed

Tool identification

Tool selection is essential to the business: the right tool helps manage cost and makes test execution hassle-free. A proper analysis must be done before selecting the tool that best suits the assignment. Consider:

Whether it is an open-source or a licensed tool

The tool that is selected should support any test activity with minimal configuration

The tool should provide a good reporting mechanism

The tool should have a decent community to help solve the problems we face

To expand on the first point, the tool need not always be free. Choosing a licensed tool is a business call, based on the high-end features it offers and the security assurances that come with it. However, if the test engineer finds that a freely available tool supports the operation as well as the licensed one, the open-source tool is preferable, since it saves cost. Some popular tools are listed below.

Popular Performance Testing tools are:

JMeter (open source)

LoadRunner (commercial)

Silk Performer (commercial)

NeoLoad (commercial)

Application performance management tools:

AppDynamics

Wily Introscope

PerfMon

nmon

Environment Readiness

This is one of the major considerations for performance testing. Unless there is an isolated environment for NFT, we cannot trust the test results or the application's critical parameters (response time, think time, throughput, etc.). A dedicated environment is therefore highly recommended, as the tasks involved include:

Inserting a huge number of transactions into the DB through API calls

Performing concurrent login scenarios

Observing the response time while navigating between pages

The environment must be maintained properly and should be highly stable
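A minimal sketch of the concurrent login scenario, using Python's standard thread pool to fire simultaneous calls and record each call's response time. `fake_login` is a hypothetical stand-in; in a real NFT environment it would be a request to the application's login endpoint, and a dedicated performance tool would normally generate the load.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def fake_login(user_id):
    """Hypothetical stand-in for a login request; sleeps 50-150 ms."""
    time.sleep(random.uniform(0.05, 0.15))
    return True


def run_concurrent_logins(users, login=fake_login):
    """Start `users` logins at once and return each call's elapsed seconds."""
    def timed(user_id):
        start = time.perf_counter()
        login(user_id)
        return time.perf_counter() - start

    # One worker per user so all logins run at the same time.
    with ThreadPoolExecutor(max_workers=users) as pool:
        return list(pool.map(timed, range(users)))


durations = run_concurrent_logins(20)
print(f"{len(durations)} logins, slowest {max(durations):.3f}s")
```

Running the same scenario on a shared, unstable environment would mix in other teams' traffic, which is exactly why the measured times are only meaningful in a dedicated environment.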

Risk assessment

Most tasks run into one or more hurdles down the line. We should foresee them during the initial planning discussions; calling them out proactively to all stakeholders helps create a robust plan and reduces the negative impact on the business.

Risk assessment helps us understand the business impact of situations such as:

Dependency on the development and environment teams for support when the application breaks or is down

Going live with a defect that may surface at the customer site

Being unable to test a particular scenario because the infrastructure is unavailable in the lower environments

Testing a feature with some deviation from the plan

Results analysis and report preparation

For any execution, test results are key, as they are the outcome of the effort spent.

After execution, the results generated by the performance testing tool must be analyzed, the various parameters assessed, and an easily understandable report on the outcome shared with all stakeholders. Performance testing tools capture the results of each test run, so the data can be extracted from them directly.

In some cases, performance engineering goes one step further to identify where the bottlenecks are and what the solution could be. This activity requires an understanding of the architecture and the ability to find error-prone areas.

by admin | Apr 16, 2020 | Automation Testing, Fixed, Blog |

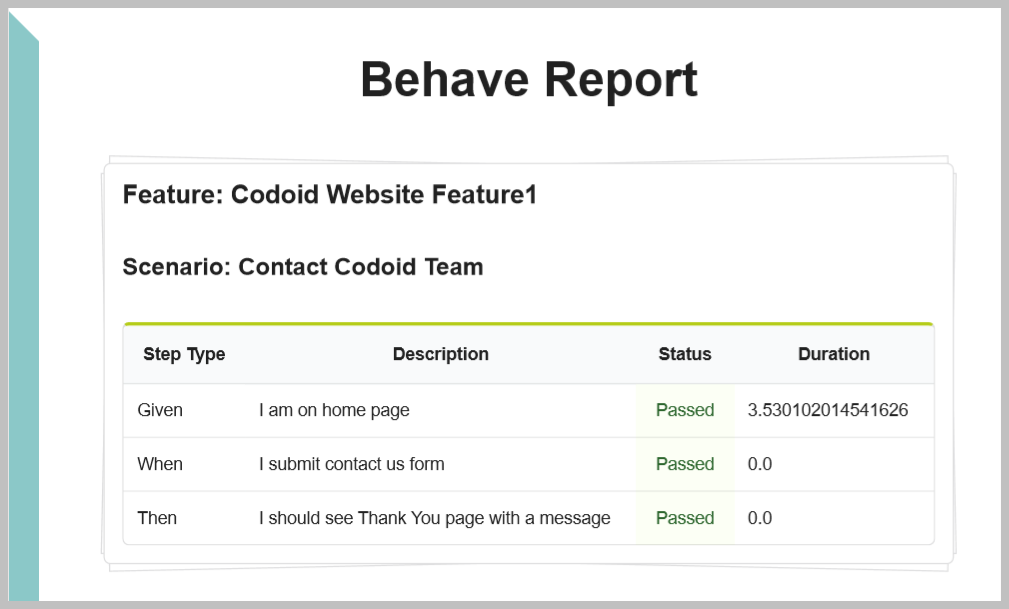

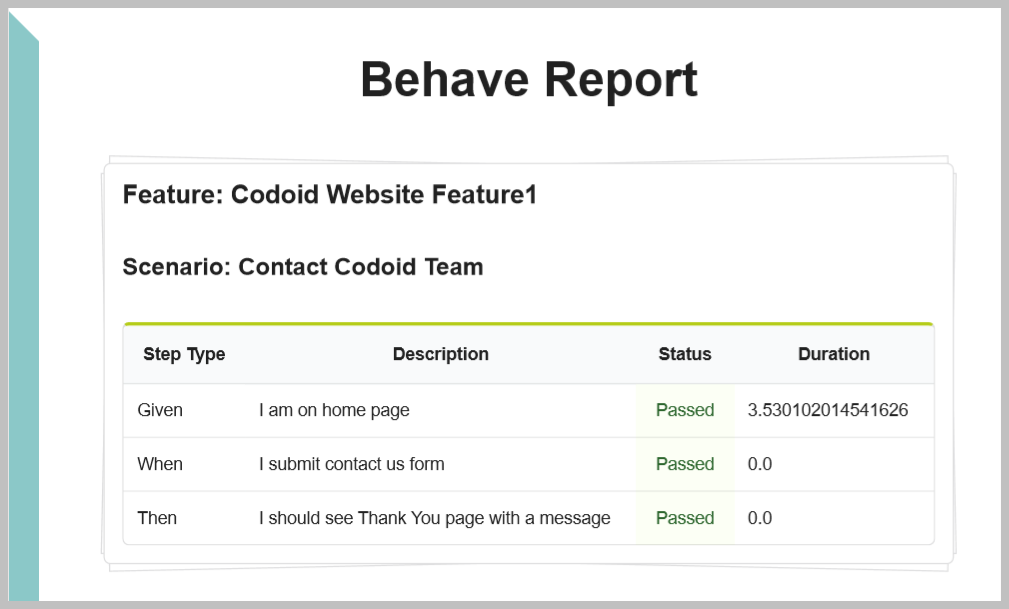

Generating a customized test automation PDF report adds great value for your team. For instance, if you wish to share a high-level automation test report with your business stakeholders, emailing it as a PDF is a simple and viable solution. However, a predefined report full of technical detail is of little interest to the business. As a software testing services company, we use different report templates for different audiences. In this blog article, you will learn how to create a customized automation testing report using Behave and Python.

PDF Report Package Installation

Install the pdf_reports package, which the script below imports, using pip install pdf_reports. For Windows users: if you see OSError: dlopen() failed to load a library: cairo / cairo-2, you need to install GTK+. Refer to the following URL for more details – Install GTK+ with the aid of MSYS2

Generating Behave JSON Outputs

In this article, we use the behave framework's JSON output to create the PDF report. To create a JSON report from behave, use the command below.

behave -f json -o reports.json

Consuming the JSON Output

Create a Python file and paste in the code below.

from pdf_reports import pug_to_html, write_report
import json
with open(r'../reports.json', encoding='utf-8') as file:
    json_report = json.load(file)
html = pug_to_html("template.pug", title1="My report", json_report=json_report)
write_report(html, "example.pdf")

Code Explanation

Line #1 & #2 – Package imports

Line #3 – Opens the JSON output file generated by the behave framework.

Line #4 – Loads the JSON content into Python objects.

Line #5 – Calls pug_to_html, which renders the Pug template with the JSON data into HTML.

Line #6 – Calls write_report, which converts the HTML into the PDF file (example.pdf).

Note – To generate the report, you need a template.pug file; it is the first argument of the pug_to_html call.
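Before writing the template, it can help to see the structure it walks: behave's JSON output is a list of features, each with `elements` (scenarios and backgrounds) whose `steps` carry a `result` with a `status` and `duration`. The sketch below traverses the same fields in plain Python to count step statuses. The inline sample is a trimmed, hand-written example of that structure, not real behave output.

```python
import json
from collections import Counter


def count_step_statuses(report):
    """Count step statuses across all scenarios in a behave JSON report.

    Steps without a "result" entry (if any) are counted as "untested".
    """
    counts = Counter()
    for feature in report:
        if feature.get("keyword") != "Feature":
            continue
        for scenario in feature.get("elements", []):
            if scenario.get("type") != "scenario":
                continue  # skip backgrounds, as the template does
            for step in scenario.get("steps", []):
                counts[step.get("result", {}).get("status", "untested")] += 1
    return counts


sample = json.loads("""
[{"keyword": "Feature", "name": "Login",
  "elements": [{"type": "scenario", "name": "Valid login",
    "steps": [
      {"keyword": "Given", "name": "the login page is open",
       "result": {"status": "passed", "duration": 0.8}},
      {"keyword": "When", "name": "valid credentials are entered",
       "result": {"status": "failed", "duration": 1.2}},
      {"keyword": "Then", "name": "the dashboard is shown",
       "result": {"status": "skipped", "duration": 0.0}}]}]}]
""")
print(count_step_statuses(sample))
```

The sample also shows a limitation of the simple template below: any status other than passed, including the skipped step here, falls into its `else` branch and is shown as Failed.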

Template.pug file

Pug is a template engine. The pdf_reports package uses Pug templates to generate the HTML that is then converted into the PDF output. Let's see how to create the template file in your project.

1) File creation – Create and open a template.pug file in your project.

2) Report title – The line below creates an H1 tag and applies Semantic UI CSS classes.

h1.ui.header Behave Report

3) Sidebar – Creates a sidebar in the report, which enhances the look and feel.

#sidebar: p Test Automation Report

4) Iterate over the features in the JSON data

each feature in json_report
  if feature.keyword == 'Feature'
    div.ui.piled.segment
      h4.ui.header Feature: {{feature.name}}

5) Iterate over the scenarios of each feature (nested inside the feature segment)

each scenario in feature.elements
  if scenario.type == 'scenario'
    h5.ui.header Scenario: {{scenario.name}}

6) Iterate over the steps of each scenario and render them as a table (nested under the scenario heading)

table.ui.olive.table
  thead
    tr
      th Step Type
      th Description
      th Status
      th Duration
  each step in scenario.steps
    tr
      td {{step.keyword}}
      td {{step.name}}
      if step.result.status == 'passed'
        td.positive.center.aligned Passed
      else
        td.negative.center.aligned Failed
      td {{step.result.duration}}

7) Run the Python file that calls pug_to_html. If the report is generated successfully, you can see the output in example.pdf, the file name passed to write_report.

Full Code

h1.ui.header Behave Report
#sidebar: p Test Automation Report
each feature in json_report
  if feature.keyword == 'Feature'
    div.ui.piled.segment
      h4.ui.header Feature: {{feature.name}}
      each scenario in feature.elements
        if scenario.type == 'scenario'
          h5.ui.header Scenario: {{scenario.name}}
          table.ui.olive.table
            thead
              tr
                th Step Type
                th Description
                th Status
                th Duration
            each step in scenario.steps
              tr
                td {{step.keyword}}
                td {{step.name}}
                if step.result.status == 'passed'
                  td.positive.center.aligned Passed
                else
                  td.negative.center.aligned Failed
                td {{step.result.duration}}

In Conclusion

The above template is quite simple; it does not handle BDD Scenario Outlines, tags, or screenshots. As an automation testing company, we use a comprehensive Pug reporting template to generate PDF reports. Once the template is in place, you can create multiple reports for different audiences. For successful test automation, make your reports easily accessible to everyone on the team.

by admin | Mar 21, 2020 | Regression Testing, Fixed, Blog |

Regression testing is conducted to ensure that code fixes have not broken the application somewhere else. Instead of rerunning only the failed case, we run the test cases that passed before to confirm the presence or absence of new defects. Regression testing helps us track the stability of the application over time.

This test should be run at an agreed frequency to ensure that new functionality, newly integrated components, and bug fixes have not affected existing system functionality.

Why is regression testing necessary?

We might wonder why we should spend time testing the same functionality again and again when it passed before. The reason is that there is a real chance that a new change introduces a problem that didn't exist before.

Below are the scenarios in which regression testing is highly recommended.

When new features are added

In any application development project, we keep adding new sets of features to the application as we move through sprint cycles. Every time a new feature is developed on top of existing ones, regression testing must be done to ensure that the new flow or use case does not impact existing functional flows.

When bug fixes are delivered

When the development team fixes bugs observed in the system, it's the test team's responsibility to re-test them and then run a regression test. Let us quickly understand the difference between the two.

1. Re-testing – testing the bug that was fixed to confirm it no longer occurs.

2. Regression – ensuring that the fix has not affected any other related functionality.

Now we understand the importance of doing both tests. Regression testing helps us identify which change broke a part of the system that was working fine earlier. Depending on how severely the newly introduced bug affects or blocks testing activities, the change may be rolled back if needed.

When application re-platforming happens

Applications are often re-platformed. This has become especially common since cloud technology emerged, with many applications being migrated from conventional virtual environments to cloud infrastructure.

Migration activities usually bring no functional changes to the application; the existing system is simply moved or re-hosted. In this case, regression testing is mandatory, because the whole application was migrated and we need to confirm that it still works as intended.

Types of regression tests

Regression can appear redundant, since we repeat the same tests again and again, irrespective of whether they passed before, just to uncover new issues. It also becomes an overhead without a proper regression plan, because of challenges around timelines, resources, budget, and many other constraints. Given these constraints, there are two types of regression tests.

Complete regression test

A full regression test is needed in the following cases:

When there are significant changes to the application from a functional or non-functional standpoint.

When a major release is planned.

When core functionality or business logic is modified.

To test thoroughly, the regression suite has to be updated regularly so that it stays current. This testing confirms that the application didn't break and is working as intended.

Partial regression test

This kind of testing is mainly adopted when a particular module within the application changes and testing timelines are very limited, or when a small release with minimal bug fixes is planned. We only need to identify the edge cases and the scenarios where this module integrates with other modules. These cases should be identified based on experience and past learnings.

For the same reason, a sanity test is considered a subset of the regression test: given the time crunch, we do not execute all the test cases but pick only the critical edge-case scenarios.
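One way to pick a partial regression set is to select every test that exercises the changed module, including integration tests that span it, plus the tests flagged as critical. The test-to-module mapping and the `critical` flag below are hypothetical; in practice this knowledge comes from experience and past defects, as described above.

```python
def select_partial_regression(tests, changed_modules):
    """Return the IDs of tests to run for a partial regression.

    tests: dict of test ID -> {"modules": set of modules, "critical": bool}
    A test is selected if it touches a changed module or is flagged critical.
    """
    changed = set(changed_modules)
    return sorted(
        test_id
        for test_id, info in tests.items()
        if info["modules"] & changed or info["critical"]
    )


tests = {
    "TC_cart_add": {"modules": {"cart"}, "critical": False},
    "TC_checkout_pay": {"modules": {"cart", "payment"}, "critical": True},
    "TC_profile_edit": {"modules": {"profile"}, "critical": False},
    "TC_login": {"modules": {"auth"}, "critical": True},
    "TC_search": {"modules": {"catalog"}, "critical": False},
}
print(select_partial_regression(tests, ["payment"]))
```

A change to the payment module selects the checkout test (which integrates cart and payment) and the critical login test, while unrelated tests such as search are left for the next full regression.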

Automation testing role in regression

If there is one type of testing where automation fits best, it is regression. Automating regression can give the best return on investment, as the manual effort spent is greatly reduced. Automation also brings accuracy. The testing team should extend the suite's scope by adding the features delivered in each iteration; running a regression cycle after every iteration helps find possible side effects.

DevOps integration with regression test suite

DevOps is a buzzword in the industry at this point in time. With continuous integration and deployment, teams can achieve frequent builds and deployments while avoiding possible downtime. The same applies to testing: integrating the regression suite with DevOps helps us manage frequent executions. The more frequently the suite runs, the more thoroughly each change is regression-tested, which leads to successful validation of the software product. The more regression testing we do, the less likely the system is to fail in production.

Regression Testing Best Practices:

Identify incremental Regression Test cases based on releases.

For every release, plan dedicated effort for regression testing (as a rule of thumb, 30% of the functional testing effort should be spent on regression).

Include a share of end-to-end tests in the regression suite.

Hold a session with the business team to identify critical scenarios based on application functionality, and include them in the regression suite.

Plan to have maximum Regression coverage in Automation to minimize Test Execution effort.

Major defect fixes, the completion of each test execution cycle, and major deployments all call for regression testing.
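A quick worked example of the 30% rule of thumb, combined with automating part of the regression suite. The functional effort figure and the automated share are hypothetical, and the sketch assumes automated tests need no manual execution effort, which ignores script maintenance and result analysis.

```python
def regression_hours(functional_hours, ratio_pct=30, automated_pct=0):
    """Manual regression effort for one release, in hours.

    ratio_pct: share of functional testing effort planned for regression
               (30% per the rule of thumb above).
    automated_pct: assumed share of the regression suite that is automated;
                   that share is treated as needing no manual execution.
    """
    planned = functional_hours * ratio_pct / 100
    return planned * (100 - automated_pct) / 100


print(regression_hours(200))                    # planned regression effort
print(regression_hours(200, automated_pct=75))  # manual part left after automation
```

With 200 hours of functional testing, 60 hours are planned for regression; automating 75% of the suite leaves about 15 hours of manual regression execution.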

by admin | Mar 24, 2020 | Software Testing, Fixed, Blog |

This blog assumes we already know the importance of testing, so it's time to understand the different types of software testing and when to use each. A proper test plan must document each type of testing, with a description and timelines. The three major classifications based on the mode of execution are:

White/clear box testing

White box testing is conducted at a technical, low level. It validates the structure, design, and implementation of the system. Writing unit tests and integration tests, as well as designing scripts at the system level, falls under this classification.

This type of testing requires an understanding of the source code. Its objective is to verify every part of the source code, such as decision branches, loops, and statements.

Unit testing, which is done by developers, is part of white box testing.
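To make the idea concrete, here is a minimal sketch using Python's standard `unittest` module. The `apply_discount` function is a hypothetical unit under test. The point is that each test is designed by reading its source, so that the loop runs zero and several times and both outcomes of the `if` decision are exercised.

```python
import unittest


def apply_discount(amounts, threshold=100.0, rate=0.10):
    """Sum the amounts and apply a discount when the total exceeds threshold."""
    total = 0.0
    for amount in amounts:      # loop: zero, one, or many iterations
        total += amount
    if total > threshold:       # decision: true branch and false branch
        total -= total * rate
    return round(total, 2)


class ApplyDiscountWhiteBoxTest(unittest.TestCase):
    """Each test targets a path that is visible in the source code."""

    def test_loop_runs_zero_times(self):
        self.assertEqual(apply_discount([]), 0.0)

    def test_false_branch_no_discount(self):
        # Loop runs twice; total equals the threshold, so no discount.
        self.assertEqual(apply_discount([40.0, 60.0]), 100.0)

    def test_true_branch_discount_applied(self):
        self.assertEqual(apply_discount([150.0]), 135.0)
```

Run it with `python -m unittest`. A coverage tool such as coverage.py (`coverage run --branch -m unittest`) can then confirm that every statement and both branches were taken.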

Black box testing

This is purely functional validation, typically performed by manual functional testers. Tests are designed from the requirements, executed manually, and their results are verified. Test design techniques are applied in this process to keep test coverage high without the overhead of documenting many repetitive tests.

Testers who perform this type of testing do not review code or assess code or statement coverage. They have no knowledge of the code that was written. Instead, they execute the application and validate its functionality to ensure that it does what is expected of it.
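As an illustration of designing black box tests from the requirement alone, the sketch below applies boundary value analysis and equivalence partitioning. These are two widely used test design techniques that the article does not name explicitly. The requirement ("valid ages are 18 to 60") and the function `is_eligible_age` are hypothetical. Seven well-chosen cases stand in for testing every possible age.

```python
import unittest


def is_eligible_age(age: int) -> bool:
    """Hypothetical unit under test; a black box tester never reads this body."""
    return 18 <= age <= 60


class AgeFieldBlackBoxTest(unittest.TestCase):
    """Cases derived only from the requirement 'valid ages are 18 to 60'."""

    # Boundary values: on and just outside each edge of the valid range.
    BOUNDARIES = {17: False, 18: True, 60: True, 61: False}
    # One representative per equivalence partition: below, inside, above.
    PARTITIONS = {5: False, 35: True, 90: False}

    def test_boundaries_and_partitions(self):
        for age, expected in {**self.BOUNDARIES, **self.PARTITIONS}.items():
            with self.subTest(age=age):
                self.assertEqual(is_eligible_age(age), expected)
```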

Grey box testing

This is a blend of white box and black box testing, covering both strategies. A tester who performs grey box testing has partial knowledge of the code. Typically, they execute a function with different inputs and assess the quality of its behavior.

Black box testing can be further divided into three major types:

Functional testing

In this testing type, the focus is on validating functionality against the requirements. It simulates how a user would use the product, and its intention is to determine whether the product has been built correctly.

Functional testing is further classified into the following types:

Smoke testing

This is a build verification test. Once development delivers a build, it is essential to execute the most common scenarios to confirm that the given revision of the build is a good candidate for further testing.
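A smoke run can be as simple as a short list of named checks that must all pass before the build is handed over for further testing. In the minimal Python sketch below, the checks are plain callables. In a real project they would typically drive the UI or call the application's health endpoints. The function name and the pass/fail convention are assumptions for illustration.

```python
from typing import Callable


def run_smoke_checks(checks: dict[str, Callable[[], bool]]) -> tuple[bool, list[str]]:
    """Run each quick check against a new build and collect the failures.

    A check that returns False or raises counts as failed. The build is
    accepted for further testing only when every check passes.
    """
    failures = []
    for name, check in checks.items():
        try:
            passed = check()
        except Exception as exc:  # a crash during a smoke check is a failure too
            failures.append(f"{name}: raised {exc!r}")
            continue
        if not passed:
            failures.append(f"{name}: returned False")
    return len(failures) == 0, failures
```

Keeping the check list short is deliberate: a smoke run should answer "is this build worth testing?" within minutes, not replace the regression suite.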

Sanity testing

A sanity test is a prioritized test. If the timelines given do not match the amount of testing to be done, we pick the edge cases and the highest-priority test cases to judge the sanity of the build. Ideally, this is a subset of regression testing.
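Picking the sanity subset when time is short can be sketched as a greedy selection: take tests in priority order and keep each one that still fits in the time available. The `PlannedTest` record, its fields, and the greedy rule are hypothetical illustrations. The greedy rule is one simple choice, not an optimal schedule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedTest:
    name: str
    priority: int   # 1 = highest priority
    minutes: float  # estimated execution time


def select_sanity_subset(tests, budget_minutes):
    """Greedily pick the highest-priority tests that fit in the time budget.

    Ties on priority go to the shorter test, so more tests fit. A test that
    does not fit is skipped, and lower-priority tests are still considered.
    """
    selected, used = [], 0.0
    for test in sorted(tests, key=lambda t: (t.priority, t.minutes)):
        if used + test.minutes <= budget_minutes:
            selected.append(test)
            used += test.minutes
    return selected
```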

Ad hoc testing

This is functional testing without any concrete plan: we execute scenarios based on the experience we have built up with the application.

Non-functional testing

In this type of testing, beyond validating functionality, we mainly focus on the performance of the application by subjecting it to a specific, predetermined load. It is not limited to performance, though; many other characteristics of the application are validated as well. The types of non-functional testing are:

Performance testing

It helps to assess the stability and responsiveness of software under a given workload.

Volume testing

It tests the software with a huge volume of data and assesses how the software responds to that volume.

Load testing

It checks how a program handles a heavy load, such as a large number of users or requests. A huge spike may also be applied within a short span of time to understand how the system copes with it.
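A minimal load-test harness can be sketched with the standard library alone: a thread pool fires many calls at once and records errors and response times. The sketch assumes the operation under test is a Python callable, for example a hypothetical function that sends one HTTP request to the system under test. Dedicated load-testing tools remain the usual choice for realistic tests.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def load_test(operation, total_requests=200, concurrency=20):
    """Call `operation` total_requests times from `concurrency` worker threads.

    Returns the error count plus mean and 95th-percentile latency in ms.
    """
    if total_requests < 1:
        raise ValueError("total_requests must be at least 1")

    def timed_call(_):
        start = time.perf_counter()
        try:
            operation()
            ok = True
        except Exception:  # any exception counts as a failed request
            ok = False
        return ok, (time.perf_counter() - start) * 1000.0

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))

    latencies = sorted(ms for _, ms in results)
    # Nearest-rank 95th percentile: at least 95% of calls took this long or less.
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]
    return {
        "requests": total_requests,
        "errors": sum(1 for ok, _ in results if not ok),
        "mean_ms": statistics.mean(latencies),
        "p95_ms": p95,
    }
```

To imitate the spike described above, raise `concurrency` sharply for a short run. Because of CPython's global interpreter lock, threads suit I/O-bound operations such as network calls; a CPU-bound operation would not run truly concurrently in this harness.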

Security testing

It checks how the system under test behaves when vulnerabilities are exploited or malicious input is injected, and whether it can recover from such conditions. It is predominantly used to verify that the application is protected against threats and risks.

Localization testing

When we declare that an application has multilingual support, we need to perform localization testing to ensure that the application can display pages and content in the user's local language. All links and content are validated to ensure that they are tailored to that specific language.
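Part of localization testing can be automated by checking that every supported language has a translation for each piece of UI text. The sketch below assumes the application keeps its strings in per-locale key/value catalogs, as many internationalization setups do. The catalog layout, the `"en"` base locale, and the function name are hypothetical.

```python
def find_localization_gaps(catalogs, base_locale="en"):
    """Compare every locale's string catalog with the base locale.

    catalogs maps a locale code to a {message_key: translated_text} dict.
    Returns {locale: sorted list of keys that are missing or left blank}.
    """
    base_keys = set(catalogs[base_locale])
    gaps = {}
    for locale, messages in catalogs.items():
        if locale == base_locale:
            continue
        # A key counts as a gap when it is absent or contains only whitespace.
        missing = {key for key in base_keys if not messages.get(key, "").strip()}
        if missing:
            gaps[locale] = sorted(missing)
    return gaps
```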

Compatibility testing

When we publish software, we declare which hardware, operating systems, networks, browsers, devices, etc. it supports; this is part of the release notes. We need to ensure that the software works without issues under all of these conditions. Testing done to validate the software on different hardware, operating systems, devices, etc. is called compatibility testing.

Endurance testing / Soak Testing

A specific load is given to the Application for a prolonged period of time to assess system performance/behavior.
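Memory growth is one of the classic problems a soak test is meant to catch. As a minimal sketch, assuming the workload can be driven from Python as a callable, the harness below repeats an operation for a long period and uses the standard `tracemalloc` module to sample how much memory Python has allocated. It only sees allocations made by Python in the test process, so for a separate server process you would monitor that process's memory with an external tool instead. The function names are hypothetical.

```python
import time
import tracemalloc


def soak(operation, duration_s=3600.0, sample_every_s=60.0):
    """Repeat `operation` for duration_s seconds, sampling traced memory.

    Returns (elapsed_seconds, current_bytes) samples. Memory that keeps
    rising over a long run is a typical sign of a leak.
    """
    was_tracing = tracemalloc.is_tracing()
    if not was_tracing:
        tracemalloc.start()
    samples = []
    start = time.monotonic()
    next_sample_at = 0.0
    try:
        while (elapsed := time.monotonic() - start) < duration_s:
            operation()
            if elapsed >= next_sample_at:
                current, _peak = tracemalloc.get_traced_memory()
                samples.append((elapsed, current))
                next_sample_at = elapsed + sample_every_s
    finally:
        if not was_tracing:  # leave tracing as we found it
            tracemalloc.stop()
    return samples


def memory_growth(samples):
    """Bytes gained between the first and the last sample."""
    return samples[-1][1] - samples[0][1] if len(samples) >= 2 else 0
```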

Regression testing

Regression testing is conducted to ensure that code fixes have not caused new breakages elsewhere in the application. Instead of rerunning only the failed case, we run all the test cases that passed before to confirm the presence or absence of defects. Regression testing helps us track the stability of the application over time.

This test should be run at an agreed frequency to ensure that new functionality, the integration of new components, and bug fixes have not affected existing system functionality.

Levels of software testing

Each phase of the software development life cycle (SDLC) has a corresponding testing phase. The idea is to test at different levels to ensure the functionality is correct and that we do not deviate from the process. When we discuss levels of testing, we do not limit the scope to the testing phase; rather, we discuss the kind of testing done at every phase of the SDLC, which often involves several teams. The following are the different levels of testing conducted in the SDLC process.

Unit testing

This is the first, low-level test, conducted by the developer. It tests only a particular component to confirm that it works, and the developer has to ensure adequate statement, decision, and path coverage with reference to the requirement being built. If unit tests fail, the developer has to investigate and fix the problem immediately, as no further testing can be done until unit testing is completed successfully.

Integration testing

This is the phase that follows unit testing. At this level, we focus on the integration established between modules and check whether the services send proper requests and return proper responses. It is done by both the testing team and the development team. Integration tests are, in general, a kind of API test, since the whole system is not ready yet. There are two further subcategories, depending on whether a specific master (calling) or slave (called) module is unavailable.

Top down approach

This approach is adopted when a slave or listener (lower-level) component of the application is not yet available for testing, but we need to conduct testing to meet a deadline. In this case, any module that is not yet available is replaced by a stub. A stub is a simulated component that behaves like the real component we would ideally have in place.
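In code, a stub is simply an object with the same interface as the missing lower-level module that returns canned answers. The Python sketch below is hypothetical: `OrderService` plays the higher-level module under test, and `PaymentGatewayStub` replaces a payment module that has not been delivered yet. Python's `unittest.mock` module can generate such stand-ins automatically; a hand-written class just makes the idea explicit.

```python
class PaymentGatewayStub:
    """Stands in for the payment module, which is not ready yet.

    It returns canned responses so the higher-level module can be tested.
    """

    def __init__(self, approve=True):
        self.approve = approve
        self.charges = []  # record calls so the test can inspect them

    def charge(self, order_id, amount):
        self.charges.append((order_id, amount))
        status = "APPROVED" if self.approve else "DECLINED"
        return {"order_id": order_id, "status": status}


class OrderService:
    """Higher-level module under test; it depends on a payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, order_id, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        response = self.gateway.charge(order_id, amount)
        return "CONFIRMED" if response["status"] == "APPROVED" else "PAYMENT_FAILED"
```

When the real payment module arrives, it is passed to `OrderService` in place of the stub and the same tests are rerun.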

Bottom up approach

This is the reverse of the approach above. If the master component or service is not ready for testing and we have to test the other components, the master component is replaced by a dummy driver. Although the driver is not a real system, it is given the ability to drive the other components as needed.
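Conversely, a driver is a small piece of test code that calls the lower-level module the way its missing caller eventually will. In this hypothetical sketch, `calculate_tax` is a finished component whose real caller (say, a billing service) does not exist yet, and `tax_driver` exercises it with input/expected-output pairs. The names and the 18% default rate are illustrative only.

```python
def calculate_tax(amount, rate=0.18):
    """Lower-level module that is ready; its real caller is not built yet."""
    if amount < 0:
        raise ValueError("amount must not be negative")
    return round(amount * rate, 2)


def tax_driver(cases):
    """Driver: feeds inputs to calculate_tax the way the missing caller would,
    and reports every case whose result differs from the expectation."""
    mismatches = []
    for amount, expected in cases:
        actual = calculate_tax(amount)
        if actual != expected:
            mismatches.append((amount, expected, actual))
    return mismatches
```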

System testing

This testing is done once the whole system is available, and its focus is to validate the system as a whole. All possible scenarios and customer use cases must be thoroughly tested. This is usually the most prioritized formal test level, as many bugs are found here, and it is at this level that the system proves itself reliable.

System integration testing

This level of testing is conducted once the application has been connected end to end with all its external upstream and downstream systems. It is the most realistic test, replicating how customers will use the application once it is launched.

User acceptance testing

User acceptance testing is conducted by the customer or product owner. It is the final verification test performed before the application goes live, and it builds the business's confidence that the agreed reliability criteria were met and the application is ready to launch. It is further classified into two sorts based on the environment where it is conducted. The rationale for testing at two sites is to isolate any external factors that may be influencing the results we observe, which helps to pin down problems and find their root causes.

Alpha testing

This test is done at the test team's own site. All observations are noted so they can be compared against the results of the other type of UAT.

Beta testing

This test is done at the customer's site. After execution, the results are compared with the alpha testing results to determine:

1. Whether the same problems occurred at both sites.

2. Whether problems observed at only one site are caused by improper or slow infrastructure rather than by the application.

Conclusion:

The types of testing listed above are the ones predominantly used in most organizations. Some special kinds of testing are conducted for specific needs; for example, testing gaming applications calls for different techniques and testing types. This blog, however, covers the testing types used for the most common kinds of applications, which we estimate cover about 80% of organizations' requirements. Thanks for reading this blog.

by admin | Mar 26, 2020 | Automation Testing, Fixed, Blog |

In this blog article, we list the tools and libraries we use for our Python test automation services. A few popular tools for automating web and mobile apps are Selenium and Appium.

However, there are a few widely used Python packages that will help you create and manage your automated test scripts effectively.

Let’s see them one by one.

PyCharm (Python IDE)

PyCharm is an excellent IDE for developing automation scripts. It comes in Professional and Community editions; we use the Community edition, since we need it only for automation testing.

Behave BDD Framework

There are many BDD frameworks for Python, such as Lettuce, Radish, Freshen, and Behave. We use the Behave BDD framework for our automation testing projects, as it has all the features required for BDD.

openpyxl

openpyxl is a Python library for reading and writing Excel xlsx files (it does not support the legacy xls format). If you want to install and explore openpyxl, please refer to the following blog article: How to read & write Excel using Python

Page Object Pattern

Applying the Page Object Pattern improves reusability and readability. For example, many test cases in your suite may need to visit the login page. In automation, you do not need to write the login page steps in every test case file: create a page object class once and call its methods whenever you need the login steps. The Page Object Pattern lets you write much cleaner test code.
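A minimal Page Object sketch in Python follows. The element IDs (`username`, `password`, `login-button`) are hypothetical. With Selenium you would pass a real WebDriver instance, whose `find_element(by, value)` method accepts the same `("id", ...)` pairs. Here a `FakeDriver` only records the actions, so the example runs without a browser.

```python
class LoginPage:
    """Page object: the one place that knows how the login page is built."""

    # (strategy, value) locators. "id" is the value of Selenium's By.ID, so a
    # real WebDriver's find_element(by, value) accepts these tuples unchanged.
    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("id", "login-button")

    def __init__(self, driver):
        self.driver = driver

    def login(self, username, password):
        """Login steps written once; every test case just calls this method."""
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()


class FakeElement:
    """Demo stand-in for a WebElement that records what was done to it."""

    def __init__(self, log, locator_value):
        self.log = log
        self.locator_value = locator_value

    def send_keys(self, text):
        self.log.append(("type", self.locator_value, text))

    def click(self):
        self.log.append(("click", self.locator_value))


class FakeDriver:
    """Demo stand-in for WebDriver so the sketch runs without a browser."""

    def __init__(self):
        self.log = []

    def find_element(self, by, value):
        return FakeElement(self.log, value)


# Usage: a test case needs a single call for the whole login flow.
driver = FakeDriver()
LoginPage(driver).login("alice", "s3cret")
```

If the login page's markup changes, only the locators in `LoginPage` need updating; the test cases that call `login` stay untouched.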

ReportPortal

Creating and maintaining an automation reporting dashboard is never an easy task for a testing team. Writing robust automated tests is already demanding for an automation tester, and building your own test automation reporting dashboard is additional effort. We have created many test automation reporting dashboards for our clients; nowadays, however, we use ReportPortal instead of reinventing the wheel.

In Conclusion

Python test automation is gaining popularity among automation testers. Follow automation testing best practices, build the right team, and use the right tools and libraries; this will go a long way toward helping you succeed in automation testing.