by admin | Mar 13, 2020 | Automation Testing, Fixed, Blog |

Automation has become central to the IT industry. Why? It executes repetitive tasks with high accuracy, reports results consistently, and needs little or no human intervention, so the cost a company spends on resources falls over the long run.

While automation clearly brings accuracy and cost savings, it is equally true that poorly planned or carelessly implemented automation produces negative results.

So, in this blog, we are going to talk about automation best practices.

Understand the application technology then identify the tool

Before we kick off automation, a lot of groundwork has to be done from the application perspective. We need a thorough understanding of the application development infrastructure, such as

What technologies are being used to develop

Its front end

Business logic

Backend

This will help us identify the tool that best fits the application, which in turn makes for hassle-free test automation implementation.

E.g., if the application front end is developed with AngularJS, Protractor is often preferred to plain Selenium because it offers Angular-specific locators (such as model and binding locators) that Selenium does not provide out of the box.

Determine the type of automation tests

We need to identify which types of testing are to be performed on the application and then do a feasibility study before proceeding with automation. When we say types of tests, we are dealing with two things

Levels of testing

This is the conventional approach in almost all projects: unit testing first, then integration testing, followed by system testing. At each level of this testing pyramid we intensify the test process to make sure nothing leaks through, and in the same fashion we need to ensure that each level is automated as well.

Unit tests

These are usually written by the development team and are most likely automated as part of the build and deployment process. Automated unit tests give us some confidence that all components are running properly and can be tested further.

Integration tests

Integration tests are performed after unit tests. In the agile model, in order to test in parallel with development activities, we need to consider automating the integration tests as well.

These tests are classified as API tests and involve verifying the communication between specified microservices or controllers. The UI might not yet have been implemented by the time we perform them.

System testing

System tests are designed when the system is available. At this level we write a larger number of UI tests that deal with the core functionalities of the application. Considering these tests for automation adds great value.

Types of tests that can be automated

UI layer tests

The user interface is the most important piece of the application because it is what is exposed to the customer, so more often than not the business focuses on automating the UI tests. Alongside in-sprint automation, leveraging the regression test suite gives a great benefit.

API layer tests

We should not rely on UI tests alone, given how time-consuming they are. API tests are far quicker than UI tests, and automating tests in this layer helps us confirm application stability without the UI. This way we can test the application early and catch major bugs sooner.

Database tests

Database test automation adds great value to the team when there is a need to test a new schema, during data migration, or to test any Quartz job that runs against the DB tables.

Conduct feasibility study diligently to deem the ROI

While bringing automation into the testing process is essential, it is equally necessary to analyze the return on investment. Jumping in to automate everything is not a good approach. We recommend understanding the application properly and only then deciding on the tool and framework.

Failing to conduct a feasibility study leads to problems such as weak, flaky scripts and more maintenance effort.

Points to Consider as a best practice for Automation

Choose the best framework

Choosing a suitable framework is part of the feasibility study. Framework selection and implementation should be based on the agreed test approach, such as TDD (test-driven development) or BDD (behavior-driven development).

It is recommended to go with the BDD approach considering current agile methodologies. Because the spec file that drives the test case can be understood by non-technical professionals, it is quite easy to follow and to define the scope of any test case in the application.

Separate tests and application pages code

When designing tests, it is essential to keep the test cases separate from the methods that interact with the application pages. This kind of page object (page factory) implementation spares us unnecessary maintenance. Application screens are likely to change over release cycles; if the tests are not separated from the pages, we need to edit every test case even for a single change.
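The separation described above can be sketched in plain Java. This is only an illustration under stated assumptions: the `Browser` interface, the `FakeBrowser` stand-in, the `LoginPage` class, and the locator strings are all hypothetical, and a real suite would typically drive the page class through Selenium's WebDriver instead.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical minimal driver abstraction (assumption); stands in for a real browser driver.
interface Browser {
    void type(String locator, String text);
    void click(String locator);
    String textOf(String locator);
}

// Page class: the only place that knows the locators of the login screen.
class LoginPage {
    private static final String USERNAME = "#username";
    private static final String PASSWORD = "#password";
    private static final String SUBMIT = "#login";
    private static final String BANNER = "#welcome";

    private final Browser browser;

    LoginPage(Browser browser) {
        this.browser = browser;
    }

    // Tests call this method and never touch a locator directly.
    String loginAs(String user, String password) {
        browser.type(USERNAME, user);
        browser.type(PASSWORD, password);
        browser.click(SUBMIT);
        return browser.textOf(BANNER);
    }
}

// In-memory stand-in so the sketch runs without a real browser.
class FakeBrowser implements Browser {
    private final Map<String, String> fields = new HashMap<>();

    public void type(String locator, String text) {
        fields.put(locator, text);
    }

    public void click(String locator) {
        if ("#login".equals(locator)) {
            fields.put("#welcome", "Welcome, " + fields.get("#username"));
        }
    }

    public String textOf(String locator) {
        return fields.getOrDefault(locator, "");
    }
}

class LoginTestDemo {
    public static void main(String[] args) {
        // The test reads as a business step; a locator change only touches LoginPage.
        LoginPage page = new LoginPage(new FakeBrowser());
        System.out.println(page.loginAs("alice", "secret"));
    }
}
```

If the login screen changes, only the constants or the body of `loginAs` need editing, and every test that logs in keeps working unchanged.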

Showcase meaningful reports

The main purpose of test execution is to observe the results, be it manual or automated testing. We therefore need to integrate a reporting mechanism that clearly states each test step with its corresponding result. When we share the reports with business stakeholders, they should find them meaningful and see that all the correct information has been captured.

Many third-party reporting tools can be integrated into our test suites, such as Extent Reports, Allure reports, and TestNG reports. The choice of reporting tool should be based on the framework implementation, and dashboards should be very clear. BDD frameworks such as Cucumber and Gauge also produce good HTML reports, and these are built in.

Follow distinguishable naming convention for test cases

Naming tests poorly is one of the silliest mistakes we make, and a costly one. A good test case name speaks for the functionality it checks. It also makes the reports look better, since we show the test case name and description in the report, and it helps stakeholders decipher the reports. Besides giving tests meaningful names, it helps greatly if we agree on a consistent naming pattern for all test cases in the test suite.

Follow the best coding standards as recommended

Whatever the programming language, when scripting test cases it is highly important to use the right methods and data structures. It is not enough to find a solution; we should also think of the best approach that reduces execution time. Code should go through peer review, and review by a senior or the team lead, before it is pushed to the VCS.

Here are some best practices for coding standards

Use try-catch blocks

Whenever we write a generic method or a test method, we should ensure it is surrounded by a try block with the appropriate catch blocks and, as a good practice, print a useful, relevant message for the exception that is caught, while still letting the failure surface so that the test is not reported as passed.
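As a minimal sketch of this practice, the helper below catches a specific exception, prints a message that says what went wrong, and rethrows so that the calling test still fails. The class name `TimeoutParser` and the method `parseTimeoutSeconds` are hypothetical names chosen for illustration.

```java
class TimeoutParser {
    // Hypothetical helper (assumption): parses a timeout value read from test configuration.
    static int parseTimeoutSeconds(String raw) {
        try {
            return Integer.parseInt(raw.trim());
        } catch (NumberFormatException e) {
            // Say what was wrong with the input, then rethrow so the failure is not hidden.
            String message = "Invalid timeout value '" + raw + "': expected a whole number of seconds";
            System.err.println(message);
            throw new IllegalArgumentException(message, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(parseTimeoutSeconds(" 30 "));
        try {
            parseTimeoutSeconds("thirty");
        } catch (IllegalArgumentException e) {
            System.out.println("Caught: " + e.getMessage());
        }
    }
}
```

Catching the narrow `NumberFormatException` rather than a blanket `Exception` keeps unrelated problems visible with their original stack traces.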

Use static variables for constant values

In any automation framework there are values that we never want to change; it is best practice to declare them as static constants. One common example is holding the web driver object in a static variable.

We should also consider the scope of each variable when declaring it. Class-level (global) variables are recommended where the same value is used by different methods as the test case executes.
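A small sketch of framework constants in Java follows. The class name and every value in it (browser name, timeout, directory) are assumptions made up for the example, not settings from any particular framework.

```java
import java.time.Duration;

// Hypothetical constants holder (assumption); values are illustrative only.
final class FrameworkConstants {
    static final String DEFAULT_BROWSER = "chrome";
    static final Duration DEFAULT_TIMEOUT = Duration.ofSeconds(10);
    static final String SCREENSHOT_DIR = "target/screenshots";

    // Private constructor: the class only groups constants and is never instantiated.
    private FrameworkConstants() {
    }

    public static void main(String[] args) {
        System.out.println(DEFAULT_BROWSER + ", timeout " + DEFAULT_TIMEOUT.getSeconds() + "s");
    }
}
```

Marking the fields `final` as well as `static` lets the compiler reject any accidental reassignment.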

Generate random data and append to test data

Applications have become stricter about the data they accept. In a data-driven test that fetches data from an Excel workbook, the same test data is reused on every run; if the application rejects duplicate data, the second run needs a different data set. Instead, we can simply append a random number or letter, for example by using the Java Random class, and that serves the purpose.
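The idea can be sketched with `java.util.Random` as follows. The class name `RandomTestData`, the method `makeUnique`, and the suffix format are illustrative assumptions; note that a random suffix makes collisions unlikely but does not strictly guarantee uniqueness.

```java
import java.util.Random;

class RandomTestData {
    private static final String LETTERS = "abcdefghijklmnopqrstuvwxyz";

    // Appends an underscore, one random letter, and a random four-digit number to the base value.
    static String makeUnique(String base, Random random) {
        char letter = LETTERS.charAt(random.nextInt(LETTERS.length()));
        int number = 1000 + random.nextInt(9000); // always in the range 1000..9999
        return base + "_" + letter + number;
    }

    public static void main(String[] args) {
        Random random = new Random();
        // "testuser" stands in for a value read from the workbook.
        System.out.println(makeUnique("testuser", random));
        System.out.println(makeUnique("testuser", random));
    }
}
```

Passing the `Random` in as a parameter makes it possible to use a fixed seed when a failing run needs to be reproduced.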

Write generic methods

While designing the test automation framework and the tests, it is very important to identify the common functionalities and write them as generic methods. Anyone on the team developing a new script should use these methods as much as possible, and any method that does not yet exist can be added at the discretion of the senior automation developer or team lead. Failing to follow this process adds a great deal of tedious maintenance to the test code.

Add relevant comments to the code

Comments on any code snippet help with understanding. This need not be just an inline comment; good documentation answers questions such as,

What exception is thrown by the method upon failure?

What parameters does it take?

What does it return?

Documenting these things gives a crystal-clear understanding to any newcomer who joins the team, so they can start script development without hassle.
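One way to capture those three points in Java is a Javadoc comment. The sketch below is only an example; the `AmountParser` class and its `toCents` method are hypothetical helpers invented to have something to document.

```java
import java.math.BigDecimal;

class AmountParser {
    /**
     * Converts a currency amount shown on a page, such as "1,250.50", to cents.
     *
     * @param displayed the amount text as displayed, with optional thousands separators
     * @return the amount in cents, for example 125050 for "1,250.50"
     * @throws IllegalArgumentException if the text is null, blank, not a number,
     *         or has more than two decimal places
     */
    static long toCents(String displayed) {
        if (displayed == null || displayed.trim().isEmpty()) {
            throw new IllegalArgumentException("Amount text is missing");
        }
        try {
            BigDecimal amount = new BigDecimal(displayed.trim().replace(",", ""));
            // longValueExact rejects values that still have a fractional part.
            return amount.movePointRight(2).longValueExact();
        } catch (NumberFormatException | ArithmeticException e) {
            throw new IllegalArgumentException("Not a valid amount: " + displayed, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(toCents("1,250.50"));
    }
}
```

The `@param`, `@return`, and `@throws` tags map directly onto the three questions listed above.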

Use of properties files/XML files in the code base

Instead of hard-coding the parameters required for a test, such as browser info, driver executable path, environment URLs, database connection passwords, and hostnames, it is good practice to keep them in a properties file or an XML file. One advantage is that these files need not be rebuilt every time we trigger an execution, which keeps the process lightweight and easy to maintain.
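A minimal sketch of reading such settings with `java.util.Properties` is shown below. To stay self-contained it loads from an in-memory string; in a real project the same `load` call would usually be given a reader opened on the properties file. The class name `TestConfig` and the keys `browser` and `base.url` are assumptions for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class TestConfig {
    private final Properties properties = new Properties();

    // Loads key=value pairs; the text stands in for the contents of a properties file.
    TestConfig(String propertiesText) {
        try {
            properties.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException("Could not read configuration", e);
        }
    }

    // Fails fast with a clear message when a required key is absent.
    String get(String key) {
        String value = properties.getProperty(key);
        if (value == null) {
            throw new IllegalStateException("Missing configuration key: " + key);
        }
        return value;
    }

    public static void main(String[] args) {
        String text = "browser=chrome\nbase.url=https://test.example.com\n";
        TestConfig config = new TestConfig(text);
        System.out.println(config.get("browser") + " -> " + config.get("base.url"));
    }
}
```

Switching environments then means editing the file, not the test code.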

Use of proper wait handling

On many occasions some of our tests exhibit flakiness, and it is hard to pin down why they fail. A proper triage often shows that the cause is wait handling. It is better to start with an implicit wait and use an explicit wait only where it is required, so that we do not add too much execution time to the script; bear in mind, though, that the Selenium documentation cautions against mixing the two kinds of wait in the same session. Unless there is a specific requirement, using Thread.sleep is not recommended.
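The idea behind an explicit wait, polling for a condition up to a timeout instead of sleeping for a fixed time, can be sketched in plain Java. This hand-rolled `Waits.waitUntil` helper is an assumption for illustration and is not Selenium's API; in a Selenium suite the library's own explicit wait would normally be used.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

class Waits {
    // Polls the condition until it is true or the timeout elapses; returns whether it became true.
    static boolean waitUntil(BooleanSupplier condition, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() >= deadline) {
                return false;
            }
            try {
                // Short pause between polls only; the total wait ends as soon as the condition holds.
                Thread.sleep(pollInterval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }

    public static void main(String[] args) {
        // Simulated condition that becomes true after about 200 ms.
        long readyAt = System.currentTimeMillis() + 200;
        boolean ready = waitUntil(() -> System.currentTimeMillis() >= readyAt,
                Duration.ofSeconds(2), Duration.ofMillis(50));
        System.out.println("ready = " + ready);
    }
}
```

Compared with a fixed `Thread.sleep(2000)`, the test proceeds as soon as the condition is met and only pays the full timeout when something is actually wrong.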

VCS system availability

When a team automates an application, a version control system must be in place so that, with proper guidance, we can manage code changes and merge them without conflicts or rework. A VCS is recommended for any project that includes test automation.

Bring CI/CD flavor to test automation

DevSecOps is the buzzword in the industry right now. It is not just for developers to build and deploy code but also for testers to automate test execution; having this infrastructure lets us schedule executions at set times or at a particular frequency. We can define pre-execution and post-execution goals. It can also email the reports to the team, so the only job left is to analyze the results. This is a good way to run the regression tests more frequently and ensure all functionalities remain intact.

Capture screenshots upon failure and implement proper logging mechanism

As the test runs, we need to capture screenshots and store them in a specific directory as evidence. Depending on storage constraints, we can decide whether screenshots are required for all passed steps or only for failed scenarios. The screenshots can then be shared with the development team to help them understand the error when we report a bug.

A good framework must also write log files to a directory so that we can see which steps have been executed. Writing these to a file also helps for future reference.

Best practices are lessons learned from experience, so adopting them helps us develop and maintain the automation framework easily and paves a hassle-free path for our daily routines. Some parts of this discussion are open to debate, because the automation world has many flavors of tools, applications, and frameworks, so there may well be different solutions. The best thing to remember is to analyze the need, find a solution, and then make it a practice.

We hope there were some takeaways. Thanks a ton!

by admin | Mar 19, 2020 | Web Service Testing, Fixed, Blog |

With the growing number of technologies, operating systems, web services, browsers, and interfaces through which web apps connect at various touchpoints, web application testing has become more tedious and complex. With continuous and seamless delivery, web app testing is all the more critical to ensuring quality and successful delivery.

Below are the challenges in testing web applications:

Defining Scope:

The first and foremost challenge in web app testing is defining the scope. Since multiple teams work simultaneously on multiple touchpoints, a change in the scope of one application affects the delivery of another, because the two are interdependent.

Careful release planning is expected across teams to ensure the right delivery at the right time. From a testing perspective, the scope has to be thought through and clearly called out. Any unknown areas have to be called out in the dependencies section of the test plan and signed off by the program team.

Scope Creep and Gold Plating:

In projects, and particularly in web app testing, scope creep and gold plating are natural tendencies during delivery.

Scope creep occurs when the business changes the defined scope by declaring a new requirement a “Must have” for the current release. Such requirements were not thought through by the business team during the planning phase and so were not accommodated earlier. The feature now has to be developed and tested within the same timeline.

Gold plating is an additional feature added to the release to please the client or business and create a “Wow factor”, without adding any cost for them.

In both cases there is additional scope to be tested and delivered within the stipulated timeline, and handling such items in testing is challenging.

Technology Challenges:

This is a critical area when it comes to testing. Technologies change every day and product companies keep adding features, so testers need to know both the features of the product and the underlying technologies in order to test thoroughly.

Testers need to know the technology and how it behaves under certain conditions so that they can define scenarios accordingly and achieve good coverage.

Schedule slippage:

Whenever there is a schedule slippage by the development team, it adversely impacts the testing timeline: the business does not compromise on the delivery date, so the delay has to be absorbed by the testing timeline.

Schedule slippage also arises from defects and the time the development team takes to fix them. Ultimately, the testing team still has to complete its work and deliver to the business on time.

Security:

With the growth of the Internet, security is a major concern. For banking and insurance applications, most payments are initiated through the web, so all security aspects of the app need to be validated to ensure that legitimate transactions are sanctioned and malicious attempts are recognized and stopped.

In the retail domain, purchases happen online, and during offer periods lakhs (hundreds of thousands) of transactions and purchase attempts take place. The web app should support legitimate transactions, and orders should be authorized properly.

In the healthcare industry, most transactions, from procuring medical equipment to doctor consultations, happen online through a web interface, and all of them need to be governed to provide the right service.

In the automobile industry as well, most orders are procured through a web interface, and it is critical to test all of these without missing any features.

Performance

Since millions of transactions, irrespective of the industry, happen through web applications, performance testing of web apps is crucial. Various types of performance testing can be performed, such as load testing, stress testing, volume testing, and soak testing.

Identifying the right type of performance testing based on the nature and type of the application is a challenge.

Application Response

Application response varies with the type of device and the network bandwidth. Users will have an annoying experience if slow responses are not captured and reported by the testing team before delivery, so that response times can be improved before release.

We have to plan for such testing during the test planning phase, because response analysis is time-consuming non-functional testing and the right resources should be engaged for it.

Usability Testing

Sometimes a web page and its navigation look correct to the development and testing teams, whereas from the end user's perspective the website is more complicated than expected, and users find it tough to navigate to the right page and collect or process information. In such a case, the website may be unusable from an end-user perspective. The challenge for the testing team is to understand the application from the end user's perspective and provide the right feedback to the development team; missing this can lead to a higher failure rate in delivery.

Compatibility Testing

Before testing commences, detailed thought has to go into which devices, operating systems, and browsers the web app will support. This information should be captured from the development team, architects, or program team, and the testing team has to plan in detail.

Testing on each device, OS, and browser is additional effort and, if not planned properly, increases the effort during execution. Automated tools such as Selenium or UFT, or scripts written in Python, should be planned if many device/OS/browser combinations need to be tested.

API Testing:

Most web apps have an underlying API layer that receives requests from the web app and returns responses to it, so including API testing alongside UI validation is mandatory.

API testing is itself a huge area, and the technology has moved beyond web services to microservices. The testing team has to understand API and microservice concepts to perform API testing, which is a challenge.

Database Testing:

Databases have also moved beyond relational databases to big data, which again extends web testing. Testers need to be strong in SQL and PL/SQL, and this is considered a specialized stream in testing.

Reports Validation:

Reports are a basic querying feature provided by many web apps. They let users know their application status as well as monthly, quarterly, and yearly summaries of their account details.

Users can see the list of transactions made, items purchased, items rejected, invoice dates, payment dates, and so on with a single click through the reporting feature.

Each type of report uses a set of queries. The tester should know SQL to retrieve the information from the database, and the output generated by the report has to be compared with it and validated.

Products Testing:

Many products are delivered as web applications. Standard examples include SAP, PeopleSoft, and business process tools such as PEGA and IBM BPM.

Apart from these, each industry, such as BFSI, retail, and hospitality, has numerous products, such as Kronos, Investran, WMOS, OMS, Calypso, Murex, and T24, which require extensive learning. Mostly a combined BA/QA role is emerging to test such products, which are customized to various customer needs, and there is always a scarcity of QA professionals to test them.

Conclusion:

With the invention of various technologies and products to meet client requirements, there are always many challenges from a QA perspective in testing such applications. It involves careful planning, from project release planning through to identifying QA professionals with the right skills for the project. Understanding the requirements and interface touchpoints and arriving at the right scope is mandatory for successful QA delivery.

Happy Testing!

by admin | Mar 17, 2020 | Software Testing, Fixed, Blog |

Everything that is developed on this planet should be tested, officially or unofficially. In our daily routine we do a lot of testing without realizing it, because it is part and parcel of everyday life. Similarly, the information technology field has adopted testing as one of its strengths to ensure that an application or software works as intended and serves its purpose.

In this blog we share knowledge of software testing from the very basic level, so that even a novice will be able to follow.

To find the motivation to be a software tester, one should reflect on what the role demands. Testing is an art and requires the following skills.

Intelligence

Out-of-the-box thinking

Discipline and punctuality

Planning

Leadership abilities

These skills are common to any profession, but in testing they play an additional role and are mandatory. We keep our fingers crossed that you choose testing as your profession. Be ready to break the system in a methodical manner, and make sure you always help your team launch a flawless product.

Understanding the SDLC

As a prerequisite, we strongly recommend giving yourself a walkthrough of the SDLC (software development life cycle) while going through the content below. This blog can still be understood without SDLC knowledge, but it is recommended because it helps you connect the dots as you learn.

What is software testing?

Software testing is the process of identifying bugs in software by validating the scenarios that are applicable to it. In this formal process, we design test cases based on our understanding of the application and then execute them to judge the correctness of the software.

Why is software testing required?

As an illustration, consider a bank that has launched its internet banking application into production without testing. Customers are going to initiate transactions and apply for the various services that the system offers.

There is a high probability that customer-facing scenarios will fail, which in turn costs the bank its reputation and trust in the market, and it is very expensive for the bank to correct the mistake and rework the application. For example:

1. Initiated transactions might fail for various reasons

2. Funds might not be credited at the receiving end

3. Customers might be unable to query their balance

4. Customers might be unable to apply for cheque leaves through the internet banking system.

The example above is purely software. Now imagine a software component that performs physical actions based on its input, such as the airbag release system in a car, automotive alarms, or elevators; a failure there can cost lives. So we need to keep in mind that if an application or working software is not tested, we may see a huge loss that is difficult to recover from. Now we know why testing is essential.

Principles of testing

There are a few principles that must be learned, which explain the reasoning behind testing from every angle. Let’s go through them quickly.

Early testing is beneficial

The earlier we start testing, the earlier we can stabilize the system, and bugs found early are cheaper than bugs found late. They are cheaper because, if we identify and fix them early, we avoid late regression testing, which also helps us meet deadlines. So we should adopt a process that lets us begin test activities early in the software life cycle.

Absence of errors is a fallacy

Though the intention is to test as much as we can and identify all possible bugs, we cannot guarantee that the system is bug-free and 100% flawless. Some low-level misbehavior may fall outside our test scope or be missed by mischance. Despite this principle, we should always ensure that we do not miss any bug present in customer use cases.

Exhaustive testing can’t be conducted

As part of the testing phase in the software development life cycle we conduct various levels and types of testing, but it is still not feasible to cover every scenario, positive and negative. Given the timelines for the testing phase, we should prioritize the important edge tests and apply proper test case design techniques so that a few test cases give more coverage.

Pesticide paradox (redundant test case)

We should continuously review the test cases to ensure they remain effective and contain no duplicates. If the same tests are executed again and again, they eventually stop finding new defects. Every time there is an operational change or enhancement in a module, we should update the tests accordingly.

Defect clustering

In any software system every component has to be tested thoroughly, yet most of the defects often cluster in a small number of components, sometimes in a module we do not even suspect. We should always focus on the integration points and on edge cases, as that gives confidence that both boundaries work.

Testing shows the presence of defects

Testing is what allows us to find defects: the more diligently we test, the more defects we detect, which in turn helps the business make the application more stable in reasonable time. Testing can show that defects are present, but it cannot prove that none remain.

Testing is situation dependent

We should change our mindset and game plan when we test different applications or software. The same strategy will not help us find the bugs everywhere and will cause complications within the domain. For illustration, a plan applied to testing a banking application may not be useful for testing a retail or insurance application, as the requirements and usage vary significantly.

Software testing life cycle

STLC is a part of the SDLC; in the conventional model it is the phase executed after development is complete. It is essential to understand the phases of the testing life cycle, as that helps us apply the right testing approach. The steps involved in STLC are

Requirement analysis

In this phase, the goal is to analyze the requirements thoroughly to understand the functionality. Anything unclear should be clarified with the business analysts or the subject matter experts.

Test plan

Once the requirements are understood, we plan the testing. We define what is in scope and what is not, and we plan the number of resources required as well as the timelines.

Test case design

Test case design begins from this understanding. We design the test cases by following proper test case design techniques and keeping the types of test in scope. Since the designed tests will be executed to find defects, a proper understanding of the design techniques is needed.

Test data preparation

Test data plays an important role in execution. The test execution flow changes with the test data, so test data effectively drives the test case. We should identify possible combinations of negative and positive scenarios. Test data plays a major role in automation as well: in a purely data-driven or hybrid framework, we can execute the same test with different sets of inputs rather than writing each as a separate test case. Test data can be maintained in a database, in an Excel spreadsheet, or in any other suitable form.
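To illustrate the data-driven idea, the sketch below runs one check against several rows of test data. The function under test, `transferAllowed`, and the rows themselves are made up for the example; in practice the rows would come from a database or spreadsheet as described above.

```java
class DataDrivenDemo {
    // Hypothetical function under test (assumption): a transfer is allowed
    // when the amount is positive and does not exceed the balance.
    static boolean transferAllowed(long balance, long amount) {
        return amount > 0 && amount <= balance;
    }

    // Each row: balance, amount, expected result (1 = allowed, 0 = rejected).
    static final long[][] TEST_DATA = {
        {1000, 500, 1},   // positive scenario
        {1000, 1000, 1},  // amount equal to balance
        {1000, 1001, 0},  // amount above balance
        {1000, 0, 0},     // zero amount
        {1000, -50, 0},   // negative amount
    };

    // Runs the same check for every row and returns the number of failures.
    static int runAll() {
        int failures = 0;
        for (long[] row : TEST_DATA) {
            boolean expected = row[2] == 1;
            boolean actual = transferAllowed(row[0], row[1]);
            if (actual != expected) {
                failures++;
                System.out.println("FAIL for balance=" + row[0] + ", amount=" + row[1]);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println("failures = " + runAll());
    }
}
```

Adding a scenario means adding a row of data, not writing another test method.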

Test execution

Once the test cases are designed and the test data identified, we execute the test cases. Underlying bugs in the system are found in this phase. Due diligence is required while executing the tests, as we need to catch minor cosmetic bugs as well. Every execution report should be shared with all stakeholders for their reference and to help them understand the progress of testing.

Test closure

As a test closure activity, we share the final results observed during the execution phase in a detailed format. We include graphs, bar charts, and presentations so that higher management can understand the status better. The sign-off documents, test risk assessments, and test completion reports are also circulated to get approval from all business stakeholders.

Test case development techniques

While writing test cases, it is essential to adopt techniques that ensure coverage with as small a set of test cases as possible. If the techniques are not followed, we end up writing invalid tests, and test cases may turn out redundant.

Corresponding to the two major classifications of testing, white box and black box, there are respective test case design techniques.

White box testing techniques

White box tests are designed and executed against the structure or design of an application, so a proper understanding of the code and system is a must.

Statement coverage

Let’s consider a developer conducting unit testing. The developer must have a thorough understanding of what the module or component should do and must be aware of the code that has been written. With this technique we mainly try to identify any unreachable code in the system. Ideally, every statement written is executed by at least one test, that is, statement coverage (statements executed divided by total statements) reaches 100%.

Eg.

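    class Compare {
        public static void main(String[] args) {
            int a = 5, b = 3; // sample inputs
            if (a > 0 && b > 0) {
                if (a > b) {
                    System.out.println("a is bigger");
                } else {
                    System.out.println("b is bigger");
                }
            } else {
                System.out.println("Error: negative values are not allowed");
            }
        }
    }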

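The program above has three print statements, each on a different branch, so full statement coverage needs inputs that reach all three: for example a=5, b=3; then a=3, b=5; then a=-1, b=2.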

Decision coverage

Decision coverage, another white box technique, ensures that every conditional is exercised for both its true and its false outcome, so that we do not miss any edge cases. It is measured as the share of decision outcomes exercised out of all decision outcomes in the code.

Eg:

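    class Compare {
        public static void main(String[] args) {
            int a = 5, b = 3; // sample inputs
            if (a > 0 && b > 0) {
                if (a > b) {
                    System.out.println("a is bigger");
                } else {
                    System.out.println("b is bigger");
                }
            } else {
                System.out.println("Error: negative values are not allowed");
            }
        }
    }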

In the above snippet we make two decisions: first whether both given numbers are positive, and then which number is bigger.

So the snippet has 2 decisions, and full decision coverage requires tests that take each of them both ways.
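As a sketch of what covering the snippet looks like in practice, the version below turns the comparison into a method (the names `CompareCoverage` and `compare` are assumptions) and calls it with three inputs that together execute every statement and take both decisions each way.

```java
class CompareCoverage {
    static String compare(int a, int b) {
        if (a > 0 && b > 0) {          // decision 1
            if (a > b) {               // decision 2
                return "a is bigger";
            } else {
                return "b is bigger";
            }
        } else {
            return "Error: negative values are not allowed";
        }
    }

    public static void main(String[] args) {
        // decision 1 true, decision 2 true
        System.out.println(compare(5, 3));
        // decision 1 true, decision 2 false
        System.out.println(compare(3, 5));
        // decision 1 false, decision 2 never reached
        System.out.println(compare(-1, 2));
    }
}
```

Dropping any one of the three calls would leave a return statement unexecuted, which is exactly what a coverage report would flag.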

Path coverage

Path coverage measures how many of the possible flows through the code are exercised for the given inputs. We need to ensure that all possible paths are covered so that the system does not break in any scenario. Drawing UML or flow chart diagrams helps in identifying the paths.

Black box testing techniques

Black box testing validates the application without reference to its internal code, and its test cases mainly focus on functional validation. These techniques help us work through permutations and combinations of inputs and identify test cases based on edge cases or partitioning.

Boundary value analysis

In this technique we test the value at the boundary of a condition together with the values immediately to its left and right, to ensure that the behavior changes properly at the conditional edge.

Eg: let’s consider that only a person aged 18 or above can vote, and people below that age can’t cast their vote.

To test this condition we take the age 18 as the deciding boundary, then pick the value just below it (17) and the value just above it (19) as boundary values to test that critical conditional logic.
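The voting example can be sketched in a few lines of Python. The function names here are illustrative only; the point is that the boundary itself and its two immediate neighbours are the values worth testing.

```python
VOTING_AGE = 18


def can_vote(age):
    """A person can vote at 18 or above."""
    return age >= VOTING_AGE


def boundary_values(boundary):
    """The boundary plus one value immediately below and above it."""
    return [boundary - 1, boundary, boundary + 1]


results = {age: can_vote(age) for age in boundary_values(VOTING_AGE)}

if __name__ == "__main__":
    print(results)  # {17: False, 18: True, 19: True}
```

A common bug this catches is writing `age > 18` instead of `age >= 18`, which only the test with the value 18 would reveal.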

Equivalence partitioning

This is another technique that helps us reduce the number of test cases while still giving good coverage. Based on the condition, we partition the inputs into negative and positive slots and then pick one value from each slot to test. The idea is that if the test behaves as expected for one value, the other values from the same slot are expected to behave the same way.

Eg: let’s consider the same example as above, where a person aged 18 or above can vote and the rest can’t.

We will identify three partitions as

Age 0 to 17: negative slot

Age 18: positive slot

Age 19 or any greater value: positive slot

We will pick one test value from each partition, so with the help of 3 test cases all the scenarios can be covered.
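Here is a small Python sketch of the same partitions. It picks one representative age from each slot and checks it against the expected result. The upper bound of 120 for the last partition is an assumption made only so that a value can be sampled, and the helper names are hypothetical.

```python
import random

# (label, candidate ages, expected result) for each partition.
PARTITIONS = [
    ("0 to 17", range(0, 18), False),
    ("18", range(18, 19), True),
    ("19 and above", range(19, 121), True),  # 120 is an assumed upper bound
]


def can_vote(age):
    return age >= 18


def pick_representatives(seed=0):
    """Pick one age from each partition; the seed keeps runs repeatable."""
    rng = random.Random(seed)
    return [(label, rng.choice(ages), expected) for label, ages, expected in PARTITIONS]


if __name__ == "__main__":
    for label, age, expected in pick_representatives():
        status = "pass" if can_vote(age) == expected else "fail"
        print(label, age, status)
```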

Decision tables

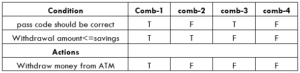

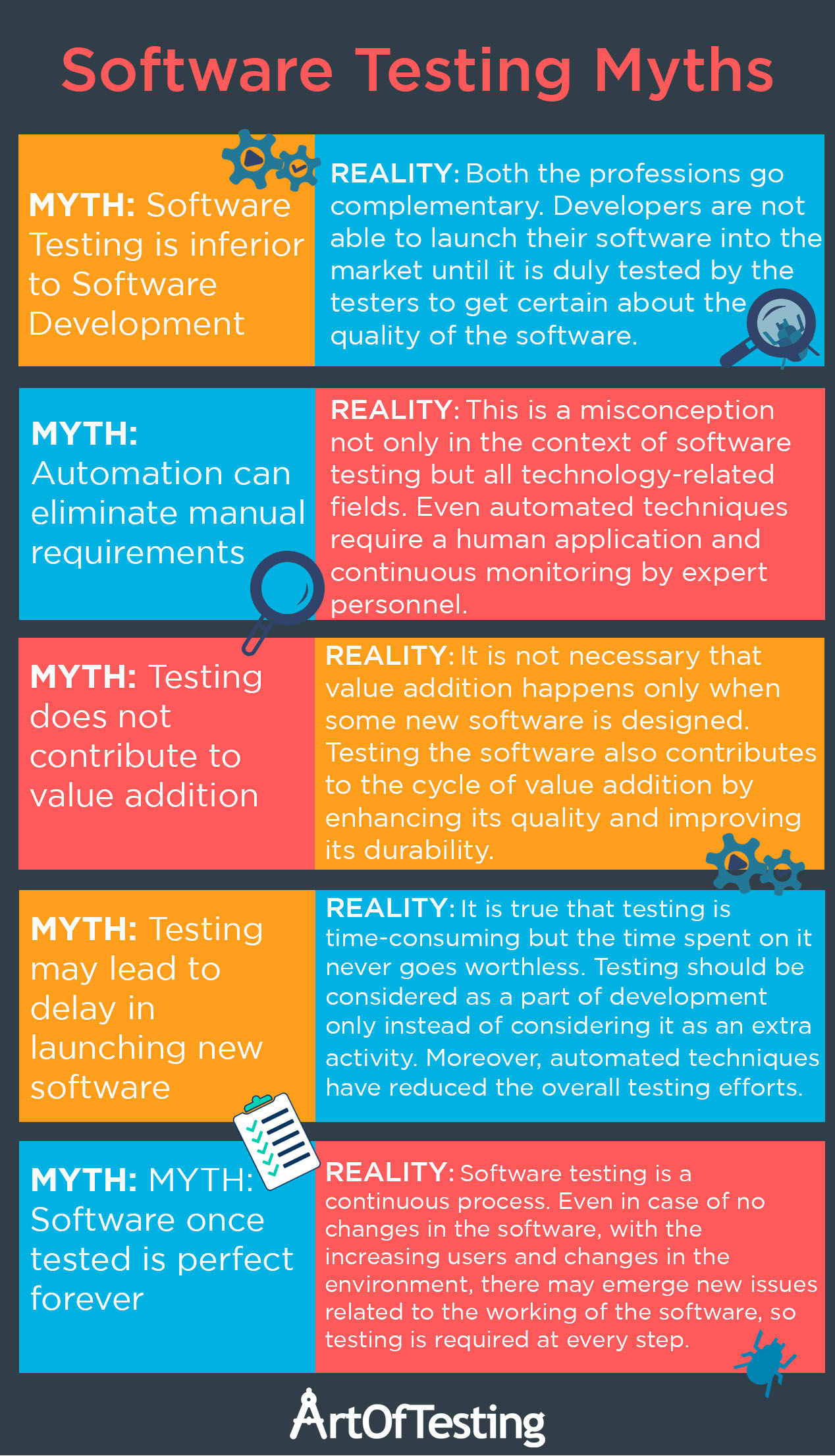

When we have multiple combinations of inputs to test, writing test cases for all the values becomes an overhead. Forming a table that lists all the conditions and actions makes it easier to identify the cases.

Eg: let’s consider a scenario of withdrawing money from the automated teller machine.

The machine will dispense money only when

Cond-1: the user enters the correct passcode.

Cond-2: the withdrawal amount is less than or equal to the savings balance.

Action / Goal: Withdraw money from the teller machine.

The above requirement can be converted into a decision table with one column for each combination of the two conditions.
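A decision table for this requirement can also be generated in code. The sketch below assumes that Cond-1 means the passcode is correct and that money is dispensed only when both conditions hold; the function names are made up for illustration. Each row is one rule of the table and therefore one test case.

```python
from itertools import product


def dispense(passcode_correct, amount_within_balance):
    """Action: money is dispensed only when both conditions are true."""
    return passcode_correct and amount_within_balance


def decision_table():
    """One row per combination of the two conditions, plus the action."""
    return [
        (passcode_correct, within_balance, dispense(passcode_correct, within_balance))
        for passcode_correct, within_balance in product([True, False], repeat=2)
    ]


if __name__ == "__main__":
    print("Rule | Passcode correct | Amount <= balance | Dispense")
    for rule, (passcode, balance, action) in enumerate(decision_table(), start=1):
        print(rule, "|", passcode, "|", balance, "|", action)
```

With two conditions there are four rules, and only the rule where both conditions are true leads to a withdrawal.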

State transition testing

In this technique we first need to understand the state flow of the use case and then come up with a state flow diagram. The diagram helps us derive how many flows there are and how many of them are positive or negative. In order to do this, we must have proper knowledge of the application.
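As an illustration, a state flow can be written down as a table of allowed transitions and then walked by the tests. The login states and events below are hypothetical and only serve as an example; positive flows follow allowed transitions, while negative flows try an event that is not allowed in the current state.

```python
# Allowed transitions: (current state, event) -> next state.
TRANSITIONS = {
    ("logged_out", "valid_login"): "logged_in",
    ("logged_out", "invalid_login"): "logged_out",
    ("logged_in", "logout"): "logged_out",
}


def run(events, state="logged_out"):
    """Apply the events in order and return the final state."""
    for event in events:
        key = (state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"invalid transition: {event!r} in state {state!r}")
        state = TRANSITIONS[key]
    return state


if __name__ == "__main__":
    print(run(["invalid_login", "valid_login"]))  # positive flow
    try:
        run(["logout"])                           # negative flow
    except ValueError as error:
        print(error)
```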

Use case testing

Use case tests are designed to be more customer-specific. The test case is written in the form of a user guide, where the steps are written in a procedural format. This gives more insight into the test procedure, as it spells out the actors, events, and pre- and post-conditions.

Eg: let’s consider a login scenario into an application

Actor

The actor is the person who performs the events

Pre-condition

Actor should have a working network connection

Actor should be on the login page to be able to enter credentials

Events

Steps are addressed as events. The events involved in this use case are

Enter the username and password

Click on the login button

Alternatives

This lists the other actions that are possible while the actor is on that page.

The actor can look for the help link, or can try signing up if the account has not been created yet.

Exceptions

These are the problems that the user might encounter during the execution of events

The application might throw an error upon entering the credentials

An HTTP error might occur after clicking the login button

Post-actions

These are the subsequent actions executed after the completion of events.

Logging out from the application

Performing any business functionality within the application
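The login use case can be turned into executable checks along these lines. Everything in this sketch is hypothetical: the `login` function, the in-memory `USERS` store and the messages only stand in for a real application, so that the pre-condition, the main events and one exception can each be shown as a test.

```python
USERS = {"alice": "s3cret"}  # hypothetical test account


def login(username, password, on_login_page=True):
    """Stand-in for the application's login behaviour."""
    if not on_login_page:
        raise RuntimeError("pre-condition failed: actor is not on the login page")
    if username not in USERS or USERS[username] != password:
        return "error: invalid credentials"
    return "welcome"


def test_events_main_flow():
    # Events: enter the username and password, click the login button.
    assert login("alice", "s3cret") == "welcome"


def test_exception_wrong_credentials():
    # Exception: the application reports an error for bad credentials.
    assert login("alice", "wrong") == "error: invalid credentials"


def test_precondition_not_met():
    # Pre-condition: the actor must be on the login page.
    try:
        login("alice", "s3cret", on_login_page=False)
    except RuntimeError:
        return
    raise AssertionError("expected the pre-condition check to fail")


if __name__ == "__main__":
    test_events_main_flow()
    test_exception_wrong_credentials()
    test_precondition_not_met()
    print("all use case checks passed")
```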

Conclusion

We reckon this has been a broad discussion, but every piece of it is important for being a competitive and distinguished tester. Adopting these fundamentals will help us test applications with due diligence and with enhanced coverage. Just as the principles have to be understood, we must also apply judgment to determine which technique to use and when. I keep everything crossed for every reader to be a successful tester.

Thanks for reading! The more we know, the more we grow.

by admin | Mar 11, 2020 | Software Testing, Fixed, Blog |

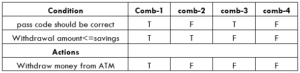

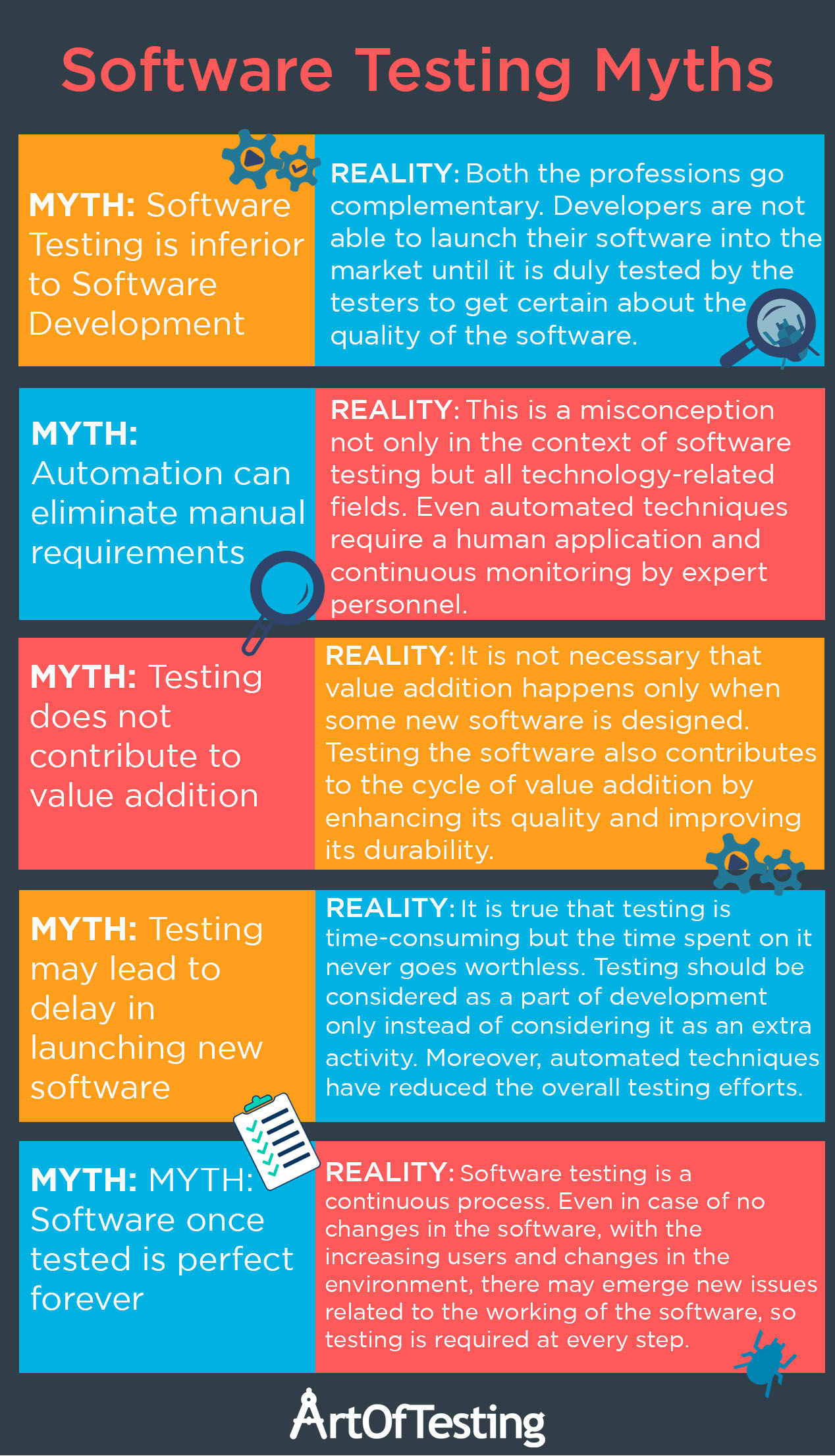

Software testers are considered the gatekeepers of quality and hence are vital to the success of any software. Still, software testing, an ever-evolving career option, is surrounded by many myths and misconceptions. Let us unveil the reality behind them:

MYTH: Fighting against the bugs is the only Bull’s Eye

REALITY: To most of us, even the tasks of a software tester are unclear. Some of us say the core task is to make the software bug-free, but that is not the whole truth. Software testers have a long list of functions to perform, including checking interoperability, cross-browser compatibility, smooth working, security against malicious code, and fighting against the bugs. So making the software bug-free is just one of the tasks. There is indeed a bigger picture beyond this one point.

Also, even if the software is fully protected against bugs, other issues may hamper its smooth functioning, such as server crashes during periods of heavy traffic on the site, browsers that do not support the software, users who are unable to access or transact through it, and much more.

MYTH: Software Testing is inferior to Software Development

REALITY: This myth is too harsh on software testers, who put in so much effort day and night to ensure the quality of the software. In reality, the two professions complement each other. Developers are not able to launch their software into the market until it has been duly tested by the testers, who make certain of its security and quality. So the developers are dependent on testers.

The reverse is also true. The testers have to work with the code and programs written by the developers during the development stage. The testers also have to gather information about bottlenecks in the software, which is best known to its creators. So the testers are dependent on developers.

Hence, neither of them is inferior or superior to the other.

MYTH: Software Testing requires no specialized qualification and little expertise

REALITY: One of the biggest misconceptions is that any person from an engineering background can join a team of QA Analysts. In order to fully understand the system and debug the root cause of any issue, testers need to know about the underlying database structure and the different technologies used.

Apart from that, some testing activities like white box testing, automated testing and security testing require expertise in a programming language and/or a particular tool.

MYTH: Automation can eliminate manual testing requirement

REALITY: This is a misconception not only in the context of software testing but in all technology-related fields. We believe that with the increase in automation, human intervention will decrease, but this is not the case. Even automated techniques require human judgment and continuous monitoring by expert personnel. So automation testing can never completely substitute for manual testing techniques; rather, the integration of both in the right proportion will bring greater results.

MYTH: Testing does not contribute to value addition

REALITY: It is not necessary that value addition happens only when some new software is designed. Testing the software also contributes to the cycle of value addition by enhancing its quality and improving its durability.

Without proper testing, launching new software can prove to be worthless, and in that case even the primary value addition drops to zero. Testing may be a secondary activity, but it strongly affects the value added by primary activities like software design, so it is a part of value addition itself. Testing is never an extra expense; it bears fruit if done in the right way.

MYTH: Testing may lead to delay in launching new software

REALITY: It is true that testing is time-consuming, but the time spent on it is never wasted. Testing should be considered a part of development rather than an extra activity. Thus, time spent on testing or QA counts as part of the design and development process itself. Moreover, automated techniques have reduced the time lost to lengthy manual methods, so we cannot conclude that testing leads to any delay in the launch of new software.

MYTH: Software once tested is perfect forever

REALITY: Software testing is a continuous process. Whenever the code of the software changes, testing is required to risk-proof those changes, even if they are minor. As the number of users of your site grows, new issues related to the performance of the software may emerge, so testing is required at every step.

Testing is not done only once before launch; rather, the process continues even years after the initial design and introduction in the market. Malicious code or worms can come up at any time to damage your software, so it is a full-time job.

Conclusion

If any of these myths were stopping you from choosing software testing as a career, clear your mind and be confident about your choice. And if you are already a software tester and you had wrong notions about your tasks, I hope this article shows you the true picture and helps you work more efficiently.

Author Bio

Kuldeep Rana is the founder of ArtOfTesting, a software testing tutorial blog. He is a QA professional with a demonstrated history of working in the e-commerce, education and technology domains. He is skilled in test automation, performance testing, big data, and CI-CD.

by admin | Mar 8, 2020 | Mobile App Testing, Fixed, Blog |

Mobiles have become indispensable these days, given their capabilities and the ever wider range of services they provide to customers. Since they play a significant role in human life in the current era, releasing a mobile with high-end features and with no bugs, or as few bugs as possible, is essential.

There is a plethora of mobiles and the market has cut-throat competition, so in order to catch the nerve of the target market, we should always ensure that we hit the market at the right time and with the right, new set of features. While we focus on delivery, it’s equally important to offer the best quality.

To deliver features with high quality we need to adopt the best testing practices. Below are the types of testing to be considered in order to go to market with enough confidence.

Before we move on to the actual test techniques, let’s understand the testing objectives. Mobile testing needs to be viewed from two angles; let’s see each one of them.

Mobile Device Testing

The main purpose of this test is to ensure that the mobile as a device is fully functional from both the software and the hardware perspective, and that the software is compatible with the electronic components. During this test we tend to find bugs related to memory leaks, crashes due to hardware, and shutdown issues that lead to maintenance or replacements. Any compromise on quality in this area would have a great business impact as well as cause reputation loss.

The main types of testing conducted here are

System Reliability Test

The purpose of this test is to confirm the stability of the mobile device; it differs considerably from load and performance testing. In this test we exercise the device based on customer usage and track its shutdown rate. The test runs until the problem rate reaches or drops below the agreed percentage that was defined at the start of the test. This gives us confidence at launch, and since the testing follows customer usage patterns, there is a high chance of finding bugs.
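The exit check for such a run can be sketched as follows. This is only an assumption about how the problem rate might be defined (unexpected shutdowns as a percentage of devices tested); the agreed threshold and the exact formula would come from the test plan, and the function names are hypothetical.

```python
def problem_rate(shutdowns, devices_tested):
    """Unexpected shutdowns as a percentage of the devices tested (assumed definition)."""
    if devices_tested <= 0:
        raise ValueError("devices_tested must be positive")
    return 100 * shutdowns / devices_tested


def meets_target(shutdowns, devices_tested, agreed_rate_percent):
    """True once the observed rate is at or below the agreed rate."""
    return problem_rate(shutdowns, devices_tested) <= agreed_rate_percent


if __name__ == "__main__":
    print(problem_rate(3, 200))        # 1.5
    print(meets_target(3, 200, 2.0))   # True
    print(meets_target(5, 200, 2.0))   # False
```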


Mobile Application Testing

The motivation for this test is that an application designed to work on mobile platforms must be fully functional and must satisfy the end-user needs in every respect. Some common things that we look for while testing are

1) How does the application work when it is used on a mobile phone?

2) Can it be installed and uninstalled easily?

3) What is the response time of the application under the desired conditions?

4) Is the application compatible with various mobile operating systems and hardware?

The mobile applications are further classified into 3 different types

Native Application

Any application that is specific to an operating system is called a native application. These applications will not support other operating systems. Because they are built for a single platform, they are comparatively faster than other apps.

Eg: iTunes is specific to iOS and will not work on the Android platform, and vice versa.

Web Application

These applications are generally hosted on web servers and run in mobile browsers. The application renders web pages to serve the user. Since the applications are hosted on web servers, they are comparatively slower than native apps.

Hybrid Application

Hybrid apps are mixed apps: they combine the native and web approaches discussed above to provide extended support to the user community.

Eg: Twitter, Facebook, Yahoo.

Based on our understanding of the above types of applications, we should identify the types of testing that are needed.

Usability Testing

The purpose of this test is to evaluate how friendly the application or the device is to use. Operation must be easy, so that users at all levels of expertise can use the product without any hassle. Things to be considered in order to gain more customer confidence:

Documentation

There should be clear and easily understandable documentation about how to operate the product. Self-explanatory documentation helps customers sort out any difficulties on their own by going through the guide.

Response time

Customers never tolerate delay, and response time is one of the key parameters on which the usability of the device is rated. The lower the response time, the greater the user convenience.

Feedback notifications

A good feedback/notification system helps make the product more customer-friendly. Clearer the response message more the

There are certain parameters which actually decide the usability of a product:

User-friendly operation

Response time

Compatibility testing

The main objective of this test is to check that the application is compatible with various operating systems, browsers and devices. It is categorized as a non-functional testing technique.
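A compatibility test matrix is simply every combination of the platforms we want to support. The sketch below builds one with example values; the operating systems, browsers and device types listed are placeholders, and a real matrix would usually be pruned to the combinations that actually exist and matter to the target market.

```python
from itertools import product

# Example values only; replace with the platforms in scope for the project.
OPERATING_SYSTEMS = ["Android", "iOS"]
BROWSERS = ["Chrome", "Safari", "Firefox"]
DEVICES = ["phone", "tablet"]


def compatibility_matrix():
    """Every (operating system, browser, device) combination to test."""
    return list(product(OPERATING_SYSTEMS, BROWSERS, DEVICES))


if __name__ == "__main__":
    matrix = compatibility_matrix()
    print(len(matrix), "combinations")
    for combination in matrix:
        print(combination)
```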

Localization testing

The main purpose of this test is to ensure the application supports a good number of regional languages. This is a key feature, as consumers from different regions expect the mobile display fonts and keypad to be in their native language. Since such usage is common, proper testing must be in place to ensure there are no underlying bugs during language transitions, and the process of switching between languages should be hassle-free.

Load and performance testing

As the name indicates, this testing subjects the application to critical conditions and checks how the device or application performs.

How the device reacts when multiple apps are opened simultaneously

How the battery charge is being consumed by the different applications

How reliable the network performance is under various conditions
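For the response-time side of these checks, a very small timing helper can already be useful. The sketch below repeats an action a few times and reports the worst and the average time; the `action` passed in is whatever operation is under test, and the helper name is made up for illustration. It measures only elapsed time in the test process, not battery or network behaviour.

```python
import time


def measure_response_time(action, repeats=5):
    """Run `action` several times; return (worst, average) time in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        action()
        timings.append(time.perf_counter() - start)
    return max(timings), sum(timings) / len(timings)


if __name__ == "__main__":
    # Stand-in workload; replace with the operation under test.
    worst, average = measure_response_time(lambda: sum(range(100_000)))
    print(f"worst: {worst:.6f}s, average: {average:.6f}s")
```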

Security testing

As many incidents have taught us the importance of privacy, it is essential to comply with all the security policies so that customer data is treated with utmost care and is not misused, manipulated or exposed to the outside world. Failing here can lead to a great loss of business and trust.

Keep the customer updated on software maintenance information, use security PINs, and adopt proper encryption and decryption techniques during data transmission.

Installation testing

This test verifies the installation and uninstallation scenarios. It also checks how user-friendly the process is, and ensures that installing or upgrading does not crash the system or affect user data.

Functional Testing

In this testing the whole functionality of the device is tested, such as the app flow, the screen sequence and the complete end-to-end functionality. Below are a few illustrations of functional testing

Check whether or not the login happens properly

The application can be installed and uninstalled

The application responds properly: all the buttons work and a response is received.

Automation testing

In this testing the functional validation is done through scripts, so that we don’t spend much time on regression execution. Good tools and frameworks are available to conduct this test.

Tools for mobile automation

1) TestComplete

2) Kobiton

3) Calabash

4) Appium

5) MonkeyTalk

6) EarlGrey

7) Testdroid

8) Appium Studio

Conclusion

Mobile application testing with the above techniques is key, given how widely mobile apps are used in this era. Keeping the process and technical facts aside, the intention is to deliver an application that is user-friendly and responsive, and the motivation while testing should be to serve those qualities as well as possible. The mobile application is an area of investment where unexpected returns and reputation can be gained. So the more we test from the user’s shoes, the more benefit it adds.

Thanks for the read.

by admin | Mar 4, 2020 | Software Testing, Fixed, Blog |

In this blog we are going to discuss what a test strategy is, why a test strategy is required, when we need a test strategy document, and finally how to write one.

What is a Test Strategy

A Test strategy is a static document that describes how the testing activity will be done. It is like a handbook for stakeholders: it describes the test approach that we undertake, how we manage risk, how we do testing, and the levels of testing along with the entry and exit criteria of each activity. It is a generic document that the testing team refers to when preparing the test plan for every project.

The Test strategy acts as a guideline/plan at the global Organization/Business Unit level, whereas a Test plan is specific to a project. We should always ensure that we do not deviate from what we commit to in the Test strategy.

Why Test Strategy is required

It gives an Organization a standard approach to how quality is ensured in the SDLC. It also covers the typical risks involved in a program and how those risks can be mitigated and handled. When every project adopts the same standard, quality improvement will be seen across the Organization, and hence the Test strategy is important.

When do we need a Test Strategy

Below are some of the circumstances that would require a Test Strategy.

When an Organization is forming a separate QA wing

When the QA Organization changes from a normal QA model to a TCoE / QCoE, where the operating model and structure change

When the QA leadership changes and brings a different perception

When the Organization adopts a different tool approach for Automation / Performance etc

Contents of Test Strategy

Below are the contents of Test Strategy document

1) Brief Introduction about programs covered in this Strategy

2) Testing Scope and Objective

3) Test Planning and Timeline

4) TEM Strategy

5) TDM Strategy

6) Performance Strategy

7) Automation Testing Strategy

8) Release and Configuration Management

9) Test schedule, cycles, and reporting

10) Risk and Mitigation

11) Assumption and Dependencies

Brief Introduction about programs covered in this Strategy

This section covers the overall scope of this Test strategy and the important engagements and programs that will run based on it.

Testing Scope and Objective

This section describes the following

What will be the different levels of testing conducted like Integration, System, E2E, etc

The process of review and approval of each stage

Roles and Responsibility

Defect process and procedure till final Test sign off

Test Planning and Timeline

This section briefly covers the following

Which programs are going to be executed

The timeline of each program

The infrastructure and tooling needed to support each program

The different Environments that will be used

TEM Strategy

The prior section describes the overall requirement; this section describes the Test Environment Strategy in detail and covers the following

1) How to book Environments for project purposes, including booking 3rd party Environments

2) Access to Environments for users, as required by the project

3) Environment integration and stability management

4) Test data refresh co-ordination

5) Post release Environment validation

6) Support and co-ordination requirement

TDM Strategy

As with Environments, different project test teams have different data requirements, and some need to mock up live data. The TDM strategy section provides the below information

What forms are to be filled in and what agreements need to be in place to serve this data need

What type of data is to be loaded and when it will be available

Who will be the point of contact for each program

If any 3rd party Vendor support is required, it is described here in detail

Performance Strategy

Based on the programs and schedules listed in the prior section, the performance strategy describes how Performance Testing requirements will be fulfilled, what the tool strategy and license model are, and team availability. If the team also does Performance Engineering, it describes in detail how that is performed.

It covers the Requirement phase (how NFR requirements will be gathered from stakeholders, the proof of concept procedure, how critical scenarios are defined), the Testing phase (how testable scripts are created, how data setup is done), and Analysis and Recommendation (how performance issues are reported, the sign-off procedure).

Automation Testing Strategy

Similar to the Performance strategy, the Automation strategy describes how requirements are defined, the tool strategy, the license model, etc. It also elaborates how the Automation team works, from the feasibility study through defining the framework and developing scripts to execution, and Continuous Testing if it is in scope.

Release and Configuration Management

This section describes the following areas of Release and configuration management

Overall release and configuration strategy

QA deployment and release calendar

How different Test assets are loaded and tracked

Co-ordination of QA Deployment

How QA audit is conducted

How production deployment is co-ordinated by the QA team

How Change Management for any post-release CR is managed

Test schedule, cycles and reporting

This section details each program, its schedule, Test timeline and Test cycles, and how reporting will be carried out.

Risk and Mitigation

This section describes overall risk management and risk mitigation process along with how it will be tracked and reported.

It also classifies the various risk levels, such as project-level and program-level risk, and describes how each will be handled during the execution phase.

Assumption and Dependencies

While the Test strategy is being developed, program timelines and similar details are still predictions, as the strategy could cover a period of 1-5 years. Hence there are going to be a lot of assumptions, which should be listed in this section. The Testing team also has to co-ordinate with different teams like TEM, TDM, vendors, etc. These dependencies need to be called out in advance, because any change in project-level or program-level scope will have an adverse impact.

How to write Test Strategy document

Now that we have covered what a Test strategy is, why it is important, and its contents in detail, it is easier to define how to write a better Test strategy document.

Before writing the Test strategy we need to collect some information, which is listed below:

List of programs that need to be handled in this stream

Each program’s release objectives and the basic requirements it is going to meet

Criteria for Environment and Data management requirements

Timeline of each release and its key stakeholders

Third party integration and vendor management details

Information about Test type and release frequency

Tool requirements/procurement process understanding

Basic assumption and dependency with outside QA Organization

This information will be collected by the Program Test Manager after discussing with the key stakeholders of the program.

The collected information has to be discussed internally among the QA team Managers, who assign responsibility for detailing further requirements to refine the Test Strategy.

If the program is a QA Transformation program, further information has to be collected on the key QA levers that will make the program run differently.

After identifying the key levers, assign the responsibility of each lever to a dedicated person so that the Strategy can be further fine-tuned and circulated to a wider group.